DoorDash Data Engineer Interview Guide: Questions, Process & Prep (2026)

Introduction

The DoorDash data engineer role sits at the core of one of the most complex real-time logistics and commerce platforms in the world. Every order placed, delivery routed, and estimate shown to a customer is powered by data systems that must be accurate, timely, and resilient at massive scale. As DoorDash continues to expand beyond food delivery into groceries, retail, and local commerce, data engineering has become critical to how teams measure performance, optimize delivery speed, and support experimentation across the marketplace.

The DoorDash data engineer interview reflects this responsibility. You are evaluated on much more than whether you can write a correct SQL query or build a basic pipeline. Interviewers look for strong data modeling instincts, practical pipeline design decisions, and the ability to reason about data reliability in production environments. This guide breaks down each stage of the DoorDash data engineer interview, the most common DoorDash-specific question types candidates face, and proven preparation strategies to help you approach the process with confidence and stand out as a data engineer who can operate at DoorDash scale.

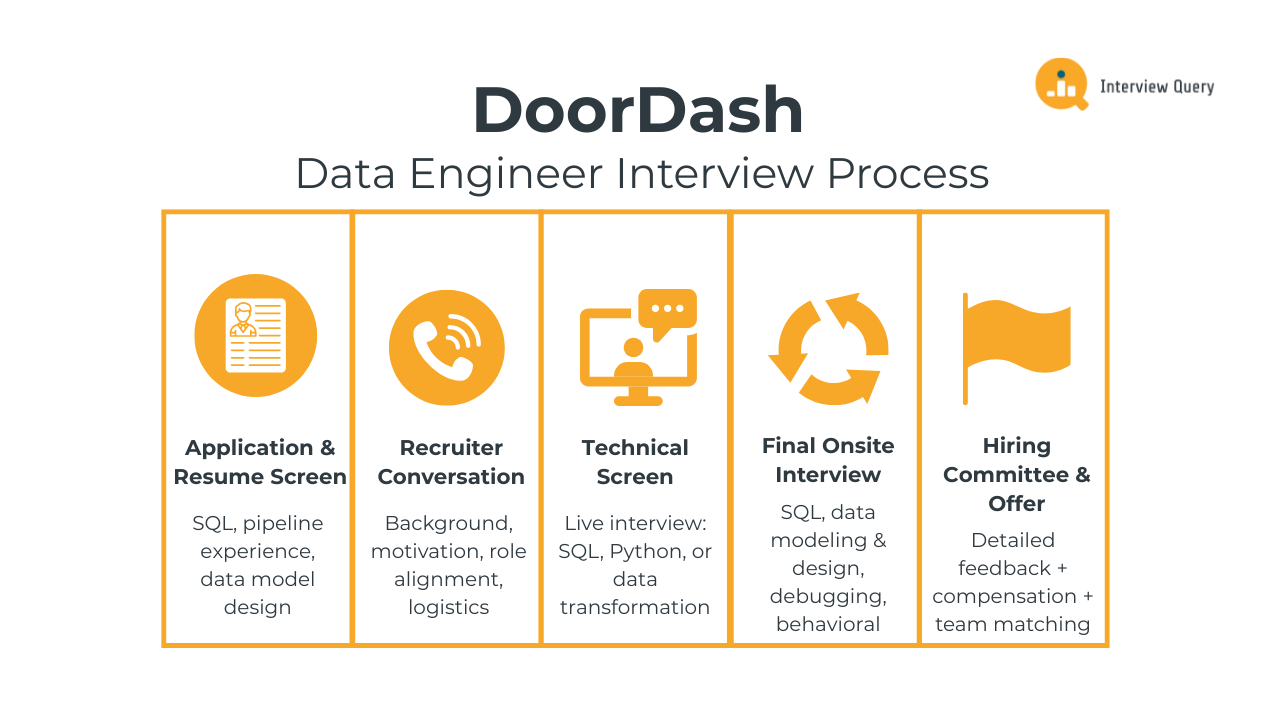

DoorDash Data Engineer Interview Process

The DoorDash data engineer interview process is designed to evaluate how well you can build reliable data systems, reason about data correctness at scale, and communicate tradeoffs in production environments. The process focuses heavily on SQL depth, data modeling judgment, pipeline design, and ownership of data quality rather than abstract theory. Most candidates move through the full loop in three to five weeks, depending on scheduling and team needs.

Below is a breakdown of each stage and what interviewers at DoorDash look for at every step.

Application and Resume Screen

During the resume review, recruiters look for evidence that you have owned production data pipelines and supported real downstream users such as analysts, product teams, or machine learning systems. Strong candidates clearly demonstrate SQL proficiency, experience with batch or streaming pipelines, and the ability to design data models that scale. Impact matters more than tooling. A resume that shows how your work improved reliability, reduced latency, or enabled better decision-making stands out quickly.

Tip: Highlight where your pipelines were used and what broke if they failed. This signals production ownership and shows interviewers that you understand how data engineering decisions affect real teams.

Initial Recruiter Conversation

The recruiter call is a short, non-technical conversation focused on your background, role alignment, and motivation for DoorDash. You will walk through your experience, discuss the types of data systems you have worked on, and clarify what kind of teams or problems interest you. Recruiters also confirm logistics such as location, timeline, and compensation expectations. This stage sets context for the technical interviews that follow.

Tip: Clearly explain the scale and complexity of the systems you have worked on. This helps recruiters assess whether your experience maps to DoorDash’s marketplace data challenges.

Technical Screen

The technical screen typically consists of one live interview focused on SQL and basic Python or data transformation logic. You may be asked to analyze order or delivery-style schemas, write joins and window functions, or reason through edge cases like late events and duplicates. Interviewers evaluate how you structure your solution, validate assumptions, and explain your reasoning as much as the final answer.

Tip: Talk through your approach before writing queries. This demonstrates analytical clarity and shows that you can collaborate effectively when solving data problems.

Final Onsite Interview

The onsite interview loop is the most comprehensive stage of the DoorDash data engineer interview process. It usually includes four to five interviews, each lasting about 45 to 60 minutes. These rounds assess how you design data systems, reason about reliability, and work across teams in ambiguous environments.

SQL and analytics engineering round: This interview focuses on advanced SQL and analytics use cases. You may work with large tables representing orders, deliveries, or events and be asked to compute metrics, build funnels, or identify data quality issues. Interviewers look for clean logic, correct handling of edge cases, and queries that would hold up in production.

Tip: Explicitly call out how you would validate results and handle bad data. This shows strong data intuition and an understanding of analytics reliability.

Data modeling and pipeline design round: In this round, you design data models and pipelines to support analytics or operational use cases. You might model an orders system, design a delivery events pipeline, or explain how you would handle late-arriving data and backfills. Interviewers evaluate your schema design, tradeoff reasoning, and ability to plan for scale and change.

Tip: Always discuss how your design supports downstream users. This signals strong system thinking and empathy for analytics and product teams.

Debugging and data reliability round: This interview tests how you respond when data breaks. You may be given a scenario involving incorrect metrics, delayed pipelines, or inconsistent dashboards and asked how you would diagnose and fix the issue. The focus is on prioritization, investigation strategy, and communication during incidents.

Tip: Walk through your debugging process step by step. This demonstrates calm problem-solving and operational maturity, which are highly valued at DoorDash.

Behavioral and cross-functional collaboration round: This round evaluates how you work with partners, handle feedback, and take ownership of long-running projects. Expect questions about disagreements with stakeholders, balancing speed versus correctness, and making tradeoffs under pressure. Clear communication and accountability matter as much as technical skill.

Tip: Emphasize moments where you influenced decisions or protected data quality. This shows leadership and trustworthiness as a data owner.

Hiring Committee and Offer

After the onsite, interviewers submit detailed feedback independently. A hiring committee reviews your performance across all rounds, focusing on technical strength, communication clarity, and consistency of ownership signals. If approved, the team determines level and compensation based on scope, experience, and interview performance. Team matching may also happen at this stage depending on organizational needs.

Tip: If you are excited about a specific problem space like experimentation or marketplace analytics, communicate that interest early. It helps teams assess fit and long-term impact.

Want to get realistic practice on SQL and data engineering questions? Try Interview Query’s AI Interviewer for tailored feedback that mirrors real interview expectations.

DoorDash Data Engineer Interview Questions

The DoorDash data engineer interview includes a mix of SQL depth, data modeling, pipeline design, debugging, and behavioral reasoning. These questions are designed to evaluate how well you work with large-scale marketplace data, reason about data correctness under real production constraints, and support analytics, experimentation, and machine learning teams. Interviewers are less interested in textbook answers and more focused on how you structure problems, handle edge cases, and communicate tradeoffs clearly in ambiguous, fast-moving environments.

SQL and Analytics Engineering Interview Questions

This portion of the interview focuses heavily on SQL used in real analytics workflows. DoorDash SQL questions often involve order, delivery, Dasher, and merchant data with time-based logic, joins across multiple entities, and edge cases such as late events or duplicates. The goal is to assess whether you can write production-ready queries that analysts and product teams can trust.

Write a query to find orders that were delivered later than their promised delivery time.

This question tests how well you reason about time-based performance metrics that directly affect customer experience at DoorDash. Late deliveries impact retention, refunds, and operational decisions, so engineers must compare promised delivery timestamps with actual completion times reliably. To solve it, you would join orders with delivery completion events, compare actual delivery time against the latest promised estimate, and filter to completed orders. You should also explain how to handle missing promises or updated estimates to avoid false positives.

Tip: Always explain how you choose the “source of truth” for delivery timestamps. This shows judgment around data consistency, a key skill DoorDash engineers rely on when metrics drive real operational actions.
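Here is a minimal sketch of the late-delivery query, assuming two hypothetical tables: `promise_estimates` (one row per ETA shown to the customer) and `deliveries`. It runs against an in-memory SQLite database through Python's standard `sqlite3` module. The latest estimate is treated as the source of truth, and orders with no recorded promise are excluded rather than counted as late. Timestamps are stored as `YYYY-MM-DD HH:MM:SS` strings, which compare correctly as text only because they all share that one format.

```python
import sqlite3

# Hypothetical schema: every re-estimate of an order's ETA is kept as its own row.
SCHEMA = """
CREATE TABLE promise_estimates (order_id INTEGER, estimated_at TEXT, promised_delivery_at TEXT);
CREATE TABLE deliveries (order_id INTEGER, status TEXT, delivered_at TEXT);
"""

LATE_ORDERS_SQL = """
WITH latest_promise AS (
    -- The most recent estimate is the source of truth for the promise.
    SELECT p.order_id, p.promised_delivery_at
    FROM promise_estimates p
    WHERE p.estimated_at = (
        SELECT MAX(p2.estimated_at)
        FROM promise_estimates p2
        WHERE p2.order_id = p.order_id
    )
)
SELECT DISTINCT d.order_id
FROM deliveries d
-- Inner join: orders with no recorded promise are excluded, not flagged as late.
JOIN latest_promise lp ON lp.order_id = d.order_id
WHERE d.status = 'delivered'
  AND d.delivered_at > lp.promised_delivery_at
ORDER BY d.order_id;
"""


def late_orders(conn):
    return [row[0] for row in conn.execute(LATE_ORDERS_SQL)]


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO promise_estimates VALUES (?, ?, ?)", [
    (1, "2026-01-05 10:00:00", "2026-01-05 10:40:00"),
    (1, "2026-01-05 10:10:00", "2026-01-05 10:50:00"),  # updated estimate
    (2, "2026-01-05 11:00:00", "2026-01-05 11:30:00"),
    (4, "2026-01-05 12:00:00", "2026-01-05 12:30:00"),
    (5, "2026-01-05 12:20:00", "2026-01-05 13:00:00"),
])
conn.executemany("INSERT INTO deliveries VALUES (?, ?, ?)", [
    (1, "delivered", "2026-01-05 10:45:00"),  # late only against the first estimate
    (2, "delivered", "2026-01-05 11:45:00"),  # late
    (3, "delivered", "2026-01-05 11:50:00"),  # no promise recorded
    (4, "cancelled", None),
    (5, "delivered", "2026-01-05 12:55:00"),  # early
])
print(late_orders(conn))  # [2]
```

Switching the CTE to the first estimate instead of the latest would also flag order 1, which is exactly the source-of-truth choice worth stating out loud.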

How would you calculate daily active Dashers by city?

This question evaluates your ability to define metrics clearly and aggregate event data correctly. At DoorDash, “active” must be explicitly defined, such as a Dasher who completed at least one delivery or accepted an order that day. You would filter relevant Dasher activity events, join to city or zone mappings, group by date and city, and count distinct Dashers. The interviewer is assessing whether you think carefully about definitions, not just grouping logic.

Tip: Call out how different “active” definitions change the metric. This shows you understand how analytics choices influence operational decisions and staffing models.
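One way to keep the "active" definition explicit is to pass it in as a parameter. This sketch assumes hypothetical `dasher_events` and `zones` tables in SQLite; by default a Dasher counts as active if they completed at least one delivery that day.

```python
import sqlite3

# Hypothetical tables: one row per Dasher event, plus a zone-to-city mapping.
SCHEMA = """
CREATE TABLE dasher_events (dasher_id INTEGER, event_type TEXT, event_ts TEXT, zone_id INTEGER);
CREATE TABLE zones (zone_id INTEGER PRIMARY KEY, city TEXT);
"""


def daily_active_dashers(conn, active_event_types=("delivery_completed",)):
    """Distinct Dashers per day and city; 'active' means any of the given event types."""
    placeholders = ", ".join("?" for _ in active_event_types)
    sql = f"""
        SELECT DATE(e.event_ts) AS activity_date, z.city, COUNT(DISTINCT e.dasher_id)
        FROM dasher_events e
        JOIN zones z ON z.zone_id = e.zone_id
        WHERE e.event_type IN ({placeholders})
        GROUP BY DATE(e.event_ts), z.city
        ORDER BY activity_date, z.city
    """
    return conn.execute(sql, tuple(active_event_types)).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO zones VALUES (?, ?)", [(1, "SF"), (2, "NYC")])
conn.executemany("INSERT INTO dasher_events VALUES (?, ?, ?, ?)", [
    (101, "delivery_completed", "2026-01-05 09:00:00", 1),
    (101, "delivery_completed", "2026-01-05 12:00:00", 1),  # same Dasher, counted once
    (102, "delivery_completed", "2026-01-05 13:00:00", 1),
    (103, "order_accepted", "2026-01-05 14:00:00", 1),      # accepted but never completed
    (201, "delivery_completed", "2026-01-05 18:00:00", 2),
    (101, "delivery_completed", "2026-01-06 10:00:00", 1),
])
print(daily_active_dashers(conn))
# [('2026-01-05', 'NYC', 1), ('2026-01-05', 'SF', 2), ('2026-01-06', 'SF', 1)]
print(daily_active_dashers(conn, ("delivery_completed", "order_accepted")))
# [('2026-01-05', 'NYC', 1), ('2026-01-05', 'SF', 3), ('2026-01-06', 'SF', 1)]
```

A Dasher who works in two cities on the same day is counted in both, so summing city rows can exceed the company-wide distinct count.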

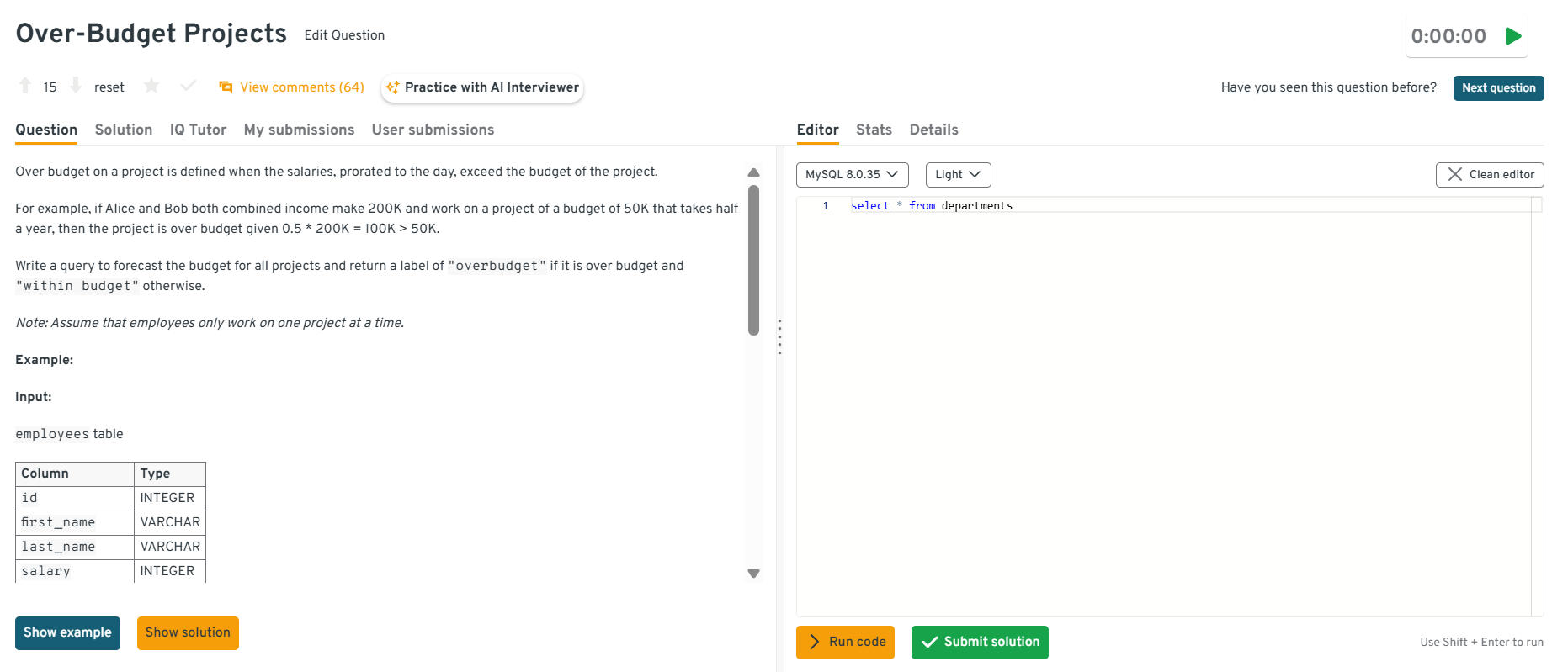

Write a query to identify which projects are at risk of going over budget.

This question tests cost modeling and conditional logic, which matter at DoorDash when evaluating efficiency across teams and initiatives. You would calculate projected cost by prorating salaries based on time allocation, aggregate costs per project, and compare them against budget thresholds using conditional statements. The important part is explaining assumptions, such as time windows and allocation logic, since finance and planning rely on these outputs.

Tip: Be explicit about assumptions and edge cases. DoorDash values engineers who surface uncertainty early rather than hiding it behind clean numbers.
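A sketch of the prorating logic, assuming hypothetical `projects`, `employees`, and `employee_projects` tables. Here `allocation` is an assumed column holding the fraction of an employee's time spent on a project, and annual salary is prorated by the project's length in days over 365. Both conventions are assumptions you would confirm with the interviewer.

```python
import sqlite3

SCHEMA = """
CREATE TABLE projects (id INTEGER PRIMARY KEY, budget REAL, start_date TEXT, end_date TEXT);
CREATE TABLE employees (id INTEGER PRIMARY KEY, salary REAL);
CREATE TABLE employee_projects (project_id INTEGER, employee_id INTEGER, allocation REAL);
"""

BUDGET_SQL = """
WITH project_cost AS (
    SELECT p.id AS project_id,
           p.budget,
           -- annual salary x share of time x (project days / 365)
           COALESCE(SUM(e.salary * ep.allocation
                        * (julianday(p.end_date) - julianday(p.start_date)) / 365.0), 0)
               AS prorated_cost
    FROM projects p
    LEFT JOIN employee_projects ep ON ep.project_id = p.id
    LEFT JOIN employees e ON e.id = ep.employee_id
    GROUP BY p.id, p.budget
)
SELECT project_id,
       CASE WHEN prorated_cost > budget THEN 'overbudget' ELSE 'within budget' END AS status
FROM project_cost
ORDER BY project_id;
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO projects VALUES (?, ?, ?, ?)", [
    (1, 150000, "2026-01-01", "2027-01-01"),  # 365 days
    (2, 25000, "2026-01-01", "2026-03-15"),   # 73 days
    (3, 1000, "2026-01-01", "2026-02-01"),    # nobody assigned yet
])
conn.executemany("INSERT INTO employees VALUES (?, ?)", [(1, 100000), (2, 120000)])
conn.executemany("INSERT INTO employee_projects VALUES (?, ?, ?)", [
    (1, 1, 1.0), (1, 2, 0.5),  # 100,000 + 60,000 = 160,000 > 150,000
    (2, 2, 0.5),               # 120,000 x 0.5 x 73 / 365 = 12,000 <= 25,000
])
print(conn.execute(BUDGET_SQL).fetchall())
# [(1, 'overbudget'), (2, 'within budget'), (3, 'within budget')]
```

The `LEFT JOIN` plus `COALESCE` keeps unstaffed projects in the output with zero cost instead of silently dropping them.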

Head to the Interview Query dashboard, which brings together the exact SQL, data modeling, pipeline reliability, debugging, and behavioral questions tested in DoorDash data engineer interviews. With built-in code execution, performance analytics, and AI-guided feedback, it helps you practice production-style problems, identify gaps in your reasoning, and sharpen how you explain trade-offs in a fast-moving marketplace context.

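Given recipes, their ingredients, your pantry, and a list of acceptable brands, write a query to find the cheapest total cost of each recipe and whether it can be made at all.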

This question tests multi-table joins, filtering logic, and optimization thinking, all common in DoorDash’s marketplace problems. You would join recipes to ingredients, filter out pantry items already available, limit to acceptable brands, compute minimum cost per ingredient, and aggregate total recipe cost. Feasibility is determined by whether all required ingredients have valid options. It mirrors how DoorDash evaluates menu availability and pricing constraints.

Tip: Explain how you ensure missing ingredients fail the recipe. This demonstrates careful handling of completeness, which is critical in production analytics.
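A sketch of the recipe-cost query, assuming hypothetical `recipe_ingredients`, `pantry`, `products`, and `acceptable_brands` tables. A `NULL` total marks a recipe that cannot be made, so a missing ingredient can never silently lower the cost. Using a `LEFT JOIN` against the pantry also avoids the `NOT IN` trap, where a single `NULL` in the subquery filters out every row.

```python
import sqlite3

SCHEMA = """
CREATE TABLE recipe_ingredients (recipe_id INTEGER, ingredient TEXT);
CREATE TABLE pantry (ingredient TEXT PRIMARY KEY);
CREATE TABLE products (ingredient TEXT, brand TEXT, price REAL);
CREATE TABLE acceptable_brands (brand TEXT PRIMARY KEY);
"""

RECIPE_COST_SQL = """
WITH cheapest AS (
    -- Cheapest acceptable-brand option for each ingredient.
    SELECT p.ingredient, MIN(p.price) AS min_price
    FROM products p
    JOIN acceptable_brands b ON b.brand = p.brand
    GROUP BY p.ingredient
)
SELECT ri.recipe_id,
       CASE
           -- Any ingredient neither in the pantry nor purchasable makes the recipe infeasible.
           WHEN SUM(CASE WHEN pa.ingredient IS NULL AND c.ingredient IS NULL THEN 1 ELSE 0 END) > 0
               THEN NULL
           ELSE COALESCE(SUM(CASE WHEN pa.ingredient IS NULL THEN c.min_price END), 0)
       END AS total_cost
FROM recipe_ingredients ri
LEFT JOIN pantry pa ON pa.ingredient = ri.ingredient
LEFT JOIN cheapest c ON c.ingredient = ri.ingredient
GROUP BY ri.recipe_id
ORDER BY ri.recipe_id;
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO recipe_ingredients VALUES (?, ?)", [
    (1, "noodles"), (1, "tomato"), (1, "salt"),   # pasta
    (2, "lettuce"), (2, "tomato"),                # salad
    (3, "salt"),                                  # everything already in the pantry
])
conn.execute("INSERT INTO pantry VALUES ('salt')")
conn.executemany("INSERT INTO acceptable_brands VALUES (?)", [("BrandA",), ("BrandB",)])
conn.executemany("INSERT INTO products VALUES (?, ?, ?)", [
    ("noodles", "BrandA", 2.0), ("noodles", "BrandB", 1.5),
    ("noodles", "BrandC", 1.0),   # cheapest, but not an acceptable brand
    ("tomato", "BrandA", 3.0),
    ("lettuce", "BrandC", 2.5),   # only unacceptable options, so the salad is infeasible
])
print(conn.execute(RECIPE_COST_SQL).fetchall())  # [(1, 4.5), (2, None), (3, 0)]
```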

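Write a query to calculate, for each user, the percentage of their orders that used their primary address.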

This question evaluates your ability to combine behavioral analysis with user-level attributes. At DoorDash, understanding address usage helps teams reason about reliability, fraud, and user behavior patterns. You would join orders to user address data, flag whether each order used the primary address, aggregate counts per user, and compute percentages. Interviewers look for clean logic and correct denominator handling.

Tip: Mention how you would validate users with multiple “primary” addresses or none at all. This shows strong data skepticism and protects downstream analyses from silent errors.
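A sketch covering both the metric and the validation step, assuming hypothetical `orders` and `user_addresses` tables in SQLite. The flag is computed per order with `EXISTS`, so duplicate address rows cannot fan out the join and inflate the numerator, and the denominator is every order the user placed. The second query surfaces users with zero or several primary addresses before anyone trusts the percentages.

```python
import sqlite3

SCHEMA = """
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, user_id INTEGER, address_id INTEGER);
CREATE TABLE user_addresses (user_id INTEGER, address_id INTEGER, is_primary INTEGER);
"""

PCT_PRIMARY_SQL = """
WITH flagged AS (
    SELECT o.user_id,
           CASE WHEN EXISTS (
               SELECT 1 FROM user_addresses ua
               WHERE ua.user_id = o.user_id
                 AND ua.address_id = o.address_id
                 AND ua.is_primary = 1
           ) THEN 1 ELSE 0 END AS used_primary
    FROM orders o
)
SELECT user_id, ROUND(100.0 * SUM(used_primary) / COUNT(*), 2) AS pct_primary
FROM flagged
GROUP BY user_id
ORDER BY user_id;
"""

# Data-quality guard: ordering users with zero or several primary addresses.
PRIMARY_ANOMALIES_SQL = """
SELECT u.user_id, COUNT(DISTINCT ua.address_id) AS primary_count
FROM (SELECT DISTINCT user_id FROM orders) u
LEFT JOIN user_addresses ua ON ua.user_id = u.user_id AND ua.is_primary = 1
GROUP BY u.user_id
HAVING COUNT(DISTINCT ua.address_id) <> 1
ORDER BY u.user_id;
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO user_addresses VALUES (?, ?, ?)", [
    (1, 10, 1), (1, 11, 0),
    (2, 20, 1), (2, 21, 0),
    (3, 30, 0),              # user 3 has no primary address
])
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (1, 1, 10), (2, 1, 10), (3, 1, 10), (4, 1, 11),
    (5, 2, 20), (6, 2, 21), (7, 2, 21),
    (8, 3, 30), (9, 3, 30),
])
print(conn.execute(PCT_PRIMARY_SQL).fetchall())  # user 1: 75%, user 2: about 33.33%, user 3: 0%
print(conn.execute(PRIMARY_ANOMALIES_SQL).fetchall())  # [(3, 0)]
```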

Watch next: Top 10+ Data Engineer Interview Questions and Answers

In this walkthrough, Interview Query founder Jay Feng breaks down core data engineering problems that closely mirror what DoorDash evaluates, including advanced SQL, distributed data systems, pipeline reliability, and practical data modeling. The examples focus on real production scenarios, helping you strengthen the exact skills DoorDash interviewers look for when assessing ownership, correctness, and system-level thinking.

For more in-depth preparation tailored to DoorDash-style interviews, explore a curated collection of 100+ data engineer interview questions with detailed answers.

Data Modeling and Schema Design Interview Questions

This section focuses on how you structure data so it remains trustworthy, flexible, and easy to use as DoorDash scales. These questions test whether you can design schemas that support analytics, experimentation, and operational use cases without creating ambiguity or downstream fragility. Interviewers are looking for clean separation of concerns, thoughtful handling of time and change, and an understanding of how modeling decisions affect real teams at DoorDash.

How would you design a data model for orders and deliveries?

This question tests whether you can model a core DoorDash domain with clarity and scalability. Orders and deliveries have a one-to-many relationship, so a strong answer separates order-level facts from delivery-level facts while sharing common dimensions like merchant, customer, geography, and time. You should explain how this model supports metrics like delivery latency, cancellation rates, and historical trend analysis without double counting or confusion.

Tip: Explicitly explain which metrics live at the order level versus the delivery level. This shows strong domain modeling judgment and prevents downstream analytics errors.
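A minimal sketch of the two-grain model in SQLite, assuming hypothetical `fct_orders` and `fct_deliveries` tables that share a merchant dimension, and assuming an order can have more than one delivery row (for example, a re-attempt). It shows why order-level measures should be read from the order table: joining to the delivery grain first double counts order 1's subtotal, and it drops the cancelled order that never reached dispatch.

```python
import sqlite3

# Hypothetical star schema: order grain and delivery grain in separate fact tables.
SCHEMA = """
CREATE TABLE dim_merchant (merchant_id INTEGER PRIMARY KEY, merchant_name TEXT);
CREATE TABLE fct_orders (
    order_id INTEGER PRIMARY KEY, merchant_id INTEGER REFERENCES dim_merchant (merchant_id),
    customer_id INTEGER, created_at TEXT, subtotal REAL, is_cancelled INTEGER
);
CREATE TABLE fct_deliveries (
    delivery_id INTEGER PRIMARY KEY, order_id INTEGER REFERENCES fct_orders (order_id),
    dasher_id INTEGER, picked_up_at TEXT, delivered_at TEXT
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO dim_merchant VALUES (1, 'Taqueria')")
conn.executemany("INSERT INTO fct_orders VALUES (?, ?, ?, ?, ?, ?)", [
    (1, 1, 501, "2026-01-05 09:50:00", 20.0, 0),
    (2, 1, 502, "2026-01-05 11:50:00", 30.0, 0),
    (3, 1, 503, "2026-01-05 12:10:00", 15.0, 1),  # cancelled, never dispatched
])
conn.executemany("INSERT INTO fct_deliveries VALUES (?, ?, ?, ?, ?)", [
    (1, 1, 901, "2026-01-05 10:00:00", "2026-01-05 10:20:00"),
    (2, 1, 902, "2026-01-05 11:00:00", "2026-01-05 11:10:00"),  # second attempt, same order
    (3, 2, 903, "2026-01-05 12:00:00", "2026-01-05 12:30:00"),
])

# Order-level metrics come from fct_orders alone.
subtotal = conn.execute("SELECT SUM(subtotal) FROM fct_orders WHERE is_cancelled = 0").fetchone()[0]
cancel_rate = conn.execute("SELECT AVG(is_cancelled) FROM fct_orders").fetchone()[0]

# Delivery-level metrics come from fct_deliveries alone (minutes from pickup to drop-off).
avg_minutes = conn.execute(
    """SELECT ROUND(AVG((julianday(delivered_at) - julianday(picked_up_at)) * 1440), 1)
       FROM fct_deliveries"""
).fetchone()[0]

# Anti-pattern: summing an order-level measure after joining down to the delivery grain.
double_counted = conn.execute(
    """SELECT SUM(o.subtotal) FROM fct_orders o
       JOIN fct_deliveries d ON d.order_id = o.order_id
       WHERE o.is_cancelled = 0"""
).fetchone()[0]

print(subtotal, double_counted, avg_minutes)  # 50.0 70.0 20.0
```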

How would you design a schema to capture clickstream events across the app?

This question evaluates your ability to design high-volume event schemas. DoorDash relies on clickstream-style data to understand user behavior across app surfaces. A solid answer describes an append-only events table with clear event types, timestamps, user identifiers, and contextual attributes, paired with derived session or page-level tables. You should also mention how to balance flexibility with schema consistency.

Tip: Call out how you would version events over time. This demonstrates foresight and protects analytics when product instrumentation evolves.
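A sketch of an append-only event record plus one derived table, assuming a hypothetical `ClickEvent` shape with an explicit `schema_version` field and a 30-minute inactivity rule for sessions (a common convention, not a documented DoorDash one). In production this logic would usually run in the warehouse or a stream processor; plain Python just keeps the idea visible.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class ClickEvent:
    """One row of a hypothetical append-only events table."""
    event_id: str
    user_id: str
    event_type: str        # e.g. "page_view", "add_to_cart"
    event_ts: datetime
    schema_version: int    # bump when the event's property contract changes
    properties: Dict[str, str] = field(default_factory=dict)


SESSION_GAP = timedelta(minutes=30)  # assumed inactivity threshold


def sessionize(events: List[ClickEvent]) -> Dict[str, List[List[str]]]:
    """Split each user's events into sessions wherever the gap exceeds SESSION_GAP."""
    sessions: Dict[str, List[List[str]]] = {}
    last_seen: Dict[str, datetime] = {}
    for ev in sorted(events, key=lambda e: (e.user_id, e.event_ts)):
        user_sessions = sessions.setdefault(ev.user_id, [])
        prev = last_seen.get(ev.user_id)
        if prev is None or ev.event_ts - prev > SESSION_GAP:
            user_sessions.append([])  # open a new session
        user_sessions[-1].append(ev.event_id)
        last_seen[ev.user_id] = ev.event_ts
    return sessions


t0 = datetime(2026, 1, 5, 10, 0)
events = [
    ClickEvent("e1", "u1", "page_view", t0, 2),
    ClickEvent("e2", "u1", "add_to_cart", t0 + timedelta(minutes=10), 2, {"item_id": "42"}),
    ClickEvent("e3", "u1", "page_view", t0 + timedelta(minutes=50), 2),
    ClickEvent("e4", "u2", "page_view", t0 + timedelta(minutes=5), 1),
]
print(sessionize(events))
# {'u1': [['e1', 'e2'], ['e3']], 'u2': [['e4']]}
```

Carrying `schema_version` on every row lets downstream models branch on instrumentation changes instead of guessing from which properties happen to be present.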

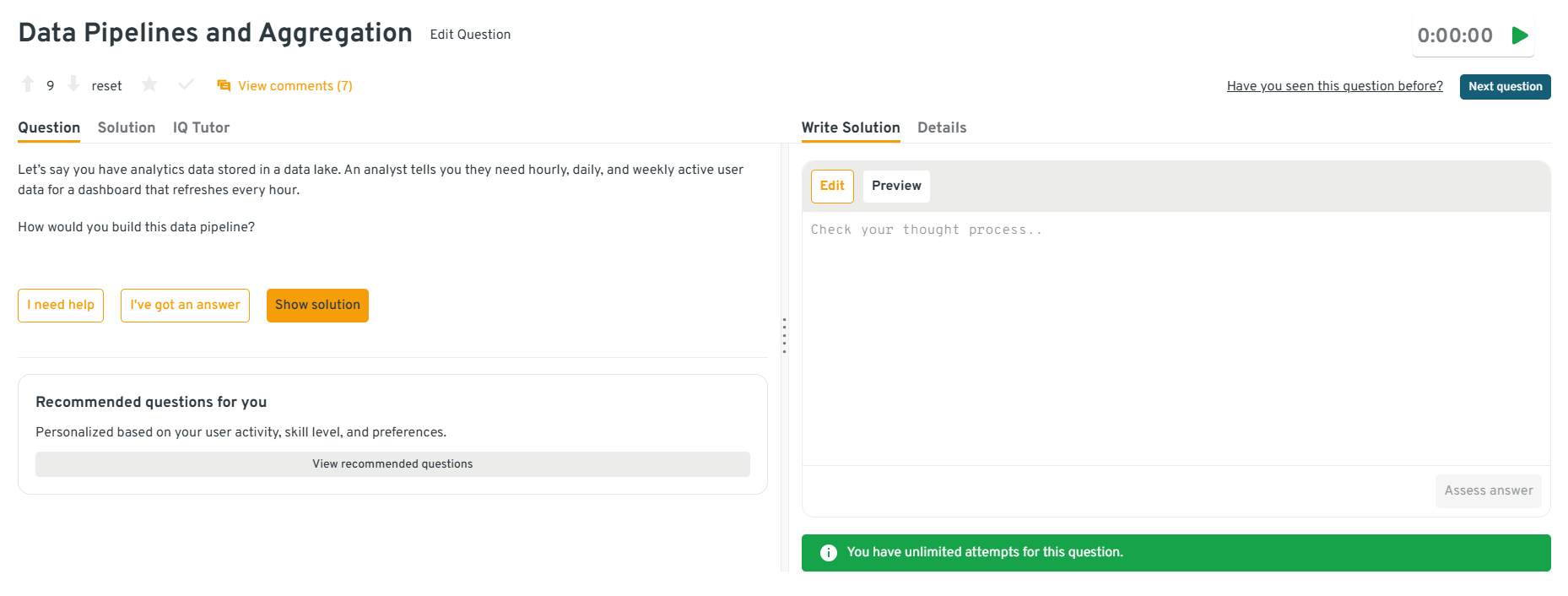

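How would you build the pipeline and summary tables behind a dashboard of daily, weekly, and monthly active users?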

This question tests end-to-end system thinking across modeling and orchestration. You should explain how raw events are filtered into activity signals, aggregated at multiple grains, and stored in summary tables that support fast dashboard queries. Interviewers want to hear how you avoid recomputation, manage late data, and ensure consistent definitions across time windows.

Tip: Emphasize idempotent aggregations. This signals reliability awareness and reduces the risk of silently corrupted metrics.
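A minimal sketch of an idempotent daily aggregation, assuming hypothetical `raw_events` and `daily_active_users` tables in SQLite. Each run deletes and rewrites one date partition inside a single transaction, so reruns and late-data backfills converge to the same result instead of double counting. Which event types count as active is an assumption.

```python
import sqlite3
from datetime import date, timedelta

SCHEMA = """
CREATE TABLE raw_events (user_id INTEGER, event_type TEXT, event_ts TEXT);
CREATE TABLE daily_active_users (
    activity_date TEXT, user_id INTEGER,
    PRIMARY KEY (activity_date, user_id)
);
"""
ACTIVE_EVENT_TYPES = ("app_open", "order_placed")  # assumed definition of "active"


def rebuild_day(conn, day):
    """Overwrite one date partition from raw events; safe to rerun."""
    placeholders = ", ".join("?" for _ in ACTIVE_EVENT_TYPES)
    with conn:  # delete + insert commit together or not at all
        conn.execute("DELETE FROM daily_active_users WHERE activity_date = ?", (day,))
        conn.execute(
            f"""INSERT INTO daily_active_users (activity_date, user_id)
                SELECT DISTINCT DATE(event_ts), user_id FROM raw_events
                WHERE DATE(event_ts) = ? AND event_type IN ({placeholders})""",
            (day, *ACTIVE_EVENT_TYPES),
        )


def active_users(conn, as_of, window_days):
    """Distinct users active in the window ending on as_of (1 = daily, 7 = weekly, ...)."""
    start = (date.fromisoformat(as_of) - timedelta(days=window_days - 1)).isoformat()
    return conn.execute(
        """SELECT COUNT(DISTINCT user_id) FROM daily_active_users
           WHERE activity_date BETWEEN ? AND ?""",
        (start, as_of),
    ).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", [
    (1, "app_open", "2026-01-01 09:00:00"),
    (1, "app_open", "2026-01-01 11:00:00"),
    (2, "order_placed", "2026-01-01 10:00:00"),
    (3, "app_open", "2026-01-07 08:00:00"),
    (4, "push_received", "2026-01-07 09:00:00"),  # not an "active" event
])
for day in ("2026-01-01", "2026-01-01", "2026-01-07"):  # note the deliberate rerun
    rebuild_day(conn, day)
print(active_users(conn, "2026-01-07", 1), active_users(conn, "2026-01-07", 7))  # 1 3

# A late event for Jan 1 arrives; rebuilding only that partition picks it up.
conn.execute("INSERT INTO raw_events VALUES (5, 'order_placed', '2026-01-01 23:59:00')")
rebuild_day(conn, "2026-01-01")
print(active_users(conn, "2026-01-07", 7))  # 4
```

Longer windows are computed from the small daily table rather than from raw events, which avoids recomputation and keeps the daily, weekly, and monthly numbers on one shared definition.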

How would you design a schema to track changes to customer addresses over time?

This question evaluates temporal modeling skills. At DoorDash, address history matters for delivery accuracy, fraud prevention, and customer experience. A strong answer explains using effective start and end timestamps to track address validity over time rather than overwriting records. You should describe how this supports point-in-time analysis and avoids retroactive data changes.

Tip: Explain why overwriting addresses breaks historical analysis. This shows you understand long-term data integrity, not just schema mechanics.
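A minimal sketch of the effective-dated approach (often called a Type 2 slowly changing dimension), assuming a hypothetical `customer_address_history` table in SQLite. Each change closes the current row and appends a new one, and validity intervals are half-open, `[valid_from, valid_to)`, so a point-in-time lookup matches exactly one row.

```python
import sqlite3

SCHEMA = """
CREATE TABLE customer_address_history (
    customer_id INTEGER,
    address     TEXT,
    valid_from  TEXT NOT NULL,
    valid_to    TEXT           -- NULL marks the current row
);
"""


def set_address(conn, customer_id, address, effective_at):
    """Close the open row and append a new one; history is never overwritten."""
    with conn:
        conn.execute(
            """UPDATE customer_address_history SET valid_to = ?
               WHERE customer_id = ? AND valid_to IS NULL""",
            (effective_at, customer_id),
        )
        conn.execute(
            "INSERT INTO customer_address_history VALUES (?, ?, ?, NULL)",
            (customer_id, address, effective_at),
        )


def address_as_of(conn, customer_id, ts):
    """Point-in-time lookup over half-open intervals [valid_from, valid_to)."""
    row = conn.execute(
        """SELECT address FROM customer_address_history
           WHERE customer_id = ? AND valid_from <= ?
             AND (valid_to IS NULL OR valid_to > ?)""",
        (customer_id, ts, ts),
    ).fetchone()
    return row[0] if row else None


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
set_address(conn, 1, "1 Main St", "2025-01-01 00:00:00")
set_address(conn, 1, "9 Oak Ave", "2025-06-01 00:00:00")
print(address_as_of(conn, 1, "2025-03-01 00:00:00"))  # 1 Main St
print(address_as_of(conn, 1, "2025-07-01 00:00:00"))  # 9 Oak Ave
```

An order placed in March still resolves to the address that was valid in March, which is exactly what an in-place overwrite would have destroyed.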

How would you design a table to support experimentation analysis?

This question tests experimentation literacy and data correctness. A strong answer describes a stable assignment table keyed by user or entity, experiment identifiers, variant assignment, and assignment timestamp. You should explain how this table joins cleanly to event data and avoids issues like reassignment or metric leakage that invalidate experiment results.

Tip: Call out safeguards against multiple active assignments per user. This demonstrates discipline around experimentation accuracy, which DoorDash relies on heavily for product decisions.
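A sketch of an assignment table that enforces one row per unit per experiment through its primary key, assuming hypothetical `experiment_assignments` and `order_events` tables in SQLite (the experiment ID below is illustrative). `INSERT OR IGNORE` keeps the first assignment sticky, and the metric join only counts events at or after assignment, so pre-exposure behavior does not leak into the comparison.

```python
import sqlite3

SCHEMA = """
CREATE TABLE experiment_assignments (
    experiment_id TEXT, unit_id INTEGER, variant TEXT, assigned_at TEXT,
    PRIMARY KEY (experiment_id, unit_id)   -- one assignment per unit per experiment
);
CREATE TABLE order_events (unit_id INTEGER, event_ts TEXT, order_value REAL);
"""


def assign(conn, experiment_id, unit_id, variant, assigned_at):
    """Sticky assignment: later attempts to reassign the same unit are ignored."""
    with conn:
        conn.execute(
            "INSERT OR IGNORE INTO experiment_assignments VALUES (?, ?, ?, ?)",
            (experiment_id, unit_id, variant, assigned_at),
        )


def metric_by_variant(conn, experiment_id):
    """Units and order value per variant, counting only post-assignment events."""
    return conn.execute(
        """SELECT a.variant, COUNT(DISTINCT a.unit_id), COALESCE(SUM(e.order_value), 0)
           FROM experiment_assignments a
           LEFT JOIN order_events e
             ON e.unit_id = a.unit_id AND e.event_ts >= a.assigned_at
           WHERE a.experiment_id = ?
           GROUP BY a.variant
           ORDER BY a.variant""",
        (experiment_id,),
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
assign(conn, "eta_copy_v2", 1, "control", "2026-01-05 10:00:00")
assign(conn, "eta_copy_v2", 1, "treatment", "2026-01-05 11:00:00")  # ignored
assign(conn, "eta_copy_v2", 2, "treatment", "2026-01-05 10:00:00")
conn.executemany("INSERT INTO order_events VALUES (?, ?, ?)", [
    (1, "2026-01-05 09:00:00", 50.0),  # before assignment: excluded
    (1, "2026-01-05 12:00:00", 20.0),
    (2, "2026-01-05 12:00:00", 30.0),
    (2, "2026-01-05 13:00:00", 10.0),
])
print(metric_by_variant(conn, "eta_copy_v2"))
# [('control', 1, 20.0), ('treatment', 1, 40.0)]
```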

Want to master the full data engineering lifecycle? Explore our Data Engineering 50 learning path to practice a curated set of data engineering questions designed to strengthen your modeling, coding, and system design skills.

Pipeline Debugging and Data Reliability Interview Questions

This part of the DoorDash data engineer interview tests how you handle real production pressure: broken metrics, risky schema changes, opaque systems, and noisy data. The common theme is operational judgment. Interviewers want to see that you can debug methodically, protect downstream consumers, and make changes safely on large datasets without creating outages or eroding trust in data.

A dashboard metric suddenly drops after a pipeline change. How do you investigate?

This question tests whether you debug like a production owner, not a one-off analyst. At DoorDash, a sudden drop might trigger operational decisions or product rollbacks, so you need a disciplined workflow. Start by confirming the drop is real with a quick sanity query, then check freshness and job status, compare row counts and key distributions before and after the change, and isolate where the divergence begins in the lineage. Finally, validate upstream sources and confirm whether logic changes, filtering, or joins caused the shift.

Tip: Narrate your triage order and what you would communicate at each step. This shows incident ownership and builds interviewer confidence that you can protect data trust under pressure.
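The step of isolating where the divergence begins can be as simple as comparing row counts stage by stage. This pure-Python sketch assumes you have already collected counts for each lineage stage before and after the change; the stage names and the 5% threshold are hypothetical. It returns the first stage that moved by more than the threshold.

```python
from typing import Dict, List, Optional


def first_divergent_stage(
    stages: List[str],
    before: Dict[str, int],
    after: Dict[str, int],
    threshold: float = 0.05,
) -> Optional[str]:
    """Walk the lineage from upstream to downstream and return the first stage
    whose row count changed by more than `threshold` (relative) after the change."""
    for stage in stages:
        b, a = before[stage], after[stage]
        if b == 0:
            if a != 0:
                return stage
            continue
        if abs(a - b) / b > threshold:
            return stage
    return None


# Hypothetical lineage for the metric, ordered upstream -> downstream.
stages = ["raw_orders", "stg_orders", "orders_joined_dashers", "daily_metric"]
before = {"raw_orders": 1000, "stg_orders": 990, "orders_joined_dashers": 980, "daily_metric": 980}
after = {"raw_orders": 1000, "stg_orders": 985, "orders_joined_dashers": 700, "daily_metric": 700}
print(first_divergent_stage(stages, before, after))  # orders_joined_dashers
```

When the drop first appears at a join stage, as here, it points you toward join keys or a newly added filter rather than toward ingestion.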

How would you add a new column to a very large production table and backfill it without downtime?

This question tests safe change management at scale, which is critical when DoorDash tables feed dashboards, experimentation, and downstream pipelines. A strong answer explains using a non-blocking schema change, adding the column as nullable (or with a default) first, and backfilling incrementally in partitions or batches to control load. You should also call out verification queries, dual-writing if needed, and a phased rollout where consumers read the new column only after coverage is complete.

Tip: Emphasize incremental backfills with monitoring and rollback plans. This demonstrates production judgment and performance awareness, two traits DoorDash expects from senior data owners.
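A minimal sketch of the add-then-backfill pattern on SQLite, assuming a hypothetical `deliveries` table with a `distance_m` column and a new nullable `distance_km` column. Each batch updates a bounded primary-key range in its own short transaction, and the `IS NULL` filter means a rerun after a failure resumes rather than redoes work. DDL and locking behavior differ across production databases and warehouses, so treat this as the shape of the approach, not engine-specific guidance.

```python
import sqlite3


def add_and_backfill(conn, batch_size=1000):
    """Add a nullable column, backfill it in key-range batches, then verify coverage."""
    # 1. Additive, nullable column: existing readers and writers keep working.
    conn.execute("ALTER TABLE deliveries ADD COLUMN distance_km REAL")
    max_id = conn.execute("SELECT COALESCE(MAX(delivery_id), 0) FROM deliveries").fetchone()[0]
    # 2. Backfill one bounded range per transaction to limit lock time and load.
    for start in range(1, max_id + 1, batch_size):
        with conn:
            conn.execute(
                """UPDATE deliveries SET distance_km = distance_m / 1000.0
                   WHERE delivery_id BETWEEN ? AND ? AND distance_km IS NULL""",
                (start, start + batch_size - 1),
            )
    # 3. Verification query before any consumer switches to the new column.
    return conn.execute(
        """SELECT COUNT(*) FROM deliveries
           WHERE distance_m IS NOT NULL AND distance_km IS NULL"""
    ).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE deliveries (delivery_id INTEGER PRIMARY KEY, distance_m INTEGER)")
with conn:
    conn.executemany(
        "INSERT INTO deliveries VALUES (?, ?)", [(i, i * 10) for i in range(1, 2501)]
    )
remaining = add_and_backfill(conn, batch_size=1000)
print(remaining)  # 0
```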

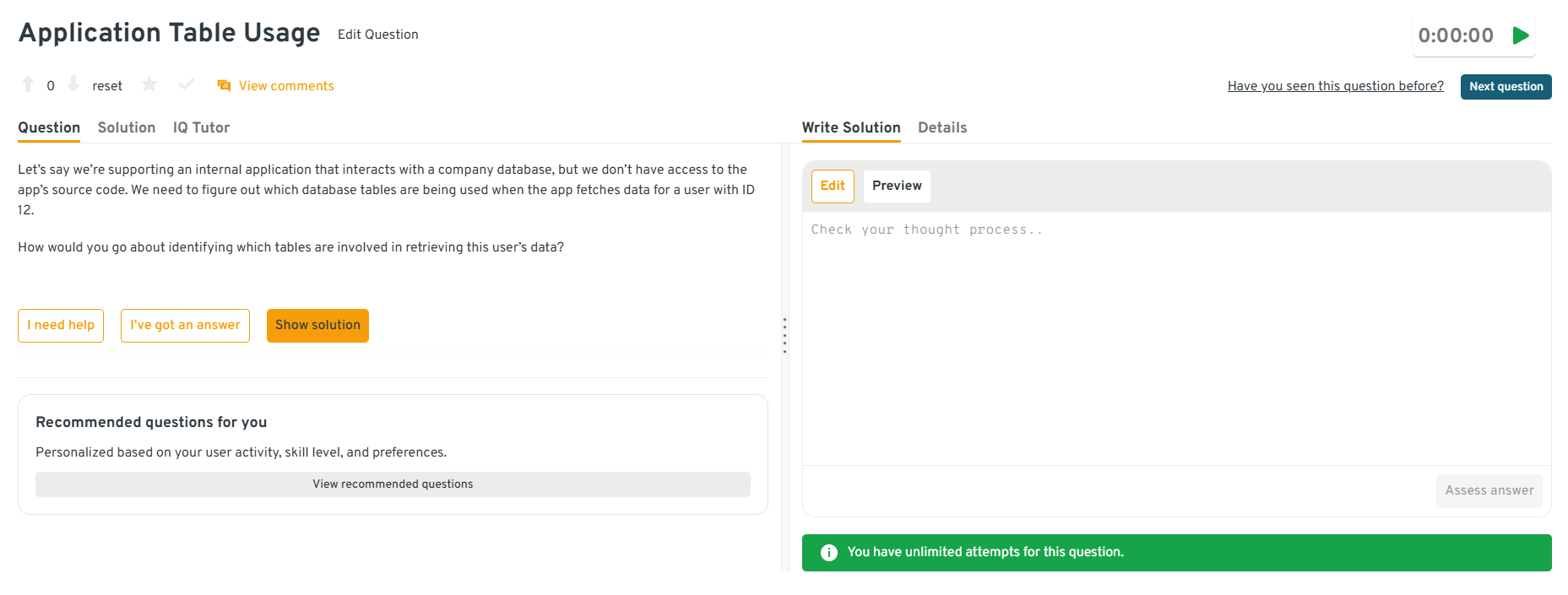

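An application is running queries against your database, but you cannot see its source code. How would you identify which queries it executes?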

This question evaluates your ability to debug in a messy real-world environment, which is common at DoorDash when systems are owned by different teams. You should explain using query logs, database audit logs, or warehouse query history to filter by the application’s user, role, or connection signature, then tracing queries executed around the time the request happens. If logs are limited, you can use network tracing at the database layer or temporarily tag requests through controlled test accounts to identify query patterns.

Tip: Mention how you would reproduce the request with a controlled user and time window. This shows investigative rigor and reduces guesswork, which is exactly what keeps incidents from dragging on.
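Once you can export the query history, grouping by a normalized fingerprint makes an application's query patterns stand out. This sketch assumes a hypothetical `QueryLogRecord` shape (service account, start time, SQL text); real audit logs and warehouse query-history views each have their own schemas. The literal-stripping regexes are deliberately simple and will not cover every SQL dialect.

```python
import re
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable


@dataclass
class QueryLogRecord:
    """Hypothetical shape of one query-history or audit-log row."""
    db_user: str
    started_at: datetime
    sql_text: str


def fingerprint(sql: str) -> str:
    """Normalize a query so calls that differ only in literal values group together."""
    sql = re.sub(r"'(?:[^']|'')*'", "?", sql)     # string literals
    sql = re.sub(r"\b\d+(?:\.\d+)?\b", "?", sql)   # numeric literals
    return re.sub(r"\s+", " ", sql).strip().lower()


def queries_for_app(records: Iterable[QueryLogRecord], db_user: str,
                    start: datetime, end: datetime) -> Counter:
    """Count query patterns issued by one service account inside a time window."""
    return Counter(
        fingerprint(r.sql_text)
        for r in records
        if r.db_user == db_user and start <= r.started_at <= end
    )


def t(hh: int, mm: int, ss: int = 0) -> datetime:
    return datetime(2026, 1, 5, hh, mm, ss)


log = [
    QueryLogRecord("svc_checkout", t(10, 0, 1), "SELECT * FROM orders WHERE id = 42"),
    QueryLogRecord("svc_checkout", t(10, 0, 2), "select *  from orders where id = 7"),
    QueryLogRecord("svc_checkout", t(10, 0, 3), "UPDATE carts SET status = 'open' WHERE user_id = 9"),
    QueryLogRecord("analyst_jane", t(10, 0, 2), "SELECT COUNT(*) FROM orders"),
    QueryLogRecord("svc_checkout", t(11, 0), "SELECT 1"),  # outside the window
]
for pattern, n in queries_for_app(log, "svc_checkout", t(10, 0), t(10, 5)).most_common():
    print(n, pattern)
```

Pairing this with a controlled test request in a narrow time window, as the tip suggests, turns a noisy log into a short list of candidate queries.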

How do you monitor data quality in production pipelines?

This question tests preventative thinking because DoorDash cannot afford silent metric drift. A strong answer covers freshness checks, volume and distribution monitoring, null and uniqueness constraints, schema change detection, and business-rule validations such as impossible timestamps or invalid status transitions. You should also explain alert routing, severity tiers, and how you tune thresholds to avoid alert fatigue while still catching real issues early.

Tip: Tie each check to who it protects, like analytics, ops, or experimentation. This shows you think like an owner of downstream trust, not just a builder of pipelines.
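A minimal sketch of three of those checks (freshness, null rate, and invalid status transitions) over an in-memory list of order-status rows. The status state machine, the one-hour staleness limit, and the severity labels are all hypothetical; in practice these checks usually run as SQL assertions or through a data-quality framework on the warehouse.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

# Hypothetical order-status state machine.
ALLOWED_TRANSITIONS = {
    "created": {"assigned", "cancelled"},
    "assigned": {"picked_up", "cancelled"},
    "picked_up": {"delivered"},
}


def is_fresh(rows: List[Dict], now: datetime, max_staleness: timedelta) -> bool:
    latest = max((r["updated_at"] for r in rows), default=None)
    return latest is not None and now - latest <= max_staleness


def null_rate(rows: List[Dict], column: str) -> float:
    return sum(r.get(column) is None for r in rows) / len(rows) if rows else 0.0


def invalid_transitions(rows: List[Dict]) -> List[Tuple]:
    by_order = defaultdict(list)
    for r in rows:
        by_order[r["order_id"]].append(r)
    bad = []
    for order_id, history in by_order.items():
        history.sort(key=lambda r: r["updated_at"])
        for prev, cur in zip(history, history[1:]):
            if cur["status"] not in ALLOWED_TRANSITIONS.get(prev["status"], set()):
                bad.append((order_id, prev["status"], cur["status"]))
    return bad


def run_checks(rows, now, max_staleness=timedelta(hours=1)) -> List[Tuple[str, str]]:
    """Return (severity, message) pairs; severity decides who gets alerted."""
    failures = []
    if not is_fresh(rows, now, max_staleness):
        failures.append(("high", "stale data"))
    if null_rate(rows, "order_id") > 0:
        failures.append(("high", "null order_id"))
    for order_id, frm, to in invalid_transitions(rows):
        failures.append(("medium", f"order {order_id}: {frm} -> {to}"))
    return failures


def row(order_id, status, hh, mm):
    return {"order_id": order_id, "status": status, "updated_at": datetime(2026, 1, 5, hh, mm)}


rows = [
    row(1, "created", 11, 0), row(1, "assigned", 11, 5),
    row(1, "picked_up", 11, 20), row(1, "delivered", 11, 40),
    row(2, "created", 11, 10), row(2, "delivered", 11, 30),  # skipped assignment
]
print(run_checks(rows, now=datetime(2026, 1, 5, 12, 0)))
# [('medium', 'order 2: created -> delivered')]
```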

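Given raw address records and a city-to-state lookup table, how would you produce complete, standardized addresses?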

This question looks simple, but at DoorDash it maps to real issues like delivery accuracy, geocoding consistency, and fraud signals. You should describe parsing and normalizing inputs, joining against a trusted city–state reference, handling missing or ambiguous mappings, and producing a consistent output format. A strong answer also mentions defensive handling of casing, whitespace, and invalid postal codes, plus logging or surfacing errors instead of silently generating bad addresses.

Tip: Call out how you would handle ambiguous city names and missing zips. This shows data robustness and attention to edge cases, which DoorDash relies on for reliable logistics outcomes.
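A sketch of the normalize, look up, and surface-errors flow in plain Python, assuming input strings shaped like `street, city, zip` and a hypothetical city-to-states reference keyed by lower-cased city name. Cities that map to more than one state are reported as ambiguous instead of being guessed, and malformed ZIP codes are rejected rather than passed through.

```python
import re
from typing import Dict, List, Set, Tuple

ZIP_RE = re.compile(r"^\d{5}(?:-\d{4})?$")  # US ZIP or ZIP+4


def complete_addresses(
    raw: List[str], city_states: Dict[str, Set[str]]
) -> Tuple[List[str], List[Tuple[str, str]]]:
    """Turn 'street, city, zip' strings into 'Street, City, ST zip'.
    Rows that cannot be completed are returned as errors instead of guessed."""
    completed: List[str] = []
    errors: List[Tuple[str, str]] = []
    for line in raw:
        parts = [p.strip() for p in line.split(",")]
        if len(parts) != 3:
            errors.append((line, "expected 'street, city, zip'"))
            continue
        street, city, zip_code = parts
        city_key = " ".join(city.lower().split())  # normalize case and inner whitespace
        if not ZIP_RE.match(zip_code):
            errors.append((line, "invalid zip"))
            continue
        states = city_states.get(city_key, set())
        if len(states) != 1:
            errors.append((line, "ambiguous city" if states else "unknown city"))
            continue
        (state,) = states
        completed.append(f"{street}, {city_key.title()}, {state} {zip_code}")
    return completed, errors


# Hypothetical reference table keyed by lower-cased city name.
city_states = {"san francisco": {"CA"}, "austin": {"TX"}, "portland": {"OR", "ME"}}
raw = [
    "123 Market St,  san  francisco , 94105",
    "1 Main St, Portland, 97201",
    "9 Elm St, Austin, 7870",
    "5 Oak St, Springfield, 12345",
    "bad row",
]
completed, errors = complete_addresses(raw, city_states)
print(completed)  # ['123 Market St, San Francisco, CA 94105']
for line, reason in errors:
    print(reason, "|", line)
```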

Behavioral Interview Questions

This section evaluates how you operate as a long-term owner of data systems at DoorDash. Interviewers look for evidence of accountability, clear communication under pressure, and the ability to balance speed with correctness while working across teams. Strong answers show ownership beyond execution and demonstrate how you influence outcomes when things are ambiguous or high-stakes.

Tell me about a time you owned a critical pipeline end to end.

This question assesses whether you take responsibility for design, delivery, and reliability, not just implementation. Interviewers want to see how you handled tradeoffs, incidents, and long-term maintenance.

Sample answer: I owned a core orders aggregation pipeline used by analytics and ops teams. I designed the schema, implemented validation checks, and set up monitoring. When a late-arriving events issue caused undercounting, I paused downstream refreshes, communicated the impact, and rolled out an incremental backfill. Afterward, I added freshness alerts and documented failure modes, which reduced incidents by over thirty percent.

Tip: Emphasize how you protected downstream users during failures. This shows ownership and production judgment, two traits DoorDash values deeply.

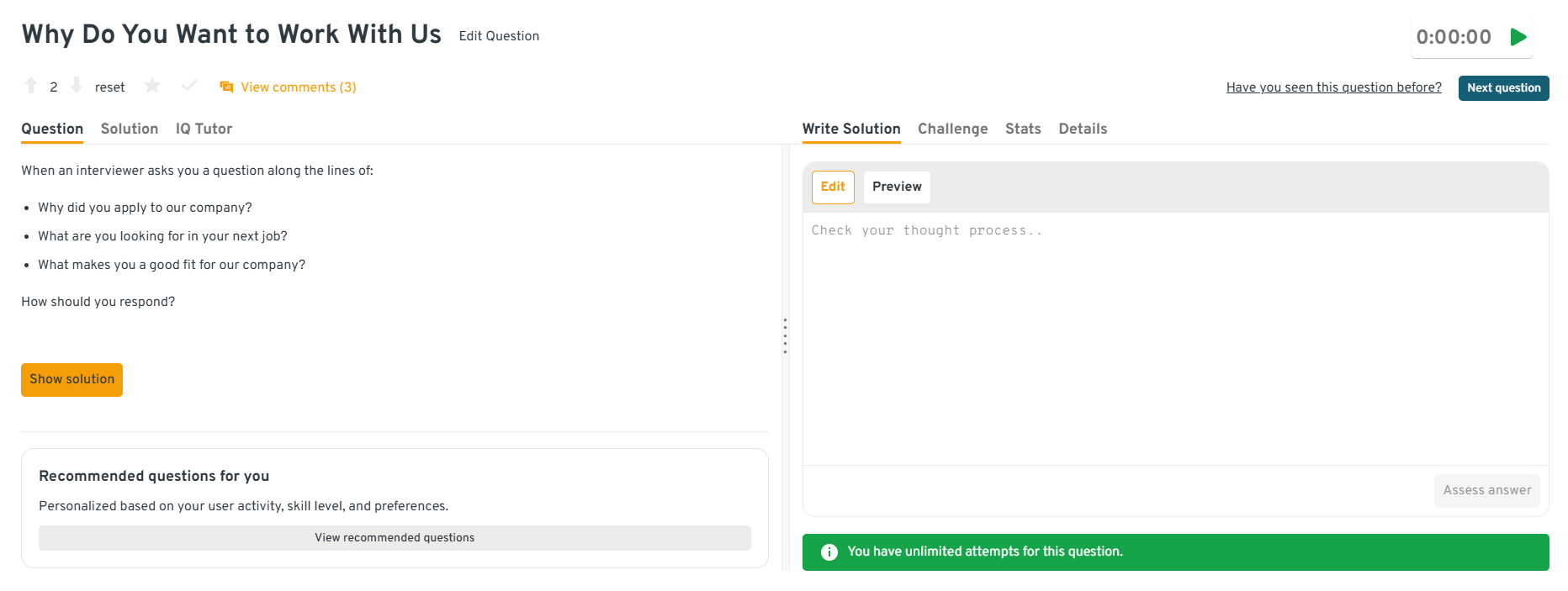

What makes you a good fit for the DoorDash data engineer position?

This question evaluates alignment with DoorDash’s operating style and expectations. Interviewers want to hear how your skills and mindset match the company’s emphasis on ownership, impact, and reliability.

Sample answer: My background is building analytics pipelines that directly support operational decisions. In my last role, I partnered closely with product and ops teams, owned data quality end to end, and prioritized reliability over quick fixes. That approach led to more trusted metrics and faster decision-making, which aligns well with DoorDash’s marketplace-driven environment.

Tip: Tie your strengths to ownership and impact, not just tools. This signals long-term fit rather than short-term technical match.

Describe a time you pushed back on a stakeholder request.

This question tests how you protect data quality while maintaining strong relationships. Interviewers want to see respectful pushback grounded in reasoning, not rigidity.

Sample answer: A stakeholder asked for a metric change that would have mixed incompatible data sources. I explained the risk using concrete examples, proposed an alternative definition, and walked through the tradeoffs. We agreed on a phased approach that met their timeline without compromising accuracy, and the metric was later adopted as the standard.

Tip: Show how you aligned on outcomes, not positions. This demonstrates influence and the ability to safeguard data without slowing teams down.

Tell me about a time you had trouble communicating with stakeholders. How did you overcome it?

This question assesses your ability to adapt communication styles and rebuild trust. DoorDash engineers frequently work with partners who have different technical depth and priorities.

Sample answer: I once shared pipeline metrics that stakeholders found confusing and hard to act on. I scheduled a follow-up, asked what decisions they needed to make, and reframed the data around those questions. After simplifying the visuals and adding clear definitions, adoption improved and the metrics were used in weekly planning.

Tip: Focus on how you adapted your approach. This shows empathy and communication maturity, which are essential for cross-functional ownership.

How do you prioritize multiple pipeline requests from different teams?

This question evaluates decision-making under pressure. Interviewers want to see how you balance urgency, impact, and risk while keeping stakeholders aligned.

Sample answer: I prioritize by evaluating business impact, data risk, and effort. When requests conflict, I make tradeoffs explicit, share timelines transparently, and align on sequencing. In one case, this prevented a low-impact request from delaying a reliability fix that affected multiple teams.

Tip: Explain how you make priorities visible. This signals leadership and builds trust in your decisions, which is critical at DoorDash scale.

Practicing data engineering questions like these on the Interview Query dashboard and articulating your reasoning clearly is one of the most effective ways to prepare for the DoorDash data engineer interview. Focus on explaining why you made decisions, not just what you built, since that is where strong candidates consistently stand out.

What Does a DoorDash Data Engineer Do?

A DoorDash data engineer builds and maintains the data systems that power real-time delivery logistics, marketplace analytics, experimentation, and machine learning across the platform. The role sits at the intersection of high-volume event data, operational workflows, and analytical decision-making, supporting everything from delivery time estimates to merchant performance reporting. Data engineers at DoorDash design reliable pipelines and data models that make raw order, Dasher, and consumer data usable for analytics, product, and operations teams operating at marketplace scale.

| What They Work On | Core Skills Used | Tools and Methods | Why It Matters at DoorDash |
|---|---|---|---|
| Order and delivery pipelines | Data modeling, SQL optimization, pipeline reliability | Batch and streaming ETL, data validation | Powers accurate delivery metrics and customer-facing estimates |
| Marketplace analytics data | Dimensional modeling, aggregation logic | Fact and dimension tables, transformation layers | Enables teams to measure conversion, supply, and demand health |
| Experimentation data support | Event design, data consistency, backfills | Experiment logging, metric definitions | Ensures product experiments can be trusted and acted on |
| Operational reporting | Data freshness, latency monitoring | Scheduling, alerting, data quality checks | Supports real-time decisions for logistics and support teams |
| Downstream ML readiness | Schema design, data completeness | Feature tables, historical snapshots | Feeds machine learning models with clean, reliable inputs |

Tip: DoorDash interviewers look for engineers who think beyond building pipelines. When you describe your work, explain how you ensured data correctness under failure, late events, or schema changes, and how those decisions protected business metrics. This shows strong production judgment and ownership, which are critical signals for success at DoorDash.

How to Prepare for a DoorDash Data Engineer Interview

Preparing for the DoorDash data engineer interview requires more than practicing isolated SQL queries or reviewing generic data pipeline patterns. You are preparing for a role that supports real-time logistics, marketplace experimentation, and operational decision-making across a fast-moving three-sided marketplace. Strong candidates demonstrate not only technical competence, but also production judgment, data ownership, and the ability to design systems that remain reliable as business requirements evolve. Below is a focused framework to help you prepare with intention.

Read more: How to Prepare for a Data Engineer Interview

Develop strong intuition for marketplace data flows: DoorDash data systems ingest events from consumers, Dashers, merchants, and internal services. Spend time understanding how these signals relate, where latency is introduced, and how state changes propagate across systems. Practice mapping business events to data events before thinking about implementation.

Tip: Be ready to explain how a single order moves through multiple tables and pipelines. This shows systems thinking and an understanding of real production data lifecycles.

Practice designing for data correctness, not just throughput: DoorDash values engineers who protect metric integrity under imperfect conditions. Review strategies for handling late events, partial failures, schema evolution, and reprocessing historical data without breaking downstream consumers.

Tip: Call out failure scenarios proactively in your designs. This signals ownership and reliability awareness, which are critical traits for production data engineers.

Strengthen your ability to communicate tradeoffs clearly: Many interview questions are designed to surface how you reason about cost, freshness, and complexity. Practice explaining why you would choose one design over another and what you give up with each decision.

Tip: Frame tradeoffs in terms of downstream impact. This demonstrates business alignment and earns trust from interviewers.

Prepare structured walkthroughs of past data engineering work: DoorDash interviewers often ask you to dive deep into previous projects. Rehearse explaining the problem context, the data challenges involved, the decisions you made, and how you validated success after launch.

Tip: Emphasize what you monitored post-launch and how you handled issues. This shows maturity and long-term ownership.

Simulate full interview loops under realistic conditions: Practice moving between SQL, design, debugging, and behavioral discussions in a single session. This helps you maintain clarity and energy across different problem types.

Use Interview Query’s mock interviews and coaching sessions to practice DoorDash-style scenarios with targeted feedback.

Tip: After each mock, identify one explanation you could make simpler. Clear communication is often the biggest differentiator at this stage.

Looking for hands-on problem-solving? Test your skills with real-world challenges from top companies. They are ideal for sharpening your thinking before interviews and showcasing your problem-solving ability.

Average DoorDash Data Engineer Salary

DoorDash’s compensation framework is designed to reward engineers who can build reliable data systems, support real-time decision-making, and scale analytics across a high-volume marketplace. Data engineers receive a mix of competitive base salary, annual cash bonus, and meaningful equity through restricted stock units. Your total compensation depends on level, scope, location, and the complexity of the systems you own. Most candidates interviewing for data engineering roles at DoorDash fall into mid-level or senior bands, especially if they have experience supporting analytics, experimentation, or production pipelines at scale.

Tip: Confirm your target level with your recruiter early. At DoorDash, level alignment directly determines scope expectations and can shift total compensation significantly.

| Level | Role Title | Total Compensation (USD) | Base Salary | Bonus | Equity (RSUs) | Signing / Relocation |
|---|---|---|---|---|---|---|
| L3 | Data Engineer I | $140K – $180K | $120K – $145K | Performance-based | Standard RSUs | Rare |
| L4 | Data Engineer II | $170K – $225K | $140K – $165K | Performance-based | RSUs included | Case by case |
| L5 | Senior Data Engineer | $200K – $275K | $155K – $185K | Above target possible | Larger RSU grants | Common |
| L6 | Staff Data Engineer | $250K – $350K+ | $175K – $210K | High-performer bonuses | High RSU grants + refreshers | Frequently offered |

Note: These estimates are aggregated from data on Levels.fyi, Glassdoor, TeamBlind, public job postings, and Interview Query’s internal salary database.

Tip: Pay attention to equity weighting at senior levels. At DoorDash, stock becomes a larger portion of compensation after vesting begins in year two.

Negotiation Tips That Work for DoorDash

Negotiating compensation at DoorDash is most effective when you understand how leveling, scope, and market benchmarks interact. Recruiters expect candidates to ask thoughtful questions and anchor discussions in data rather than speculation.

- Confirm your level early: DoorDash leveling from L4 to L5 often represents a meaningful jump in scope and compensation. Clarifying level alignment upfront prevents misaligned expectations late in the process.

- Use verified benchmarks and communicate impact: Anchor conversations with sources like Levels.fyi and Interview Query's salary data. Frame your value around ownership, reliability improvements, or enabling faster decision-making at scale.

- Account for location and team scope: Compensation can vary across San Francisco, Seattle, New York, and remote roles. Ask for location-specific bands and understand how a team's charter affects leveling.

Tip: Ask for a full compensation breakdown, including base salary, bonus target, equity vesting schedule, and refresh cadence. This shows financial maturity and helps you evaluate long-term value, not just year-one pay.

FAQs

How long does the DoorDash data engineer interview process usually take?

Most candidates complete the process in three to five weeks. Timelines can extend if multiple teams are reviewing your profile or if scheduling onsite interviews takes longer. Recruiters typically share clear next steps after each round.

Does DoorDash include a take-home assignment for data engineers?

Some teams include a take-home exercise, but many roles rely entirely on live SQL, design, and debugging interviews. When a take-home is used, it focuses on realistic data modeling or pipeline reasoning rather than long implementations.

How deep do SQL questions go in DoorDash interviews?

SQL is a core skill, and questions often go well beyond the basics. Expect multi-join queries, window functions, time-based logic, and edge-case handling that mirror real marketplace analytics and operational metrics.
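This is a hypothetical question of the kind described above. For each dasher's completed deliveries, compute the delivery duration and the idle gap since that dasher's previous completed delivery. The `deliveries` table, its columns, and the sample rows are invented for this sketch. It runs the SQL through Python's built-in `sqlite3` module so it is self-contained. It assumes a bundled SQLite of version 3.25 or later, which is needed for window functions such as `LAG`.

```python
import sqlite3

# Hypothetical schema and toy rows, invented for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE deliveries (
    delivery_id  INTEGER PRIMARY KEY,
    dasher_id    INTEGER,
    created_at   TEXT,   -- ISO-8601 timestamp
    delivered_at TEXT    -- NULL if the delivery was cancelled
);
INSERT INTO deliveries VALUES
    (1, 10, '2026-01-05 11:00:00', '2026-01-05 11:32:00'),
    (2, 10, '2026-01-05 12:10:00', '2026-01-05 12:38:00'),
    (3, 10, '2026-01-05 18:00:00', NULL),
    (4, 20, '2026-01-05 11:15:00', '2026-01-05 12:05:00'),
    (5, 20, '2026-01-06 09:00:00', '2026-01-06 09:20:00');
""")

# For each completed delivery: its duration in minutes, plus the gap in minutes
# since the same dasher's previous completed delivery (via LAG over a window).
QUERY = """
WITH completed AS (
    SELECT delivery_id, dasher_id, created_at, delivered_at
    FROM deliveries
    WHERE delivered_at IS NOT NULL   -- edge case: exclude cancelled orders
)
SELECT
    delivery_id,
    dasher_id,
    -- julianday() returns fractional days; * 1440 converts to minutes
    CAST(ROUND((julianday(delivered_at) - julianday(created_at)) * 1440) AS INTEGER)
        AS duration_min,
    CAST(ROUND((julianday(created_at)
                - julianday(LAG(delivered_at) OVER (
                      PARTITION BY dasher_id ORDER BY created_at))) * 1440) AS INTEGER)
        AS idle_min_since_prev   -- NULL for each dasher's first delivery
FROM completed
ORDER BY dasher_id, created_at;
"""

rows = conn.execute(QUERY).fetchall()
for row in rows:
    print(row)
```

The `WHERE delivered_at IS NOT NULL` filter is the kind of edge case interviewers tend to probe. Without it, a cancelled delivery would appear with a NULL duration. The next delivery's idle gap would also become NULL, because `LAG` would return the cancelled row's missing `delivered_at`.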

Is streaming experience required for a DoorDash data engineer role?

Streaming experience is helpful but not mandatory. Interviewers care more about whether you understand freshness requirements, latency tradeoffs, and how to design reliable systems, regardless of whether they are batch or streaming.

What level of system design is expected for mid-level versus senior candidates?

Mid-level candidates are expected to reason clearly about schemas and pipelines, while senior candidates must demonstrate ownership, scalability thinking, and failure handling. Scope and depth increase significantly at senior levels.

How important is business context during technical interviews?

Very important. DoorDash interviewers look for engineers who connect technical decisions to downstream impact on analytics, experimentation, and operations rather than treating problems as isolated exercises.

Do DoorDash data engineers work closely with analytics and product teams?

Yes. The role is highly cross-functional. Strong candidates show they can collaborate with analysts and product managers, define metrics clearly, and protect data quality that drives business decisions.

Can candidates be evaluated for multiple teams at DoorDash?

Yes. If your skill set aligns with more than one team, recruiters may explore multiple options during the process. Sharing your team interests early leads to better team matching.

Become a DoorDash Data Engineer with Interview Query

Preparing for the DoorDash data engineer interview means building strong SQL fundamentals, developing sound data modeling and pipeline design instincts, and learning how to operate confidently in a real-time marketplace environment. By understanding DoorDash’s interview structure, practicing real-world SQL, working through ETL and reliability scenarios, and sharpening how you communicate tradeoffs, you can approach each stage of the process with clarity and confidence. For targeted preparation, explore the full Interview Query question bank, practice with the AI Interviewer, or work with a mentor through Interview Query’s Coaching Program to refine your approach and position yourself to stand out in DoorDash’s data engineering hiring process.