DoorDash Data Analyst Interview: Analytics Exercise, Case Study & BI Engineer Prep

Introduction

Breaking into DoorDash’s data team means more than just writing SQL—it means influencing how millions of deliveries happen every day. If you’re prepping for the DoorDash data analyst interview, this guide covers everything from the interview process to the types of analytics questions you’ll face.

Role Overview & Culture

DoorDash Data Analysts transform raw order, Dasher, and customer data into insights that drive product features, logistics efficiency, and marketplace health. The role sits inside a culture that values rapid experimentation, ownership, and data-driven decision-making. Whether you’re analyzing delivery time outliers or A/B testing promotions, the DoorDash data analyst position calls for strong collaboration and fast iteration.

Why This Role at DoorDash?

As an analyst, you’ll have the chance to directly influence pricing, promotions, and new vertical launches. With DoorDash’s rapid growth, analysts are trusted with high-impact work from day one—backed by strong compensation packages and a clear promotion ladder. Read on to see how the DoorDash data analyst interview process is structured—and how you can stand out.

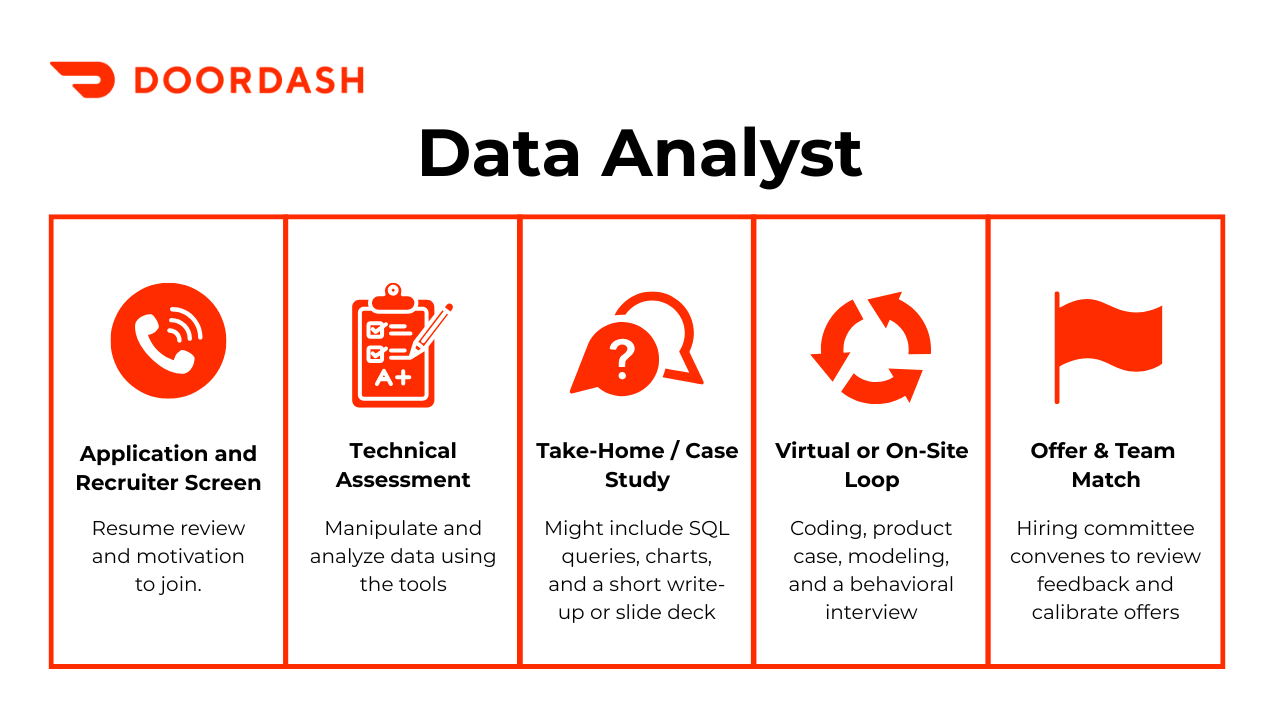

What Is the Interview Process Like for a Data Analyst Role at DoorDash?

Getting hired as a DoorDash Data Analyst involves a multi-stage interview process that balances SQL rigor with business intuition. Here’s what to expect at each stage.

Recruiter Screen

This is a quick conversation focused on resume alignment, your motivation for applying, and your overall fit for the DoorDash data analyst role. Come prepared to explain your past projects and why you’re excited about marketplace analytics.

Technical Screen

Next comes a 30-minute SQL assessment and basic statistics check. You’ll be tested on core data manipulation skills, often pulled from actual DoorDash data tables. Practice solving DoorDash SQL interview questions in under 20 minutes to simulate real pacing.

Take-Home Analytics Exercise

In this round, you’ll work on a DoorDash analytics exercise that usually involves metric deep-dives. The prompt may ask you to identify trends in Dasher reliability or evaluate experiment outcomes. You’ll synthesize your findings in a short slide deck and present your thinking.

Virtual On-Site Loop

This is a full interview loop covering live SQL coding, an analytics case, and a behavioral round. The case may resemble a DoorDash analytics case study and test how you frame metrics and handle ambiguous problems. This is the core of the DoorDash data analyst interview process.

Hiring Committee

After the loop, your packet is reviewed and benchmarked across candidates. A bar-raiser is involved in evaluating consistency, and decisions are typically finalized within a week.

Behind the Scenes

DoorDash prioritizes fast feedback—most interviewers submit evaluations within 24 hours. For more senior roles, you might also be asked to present a strategic business case with scenario planning and deeper forecasting logic.

What Questions Are Asked in a DoorDash Data Analyst Interview?

Expect a blend of SQL execution, business judgment, and storytelling. Each question is designed to evaluate how you translate raw data into insights that matter.

SQL / Data Manipulation Questions

In this section of the interview, you’ll be given sample DoorDash tables—such as orders, customers, dashers, or menus—and asked to perform complex joins, window functions, or funnel calculations. Common DoorDash SQL interview questions require slicing data across time, geography, or customer cohorts. These DoorDash SQL questions often evaluate both accuracy and query speed.

-

DoorDash uses this prompt to see if you know sampling tricks that avoid a full table scan. Good answers compare TABLESAMPLE SYSTEM blocks, ID-range randomization with sparse retries, and hashing approaches that leverage existing indexes. You should mention trade-offs in randomness quality, read amplification, and how techniques like reservoir sampling or Bernoulli filters keep latency predictable even when the table lives on a sharded warehouse.

-

The exercise checks mastery of window functions: partition by the truncated date, order by created_at DESC, then filter on ROW_NUMBER = 1. Strong explanations cover time-zone safety, why an index on (created_at DESC) prevents memory spills, and how such logic translates to DoorDash payment or refund tables that refresh in near real time.

Which customers placed more than three transactions in both 2019 and 2020?

This query combines conditional aggregation with set intersection, a pattern analysts reuse when segmenting power users. Interviewers expect a CTE that tallies yearly order counts, pivots them into columns, and filters where both exceed three. Bringing up incremental ETL tables or bitmap indexes shows you understand performance on production-scale fact data.
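The CTE-and-filter pattern described above can be sketched as follows. This is a minimal illustration, not DoorDash's actual schema: the `transactions` table, its columns, and the sample rows are all assumptions, and SQLite (via Python's `sqlite3`) stands in for a production warehouse.

```python
import sqlite3

# Hypothetical schema: transactions(id, customer_id, created_at).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, created_at TEXT)"
)

rows = []
# Customer 1: four orders in each year (qualifies).
rows += [(1, f"2019-0{m}-15") for m in range(1, 5)]
rows += [(1, f"2020-0{m}-15") for m in range(1, 5)]
# Customer 2: four orders in 2019 but only two in 2020 (does not qualify).
rows += [(2, f"2019-0{m}-10") for m in range(1, 5)]
rows += [(2, f"2020-0{m}-10") for m in range(1, 3)]
# Customer 3: exactly three per year, which is not "more than three".
rows += [(3, f"2019-0{m}-20") for m in range(1, 4)]
rows += [(3, f"2020-0{m}-20") for m in range(1, 4)]
conn.executemany(
    "INSERT INTO transactions (customer_id, created_at) VALUES (?, ?)", rows
)

# Tally yearly counts as columns (conditional aggregation), then keep
# customers whose counts exceed three in both years.
QUERY = """
WITH yearly AS (
    SELECT
        customer_id,
        SUM(CASE WHEN strftime('%Y', created_at) = '2019' THEN 1 ELSE 0 END) AS orders_2019,
        SUM(CASE WHEN strftime('%Y', created_at) = '2020' THEN 1 ELSE 0 END) AS orders_2020
    FROM transactions
    GROUP BY customer_id
)
SELECT customer_id
FROM yearly
WHERE orders_2019 > 3 AND orders_2020 > 3
ORDER BY customer_id
"""

qualifying_customers = [row[0] for row in conn.execute(QUERY)]
print(qualifying_customers)
```

Pivoting both years into columns of one CTE keeps the intersection logic in a single WHERE clause instead of a self-join or INTERSECT.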

-

Candidates should reach for DENSE_RANK() partitioned by department and filter on rank ≤ 3. A crisp explanation touches on why you sort salary DESC inside the partition, how null salaries might distort rankings, and how the result informs compensation-equity dashboards.

-

The problem tests grouping, binning, and null-handling: left join January activity to the full user list, default nulls to zero, group by bucket, and order ascending. DoorDash values that you discuss scalability (pre-aggregating daily comment counts) and why a class-interval-one histogram highlights heavy-comment outliers for community-health teams.
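A rough sketch of the left-join-and-bucket approach described above follows. The `users` and `comments` tables, the choice of January 2020 as the window, and the sample rows are assumptions made for illustration only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users (id INTEGER PRIMARY KEY);
CREATE TABLE comments (user_id INTEGER, created_at TEXT);
INSERT INTO users (id) VALUES (1), (2), (3), (4);
INSERT INTO comments (user_id, created_at) VALUES
    (1, '2020-01-03'), (1, '2020-01-09'),
    (2, '2020-01-15'),
    (3, '2020-02-01'),
    (4, '2020-01-20'), (4, '2020-01-21');
""")

# The date filter sits in the ON clause so users with no January comments
# survive the LEFT JOIN; COUNT(c.user_id) then yields 0 for them.
QUERY = """
WITH per_user AS (
    SELECT u.id, COUNT(c.user_id) AS comment_count
    FROM users AS u
    LEFT JOIN comments AS c
        ON c.user_id = u.id
       AND c.created_at >= '2020-01-01'
       AND c.created_at <  '2020-02-01'
    GROUP BY u.id
)
SELECT comment_count, COUNT(*) AS frequency
FROM per_user
GROUP BY comment_count
ORDER BY comment_count
"""

histogram = conn.execute(QUERY).fetchall()
for comment_count, frequency in histogram:
    print(comment_count, frequency)
```

Putting the date range in the WHERE clause instead would silently drop zero-comment users, which is the null-handling mistake this kind of question tends to expose.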

What SQL returns the second-highest salary in Engineering when multiple people tie for first?

Use DENSE_RANK() over Engineering salaries ordered DESC and filter on rank = 2. Interviewers want to hear why LIMIT 1 OFFSET 1 fails under ties and how an index on (department, salary DESC) speeds the ranking scan.

How many customers were “upsold” (bought a new product on a later day than their first purchase)?

A solid solution finds each user’s first-purchase date via a window, then counts users with purchases on strictly later dates. Explain why same-day multi-SKU orders don’t qualify, and note performance tricks—date partitions or a materialized first-purchase dimension—that keep billion-row scans quick.
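The window-based approach above might look like the sketch below. The `purchases` table and its rows are hypothetical. Following the answer as written, the query counts users with any purchase on a strictly later date and does not separately check that the later product differs from earlier ones.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE purchases (user_id INTEGER, product_id INTEGER, purchased_at TEXT);
INSERT INTO purchases VALUES
    (1, 10, '2023-03-01'), (1, 11, '2023-03-01'),  -- same-day multi-SKU only
    (2, 10, '2023-03-01'), (2, 12, '2023-03-05'),  -- later-day purchase
    (3, 13, '2023-03-02'),                         -- single purchase
    (4, 10, '2023-03-02'), (4, 11, '2023-03-02'), (4, 14, '2023-04-01');
""")

# Attach each user's first purchase date to every row with a window MIN,
# then count users who have at least one row on a strictly later date.
QUERY = """
WITH dated AS (
    SELECT
        user_id,
        DATE(purchased_at) AS purchase_date,
        MIN(DATE(purchased_at)) OVER (PARTITION BY user_id) AS first_purchase_date
    FROM purchases
)
SELECT COUNT(DISTINCT user_id)
FROM dated
WHERE purchase_date > first_purchase_date
"""

upsold_customers = conn.execute(QUERY).fetchone()[0]
print(upsold_customers)
```

The strict inequality is what excludes user 1, whose two SKUs were bought on the same day.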

-

This question probes ordered window logic: use LAG(title) within each user’s timeline, filter for the specified title pair, count, and divide by total users. Mentioning edge cases such as overlapping employment dates or missing end dates shows attention to real-world résumé data quirks.

How would you find the top five product pairs most frequently purchased together by the same user?

The task blends self-joins and aggregation: generate all distinct item pairs per order, aggregate counts, alphabetize (p1, p2) to treat “A B” like “B A,” then order by frequency and limit to five. Discuss how a GROUPING SETS or Spark job might be required when the transactions fact table exceeds a billion rows.

Write a cumulative distribution of comments-per-user (bucket size = 1) across the entire platform.

You first build a frequency table of comments per user, then apply SUM(freq) OVER (ORDER BY bucket) to produce the CDF. Interviewers look for clarity on why cumulative percentages aid product insights (e.g., identifying the comment “power law”) and how you’d surface the 90th-percentile commentator threshold for moderation tooling.
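The two steps above, a frequency table followed by a running sum, can be sketched like this. The `users` and `comments` tables and their contents are assumed for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users (id INTEGER PRIMARY KEY);
CREATE TABLE comments (user_id INTEGER);
INSERT INTO users (id) VALUES (1), (2), (3), (4), (5);
INSERT INTO comments (user_id) VALUES (1), (2), (2), (3), (3), (4), (4), (4);
""")

# per_user: comments per user (0 for silent users).
# frequency_table: how many users fall in each bucket of size 1.
# The window SUM then accumulates frequencies in bucket order.
QUERY = """
WITH per_user AS (
    SELECT u.id, COUNT(c.user_id) AS bucket
    FROM users AS u
    LEFT JOIN comments AS c ON c.user_id = u.id
    GROUP BY u.id
),
frequency_table AS (
    SELECT bucket, COUNT(*) AS freq
    FROM per_user
    GROUP BY bucket
)
SELECT bucket, freq, SUM(freq) OVER (ORDER BY bucket) AS cumulative_users
FROM frequency_table
ORDER BY bucket
"""

cdf_rows = conn.execute(QUERY).fetchall()
total_users = cdf_rows[-1][2]
# Convert cumulative counts to cumulative shares of all users.
cdf = [(bucket, cumulative / total_users) for bucket, _, cumulative in cdf_rows]
print(cdf)
```

Reading off the first bucket whose cumulative share reaches 0.9 gives the 90th-percentile threshold mentioned above.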

Analytics Case Study & Business Metrics

Many candidates receive a DoorDash analytics case study focused on a real problem: for example, how to measure retention, analyze promotions, or detect declining user cohorts. These interviews test your ability to structure problems, select metrics, and deliver clear recommendations. Be ready to explain trade-offs in metric choice and run mock A/B test walkthroughs.

-

Lay out a workflow that first deseasonalizes the data (e.g., STL decomposition) so you aren’t fooled by predictable holiday spikes. Next, construct month-to-month deltas and model them as a stationary series; a two-sided t-test or Bayesian credible interval on the latest delta tells you if it exceeds natural variance. Mention safeguards such as Bonferroni adjustment when inspecting many months and control-chart alarms to automate ongoing significance checks.
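As a simplified sketch of the control-chart idea above, the function below flags the latest month-over-month delta when it falls more than a chosen number of standard deviations from the historical deltas. It assumes the input series has already been deseasonalized. The function name, the threshold of 3, and the sample figures are illustrative assumptions, and a z-score rule is a stand-in for the formal t-test or Bayesian interval.

```python
from statistics import mean, stdev


def latest_delta_is_unusual(monthly_values, z_threshold=3.0):
    """Return True if the newest month-over-month change is an outlier
    relative to the earlier changes (simple control-chart rule)."""
    deltas = [b - a for a, b in zip(monthly_values, monthly_values[1:])]
    history, latest = deltas[:-1], deltas[-1]
    if len(history) < 2:
        raise ValueError("need at least four monthly values to estimate variance")
    sigma = stdev(history)
    if sigma == 0:
        # No historical variation: any departure from the mean is unusual.
        return latest != mean(history)
    z_score = (latest - mean(history)) / sigma
    return abs(z_score) > z_threshold


# Hypothetical deseasonalized monthly totals.
stable = [100, 102, 101, 103, 102, 104, 103, 105]
spiking = [100, 102, 101, 103, 102, 104, 103, 130]

stable_flag = latest_delta_is_unusual(stable)
spike_flag = latest_delta_is_unusual(spiking)
print(stable_flag, spike_flag)
```

When the same rule is run on many months at once, the threshold should be tightened (for example with a Bonferroni-style adjustment) to keep the false-alarm rate in check.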

-

Explain that the margin of error in simple random sampling shrinks with the square root of n. Going from 3% to 0.3% is a tenfold reduction, which requires 10² = 100× the sample size. You therefore need 100n observations in total, or 99n more than you already have. Highlight that analysts must balance the cost of extra data against the practical benefit of tighter confidence bands.
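Written out, the scaling argument looks like this, where the subscripts "old" and "new" are labels introduced here for the current and target sample sizes:

```latex
\[
\mathrm{ME} \propto \frac{1}{\sqrt{n}}
\quad\Longrightarrow\quad
\frac{\mathrm{ME}_{\text{old}}}{\mathrm{ME}_{\text{new}}}
  = \sqrt{\frac{n_{\text{new}}}{n_{\text{old}}}}
\]
\[
n_{\text{new}} = n_{\text{old}} \left(\frac{3\%}{0.3\%}\right)^{2} = 100\, n_{\text{old}},
\qquad
n_{\text{new}} - n_{\text{old}} = 99\, n_{\text{old}}
\]
```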

-

Start with cohorting users by top merchants, then compute card-centric KPIs—frequency, average ticket size, and share-of-wallet shift after an existing partnership launch. Build an uplift model to forecast incremental GMV and interchange revenue under different reward scenarios. Operational filters (merchant brand fit, customer overlap, risk profile) narrow the shortlist; a financial scorecard ranks remaining candidates, ensuring the chosen partner boosts both spend and loyalty.

-

Propose a difference-in-differences analysis: compare treated new users to a pre-redesign cohort matched on acquisition channel and seasonality. Incorporate time-series regression with controls for promo spend and site changes. If instrumentation allows, run a hold-back test on a small slice of new users to isolate causal impact prospectively. Only after isolating confounders should you credit the redesign for the 3-point bump.
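The core difference-in-differences arithmetic is small enough to sketch directly. The conversion rates below are invented for illustration, and the control group is assumed to be a comparable cohort that did not see the redesign (for example, a hold-back slice).

```python
def diff_in_diff(treated_pre, treated_post, control_pre, control_post):
    """Change in the treated group minus change in the control group."""
    return (treated_post - treated_pre) - (control_post - control_pre)


# Hypothetical conversion rates in percentage points.
# Treated users rose 3 points, but the control group also rose 1.5 points
# over the same window (seasonality, promo spend, and so on).
effect = diff_in_diff(
    treated_pre=40.0,
    treated_post=43.0,
    control_pre=40.0,
    control_post=41.5,
)
print(effect)
```

In this made-up case only half of the raw bump would be attributable to the redesign, which is why the control trend has to be subtracted before crediting the change.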

-

Use observational causal-impact methods: create a synthetic control group from similar companies that didn’t receive many messages and track divergence in hire rate or time-to-fill. Complement that with an interrupted time-series analysis at platform level and matched-pairs propensity scoring at candidate level. Triangulating these views helps separate feature effect from macro hiring trends.

Running a D2C socks store, which business-health metrics belong on your executive dashboard?

Highlight a balanced scorecard: revenue and AOV, new-to-repeat purchase ratio, contribution margin after shipping, inventory turn, CAC vs. lifetime value, on-time-delivery rate, refund percentage, and NPS. Each metric links daily ops to long-term profitability—insight the analytics team must surface for quick decision-making.

-

Show that the median exceeds 3 only when at least two draws exceed 3. The probability a single draw is > 3 is 1⁄4, so the probability of exactly two successes is 3 × (¼)² × (¾) = 9⁄64, and the probability of all three successes is (¼)³ = 1⁄64. Summing yields 9⁄64 + 1⁄64 = 10⁄64 = 5⁄32 ≈ 0.156. Walking through the combinatorics demonstrates comfort with order-statistic reasoning common in delivery-time percentile analysis.
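The binomial sum can be checked with exact fractions. The sketch assumes, as the answer states, three independent draws that each exceed 3 with probability 1⁄4; the function name is illustrative.

```python
from fractions import Fraction
from math import comb


def prob_median_exceeds(p_single, draws=3):
    """Probability that the median of an odd number of independent draws
    exceeds a threshold, given each draw exceeds it with p_single."""
    majority = draws // 2 + 1  # the median exceeds iff a majority of draws do
    return sum(
        comb(draws, k) * p_single**k * (1 - p_single) ** (draws - k)
        for k in range(majority, draws + 1)
    )


p_median = prob_median_exceeds(Fraction(1, 4))
print(p_median, float(p_median))
```

Using `Fraction` keeps the result exact, so the individual terms can be compared against the hand calculation without rounding noise.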

-

Model the process: true-win probability on first flip is 0.5. For those who flip tails first (0.5), a second heads occurs with 0.5 and triggers a lie, adding 0.25 apparent winners who are actual losers. Let x be actual winners; apparent winners = x + 0.25 × (100 − x). Setting that equal to 30 gives 0.75x = 5, so x ≈ 6.7, or roughly 7 actual winners. Illustrating hidden-bias correction mirrors real-world fraud-detection adjustments DoorDash analysts make when reported events include noisy or strategic behavior.

Segment & Marketplace Analysis

DoorDash serves different cities, cuisines, and verticals. You may be asked to perform a DoorDash segment analysis using dimensional breakdowns—like time-of-day, geography, or restaurant partner type. Strong answers tie observations to strategic recommendations.

Which U.S. cities generated the highest incremental order growth after DoorDash introduced grocery delivery, and what share of that growth came from entirely new customers versus existing restaurant-only users?

Interviewers want to see you slice the orders fact table by vertical and city, calculate month-over-month deltas, and create two cohorts: first-time DoorDash buyers and repeat buyers branching into grocery. A crisp explanation should end with a recommendation, e.g., “Denver and Austin show the strongest net-new demand; marketing dollars there will expand TAM rather than cannibalize restaurant spend.”

During late-night hours (10 p.m.–4 a.m.), which cuisine categories experience the greatest conversion-rate drop-off from “menu view” to “checkout,” and how would you act on that insight?

Start by computing a funnel conversion metric for each (cuisine, hour) slice. Point out that wings and pizza retain high late-night conversion while poke bowls plummet, possibly due to stock-outs or prep-time anxiety. Recommend surfacing ready-made meal options or surge-pricing incentives for kitchens willing to remain open.

How does average delivery distance vary across suburban versus urban zones, and what does that imply for driver scheduling?

A solid answer joins order ZIP codes to census-based urbanicity flags, then plots distance distributions. If suburban orders average 2 mi longer, you’d justify assigning more dashers with larger vehicles and adjusting pay multipliers to maintain ETA parity.

Which restaurant partner tiers (local independent, regional chain, national chain) contribute the highest margin per order after accounting for promo subsidies?

Compute margin as delivery_fee + service_fee - incentive_cost - courier_pay. Break down by partner_tier, rank in descending order, and discuss portfolio mix: maybe independents generate lower margin but boost selection diversity (critical for customer retention), so cuts can’t rely on margin alone.

After DoorDash launched alcohol delivery in select states, how did basket size and tip percentage change among existing users versus brand-new users?

You would identify states in the pilot, define pre-/post-launch windows, and build difference-in-differences tables for basket_value and tip_pct. If existing users’ baskets grew 25% but tips stayed flat, consider nudging tip suggestions higher; if new users over-index on alcohol-only orders, propose cross-selling food bundles.

Which hour-of-day and day-of-week combinations have the highest rate of order cancellations, and what operational levers could reduce them?

Analysts should pivot cancellation counts over total orders by (dow, hour). If Sunday evenings spike due to football traffic bottlenecks, recommend pre-scheduling more dashers or dynamically throttling marketing promos during peak windows.

Compare courier on-time arrival rates for apartment buildings versus single-family houses across the top 20 metros. Where is the gap largest, and what data would you surface to product teams?

Join delivery addresses to a dwelling-type classifier, then calculate on_time = (driver_arrival ≤ promised_eta). Explain that high-rise buildings in New York show a 9 pp lag; suggest in-app PIN codes, lobby photo guidance, or batching algorithms that pair two nearby high-rise deliveries.

If DoorDash introduces a “DashMart Flash” 15-minute convenience offering, how would you forecast demand by neighborhood before launch?

Outline a predictive model that blends historical late-night convenience orders, population density, and distance to the nearest DashMart warehouse. Present how you’d segment neighborhoods into high, medium, and low expected volume and stage inventory accordingly.

Behavioral & Cross-Functional Collaboration

Analysts work closely with PMs, engineers, and operations. Your interviewer will ask about past examples of working with stakeholders and communicating insights. Good stories reflect DoorDash’s culture of ownership and urgency. Use these moments to reference past projects that resemble DoorDash analytics work.

-

Strong answers follow a clear arc: scope, obstacles (e.g., missing fields, shifting KPIs, stakeholder churn), actions you took to unblock progress, and the quantifiable result—such as reducing report latency or driving a feature launch. DoorDash values resilience and bias-for-action, so highlight pragmatic trade-offs (quick wins vs. perfect models) and any process improvements you institutionalized after the project wrapped.

How have you made complex data or analyses more accessible to non-technical partners?

Explain specific tactics—interactive dashboards with plain-language tooltips, “data bites” newsletters, or workshop sessions that teach stakeholders to pull their own cuts. Emphasize measurable impact (fewer follow-up questions, faster decision cycles) and how translating insights into business terms aligns with DoorDash’s fast-moving operating cadence.

-

Pick strengths that map to DoorDash’s needs—say, deep SQL tuning or storytelling with data—and support them with concrete wins. Choose one genuine growth area (perhaps delegating or prioritizing) and describe the system you’ve adopted—weekly retros, peer reviews—to show continuous self-development without undermining credibility.

Tell us about a time you struggled to communicate with stakeholders. How did you close the gap?

A compelling story starts with mis-alignment (e.g., ops leaders misinterpreting a retention metric), details your diagnostic steps, and ends with the tools you used—reframed metrics, prototypes, recurring syncs—that rebuilt trust. Highlight how improved communication accelerated deliverables or unlocked an initiative.

Why do you want to work at DoorDash, and why in a data-analytics capacity?

Connect personal motivation—optimizing local commerce or logistics efficiency—to DoorDash’s mission. Then link your past dashboarding, funnel-analysis, or cohort-segmentation work to the posted role, finishing with how the company’s values (“one team, one fight,” “bias for action”) align with your work style.

Describe a situation where you had to juggle an urgent ad-hoc request while owning a long-term analytics initiative. How did you prioritize and deliver both?

DoorDash analysts often support real-time ops as well as strategic projects. Show how you assessed impact, negotiated timelines, or automated routine parts of the larger project to free bandwidth—demonstrating time-management savvy without dropping quality.

Tell us about a decision you influenced when the data was incomplete or noisy. What approach did you take to build confidence in your recommendation?

Highlight methods such as triangulating multiple proxies, running sensitivity analyses, or clearly communicating assumption ranges. Emphasize that you acknowledged uncertainty yet still provided direction—mirroring DoorDash’s need to move fast while managing risk.

DoorDash Business Intelligence Engineer

If you’re interviewing for or considering BI roles, check out our DoorDash Business Intelligence Engineer interview guide. The DoorDash business intelligence engineer role emphasizes ETL design, dashboarding, and working across data platforms.

How to Prepare for a Data Analyst Role at DoorDash

Success in the DoorDash interview isn’t just about knowing SQL—it’s about being able to reason through metrics, defend assumptions, and present insightfully to stakeholders. Here’s how to prepare like a top candidate.

Master SQL Fast

Focus on speed and accuracy. Practice 20 SQL problems per day with a strict 15–20 minute time limit per query. This will help you handle pressure during the DoorDash SQL interview questions section.

Rehearse Analytics Exercises

The DoorDash analytics exercise tests how clearly you can communicate insights. Practice summarizing metric trends (e.g. retention drop, order delays) with one-slide takeaways and recommendations.

Case Study Frameworks

Expect a DoorDash analytics case study focused on business levers like growth vs. retention or Dasher utilization. Learn to quickly frame hypotheses, select metrics, and weigh trade-offs.

Explain Trade-Offs

In every case, you’ll need to clarify assumptions. Whether it’s SQL joins or stakeholder decisions, interviewers want to hear how you think—so state your rationale clearly. Make sure to frame your thought process like a true DoorDash data analyst would.

Mock Interviews & Feedback

Leverage mock sessions via Interview Query or peers who’ve done DoorDash data analyst interviews. Repeating real question types with feedback will sharpen your timing and confidence.

Conclusion

Preparing for the DoorDash data analyst interview comes down to three pillars: SQL speed, business-metric storytelling, and clear stakeholder communication. Set yourself up for success by grabbing our free SQL cheat sheet, booking a mock interview, and testing your skills with our AI interviewer tool.

Want proof that it works? Read Dania Crivelli’s success story and follow the same learning path she did.