DoorDash Data Scientist Interview Guide: Process, Questions, Salary

Introduction

Preparing for a DoorDash data scientist interview in 2026 means getting comfortable solving problems at true marketplace scale. DoorDash now processes well over 2 billion orders annually, operating one of the largest three-sided marketplaces in the world across consumers, merchants, and Dashers. Small changes in pricing, delivery times, or incentives can ripple across the entire ecosystem, making data science central to nearly every product and operational decision.

Data scientists at DoorDash sit within the Analytics organization and partner closely with Product, Engineering, and Operations teams. The role goes far beyond reporting. You are expected to design experiments, build statistical or machine learning models, and translate noisy marketplace data into decisions that improve growth, efficiency, and customer experience. Whether you work on consumer growth, Dasher logistics, or international expansion, success depends on strong SQL fundamentals, experimentation judgment, and clear product reasoning.

In this guide, we break down the DoorDash data scientist interview process, including what each stage evaluates, how marketplace thinking shows up in interviews, and how to prepare for SQL, experimentation, and product case discussions.

DoorDash Data Scientist Interview Process

The DoorDash data scientist interview process is designed to evaluate how well candidates reason through real marketplace problems rather than isolated technical exercises. Across three to six weeks and four to six rounds, interviewers assess SQL fluency, experimentation design, statistical reasoning, and the ability to connect analysis to decisions that balance consumers, merchants, and Dashers.

While exact structure varies slightly by team and seniority, the core stages and expectations are consistent across analytics roles.

| Interview stage | What to expect |
|---|---|
| Recruiter screen | Background, motivation, and role alignment |
| Technical screen | SQL assessment and product case discussion |
| Virtual onsite loop | Business, experimentation, statistics, and ML interviews |
| Hiring manager round | Final evaluation of judgment, impact, and team fit |

Recruiter Screen (30–45 minutes)

The process begins with a recruiter screen conducted over phone or video. This conversation focuses on your background, interest in DoorDash, and alignment with the data scientist role. Recruiters also discuss compensation expectations, location preferences, and the structure of the remaining interview stages.

You should expect questions about why DoorDash’s marketplace interests you and how your past work has driven measurable business or product impact.

Tip: Be ready to clearly articulate how your analysis influenced decisions, not just what metrics you tracked.

Technical Screen (60 minutes)

The technical screen is often a high-stakes gatekeeper round and is typically split into two equal halves: a SQL assessment and a product-focused case discussion.

| Component | Focus | What interviewers look for |
|---|---|---|
| SQL assessment (30 min) | Analytics queries and logic | Clean structure, correct assumptions, edge-case handling |
| Product case study (30 min) | Marketplace reasoning | Metric framing, structured diagnosis, trade-offs |

SQL assessment:

Candidates usually solve three to four analytics-style SQL problems in a shared editor. Questions emphasize joins, CTEs, window functions, and time-based analysis rather than obscure syntax. Many candidates prepare using Interview Query’s SQL interview questions and hands-on practice from the challenges section.

Product case study:

This portion evaluates product intuition and structured thinking. You may be given open-ended prompts, such as diagnosing rising delivery times or declining order completion. Interviewers care about how you define success metrics, guardrails, and hypotheses before diving into analysis. Practicing decision-first thinking with product analytics interview questions helps mirror what DoorDash expects.

Tip: Start by clarifying which side of the marketplace you’re optimizing and what trade-offs you’re willing to accept.

Virtual Onsite Loop (4–6 interviews)

The final stage is a multi-hour virtual onsite made up of 45–60 minute interviews with different stakeholders. These interviews test depth, consistency, and how you reason under ambiguity.

Virtual Onsite Interview Types

| Interview type | What it evaluates |
|---|---|
| Business partner / behavioral | Ownership, collaboration, values alignment |
| Experimentation & statistics | A/B testing, power, significance, marketplace effects |
| Technical case studies | SQL logic, metrics, and analytical judgment |
| Machine learning (mid–senior) | Forecasting, ETA modeling, recommendations |
| Hiring manager | End-to-end thinking and role fit |

Experimentation and statistics rounds focus heavily on real-world constraints, such as network effects, interference between users, and uneven regional rollout. Candidates often prepare using Interview Query’s data science interview questions and the data science learning path to sharpen applied reasoning.

Behavioral interviews emphasize DoorDash’s core values, including customer obsession, bias for action, and ownership. Answers are typically expected in STAR format, with clear emphasis on decision impact.

Tip: Across all onsite rounds, interviewers reward candidates who explain why a metric or approach matters, not just how to compute it.

Hiring Manager Interview (45–60 minutes)

The hiring manager round is the final step of the DoorDash data scientist interview process and serves as a consistency and judgment check across everything you’ve shown so far. This interview focuses less on raw execution and more on how you think end to end about marketplace problems, trade-offs, and impact.

Hiring managers typically ask you to walk through one or two past projects in depth, probing how you defined the problem, selected metrics, handled ambiguity, and influenced cross-functional decisions. You may also be asked to reason through a high-level marketplace scenario, such as balancing delivery speed with Dasher earnings or evaluating trade-offs between consumer fees and order volume.

Interviewers pay close attention to whether your thinking aligns with DoorDash’s three-sided marketplace dynamics and whether you can clearly articulate why a particular metric, experiment, or modeling choice mattered. Candidates often prepare by practicing structured walkthroughs using Interview Query’s data science interview questions and refining delivery through mock interviews.

Tip: Lead with the decision you were trying to influence, then explain how your analysis supported that decision. Hiring managers care more about judgment and ownership than technical detail at this stage.

DoorDash Data Scientist Interview Questions

The DoorDash data scientist interview includes a mix of SQL, analytics, experimentation, product reasoning, and applied modeling. These questions are designed to evaluate how well you reason about a three-sided marketplace, handle noisy operational data, and turn analysis into decisions that balance consumers, merchants, and Dashers. Interviewers care deeply about how you structure problems, define metrics, and explain trade-offs under real-world constraints.

Read more: Top Data Science Interview Questions

SQL and Analytics Interview Questions

DoorDash relies heavily on SQL-driven analysis to monitor marketplace health, diagnose operational issues, and evaluate product changes. SQL questions typically involve large event tables, time-based aggregation, segmentation, and window functions. Interviewers look for clean query logic, correct assumptions, and strong intuition around data quality issues.

How would you calculate weekly consumer retention for DoorDash users by cohort?

DoorDash asks this to assess whether you can measure product stickiness in a subscription and marketplace context. A strong answer explains defining cohorts by first order date, tracking repeat orders by week, and calculating retention as the percentage of users returning in subsequent weeks. You should mention handling delayed orders, cancellations, and cohort size normalization.

Tip: Explain how retention trends would inform growth or pricing decisions, not just how to compute the metric.
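The sketch below shows the cohort logic. It runs against an in-memory SQLite table so that it is self-contained. Several details are assumptions made for illustration:

- the `orders` schema (`user_id`, `order_date`, `status`);
- Monday-start calendar weeks;
- counting only completed orders toward retention.

A production warehouse would use its own date functions in place of SQLite's `date` and `julianday`.

```python
import sqlite3

# Hypothetical schema: orders(user_id, order_date 'YYYY-MM-DD', status).
RETENTION_SQL = """
WITH completed AS (
    -- Keep completed orders only and snap each date to its Monday week start.
    SELECT user_id,
           date(order_date, 'weekday 0', '-6 days') AS order_week
    FROM orders
    WHERE status = 'completed'
),
cohorts AS (
    -- A user's cohort is the week of their first completed order.
    SELECT user_id, MIN(order_week) AS cohort_week
    FROM completed
    GROUP BY user_id
),
activity AS (
    -- One row per user per active week, tagged with weeks since cohort start.
    SELECT DISTINCT c.user_id,
           h.cohort_week,
           CAST((julianday(c.order_week) - julianday(h.cohort_week)) / 7 AS INTEGER) AS week_number
    FROM completed c
    JOIN cohorts h ON h.user_id = c.user_id
),
cohort_size AS (
    SELECT cohort_week, COUNT(*) AS n_users
    FROM cohorts
    GROUP BY cohort_week
)
SELECT a.cohort_week,
       a.week_number,
       ROUND(100.0 * COUNT(DISTINCT a.user_id) / s.n_users, 1) AS retention_pct
FROM activity a
JOIN cohort_size s ON s.cohort_week = a.cohort_week
GROUP BY a.cohort_week, a.week_number, s.n_users
ORDER BY a.cohort_week, a.week_number
"""


def weekly_retention(orders):
    """Return (cohort_week, week_number, retention_pct) rows for the given orders."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE orders (user_id TEXT, order_date TEXT, status TEXT)")
    con.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
    rows = con.execute(RETENTION_SQL).fetchall()
    con.close()
    return rows


if __name__ == "__main__":
    sample = [
        ("u1", "2026-01-05", "completed"),
        ("u1", "2026-01-13", "completed"),
        ("u2", "2026-01-07", "completed"),
        ("u2", "2026-01-14", "canceled"),  # canceled orders do not count toward retention
        ("u3", "2026-01-14", "completed"),
    ]
    for row in weekly_retention(sample):
        print(row)
```

On the sample data, the query prints three rows:

- `('2026-01-05', 0, 100.0)`
- `('2026-01-05', 1, 50.0)`
- `('2026-01-12', 0, 100.0)`

Dividing by the cohort size is what makes a small cohort comparable with a large one.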

How would you identify inactive users using SQL?

This question evaluates your ability to translate behavioral signals into actionable segments. You should explain defining inactivity thresholds based on order frequency, recency, and historical behavior, then using SQL to flag users with no activity within a defined window.

Tip: Call out how different inactivity definitions might be used for reactivation versus churn modeling.
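A minimal recency-based version is sketched below. The `orders(user_id, order_date)` schema and the threshold values are hypothetical. The threshold is a parameter so that one query can serve different definitions of inactivity.

```python
import sqlite3

# Hypothetical schema: orders(user_id, order_date 'YYYY-MM-DD').
INACTIVE_SQL = """
SELECT user_id,
       MAX(order_date) AS last_order_date,
       CAST(julianday(:as_of) - julianday(MAX(order_date)) AS INTEGER) AS days_inactive
FROM orders
GROUP BY user_id
-- Flag users whose most recent order is older than the chosen threshold.
HAVING julianday(:as_of) - julianday(MAX(order_date)) > :threshold_days
ORDER BY days_inactive DESC, user_id
"""


def inactive_users(orders, as_of, threshold_days):
    """Return (user_id, last_order_date, days_inactive) for users past the threshold."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE orders (user_id TEXT, order_date TEXT)")
    con.executemany("INSERT INTO orders VALUES (?, ?)", orders)
    params = {"as_of": as_of, "threshold_days": threshold_days}
    rows = con.execute(INACTIVE_SQL, params).fetchall()
    con.close()
    return rows
```

For example, a short window such as 28 days might feed a reactivation campaign, while a longer one such as 90 days might define a churn label. Both numbers are illustrative. Users with no orders at all never appear here; they would need a join against a users table.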

How would you detect duplicate events in a delivery event log?

This tests your ability to handle instrumentation issues common in real-time logistics systems. You should explain using `ROW_NUMBER()` or `COUNT(*) OVER` to flag duplicate records and defining rules for keeping the correct event.

Tip: Explain how duplicates could bias metrics like delivery time or acceptance rate before proposing a fix.
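The sketch below applies both window functions. `ROW_NUMBER()` picks one surviving copy of each event, and `COUNT(*) OVER` reports how many copies existed. Two rules are hypothetical:

- rows sharing `delivery_id`, `event_type`, and `event_ts` count as duplicates;
- the earliest-ingested copy is the one kept.

Window functions require SQLite 3.25 or newer.

```python
import sqlite3

# Hypothetical schema: delivery_events(event_id, delivery_id, event_type, event_ts, ingested_at).
# Assumption: same delivery_id + event_type + event_ts means duplicate; keep earliest ingestion.
DEDUP_SQL = """
WITH ranked AS (
    SELECT event_id, delivery_id, event_type, event_ts,
           ROW_NUMBER() OVER (
               PARTITION BY delivery_id, event_type, event_ts
               ORDER BY ingested_at, event_id
           ) AS rn,
           COUNT(*) OVER (PARTITION BY delivery_id, event_type, event_ts) AS copies
    FROM delivery_events
)
SELECT event_id, delivery_id, event_type, event_ts, copies
FROM ranked
WHERE rn = 1  -- keep one row per logical event
ORDER BY delivery_id, event_ts
"""


def dedupe_events(events):
    """Return one row per logical event, with the number of copies seen."""
    con = sqlite3.connect(":memory:")
    con.execute(
        "CREATE TABLE delivery_events "
        "(event_id INTEGER, delivery_id TEXT, event_type TEXT, event_ts TEXT, ingested_at TEXT)"
    )
    con.executemany("INSERT INTO delivery_events VALUES (?, ?, ?, ?, ?)", events)
    rows = con.execute(DEDUP_SQL).fetchall()
    con.close()
    return rows
```

Filtering on `copies > 1` shows how widespread duplication is, and which event types it affects, before you decide whether to fix the issue upstream or in the query.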

How would you calculate average order value by merchant over a rolling 30-day window?

This question assesses comfort with window functions and time-based analysis. You should describe partitioning by merchant, ordering by date, and computing rolling averages over a 30-day window frame.

Tip: Call out how rolling metrics help smooth volatility for smaller, low-volume merchants.
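The sketch below is a portable stand-in for a 30-day window frame. It uses a date-range self-join, which returns the same per-merchant-day values as a `RANGE` frame over the order date and runs on any SQLite version.

The `orders` schema is hypothetical. The window is defined as the as-of date plus the 29 days before it. The extra `orders_in_window` column makes low-volume merchants visible.

```python
import sqlite3

# Hypothetical schema: orders(order_id, merchant_id, order_date 'YYYY-MM-DD', order_value).
ROLLING_AOV_SQL = """
WITH merchant_days AS (
    -- One row per merchant per day that had at least one order.
    SELECT DISTINCT merchant_id, order_date AS as_of_date
    FROM orders
)
SELECT d.merchant_id,
       d.as_of_date,
       COUNT(*) AS orders_in_window,
       AVG(o.order_value) AS rolling_30d_aov
FROM merchant_days d
-- Match each merchant-day to that merchant's orders from the 29 prior days through the day itself.
JOIN orders o
  ON o.merchant_id = d.merchant_id
 AND o.order_date BETWEEN date(d.as_of_date, '-29 days') AND d.as_of_date
GROUP BY d.merchant_id, d.as_of_date
ORDER BY d.merchant_id, d.as_of_date
"""


def rolling_aov(orders):
    """Return (merchant_id, as_of_date, orders_in_window, rolling_30d_aov) rows."""
    con = sqlite3.connect(":memory:")
    con.execute(
        "CREATE TABLE orders (order_id INTEGER, merchant_id INTEGER, order_date TEXT, order_value REAL)"
    )
    con.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", orders)
    rows = con.execute(ROLLING_AOV_SQL).fetchall()
    con.close()
    return rows
```

ISO-formatted date strings compare correctly as text, which is why `BETWEEN` works here without converting to timestamps.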

Write a query to calculate and rank total delivery distance by Dasher.

DoorDash uses questions like this to test aggregation logic and ranking with real operational data. A strong answer joins delivery and Dasher tables, sums distance per Dasher, and applies a window function to rank results.

Tip: Mention filtering out canceled or incomplete deliveries to ensure metric integrity.
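The sketch below shows one way to write this query. The `dashers` and `deliveries` schemas and the `'delivered'` status value are hypothetical. The aggregation happens in a CTE, and `RANK()` is applied afterwards; window functions require SQLite 3.25 or newer.

```python
import sqlite3

# Hypothetical schemas: dashers(dasher_id, name) and
# deliveries(delivery_id, dasher_id, distance_miles, status).
DISTANCE_RANK_SQL = """
WITH totals AS (
    SELECT dv.dasher_id,
           d.name,
           SUM(dv.distance_miles) AS total_distance
    FROM deliveries dv
    JOIN dashers d ON d.dasher_id = dv.dasher_id
    WHERE dv.status = 'delivered'  -- drop canceled or incomplete deliveries
    GROUP BY dv.dasher_id, d.name
)
SELECT dasher_id,
       name,
       total_distance,
       RANK() OVER (ORDER BY total_distance DESC) AS distance_rank
FROM totals
ORDER BY distance_rank, dasher_id
"""


def rank_dashers(dashers, deliveries):
    """Return (dasher_id, name, total_distance, distance_rank) rows."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE dashers (dasher_id INTEGER, name TEXT)")
    con.execute(
        "CREATE TABLE deliveries "
        "(delivery_id INTEGER, dasher_id INTEGER, distance_miles REAL, status TEXT)"
    )
    con.executemany("INSERT INTO dashers VALUES (?, ?)", dashers)
    con.executemany("INSERT INTO deliveries VALUES (?, ?, ?, ?)", deliveries)
    rows = con.execute(DISTANCE_RANK_SQL).fetchall()
    con.close()
    return rows
```

The three ranking functions differ only in how they handle ties:

- `RANK()` gives tied Dashers the same rank and skips the next one (1, 1, 3).
- `DENSE_RANK()` would return 1, 1, 2.
- `ROW_NUMBER()` would break the tie arbitrarily.

Stating which behavior you want is a quick way to show care with edge cases.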

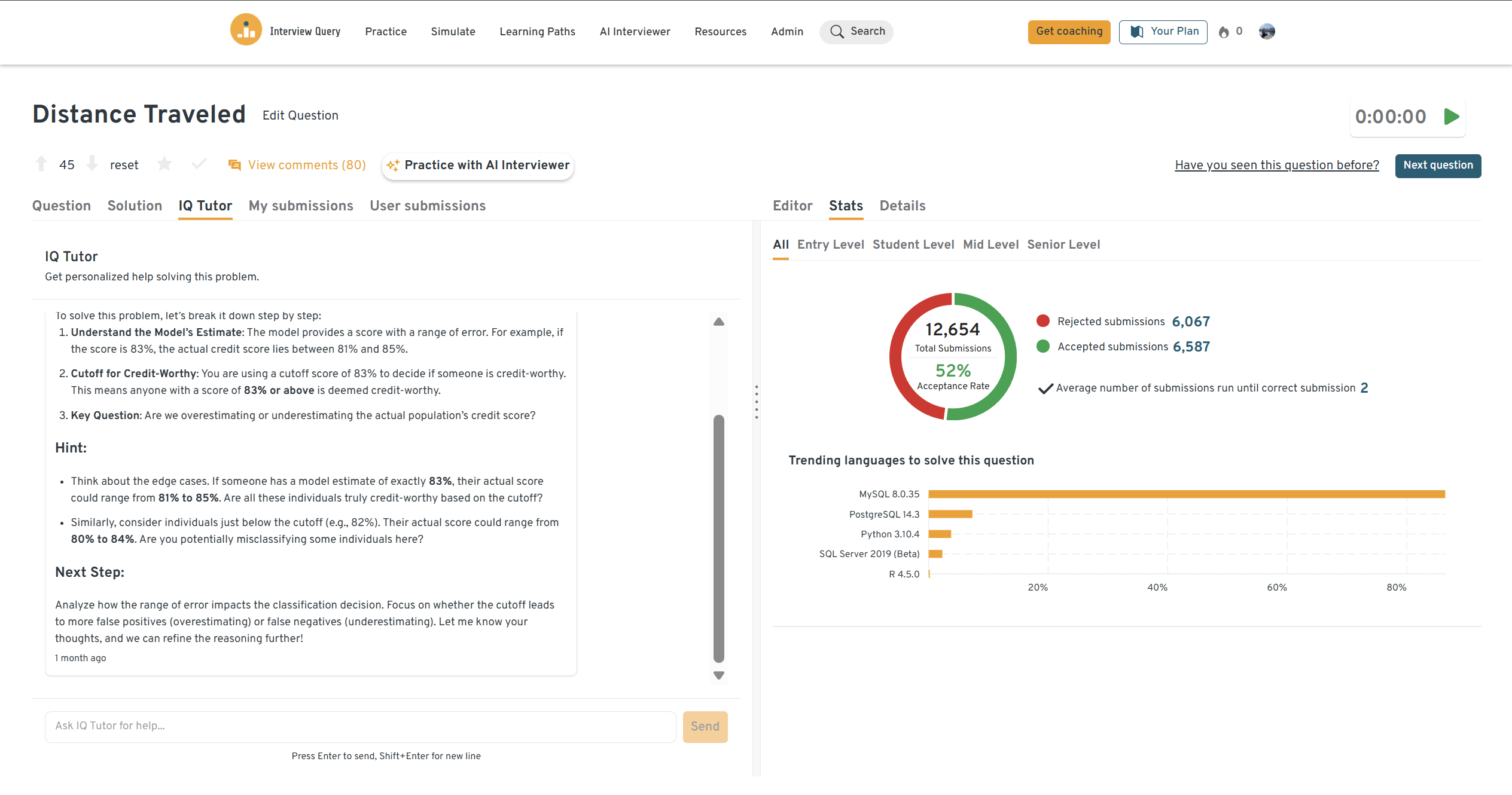

Try this question yourself on the Interview Query dashboard. You can run SQL queries, review real solutions, and see how your results compare with other candidates using AI-driven feedback.

Practice similar SQL questions using the Interview Query challenges to build fluency under time pressure.

Experimentation and A/B Testing Interview Questions

Experimentation questions at DoorDash focus on whether you can design tests that hold up in a live marketplace with interference, spillovers, and uneven supply-demand dynamics. Interviewers care more about judgment and safety than textbook statistical purity.

How would you design an experiment to reduce average delivery time?

DoorDash asks this to test whether you can isolate causal impact in a logistics-heavy system. A strong answer discusses randomization level (city, zone, or user), primary metrics like delivery ETA, and guardrails such as cancellation rate, Dasher earnings, and customer satisfaction.

Tip: Explicitly discuss spillover effects and how you would mitigate them.
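One common way to limit spillover is to randomize whole zones over time windows instead of individual users. This is sometimes called a switchback design. Every order in a given zone and window then shares one arm, so treated and control orders in that zone are not competing for the same local Dasher pool at the same moment.

The sketch below is a minimal illustration under hypothetical choices: SHA-256 hashing, the salt string, 2-hour windows, and a 50/50 split. It is not DoorDash's actual assignment service.

```python
import hashlib
from datetime import datetime


def assign_variant(zone_id: str, ts: datetime, window_hours: int = 2,
                   salt: str = "delivery-time-exp-v1") -> str:
    """Deterministically assign an order to an arm by (zone, time window).

    All orders in the same zone and window get the same arm. Spillover
    between neighboring zones is reduced but not eliminated.
    """
    # Truncate the timestamp to the start of its window (window_hours should divide 24).
    window_start = ts.replace(hour=ts.hour - ts.hour % window_hours,
                              minute=0, second=0, microsecond=0)
    key = f"{salt}|{zone_id}|{window_start.isoformat()}"
    # Hash to a stable bucket in [0, 100); changing the salt reshuffles buckets per experiment.
    bucket = int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16) % 100
    return "treatment" if bucket < 50 else "control"
```

Because assignment is a pure function of zone and window, any service can recompute it without a lookup table. The unit of analysis then becomes the zone-window rather than the individual order, which affects how variance and power should be computed.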

How would you determine the winning variant in an A/B test when data is limited?

This tests whether you can adapt experimentation methods under constraints. You should explain alternatives such as non-parametric tests, bootstrapping, or Bayesian approaches, and emphasize effect sizes over p-values.

Tip: Explain how you would communicate uncertainty to stakeholders.
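One of the options mentioned above, the percentile bootstrap, can be sketched with the standard library. The procedure:

- resample each arm with replacement;
- recompute the difference in means for each resample;
- report the middle 95% of those differences as an interval around the effect size.

The seed, resample count, and sample values are illustrative assumptions.

```python
import random
from statistics import mean


def bootstrap_diff_ci(control, treatment, n_boot=5000, alpha=0.05, seed=42):
    """Return (observed_diff, ci_low, ci_high) for mean(treatment) - mean(control)."""
    rng = random.Random(seed)
    observed = mean(treatment) - mean(control)
    diffs = []
    for _ in range(n_boot):
        # Resample each arm independently, keeping the original sample sizes.
        c = rng.choices(control, k=len(control))
        t = rng.choices(treatment, k=len(treatment))
        diffs.append(mean(t) - mean(c))
    diffs.sort()
    lo = diffs[int(n_boot * alpha / 2)]
    hi = diffs[int(n_boot * (1 - alpha / 2)) - 1]
    return observed, lo, hi


control = [10, 12, 11, 13, 12, 11, 10, 12]
treatment = [15, 16, 14, 17, 15, 16, 15, 14]
print(bootstrap_diff_ci(control, treatment))
```

Reporting the interval alongside the point estimate gives stakeholders a plain-language answer: how large the effect plausibly is, and whether zero is a realistic value. That tends to land better than a bare p-value.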

How would you calculate statistical power for an experiment?

DoorDash uses this to assess your understanding of experiment sizing and feasibility. You should explain baseline rates, minimum detectable effect, variance, and sample size trade-offs.

Tip: Tie power calculations back to business timelines and rollout decisions.
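For a conversion-style metric, the standard normal-approximation formula for two proportions gives the users needed per arm:

n = (z₁₋α/₂ + z₁₋β)² × [p₁(1 − p₁) + p₂(1 − p₂)] / (p₂ − p₁)²

The sketch below implements that formula. It adds a hypothetical helper that converts the sample size into run time, given daily eligible traffic.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(p_baseline, mde_abs, alpha=0.05, power=0.80):
    """Users per arm to detect an absolute lift of mde_abs on a proportion."""
    p_treat = p_baseline + mde_abs
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p_baseline * (1 - p_baseline) + p_treat * (1 - p_treat)
    return ceil((z_alpha + z_beta) ** 2 * variance / mde_abs ** 2)


def days_to_run(n_per_arm, daily_eligible_users, arms=2):
    """Hypothetical helper: days needed if traffic splits evenly across arms."""
    return ceil(arms * n_per_arm / daily_eligible_users)
```

With a 10% baseline, a one-point absolute lift, α = 0.05, and 80% power, `sample_size_per_arm(0.10, 0.01)` comes to roughly 14,750 users per arm. At a hypothetical 5,000 eligible users per day, `days_to_run` gives 6 days, which is the kind of number that anchors a rollout timeline.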

An experiment improves consumer conversion but hurts Dasher acceptance. What do you do?

This evaluates marketplace thinking. A strong answer explains segmenting results, evaluating long-term impact, and possibly recommending a partial rollout or follow-up experiment.

Tip: Emphasize balancing all sides of the marketplace rather than optimizing a single metric.

Product and Marketplace Case Interview Questions

These case-style questions test how you reason about DoorDash’s marketplace as a system. Interviewers evaluate how you structure ambiguous problems, prioritize signals, and connect analysis to decisions.

Delivery times increased last month. How would you investigate?

You should decompose delivery time into supply, demand, batching, and routing efficiency, then examine each component by region and time of day.

Tip: State the order in which you would investigate signals and why.
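A useful first cut separates two possible causes of a rise in average delivery time:

- orders shifted toward slower regions or hours (mix);
- deliveries slowed down within each segment (rate).

The sketch below uses a standard mix-versus-rate decomposition. It is offered as one possible approach, not as DoorDash's method. Writing w for a segment's share of orders and t for its average time, the change in the overall average splits exactly into Σ(w₁ − w₀)·t₀ + Σw₁·(t₁ − t₀). Segment names and numbers are hypothetical.

```python
def decompose_change(before, after):
    """Split the change in average delivery time into mix and rate effects.

    before/after map segment -> (order_count, avg_minutes). Both periods are
    assumed to contain the same segments (e.g. region and time-of-day buckets).
    """
    n0 = sum(count for count, _ in before.values())
    n1 = sum(count for count, _ in after.values())
    mix = rate = 0.0
    for seg, (c0, t0) in before.items():
        c1, t1 = after[seg]
        w0, w1 = c0 / n0, c1 / n1
        mix += (w1 - w0) * t0   # shift in order share, valued at old times
        rate += w1 * (t1 - t0)  # change in time within the segment
    return mix, rate, mix + rate


before = {"peak": (600, 40.0), "off_peak": (400, 30.0)}
after = {"peak": (800, 40.0), "off_peak": (200, 30.0)}
print(decompose_change(before, after))
```

In this example, peak orders grew from 60% to 80% of volume while per-segment times stayed flat. The 2-minute increase is therefore almost entirely mix, which points toward demand patterns rather than routing or batching.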

What metrics would you track to understand marketplace demand?

This tests metric design for real-time systems. You should discuss order requests, active Dashers, and imbalance ratios (for example, order requests per active Dasher).

Tip: Mention how thresholds vary by city and time.
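The sketch below is a small imbalance check. It computes open order requests per active Dasher in each zone and compares the ratio against a limit that can vary by city and hour. The snapshot format, the limit values, and the zero-Dasher handling are hypothetical choices for illustration.

```python
def flag_undersupplied(snapshots, city_hour_limits, city_limits, default_limit=1.5):
    """Return (city, zone, hour, ratio) where requests per active Dasher exceed the limit.

    snapshots: iterable of (city, zone, hour, open_requests, active_dashers).
    Limits are looked up by (city, hour) first, then by city, then the default.
    """
    flagged = []
    for city, zone, hour, requests, dashers in snapshots:
        if dashers:
            ratio = requests / dashers
        else:
            # Open requests with no active Dashers count as maximally undersupplied.
            ratio = float("inf") if requests else 0.0
        limit = city_hour_limits.get((city, hour), city_limits.get(city, default_limit))
        if ratio > limit:
            flagged.append((city, zone, hour, ratio))
    return flagged
```

Keeping the limits in lookup tables rather than hard-coding one global value makes it easy to let a dense city tolerate a higher ratio at dinner peak than a smaller market does at midday.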

How would you prioritize markets for international expansion?

DoorDash asks this to evaluate strategic judgment. A strong answer compares demand density, unit economics, regulatory complexity, and operational readiness.

Tip: Clearly explain what you would deprioritize.

How would you estimate the lifetime value of a consumer after their first 30 days?

This evaluates your ability to blend modeling with business context. You should explain cohort analysis, retention modeling, and uncertainty handling.

Tip: Emphasize how LTV estimates guide acquisition spend and promotions.
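The sketch below turns a cohort retention curve into an LTV estimate. It multiplies the share of the cohort still ordering each month by a margin per active customer-month, then discounts each month back to today.

The inputs are hypothetical: the retention values, the monthly margin, and the 10% annual discount rate. In practice, the curve would come from cohort analysis and would need to be extrapolated beyond the observed months.

```python
def estimate_ltv(retention_curve, monthly_margin, annual_discount_rate=0.10):
    """Discounted expected margin for a cohort that survived its first 30 days.

    retention_curve[i] is the share of the cohort still ordering in month i + 1
    after day 30; monthly_margin is the contribution margin per active customer-month.
    """
    monthly_rate = (1 + annual_discount_rate) ** (1 / 12) - 1
    ltv = 0.0
    for month, retained in enumerate(retention_curve, start=1):
        ltv += retained * monthly_margin / (1 + monthly_rate) ** month
    return ltv


pessimistic = estimate_ltv([0.50, 0.40, 0.30], monthly_margin=10.0)
optimistic = estimate_ltv([0.60, 0.50, 0.45], monthly_margin=10.0)
print(round(pessimistic, 2), round(optimistic, 2))
```

Running the estimate on pessimistic and optimistic curves yields a range instead of a single point. A range is easier to defend when it is used to cap acquisition spend or promotion budgets.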

Behavioral Interview Questions

Behavioral interviews at DoorDash evaluate how you operate in ambiguous, cross-functional environments where data scientists are expected to influence decisions across Product, Engineering, and Operations. Interviewers look for ownership, sound judgment, and the ability to move marketplace decisions forward using data while balancing consumer, merchant, and Dasher outcomes.

Answers should follow the STAR method, with clear emphasis on decision impact rather than analysis volume.

Tell me about a time your analysis changed a product decision.

DoorDash asks this to assess whether you can translate insights into action in a fast-moving marketplace. Interviewers want to see that your work influenced a real decision rather than living in a dashboard.

Sample answer:

In my previous role, a team planned to roll out a batching feature to reduce delivery costs. I analyzed historical delivery data and found that while batching improved efficiency, it increased late deliveries in high-density zones during peak hours. I presented a segmented view by time of day and zone density and recommended limiting batching to off-peak windows. The team adopted the recommendation, which preserved on-time delivery rates while still reducing cost per order by 6 percent.

Tip: Quantify both the insight and the decision outcome. DoorDash values impact over analysis complexity.

Describe a time you disagreed with a stakeholder. How did you handle it?

This question evaluates collaboration and influence without authority. DoorDash expects data scientists to challenge assumptions respectfully and align teams around evidence.

Sample answer:

A product manager wanted to prioritize reducing delivery fees to drive order growth. My analysis showed that lower fees increased short-term demand but reduced Dasher supply in certain markets. I shared the data and reframed the discussion around long-term marketplace balance. We agreed to test a targeted subsidy instead of a global fee cut, which improved order volume without hurting Dasher availability.

Tip: Emphasize alignment on shared goals rather than “winning” the disagreement.

Tell me about a failed experiment or analysis. What did you learn?

DoorDash uses this to assess learning mindset and accountability. Interviewers want to see how you adapt after imperfect results.

Sample answer:

I once ran an experiment to increase consumer conversion that showed no significant lift. After reviewing logs, I discovered that traffic routing caused uneven exposure across variants. I documented the failure, worked with engineering to fix assignment logic, and redesigned the experiment. The follow-up test produced a 4 percent lift, and the learnings became part of our experiment checklist.

Tip: Focus on what changed in your process after the failure, not just the result.

How do you explain technical findings to non-technical partners?

This tests communication clarity. DoorDash data scientists frequently present to operators and business leaders who care about decisions, not methodology.

Sample answer:

When presenting to operations leaders, I start with the recommendation and the expected impact. In one case, instead of explaining a regression model, I showed how different staffing levels affected late deliveries using a simple scenario chart. This helped leadership quickly decide on a staffing adjustment that reduced late orders by 7 percent.

Tip: Lead with outcomes and trade-offs before explaining how the analysis was done.

Why do you want to work as a data scientist at DoorDash?

This question evaluates motivation and role fit. Interviewers want to hear why DoorDash’s marketplace problems genuinely interest you.

Sample answer:

I’m drawn to DoorDash because of the complexity of its three-sided marketplace. I enjoy working on problems where optimizing one metric can negatively affect another, and decisions require careful trade-offs. DoorDash’s focus on experimentation, logistics optimization, and marketplace balance aligns closely with the type of data science work I want to do at scale.

Tip: Tie your answer to DoorDash-specific problems, not generic data science interests.

In this statistics-focused deep dive, Jay, the founder of Interview Query, breaks down the recurring patterns behind statistics questions asked at top companies like Google, Netflix, and Wall Street firms, and shows how to approach each with confidence. The breakdown is especially useful for DoorDash data scientist candidates because it sharpens intuition around hypothesis testing, variance, and experiment interpretation. All three are critical when evaluating marketplace metrics, pricing tests, and incentive experiments at DoorDash.

Role Overview: DoorDash Data Scientist

In 2026, data scientists at DoorDash primarily operate within the Analytics organization, partnering closely with Product, Engineering, and Operations teams to improve growth, efficiency, and marketplace balance. The role is highly embedded in decision-making and extends well beyond reporting or dashboard maintenance.

Data scientists work across DoorDash’s three-sided marketplace, supporting initiatives that affect consumers, merchants, and Dashers simultaneously. Depending on team and seniority, responsibilities often include:

- Driving strategic decisions by analyzing large-scale marketplace data and surfacing actionable insights for leaders

- Designing and evaluating experiments to validate product features, pricing changes, and operational improvements

- Building statistical or machine learning models for funnel optimization, segmentation, forecasting, or marketplace balancing

- Creating and maintaining analytics infrastructure, including SQL-based pipelines and executive-facing dashboards

- Partnering cross-functionally to translate complex findings into clear recommendations

Specialized analytics teams at DoorDash focus on domains such as consumer growth, Dasher logistics, merchant performance, people analytics, and international expansion. While tooling varies by team, most data scientists work extensively with SQL and Python or R, along with modern data warehouses and visualization platforms.

Senior and lead-level data scientists are expected to operate with significant autonomy. In addition to owning high-impact analyses, they mentor junior team members, shape experimentation standards, and influence analytical strategy across multiple initiatives.

Culturally, DoorDash values customer obsession, ownership, and bias for action. Data scientists are expected to move quickly, ask the right questions, and use data to guide decisions even when information is incomplete or signals conflict. Those who thrive in the role enjoy ambiguity, think in systems, and take responsibility for outcomes rather than just analysis.

How to Prepare for the DoorDash Data Scientist Interview

Preparing for the DoorDash data scientist interview requires more than reviewing SQL syntax or memorizing statistical formulas. DoorDash evaluates whether you can reason through marketplace trade-offs, design credible experiments, and communicate insights that drive action across Product, Engineering, and Operations.

Your preparation should mirror how data scientists actually work inside DoorDash.

Practice SQL in marketplace contexts

SQL is a core evaluation area, especially during the technical screen. Focus on writing clean, readable queries that handle time-based aggregation, segmentation, and window functions. Practice problems involving rolling metrics, cohort analysis, and ranking rather than isolated joins.

Working through Interview Query’s SQL interview questions and timed practice in the challenges section helps build fluency under interview conditions.

Tip: When explaining queries, walk interviewers through your assumptions before diving into syntax.

Strengthen experimentation and statistical judgment

DoorDash places heavy emphasis on experimentation because nearly every product or operational change is validated through A/B tests. You should be comfortable designing experiments with real-world constraints such as spillovers, network effects, and uneven regional exposure.

Use Interview Query’s data science interview questions and the data science learning path to practice power calculations, metric selection, and interpreting results beyond p-values.

Tip: Always connect experiment results to a launch, rollback, or follow-up decision.

Develop strong marketplace intuition

Many interview questions test whether you understand how changes affect all three sides of DoorDash’s marketplace. A decision that improves consumer conversion may hurt Dasher supply or merchant margins.

Practice structuring answers that explicitly consider consumer, merchant, and Dasher impact. Product-style prompts from Interview Query’s product analyst interview questions help reinforce this systems-level thinking.

Tip: Call out trade-offs early. Interviewers reward candidates who acknowledge second-order effects.

Prepare decision-driven behavioral stories

Behavioral interviews are not about listing responsibilities. DoorDash looks for ownership, bias for action, and judgment under ambiguity. Prepare stories where your analysis directly influenced a product or operational decision.

Refining delivery through mock interviews or practicing aloud with the AI interview tool helps ensure clarity and confidence.

Tip: Lead with the decision and outcome, then explain the analysis that supported it.

Average DoorDash Data Scientist Salary

According to Levels.fyi, DoorDash data scientist compensation in the United States spans a wide range depending on level, scope, and ownership. Monthly total compensation ranges from ~$19,000 at E3 to ~$42,000 at E6, reflecting DoorDash’s strong emphasis on equity at senior levels and its marketplace-critical analytics roles.

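The table below annualizes the reported monthly figures from Levels.fyi for easier comparison. Because the underlying figures are approximate, base, stock, and bonus do not always sum exactly to the total.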

| Level | Title | Total (Year) | Base (Year) | Stock (Year) | Bonus (Year) |
|---|---|---|---|---|---|
| E3 | DS0 | $228,000 | $168,000 | $54,000 | $2,500 |
| E4 | DS1 | $252,000 | $180,000 | $68,400 | $0 |
| E5 | DS2 | $276,000 | $192,000 | $84,000 | $0 |
| E6 | DS3 | $504,000 | $264,000 | $240,000 | $2,000 |

DoorDash compensation becomes increasingly equity-heavy at senior levels. E3–E5 roles skew toward base salary with meaningful stock grants. E6 packages show a sharp increase in equity, with annual stock approaching base pay. This structure aligns incentives with long-term marketplace health and company performance.


DoorDash also uses a front-loaded equity vesting schedule (commonly 40% / 30% / 20% / 10% over four years), which is why Levels.fyi reports stock on a monthly average basis rather than year by year.
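Because a 40% / 30% / 20% / 10% schedule front-loads vesting, first-year equity sits well above the averaged figure in the table. The sketch below rests on two assumptions:

- the table's annual stock value is the four-year average of a single grant, so the grant is four times that value;
- vesting follows the 40/30/20/10 split exactly.

Refresh grants and cliffs are ignored.

```python
def vesting_by_year(total_grant, schedule_pct=(40, 30, 20, 10)):
    """Split a grant into yearly vesting amounts using integer percentages."""
    if sum(schedule_pct) != 100:
        raise ValueError("schedule percentages must sum to 100")
    return [total_grant * pct / 100 for pct in schedule_pct]


# Assumption: E6 stock of $240,000/year is the average of a four-year grant.
print(vesting_by_year(4 * 240_000))
```

Under these assumptions, the call returns `[384000.0, 288000.0, 192000.0, 96000.0]`. An E6 offer would therefore vest about $384,000 of stock in year one versus $96,000 in year four. That gap is worth modeling before comparing offers.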

What this means for candidates

- Early-career candidates (E3–E4) should expect strong cash compensation with moderate equity upside.

- Mid-level data scientists (E5) see equity become a more material component of total pay.

- Senior data scientists (E6) are compensated as business owners, with large equity exposure tied to long-term outcomes.

For candidates preparing for leveling discussions, practicing role-aligned questions from Interview Query’s data science interview questions and validating expectations through mock interviews can help anchor compensation conversations to demonstrated scope and impact.

FAQs

How hard is the DoorDash data scientist interview?

The DoorDash data scientist interview is considered challenging but fair. It emphasizes practical problem-solving, SQL fluency, experimentation judgment, and marketplace intuition rather than abstract theory. Candidates who struggle typically under-prepare for product-style cases or fail to reason across DoorDash’s three-sided marketplace.

What SQL topics should I prioritize for DoorDash?

You should be very comfortable with joins, CTEs, window functions, cohort analysis, and time-based aggregation. Many questions resemble real analytics work, such as diagnosing delivery delays or measuring retention. Practicing Interview Query’s SQL interview questions is one of the most effective ways to prepare.

Does DoorDash ask machine learning questions?

Yes, but mostly for mid-to-senior roles. Machine learning questions tend to be applied rather than theoretical, focusing on forecasting demand, ETA prediction, segmentation, or recommendation systems. Entry-level roles lean more heavily toward analytics and experimentation.

How important is experimentation knowledge?

Very important. DoorDash runs extensive A/B testing across product and operations. You are expected to design experiments, choose the right success and guardrail metrics, and interpret results under real-world constraints like spillovers and network effects.

What does DoorDash look for in behavioral interviews?

DoorDash evaluates ownership, bias for action, and decision-making under ambiguity. Strong candidates show how their analysis influenced real decisions, not just how they produced insights. Practicing structured responses with Interview Query’s behavioral interview questions helps refine delivery.

Start Your DoorDash Data Scientist Interview Prep Today

The DoorDash data scientist interview rewards candidates who can turn messy marketplace data into clear decisions. Beyond SQL and statistics, interviewers look for structured thinking, experimentation judgment, and the ability to reason across consumers, merchants, and Dashers.

To prepare effectively, practice real DoorDash-style problems using Interview Query’s data science interview questions, follow a structured plan through the data science learning paths, and refine your communication in realistic settings with mock interviews. Focused preparation makes the difference between “good analysis” and clear interview wins.