DoorDash Machine Learning Engineer Interview Guide: Process, Questions, Salary

Introduction

The DoorDash machine learning engineer role sits at the core of one of the largest real-time marketplaces in the world. DoorDash operates a three-sided ecosystem of consumers, merchants, and dashers, and its ML systems make billions of predictions every day across logistics, personalization, pricing, fraud detection, and supply–demand balancing.

As of 2026, DoorDash reports that machine learning models directly influence ETA accuracy, delivery batching, ranking and recommendations, fraud prevention, and incentive optimization across millions of daily orders. At the senior level, machine learning engineers are expected to own models end to end, from feature engineering and training to deployment, monitoring, and iteration in production. This makes the DoorDash machine learning engineer interview especially rigorous, with a strong emphasis on coding fundamentals, ML system design, and applied judgment under real marketplace constraints.

DoorDash Machine Learning Engineer Interview Process

The DoorDash machine learning engineer interview is designed to assess whether candidates can operate machine learning systems in a high-stakes, real-time marketplace. For senior and staff roles, the process places equal weight on coding fundamentals, ML system design, and applied judgment, especially in logistics, fraud detection, and personalization.

The full loop typically takes 3 to 6 weeks, depending on scheduling and seniority, and consists of multiple stages that progressively increase in technical depth and ownership expectations.

Resume Review and Recruiter Screen

The process begins with a resume review and an initial recruiter phone screen. This stage focuses on understanding your background in applied machine learning or ML infrastructure, the scale of systems you have worked on, and how your experience maps to DoorDash’s marketplace problems.

Recruiters are particularly attentive to whether you have owned models or ML systems end to end, including deployment and monitoring, rather than working only on offline experimentation. For senior candidates, this conversation often influences whether the loop emphasizes applied ML, ML infrastructure, or a hybrid role.

| Aspect | Details |
|---|---|
| Duration | ~30 minutes |
| Focus | Background, motivation, seniority alignment |
| Signals evaluated | Ownership, communication clarity, domain relevance |

Tip: Be explicit about production impact. Saying you “trained a model” is weaker than explaining how it affected ETA accuracy, fraud rates, or marketplace balance.

Technical Screen

The technical screen is usually a 60-minute interview and serves as an early signal of coding strength and ML reasoning. Most candidates encounter a coding-focused session, though some teams substitute or supplement this with a deep dive into a prior machine learning project.

Coding questions are typically medium to hard difficulty and reflect real engineering constraints DoorDash cares about, such as graph traversal, scheduling, or stateful services. Even in ML-focused tracks, strong coding fundamentals are non-negotiable.

To prepare for this stage, many candidates practice algorithmic and applied problems directly in the Interview Query question library, which mirrors the style and difficulty DoorDash uses.

| Track | What to Expect | Common Tools |
|---|---|---|
| Coding | 1–2 LeetCode-style problems | HackerRank CodePair |
| ML project deep dive | Prior system walkthrough | Whiteboard or shared doc |
| Hybrid | Coding plus ML reasoning | Interviewer-led |

Tip: Talk through edge cases and time complexity. DoorDash interviewers evaluate how your solution scales under real traffic, not just whether it passes sample tests.

Virtual Onsite Loop

The virtual onsite is the most demanding stage and typically lasts 3 to 5 hours, consisting of multiple back-to-back interviews. This is where DoorDash evaluates whether you can design, ship, and operate ML systems that serve millions of users across a three-sided marketplace.

For senior machine learning engineers, the loop usually includes two coding rounds, one ML system design round, one ML concepts or domain round, and one behavioral interview focused on DoorDash’s ownership-driven culture.

| Interview | Primary Focus | Example Scenarios |
|---|---|---|
| Coding round 1 | Algorithmic clarity | Graph routing, batching |
| Coding round 2 | Software design | Stateful services |
| ML system design | End-to-end ML architecture | ETA prediction, fraud detection |
| ML concepts | Modeling judgment | Cold start, evaluation metrics |
| Behavioral | Culture and ownership | Bias for action, learning |

ML system design interviews are especially important at DoorDash. Candidates are expected to design systems that handle real-time inference, feedback loops, and trade-offs between accuracy, latency, and cost. The closest preparation for this round is to practice applied prompts from machine learning case studies and to reinforce fundamentals through the modeling and machine learning learning path.

Tip: Always anchor your design decisions to DoorDash’s three sides. Interviewers listen carefully for how models affect consumers, merchants, and dashers simultaneously.

Behavioral Interview

Behavioral interviews assess how you operate as an owner in ambiguous, high-impact environments. DoorDash places strong emphasis on values like Customer Obsession, Bias for Action, and Learning Mindset, especially for senior and staff roles.

Candidates are expected to share concrete examples involving production failures, difficult trade-offs, or cross-functional disagreement. Rehearsing these stories out loud using structured practice like mock interviews helps ensure clarity under pressure.

| Focus area | What interviewers look for |
|---|---|
| Ownership | End-to-end accountability |
| Judgment | Trade-offs under uncertainty |
| Collaboration | Working across functions |
| Learning | Growth after failure |

Tip: Stories involving rollbacks or mistakes are often stronger than “perfect” launches. DoorDash values engineers who learn quickly and protect users.

DoorDash Machine Learning Engineer Interview Questions

The DoorDash machine learning engineer interview is built around one core idea: can you ship ML that improves a three-sided marketplace in production. Questions are grounded in logistics, ranking, incentives, and safety systems where data is noisy, feedback loops are real, and decisions affect consumers, merchants, and dashers at the same time. Interviewers evaluate how you reason about trade-offs, how you design systems that stay reliable under load, and how you debug when production behavior diverges from offline metrics.

If you want one place to practice the same question styles across modeling, ML system design, and coding, the fastest workflow is drilling in the Interview Query question library and then pressure-testing your explanations with the AI interview tool. For structured study, the modeling and machine learning learning path is the most aligned prep for DoorDash-style ML reasoning.

ML Fundamentals and Modeling Questions

These questions test whether you can choose and evaluate models under real marketplace constraints like imbalance, shifting behavior, and asymmetric costs. DoorDash interviewers listen for how you connect modeling decisions to operational outcomes like on-time delivery rate, cancellation risk, fraud losses, or dasher efficiency.

-

DoorDash sees imbalanced outcomes in fraud detection, cancellation prediction, and rare safety events, so this question tests whether you know how to model “needle in a haystack” problems responsibly. Strong answers start by clarifying what the positive class represents and what mistakes cost the business most. Interviewers expect you to compare resampling, class weighting, threshold tuning, and calibration, then explain why your choice fits the operational trade-off. They also listen for how you validate the approach using the right metric family, since ROC-AUC can look good while PR-AUC collapses in rare-event regimes. The best answers include how you would monitor post-launch for distribution shifts that change the effective prevalence.

Tip: Tie the metric choice to cost, for example false positives blocking good customers versus false negatives leaking fraud.
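
To make the cost framing concrete, here is a minimal sketch of picking a decision threshold by total cost rather than by a single metric. The scores, labels, and cost values are made-up illustration data, and `expected_cost` and `best_threshold` are hypothetical helper names, not a DoorDash method.

```python
def expected_cost(scores, labels, threshold, fp_cost, fn_cost):
    """Total cost of flagging every example whose score is >= threshold."""
    cost = 0.0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if flagged and label == 0:
            cost += fp_cost  # good customer blocked
        elif not flagged and label == 1:
            cost += fn_cost  # fraud leaked through
    return cost


def best_threshold(scores, labels, fp_cost, fn_cost):
    """Try each observed score (plus 'flag nothing') and keep the cheapest."""
    candidates = sorted(set(scores)) + [float("inf")]
    return min(
        candidates,
        key=lambda t: expected_cost(scores, labels, t, fp_cost, fn_cost),
    )


# Toy data (assumed): two positives among eight examples.
scores = [0.05, 0.10, 0.20, 0.30, 0.40, 0.60, 0.70, 0.90]
labels = [0, 0, 0, 0, 1, 0, 0, 1]
```

On this toy data, pricing a missed fraud case at 10x a false positive selects a threshold of 0.40, while reversing the costs pushes it up to 0.90. The same scores lead to different operating points once the costs change, which is the point interviewers want you to articulate.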

How would you explain the bias-variance tradeoff in the context of a production model?

This question checks whether you can match model complexity to stability, which matters when the marketplace shifts weekly and models must stay predictable. Interviewers want to hear how you decide between a simple baseline and a more complex model when the marginal offline gain may not survive production noise. Strong answers discuss how variance shows up as unstable rankings, volatile ETAs, or inconsistent fraud flags after retrains. You should connect the trade-off to retraining cadence, feature drift, and monitoring burden, not just a textbook curve. The strongest answers also describe how you use experiments and repeated retrains to test stability, not just a single offline benchmark.

Tip: Emphasize stability across retrains, since DoorDash cares about consistent marketplace behavior.

-

DoorDash uses this to probe reproducibility and experimentation discipline. Strong answers explain randomness from initialization, sampling, train-test splits, and nondeterministic pipelines, plus how leakage or subtle preprocessing changes can inflate results. Interviewers look for whether you can distinguish true model improvement from evaluation noise, especially when deciding what to ship. A good answer includes practical controls such as fixed seeds, versioned datasets, consistent feature pipelines, and repeated runs with confidence intervals. It also helps to mention how you would avoid “p-hacking” by predefining metrics and analysis plans for offline evaluation.

Tip: Mention repeated training runs and confidence intervals, not just “set a random seed.”
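
One way to show the repeated-runs idea in an interview is a small sketch like the one below. `train_and_evaluate` is a hypothetical stand-in that returns a noisy metric per seed; in practice it would wrap your real training and evaluation pipeline. The interval uses a normal approximation, which is an assumption; with only a handful of runs a t-based interval would be more appropriate.

```python
import random
import statistics


def train_and_evaluate(seed):
    """Hypothetical stand-in for a full train/eval run; returns a noisy metric."""
    rng = random.Random(seed)  # seeded so each run is reproducible
    return 0.80 + rng.gauss(0, 0.01)


def mean_with_ci(values, z=1.96):
    """Mean and an approximate 95% interval (normal approximation)."""
    mean = statistics.mean(values)
    half_width = z * statistics.stdev(values) / len(values) ** 0.5
    return mean, mean - half_width, mean + half_width


metrics = [train_and_evaluate(seed) for seed in range(10)]
mean, low, high = mean_with_ci(metrics)
```

If the intervals for the old and new model overlap heavily, the offline "win" may be evaluation noise rather than a real improvement.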

How would you interpret coefficients of logistic regression for categorical and boolean variables?

DoorDash often uses simpler models for interpretable risk scoring, merchant quality, or guardrail systems, so this question tests whether you can explain model behavior clearly. Strong answers cover how one-hot encoding changes coefficient interpretation and what the baseline category represents. Interviewers expect you to explain log-odds versus probability effects and why marginal impacts differ by operating point. You should also address pitfalls like multicollinearity and how regularization can shrink coefficients in ways that change interpretability. The best responses connect interpretation back to actionable decisions, such as threshold selection or policy design.

Tip: Explain the baseline category explicitly, because many mistakes come from misreading one-hot coefficients.
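
The log-odds versus probability distinction can be shown with a few lines of arithmetic. This sketch assumes a single one-hot or boolean indicator with coefficient `coef`, measured relative to the baseline category; the function names and example values are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def odds_ratio(coef):
    """Multiplicative change in odds when the indicator flips from 0 to 1."""
    return math.exp(coef)


def probability_shift(baseline_logit, coef):
    """Change in predicted probability for the same flip, at a given operating point."""
    return sigmoid(baseline_logit + coef) - sigmoid(baseline_logit)


coef = 0.7                                   # assumed coefficient, for illustration
near_half = probability_shift(0.0, coef)     # baseline probability 0.5
rare_event = probability_shift(-4.0, coef)   # baseline probability about 0.018
```

The odds ratio `exp(0.7)` is the same everywhere, but the probability shift is much larger near a baseline of 0.5 than in the rare-event regime. That is why a coefficient cannot be read directly as "adds X percentage points."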

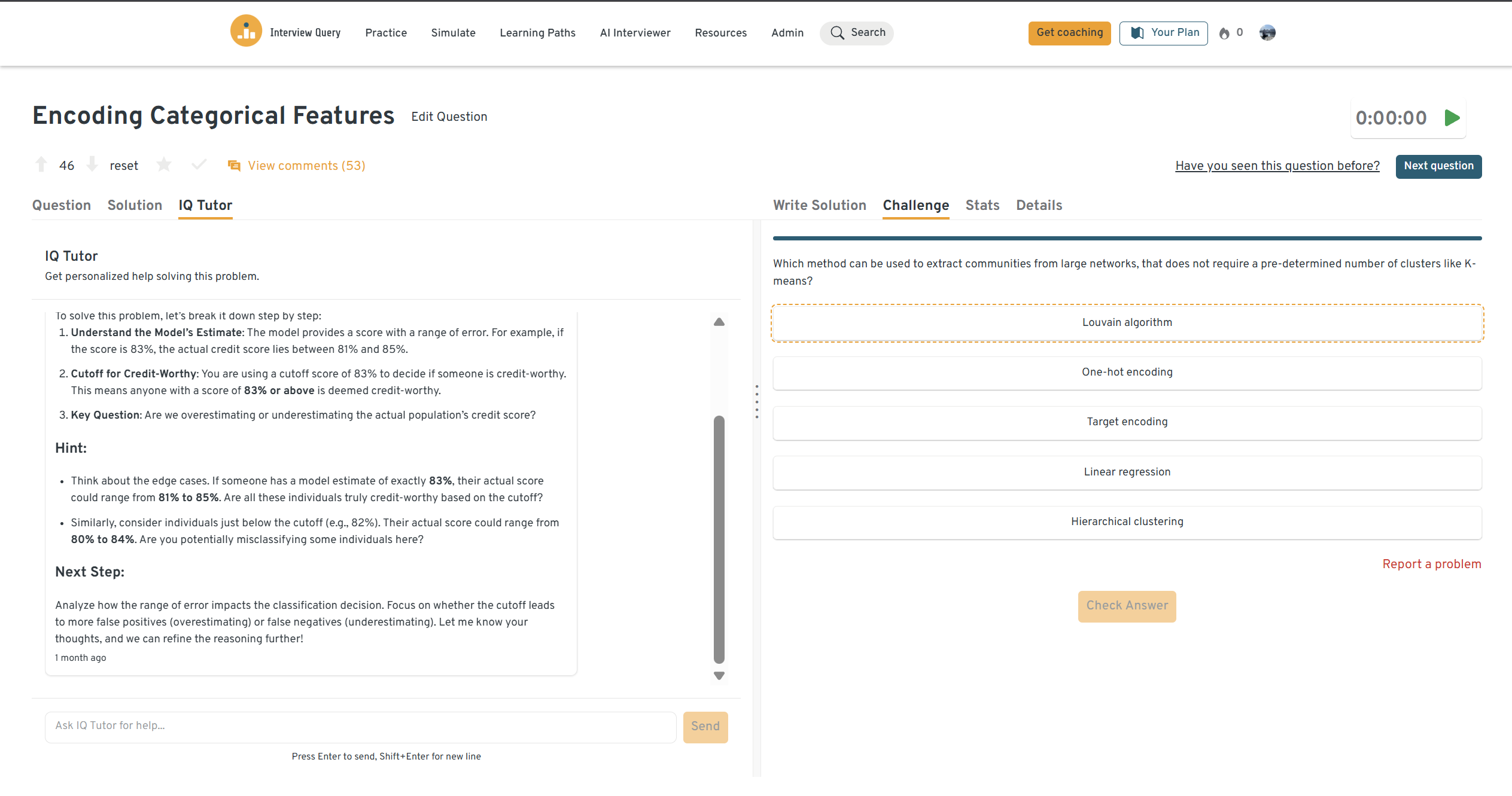

How would you encode a categorical variable with thousands of distinct values?

High-cardinality features show up in DoorDash item IDs, merchant IDs, and location encodings, so this tests whether you can balance performance with practicality. Strong answers compare hashing, target encoding, learned embeddings, and frequency bucketing, then explain leakage and cold-start trade-offs. Interviewers want to hear how you would evaluate stability when new categories arrive daily and how you control for exploding memory or serving cost. You should also discuss offline-to-online skew, since encoding strategies can behave differently in production. The strongest answers mention monitoring for cardinality growth and setting guardrails for unseen-category handling.

Tip: Call out cold-start handling for new merchants or items, because that is where encodings fail in production.
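
As one of the options mentioned above, the hashing approach can be sketched in a few lines. The bucket count, salt, and merchant ID strings are assumptions for illustration. The sketch uses `hashlib` rather than Python's built-in `hash()`, because string hashing with `hash()` is randomized per process by default, which would create offline-to-online skew.

```python
import hashlib


def hash_bucket(value, num_buckets=1024, salt="merchant_id"):
    """Map any category string to a stable bucket index in [0, num_buckets)."""
    digest = hashlib.sha256(f"{salt}:{value}".encode("utf-8")).hexdigest()
    return int(digest, 16) % num_buckets


# Unseen categories need no special case: they hash to some bucket and
# share its learned weight with whatever else collides there.
known = hash_bucket("merchant_123")
brand_new = hash_bucket("merchant_never_seen_before")
```

The trade-off to name out loud is collisions: fewer buckets save memory and serving cost but force unrelated merchants to share parameters.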


ML System Design and Production Questions

System design questions evaluate whether you can architect ML systems that operate reliably in real time, handle feedback loops, and degrade gracefully when data or services fail. DoorDash interviewers care about latency budgets, observability, rollout safety, and how model decisions affect the marketplace equilibrium.

How would you determine if the new model predicts delivery times better than the old model?

This question maps directly to DoorDash’s core problem: predicting delivery times accurately and safely improving the estimator. Strong answers go beyond MSE and talk about segment performance, calibration, and how errors affect customer trust and cancellation behavior. Interviewers expect you to propose both offline evaluation and an online experiment plan, including guardrails like late deliveries or support contact rates. You should address how you handle feedback loops, since shown ETAs change user and dasher behavior. The best answers include a rollback strategy if the new model worsens tail outcomes like p95 lateness.

Tip: Segment by context (distance, region, merchant prep time), because averages hide the failures that hurt trust.
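
A segment-level comparison could look like the sketch below. The record layout `(segment, actual_minutes, predicted_minutes)` and the nearest-rank percentile are assumptions chosen to keep the example small; you would run the same report for the old and new model and compare per segment.

```python
import math
from collections import defaultdict


def percentile(values, q):
    """Nearest-rank percentile, q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def segment_report(records):
    """records: iterable of (segment, actual_minutes, predicted_minutes)."""
    errors = defaultdict(list)
    for segment, actual, predicted in records:
        # Positive error means the order arrived later than predicted.
        errors[segment].append(actual - predicted)
    return {
        segment: {
            "mae": sum(abs(e) for e in errs) / len(errs),
            "p95_lateness": percentile(errs, 95),
        }
        for segment, errs in errors.items()
    }


records = [("short", 20, 18), ("short", 25, 25), ("long", 50, 40), ("long", 45, 47)]
report = segment_report(records)
```

Here the overall average hides that the "long" segment has a mean absolute error of 6 minutes versus 1 minute for "short", which is the kind of gap a single aggregate metric would miss.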

How would you monitor and evaluate the real-time performance of this model after deployment?

DoorDash uses monitoring questions to test whether you can own systems after launch. Strong answers cover layered monitoring: input distribution checks, prediction drift, calibration, latency, error budgets, and downstream marketplace metrics. Interviewers want to hear how you set alert thresholds that trigger investigation rather than panic, and how you separate data issues from model issues. You should also describe how you validate labels, since production labels can be delayed or biased by operations. The best responses include automated rollback or traffic shaping when anomalies exceed safe bounds.

Tip: Tie alerts to impact, such as cancellation rate or on-time delivery, not only model metrics.
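
For the input-distribution layer, one commonly used drift statistic is the population stability index (PSI). The sketch below is a minimal version, assuming fixed, sorted bin edges and non-empty samples; the bin edges and alert cutoff are judgment calls, not values from DoorDash.

```python
import math


def psi(expected, actual, bin_edges, eps=1e-6):
    """Population stability index between two samples over shared bins."""

    def fractions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # Number of edges at or below v gives the bin index (edges sorted).
            counts[sum(v >= edge for edge in bin_edges)] += 1
        # Floor at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_sample = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
live_sample = [6, 7, 8, 9, 10, 11, 12, 13, 14, 15]
drift = psi(training_sample, live_sample, bin_edges=[3.5, 6.5])
```

A statistic like this only tells you that inputs moved. Pairing it with a downstream metric such as cancellation rate is what separates an alert worth paging on from one worth a dashboard.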

-

Fraud is a first-class DoorDash use case, and this question tests end-to-end thinking from signals to action. Strong answers start by defining fraud types, attack surfaces, and what the system is optimizing for, since fraud prevention has direct customer friction costs. Interviewers expect feature ideas across user behavior, device signals, merchant anomalies, and network patterns, plus how you handle adversarial adaptation. You should discuss real-time scoring architecture, human review loops, and how you measure success without inflating false positives. The strongest answers include a plan for model iteration as fraud patterns evolve.

Tip: Treat false positives as a product problem, because blocking good orders can be more expensive than some fraud.

How would you design the recommendation system?

This recommendation system prompt transfers cleanly to DoorDash home feed and search ranking. Strong answers cover candidate generation, ranking, and re-ranking, plus how you incorporate context like time, location, and availability constraints. Interviewers want you to address cold start, exploration versus exploitation, and feedback loops from exposure bias. You should also discuss online evaluation metrics and guardrails that reflect marketplace health, not just clicks. The best answers include operational considerations like feature freshness, caching, and latency budgets.

Tip: Mention inventory and availability constraints, because DoorDash recommendations must stay feasible in real time.

How would you build the recommendation algorithm for a type-ahead search model?

Search is latency-sensitive, and this question tests whether you can balance relevance with speed. Strong answers separate retrieval from ranking, then explain how you keep inference light enough for interactive response times. Interviewers look for how you use embeddings, prefix matching, and popularity signals while preventing spam or low-quality results. You should also discuss how you evaluate success beyond CTR, including conversion and downstream satisfaction. The best responses include fallback behavior when features are missing or services degrade.

Tip: Treat latency as part of product quality, because slow suggestions are effectively wrong suggestions.
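
The retrieval half of a type-ahead system can be sketched with a sorted term list and binary search, then ranked by a popularity signal. This is a toy baseline under stated assumptions: the `PrefixIndex` class, the terms, and the popularity counts are hypothetical, and a production system would layer embeddings and quality filters on top.

```python
import bisect


class PrefixIndex:
    def __init__(self, term_popularity):
        # term_popularity: dict of term -> popularity count (assumed signal)
        self.popularity = {t.lower(): p for t, p in term_popularity.items()}
        self.terms = sorted(self.popularity)

    def suggest(self, prefix, k=3):
        """Return up to k terms starting with prefix, most popular first."""
        prefix = prefix.lower()
        # In a sorted list, all terms sharing a prefix are contiguous.
        start = bisect.bisect_left(self.terms, prefix)
        matches = []
        for term in self.terms[start:]:
            if not term.startswith(prefix):
                break
            matches.append(term)
        matches.sort(key=lambda t: (-self.popularity[t], t))
        return matches[:k]


index = PrefixIndex({"pizza": 90, "pita": 40, "pie": 60, "pasta": 80, "burger": 100})
```

With this data, `index.suggest("pi")` returns `["pizza", "pie", "pita"]`. Retrieval stays cheap (a binary search plus a short scan), which leaves most of the latency budget for any heavier ranking step.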

For more reps, drill applied system prompts in machine learning case studies and reinforce fundamentals in the machine learning learning path.

Coding and Applied Problem-Solving Questions

Coding rounds test whether you can write clean, efficient code under time constraints and reason about performance at scale. DoorDash ML engineers are expected to be strong software engineers, so these questions often probe algorithm choice, edge cases, and complexity.

-

This tests graph modeling and BFS reasoning, which maps to routing and state-transition problems in delivery systems. Strong answers explain why BFS guarantees shortest path and how you reduce neighbor lookup cost to avoid quadratic scans. Interviewers listen for clean data structures and boundary-case handling. You should also discuss complexity and how the approach scales. The best answers show how you’d optimize neighbor generation using pattern indexing.

Tip: Emphasize preprocessing to cut neighbor lookup cost, since that is the real scalability lever.
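
The pattern-indexing idea can be sketched as follows. The exact interview prompt is not given above, so this assumes a word-transformation problem where each step changes one letter; the function name and word list are illustrative.

```python
from collections import defaultdict, deque


def shortest_transformation(begin, end, words):
    """Number of words in the shortest chain from begin to end; 0 if none exists."""
    word_set = set(words)
    if end not in word_set:
        return 0

    # Pattern index: "h*t" -> ["hit", "hot", ...]. Built once, so neighbor
    # lookup avoids comparing every pair of words.
    patterns = defaultdict(list)
    for word in word_set | {begin}:
        for i in range(len(word)):
            patterns[word[:i] + "*" + word[i + 1:]].append(word)

    queue = deque([(begin, 1)])
    visited = {begin}
    while queue:
        word, steps = queue.popleft()
        if word == end:
            return steps  # BFS reaches each word first via a shortest chain
        for i in range(len(word)):
            for neighbor in patterns[word[:i] + "*" + word[i + 1:]]:
                if neighbor not in visited:
                    visited.add(neighbor)
                    queue.append((neighbor, steps + 1))
    return 0
```

For `("hit", "cog", ["hot", "dot", "dog", "lot", "log", "cog"])` this returns 5. Building the index costs O(N·L) patterns for N words of length L (each one an O(L) string slice), compared with O(N²·L) for comparing all pairs of words.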

-

This tests whether you can translate evaluation definitions into correct, defensive code. DoorDash uses classification metrics heavily in fraud and risk systems where costs are asymmetric. Strong answers compute TP, FP, and FN carefully, handle division-by-zero, and explain what the numbers mean operationally. Interviewers also want to hear when precision matters more than recall, and vice versa. The best responses tie metric trade-offs back to business impact, such as blocking legitimate orders versus missing fraud.

Tip: Explain how you would choose thresholds based on cost, not on maximizing a single metric.
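
A defensive version of the computation described above might look like this. Returning 0.0 when a denominator is zero is a convention chosen for the sketch; some teams prefer to return `None` or raise, and you should say which you picked and why.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels (1 = positive, 0 = negative)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    # Guard against division by zero when nothing is predicted or labeled positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall
```

For example, `precision_recall([1, 0, 1, 1, 0], [1, 1, 0, 1, 0])` has two true positives, one false positive, and one false negative, so both precision and recall are 2/3.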

-

This checks whether you understand optimization mechanics well enough to debug training behavior. Strong answers walk through loss definition, gradient updates, learning rate choice, and convergence diagnostics. Interviewers listen for numerical stability concerns and stopping criteria, not just equations. You should mention how you detect divergence and adjust learning rate. The best responses connect training stability to reliable model retraining pipelines.

Tip: Always include convergence checks, because production retrains need guardrails.
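
The original prompt is not shown above, so the sketch below assumes the simplest case: batch gradient descent fitting a line under mean squared error. It illustrates the three guardrails mentioned: a non-finite loss check, a learning-rate cut when the loss rises, and a tolerance-based stopping rule. Halving the learning rate is one simple policy among several.

```python
import math


def fit_line(xs, ys, lr=0.05, max_iters=10_000, tol=1e-10):
    """Fit y = w*x + b by batch gradient descent; returns (w, b, converged)."""
    w, b = 0.0, 0.0
    n = len(xs)
    prev_loss = float("inf")
    for _ in range(max_iters):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        loss = sum(r * r for r in residuals) / n
        if not math.isfinite(loss):
            raise ValueError("loss is not finite; training diverged")
        if loss > prev_loss:
            lr *= 0.5  # loss went up: the step was too large
        elif prev_loss - loss < tol:
            return w, b, True  # improvement below tolerance: stop
        grad_w = 2 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2 / n * sum(residuals)
        w -= lr * grad_w
        b -= lr * grad_b
        prev_loss = loss
    return w, b, False  # hit the iteration cap without converging


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b, converged = fit_line(xs, ys)
```

Returning the `converged` flag, rather than silently returning the last parameters, is the kind of guardrail an automated retraining pipeline can act on.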

Build a logistic regression model from scratch.

This tests end-to-end implementation discipline: stable sigmoid computation, log-likelihood, gradients, and iterative optimization. Strong answers focus on correctness, numerical stability, and interpretability. Interviewers want to see that you can implement core ML logic without relying on libraries, since this reflects debugging readiness. You should mention gradient checking and how you validate the implementation. The best answers also explain how you would regularize or calibrate in real systems.

Tip: Mention numerical stability explicitly, because overflow issues are common in real training loops.
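
A compact from-scratch version, using only the standard library, is sketched below. The hyperparameters and the optional L2 penalty are illustrative assumptions. The sigmoid is split by sign so that `math.exp` is only ever called on a non-positive argument, which avoids overflow for large-magnitude inputs.

```python
import math


def stable_sigmoid(z):
    """Sigmoid that never exponentiates a large positive number."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(w, b, x):
    return stable_sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def fit_logistic(X, y, lr=0.1, epochs=2000, l2=0.0):
    """Batch gradient descent on mean log loss, with an optional L2 penalty on w."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # gradient of log loss w.r.t. the logit
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * (gj / n + l2 * wj) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


X = [[0.0], [1.0], [2.0], [3.0]]
y = [0, 0, 1, 1]
w, b = fit_logistic(X, y)
```

On perfectly separable data like this toy set, unregularized weights keep growing with more epochs, which is a practical argument for setting `l2` above zero in real systems.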

Build a k-nearest neighbors classification model from scratch.

This tests distance computation and scalability trade-offs. Strong answers explain vectorized distance calculation, tie-breaking, and why naive KNN does not scale for large candidate sets. Interviewers want you to connect the method to retrieval use cases and discuss approximations or indexing in production. You should also address memory and compute costs. The best responses frame KNN as a baseline or component in a larger retrieval system rather than a final production model at DoorDash scale.

Tip: Explain how you would avoid full pairwise distance computation in large-scale systems.
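
A naive baseline with an explicit tie-breaking rule is sketched below. It assumes Euclidean distance and breaks vote ties in favor of the label with the closest neighbor; both choices are assumptions you should state. It scans every training point per query, which is exactly the cost that approximate indexes are meant to avoid.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Majority vote among the k nearest points; ties go to the closest neighbor's label."""
    ranked = sorted(
        ((math.dist(x, query), label) for x, label in zip(train_X, train_y)),
        key=lambda pair: pair[0],  # sort by distance only
    )
    nearest = ranked[:k]
    votes = Counter(label for _, label in nearest)
    top = max(votes.values())
    tied = {label for label, count in votes.items() if count == top}
    # nearest is ordered by distance, so the first tied label is the closest one.
    for _, label in nearest:
        if label in tied:
            return label


train_X = [(0, 0), (0, 1), (5, 5), (6, 5)]
train_y = ["a", "a", "b", "b"]
```

Each query costs O(n log n) here because of the full sort. Mentioning that a heap or an approximate nearest-neighbor index would replace it at scale shows you understand where the baseline breaks.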

To build speed, combine question practice with timed runs in Interview Query challenges and targeted practice in the Interview Query dashboard.

Behavioral and Collaboration Questions

Behavioral interviews evaluate whether you operate with an owner mindset and can collaborate across engineering, product, and operations teams. Strong answers follow the STAR format (Situation, Task, Action, Result), lead with your decision, and quantify outcomes.

-

This tests whether you can translate technical trade-offs into decisions partners can act on. Strong answers explain how you reframed the conversation around shared metrics and risk. Interviewers listen for clarity, empathy, and how you handled disagreement without escalating. The best responses include a concrete change in process or communication that prevented repeat confusion. You should quantify impact, such as faster alignment or fewer last-minute reversals.

Tip: Lead with the decision you enabled, then explain the technical context.

-

DoorDash values engineers who can present model trade-offs clearly under time pressure. Strong answers describe how you tailor message depth to the audience and keep focus on decision implications. Interviewers want to hear how you use visuals, guardrails, and crisp summaries to avoid misunderstanding. The best answers include how you handle pushback and iterate the narrative. Quantify what changed after your presentation, such as a roadmap shift or rollout decision.

Tip: Always include a recommendation, because “here are the results” is not enough at senior levels.

Why do you want to work with us?

This evaluates alignment with DoorDash’s marketplace and ML use cases. Strong answers tie your experience to logistics, recommendations, or fraud detection, and show excitement about operating ML in real time. Interviewers listen for specificity, not generic “I like ML at scale” statements. The best responses reference owning systems end to end and caring about measurable customer outcomes. Mentioning DoorDash’s three-sided marketplace makes the motivation more credible.

Tip: Connect your background to one DoorDash ML domain, then explain why the trade-offs excite you.

Tell me about a time your model caused an incident in production.

This tests accountability and operational maturity. Strong answers include rapid containment, root cause analysis, and the durable fixes you implemented, such as better monitoring or safer rollouts. DoorDash interviewers want to see calm ownership, not heroics. Quantify impact, such as latency increase, cancellation uplift, or fraud leakage, and the post-fix results. The best answers explain how you changed the process so the incident class could not recur.

Tip: Emphasize the permanent change you made, like canary policy, alerting, or feature validation.

Describe a disagreement with product or operations about fraud, ETA, or ranking trade-offs.

This tests cross-functional influence under competing incentives. Strong answers show how you used data, experiments, and guardrails to align the team. Interviewers listen for whether you respected operational constraints and customer experience. Quantify the outcome, such as reduced false positives, improved on-time rate, or faster experimentation cadence. The best responses end with how you institutionalized the decision framework for future trade-offs.

Tip: Show how you turned conflict into an experiment, because DoorDash rewards measurable resolution.

For more practice, explore curated machine learning interview questions on Interview Query.

In this ML system design walkthrough, Sachin, a senior data scientist, tackles a common question: how would you architect YouTube’s homepage recommendation system? You’ll learn how to approach real-time vs. offline recommendation pipelines, handle user context, optimize for different devices, and incorporate metrics like NDCG and MRR, ideal for machine learning engineers preparing for design rounds.

Role Overview: DoorDash Machine Learning Engineer

Machine learning engineers at DoorDash build the systems that make a three-sided marketplace work in real time. Unlike ML roles that live mostly in offline experimentation, DoorDash MLE roles are production-heavy. Engineers are expected to own the full ML lifecycle and to ship models that improve marketplace outcomes under latency, reliability, and feedback-loop constraints.

At a high level, DoorDash MLE work falls into four core domains: logistics and fulfillment, personalization and ranking, incentives and causal inference, and safety and fraud. Senior and staff engineers typically own larger surface areas, such as shared feature infrastructure, real-time inference services, or multi-region model rollouts.

Core responsibilities

- Build and deploy ML models that support ETA prediction, routing, assignment, and batching

- Develop ranking and recommendation systems for home feed and search

- Create models for incentives and marketplace balance using causal inference and experimentation

- Design ML infrastructure such as feature stores, online training pipelines, and real-time serving

- Build fraud detection and anomaly systems that prevent abuse while minimizing customer friction

- Monitor model performance, detect drift, and operate safe retraining and rollback workflows

What interviewers expect by seniority

| Level | What interviewers look for |
|---|---|
| Mid-level MLE | Strong coding, solid ML fundamentals, clear modeling choices |
| Senior MLE | End-to-end ownership, production monitoring, clear trade-offs |
| Staff MLE | Systems leadership, platform thinking, scaling across teams |

What makes the role unique at DoorDash

| Dimension | DoorDash emphasis |
|---|---|
| ML vs SWE | ML engineers are expected to be strong software engineers |
| Real-time constraints | Latency and reliability matter as much as accuracy |
| Feedback loops | Models change behavior, so evaluation must be marketplace-aware |
| Cross-functional work | Heavy collaboration with product and operations |
| Safety and fraud | Fraud prevention is core, not a side project |

How to Prepare for a DoorDash Machine Learning Engineer Interview

Preparing for a DoorDash machine learning engineer interview means training for a production environment where models influence a three-sided marketplace in real time. DoorDash interviewers evaluate whether you can write clean code under pressure, design ML systems that remain stable under feedback loops, and make decisions that protect consumer experience, merchant performance, and dasher efficiency at the same time.

A good prep plan mirrors the interview loop. You build coding speed first, then sharpen ML system design and applied modeling judgment, then rehearse behavioral stories that show ownership and learning.

Build coding speed and clarity

DoorDash’s technical screen and onsite include at least two coding-heavy rounds. You should be ready for medium-to-hard problems involving graphs, arrays, and scheduling logic, then explain complexity and edge cases clearly.

Use the Interview Query question library to drill coding prompts in a way that matches interview pacing. If you want a more structured cadence, practice under time pressure using Interview Query challenges, since they force you to reason quickly and write production-style logic rather than perfect but slow solutions.

Tip: After you solve a problem, do a fast “production pass” where you improve readability, add guardrails for bad inputs, and simplify the flow. DoorDash interviewers care about clean engineering, not just passing test cases.

Practice ML system design in marketplace settings

DoorDash ML system design interviews expect end-to-end architecture, including training pipelines, feature stores, real-time inference, monitoring, and rollback. Strong candidates show they can reason about feedback loops, latency budgets, and business guardrails.

Practice applied architecture using machine learning case studies, then reinforce the underlying trade-offs in the modeling and machine learning learning path. This combination helps you get reps in both structured ML reasoning and full-system design.

Tip: Always name the online metric you would protect first, like on-time delivery or cancellation rate. Then explain how your design prevents tail regressions.

Strengthen applied modeling and evaluation judgment

DoorDash models often run in noisy, shifting environments where offline gains can disappear in production. Interviewers want you to handle imbalance, drift, and asymmetric costs, especially in fraud detection and logistics.

Use the machine learning interview questions hub to practice how you explain model selection, evaluation, and monitoring. You should be comfortable discussing why PR-AUC may matter more than ROC-AUC for rare events and how thresholding decisions change customer friction.

Tip: Talk about stability across retrains. DoorDash cares about whether your model stays consistent after weekly training updates, not just whether it wins one offline benchmark.

Prepare fraud and safety reasoning explicitly

DoorDash has many fraud and safety use cases, and these interviews often test how you balance fraud prevention against customer experience. Interviewers look for feature ideas, risk controls, and how you measure success without over-blocking legitimate orders.

For targeted practice, use scenario-based prompts from the Interview Query question library and practice explaining metrics and thresholds the way you would to product and operations stakeholders.

Tip: State what the model is optimizing for before you propose features. Fraud systems fail when teams optimize the wrong objective.

Rehearse behavioral stories that show ownership

DoorDash emphasizes an owner mindset. Behavioral interviews probe for times you made trade-offs under pressure, handled an incident, or influenced a cross-functional decision.

Practice delivery using the AI interview tool, then pressure-test pacing and clarity through mock interviews. Strong answers lead with the decision you made, quantify impact, and explain what you changed to prevent repeat failures.

Tip: A production failure story is often your strongest asset if you explain containment, root cause, and long-term fixes clearly.

Build a senior-level weekly prep plan

| Prep area | Weekly target | What success looks like |
|---|---|---|
| Coding | 4–6 problems | Clean solutions with fast refactors |
| ML system design | 1–2 full designs | Clear trade-offs, metrics, rollback |
| Modeling judgment | 2–3 prompts | Strong evaluation and drift plan |
| Behavioral | 2 stories | STAR with measurable outcomes |
| Review | 1 simulation | Timed or live-style practice |

Tip: If you have an upcoming senior interview, spend more time on system design and production ownership than on advanced theory. DoorDash hires ML engineers who can ship.

Average DoorDash Machine Learning Engineer Salary

Machine learning engineers at DoorDash are among the highest-paid individual contributors (ICs) in the organization, reflecting the role’s direct impact on logistics efficiency, personalization, fraud prevention, and marketplace balance. Compensation is heavily equity-weighted and scales quickly at senior levels.

Based on the most recent data available, the median total compensation for a DoorDash machine learning engineer in the United States is approximately $371,000 per year, according to Levels.fyi. Total compensation typically includes base salary, annual bonus, and RSUs, with DoorDash sometimes using irregular vesting schedules (for example, 40% / 30% / 20% / 10%).

DoorDash Machine Learning Engineer Salary by Level (U.S.)

| Level | Approx. Role Scope | Median Total Compensation | Notes |
|---|---|---|---|
| E3 | Entry-level MLE | ~$181,000 | Limited ownership, scoped problems |
| E4 | Mid-level MLE | ~$260,000 | Owns models end to end |
| E5 | Senior MLE | ~$320,000–$340,000 | Leads projects, mentors others |
| E6 | Staff MLE | ~$540,000–$650,000 | Platform or multi-team ownership |
| E7+ | Principal / Senior Staff | $650,000+ | Rare, org-level impact |

Compensation grows steeply from E5 onward, where equity becomes the dominant component of total pay. Senior and staff-level machine learning engineers often earn more than equivalent generalist software engineers due to the specialized nature of the role and its direct business leverage.

Compensation structure and equity notes

- Base salary typically ranges from $180K to $300K, depending on level and location

- Equity grants are a major driver of upside, especially at E6+

- Bonus amounts are smaller relative to equity but still meaningful

- Some offers use non-linear vesting, which can front-load equity value early in the grant

For the most up-to-date breakdown by level, location, and vesting structure, see Levels.fyi.

FAQs

What does a machine learning engineer do at DoorDash?

Machine learning engineers at DoorDash own models end to end, from feature engineering and training to deployment and monitoring in production. The work directly supports a three-sided marketplace of consumers, merchants, and dashers, with use cases spanning ETA prediction, routing, recommendations, fraud detection, and experimentation. Unlike research-heavy roles, this position emphasizes production reliability, latency, and real business impact. Engineers are expected to collaborate closely with product, data, and infrastructure teams. Senior roles often extend beyond modeling into system design and technical leadership.

What is the DoorDash machine learning engineer interview process like?

The DoorDash machine learning engineer interview process typically includes a recruiter screen, a technical screen, and a virtual onsite loop. Candidates are evaluated across coding, ML fundamentals, ML system design, and behavioral interviews. Coding rounds resemble SWE-style interviews, while ML rounds focus on applied decision-making and production trade-offs. Behavioral interviews emphasize ownership, learning from failure, and DoorDash’s operator mindset. You can review similar formats by practicing questions in Interview Query’s machine learning interview questions section.

How hard is the DoorDash technical screen?

The technical screen is considered challenging, especially for senior machine learning engineer candidates. Coding problems usually fall in the medium to hard range and test data structures, algorithms, and clean problem solving under time pressure. Some tracks may also include an ML project deep dive or applied modeling discussion. Strong candidates demonstrate not just correct solutions, but also clear reasoning, edge case handling, and time complexity awareness. Practicing with realistic coding prompts and ML scenarios is critical.

What skills matter most for senior machine learning engineer roles at DoorDash?

For senior roles, DoorDash looks for strong applied ML experience combined with system-level thinking. This includes designing scalable inference pipelines, handling model drift, choosing appropriate evaluation metrics, and operating models safely in production. Communication skills are equally important, especially when aligning with product and engineering stakeholders. Senior candidates are also expected to mentor others and influence technical direction. Depth in Python, ML frameworks, and data tooling is assumed.

How much do machine learning engineers make at DoorDash?

In the United States, the median total compensation for a DoorDash machine learning engineer is around $371,000 per year, according to Levels.fyi. Compensation increases sharply at senior and staff levels, where equity becomes a major component of pay. Staff and principal machine learning engineers can earn well over $600,000 annually in total compensation. Exact numbers vary by level, location, and vesting structure. Equity grants may use non-linear vesting schedules, which can affect year-by-year payouts.

Prepare for Your DoorDash Machine Learning Engineer Interview With Interview Query

The DoorDash machine learning engineer interview is designed to identify engineers who can ship ML that improves a three-sided marketplace in production. Strong candidates show clean coding under pressure, clear ML system design thinking, and disciplined judgment around trade-offs like accuracy versus latency, fraud prevention versus customer friction, and offline gains versus online impact.

To prep efficiently, focus on the same skills DoorDash evaluates across the loop. Reinforce modeling and evaluation fundamentals with the modeling and machine learning learning path, then pressure-test end-to-end reasoning with machine learning case studies. Build speed and confidence with hands-on practice in the Interview Query challenges and targeted drilling in the Interview Query question library. If you want realistic rehearsal before the onsite, practice out loud using the AI interview tool or get direct feedback through mock interviews.

Ready to sharpen your DoorDash MLE prep? Start practicing on Interview Query today and walk into your interview with a repeatable system for coding, ML system design, and behavioral rounds.