Top 50 Machine Learning System Design Interview Questions (2025 Guide)

Introduction

Preparing for a machine learning interview this year means going beyond memorizing algorithms—you need to show you can design scalable systems and apply ML to real-world business problems. With the machine learning market projected to reach $113 billion, companies are actively hiring candidates who can both architect ML solutions and deliver impact at scale.

In this guide, we’ve broken down the most common machine learning interview questions into key categories: system design, algorithms and theory, applied modeling, case studies, recommendation systems, and coding challenges. Each section reflects the latest hiring trends so you can focus on what matters most to recruiters and technical interviewers.

Let’s dive into the most high-impact interview topics you’ll face in 2025.

Machine Learning System Design Interview Questions

Machine learning system design interview questions test your ability to architect scalable, production-ready ML solutions. You need to demonstrate technical fluency in structuring the end-to-end machine learning pipeline, designing systems for real-time data ingestion, model training, and deployment, scaling models to millions of users, and ensuring reliability, efficiency, and performance.

How would you build a machine learning system to generate Spotify’s Discover Weekly playlist?

To build a machine learning system for Spotify’s Discover Weekly, start with user data such as listening history, playlist data, and user interactions. The system could use collaborative filtering, content-based filtering, or a hybrid approach to recommend songs tailored to each user’s preferences. Evaluating the system requires metrics that capture user satisfaction and engagement with the playlist, for example saves, skips, and repeat listens.

How would you build a sentiment analysis model based on a WallStreetBets subreddit dataset? What potential problems could arise?

Building a sentiment analysis model for the WallStreetBets subreddit requires collecting and preprocessing post text, labeling a training set with sentiment, and training a classifier on it. Challenges include slang, sarcasm, and noise in user posts, as well as biased data, all of which can hurt model performance and accuracy. Continuous monitoring and retraining on new data would be vital to keep the model effective.

How would you build an ML model to predict a hotel’s occupancy rate?

To design an ML model for hotel occupancy prediction, gather data such as historical occupancy rates, special events, pricing, and other relevant signals. Feature engineering would be crucial to capture seasonal and temporal trends. Evaluate the model with metrics like mean absolute error (MAE) or root mean squared error (RMSE) by comparing its predictions against actual occupancy data.

How would you create a system to detect if a firearm is listed on a marketplace?

Creating a firearm detection system on a marketplace involves designing a model that processes text descriptions and images of listings to classify and identify firearm-related content. Training data would need to include a diverse set of firearm images and descriptions along with non-firearm items to improve classification accuracy. Challenges include accurately distinguishing firearms from similar-looking objects and adhering to regulations.
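The text side of such a system can be prototyped with a simple classifier before moving to a combined text and image model. Below is a minimal sketch of a multinomial Naive Bayes classifier over listing descriptions, written with only the Python standard library. The training examples, the labels "firearm" and "other", and the function names are all hypothetical; a real system would train on a large labeled set and combine this text score with an image model.

```python
import math
from collections import Counter, defaultdict

def tokenize(text: str) -> list[str]:
    # Lowercase and split on whitespace; a real system would use a proper tokenizer.
    return text.lower().split()

def train_nb(examples: list[tuple[str, str]]):
    """Fit a multinomial Naive Bayes model from (text, label) pairs."""
    class_counts = Counter(label for _, label in examples)
    word_counts = defaultdict(Counter)  # label -> token frequencies
    vocab = set()
    for text, label in examples:
        tokens = tokenize(text)
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab

def predict_nb(model, text: str) -> str:
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_counts.items():
        # Log prior plus Laplace-smoothed log likelihood of each token.
        score = math.log(n_docs / total_docs)
        total_words = sum(word_counts[label].values())
        for token in tokenize(text):
            count = word_counts[label][token]
            score += math.log((count + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical toy training data.
training = [
    ("9mm pistol with two magazines", "firearm"),
    ("used hunting rifle with scope", "firearm"),
    ("semi auto handgun and holster", "firearm"),
    ("kids water gun summer toy", "other"),
    ("leather boots size 10", "other"),
    ("camping tent for four people", "other"),
]
model = train_nb(training)
print(predict_nb(model, "pistol magazines for sale"))  # prints "firearm"
```

Note that "water gun" lands in the "other" class here, which hints at the similar-looking-object problem mentioned above: a text model alone needs many such hard negatives to separate toys and replicas from real firearms.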

How would you build a dynamic pricing system for Airbnb based on demand and availability?

A dynamic pricing system for Airbnb can be built on historical data about demand, pricing patterns, and availability trends. Machine learning models would predict prices that balance profitability and occupancy, accounting for demand fluctuations, competitor pricing, seasonal effects, and user experience. Real-time data integration would be essential for adapting to changing market conditions.

How would you come up with a machine learning system design to find good investors?

To identify good investors on a platform like Robinhood, analyze transaction-level data to find the patterns behind successful investment strategies. Start by defining what successful investing means, such as consistent portfolio growth or a high share of profitable trades. Then use those metrics to label users, train a machine learning model to classify them, and examine which characteristics distinguish high-performing investors.
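To make the "define metrics, then label users" step concrete, here is a small sketch that turns per-user monthly portfolio returns into labels using two metrics: overall growth and consistency. The thresholds (a 10% cumulative return and at least 60% positive months) and the input format are hypothetical choices for illustration; in practice you would set them with domain experts and then train a classifier on user features to predict these labels.

```python
from math import prod

def investor_metrics(monthly_returns: list[float]) -> dict[str, float]:
    """Summarize a user's monthly returns (0.02 means +2%)."""
    cumulative = prod(1 + r for r in monthly_returns) - 1
    positive_share = sum(r > 0 for r in monthly_returns) / len(monthly_returns)
    return {"cumulative_return": cumulative, "positive_share": positive_share}

def label_good_investor(monthly_returns, min_return=0.10, min_positive_share=0.6) -> bool:
    # Hypothetical rule: strong overall growth AND consistency across months.
    m = investor_metrics(monthly_returns)
    return m["cumulative_return"] >= min_return and m["positive_share"] >= min_positive_share

users = {
    "steady": [0.03, 0.02, 0.03, -0.01, 0.02, 0.03],
    "lucky": [-0.05, -0.04, 0.40, -0.03, -0.02, -0.01],
    "flat": [0.0, 0.01, -0.01, 0.0, 0.01, -0.01],
}
labels = {user: label_good_investor(r) for user, r in users.items()}
print(labels)  # {'steady': True, 'lucky': False, 'flat': False}
```

The consistency requirement is what separates "steady" from "lucky": both grew more than 10%, but the second did so with a single outsized month.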

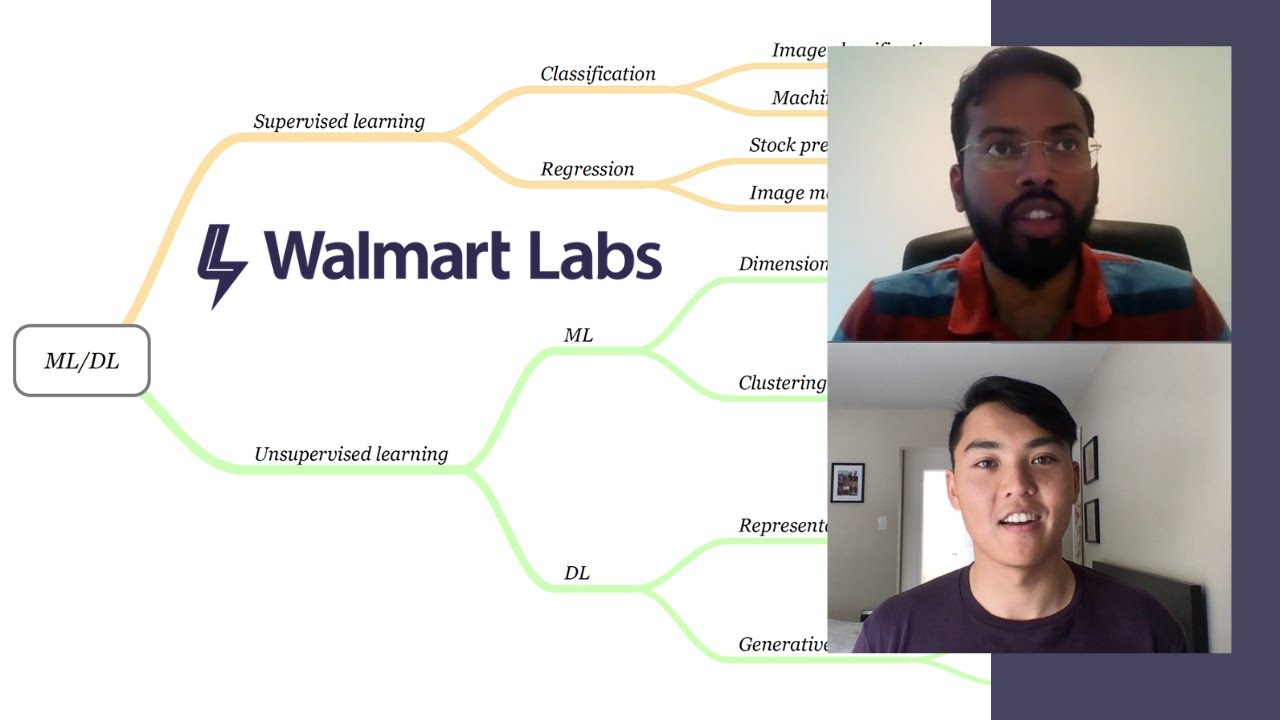

Machine Learning Algorithms and Theory Questions

Machine learning algorithms and theory questions evaluate how well you understand the mathematical foundations and modeling choices behind machine learning. Interviewers want to see if you can reason through trade-offs, explain key concepts like bias-variance, and apply core ideas such as regularization, uncertainty quantification, or ensemble methods. Expect a mix of comparison problems, definition explanations, and deeper modeling intuition checks.

Between linear regression and random forest regression, which model would perform better for predicting booking prices on Airbnb and why?

Which model performs better depends on the complexity of the data and the relationships between features. Linear regression assumes a linear relationship between the features and the price, so it suits simpler datasets and is easy to interpret. Random forest, a flexible ensemble of decision trees, can capture nonlinear relationships and feature interactions (for example, the effect of an extra bedroom may depend on the neighborhood), so it is often better suited to datasets where relationships are nonlinear or interactions between features are significant.

How would you explain the bias-variance tradeoff when choosing a model?

The bias-variance tradeoff involves balancing two sources of error in a model: bias, which comes from overly simplistic assumptions and leads to underfitting, and variance, which arises when a model is too complex and sensitive to fluctuations in the training data, causing overfitting. Good modeling means choosing the complexity level that minimizes total error by balancing the two.

Given a time-series forecasting model, how would you quantify the uncertainty of predictions?

To quantify uncertainty in time-series forecasts, produce prediction intervals that give a range of plausible values for each forecast instead of a single point (confidence intervals, by contrast, describe uncertainty in estimated parameters or the mean). Techniques such as bootstrapping, Bayesian inference, or models that estimate uncertainty natively, like Gaussian processes, can also be used to understand and account for prediction uncertainty.

When should you use regularization versus cross-validation in machine learning?

Regularization prevents overfitting by penalizing model complexity, shrinking coefficients toward zero and improving generalization, especially with high-dimensional data. Cross-validation, on the other hand, is mainly used for model evaluation and hyperparameter tuning: it estimates performance on unseen data and helps you choose the most robust, generalizable model. The two are complementary rather than alternatives; cross-validation is commonly used to choose the regularization strength.

What does the backpropagation algorithm do in neural networks? What are its drawbacks?

Backpropagation is the algorithm used to train neural networks by minimizing the loss function. It applies the chain rule backward through the network to compute the gradient of the loss with respect to each weight, and an optimizer such as gradient descent then uses those gradients to update the weights iteratively and reduce the overall error. Despite its effectiveness, drawbacks include the potential to get stuck in local minima, slow convergence in complex networks, and the vanishing gradient problem in deep networks.

How would you interpret coefficients of logistic regression for categorical and boolean variables?

In logistic regression, a coefficient represents the change in the log odds of the outcome for a one-unit change in the predictor, holding the other predictors constant. For a boolean predictor, the coefficient is the difference in log odds between presence (1) and absence (0). For a categorical variable encoded as dummy variables, each coefficient is the difference in log odds between that category and the reference category. Exponentiating a coefficient gives an odds ratio, which is often easier to communicate.

What are MLE and MAP? What is the difference between the two?

Maximum Likelihood Estimation (MLE) chooses the parameter values that maximize the likelihood of the observed data under the model. Maximum A Posteriori (MAP) estimation instead maximizes the posterior, combining the data likelihood with a prior distribution over the parameters, which makes it a Bayesian approach. With a uniform prior, MAP reduces to MLE.

What are the key differences between classification models and regression models?

Classification models predict categorical labels or classes, typically by learning decision boundaries that separate the categories. Regression models predict continuous outcomes by estimating the relationship between the input features and a continuous target variable; in linear models, that relationship is quantified by the regression coefficients.

What is the concept of LDA (Linear Discriminant Analysis) in machine learning?

Linear Discriminant Analysis (LDA) is a supervised technique used for both classification and dimensionality reduction. It finds linear combinations of features that best separate two or more classes by maximizing the distance between class means while minimizing the variation within each class, projecting the data onto a lower-dimensional space that preserves class-discriminatory information.

Compare bagging and boosting algorithms and explain tradeoffs between the two.

Bagging, or Bootstrap Aggregating, enhances model stability and accuracy through averaging predictions from multiple independent models trained on bootstrapped data subsets, effectively reducing variance. In contrast, boosting sequentially builds models to correct errors of prior ones, focusing on reducing bias and tackling challenging instances, often achieving higher accuracy but risking overfitting and higher computational costs. Bagging typically offers robustness with simpler computations, but might miss intricate patterns compared to boosting.
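The structural difference between the two is easiest to see side by side. The sketch below uses one-feature regression stumps as the base learner, a simplification chosen for brevity (libraries typically use full decision trees): bagging fits stumps independently on bootstrap samples and averages them, while boosting fits each new stump to the residuals left by the previous ones, scaled by a learning rate. All names and the toy data are illustrative.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Find the threshold split on x that minimizes squared error; return a predictor."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        if not left or not right:
            continue
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # all x identical: fall back to predicting the mean
        m = mean(ys)
        return lambda x: m
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm

def bagging(xs, ys, n_models=25, seed=0):
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(len(xs)) for _ in xs]  # bootstrap sample with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    # Independent models combined by averaging -> mainly reduces variance.
    return lambda x: mean(m(x) for m in models)

def boosting(xs, ys, n_rounds=25, learning_rate=0.5):
    base = mean(ys)
    models = []
    residuals = [y - base for y in ys]
    for _ in range(n_rounds):
        stump = fit_stump(xs, residuals)  # each model targets the current errors
        models.append(stump)
        residuals = [r - learning_rate * stump(x) for x, r in zip(xs, residuals)]
    # Sequential models summed together -> mainly reduces bias.
    return lambda x: base + learning_rate * sum(m(x) for m in models)

def mse(model, xs, ys):
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))

xs = list(range(20))
ys = [x * x / 20 for x in xs]  # a smooth nonlinear target
print(mse(bagging(xs, ys), xs, ys), mse(boosting(xs, ys), xs, ys))
```

Because a single stump is a high-bias learner, averaging many of them (bagging) cannot get much beyond one split's shape, while boosting keeps adding splits and drives training error down; that same behavior is why boosting needs a learning rate and a limit on rounds to avoid overfitting.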

Machine Learning Case Studies

Machine learning case study questions test your ability to solve real-world business problems by building practical, end-to-end models. Interviewers are looking for candidates who can define assumptions, select the right features, reason through trade-offs, and structure a model development approach clearly. Expect open-ended scenarios where your ability to think systematically matters just as much as your technical depth.

Describe how you would build a model to predict Uber driver ETAs after a rider requests a ride.

This involves building a model to estimate the expected time of arrival (ETA) for a ride request. You would need sufficient historical trip data, with features such as distance, location, traffic conditions, and time of day, and you should check whether the dataset is comprehensive and representative enough for the model to make accurate predictions.

How would you build a model to predict whether an Uber driver will accept a ride request?

This requires selecting an appropriate algorithm and identifying the features that influence a driver’s decision to accept a ride request. Candidate classifiers include logistic regression, random forests, and gradient boosting, each with its own tradeoffs in interpretability, training speed, and ability to handle complex data.
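Of the classifiers mentioned, logistic regression is the most interpretable starting point. The sketch below trains one with batch gradient descent in plain Python. The two features (pickup distance and surge multiplier) and the toy data are hypothetical; a real model would use many more features, standardized inputs, and a library implementation.

```python
import math

def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def train_logistic_regression(X, y, lr=0.1, epochs=3000):
    """Fit weights and bias by batch gradient descent on the mean log loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log loss w.r.t. the logit is (predicted probability - label).
            error = predict_proba(w, b, xi) - yi
            for j in range(d):
                grad_w[j] += error * xi[j] / n
            grad_b += error / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Hypothetical features: [pickup distance in km, surge multiplier]; label 1 = accepted.
X = [[0.5, 1.0], [1.0, 1.5], [1.5, 1.0], [2.0, 2.0],
     [4.0, 1.0], [5.0, 1.2], [6.0, 1.0], [7.0, 1.5]]
y = [1, 1, 1, 1, 0, 0, 0, 0]
w, b = train_logistic_regression(X, y)
print(predict_proba(w, b, [1.0, 1.2]), predict_proba(w, b, [6.5, 1.2]))
```

The learned weights are directly explainable: a negative weight on pickup distance means longer pickups lower the odds of acceptance, which is the kind of interpretability tradeoff that tree ensembles give up in exchange for capturing interactions.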

How would you build a bank fraud detection model with limited labeled data?

With limited labeled data, you could use semi-supervised learning or anomaly detection to build a fraud detection model. Clustering or outlier detection can flag suspicious transactions when explicit labels are scarce, and incorporating user feedback as new labels can further refine the model’s accuracy.

You have a categorical variable with thousands of distinct values; how would you encode it?

At this cardinality, one-hot encoding would create thousands of sparse columns, and ordinal encoding imposes an arbitrary order on the categories, so both are usually poor fits. Better alternatives include target encoding, frequency encoding, the hashing trick, or learned embeddings, which handle the memory and computation challenges while preserving useful information from the categorical variable.

How would you design a pizza delivery no-show prediction model?

To design a no-show prediction model, consider features such as order time, order location, customer history, and external factors like weather. By learning patterns in these features from past orders, the model predicts the likelihood that a customer will not show up to receive the order.

How would you estimate someone’s birthday without labeled data?

Without labels, you can rely on indirect signals such as user engagement history, posts related to birthday events, and spikes in interactions (for example, birthday wishes from friends) around particular dates. Models built on these cues could then estimate the month and day of a user’s birthday.

How would you assess a clustering algorithm without pre-labeled data?

A clustering algorithm can be evaluated with internal validation metrics like the silhouette score, which measures how compact each cluster is and how well separated it is from the others. Domain knowledge can also be applied to check whether the clusters make sense given known attributes of the data.

How would you fix an algorithm that underprices products on an e-commerce site?

To diagnose an underpricing issue in an e-commerce algorithm, start by analyzing the input features such as product availability, demand, and logistics costs to identify potential inaccuracies or missing data. Consider conducting an A/B test with a modified algorithm to compare performance, and utilize statistical methods or machine learning models to adjust pricing dynamically based on the identified key factors.
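For the A/B test, one simple readout is a two-sample comparison of per-order margin between the current pricing algorithm (control) and the modified one (treatment). The sketch below computes Welch’s t statistic and approximates the two-sided p-value with the standard normal distribution, which is reasonable only for large samples. The margin figures and the function name are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist, mean, variance

def welch_test(control: list[float], treatment: list[float]) -> tuple[float, float]:
    """Return (t statistic, approximate two-sided p-value) for a difference in means."""
    se = sqrt(variance(control) / len(control) + variance(treatment) / len(treatment))
    t = (mean(treatment) - mean(control)) / se
    # Normal approximation to the t distribution; acceptable for large samples.
    p_value = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, p_value

# Hypothetical per-order margins (in dollars) from each arm of the test.
control = [2.1, 1.8, 2.4, 1.9, 2.0, 2.2, 1.7, 2.3] * 25
treatment = [2.6, 2.4, 2.9, 2.5, 2.7, 2.3, 2.8, 2.6] * 25
t, p = welch_test(control, treatment)
print(f"t = {t:.2f}, p = {p:.4f}")
```

Margin alone is not the whole story: a fix that raises prices can also lower conversion, so in practice you would track conversion and revenue per visitor alongside margin before rolling the change out.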

How would you design a health insurance risk prediction model?

Designing a health insurance risk prediction model requires selecting relevant features such as age, medical history, and lifestyle. Use these features to train a classification model that predicts the likelihood of major health issues, and compare algorithms such as logistic regression or decision trees to find the one that best identifies high-risk individuals.

How would you handle missing square footage data in a real estate dataset?

To address missing square footage data in a real estate dataset, options include imputing missing values using statistical methods such as mean or median imputation, or employing more advanced techniques like regression imputation based on other available features. Alternatively, excluding these entries or applying machine learning techniques to model the missing data are viable strategies depending on the specific data context and model objectives.
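Here is a minimal sketch of the median-imputation option, refined slightly by taking the median within groups of similar homes (here, the same bedroom count) and falling back to the overall median. The record fields sqft and bedrooms are hypothetical. The added was_imputed flag keeps the fact that a value was missing available to the model, since missingness itself can be informative.

```python
from statistics import median

def impute_sqft(rows: list[dict]) -> list[dict]:
    """Fill missing 'sqft' with the median sqft of homes with the same bedroom count."""
    known = [r for r in rows if r["sqft"] is not None]
    overall = median(r["sqft"] for r in known)
    by_bedrooms = {}
    for r in known:
        by_bedrooms.setdefault(r["bedrooms"], []).append(r["sqft"])
    group_median = {k: median(v) for k, v in by_bedrooms.items()}

    result = []
    for r in rows:
        filled = dict(r)  # copy so the input rows are not modified
        filled["was_imputed"] = r["sqft"] is None
        if filled["was_imputed"]:
            # Fall back to the overall median when no similar homes exist.
            filled["sqft"] = group_median.get(r["bedrooms"], overall)
        result.append(filled)
    return result

homes = [
    {"bedrooms": 2, "sqft": 900},
    {"bedrooms": 2, "sqft": 1100},
    {"bedrooms": 3, "sqft": 1500},
    {"bedrooms": 2, "sqft": None},
    {"bedrooms": 5, "sqft": None},
]
for home in impute_sqft(homes):
    print(home)
```

Here the missing two-bedroom home is filled with 1000.0 (the two-bedroom median), and the five-bedroom home, which has no comparable listings, falls back to the overall median of 1100.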

Applied Modeling Questions

Applied modeling questions test how you navigate real-world challenges like noisy data, outliers, missing information, and confounding factors. Instead of textbook answers, interviewers look for clear, structured reasoning — how you balance trade-offs, diagnose problems, and adapt your modeling approach to imperfect conditions. Strong responses show not just technical skill, but judgment in how to handle uncertainty and complexity.

How would you justify the complexity of building a neural network?

To justify the use of a neural network to non-technical stakeholders, emphasize the model’s ability to capture complex patterns and relationships in data that simpler models might miss. Explain how accuracy improvements from using neural networks can lead to better decision-making and ultimately drive business value.

How do we give each rejected applicant a reason why they got rejected?

To give a reason for a loan rejection without access to feature weights, use model-agnostic interpretability methods such as LIME or SHAP, which identify the features that contributed most to an individual rejection decision. These techniques approximate the model’s behavior around a specific prediction, so you can tell each applicant which factors weighed most heavily against them.

One million rides: how would we know if we have enough data to create an accurate enough model?

While one million ride records is substantial, you still need to verify data quality, diversity, and relevance to ensure accurate ETA predictions. Consider data granularity, coverage across conditions (e.g., times of day, weather), and the presence of outliers or missing values. A learning curve, which plots validation error against training set size, also shows whether adding more data is still improving the model.

How would you test whether having more friends now increases the probability that a Facebook member is still an active user after 6 months?

To test this hypothesis, you could fit a logistic regression with the number of friends as the independent variable and active status after six months (active or not) as the binary dependent variable. A positive, significant coefficient would suggest a relationship between having more friends and staying active. Because this is observational data, you may also apply propensity score matching to mitigate confounding variables and make the results more robust.

How would you correct for extreme outliers in flight delay prediction data?

To correct for extreme outliers in flight delay prediction data, you could use winsorization (capping values at chosen percentiles) or trimming to reduce their effect. Other approaches include robust methods like median (quantile) regression, or flagging outliers with a modified z-score, which is based on the median absolute deviation, and then treating them separately.

How would you test if having more friends increases Facebook user retention after six months?

Testing whether having more friends increases Facebook user retention can start with an observational study that collects users’ friend counts and retention outcomes. Survival analysis can estimate how the number of friends affects the likelihood of retention over the six-month period, and adjusting for covariates like age, activity level, and region helps control for potential confounding factors.

How would you explain the bias-variance tradeoff with regard to building and choosing a model to use?

The bias-variance tradeoff is central to balancing a model’s accuracy and its ability to generalize. High bias leads to underfitting, where the model is too simplistic, while high variance leads to overfitting, where the model is too complex and closely tied to the training data. The goal is a model that performs well on unseen data by minimizing the combined error from bias and variance.

What are some reasons why measuring bias would be important in building a model that predicts food preparation times?

Measuring bias in a model that predicts food preparation times is important to ensure accurate and fair predictions across scenarios. A model with high bias may systematically under- or overestimate preparation times, leading to operational inefficiencies and customer dissatisfaction. Accounting for bias helps improve the model’s accuracy and keeps its predictions reliable and equitable.

Why would the same machine learning algorithm generate different success rates using the same dataset?

The same algorithm can yield different success rates for several reasons, including different initial parameter settings, different data preprocessing steps, or stochastic components within the algorithm itself, such as random weight initialization, data shuffling, or random train/test splits. Differences in hyperparameter tuning, feature selection, and training procedure can also lead to different performance on the same dataset.
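A quick way to see the stochastic component at work: splitting the same data with different random seeds produces different train/test partitions, and therefore potentially different scores. The helper below is a hypothetical illustration using only the standard library. Fixing the seed makes runs reproducible, and averaging results over several seeds gives a fairer estimate of an algorithm’s performance.

```python
import random

def train_test_split(data: list, test_fraction: float = 0.25, seed=None):
    """Shuffle a copy of the data with the given seed and split it into train and test."""
    rng = random.Random(seed)
    shuffled = list(data)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

data = list(range(20))
_, test_a = train_test_split(data, seed=1)
_, test_b = train_test_split(data, seed=2)
_, test_a_again = train_test_split(data, seed=1)
print(test_a == test_b)        # different seeds: almost certainly different test sets
print(test_a == test_a_again)  # same seed: identical test sets, so runs are reproducible
```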

Recommendation and Search Engine Questions

Recommendation and search engine questions test your ability to design machine learning systems that personalize user experiences at scale. These problems focus on data collection strategies, ranking algorithms, collaborative and content-based filtering, and how to optimize performance for millions of users in real time. Strong answers balance technical system design with considerations like user engagement, privacy, and computational efficiency.

How would you build a restaurant recommendation engine for Facebook?

To build a restaurant recommendation engine for Facebook, start by gathering data on user interactions, location, and preferences. Use collaborative filtering or content-based filtering to suggest restaurants based on this data. Considerations include data privacy, user consent, and keeping recommendations diverse and useful.

How would you design a YouTube video recommendation system to maximize user engagement?

Designing a YouTube video recommendation system involves using user activity data, video metadata, and collaborative filtering to predict user preferences. Critical factors include understanding user behavior, protecting user privacy, and delivering fresh, relevant content to keep users engaged.

How would you build a job recommendation engine for LinkedIn profiles?

Building a job recommendation engine for LinkedIn involves analyzing user profiles, job application history, and user responses to job-related questions. Machine learning models can then predict suitable job matches by combining collaborative filtering and content-based strategies.

How would you design a type-ahead search recommendation system for Netflix?

To design a type-ahead search recommendation system for Netflix, use user search patterns and interaction histories to improve real-time predictions. Rank candidate completions by relevance and popularity while keeping latency low and results accurate, so the experience stays seamless as the user types.

How would you evaluate a model that predicts news relevance when shared on Twitter?

To evaluate a model predicting news relevance, use metrics such as precision, recall, F1-score, and accuracy. Cross-validation helps confirm that the model generalizes to different data samples, and if the model is deployed, A/B testing shows its actual impact on user engagement.

How would you scale model training across millions of Netflix users and movies?

To scale model training for Netflix’s dataset, use distributed computing techniques such as parallelization and data partitioning to process the large data volume efficiently. Cloud resources (for example, AWS) combined with frameworks like Apache Spark or TensorFlow can train the model on a distributed architecture, spreading computation across many nodes.

How would you create a rental housing recommendation engine?

Creating a rental housing recommendation engine involves collaborative filtering, content-based filtering, or a hybrid approach that combines user demographics, preferences, and engagement metrics with property features such as location, price, and amenities. This approach personalizes listings and improves user satisfaction by recommending the most relevant rental options.

How would you design a facial recognition system for employee access and time tracking?

In designing a facial recognition system for employee access and time tracking, it is crucial to ensure high accuracy and security by using a reliable dataset to train the recognition model, focusing on key facial features and employing real-time verification techniques. Implementing robust data privacy practices, such as encryption and stringent access controls, is essential to protect the sensitive information involved in facial recognition systems.
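At the verification step, many face recognition systems compare a numeric embedding of the captured face against the embedding stored when the employee enrolled. The sketch below assumes such embeddings already exist (producing them requires a trained face model, which is out of scope here). The vectors, employee IDs, function names, and the 0.8 threshold are hypothetical; the threshold would be tuned on validation data to trade off false accepts against false rejects.

```python
import math
from datetime import datetime

def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def verify_and_log(employee_id, probe, enrolled, log, threshold=0.8):
    """Grant access and record a timestamp only if the probe matches the enrolled embedding."""
    reference = enrolled.get(employee_id)
    if reference is None:  # unknown employee ID
        return False
    if cosine_similarity(probe, reference) >= threshold:
        log.append((employee_id, datetime.now()))  # time-tracking entry
        return True
    return False

enrolled = {"emp-001": [0.9, 0.1, 0.3], "emp-002": [0.1, 0.8, 0.5]}
attendance = []
print(verify_and_log("emp-001", [0.88, 0.12, 0.31], enrolled, attendance))  # True
print(verify_and_log("emp-002", [0.9, 0.1, 0.3], enrolled, attendance))     # False
```

Storing only embeddings rather than raw images, and encrypting them at rest, is one way to apply the data privacy practices described above.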

Algorithm Coding Questions

Algorithm coding questions test your ability to implement machine learning-related logic from scratch without relying on libraries like scikit-learn. These problems focus on coding precision, algorithmic thinking, memory efficiency, and a deep understanding of how core algorithms and data structures, such as random forests, linked lists, and string operations, work internally. Interviewers want to see clean, optimized code that handles edge cases gracefully.

Write a function to calculate precision and recall from a confusion matrix

Given a 2-D confusion matrix of predicted and actual values, the function should extract the true positives (TP), false positives (FP), and false negatives (FN), then compute precision as TP / (TP + FP) and recall as TP / (TP + FN).

Build a random forest model from scratch using only pandas and numpy

The task involves creating a random forest model without using scikit-learn, using permutations of the feature columns to generate all subspaces of the data. Each decision tree in the forest checks one permutation’s feature columns in the data frame and splits the data based on new_point, and the forest’s prediction is the majority vote of its trees.

Write a function to find the maximal substring shared by two strings

This involves writing a function to find the longest contiguous sequence of characters common to both input strings. If multiple substrings share the maximum length, the function can return any one of them.

Write a function to search for a value in a linked list.

To search for a value in a linked list, iterate through the list beginning with the head node and compare each node’s value with the target. Return True immediately if the target is found. If the end of the list is reached without finding the target, return False.

Write a function to find the shortest word transformation sequence.

To find the length of the shortest transformation sequence from begin_word to end_word, use breadth-first search (BFS). Starting at begin_word, the search explores every valid word reachable by changing one character at a time, tracking the depth of the path until end_word is reached. Because BFS visits words in order of distance, the first time it reaches end_word gives the shortest sequence.

Given a rotated sorted array, write an efficient search function.

Use a modified binary search to find the target value in a rotated sorted array efficiently in O(log n) time. The algorithm identifies the pivot and uses the properties of the sorted subarrays to narrow down where to search for the target value, so the search space shrinks logarithmically.

Write a function search_list to find if a value exists in a linked list.

Iterate over the linked list starting from the head node and check whether each node’s value matches the target value. If a match is found, return True; otherwise, continue until the end of the list, returning False if the value does not exist in the list.

Given two strings A and B, write a function can_shift to return whether or not A can be shifted some number of places to get B.

To determine whether string A can be shifted to form string B, first check that A and B have the same length. Then concatenate A with itself, which contains every possible rotation of A as a substring, and check whether B is a substring of A + A. If it is, A can be shifted to produce B; otherwise, it cannot.

Given a dictionary with keys of letters and values of a list of letters, write a function closest_key to find the key with the input value closest to the beginning of the list.

To identify the key that has the input value closest to the beginning of its corresponding value list, iterate through the dictionary and track the minimum index at which the input value appears. The dictionary key with the smallest index position for this letter is the closest key. Return this key.
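A direct implementation of that approach might look like the sketch below. It assumes the function returns None when the value appears in no list (the problem statement does not say) and that ties go to whichever key is encountered first.

```python
def closest_key(mapping: dict[str, list[str]], value: str):
    """Return the key whose list contains `value` at the smallest index, or None."""
    best_key, best_index = None, None
    for key, letters in mapping.items():
        if value in letters:
            index = letters.index(value)  # first occurrence in this key's list
            if best_index is None or index < best_index:
                best_key, best_index = key, index
    return best_key

example = {"a": ["b", "c", "e"], "b": ["c", "e"], "c": ["a", "b"]}
print(closest_key(example, "e"))  # b ("e" is at index 1 in b's list, index 2 in a's)
```

This makes one pass over every list, so it runs in time proportional to the total number of letters stored in the dictionary.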

Conclusion

Preparing for machine learning interviews in 2025 demands more than technical knowledge — it requires strategic thinking, system design fluency, and practical modeling skills. The questions in this guide cover every critical area: from designing scalable systems to reasoning through algorithm trade-offs, solving open-ended business problems, and building efficient solutions from scratch.

As you prepare, focus on understanding the why behind each solution — not just memorizing answers. Practice structuring your thoughts clearly, asking clarifying questions, and weighing trade-offs in real-world scenarios. With the right mindset and preparation, you’ll be ready to tackle even the toughest machine learning interview challenges and stand out to top employers.

For more resources, explore Interview Query’s AI Interview Simulator and targeted Machine Learning Interview Path to sharpen your system design and modeling skills. You can also check out our Data Science Course, Python Machine Learning Questions, Amazon Machine Learning Interview Guide, and Google Machine Learning Interview Guide for even deeper practice.