80+ Python ML Interview Questions (2025 Guide)

Introduction

Python remains the default language for machine learning this year thanks to its rich libraries (NumPy, pandas, scikit-learn, TensorFlow, PyTorch), quick prototyping, and strong community support. According to Fortune Business Insights, the global machine learning market is projected to reach $310 billion by 2032, making Python fluency an essential interview skill. Most technical interviews now expect you to reason in Python, write clean code, and translate business problems into data workflows.

Whether you’re a data scientist, ML engineer, or AI engineer, this guide is built to help you level up, from mastering the basics to designing production-ready ML systems.

How to use this guide:

- Start with basics (Python + ML concepts) to firm up core knowledge.

- Practice coding (from-scratch implementations, scikit-learn usage).

- Tackle scenario-based questions to map models to real business problems.

- Review MLOps topics (deployment, monitoring, data/feature pipelines).

This guide gives you everything you need, from technical depth to real-world reasoning, to ace your next Python machine learning interview.

Python Machine Learning Basics (For Freshers)

If you’re just starting your ML journey, this is where you build your foundations fast with core Python data structures, essential NumPy operations, and the ML concepts interviewers expect at the entry level. This section targets machine learning interview questions for freshers, focusing on practical understanding you can apply in code.

Read more: Top 27 Pandas Interview Questions with Answers

Core Python Machine Learning Interview Questions

Learn how lists vs NumPy arrays differ in memory layout and performance, when to use vectorization for speed, and how shallow vs deep copy impacts mutability and bugs. These topics frequently appear in Python interview questions for freshers because they determine real-world data pipeline efficiency.

Q1. What is the main difference between Python lists and NumPy arrays in ML workflows?

Lists are flexible containers for mixed data types, but are slow for numerical computation. NumPy arrays store homogeneous data in contiguous memory, enabling vectorization and fast matrix operations crucial for ML tasks like batch gradient updates.

Tip: Keep this distinction in mind, using lists instead of arrays in ML code can drastically increase runtime.

Q2. Why is NumPy preferred over pure Python for ML computations?

NumPy uses optimized C libraries under the hood, offering vectorized operations and broadcasting. This drastically reduces loop overhead, making ML algorithms like linear regression or k-means clustering faster and more scalable.

Tip: If a loop looks slow in Python, rewrite it with NumPy, since it cuts execution time by 10–100×.

Q3. Explain shallow vs deep copy in the context of NumPy arrays.

A shallow copy (like slicing) creates a new reference but shares the same underlying data, while a deep copy duplicates the entire array. In ML pipelines, shallow copies can cause data leakage or unintended feature modification if not handled properly.

Tip: Always use copy.deepcopy() when modifying arrays during preprocessing to avoid unexpected value changes downstream.

Q4. How does vectorization improve ML code?

Vectorization allows entire arrays or matrices to be processed at once, avoiding Python loops. For instance, updating all weights in a neural network can be expressed in one NumPy operation, improving speed and readability.

Tip: Interviewers love examples, show how a single vectorized line replaces multiple nested loops.

Q5. What are potential pitfalls of broadcasting in NumPy?

Broadcasting simplifies arithmetic across mismatched shapes but may cause unintended memory expansion or shape errors. For example, aligning a (1000,1) array with a (1,1000) array can lead to large intermediate matrices if not carefully structured.

Tip: Always check array shapes before arithmetic operations, array.shape is your best debugging friend.

Basic ML Conceptual Questions in Python

Clarify supervised vs. unsupervised learning, detect and mitigate overfitting via the bias–variance tradeoff, and structure train/validation/test splits the right way. Freshers should be able to connect each idea to concrete Python workflows, aligning with machine learning interview questions for freshers.

Q1. What is the difference between supervised and unsupervised learning?

Supervised learning uses labeled data (e.g., predicting house prices), while unsupervised learning finds hidden patterns in unlabeled data (e.g., clustering customers). Python libraries like scikit-learn provide tools for both approaches.

Tip: Relate examples to business use cases, such as classification (spam detection) and clustering (customer segmentation) to stand out in interviews.

Q2. How do you detect overfitting in an ML model?

Overfitting occurs when training accuracy is high but test accuracy drops significantly. Using cross-validation, regularization, and learning curves in Python helps identify and mitigate it.

Tip: Be ready to mention metrics or plots (like learning curves) when discussing overfitting. Interviewers love visual reasoning.

Q3. What is the bias-variance tradeoff?

Bias represents underfitting (oversimplification), while variance represents overfitting (too much complexity). The tradeoff is finding a balance where the model generalizes well (balance between overfitting and underfitting). Techniques like regularization and ensemble methods address this.

Tip: Use a real-world analogy—think of bias as “too few features” and variance as “too many unnecessary patterns.”

Q4. Why are train/test splits important?

Splitting prevents models from memorizing data. Python’s train_test_split ensures the model is validated on unseen data, helping estimate real-world performance and avoid leakage.

Tip: Remember to use a random seed for reproducibility, random_state can save you during live coding rounds.

Q5. What role does cross-validation play in ML evaluation?

Cross-validation splits the dataset into multiple folds, training and testing on each to reduce variance in evaluation. It provides a more reliable performance estimate than a single train/test split.

Tip: Mention KFold or StratifiedKFold from scikit-learn, they show you know practical implementation details.

Interview Questions on Data Preprocessing with Pandas

Master handling missing values (drop, impute, domain-aware strategies) and feature scaling (standardization, normalization, robust scaling) using pandas and NumPy. These are day-one skills referenced in Python interview questions for ML freshers and are essential for reliable model training.

Q1. How do you handle missing values in pandas for ML?

Options include dropping rows/columns, filling with mean/median, forward/backward filling, or using scikit-learn’s SimpleImputer. The choice depends on data size and business context.

Tip: Mention domain-specific imputations like using “0” for missing prices or median for incomes, to show critical thinking.

Q2. Why is feature scaling important?

Scaling ensures features contribute equally to models sensitive to magnitude (e.g., KNN, SVM). Without scaling, larger numeric ranges dominate distance-based calculations, leading to biased results.

Tip: Know which algorithms need scaling like for example, mention “tree-based models don’t, while distance-based ones do” and explain why based on the model architecture.

Q3. How do you standardize features in Python?

Using scikit-learn’s StandardScaler, features are transformed to zero mean and unit variance. This improves optimization convergence for algorithms like gradient descent.

Tip: Standardization usually helps gradient descent converge faster, highlight this when asked about optimization.

Q4. When would you use MinMax scaling instead of standardization?

MinMax scaling rescales values between 0 and 1, useful when features must be bounded (e.g., neural network activation functions). It preserves data shape but is sensitive to outliers.

Tip: Say you’d avoid MinMax if the dataset has extreme outliers, it shows nuanced understanding.

Q5. What preprocessing steps are essential before training ML models in pandas?

Typical steps include handling missing values, encoding categorical variables, scaling numeric features, and ensuring consistent datatypes. This ensures models train reliably and avoid runtime errors.

Tip: Bring up pipeline automation like using ColumnTransformer or Pipeline in scikit-learn earns extra points.

Intermediate Python ML Interview Questions (Experienced Candidates)

This section focuses on Python interview questions for ML engineers and machine learning interview questions for experienced candidates. It covers algorithm implementation, evaluation metrics, framework-specific queries, and practical problem-solving for real-world ML workflows.

Read more: Apple Machine Learning Engineer Interview Guide

ML Algorithm Implementation Questions in Python

Here, you’ll find questions testing whether you can implement core algorithms (decision tree, k-means, logistic regression) directly in Python, showing both coding ability and mathematical understanding.

-

A succinct answer highlights boosting’s handling of complex nonlinearities, ability to optimize custom loss, and built-in regularization contrasted with bagging. Discussion of hyper-parameter grids, GPU acceleration, and feature-importance interpretation rounds out the coding angle.

Tip: Show that you understand the difference between boosting (sequential) and bagging (parallel), it demonstrates awareness of trade-offs in bias, variance, and runtime.

-

A complete reply explains the elbow and silhouette methods, Davies–Bouldin index, and information-theoretic criteria. Then outline a Python loop that fits multiple k values, logs metrics in Pandas, and auto-plots a screen curve, demonstrating data-driven hyper-parameter search rather than guesswork.

Tip: Mention using silhouette score automation or elbow plots in Python scripts, it reflects both analytical and coding proficiency.

-

Candidates should note that both optimize variance in orthogonal subspaces, that first principal components approximate cluster centroids, and that PCA can de-noise and speed distance computations. An effective explanation finishes with a short scikit-learn pipeline showing dimensionality reduction feeding directly into

KMeans.Tip: Emphasize that PCA helps remove noise and correlated dimensions before clustering to show a practical understanding of preprocessing pipelines.

-

Interviewers want to see reasoning about the monotonically decreasing within-cluster SSE objective and the finite set of possible cluster assignments. Wrap up by mentioning you’d unit-test convergence in Python by asserting improvement of inertia at each step.

Tip: Bring up that convergence is guaranteed because the within-cluster variance monotonically decreases, a great way to blend theory with implementation logic.

How would you implement gradient descent for linear regression in Python?

Gradient descent minimizes the mean squared error (MSE) by iteratively updating weights:

w:=w−α⋅∇L(w), where α (alpha) is the learning rate and ∇L(w) is the gradient.

In Python:

import numpy as np

def gradient_descent(X, y, lr=0.01, epochs=1000):

m, n = X.shape

weights = np.zeros(n)

for _ in range(epochs):

predictions = X.dot(weights)

errors = predictions - y

gradient = (1/m) * X.T.dot(errors)

weights -= lr * gradient

return weights

Tip: Always mention hyperparameter tuning like learning rate and early stopping, it shows maturity in debugging optimization issues.

Model Evaluation Questions on scikit-learn

This subsection covers accuracy, precision, recall, F1, and AUC-ROC — key evaluation metrics you’ll be asked to calculate, interpret, and implement in interviews.

What’s the limitation of accuracy as a metric in ML?

Accuracy fails on imbalanced datasets, e.g., predicting 99% negatives correctly still yields 99% accuracy, but misses all positives. Use precision, recall, or AUC-ROC instead.

Tip: Use a class imbalance example (like fraud detection) to make your answer feel applied rather than theoretical.

How would you compute precision and recall from a 2-D confusion-matrix-style list in Python?

First, validate that the input is square and numeric, then derive true positives, false positives, and false negatives for each class. Precision is TP / (TP + FP,) and recall is TP / (TP + FN); implement safeguards against division by zero by returning NaN or zero. A vectorized NumPy solution is preferred for readability and speed, but explicit loops demonstrate mastery of fundamentals. The problem gauges both conceptual fluency with common ML metrics and the ability to implement numerically robust code.

Tip: Add that you’d validate metrics with

classification_report()to signal that you know how to verify code results effectively.What is the F1-score and why is it important?

The F1-score is the harmonic mean of precision and recall. It balances false positives and false negatives, making it ideal for imbalanced classification tasks like fraud detection.

Tip: Bring up real-world cases (e.g., medical diagnosis) where recall and precision trade-offs directly impact outcomes.

How do you interpret an ROC curve?

ROC plots TPR vs FPR. AUC closer to 1 indicates strong discrimination; 0.5 is random. Python’s

roc_auc_scorecomputes it. Used when class balance varies.Tip: Mention plotting ROC curves using Matplotlib, interviewers like seeing that you can visualize and interpret model performance.

How do you use a confusion matrix in evaluation?

A confusion matrix shows TP, FP, TN, FN counts. It helps identify where errors are concentrated (false positives vs false negatives). In scikit-learn:

from sklearn.metrics import confusion_matrix

confusion_matrix(y_true, y_pred)

Tip: Suggest normalizing confusion matrices for clearer interpretation, it’s a subtle touch that shows attention to presentation.

ML Framework-Specific Interview Questions

This subsection focuses on the three most common ML frameworks in interviews and production: scikit-learn, TensorFlow, and PyTorch. Interviewers want to see whether you can explain tradeoffs, use core APIs, and reason about tuning, optimization, and pitfalls. These questions test both conceptual mastery and hands-on coding ability.

What’s the difference between scikit-learn, TensorFlow, and PyTorch in ML?

Library Best For Strengths Typical Interview Angle scikit-learn Classical ML (tabular) Pipelines, CV, metrics, fast baselines Feature engineering, metrics, leakage prevention TensorFlow/Keras Production DL TFX, TF Serving, mobile/TPU SavedModel, deployment, serving A/B PyTorch Research-to-prod DL Pythonic API, dynamic graphs Custom modules, training loops, TorchScript/TorchServe Tip: Relate each framework to the type of project you’d use it for, example: “I’d use scikit-learn for tabular ML, PyTorch for deep learning R&D.”

-

Expect to reason about variance reduction vs. bias reduction, convergence rates, and sensitivity to noisy labels. Implementation notes might touch on parallelism for bagging versus sequential dependency in boosting and how that shapes pipeline scheduling.

Tip: Highlight that boosting can overfit on noisy data since awareness of when not to use an algorithm is equally valuable.

-

The answer covers adaptive moment estimates, per-parameter learning rates, and quick convergence on sparse gradients. Explain beta coefficients, learning-rate warm-ups, and how you’d code a custom callback to monitor exploding gradients.

Tip: Talk about using adaptive learning rates and monitoring loss convergence, it shows you understand optimization beyond the default settings.

-

You should mention Mercer’s condition, dimensionality blow-ups, and computational cost. Include a quick Python snippet for checking a Gram matrix’s positive semi-definiteness and talk about cross-validation for kernel hyper-parameters.

Tip: Discuss scaling data before applying kernel methods, a subtle but key practical insight.

-

A strong reply sketches the chain-rule math, shows how forward and backward passes share activations, and notes common hazards like vanishing gradients and exploding updates. Candidates then discuss practical mitigations—weight initialization, gradient clipping, and batch-norm—and illustrate with a short NumPy snippet that tracks per-layer gradients to verify correctness, demonstrating both conceptual mastery and hands-on debugging skills.

Tip: Stress on gradient-checking as a debugging tool, it’s a senior-level answer that shows hands-on experience.

-

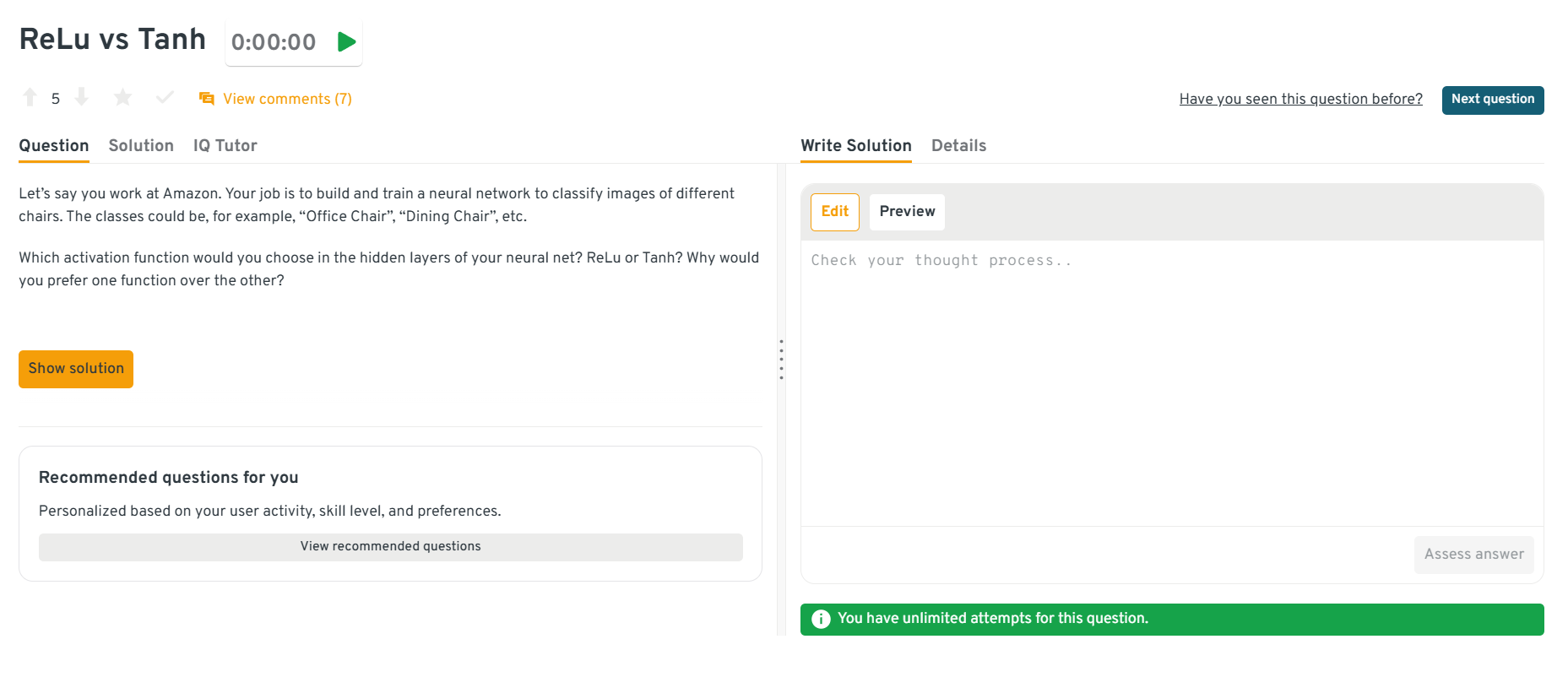

Good answers compare saturation behavior, gradient flow, and computational cost. You should cite empirical findings (ReLU’s sparsity, Tanh’s bounded outputs), suggest leaky variants for dead-neuron risk, and show how you’d swap activations in Keras or PyTorch while monitoring training curves—tying concept directly to implementation.

Tip: Mention monitoring for “dead ReLU” problems during training to show practical troubleshooting knowledge.

You can explore the Interview Query dashboard that lets you practice real-world Machine Learning interview questions in a live environment. You can write, run codes, and submit answers while getting instant feedback, perfect for mastering Python ML problems across domains.

-

The explanation should discuss bias–variance trade-off, diminishing returns after ensemble variance stabilizes, and memory/latency costs. Detail a Python grid search over

n_estimators, plotting OOB error to pinpoint the elbow and support capacity planning decisions.Tip: Reference plotting OOB (Out-of-Bag) error vs.

n_estimatorsto signal that you understand empirical model validation. -

Candidates must contrast L1’s feature-selection sparsity with L2’s shrinkage, mention scenarios like collinearity, and show how they’d cross-validate

alphavalues with scikit-learn’sElasticNetCV, demonstrating both conceptual nuance and hands-on tuning.Tip: Mention feature selection benefits of L1 and robustness of L2 under collinearity to tie the math directly to model design choices.

9 . How do you save and load models in PyTorch?

torch.save(model.state_dict(), "model.pth")

model.load_state_dict(torch.load("model.pth"))

This saves parameters efficiently. Use torch.save(model) for full objects, but it’s less portable.

Tip: Mention versioning checkpoints during training, it demonstrates awareness of production best practices.

10 . How do you tune hyperparameters in scikit-learn?

Use GridSearchCV or RandomizedSearchCV. Example:

from sklearn.model_selection import GridSearchCV

param_grid = {'max_depth': [3, 5, 7]}

grid = GridSearchCV(estimator=DecisionTreeClassifier(),

param_grid=param_grid,

cv=5,

scoring='f1')

grid.fit(X, y)

print(grid.best_params_)

GridSearchCV exhaustively tests parameter grids; RandomizedSearchCV is faster on large spaces.

Tip: Add that you’d visualize results from cv_results_, small insights like this show depth in workflow optimization.

Handling Real-World ML Problems

This subsection introduces questions testing your ability to solve imbalanced data, correlated features, and debugging pipelines in applied ML contexts.

-

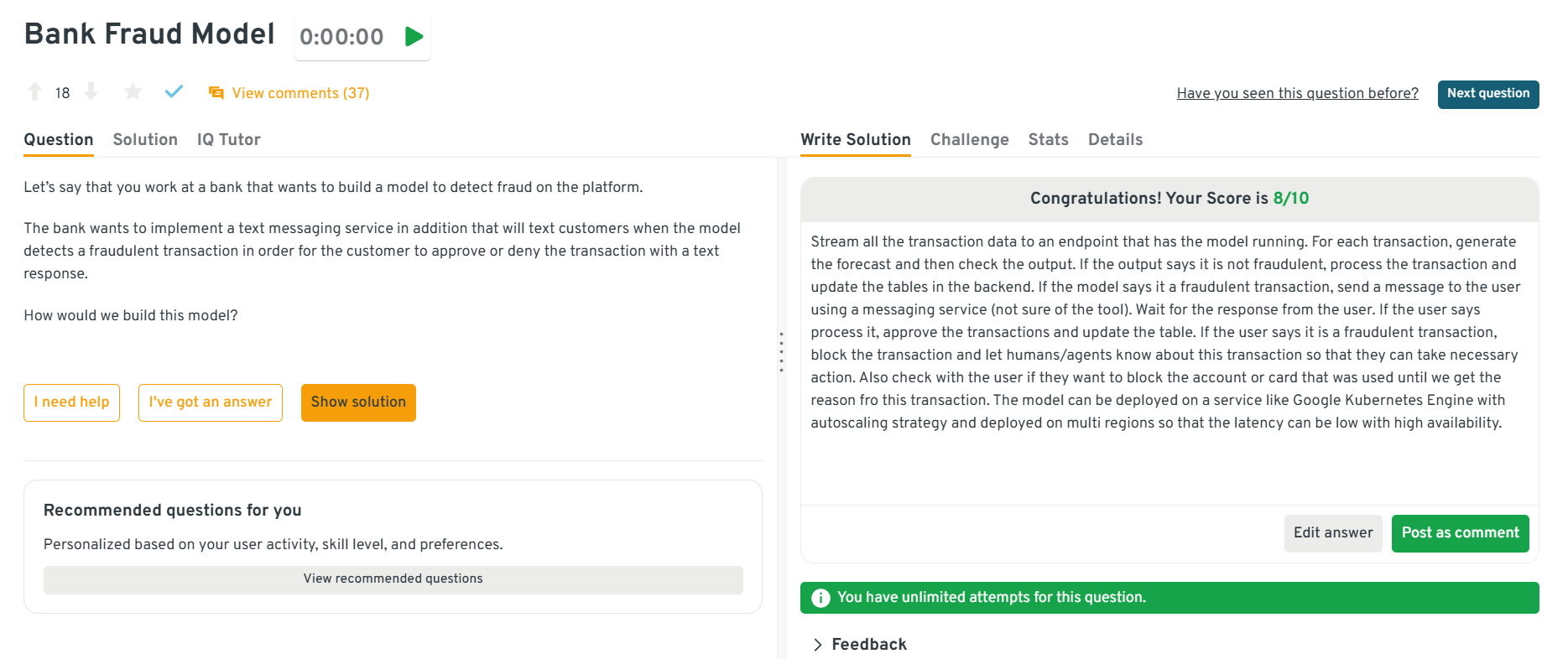

The explanation should compare ROC-AUC, precision@k, and false-negative cost, then show how to calculate them in Python over streaming batches. Mention alert thresholds, rolling windows, and canary testing for new model versions to demonstrate operational maturity.

Tip: Highlight setting custom alert thresholds in production to show that you think operationally.

-

Good candidates translate the classic trade-off into concrete plots (learning curves, residual histograms) and discuss remedial actions like regularization strength or feature engineering, all scripted in notebooks that fit into the CI/CD pipeline.

Tip: Show familiarity with monitoring residuals and drift dashboards to imply that practical tools matter more than just theory.

-

Solid answers explain permutation-importance dilution and bias toward high-cardinality splits. Show how you’d calculate a correlation matrix, apply hierarchical clustering to drop redundant columns, and verify gain in out-of-bag accuracy—bridging theory with practical refactoring.

Tip: Talk about using

PermutationImportanceor SHAP to verify corrected importance since modern, tool-aware responses stand out. -

The interviewer looks for storytelling clarity (probability p(x, y) vs. p(y|x)), discussion of data requirements, and a brief Python outline—e.g., Naïve Bayes vs. Logistic Regression—with trade-offs in precision and interpretability.

Tip: Simplify it for non-technical audiences since interviewers love seeing communication clarity.

-

A complete answer walks through shuffling,

groupbysampling to preserve class proportions, and efficient counting via Boolean masks. Interviewers listen for edge-case handling (very small classes) and a clear explanation of why stratification lowers variance in model evaluation.Tip: Bring up reproducibility with

random_stateto highlight small but impactful best practices.

Python AI & ML Scenario-Based Interview Questions

This section focuses on scenario-based Python interview questions and AI/ML interview queries that mimic real-world business and engineering problems. These questions test not only coding ability but also applied reasoning: how to design solutions, handle messy data, and explain tradeoffs under interview pressure.

Read more: Top 17 Machine Learning Case Studies to Look Into Right Now

Applied Business Case ML Questions

These scenario-based questions simulate end-to-end ML case studies where you map a business problem to a data-driven solution. Expect topics like ETA prediction in ride-share platforms (feature engineering, regression pipelines) or fraud detection (imbalanced classification, real-time scoring). You’ll need to show how Python tools can be used to structure the solution and justify modeling decisions.

-

In this prompt, you must outline feature engineering (competition, historical CTR for similar terms, seasonality), pick a supervised-learning algorithm, and explain how you’d train on sparse long-tail data. The explanation should also cover how to refresh bids in near-real time, guard against over-spend with business rules, and measure lift through an A/B auction test—demonstrating both ML design and production thinking.

Tip: Emphasize transfer learning or similarity-based features for unseen keywords to show strategic thinking about cold-start problems.

-

Interviewers expect you to balance statistical power, feature diversity (time-of-day, weather, region), and model complexity when arguing for or against more data. A solid answer includes error-bar simulations, variance-reduction techniques (stratified sampling, k-fold validation), and real-world latency/compute trade-offs in retraining cadence.

Tip: Back your reasoning with data coverage metrics or variance plots since interviewers love when you quantify “enough data” instead of guessing.

-

In this question, you need to blend concept—handling extreme class imbalance, precision-recall tuning, with coding realities like stream processing (Kafka), model serving latencies, and feedback loops from customer replies. Walk through feature stores, monitoring false positives, and A/B testing thresholds to minimize lost revenue.

Tip: Bring up real-time constraints like <200 ms latency and how you’d handle delayed labels, this proves production-awareness.

You can explore the Interview Query dashboard that lets you practice real-world Machine Learning interview questions in a live environment. Here you can write and run codes, and submit answers while getting instant feedback, perfect for mastering Python ML problems across domains.

-

The explanation should cover domain-driven features (age, vitals), SMOTE or cost-sensitive loss for minority-class recall, evaluation metrics (ROC, PR curve), and the MLOps story—feature store schema, batch scoring job, and PII-compliant logging in production.

Tip: Mention cost-sensitive learning or threshold tuning to prioritize recall for high-risk groups to reflect business-context understanding.

How would you handle missing or noisy GPS data in an ETA model?

Options include interpolation (linear or spline) for short gaps, Kalman filtering for noisy signals, or imputing with nearest-neighbor historical routes. Outliers (teleporting GPS points) should be clipped or dropped. In production, fallback to average route ETA if signals fail.

Tip: Explain how you’d validate imputation accuracy against ground-truth test sets to highlight data validation skills beyond just cleaning.

Scenario-Based ML Coding Questions

Here, you’ll encounter hands-on Python coding interview questions for data scientists. These include open-ended exercises where you must manipulate data, implement algorithms, or debug ML code within the context of a business scenario. The focus is on writing clean, correct, and efficient Python code while thinking through constraints.

1 . How would you implement a rolling average of website traffic in Python to smooth daily fluctuations?

import pandas as pd

df = pd.DataFrame({'date': pd.date_range(start='2025-01-01', periods=7),

'visits': [120, 150, 180, 90, 200, 220, 160]})

df['rolling_avg'] = df['visits'].rolling(window=3).mean()

print(df)

This approach calculates a 3-day rolling average, smoothing spikes for trend detection. Useful in analytics interviews where candidates must handle noisy time-series data.

Tip: Mention adjusting window size dynamically for seasonality since small insights like this show deeper time-series intuition.

2 . Write Python code to detect and remove outliers from a customer purchase dataset using the IQR method.

import pandas as pd

df = pd.DataFrame({'amount': [50, 52, 48, 300, 55, 60, 49]})

Q1 = df['amount'].quantile(0.25)

Q3 = df['amount'].quantile(0.75)

IQR = Q3 - Q1

filtered = df[(df['amount'] >= Q1 - 1.5*IQR) & (df['amount'] <= Q3 + 1.5*IQR)]

print(filtered)

This snippet removes extreme purchase values. Scenario relevance: fraud detection or sales forecasting interviews.

Tip: Bring up domain thresholds or z-score alternatives since showing flexibility in method choice makes your approach stronger.

3 . How would you write a Python function to calculate the Gini impurity for a binary classification split?

def gini_impurity(labels):

total = len(labels)

if total == 0:

return 0

p1 = sum(labels) / total

p0 = 1 - p1

return 1 - (p0**2 + p1**2)

print(gini_impurity([0, 0, 1, 1, 1]))

The function computes impurity for a label list. This is often tested in interviews when candidates need to show decision tree fundamentals.

Tip: Add that you’d validate outputs with scikit-learn’s DecisionTreeClassifier for consistency. Interviewers like such verification habits.

4 . Write Python code to calculate user churn rate given a DataFrame of active/inactive user flags.

import pandas as pd

df = pd.DataFrame({'user_id': [1,2,3,4,5],

'active': [1,0,1,0,0]})

churn_rate = 1 - df['active'].mean()

print(f"Churn Rate: {churn_rate:.2%}")

This scenario mimics SaaS analytics, where interviewers want to see if you can quickly derive key business KPIs from raw user activity.

Tip: Tie this to real retention metrics to show that you think in terms of business KPIs, not just numbers.

5 . Suppose you have transaction timestamps for an e-commerce site. Write Python code to calculate session length per user.

import pandas as pd

df = pd.DataFrame({

'user_id': [1,1,1,2,2],

'timestamp': pd.to_datetime([

'2025-09-01 09:00:00',

'2025-09-01 09:05:00',

'2025-09-01 09:25:00',

'2025-09-01 10:00:00',

'2025-09-01 10:30:00'

])

})

session_lengths = df.groupby('user_id')['timestamp'].apply(lambda x: x.max() - x.min())

print(session_lengths)

This calculates session durations, commonly asked in data scientist interviews related to user behavior analysis.

Tip: Point out how you’d handle cross-day sessions or inactivity timeouts since operational realism sets strong candidates apart.

Machine Learning Project Interview Questions

This subsection focuses on machine learning project interview questions that test whether you can design and reason about practical ML systems. Expect case studies on messy, real-world datasets, bias handling, and pipeline-level decision-making. These often appear in interviews when employers want to assess applied thinking, not just coding ability.

-

This question checks understanding of sample-selection bias, target leakage, and fairness across cuisine types or peak hours. Strong responses describe bias audits (stratified error analysis), corrective re-weighting, and how bias metrics integrate into continuous-integration tests for the model.

Tip: Suggest fairness dashboards or subgroup error analysis to show that you think about equity and reliability in ML.

-

Interviewers want to hear pros/cons of mean, median, KNN, or model-based imputations, plus the code path for data-quality flags. Good answers include sensitivity experiments, performance before/after imputation, and guardrails for production drift.

Tip: Note that you’d benchmark models before and after imputation, demonstrating you evaluate data interventions empirically.

-

A strong response describes building prediction intervals via residual bootstrapping, visualizing confidence bands, and alerting when actuals fall outside the band. Touch on NumPy bootstraps, Matplotlib plots, and cron-driven report generation.

Tip: Mention visualizing prediction intervals or residual variance, it shows clarity in communicating uncertainty to stakeholders.

-

The best solution leverages

groupby('city').apply(lambda g: g.interpolate('linear')), highlights whymethod='time'fails without a DatetimeIndex, and discusses forward/backward filling for leading or trailing NaNs.Tip: Add that you’d validate filled values by comparing against weather-station records, which is a smart touch of real-world validation.

-

Candidates should bucket with

pd.cut, aggregate withgroupby(['grade','bucket']).size(), and compute running totals viagroupby('grade').cumsum() / count_per_grade. Explaining how to present the result as a tidy table shows data-reporting polish.Tip: Bring up visualization, as plotting cumulative curves in seaborn or matplotlib helps you show storytelling with data.

Watch Next: How to Ace a Machine Learning Mock Interview - Design a recommendation engine

In this mock interview session, Ved, a PhD student and ML research scientist intern at LinkedIn, walks through a real-world ML challenge prompt showing how he breaks down the problem into features, modeling strategy, evaluation metrics, and delivery-ready presentation, giving you an interview-ready template you can apply in your own prep.

Machine Learning Coding Interview Questions in Python

This section targets machine learning coding interview questions, focusing on algorithmic and numerical coding challenges. Unlike conceptual or case-based questions, these test whether you can quickly implement ML-relevant functions, mathematical operations, and algorithmic puzzles in Python. They are common in ML coding interview questions and AI coding interview questions for data scientists and ML engineers.

Read more: 100 Most Asked Python Data Science Interview Questions

Implementing Core ML Algorithms from Scratch in Python

This subsection includes questions where you’ll need to implement fundamental ML algorithms in pure Python or NumPy, without relying on high-level libraries. Examples include building logistic regression from scratch, implementing k-nearest neighbors (k-NN) classification, and writing a function to compute RMSE. These demonstrate your ability to connect math with code efficiently.

Here are 5 coding-style interview Q&As designed to test whether you can implement ML fundamentals directly in Python. These combine algorithm design with vectorized coding practices.

-

A comprehensive answer presents a baseline double-loop NumPy implementation, then proposes KD-trees or ball trees, chunked broadcasting, and approximate neighbors (FAISS) for scalability. This shows you can move from algorithmic concept to efficient Python code suitable for production experimentation.

Tip: Show you understand scalability and mention how KD-trees or FAISS can handle millions of points efficiently without brute force.

-

A complete solution defines vectorized forward and backward passes, stops when the change in loss falls below ε, and returns learned weights that you can verify on a toy dataset. You should also discuss numerical-stability tricks (e.g., clamping logits), regularization hooks, and how you’d profile convergence to decide on step size or momentum.

Tip: Discuss how you’d monitor convergence or prevent numerical instability to signal real-world experience with training loops.

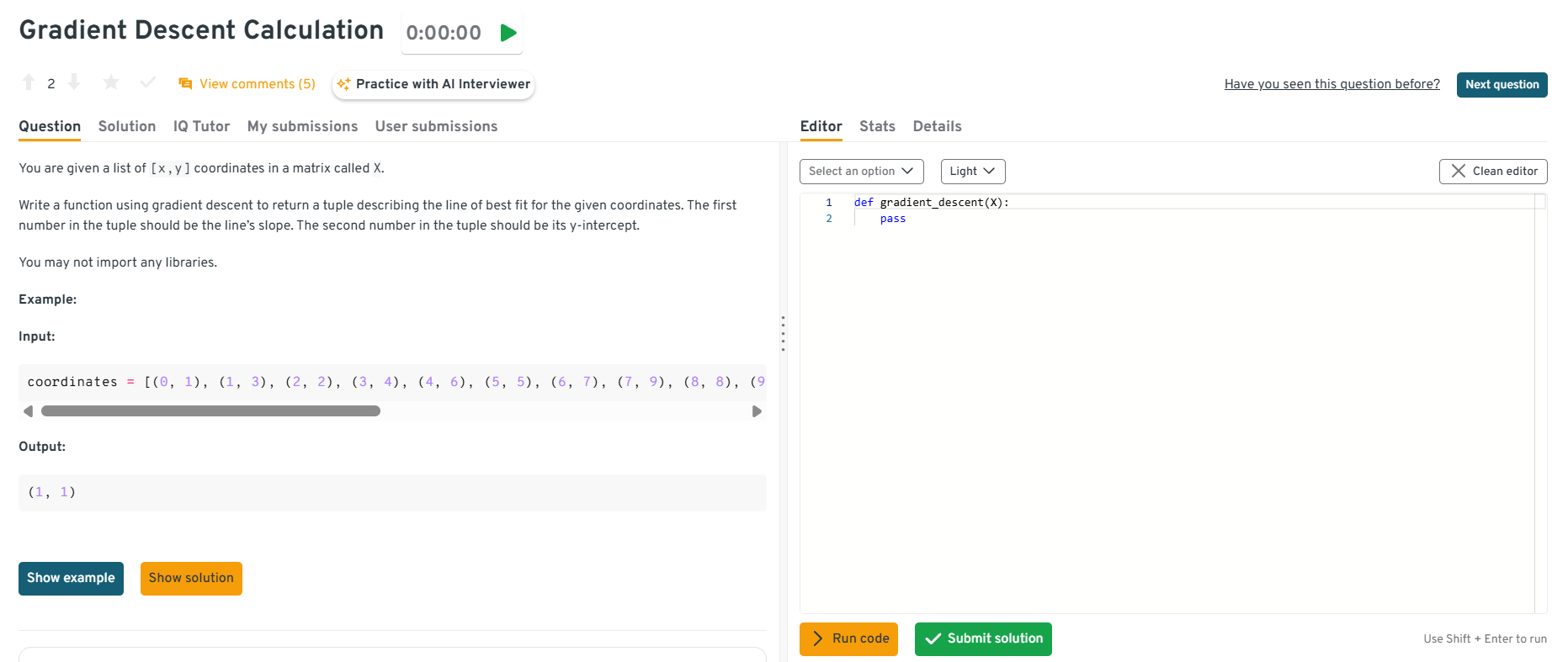

-

Interviewers look for clear math, loop-less vectorization, and tracking of MSE after each epoch. Good answers include a learning-rate scheduler, early-stopping criteria, and a brief explanation of why gradient magnitudes shrink as you approach the optimum.

Tip: Explain how tracking loss across epochs helps debug exploding gradients or learning-rate issues since interviewers love that insight.

You can explore the Interview Query dashboard that lets you practice real-world Machine Learning interview questions in a live environment. You can write, run codes, and submit answers while getting instant feedback, perfect for mastering Python ML problems across domains.

-

Beyond summing squared residuals, highlight type checking, guarding against empty inputs, and returning a float rounded to a sensible precision. This tests your ability to recreate essential evaluation metrics from first principles.

Tip: Highlight that you’d validate equal-length lists before computing RMSE, it shows defensive, production-ready coding habits.

-

A complete solution initializes centroids (random or k-means++), assigns points based on Euclidean distance, recomputes means, and stops when no labels change or after a max-iteration threshold. You should highlight vectorized distance computation, tie-breaking when clusters empty out, and a runtime of roughly O(k n t).

Tip: Emphasize initialization strategy (like k-means++) and convergence criteria to show algorithmic depth, not just code skills.

Probability & Math-Based Coding

This subsection emphasizes mathematical coding tasks that validate your understanding of probability, statistics, and their role in ML. These are frequent in ML coding interview questions where you’re expected to implement metrics or statistical tools from scratch in Python, not just call built-in functions.

-

Compute the mean, sum the squared deviations, divide by n−1n − 1n−1 for an unbiased estimate, then round the result using

round(var, 2). Edge cases include lists shorter than two elements, which should return None or raise an exception. Emphasize constant-space streaming solutions for very long inputs by updating running mean and M2 (Welford’s algorithm). The task mixes statistical correctness with attention to numerical stability and runtime efficiency.Tip: Bring up Welford’s algorithm, it’s a neat trick to compute variance in one pass and impresses interviewers with numerical awareness.

-

Iterate through the dictionaries, extract integer lists, and apply Welford’s one-pass algorithm to each for O(n)O(n)O(n) time and O(1)O(1)O(1) space per list. Return a dictionary mapping the original keys to their deviations. Explain why avoiding NumPy may be necessary in constrained environments and how streaming formulas prevent overflow. Interviewers probe understanding of numerical algorithms beyond canned library calls.

Tip: Talk about streaming computation for memory efficiency to show you can scale simple math to large data.

-

Determine bin width as ⌈(max−min+1)/x⌉\lceil(\text{max}−\text{min}+1)/x\rceil⌈(max−min+1)/x⌉, then iterate through the list, incrementing the correct bin key using integer division. Remove entries with a zero count before returning the dictionary. Highlight trade-offs between fixed and adaptive (e.g., Sturges) bin sizes and discuss memory usage for sparse data. The problem tests data-profiling intuition and the ability to translate mathematical binning logic into Python dictionaries.

Tip: Explain how dynamic binning (e.g., Sturges’ rule) adapts to data distribution to demonstrate practical thinking beyond syntax.

How would you simulate drawing a ball from a jar given lists of ball colors and counts in Python?

Treat the color list as a categorical distribution where each count represents the sampling weight. A clean solution builds an expanded list or uses

random.choiceswith the counts as weights to perform a single draw, returning the selected color. Edge cases include empty jars and negative counts, which should raise descriptive errors. Conceptually, this tests your understanding of probability mass functions and Monte Carlo simulation, while the coding portion checks your ability to translate that reasoning into concise, idiomatic Python.Tip: Mention using clean, and production-grade code such as

random.choices()for weighted probability.-

Efficient candidates reuse vectorized broadcasting instead of Python loops, then average across simulations, illustrating practical Monte-Carlo skills.

Tip: Reference vectorization over loops for simulation efficiency, it signals fluency with NumPy and statistical reasoning.

Algorithmic Challenges in Python

This subsection covers algorithmic-style interview problems that frequently appear in ML coding interviews to test raw problem-solving skills. These challenges may not directly involve ML libraries, but are critical because they measure your ability to write clean, efficient Python code under constraints. Expect classic problems like matrix rotation (used in image preprocessing), triplet sum (array manipulation), and rainwater trapping (space/time complexity reasoning).

-

The brute-force approach nests two loops over the range [N] and appends pairs whose product falls inside [L, R]. A more elegant method pre-computes powers of 3 and 5 once, then leverages two-pointer or binary-search techniques to prune search space and hit O(NlogN)O(N\log N)O(NlogN) or better. Handling large L/R bounds requires 64-bit integers and careful overflow checks. Interviewers look for the candidate’s skill at combining exponential growth insights with algorithmic optimization in Python.

Tip: Suggest precomputing powers or binary search. Such small optimizations show algorithmic maturity.

-

Normalize case, strip punctuation, and use

collections.Counterto tally tokens, then call.most_common(N). Present runtime as O(m+klogk)O(m + k\log k)O(m+klogk) where m is word count and k unique tokens, or explain heap-based alternatives for large k. Discuss stop-word removal or stemming as potential extensions. Interviewers evaluate text-processing and complexity analysis clarity.Tip: Point out how stopword removal improves insight. Blending NLP intuition with coding efficiency works great in interviews.

Rotate a 2-D array 90 degrees clockwise in place.

First transpose the square matrix (

matrix[i][j] ↔ matrix[j][i]fori<j), then reverse each row—overall O(n2)O(n^{2})O(n2) time and O(1)O(1)O(1) space. Non-square matrices require allocating a new result grid. Return early for empty inputs. Watch for index boundaries. The problem tests index arithmetic accuracy.Tip: Walk through each transformation clearly (transpose → reverse) since clarity beats brevity in coding interviews.

Compute how much rainwater is trapped in a 2-D terrain grid.

Seed a min-heap with boundary cells, repeatedly pop the lowest wall, flood neighbors, and push them back with

max(neighbor_height, wall_height), akin to Dijkstra. Complexity is O(rclog(rc))O(rc\log(rc))O(rclog(rc)).Tip: Show why naïve flooding is quadratic and leaks water. Handle bounds and potential integer overflow. Interviewers want heap usage tied to sound elevation logic.

-

The cleanest solution XORs every element in both lists so paired values cancel, leaving only the orphan; summation-difference also works but risks overflow on very large integers. Make sure to verify the longer list is exactly one element bigger and that all items are numeric.

Tip: Explain why a hash set is unnecessary for uniqueness yet still viable. Discuss the O(n)O(n)O(n) time, O(1)O(1)O(1) space guarantees. Interviewers want to hear careful input validation and trade-off reasoning.

Write a function that returns the number of business days between two dates.

Convert inputs to

datetime.date, compute full weeks via integer division by seven, then handle any leftover days by iterating and skipping Saturdays and Sundays. This yields O(1)O(1)O(1) time for the bulk calculation and O(1)O(1)O(1) space. Ask whether local holidays count, and clarify endpoint inclusivity. Off-by-one errors and malformed formats are common pitfalls. The task tests date-arithmetic intuition and defensive assumptions.Tip: Ask clarifying questions (holidays, inclusivity) before coding to show real-world readiness.

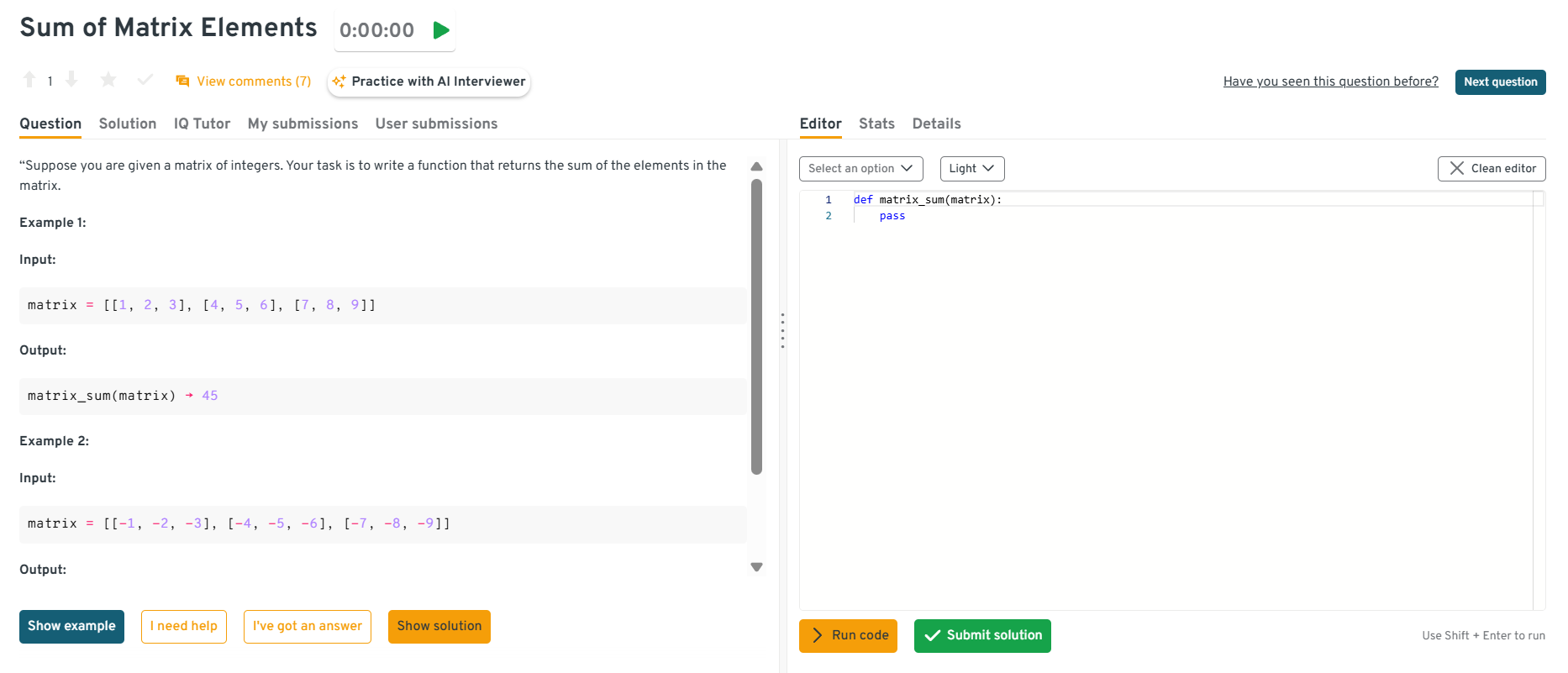

How would you compute the sum of all elements in a 2-D integer matrix?

Nest two loops or call

sum(map(sum, matrix)); both take O(r⋅c)O(r·c)O(r⋅c) time and O(1)O(1)O(1) auxiliary space. Point out that sparse matrices stored in CSR avoid scanning zeros. Return zero for empty or ragged inputs after validation. Mention integer overflow concerns in low-level languages. Interviewers watch for constant-memory aggregation and edge-case coverage.Tip: Note sparse matrix optimization to talk about computational efficiency.

You can explore the Interview Query dashboard that lets you practice real-world Machine Learning interview questions in a live environment. You can write, run codes, and submit answers while getting instant feedback, perfect for mastering Python ML problems across domains.

Find all triplets in an integer array whose sum equals a target

k.Sort the array, fix one element, then use a two-pointer scan on the rest to hit O(n2)O(n^{2})O(n2) time and O(1)O(1)O(1) space. Skip equal neighbors to deduplicate results. Explain why a brute-force cubic scan is infeasible beyond toy inputs. Integer-overflow checks matter in strongly typed languages. The exercise measures algorithmic refinement and duplicate handling.

Tip: Explain how sorting reduces time complexity, proving that you understand why it’s efficient.

How would you generate all bigrams from a sentence in order?

Tokenize by whitespace or regex, then build adjacent pairs with a loop or list comprehension. Runtime is O(n)O(n)O(n) over tokens with O(n)O(n)O(n) output. Return an empty list for sentences shorter than two words.

Tip: Clarify whether case folding or punctuation stripping is required. The interviewer mainly checks concise Pythonic construction and boundary handling.

Write a function that returns the sum of all digits in a floating-point number string.

Strip signs and decimal points, iterate over remaining characters, cast each digit to

int, and accumulate. Complexity is O(n)O(n)O(n) time and O(1)O(1)O(1) space. Reject invalid formats like multiple decimals or scientific notation unless specified. Use a generator expression for memory efficiency. Interviewers look for robust parsing and minimal footprint.Tip: Talk about error handling for malformed inputs, which shows you think beyond ideal paths.

Swap the values of keys

aandbin a dictionary without introducing new variables.Tuple unpacking—

d['a'], d['b'] = d['b'], d['a']—accomplishes the swap atomically in O(1)O(1)O(1). Confirm both keys exist to avoidKeyError. Explain that XOR swaps suit integers but hurt readability. Note Python’s transient tuple under the hood. This tests idiomatic syntax and micro-optimization awareness.Tip: Tuple unpacking is your friend here. Mention that one-liners are a clean and compact way of coding.

Which list contains more release dates after a given cutoff date?

Parse each date string to

datetime, count entries on or after the cutoff, and return the list with the higher tally (or both on a tie). Complexity is O(m+n)O(m+n)O(m+n) with constant space. Clarify date format and time-zone neutrality. Handle malformed inputs gracefully. The exercise checks comparison logic and input sanitation.Tip: Clarify date format assumptions early to prevent logic bugs and shows meticulousness.

Return the last node of a singly linked list, or

Noneif the list is empty.Traverse until

node.nextisNone, giving O(n)O(n)O(n) time and O(1)O(1)O(1) space. Caching a tail pointer makes this O(1)O(1)O(1) per query at the cost of extra maintenance. Detect cycles with Floyd’s algorithm to avoid infinite loops. Handle single-node lists gracefully. The interviewer checks pointer literacy and defensive coding.Tip: Mention cycle detection (Floyd’s algorithm) since tiny details like this demonstrate strong fundamentals.

-

Put buffer and cursor logic in the base class, extend navigation in the moving editor, and add undo/redo or autocomplete in the smart editor. Use a gap buffer or twin stacks so edits stay O(1)O(1)O(1) amortized. Include bounds checks and clear method docs. Discuss why composition beats deep inheritance for history storage. Interviewers gauge OOP design clarity and time-space reasoning.

Tip: Discuss why composition beats inheritance, showing design thinking scores major points in OOP interviews.

Advanced Topics & MLOps Interview Questions

This section explores mlops interview questions and emerging trends in deploying, monitoring, and scaling ML systems. While earlier sections test coding and algorithms, this one assesses whether you can handle real-world production challenges, integrate MLOps tools, and address ethics in AI. These are often the best MLOps interview questions asked for senior ML Engineer, AI Engineer, or Data Scientist roles in 2025.

Read more: Python Interview Questions for Data Engineers

ML Model Deployment & Monitoring

This subsection covers the practical aspects of deploying models and keeping them reliable in production. Expect questions about saving and loading models with joblib, TensorFlow, and PyTorch, setting up APIs for inference, and monitoring models after release. You’ll also be asked about model drift (changes in data distribution) and retraining strategies that keep predictions accurate over time.

Q1. How do you save and load a trained ML model in Python?

For scikit-learn, use joblib:

import joblib

joblib.dump(model, 'model.pkl')

model = joblib.load('model.pkl')

For PyTorch: torch.save(model.state_dict(), "model.pth") and model.load_state_dict(torch.load("model.pth")). For TensorFlow/Keras: model.save("model.h5") and keras.models.load_model("model.h5"). Persistence enables reproducibility and fast deployment.

Tip: Mention security considerations and avoid storing models with sensitive data or paths in serialized files like .pkl or .pth.

Q2. How do you monitor a deployed model for drift?

Track input data distribution (feature histograms, KS-test), output probability distributions, and business KPIs. Compare live data against training data baselines. If drift exceeds thresholds, trigger alerts or retraining. Tools like Evidently AI, MLflow monitoring, or custom dashboards are common solutions.

Tip: Discuss setting automated retraining thresholds and logging data snapshots to investigate anomalies when drift is detected.

Q3. What strategies can you use to retrain models in production?

Options include:

- Scheduled retraining (weekly, monthly).

- Trigger-based retraining (when drift exceeds tolerance).

- Incremental/online learning (partial_fit or streaming).

Choice depends on business criticality and data velocity.

Tip: Emphasize automation and highlight how retraining pipelines can be triggered by Airflow DAGs or CI/CD workflows.

Q4. How do you version control ML models in production?

Use model registries (MLflow, SageMaker Model Registry, or TensorFlow Hub) to track model versions, metadata (hyperparameters, dataset hashes), and performance metrics. This ensures rollback capability and auditability for compliance.

Tip: Emphasize stress traceability and link each model version to its data source and training configuration for full reproducibility.

Q5. What are best practices for exposing a model as an API?

Wrap the model in a lightweight server (FastAPI, Flask, or TorchServe). Expose REST endpoints for inference, ensure request validation, and add monitoring (latency, throughput, error rates). Use Docker for portability and Kubernetes for scaling. Logging and authentication are essential for enterprise-grade APIs.

Tip: Note that asynchronous endpoints or batch APIs can prevent timeouts in high-latency inference workloads.

MLOps Frameworks

Here, questions focus on popular MLOps tools and frameworks that automate training, deployment, and orchestration. Candidates should understand MLflow for experiment tracking, Airflow for scheduling and pipelines, and Docker for containerization. These are standard in modern ML stacks, and interviewers often expect you to compare their strengths and explain how to integrate them into workflows.

Q1. What is MLflow and how is it used in ML pipelines?

MLflow is an open-source platform for managing the ML lifecycle. It provides:

Tracking: log metrics, parameters, and artifacts.

Projects: package reproducible code with dependencies.

Models: standard format to save models.

Registry: centralized versioning and promotion of models.

In Python, you log metrics with:

import mlflow

mlflow.log_metric("accuracy", 0.92)

Tip: Mention MLflow’s integration with cloud services like Databricks or AWS Sagemaker for end-to-end lifecycle management.

Q2. How does Airflow help in machine learning workflows?

Apache Airflow is used to schedule and orchestrate ML tasks via Directed Acyclic Graphs (DAGs). For example, data preprocessing, model training, and evaluation can each be a DAG node. Benefits: monitoring, retries, and modularity. Airflow integrates with Python scripts, Spark jobs, or cloud services.

Tip: Demonstrate practical use by describing a DAG that automates data ingestion, model training, and metric logging sequentially.

Q3. How do you containerize a Python ML model with Docker?

Create a Dockerfile specifying dependencies, copy your model and inference script, and expose a port with FastAPI or Flask:

FROM python:3.10

COPY requirements.txt .

RUN pip install -r requirements.txt

COPY . /app

CMD ["python", "app.py"]

Containerization ensures portability across environments.

Tip: Include health checks in your container to ensure inference services restart automatically on failure.

Q4. What is the difference between MLflow and Airflow in MLOps?

- MLflow: Focused on experiment tracking, model packaging, and registry.

- Airflow: Focused on orchestration of tasks (data prep, training, deployment).

They often complement each other: Airflow triggers pipelines while MLflow records metrics/models.

Tip: Highlight that MLflow is for “experimentation,” while Airflow orchestrates the “production” workflow, knowing when to use both shows maturity.

Q5. Why is Docker important in MLOps, and what are best practices?

Docker ensures reproducibility by packaging the model with its environment. Best practices:

- Use lightweight base images (e.g.,

python:slim). - Pin dependency versions.

- Separate build and runtime stages (multi-stage builds).

- Keep images small for faster deployment.

Tip: Point out that multi-stage builds and caching layers drastically speed up container rebuild times in CI/CD setups.

Ethics, Fairness, and Data Privacy in ML

This subsection explores Python AI ML interview questions at the intersection of responsible AI and MLOps. Companies increasingly want to know not only if you can deploy models, but also if you understand how to ensure fairness, privacy, and compliance. These questions often blend technical methods (like differential privacy) with ethical reasoning.

Q1. What is a bias audit in machine learning, and how is it performed?

A bias audit checks whether a model treats different groups (e.g., gender, region, income level) fairly. Steps include: stratify evaluation metrics across subgroups, identify disparities, and measure fairness metrics such as demographic parity or equalized odds. Tools like AIF360 or Fairlearn in Python can automate these audits.

Tip: Reference using disaggregated performance dashboards to visualize bias impact across demographic segments.

Q2. How does federated learning help with data privacy?

Federated learning trains models directly on distributed devices without centralizing raw data. Only gradients or model updates are sent to a central server. This preserves user privacy (e.g., smartphones for keyboard predictions) while still enabling collective learning. Challenges include handling non-IID data and communication overhead.

Tip: Mention that secure aggregation ensures gradients can’t be reverse-engineered to reveal user data.

Q3. What is differential privacy, and how can it be applied in ML?

Differential privacy adds carefully calibrated noise to data or model outputs to ensure that the inclusion/exclusion of a single record doesn’t significantly affect results. In ML, it’s used in training (DP-SGD in TensorFlow Privacy) and publishing aggregates. It provides mathematical privacy guarantees critical in healthcare and finance.

Tip: Bring up privacy-utility trade-offs, since adding too much noise can cripple model accuracy, so tuning ε is key.

Q4. How would you handle ethical concerns when deploying an AI system?

Key steps:

- Assess societal impact of predictions (who benefits, who may be harmed).

- Ensure transparency in decision-making (document assumptions, publish model cards).

- Implement feedback loops for users to contest or flag errors.

- Align with regulatory frameworks (GDPR, CCPA).

Tip: Show awareness of “human-in-the-loop” oversight. Real-world systems should allow manual intervention for critical decisions.

Q5. How do you balance model accuracy with fairness and privacy in production ML?

Explain tradeoffs clearly: strict privacy budgets (ε in differential privacy) may reduce accuracy; fairness constraints (reweighting data, adversarial debiasing) may lower AUC but build trust. Best practice: present multiple scenarios to stakeholders with quantitative metrics (accuracy vs fairness gaps) and guide decisions collaboratively.

Tip: Mention building dashboards that visualize fairness vs. performance trade-offs so stakeholders can make informed, data-driven choices.

How to Prepare for a Python ML Interview (By Experience Level)

Preparing for a Python machine learning interview isn’t just about memorizing syntax, it’s about demonstrating how you think, structure problems, and apply theory in practice. Whether you’re a fresher starting your ML journey or an experienced professional managing large-scale models, your preparation should reflect your experience level and career goals.

Read more: How to Prepare for Data Science Interviews

For Freshers: Build Strong Foundations

If you’re preparing for AI and ML interview questions for freshers, focus on mastering the essentials, both in Python and core ML theory. Interviewers want to see that you can reason through concepts, write clean code, and connect ideas to real-world examples. You won’t be asked to optimize large-scale pipelines, but you should confidently navigate core ML workflows.

How to prepare:

- Review core Python concepts like data types, loops, functions, and key differences between lists and NumPy arrays.

- Practice simple coding problems such as reversing strings, computing averages, and filtering data using pandas.

- Understand machine learning basics including features vs labels, classification vs regression, and the bias-variance tradeoff.

- Work on hands-on projects using pandas and scikit-learn for data cleaning, train/test splits, and basic model training.

- Connect theory to real use cases, e.g., spam detection for supervised learning, customer segmentation for unsupervised learning.

Tip: Keep one well-documented project (e.g., churn prediction, sentiment analysis) in a GitHub repo. It shows initiative and gives you a story to discuss in interviews.

For Experienced Candidates: Demonstrate System Thinking

For professionals facing machine learning interview questions for experienced candidates, the focus shifts to ownership, scalability, and MLOps. You’re expected to explain how you design, deploy, and maintain ML systems in production.

How to prepare:

- Be ready to discuss end-to-end pipelines, from data ingestion and preprocessing to deployment and monitoring.

- Master MLOps tools like Airflow (orchestration), Docker (deployment), and MLflow (tracking and model registry).

- Practice writing optimized Python code for large datasets and efficient inference.

- Understand scalability tradeoffs, e.g., batch vs streaming predictions, or accuracy vs latency.

- Prepare examples where you’ve balanced model performance, fairness, and cost efficiency in real-world projects.

Tip: Interviewers value narrative, so frame your experience around problems solved, trade-offs made, and measurable impact (e.g., “Reduced model inference latency by 40% using model quantization and Docker optimization”).

ML Core Tools & Libraries to Master

Regardless of your level, fluency with Python’s ML ecosystem is non-negotiable. Focus on:

- pandas - data manipulation and preprocessing

- NumPy - numerical operations and vectorization

- scikit-learn - model training, pipelines, and evaluation

- TensorFlow or PyTorch - for deep learning prototypes

- FastAPI or Flask - for model deployment

Tip: Be ready to write small snippets during interviews, e.g., using train_test_split, calculating RMSE, or scaling data with StandardScaler.

Where to Practice

Consistent, targeted practice is key to success in ML interviews. Use these platforms to strengthen different skill areas:

- Interview Query: for real-world Python and ML interview questions aligned with top tech companies.

- LeetCode: to refine your algorithmic and problem-solving thinking.

- Kaggle: for applying ML on real datasets, joining competitions, and learning from public notebooks.

Tip: Combine all three and practice structured interview questions on Interview Query, algorithms on LeetCode, and end-to-end projects on Kaggle for complete readiness.

FAQs on Python ML Interview Prep

What are common Python interview questions for AI engineers?

AI engineers are often asked about both ML fundamentals and production deployment. Expect questions on NumPy vectorization, scikit-learn pipelines, TensorFlow vs PyTorch differences, and saving/loading models. Scenario-based coding (e.g., k-means from scratch) and MLOps concepts (model drift, Dockerization) are also common.

How do I pass a Python machine learning assessment?

Focus on three areas:

- Python fluency—data structures, NumPy array manipulations, pandas preprocessing.

- ML fundamentals—supervised vs unsupervised learning, evaluation metrics, and overfitting vs bias-variance tradeoff.

- Applied coding—implementing algorithms (logistic regression, k-NN), and debugging small ML pipelines.

Practice on Interview Query and Kaggle to mimic real assessment formats.

What’s the best way to prepare for Python coding interview questions?

Start with algorithmic practice on LeetCode (arrays, matrices, dynamic programming). Layer in ML-specific coding like logistic regression, gradient descent, and RMSE functions. Focus on writing clean, vectorized Python code since interviews often assess clarity as much as correctness.

Is Python enough to get a machine learning job?

Python is typically sufficient to start a career in machine learning. It’s the dominant language for ML development, with libraries like NumPy, pandas, scikit-learn, TensorFlow, and PyTorch covering most workflows. However, complementing Python with SQL for data querying, statistics for model evaluation, and cloud or MLOps tools (like AWS, Docker, or MLflow) can make you more competitive for real-world ML roles.

What are the best MLOps interview questions in 2025?

In 2025, MLOps interviews focus on scalability, automation, and ethical deployment. Expect questions on model saving/loading with joblib, TensorFlow, and PyTorch; detecting model drift and retraining; experiment tracking using MLflow; workflow orchestration with Airflow; and containerized deployment with Docker or Kubernetes. Interviewers also emphasize responsible AI—including bias audits, federated learning, and differential privacy—to ensure fairness and compliance in production systems.

What is the difference between supervised and unsupervised learning?

Supervised learning uses labeled data to train models where the output is already known, making it ideal for tasks like classification and regression. Unsupervised learning, on the other hand, works with unlabeled data to uncover hidden patterns or structures, commonly used for clustering, dimensionality reduction, and anomaly detection.

What are bias and variance in machine learning, and how do they relate to overfitting and underfitting?

Bias is the error introduced by simplifying assumptions in a model, which can cause underfitting when the model fails to capture underlying trends. Variance is the sensitivity of a model to small fluctuations in training data, leading to overfitting. A good model balances bias and variance to generalize well on unseen data.

Conclusion

Python continues to be the backbone of machine learning interviews. Whether you’re preparing for AI ML interview questions for freshers or tackling MLOps interview questions as an experienced candidate, success comes from mastering both the fundamentals and the real-world workflows.

By combining this guide with consistent coding practice and end-to-end projects, you’ll be well-prepared to handle any Python ML interview question, from foundational array manipulations to advanced deployment strategies.

Practice Next

You can only get good at Python ML interviews by coding in real-world contexts. Here’s where to sharpen your skills before the big day:

If you are looking to prep like top candidates at FAANG, explore our Machine Learning System Design Interviews covering pipelines, scalability, and production-ready reasoning.

Need a structured roadmap? Dive into Interview Query’s ML Learning Path for Python ML, covering everything from NumPy and pandas fundamentals to advanced MLOps concepts. Pair this with Interview Query’s Mock Interviews to test your skills live in a coding environment with real feedback.