37 Data Analytics Project Ideas and Datasets (Updated in 2025)

Introduction

Data analytics projects help you build a portfolio and land interviews. It is not enough just to do a novel analytics project; you must also market your project to ensure it gets found.

The first step for any data analytics project is to develop a compelling problem to investigate. Then, you need to find a dataset to analyze the problem. Some of the strongest categories for data analytics project ideas include:

- Beginner Analytics Projects - For early-career data analysts, beginner projects help you practice new skills.

- Python Analytics Projects - Python allows you to scrape relevant data and perform analysis with pandas data frames and SciPy libraries.

- Rental and Housing Data Analytics Projects - Housing data is readily available from public sources, and it is simple enough to collect that you can create your own dataset. Housing is related to many other societal forces, and because we all need some form of it, the topic will always interest many people.

- Sports and NBA Analytics Projects - Sports data can be easily scraped, and by using player and game stats you can analyze strategies and performance.

- Data Visualization Projects - Visualizations allow you to create graphs and charts to tell a story about the data.

- Music Analytics Projects - Contains datasets for music-related data and identifying music trends.

- Economics and Current Trends - From exploring GDPs of respective countries to the spread of the COVID-19 virus, these datasets will allow you to explore a wide variety of time-relevant data.

- Advanced Analytics Projects - For data analysts looking for a stack-filled project.

A data analytics project portfolio is a powerful tool for landing an interview. But how can you build one effectively?

Start with a data analytics project and build your portfolio around it. A data analytics project involves taking a dataset and analyzing it in a specific way to showcase results. Not only do they help you build your portfolio, but analytics projects also help you:

- Learn new tools and techniques.

- Work with complex datasets.

- Practice packaging your work and results.

- Prep for case study and take-home interviews.

- Get inbound interviews from hiring managers who have read your blog post!

Beginner Data Analytics Projects

Projects are one of the best ways for beginners to practice data science skills, including visualization, data cleaning, and working with tools like Python and pandas.

1. Relax Predicting User Adoption Take-Home

This data analytics project take-home assignment, which has been given to data analysts and data scientists at Relax Inc., asks you to dig into user engagement data. Specifically, you’re asked to determine who an “adopted user” is, which is a user who has logged into the product on three separate days in at least one seven-day period.

Once you’ve identified adopted users, you’re asked to surface factors that predict future user adoption.

How you can do it: Jump into the Relax take-home data. This is an intensive data analytics project take-home challenge, which the company suggests you spend 12 hours on (although you’re welcome to spend more or less). This is a great project for practicing your data analytics EDA skills and surfacing predictive insights from a dataset.
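The adopted-user definition above can be sketched in pandas. This is only a minimal sketch: the column names `user_id` and `time_stamp`, the function name, and the toy rows are assumptions for illustration, not the actual schema of the take-home data.

```python
import pandas as pd

def find_adopted_users(logins: pd.DataFrame) -> set:
    """Return users with logins on 3 separate days inside some 7-day period."""
    days = (
        logins.assign(day=pd.to_datetime(logins["time_stamp"]).dt.normalize())
        .drop_duplicates(["user_id", "day"])   # one row per user per calendar day
        .sort_values(["user_id", "day"])
    )
    # For each login day, look at the same user's login day two rows later.
    # If those two days are at most 6 days apart, all three fall in one 7-day window.
    span = days.groupby("user_id")["day"].shift(-2) - days["day"]
    return set(days.loc[span <= pd.Timedelta(days=6), "user_id"])

# Toy data (made up): user 1 qualifies, user 2 has only two distinct days,
# and user 3 spreads three logins over nine days.
logins = pd.DataFrame({
    "user_id": [1, 1, 1, 2, 2, 2, 3, 3, 3],
    "time_stamp": [
        "2014-01-01 09:00", "2014-01-03 10:00", "2014-01-07 11:00",
        "2014-01-01 08:00", "2014-01-01 20:00", "2014-01-02 09:00",
        "2014-01-01 09:00", "2014-01-05 09:00", "2014-01-09 09:00",
    ],
})
adopted = find_adopted_users(logins)
```

Once you have a set like `adopted`, you can turn it into a label column on the user table and start exploring which attributes differ between adopted and non-adopted users.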

2. Salary Analysis

Are you in some sort of slump, or do you find the other projects a tad too challenging? Here’s something that’s really easy; this is a salary dataset from Kaggle that is easy to read and clean, and yet still has many dimensions to interpret.

This salary dataset is a good candidate for descriptive analysis, and we can identify which demographics experience reduced or increased salaries. For example, we could explore the salary variations by gender, age, industry, and even years of prior work.

How you can do it: The first step is to grab the dataset from Kaggle. You can either use it as-is and use spreadsheet tools such as Excel to analyze the data, or you can load it into a local SQL server and design a database around the available data. You can then use visualization tools such as Tableau to visualize the data, either through Tableau MySQL Connector or Tableau’s CSV import feature.

3. Skilledup Messy Product Data Analysis Take-Home

This data analytics project take-home from Skilledup asks participants to analyze a dataset of product details that is formatted inconveniently. This challenge provides an opportunity to show your data-cleaning skills and your ability to perform EDA and surface insights from an unfamiliar dataset. Specifically, the assignment asks you to consider one product group named Books.

Each product in the group is associated with categories. Of course, there are tradeoffs to categorization, and you’re asked to consider these questions:

- Is there redundancy in the categorization?

- How can redundancy be identified and removed?

- Is it possible to dramatically reduce the number of categories by sacrificing relatively few category entries?

How you can do it: You can access this EDA take-home on Interview Query. Open the dataset and perform some EDA to familiarize yourself with the categories. Then, you can begin to consider the questions that are posed.

4. Marketing Analytics Exploratory Data Analysis

This marketing analytics dataset on Kaggle includes customer profiles, campaign successes and failures, channel performance, and product preferences. It’s a great tool for diving into marketing analytics, and there are a number of questions you can answer from the data like:

- What factors are significantly related to the number of store purchases?

- Is there a significant relationship between the region the campaign is run in and that campaign’s success?

- How does the U.S. compare to the rest of the world regarding total purchases?

How you can do it: This Kaggle Notebook from user Jennifer Crockett is a good place to start and includes quite a few visualizations and analyses.

If you want to take it a step further, there is quite a bit of statistical analysis you can perform as well.

5. UFO Sightings Data Analysis

The UFO Sightings dataset is a fun one to dive into, and it contains data from more than 80,000 sightings over the last 100 years. This is a robust source for a beginner EDA project, and you can create insights into where sightings are reported most frequently, how sightings in the U.S. compare with the rest of the world, and more.

How you can do it: Jump into the dataset on Kaggle. There are a number of notebooks you can check out with helpful code snippets. If you’re looking for a challenge, one user created an interactive map with sighting data.

6. Data Cleaning Practice

This Kaggle Challenge asks you to clean data and perform various data cleaning tasks. This is a perfect beginner data analytics project, which will provide hands-on experience performing techniques like handling missing values, scaling and normalization, and parsing dates.

How you can do it: You can work through this Kaggle Challenge, which includes data. Another option, however, would be to choose your own dataset that needs to be cleaned and then work through the challenge and adapt the techniques to your own dataset.
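If you adapt the techniques to your own dataset, the three tasks mentioned above (parsing dates, handling missing values, and scaling) might look roughly like this in pandas. The column names and values are made up for illustration, and median imputation and min-max scaling are just one reasonable choice among several.

```python
import pandas as pd

# Made-up raw data with missing values and dates stored as text.
raw = pd.DataFrame({
    "signup_date": ["2021-01-05", "2021-02-17", None, "2021-03-09"],
    "age": [34, None, 29, 41],
    "income": [52000.0, 61000.0, None, 48000.0],
})

def clean(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    # Parse dates; values that cannot be parsed become NaT instead of raising.
    out["signup_date"] = pd.to_datetime(out["signup_date"], errors="coerce")
    # Fill missing numeric values with each column's median.
    for col in ["age", "income"]:
        out[col] = out[col].fillna(out[col].median())
    # Min-max scale income into the range [0, 1].
    income_range = out["income"].max() - out["income"].min()
    out["income_scaled"] = (out["income"] - out["income"].min()) / income_range
    return out

cleaned = clean(raw)
```

Whether you fill, drop, or flag missing values depends on why they are missing, so it is worth documenting that decision in your write-up.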

Python Data Analytics Projects

Python is a powerful tool for data analysis projects. Whether you are web scraping data - on sites like the New York Times and Craigslist - or you’re conducting EDA on Uber trips, here are some Python data analytics project ideas to try:

7. Enigma Transforming CSV file Take-Home

This take-home challenge - which requires 1-2.5 hours to complete - is a Python script writing task. You’re asked to write a script to transform input CSV data to desired output CSV data. A take-home like this is good practice for the type of Python take-homes that are asked of data analysts, data scientists, and data engineers.

As you work through this practice challenge, focus specifically on the grading criteria, which include:

- How well you solve the problems.

- The logic and approach you take to solving them.

- Your ability to produce, document, and comment on code.

- Ultimately, the ability to write clear and clean scripts for data preparation.
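To get a feel for this kind of task, here is a minimal sketch of a CSV-to-CSV transformation using only Python’s standard library. The input columns (`Name`, `Start Date`, `Amount`) and the cleanup rules are hypothetical; the real assignment defines its own input and output formats.

```python
import csv
import io
from datetime import datetime

def transform(src, dst):
    """Read rows from src, normalize each field, and write them to dst."""
    reader = csv.DictReader(src)
    writer = csv.DictWriter(
        dst, fieldnames=["name", "start_date", "amount"], lineterminator="\n"
    )
    writer.writeheader()
    for row in reader:
        writer.writerow({
            # Trim stray whitespace and standardize capitalization.
            "name": row["Name"].strip().title(),
            # Convert MM/DD/YYYY into ISO 8601 (YYYY-MM-DD).
            "start_date": datetime.strptime(
                row["Start Date"].strip(), "%m/%d/%Y"
            ).date().isoformat(),
            # Always write two decimal places.
            "amount": f"{float(row['Amount']):.2f}",
        })

# In-memory sample so the sketch runs without any files on disk.
sample = (
    "Name,Start Date,Amount\n"
    "  ada lovelace ,12/10/2015,1200.5\n"
    "grace hopper,01/02/2016,980\n"
)
out = io.StringIO()
transform(io.StringIO(sample), out)
result = out.getvalue()
```

Because `transform` accepts any file-like objects, the same function works with `open("input.csv", newline="")` and is easy to unit test, which helps with the documentation and clarity criteria.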

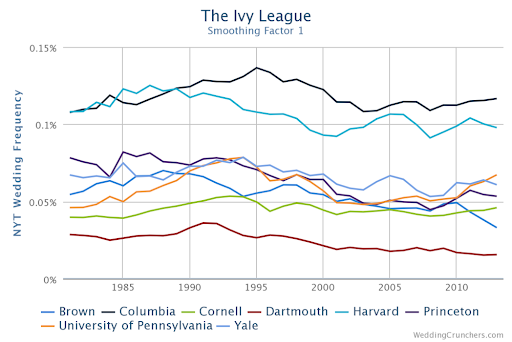

8. Wedding Crunchers

Todd W. Schneider’s Wedding Crunchers is a prime example of a data analysis project using Python. Todd scraped wedding announcements from the New York Times, performed analysis on the data, and found intriguing tidbits like:

- Distribution of common phrases.

- Average age trends of brides and grooms.

- Demographic trends.

Using the data and his analysis, Schneider created a lot of cool visuals, like this one on Ivy League representation in the wedding announcements:

How you can do it: Follow the example of Wedding Crunchers. Choose a news or media source, scrape titles and text, and analyze the data for trends. Here’s a tutorial for scraping news APIs with Python.

9. Scraping Craigslist

Craigslist is a classic data source for an analytics project, and you can analyze a wide range of things. One of the most common listings is for apartments.

Riley Predum created a handy tutorial that walks you through using Python and Beautiful Soup to scrape apartment listings. He then did some interesting analysis of pricing segmented by neighborhood and of price distributions. When graphed, his analysis looked like this:

How you can do it: Follow the tutorial to learn how to scrape the data using Python. Some analysis ideas: Look at apartment listings for another area, analyze used car prices for your market, or check out what used items sell on Craigslist.

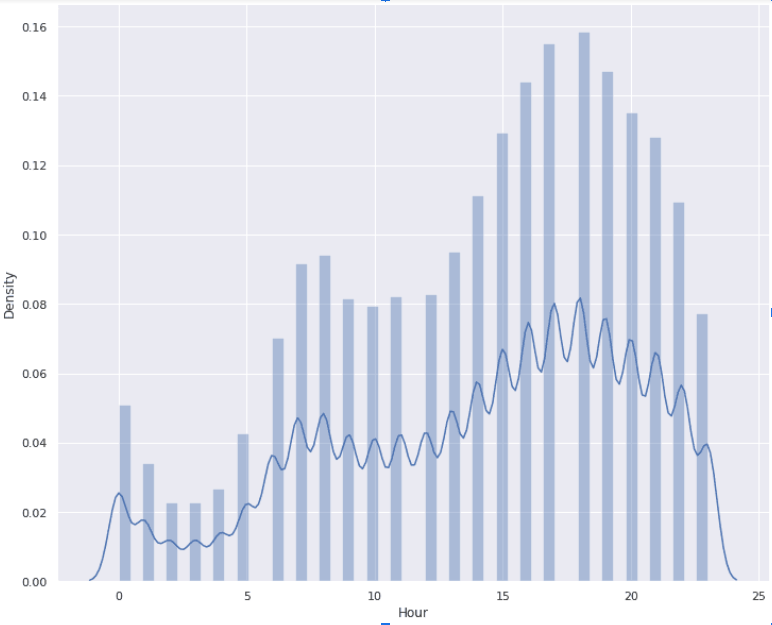

10. Uber Trip Analysis

Here’s a cool project from Aman Kharwal: An analysis of Uber trip data from NYC. The project used this Kaggle dataset from FiveThirtyEight, containing nearly 20 million Uber pickups. There are a lot of angles to analyze this dataset, like popular pickup times or the busiest days of the week.

Here’s a data visualization on pickup times by hour of the day from Aman:

How you can do it: This is a good data analysis project idea if you’re prepping for a case study interview. You can emulate this one using the dataset on Kaggle or similar taxi and Uber datasets on data.world, including one for Austin, TX.
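The pickup-time angles mentioned above (popular hours, busiest days) come down to parsing timestamps and counting. Here is a small pandas sketch; the `Date/Time` column name and timestamp format are assumptions about the CSV layout, and the four rows are made up.

```python
import pandas as pd

# Made-up rows standing in for the real pickup file.
pickups = pd.DataFrame({
    "Date/Time": [
        "4/1/2014 0:11:00",
        "4/1/2014 17:05:00",
        "4/2/2014 17:45:00",
        "4/5/2014 8:30:00",
    ]
})
pickups["Date/Time"] = pd.to_datetime(
    pickups["Date/Time"], format="%m/%d/%Y %H:%M:%S"
)

# Number of pickups in each hour of the day, ordered from 0 to 23.
by_hour = pickups["Date/Time"].dt.hour.value_counts().sort_index()

# Number of pickups on each day of the week.
by_weekday = pickups["Date/Time"].dt.day_name().value_counts()
```

From there, `by_hour.plot(kind="bar")` gives you a quick first chart if you have matplotlib installed.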

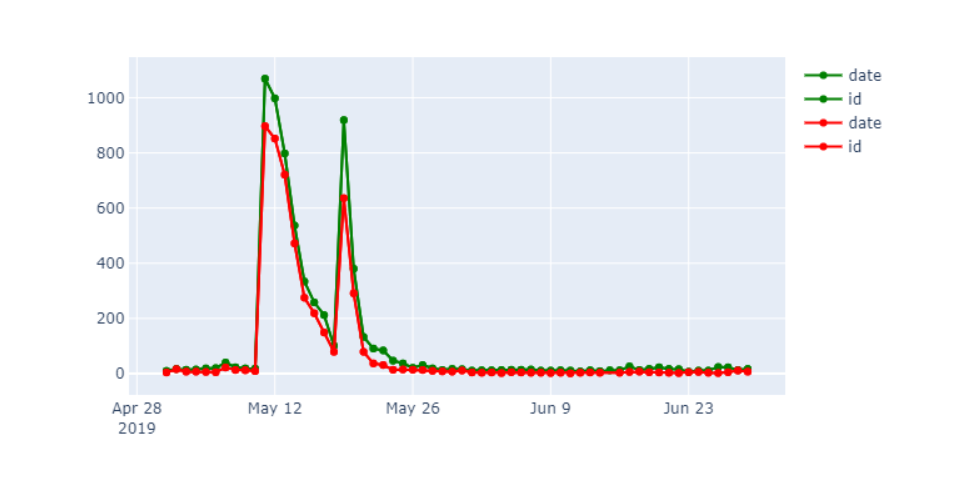

11. Twitter Sentiment Analysis

Twitter (now X) is the perfect data source for an analytics project, and you can perform a wide range of analyses based on Twitter datasets. Sentiment analysis projects are great for practicing beginner NLP techniques.

One option would be to measure sentiment in your dataset over time like this:

How you can do it: This tutorial from Natassha Selvaraj provides step-by-step instructions to do sentiment analysis on Twitter data. Or see this tutorial from the Twitter developer forum. For data, you can scrape your own or pull some from these free datasets.

12. Home Pricing Predictions

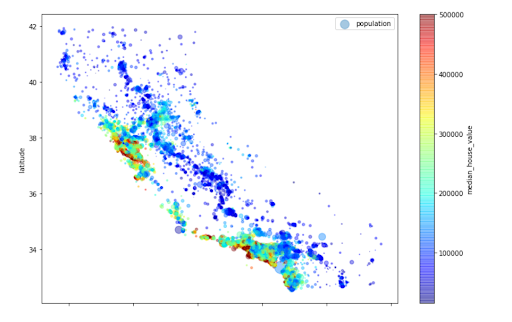

This project has been featured in our list of Python data science projects. With this project, you can take the classic California Census dataset, and use it to predict home prices by region, zip code, or details about the house.

Python can be used to produce some stunning visualizations, like this heat map of price by location.

How you can do it: Because this dataset is so well known, there are a lot of helpful tutorials to learn how to predict prices in Python. Then, once you’ve learned the technique, you can start practicing it on various datasets like stock prices, used car prices, or airfare.

13. Delivery Time Estimator

This take-home exercise - which requires 5-6 hours to complete - is a two-part task involving both machine learning model development and application engineering. You’re tasked with building a model to predict delivery times based on historical data, then writing an application to make predictions using this model. An exercise like this is excellent practice for the type of challenges that are typically given to machine learning engineers and data scientists.

As you work through this exercise, focus specifically on the evaluation criteria, which include:

- The performance of your model on the test data set.

- The feature engineering choices and data processing techniques you employ.

- The clarity and thoroughness of your explanations and write-up.

- Your ability to write modular, well-documented, and production-ready code for the prediction application.

14. Trucking in High Winds

This take-home exercise - which is intended to take 2-3 hours to complete - is focused on estimating the mean distance to failure for wind-induced rollover events on a specified route. You’re asked to analyze historical weather data to assess the frequency of high wind events and to use this information to estimate the risk of rollover incidents. A task like this is good practice for the type of data-driven safety analyses that are relevant to data science roles in the logistics and transportation industry.

Rental and Housing Data Analytics Project Ideas

There’s a ton of accessible housing data online on sites like Zillow and Airbnb, and these datasets are perfect for analytics and EDA projects.

If you’re interested in price trends in housing, market predictions, or just want to analyze the average home prices for a specific city or state, jump into these projects:

15. Airbnb Data Analytics Take-Home Assignment

- Overview: Analyze the provided data and make product recommendations to help increase bookings in Rio de Janeiro.

- Time Required: 6 hours

- Skills Tested: Analytics, EDA, growth marketing, data visualization

- Deliverable: Summarize your recommendations in response to the questions above in a Jupyter Notebook intended for the Head of Product and VP of Operations (who is not technical).

This take-home is a classic product case study. You have booking data for Rio de Janeiro, and you must define metrics for analyzing matching performance and make recommendations to help increase the number of bookings.

This take-home includes grading criteria, which can help direct your work. Assignments are judged on the following:

- Analytical approach and clarity of visualizations.

- Your data sense and decision-making, as well as the reproducibility of the analysis.

- Strength of your recommendations.

- Your ability to communicate insights in your presentation.

- Your ability to follow directions.

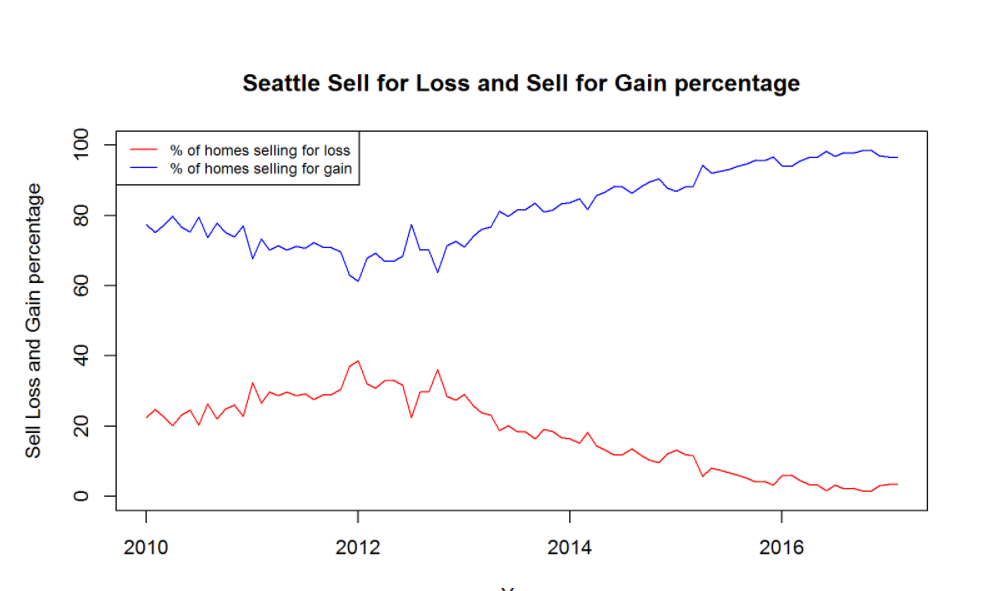

16. Zillow Housing Prices

Check out Zillow’s free datasets. The Zillow Home Value Index (ZHVI) is a smoothed, seasonally adjusted measure of typical home values by region and housing type. There are also datasets on rentals, housing inventories, and price forecasts.

Here’s an analytics project based in R that might give you some direction. The author analyzes Zillow data for Seattle, looking at things like the age of inventory (days since listing), % of homes that sell for a loss or gain, and list price vs. sale price for homes in the region:

How you can do it: There are a ton of different ways you can use the Zillow dataset. Examine listings by region, explore individual list price vs. sale price, or take a look at the average sale price over the average list price by city.

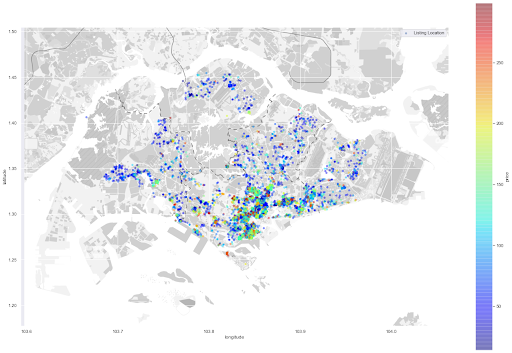

17. Inside Airbnb

On Inside Airbnb, you’ll find data from Airbnb that has been analyzed, cleaned, and aggregated. There is data for dozens of cities around the world, including number of listings, calendars for listings, and reviews for listings.

Agratama Arfiano has extensively examined Airbnb data for Singapore. There are a lot of different analyses you can do, including finding the number of listings by host or listings by neighborhood. Arfiano has produced some really striking visualizations for this project, including the following:

How you can do it: Download the data from Inside Airbnb, then choose a city for analysis. You can look at the price, listings by area, listings by the host, the average number of days a listing is rented, and much more.

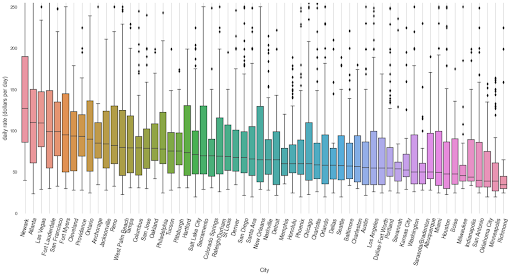

18. Car Rentals

Have you ever wondered which cars are the most rented? Curious how fares change by make and model? Check out the Cornell Car Rental Dataset on Kaggle. Kushlesh Kumar created the dataset, which features records on 6,000+ rental cars. There are a lot of questions you can answer with this dataset: Fares by make and model, fares by city, inventory by city, and much more. Here’s a cool visualization from Kushlesh:

How you can do it: Using the dataset, you could analyze rental cars by make and model, a particular location, or analyze specific car manufacturers. Another option: Try a similar project with these datasets: Cash for Clunkers cars, Carvana sales data or used cars on eBay.

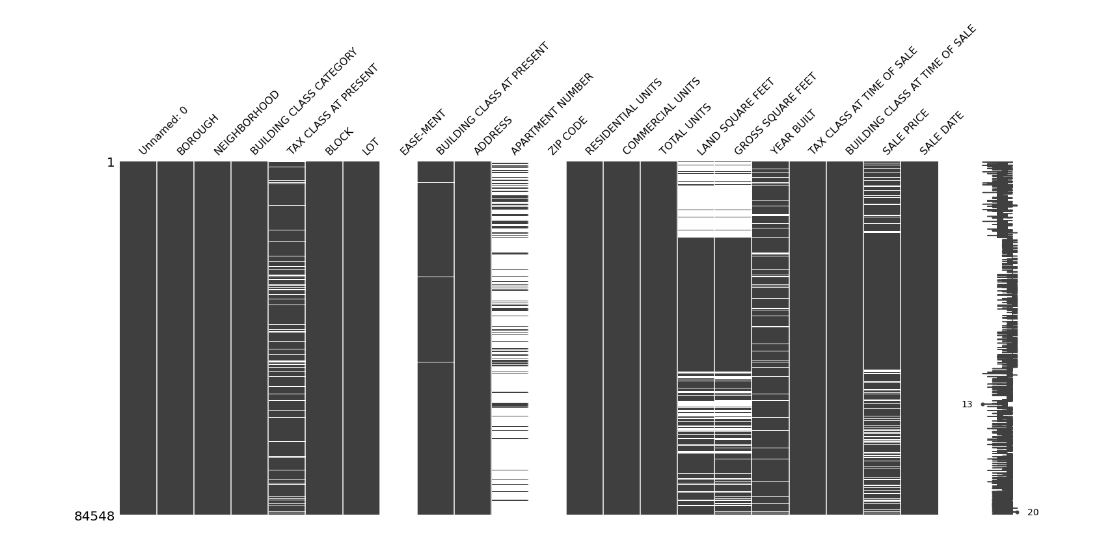

19. Analyzing NYC Property Sales

This real estate dataset shows every property that sold in New York City between September 2016 and September 2017. You can use this data (or a similar dataset you create) for a number of projects, including EDA, price predictions, regression analysis, and data cleaning.

A beginner analytics project you can try with this data would be a missing values analysis project like:

How you can do it: There are a ton of helpful Kaggle notebooks you can browse to learn how to: perform price predictions, do data cleaning tasks, or do some interesting EDA with this dataset.

Sports and NBA Data Analytics Projects

Sports data analytics projects are fun if you’re a fan, and there are quite a few free data sources available, like Pro-Football-Reference and Basketball-Reference. These sources allow you to pull a wide range of statistics and build your own unique dataset to investigate a problem.

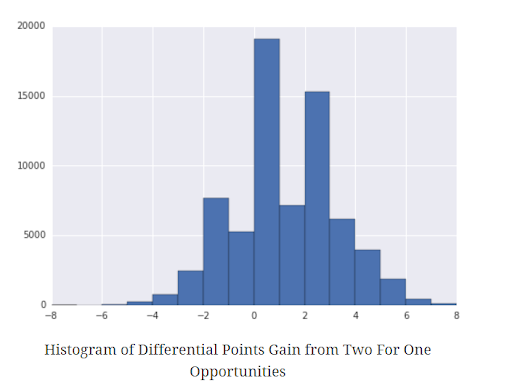

20. NBA Data Analytics Project

Check out this NBA data analytics project from Jay at Interview Query. Jay analyzed data from Basketball Reference to determine the impact of the 2-for-1 play in the NBA. The idea: In basketball, the 2-for-1 play refers to an end-of-quarter strategy where a team aims to shoot the ball with between 25 and 36 seconds on the clock. That way the team that shoots first has time for an additional play while the opposing team only gets one response. (You can see the source code on GitHub).

The main metric he was looking for was the differential gain between the score just before the 2-for-1 shot and the score at the end of the quarter. Here’s a look at a differential gain:

How you can do it: Read this tutorial on scraping Basketball Reference data. You can analyze in-game statistics, career statistics, playoff performance, and much more. An idea could be to analyze a player’s high school ranking vs. their success in the NBA. Or you could visualize a player’s career.

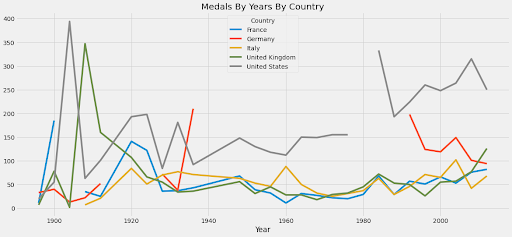

21. Olympic Medals Analysis

This is a great dataset for a sports analytics project. Featuring 35,000 medals awarded since 1896, there is plenty of data to analyze, and it’s useful for identifying performance trends by country and sport. Here’s a visualization from Didem Erkan:

How you can do it: Check out the Olympics medals dataset. Angles you might take for analysis include: Medal count by country (as in this visualization), medal trends by country, e.g., how U.S. performance evolved during the 1900s, or even grouping countries by region to see how fortunes have risen or faded over time.

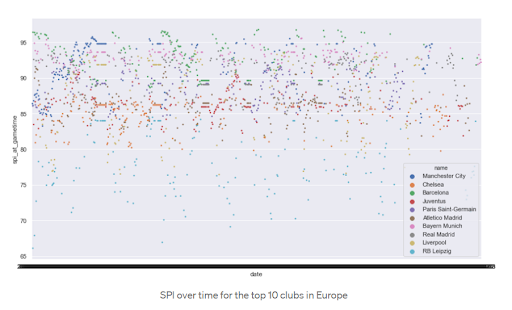

22. Soccer Power Rankings

FiveThirtyEight is a wonderful source of sports data; they have NBA datasets, as well as data for the NFL and NHL. The site uses its Soccer Power Index (SPI) ratings for predictions and forecasts, but it’s also a good source for analysis and analytics projects. To get started, check out Gideon Karasek’s breakdown of working with the SPI data.

How you can do it: Check out the SPI data. Questions you might try to answer include: How has a team’s SPI changed over time? How does SPI compare across soccer leagues? How do goals scored compare with goals predicted?

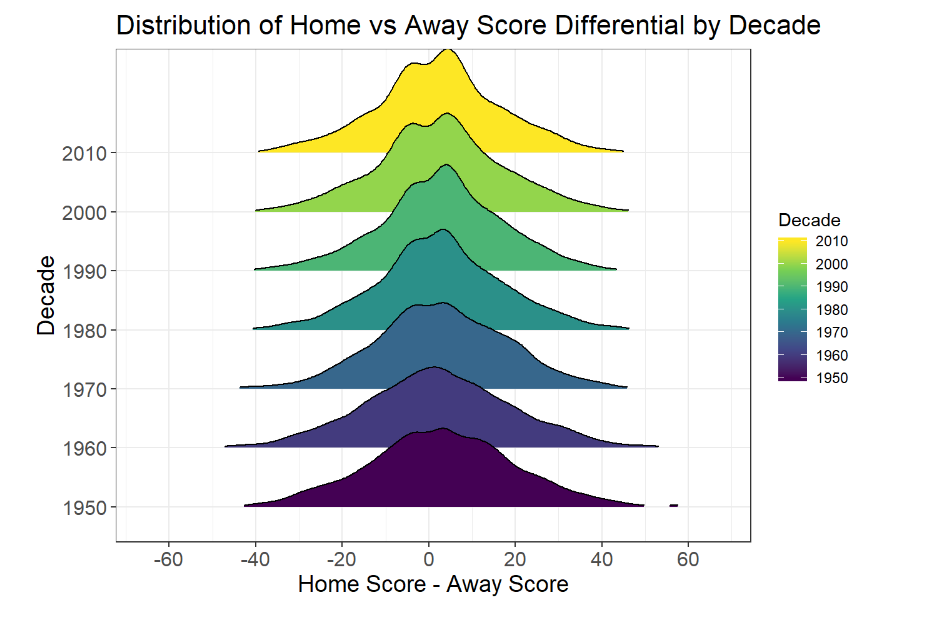

23. Home Field Advantage Analysis

Does home-field advantage matter in the NFL? Can you quantify how much it matters? First, gather data from Pro-Football-Reference.com. Then you can fit a simple linear regression model to measure the impact.

There are a ton of projects you can do with NFL data. One would be to determine WR rankings, based on season performance.

How you can do it: See this GitHub repository on performing a linear regression to quantify home field advantage.
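One possible way to set up the regression (not necessarily the approach in the repository) is to regress the home team’s point margin on a pre-game strength difference between the two teams. The intercept is then the expected home margin when the teams are evenly matched, which you can read as the home-field advantage. The `rating_diff` feature and all numbers below are made up for illustration.

```python
import numpy as np

# Hypothetical per-game data:
# rating_diff = home team strength minus away team strength (any rating you build)
# home_margin = home points minus away points
rating_diff = np.array([-6.0, -3.0, 0.0, 3.0, 6.0, 9.0])
home_margin = np.array([-4.0, 0.0, 3.0, 5.0, 9.0, 11.0])

# Ordinary least squares fit of: home_margin = intercept + slope * rating_diff
slope, intercept = np.polyfit(rating_diff, home_margin, deg=1)

# With evenly matched teams (rating_diff == 0), the model predicts `intercept`
# points in favor of the home team.
home_field_advantage = intercept
```

With real data you would also want a confidence interval on the intercept, which a library such as statsmodels can report.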

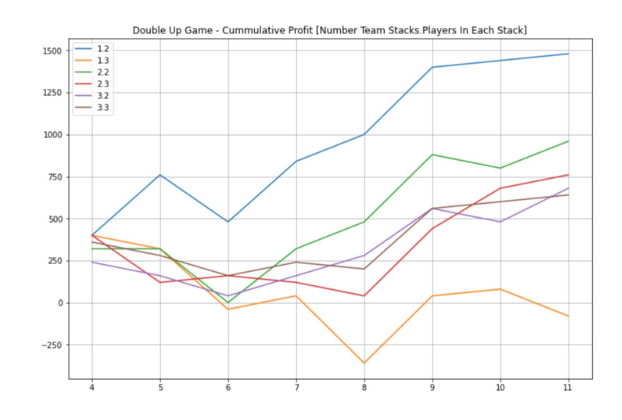

24. Daily Fantasy Sports

Creating a model to perform in daily fantasy sports requires you to:

- Predict which players will perform best based on matchups, locations, and other indicators.

- Build a roster based on a “salary cap” budget.

- Determine which players will have the top ROI during the given week.

If you’re interested in fantasy football, basketball, or baseball, this would be a strong project.

How you can do it: Check out the Daily Fantasy Data Science course, if you want a step-by-step look.
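To make the roster-building step concrete, here is a toy sketch that picks the roster with the most projected points under a salary cap by checking every combination. The players, salaries, and projections are made up, and brute force only works for small player pools; real contests add position constraints and usually call for an optimization solver.

```python
from itertools import combinations

def best_roster(players, roster_size, salary_cap):
    """Return (total_points, roster) for the best roster under the cap, or None."""
    best = None
    for roster in combinations(players, roster_size):
        cost = sum(salary for _, salary, _ in roster)
        if cost > salary_cap:
            continue  # Over budget, so skip this combination.
        points = sum(projected for _, _, projected in roster)
        if best is None or points > best[0]:
            best = (points, roster)
    return best

# (name, salary, projected points) - all values are made up.
players = [
    ("Player A", 9000, 48.0),
    ("Player B", 7500, 40.0),
    ("Player C", 6000, 35.0),
    ("Player D", 4500, 22.0),
    ("Player E", 3000, 18.0),
]
total_points, roster = best_roster(players, roster_size=3, salary_cap=18000)
roster_names = [name for name, _, _ in roster]
```

The interesting analytics work is in producing the projected points; the selection step simply turns those projections into a lineup.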

Visualization Data Analytics Projects

All of the datasets we’ve mentioned would make for amazing data visualization projects. To cap things off, we are highlighting a few more ideas for you to use as inspiration, which can draw on your own experiences or interests!

25. Supercell Data Scientist Pre-Test

This is a classic SQL/data analytics project take-home. You’re asked to explore, analyze, visualize, and model Supercell’s revenue data. Specifically, the dataset contains user data and transactions tied to user accounts.

You must answer questions about the data, like which countries produce the most revenue. Then, you’re asked to create a visualization of the data and apply machine-learning techniques to it.

26. Visualizing Pollution

This project by Jamie Kettle visualizes plastic pollution by country, and it does a scarily good job of showing just how much plastic waste enters the ocean each year. Take a look for inspiration:

How you can do it: There are dozens of pollution datasets on data.world. Choose one and create a visualization showing pollution’s true impact on our natural environments.

27. Visualizing Top Movies

There are a ton of movie and media datasets on Kaggle: The Movie Database 5000, Netflix Movies and TV Shows, Box Office Mojo data, etc. And just like their big-screen debuts, movie data makes for fantastic visualizations.

Take a look at this visualization of the Top 100 movies by Katie Silver, which features top movies based on box office gross and the Oscars each received:

How you can do it: Take a Kaggle movie dataset and create a visualization that shows one of the following: gross earnings vs. average IMDB rating, Netflix shows by rating, or top movies by studio.

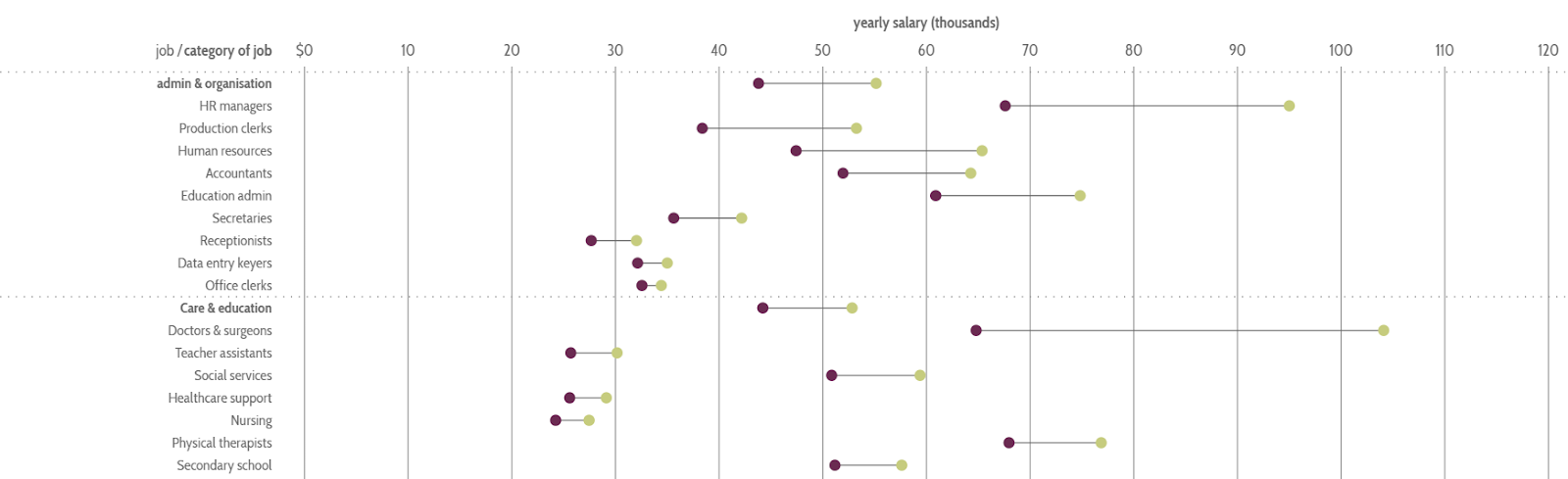

28. Gender Pay Gap Analysis

Salary is a subject everyone is interested in, which makes it a relevant subject for visualization. One idea: Take this dataset from the U.S. Bureau of Labor Statistics and create a visualization of the pay gap by industry.

You can see an example of a gender pay gap visualization on InformationIsBeautiful.net:

How you can do it: You can re-create the gender pay visualization and add your own spin. Or use salary data to visualize fields with the fastest-growing salaries, salary differences by city, or data science salaries by company.

29. Visualize Your Favorite Book

Books are full of data, and you can create amazing visualizations using their patterns. Take a look at this project by Hanna Piotrowska, turning an Italo Calvino book into cool visualizations. The project features visualizations of word distributions, themes and motifs by chapter, and the distribution of themes throughout the book:

How you can do it: This Shakespeare dataset, which features all of the lines from his plays, would be ripe for recreating this type of project. Another option: Create a visualization of your favorite Star Wars script.

Music Analytics Projects

If you’re a music fan, music analytics projects are a good way to jumpstart your portfolio. Of course, analyzing music through digital signal processing is out of our scope, so the best way to go about music-related projects is through exploring trends and charts. Here are some resources that you may use.

30. Popular Music Analysis

Here’s one way to analyze music features without explicit feature extraction. This dataset from Kaggle contains a list of popular music from the 1960s. A feature of this dataset is that it is currently being maintained. Here are a few approaches you can use.

How you can do it: You can grab this dataset from Kaggle. This dataset has classifications for popularity, release date, album name, and even genre. You can also use pre-extracted features such as time signature, liveness, valence, acoustic-ness, and even tempo.

Load this dataset into a pandas DataFrame and do your processing there. You can analyze how the features move over time (e.g., did songs get more mellow, livelier, or louder over time), or you can even explore the rise and fall of artists over time.
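As a rough sketch of the "features over time" idea, you can bucket songs by decade and average the pre-extracted features. The column names (`year`, `valence`, `tempo`) are assumptions about the dataset’s layout, and the six rows are made up.

```python
import pandas as pd

# Made-up rows; in practice you would load the Kaggle CSV with pd.read_csv.
songs = pd.DataFrame({
    "year": [1965, 1968, 1984, 1987, 2015, 2019],
    "valence": [0.8, 0.7, 0.6, 0.5, 0.4, 0.3],
    "tempo": [118.0, 122.0, 125.0, 115.0, 100.0, 96.0],
})

# Integer division drops the last digit of the year: 1968 -> 1960.
songs["decade"] = songs["year"] // 10 * 10

# Average each feature per decade to see how it drifts over time.
trend = songs.groupby("decade")[["valence", "tempo"]].mean()
```

A line chart of `trend` is usually enough to show whether a feature such as valence rises or falls across decades.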

31. KPOP Melon Music Charts Analysis

If you’re interested in creating a KPOP-related analytics project, here’s one for you. While this is not a dataset, what we have here is a data source that scrapes data from the Melon charts and shows you the top 100 songs in the weekly, daily, rising, monthly, and LIVE charts.

How you can do it: The problem with this data source is that it is scraped, so gathering previous data might be a bit problematic. In order to do historical analysis, you will need to compile and store the data yourself.

So for this approach, we will prefer a locally hosted infrastructure. Knowing how to use cloud services to automate and store data might introduce additional layers of complexity for you to show off to a recruiter. Here’s a local approach to conducting this project.

The first step is to decide which database solution to use. We recommend XAMPP’s toolkit with MySQL Server and phpMyAdmin as it provides an easy-to-use frontend while also providing a query builder that allows you to construct table schemas, so learning DDL (Data Definition Language) is not as much of a necessity.

The second step is to create a Python script that scrapes data from Melon’s music charts. Thankfully, we have a module that scrapes data from the charts. First, install the melonapi module. Then, you can gather the data and store it in your database. Here’s a step-by-step guide to loading the data from the site.

from melonapi import scrapeMelon as melon

import json

# Here, you can use 'LIVE', 'day', 'rise', 'week', and 'month'

chart_str = melon.getList('week')

print(json.loads(chart_str))

# For now, we will print the JSON file containing the data, but ideally,

# you should load the data into a SQL server.

Of course, running this script manually over a period of time opens the door to human forgetfulness or boredom. To avoid this, you can use an automation service to automate your processes. For Windows systems, you can use the built-in Windows Task Scheduler. If you’re using a Mac, you can use Automator.

When you have the appropriate data, you can then perform analytics, such as examining how songs move over time, classifying songs by album, and so on.
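The storage step described above could look something like the sketch below. To keep it self-contained, it uses Python’s built-in `sqlite3` module as a stand-in for the MySQL server, and it assumes you have already converted the scraped chart into `(position, title, artist)` tuples. The table layout, function name, and sample rows are all hypothetical.

```python
import sqlite3
from datetime import date

SCHEMA = """
CREATE TABLE IF NOT EXISTS chart_entries (
    captured_on TEXT NOT NULL,
    chart_type  TEXT NOT NULL,
    position    INTEGER NOT NULL,
    title       TEXT NOT NULL,
    artist      TEXT NOT NULL,
    PRIMARY KEY (captured_on, chart_type, position)
)
"""

def store_chart(conn, chart_type, captured_on, entries):
    """Save one chart snapshot; rerunning for the same day overwrites it."""
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT OR REPLACE INTO chart_entries VALUES (?, ?, ?, ?, ?)",
        [
            (captured_on.isoformat(), chart_type, position, title, artist)
            for position, title, artist in entries
        ],
    )
    conn.commit()

# Made-up snapshot; use a file path instead of ":memory:" to keep history.
conn = sqlite3.connect(":memory:")
snapshot = [(1, "Song A", "Artist A"), (2, "Song B", "Artist B")]
store_chart(conn, "week", date(2025, 1, 6), snapshot)
store_chart(conn, "week", date(2025, 1, 6), snapshot)  # Safe to rerun.
row_count = conn.execute("SELECT COUNT(*) FROM chart_entries").fetchone()[0]
```

Keying each row on capture date, chart type, and position means a scheduled job can run more than once a day without creating duplicates.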

Economic and Current Trends Analytics Projects

Some of the most valuable analytics projects are those that delve into economic and current trends. These projects, which make use of data from financial market trends, public demographic data, and social media behavior, are powerful tools not only for businesses and policymakers but also for individuals who aim to better understand the world around them.

When discussing current trends, COVID-19 is a significant phenomenon that continues to profoundly impact the status quo. An in-depth analysis of COVID-19 datasets can provide valuable insights into public health, global economies, and societal behavior.

How you can do it: These datasets, readily available for download, focus on different geographical areas. Here are a few:

- EU COVID-19 Dataset - dataset from the European Centre for Disease Prevention and Control, contains COVID-19 data for EU territories.

- US COVID-19 Dataset - US COVID-19 data provided by the New York Times. However, data might be outdated.

- Mexico COVID-19 Dataset - A COVID-19 dataset provided by the Mexican government.

These datasets provide opportunities to develop predictive algorithms and to create visualizations depicting the virus’s spread over time. Despite COVID-19 being less deadly today, it has become more contagious, and insights derived from these datasets can be crucial for understanding and combating future pandemics. For instance, a time-series analysis could identify key periods of infection rates’ acceleration and slow-down, highlighting effective and ineffective public health measures.
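The time-series idea above can be sketched with a rolling average and its day-over-day change. This assumes your dataset reports cumulative case counts by date (check the documentation of whichever source you pick); the numbers below are made up, and the 3-day window is only there because the toy series is short. A 7-day window is a more common choice for daily data.

```python
import pandas as pd

# Made-up cumulative case counts for 14 consecutive days.
cumulative = pd.Series(
    [100, 110, 125, 145, 170, 200, 235, 275, 310, 340, 365, 385, 400, 410],
    index=pd.date_range("2020-03-01", periods=14, freq="D"),
)

# Convert cumulative totals into daily new cases.
new_cases = cumulative.diff().dropna()

# Smooth out day-to-day noise with a rolling mean.
smoothed = new_cases.rolling(window=3).mean()

# Positive change means the smoothed curve is rising; negative means falling.
change = smoothed.diff()
accelerating_days = change[change > 0].index
slowing_days = change[change < 0].index
peak_day = smoothed.idxmax()
```

Lining up `accelerating_days` and `slowing_days` against the dates of public health measures is one way to start the comparison, keeping in mind that correlation in timing does not establish cause.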

32. News Media Dataset

The News Media Dataset provides valuable information about the top 43 English media channels on YouTube, including each of their top 50 videos. This dataset, although limited in its scope, can offer intriguing insights into viewer preferences and trends in news consumption.

How you can do it: Grab the dataset from Kaggle and use the dataset which contains the top 50 viewed videos per channel. There are a lot of insights you can gain here, such as using a basic sentiment analysis tool to determine whether the top-performing headlines were positive or negative.

For sentiment analysis, you don’t necessarily need to train a model. You can load the CSV file and loop through all the tags. Use the TextBlob module to conduct sentiment analysis. Here’s how you can go about doing it:

from textblob import TextBlob

sentiment = "<insert text here>"

print(TextBlob(sentiment).sentiment)

# The result contains polarity and subjectivity. Polarity ranges from -1 (negative)

# to 1 (positive). Subjectivity ranges from 0 (objective) to 1 (subjective).

Then, by using the subjectivity and polarity metrics, you can create visualizations that reflect your findings.

33. The Big Mac Index Analytics

The Big Mac Index offers an intriguing approach to comparing purchasing power parity (PPP) between different countries. The index shows how the U.S. dollar compares to other currencies, through a standardized, identical product, the McDonald’s Big Mac. The dataset, provided by Andrii Samoshyn, contains a lot of missing data, offering a real-world exercise in data cleaning. The data goes back to April 2000 up until January 2020.

How you can do it: You can download the dataset from Kaggle here. One common strategy for handling missing data is by using measures of central tendency like mean or median to fill in gaps. More advanced techniques, such as regression imputation, could also be applicable depending on the nature of the missing data.
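Here is a minimal sketch of the central-tendency approach: filling each country’s missing prices with that country’s own median, rather than a global median that would mix very different price levels. The column names and values are made up and will differ from the actual dataset.

```python
import pandas as pd

# Made-up prices with gaps; None marks a missing observation.
prices = pd.DataFrame({
    "country": ["A", "A", "A", "B", "B", "B"],
    "dollar_price": [3.0, None, 5.0, 2.0, 2.5, None],
})

# Median of the observed prices within each country, aligned row by row.
country_median = prices.groupby("country")["dollar_price"].transform("median")

# Keep observed values and fill only the gaps.
prices["dollar_price_filled"] = prices["dollar_price"].fillna(country_median)
```

Keeping the filled values in a separate column makes it easy to compare results with and without imputation later on.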

Using this cleaned dataset, you can compare values over time or between regions. Introducing a “geographical proximity” column could provide additional layers of analysis, allowing comparisons between neighboring countries. Machine Learning techniques like clustering or classification could reveal novel groupings or patterns within the data, providing a richer interpretation of global economic trends.

When conducting these analyses, it’s important to keep in mind methods for evaluating the effectiveness of your work. This might involve statistical tests for significance, accuracy measures for predictive models, or even visual inspection of plotted data to ensure trends and patterns have been accurately captured. Remember, any analytics project is incomplete without a robust method of evaluation.

34. Global Country Information Dataset

This dataset offers a wealth of information about various countries, encompassing factors such as population density, birth rate, land area, agricultural land, Consumer Price Index (CPI), Gross Domestic Product (GDP), and much more. This data provides ample opportunity for comprehensive analysis and correlation studies among different aspects of countries.

How you can do it: Download this dataset from Kaggle. This dataset includes diverse attributes, ranging from economic to geographic factors, creating an array of opportunities for analysis. Here are some project ideas:

- Correlation Analysis: Investigate the correlations between different attributes, such as GDP and education enrollment, population density and CO2 emissions, or birth rate and life expectancy. You can use libraries like pandas and seaborn in Python for these tasks.

- Geospatial Analysis: With latitude and longitude data available, you could visualize data on a world map to understand global patterns better. Libraries such as geopandas and folium can be helpful here.

- Predictive Modeling: Try to predict an attribute based on others. For instance, could you predict a country’s GDP based on factors like population, education enrollment, and CO2 emissions?

- Cluster Analysis: Group countries based on various features to identify patterns. Are there groups of countries with similar characteristics, and if so, why?

Remember to perform EDA before diving into modeling or advanced analysis, as this will help you understand your data better and could reveal insights or trends to explore further.
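The correlation analysis idea above can be sketched in a few lines of pandas. This is an illustration only: the column names are placeholders rather than the dataset's actual headers, and the five rows are invented.

```python
import pandas as pd

# Hypothetical values; the column names are placeholders, not the dataset's headers.
countries = pd.DataFrame({
    "gdp_per_capita": [1_000, 5_000, 20_000, 40_000, 60_000],
    "birth_rate": [40.0, 30.0, 18.0, 11.0, 10.0],
    "life_expectancy": [55.0, 65.0, 74.0, 80.0, 82.0],
})

# Pairwise Pearson correlations between all numeric columns.
corr = countries.corr(method="pearson")
print(corr.round(2))

# Column most strongly related to life expectancy, ignoring the column itself.
strongest = corr["life_expectancy"].drop("life_expectancy").abs().idxmax()
print(strongest)
```

With seaborn installed, `seaborn.heatmap(corr, annot=True)` turns the same matrix into a chart. Keep in mind that a strong correlation between two country-level attributes does not show that one causes the other.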

35. College Rankings and Tuition Costs Dataset

This dataset offers valuable information regarding various universities, including their rankings and tuition fees. It allows for a comprehensive analysis of the relationship between a university’s prestige, represented by its ranking, and its cost.

How you can do it: First, download the dataset from Kaggle. You can then use Python’s pandas for data handling, and matplotlib or seaborn for visualization.

Possible analyses include exploring the correlation between college rankings and tuition costs, comparing tuition costs of private versus public universities, and studying trends in tuition costs over time. For a more advanced task, try predicting college rankings based on tuition and other variables.
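A rough sketch of the first two analyses, assuming pandas is installed. The column names (`rank`, `type`, `tuition`) and all values are hypothetical and will differ from the Kaggle file.

```python
import pandas as pd

# Hypothetical rows; column names are placeholders.
colleges = pd.DataFrame({
    "rank": [1, 2, 3, 4, 5, 6],
    "type": ["private", "private", "public", "private", "public", "public"],
    "tuition": [60_000, 58_000, 15_000, 55_000, 12_000, 14_000],
})

# Mean and median tuition for each institution type.
by_type = colleges.groupby("type")["tuition"].agg(["mean", "median"])
print(by_type)

# Pearson correlation between rank and tuition (rank 1 is the best rank,
# so a negative value means better-ranked schools tend to cost more).
rank_tuition_corr = colleges["rank"].corr(colleges["tuition"])
print(round(rank_tuition_corr, 2))
```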

Advanced Data Analytics Projects

Ready to take your data skills to the next level? Advanced projects are a way to do just that. They’re all about handling larger datasets, digging into data cleaning and preprocessing, and getting your hands dirty with various tech stacks. It’s a two-in-one deal: you’ll dip your toes into the roles of both a data engineer and a data scientist. Here are some project ideas to consider.

36. Analyzing Google Trends Data

Google Trends, a free service provided by Google, can serve as a treasure trove for data analysts, offering insights into popular trends worldwide. But there’s a hitch. Google Trends does not offer an official API, which makes direct data acquisition a bit challenging. However, there’s a workaround: web scraping. This guide will walk you through the process of using a Python module to scrape Google Trends data.

How you can do it: We would not want to implement a web scraper ourselves. Simply put, it’s too much work. For this project, we will use a Python module to help us scrape the data. Let’s view an example:

# Before anything, install the following module using the commands:

# pip install -U google_trends

from google_trends import daily_trends, realtime_trends

print(realtime_trends(country='US', language='en-US', timezone='-180'))

This code should print out the data in the following format:

[{'title':'title of the trend',

'entity_names':['title', 'of', 'the', 'trend'],

'article_urls':['url.com', 'article.com']}, {'title':'rank two article'}]

You should use an automation service to run the scraper at least once per hour (see: KPOP Melon Music Charts Analysis). Then, you should store the results in a CSV file that you can query later. There are many points of analysis, such as keyword rankings, website rankings for articles, and more.
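One way to store each scrape is sketched below using only the standard library. The function name `append_trends`, the column layout, and the file name are illustrative choices. The sample records only mimic the output format shown above, so in practice you would pass in the list returned by the scraper.

```python
import csv
import tempfile
from datetime import datetime, timezone
from pathlib import Path

FIELDS = ["scraped_at", "rank", "title", "article_urls"]


def append_trends(trends, csv_path):
    """Append one scrape (a list of trend dicts) to csv_path, one row per trend."""
    path = Path(csv_path)
    # Write the header only when the file is new or empty.
    write_header = not path.exists() or path.stat().st_size == 0
    scraped_at = datetime.now(timezone.utc).isoformat()
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if write_header:
            writer.writeheader()
        for rank, trend in enumerate(trends, start=1):
            writer.writerow({
                "scraped_at": scraped_at,
                "rank": rank,
                "title": trend.get("title", ""),
                # Join the URL list so it fits in a single CSV cell.
                "article_urls": "|".join(trend.get("article_urls", [])),
            })
    return len(trends)


# Placeholder data in the shape shown above.
sample = [
    {"title": "title of the trend", "article_urls": ["url.com", "article.com"]},
    {"title": "rank two article"},
]
demo_path = Path(tempfile.gettempdir()) / "trends_demo.csv"
print(append_trends(sample, demo_path), "rows appended to", demo_path)
```

Recording a timestamp and rank with every row is what later lets you chart how a keyword's position changes from hour to hour.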

Taking it a step further:

If you want to make an even more robust project that’s bound to wow your recruiters, here are some ideas to make the scraping process easier to maintain, albeit with greater difficulty in setting up.

The first problem in our previous approach is the hardware issue. Simply put, the automation service we used earlier is moot if our device is off or if it was not instantiated during device startup. To solve this, we can utilize the cloud.

Using a function service (e.g., GCP Cloud Functions or AWS Lambda), you can execute Python scripts in the cloud. To orchestrate the service, you can use a messaging service such as GCP Pub/Sub or AWS SNS. It will alert your cloud functions to run, and you can configure it, together with your provider’s scheduler, to fire at a specified time interval.

Then, once your script successfully scrapes the data, you will need a SQL database instance to store it. The flavor of SQL does not really matter, so you can use one of the managed databases offered by your cloud provider. For example, AWS offers RDS, while GCP offers Cloud SQL.
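The storage step can be prototyped locally before you provision anything. The sketch below uses Python's built-in `sqlite3` with an in-memory database as a stand-in for a cloud SQL instance. The table layout and function names are illustrative assumptions, and a managed database would need its own driver and connection settings.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS trends (
    scraped_at TEXT NOT NULL,
    rank       INTEGER NOT NULL,
    title      TEXT NOT NULL
)
"""


def save_trends(conn, scraped_at, trends):
    """Insert one scrape into the trends table using a parameterized query."""
    conn.execute(SCHEMA)
    rows = [(scraped_at, rank, t["title"]) for rank, t in enumerate(trends, start=1)]
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO trends VALUES (?, ?, ?)", rows)


def title_counts(conn):
    """How often each title appeared across all scrapes, most frequent first."""
    query = (
        "SELECT title, COUNT(*) FROM trends "
        "GROUP BY title ORDER BY COUNT(*) DESC, title"
    )
    return conn.execute(query).fetchall()


conn = sqlite3.connect(":memory:")  # in-memory stand-in for a cloud database
save_trends(conn, "2025-01-01T00:00:00Z", [{"title": "alpha"}, {"title": "beta"}])
save_trends(conn, "2025-01-01T01:00:00Z", [{"title": "alpha"}])
print(title_counts(conn))  # [('alpha', 2), ('beta', 1)]
```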

Once your data is pulled together, you can then start analyzing it and employing analysis techniques to visualize and interpret it.

37. New York Times (NYT) Movie Reviews Sentiment Analysis

Sentiment Analysis is a critical tool in gauging public opinion and emotional responses towards various subjects, such as movies. With a substantial number of movie reviews published daily in well-circulated publications like the NYT, proper sentiment analysis can provide valuable insights into the perceived quality of films and their reception among critics.

How you can do it: As a data source, NYT has an API service that allows you to query their databases. Create an account at this link and enable the ‘Movie Reviews’ service. Then, using your API key, you can start querying using the following script:

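import requests

def query_movie(query='', api_key=''):
    # Search reviews by movie title; passing params lets requests URL-encode the query.
    url = "https://api.nytimes.com/svc/movies/v2/reviews/search.json"
    return requests.get(url, params={"query": query, "api-key": api_key}).json()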

The function searches by movie title and returns the reviews of the matching films. You can then use the review summaries to do sentiment analysis.
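To show the shape of that last step, here is a deliberately simple lexicon-based polarity score that needs no third-party packages. The word lists are toy examples, and the response fields used here (`results`, `display_title`, `summary_short`) are assumptions about the API's JSON that you should check against a real response. For a real project, a library such as TextBlob or VADER would be a better scorer.

```python
import re

# Tiny illustrative word lists; not a real sentiment lexicon.
POSITIVE = {"brilliant", "moving", "charming", "funny", "masterful"}
NEGATIVE = {"dull", "tedious", "clumsy", "bland", "forgettable"}


def polarity(text):
    """Score in [-1, 1]: (positive hits - negative hits) / total hits, 0.0 if none."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)


def score_reviews(response):
    """Map each review's title to the polarity of its summary."""
    return {
        r.get("display_title", ""): polarity(r.get("summary_short", ""))
        for r in response.get("results") or []
    }


# Hand-written stand-in for an API response; the field names are assumptions.
fake_response = {"results": [
    {"display_title": "Film A", "summary_short": "A brilliant, moving debut."},
    {"display_title": "Film B", "summary_short": "Dull and tedious, if briefly funny."},
    {"display_title": "Film C", "summary_short": "A documentary about rivers."},
]}
print(score_reviews(fake_response))
```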

Other NY Times APIs you can explore include the Most Popular API and the Top Stories API.

More Analytics Project Resources

If you are still looking for inspiration, see our compiled list of free datasets which features sites to search for free data, datasets for EDA projects and visualizations, as well as datasets for machine learning projects.

You should also read our guides on the data analyst career path, how to become a data analyst without a degree, and how to build a data science project from scratch, as well as our list of 30 data science project ideas.

You can also check out our blog for more resources like: