Top Data Science SQL Interview Questions: From Basics to Advanced

Introduction: Why SQL Skills Matter in Data Science Interviews

Every great data model, dashboard, or experiment begins with one skill—SQL. Whether you’re applying for a Data Analyst, Machine Learning Engineer, or Data Scientist role, you’ll almost always face SQL questions. And for good reason: SQL is the language of data.

For data scientists, it’s how you explore, clean, and aggregate raw information before using it for analysis or machine learning. From startups to FAANG companies, interviewers test SQL not to check syntax, but to understand how you think with data and how you structure problems, extract insights, and turn messy tables into clarity.

In this guide, we’ll walk you through everything you need to prepare, from basic SELECT and JOIN questions to complex window functions, optimization tricks, and real-world business scenarios. By the end, you’ll have a clear roadmap of what to expect, how to prepare, and how to make SQL one of your strongest assets in data interviews.

SQL Basics: Essential Concepts and Definitions

Before diving into tough interview questions, it’s worth revisiting the fundamentals. Every SQL interview, no matter how advanced is built on a foundation of tables, relationships, and queries. Interviewers often start with these basics to check whether you understand how data is structured and how to extract meaning from it efficiently.

At its core, SQL (Structured Query Language) is designed to store, retrieve, and manipulate data in a relational database. Data is organized into tables, which are similar to spreadsheets, where rows represent records, and columns represent attributes.

Here are some key terms and ideas you’ll want to keep fresh before any interview:

- Tables: Collections of related data organized into rows and columns.

- Primary Key: A unique identifier for each record in a table. For example,

customer_idin a customers table. - Foreign Key: A field that links one table to another. For instance,

customer_idin an orders table connects back to the customers table. - Constraints: Rules applied to maintain data integrity (e.g.,

NOT NULL,UNIQUE,CHECK,DEFAULT). - Data Types: Define the nature of data stored in each column — like

INTEGER,VARCHAR,DATE, orBOOLEAN. - SQL Commands: The bread and butter of SQL interviews. Expect questions around:

SELECT- retrieving data from one or more tablesWHERE- filtering records based on conditionsGROUP BY- aggregating results by one or more columnsHAVING- applying filters on aggregated resultsJOIN- combining data from multiple tablesORDER BY- sorting resultsLIMIT- restricting the number of returned rows

Mastering these basics helps you think structurally about data, which is crucial once queries get more complex. Many interviewers will start with something simple like:

“Write a query to find the total number of orders per customer.”

And then, layer on complexity with joins, filters, subqueries, and aggregations. Having a rock-solid foundation ensures you won’t get tripped up as questions evolve.

If you want to master SQL interview questions, join Interview Query to access our 14-Day SQL Study Plan, a structured two-week roadmap that helps you build SQL mastery through daily hands-on exercises, real interview problems, and guided solutions. It’s designed to strengthen your query logic, boost analytical thinking, and get you fully prepared for your next data science interview.

SQL Interview Questions by Experience Level

SQL interviews are structured to assess how effectively you can analyze, structure, and extract insights from data using queries. The depth and complexity of questions usually depend on your experience level, from checking basic query fluency for beginners to advanced problem-solving and optimization for experienced candidates.

In this section, we’ll go through examples at each stage, beginner, intermediate, and advanced, explaining what each question evaluates, how to approach it, and what interviewers look for in standout answers.

Read more: Top SQL Interview Questions for Data Engineers

Beginner-Level SQL Interview Questions

At the entry level, interviewers focus on core syntax, query logic, and understanding relationships between tables. You’re expected to write clean, readable SQL and show that you grasp how data connects and aggregates.

Find the largest wireless packages sent per SSID.

This question tests time-based filtering and aggregation. It checks your ability to isolate data by timestamp and identify maximum values per group. Filter by time (e.g., first 10 minutes of Jan 1, 2022), group by SSID and device, and compute the maximum number of packages per SSID.

Tip: Always confirm the time zone or timestamp format in real-world datasets, since inconsistent formats can alter results.

Select the 2nd highest salary in the engineering department.

This question tests your understanding of ranking, sorting, and filtering logic. It’s asked to verify if you can find the second distinct value while handling duplicates. You can use

DENSE_RANK(), a subquery withMAX(), orLIMIT + OFFSETdepending on the platform. This is commonly used in performance benchmarking and reporting second-best metrics.Tip: Mention that

DENSE_RANK()handles ties cleanly whileROW_NUMBER()does not, showing awareness of subtle ranking differences.Write a query to answer multiple transaction-related questions.

This question tests multi-step aggregation and filtering logic. It’s asked to evaluate whether you can extract various insights, like counts, statuses, and revenue in a single query. To solve this, use multiple aggregations such as

COUNT,MAX, and conditional filters withCASE WHEN. This models real-world dashboards where diverse KPIs are presented together.Tip: Structure your query clearly using aliases and such readable queries are easier to explain during interviews.

Determine how many different users gave a like on June 6, 2020

This question tests event filtering and deduplicated counting. It’s asked to verify if you can isolate actions on a specific date. Filter with

WHERE action = 'like' AND date = '2020-06-06'and useCOUNT(DISTINCT user_id). This is used in campaign analysis or feature adoption tracking.Tip: Specify whether the date column includes a timestamp, if so, use

DATE()orCAST()to extract the date part.Find the largest salary grouped by department.

This question tests grouped maximum value queries. It’s asked to check your ability to extract top values per category. Use

GROUP BY departmentwithMAX(salary)to return the highest-paid employee per group. This pattern is frequent in compensation reports and budget planning.Tip: Add

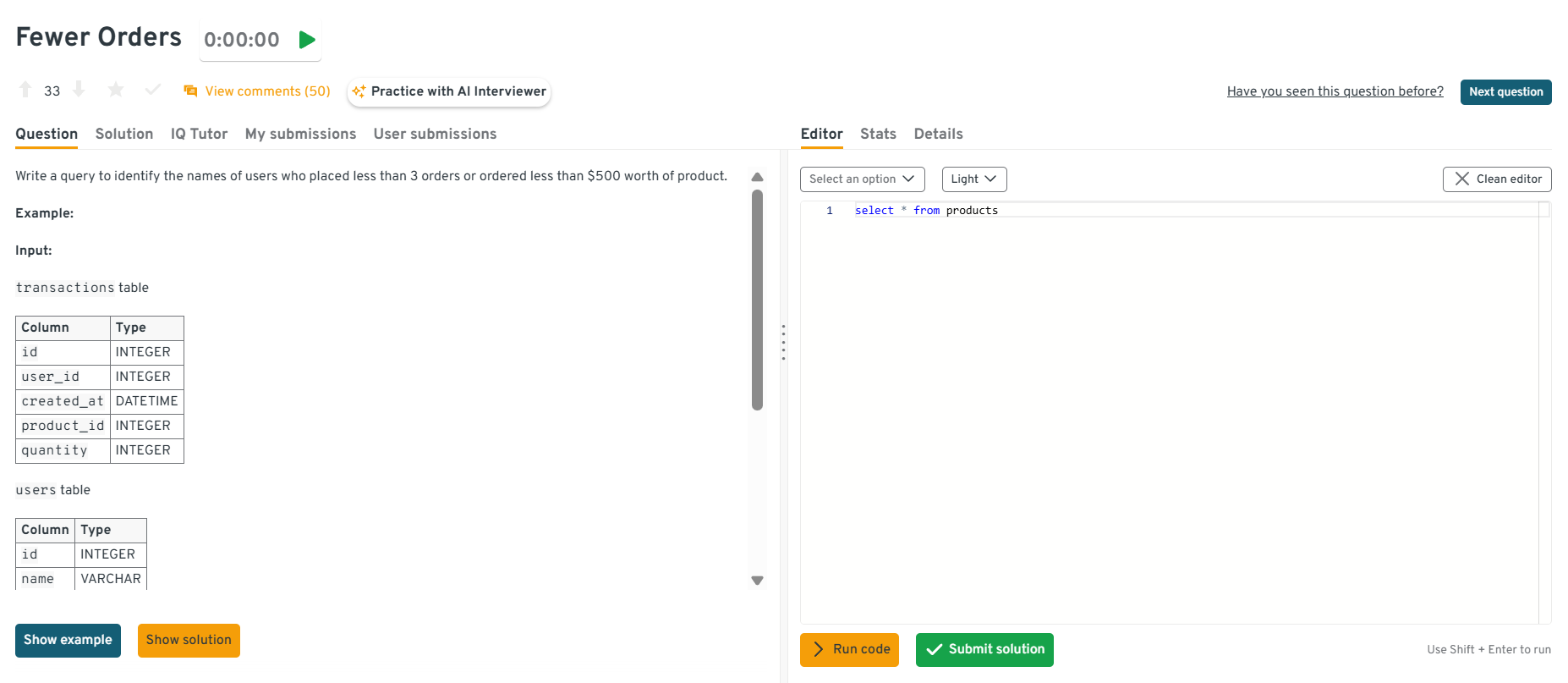

employee_namewith a window function if you need to identify the top earner, not just the salary.Identify users with fewer than 3 orders or total spend under $500.

This question tests conditional logic inside grouped filters. It’s asked to evaluate your ability to apply multiple thresholds in a

HAVINGclause. Group by user ID, count orders, sum spend, and filter withHAVING count < 3 OR sum < 500. This is used in retention models and campaign targeting for low-spend users.Tip: Emphasize that

HAVINGfilters grouped results, whileWHEREfilters individual rows, since it’s a common beginner confusion.

Explore the Interview Query dashboard that lets you practice real-world SQL and data science interview questions in a live environment. You can write, run codes, and submit answers while getting instant feedback, perfect for mastering data science problems across domains.

Return total distance traveled by each user.

This question tests joins and numerical aggregations. It’s asked to confirm your ability to sum up user activity metrics. Join user and ride tables, group by user, and compute

SUM(distance). This is common in logistics, delivery, and transportation analytics.Tip: Always check for units like miles, kilometers, or meters, to ensure consistent interpretation of distance values.

Write a query to find the total number of customers in a customers table.

This simple question checks your ability to use aggregate functions and basic SELECT syntax. You’d use

SELECT COUNT(*) FROM customers;. A strong answer might include how you’d handleNULLvalues or potential filters (e.g., counting only active users).Tip: Mention that

COUNT(column_name)excludes NULLs whileCOUNT(*)counts all rows, which is a small but insightful distinction that impresses interviewers.Retrieve the top 5 highest-paid employees from the employees table.

Here, the interviewer is testing your understanding of sorting and limiting results. You’d write something like

SELECT * FROM employees ORDER BY salary DESC LIMIT 5;. You can stand out by briefly noting that syntax likeTOP 5applies in SQL Server instead ofLIMIT.Tip: Clarify which SQL dialect you’re using, small syntax awareness shows professional maturity.

Find all customers who haven’t placed any orders.

This checks your knowledge of LEFT JOIN and filtering for NULLs. You’d join the customers and orders tables and look for customers with no matching order IDs.

SELECT c.customer_id, c.name

FROM customers c

LEFT JOIN orders o ON c.customer_id = o.customer_id

WHERE o.order_id IS NULL;

Tip: Explain why LEFT JOIN (not INNER JOIN) is used here, it ensures customers without matching rows in orders still appear.

Want to sharpen your SQL skills for real interviews? Start with our SQL Question Bank and practice the SQL problems that top companies use to evaluate candidates.

Intermediate-Level SQL Interview Questions

At this level, questions start testing multi-table joins, grouping, conditional logic, and subqueries. Interviewers want to see if you can reason through data relationships and handle analytical problems similar to those faced by data analysts or junior data scientists.

Get the current salary for each employee.

This question tests anomaly detection with SQL. It’s specifically about identifying mismatched or inconsistent salary records due to ETL errors. To solve this, use joins or window functions to check duplicates, missing entries, or unexpected distributions. In business, this ensures data quality in payroll and finance systems.

Tip: Highlight how you’d use

ROW_NUMBER()to isolate unexpected duplicates, showing practical ETL troubleshooting skills.Write a query that finds the third purchase of every user.

This question tests ranking with

ROW_NUMBER(). It’s specifically about isolating each customer’s third purchase. To solve this, partition bycustomer_id, order bypurchase_date, and select whererow_number = 3. In practice, analysts use this for lifecycle and retention insights.Tip: Mention that you’d handle users with fewer than three purchases carefully to avoid returning empty results.

-

This question tests joins and comparisons. It’s specifically about identifying projects where actual spend exceeds budget. To solve this, join projects to expenditures and filter where spend > budget. In real-world analytics, this supports cost control and project management.

Tip: Explain that adding rounding or tolerance margins (like 1–2%) reflects real-world budget flexibility.

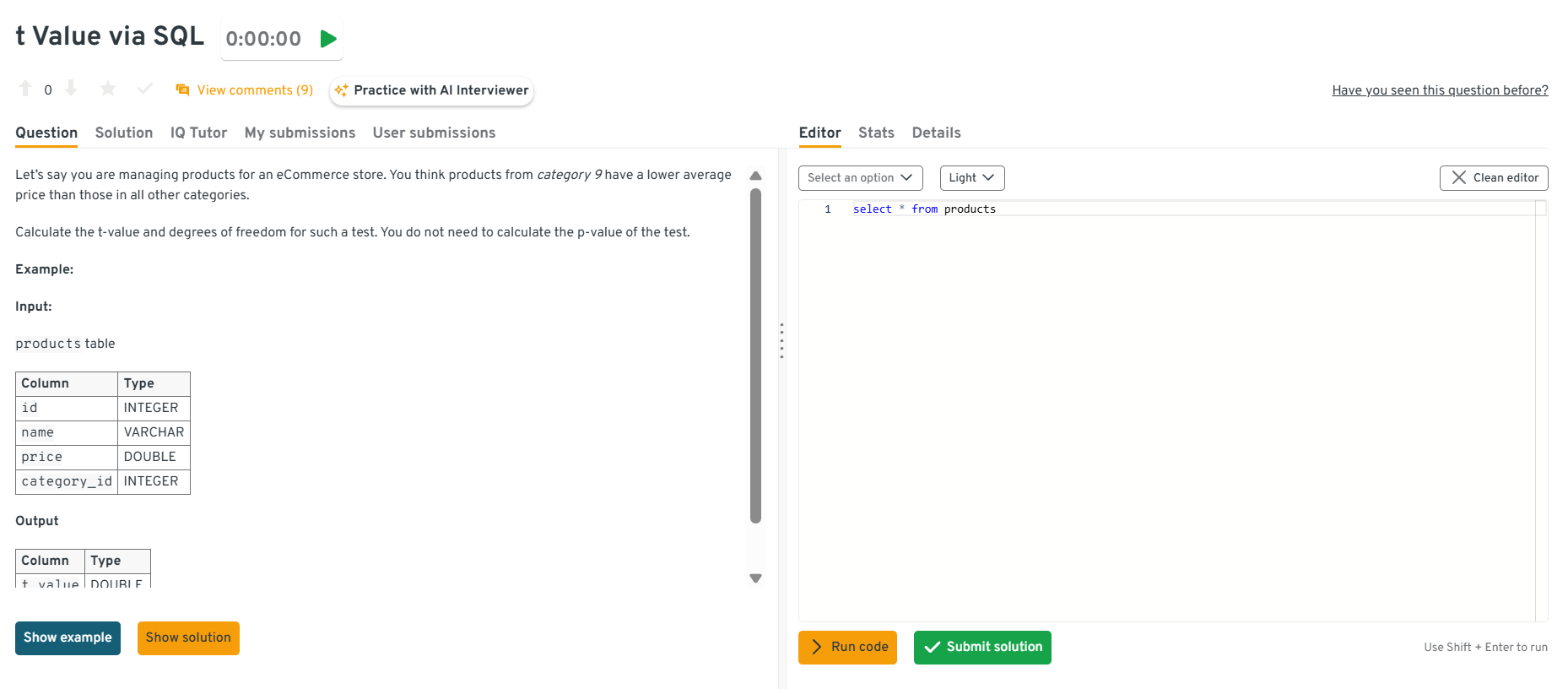

-

This question combines SQL with statistical logic, assessing analytical depth. You’d calculate group means, variances, and counts for category 9 vs. others using aggregates, then compute the t-statistic via Welch’s formula.

Tip: Note that you’d validate data distribution assumptions (e.g., excluding outliers) before testing, showing that you blend SQL with statistical judgment.

Explore the Interview Query dashboard that lets you practice real-world SQL and data science interview questions in a live environment. You can write, run codes, and submit answers while getting instant feedback, perfect for mastering data science problems across domains.

Which SQL logic samples every fourth record from a transaction table ordered by timestamp?

This question tests window functions, row numbering, and ordered sampling logic. It’s about verifying whether you can generate and filter sequential records without relying on randomization. To solve it, order the transactions by date, assign a row number to each record, and then pick only every fourth one by filtering where that row number divides evenly by 4.

Tip: Mention that

ROW_NUMBER()orNTILE()functions keep order intact and prevent uneven distribution, which shows you understand deterministic sampling.-

This question tests window functions, aggregation, and ratio calculations. The goal is to assess how well you summarize multi-year data while maintaining precision. You’d aggregate total revenue by year, identify the first and last years using

MINandMAX, sum those years’ revenue, divide by total lifetime revenue, and round the result. It’s a common analysis for measuring early- and late-stage performance in customer or product lifecycles.Tip: Explain that combining everything in one query avoids multiple scans and improves efficiency, showing that you think in terms of query optimization.

-

This question tests join behavior comprehension and dataset sizing logic. Interviewers use it to see if you understand how each join type affects row counts. You’d first create a

top_adsCTE that selects the three most-popular campaigns, then run separate queries for each join type: anINNERjoin that returns only matching rows (three),LEFTandRIGHTjoins that retain unmatched rows from one side, and aCROSSjoin that produces a Cartesian product of both sets. The key is explaining why row counts differ.Tip: Mention that estimating output size before executing heavy joins is part of good query hygiene, it prevents runtime surprises on large production data.

Find the top 3 products by total sales revenue.

This tests your ability to combine aggregation with sorting and possibly joining product and sales tables.

SELECT p.product_name, SUM(s.amount) AS total_sales

FROM sales s

JOIN products p ON s.product_id = p.product_id

GROUP BY p.product_name

ORDER BY total_sales DESC

LIMIT 3;

You can stand out by mentioning that adding an index on product_id or amount can optimize large dataset queries.

Tip: Always mention performance when working with aggregations, it shows real-world awareness.

9 . Find customers who placed orders in both 2023 and 2024.

This question tests conditional grouping and set logic. You can use a subquery or self-join approach to identify customers present in both years. To solve this, filter orders by year, then group by customer and check for two distinct years.

Tip: Mention that you’d use HAVING COUNT(DISTINCT YEAR(order_date)) = 2, an elegant one-liner solution that interviewers appreciate.

10 . Retrieve the percentage of customers who made repeat purchases.

This tests analytical thinking and division in SQL. Calculate distinct customers with more than one order, divided by total customers.

Tip: Explain how integer division can cause issues in some SQL dialects, like cast values to decimals for accurate percentage output.

Struggling with tricky CASE WHEN logic or GROUP BY filters? Master them with our step-by-step SQL Learning Path to go from basic joins to advanced optimization methods.

Advanced-Level SQL Interview Questions

For senior data roles, SQL interviews become less about syntax and more about efficiency, scalability, and analytical depth. Expect questions on window functions, optimization, CTEs, and real-world metrics.

Find the top 3 customers with the highest total purchase amount each month.

This question tests your mastery of window functions and partitioning. Use SUM(amount) grouped by customer and month, then apply

RANK()orDENSE_RANK() over (PARTITION BY month ORDER BY SUM(amount) DESC)to find the top three.Tip: Explain how ranking functions differ in handling ties. Showing that you’d choose

DENSE_RANK()to include all customers with the same total builds credibility.Optimize a query that’s running slowly when joining multiple large tables.

This is a conceptual question about query optimization and indexing. Discuss using indexes on join keys, pushing filters before joins, avoiding correlated subqueries, and analyzing performance with

EXPLAIN.Tip: Always tie your answer to real-world tradeoffs, like mention that indexes improve read performance but can slow down inserts/updates, demonstrating practical maturity.

-

Group search events by

rating, calculatectr = SUM(has_clicked)/COUNT(*), and return counts plus CTR per rating to enable a chi-square or logistic regression. Including confidence intervals or filtering bypositionhelps control confounders. Strong answers mention that a rating-CTR relationship might be spurious without controlling for rank or query intent and suggest exporting raw aggregates for statistical testing offline.Tip: Emphasize linking SQL output to a downstream statistical test, showing that you understand how SQL fits within a full analytical workflow.

How would you surface the five product pairs most frequently bought together by the same customer?

Aggregate each user’s distinct products into sets, explode them into unordered pairs via a self-join or

ARRAYunnest, group by(least(p1,p2), greatest(p1,p2)), count co-occurrences, and pick the top five withp2alphabetically first for ties. Efficient implementations pre-deduplicate(user_id, product_id)to cut pair generation size and may leverage approximate counts or partitioned tables for billion-row scale. Discussion of bloom filters, partition pruning, and batch processing shows senior-level thinking.Tip: Mention that deduplication before self-joins reduces computational cost dramatically and prevents inflated counts, which shows practical performance awareness.

Which January-2020 sign-ups moved more than $100 of successful volume in their first 30 days?

Join

users(filtered to January-2020 signups) withpaymentstwice—once as sender, once as recipient—keepingpayment_state = 'success'andpayment_datewithin 30 days of signup, sum amounts per user, and count those above $100. A union of the two roles preserves sign, and windowing byuser_idplus a date filter ensures correctness. Good answers highlight indexes on(user_id, payment_date)and justify unions over OR conditions for planner efficiency.Tip: Point out that structuring filters before joins reduces scan size and improves performance, a practice interviewers look for at advanced levels.

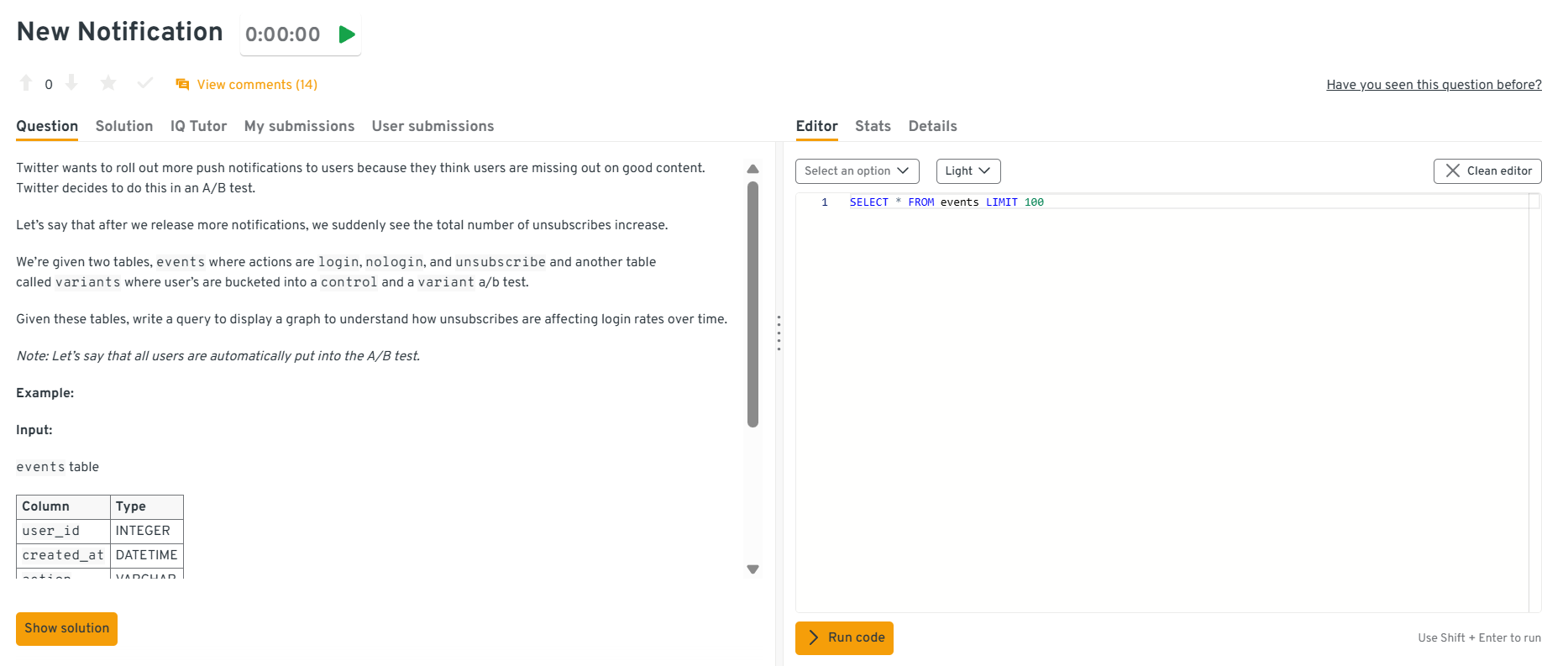

Write a query to track how unsubscribes affect login rates over time

This question tests time-series cohorting with window functions, selective predicates, and partition-friendly indexes. It’s asked to see if you can quantify the downstream effect of unsubscribes on logins by cohort/date while keeping scans bounded. To solve this, bucket users by unsubscribe week, compute weekly login rates with

SUM(CASE …)/COUNT(*)andDATE_TRUNC, and back it with(user_id, event_date)indexes; compare plans and materialize intermediate cohorts if needed. In the real world, these insights guide notification fatigue policy and rate limits without burning warehouse credits.Tip: Explain that using materialized cohorts for unsubscribe data helps scale analysis efficiently over long time windows.

Explore the Interview Query dashboard that lets you practice real-world SQL and data science interview questions in a live environment. You can write, run codes, and submit answers while getting instant feedback, perfect for mastering data science problems across domains.

Write a query to analyze how user activity influences purchasing behavior

This question tests CTE vs materialization cost and join selectivity when modeling conversion funnels. It’s asked to see if you can compute activity-to-purchase lift without massive shuffles. To solve this, pre-aggregate activity per user/day, materialize to a temp table with indexes on

(user_id, event_date), then join to purchases and compute uplift. In practice, efficient funnel queries drive marketing and product decisions with timely SLAs.Tip: Discuss comparing execution plans for inline CTEs versus temporary tables, showing awareness of query planning and caching.

Compute cumulative users added daily with monthly resets

This question tests advanced window framing and partition strategy on large time-series datasets. It evaluates your ability to compute per-month cumulative counts efficiently. To solve this, use

SUM(1) OVER (PARTITION BY DATE_TRUNC('month', created_at) ORDER BY created_at ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW)and cluster bycreated_atfor better performance. In BI, this powers growth trend reporting with minimal recomputation.Tip: Mention verifying partition boundaries with sample outputs to show that you understand how frame definitions impact accuracy and performance.

Return the second-longest flight for each city pair

This question tests ranking within groups. It’s specifically about normalizing city pairs (A-B = B-A), computing durations, and finding the 2nd-longest. To solve this, store sorted city names as

city_a,city_b, computeduration_minutes, then applyROW_NUMBER() OVER (PARTITION BY city_a, city_b ORDER BY duration_minutes DESC)and filter where rank=2. Airlines use this to plan backup aircraft allocations for long-haul routes.Tip: Clarify how you’d normalize city pairs using

LEAST()andGREATEST(), this shows precision and data consistency awareness.Detect duplicate records in a large transactions table.

This checks your understanding of grouping and data quality checks.

SELECT transaction_id, COUNT(*)

FROM transactions

GROUP BY transaction_id

HAVING COUNT(*) > 1;

Explain how duplicates affect reporting accuracy and how to enforce uniqueness using constraints or ETL rules.

Tip: Note that you’d add a unique constraint or checksum in ETL to prevent duplicates from being inserted in the first place.

Watch Next: Meta SQL Data Scientist Interview: Calculate Notification Conversion Rates

In this mock data scientist interview, Vivian, a lead data scientist tackles a real SQL problem from Meta along with Chinmaya as the interviewer, determining which type of notification drives the most conversions. The video walks through analyzing multiple tables, building a logical attribution model, and writing clean, efficient SQL using CTEs and window functions. It’s a great resource for learning how to approach complex, ambiguous SQL interview questions and explain your reasoning clearly under pressure, which are key skills for any data science interview.

Scenario-Based and Real-World SQL Interview Questions

Scenario-based SQL questions are designed to evaluate your ability to think like a data professional, not just someone who knows syntax, but someone who understands how data reflects business operations.

In these questions, interviewers often present realistic situations drawn from analytics, marketing, finance, or product data. You might be asked to identify churned users, calculate revenue growth, evaluate an A/B test, or detect data inconsistencies in a sales pipeline. The goal is to see how you interpret messy, imperfect data and turn it into actionable insights.

Read more: SQL Interview Questions for Data Analysts

Find the score closest to a benchmark in SAT results

You’ll often get asked to find the value closest to a target, like a benchmark SAT score. The goal is to identify which student’s score is nearest to the target. You can calculate the absolute difference with

ABS(score - benchmark), order by that difference, and take the first record. It’s common in education analytics when schools match students to cutoff scores for scholarships or programs. If multiple scores are equally close, return all of them so you don’t miss ties.Tip: Always check data types and rounding, since an integer vs. float mismatch can change which score appears “closest.”

Write a query to detect overlapping subscription periods

Here, you’re trying to find customers who had two active subscriptions at once — a classic data consistency issue. The best way is to self-join on customer ID where one subscription’s start date is before the other’s end date and vice versa. Companies use this to detect double billing or overlapping renewals. Make sure to exclude the same record by checking

subscription_id != other.subscription_id.Tip: Null end dates can mess up comparisons, use

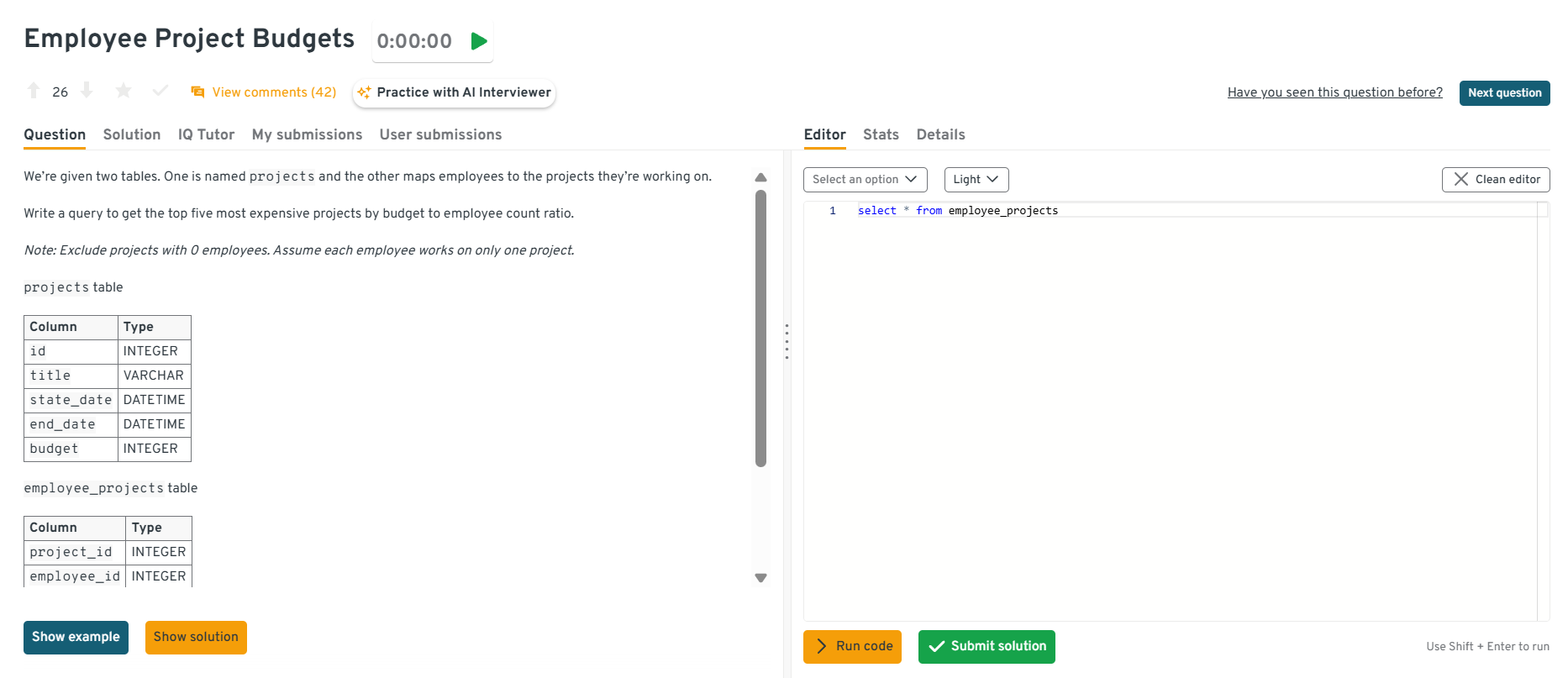

COALESCE(end_date, CURRENT_DATE)when handling ongoing subscriptions.Write a query to aggregate employee allocations against project budgets

In this question, you’re figuring out whether total employee allocations stay within project budgets. You can join employees to their projects, sum up allocation hours per project, and compare that against each project’s budget. This kind of query helps operations teams flag over-allocated projects before costs spiral.

Tip: Always replace missing allocation values with zero using

COALESCE(), otherwise totals will look smaller than they are.

Explore the Interview Query dashboard that lets you practice real-world SQL and data science interview questions in a live environment. You can write, run codes, and submit answers while getting instant feedback, perfect for mastering data science problems across domains.

List accounts that were closed in a timeframe

This one’s about tracking churn over a specific time period. You’d filter accounts where

status = 'closed'andclosure_datefalls between your start and end dates. It’s a simple query but very powerful. Banks and SaaS companies use it to monitor attrition patterns. Grouping results by month or week makes trends clearer to stakeholders.Tip: Convert all timestamps to a consistent time zone so closures are recorded accurately, especially if you’re dealing with global data.

-

Here, interviewers test your grasp of grouping and filtering in analytics queries. Filter the dataset using a

WHEREclause for the specific date, then group bycarrierandcountry, counting confirmation responses withCOUNT()or conditional aggregation. This tests both SQL fundamentals and business reasoning, ensuring you can deliver clear insights for communication analytics. Edge cases may include missing response records or time zone mismatches.Tip: Mention using a

CASE WHEN status = 'confirmed' THEN 1 ENDexpression insideCOUNT()to handle mixed statuses cleanly. -

This question evaluates your ability to use window functions for behavioral metrics. You can calculate consecutive visit streaks by comparing event dates with

LAG()and resetting counts when a break occurs, then aggregate by user to find the longest streak. This problem tests analytical thinking, partition logic, and efficient event ordering, all key in product analytics pipelines.Tip: Emphasize handling edge cases where users skip days or have multiple events on the same day since both can break streak logic if not managed properly.

Write a query to select the top 3 departments by average salary

This question tests ranking and aggregation. It’s specifically about computing average salary per department and selecting the top three. To solve this, group by department, compute

AVG(salary), then applyORDER BYandLIMIT. In real analytics, this reflects benchmarking and compensation analysis across groups.Tip: Mention using

ROUND(AVG(salary), 2)for cleaner output and note that adding an index ondepartment_idimproves aggregation speed in larger datasets.Find the month_over_month change in revenue for the year 2019.

This question tests time-based windowing. You’re looking to measure growth momentum by comparing revenue each month to the previous one. To solve this, group revenue by month using

DATE_TRUNC('month', order_date)and useLAG(SUM(revenue)) OVER (ORDER BY month)to compute the difference, then divide by the previous month’s revenue for a percent change. This is one of the most common metrics in finance and e-commerce analytics.Tip: Clarify that you’d filter out the first month since it has no prior comparison, since such clean handling of edge cases always impresses interviewers.

Write a query to get the number of friends of a user that like a specific page

This question tests joins across relationship and action tables. It’s specifically about intersecting a user’s friend list with a page-likes table. To solve this, join the friendships table to

page_likesonfriend_idand filter by the page, thenCOUNT(*)(orCOUNT DISTINCT friend_id). In practice, this supports social graph analytics and targeted growth/virality tracking.Tip: Mention using

COUNT(DISTINCT friend_id)instead ofCOUNT(*)to prevent overcounting when multiple actions exist per friend.Identify customers whose average order value dropped by more than 30% quarter-over-quarter.

This question combines window functions with business sense. Calculate the average order value per customer and quarter, use

LAG()to compare it with the previous quarter, and flag those with a drop of more than 30%. It’s often used in e-commerce or SaaS churn prediction.Tip: Filter out customers missing consecutive quarters to avoid false drop signals, incomplete data can distort trends.

Need 1:1 guidance on your interview strategy? Explore Interview Query’s Coaching Program that pairs you with mentors to refine your prep and build confidence.

Watch Next: Three Tricky SQL Interview Questions with Andrew

In this mock SQL data science interview, Andrew from Data Leap Tech walks through real SQL problem-solving strategies on the live MySQL platform—from filtering logic and joins to handling ambiguous business questions, while demonstrating how clear communication and reasoning can set candidates apart. This walkthrough highlights practical problem-solving techniques you can apply in your own interview prep.

Advanced SQL Concepts: Optimization, Performance, and Modern Features

As data systems grow in size and complexity, SQL has evolved far beyond simple joins and aggregates. Modern data science interviews now explore advanced SQL techniques that show how well you can handle large datasets, write efficient queries, and leverage newer language capabilities to solve analytical problems.

Interviewers want to see whether you understand the deeper mechanics of SQL, from how data is stored and accessed to how modern features can make your queries more expressive and powerful.

Read more: How to Optimize SQL Query with Multiple JOINs

Let’s look at some of the most common advanced concepts you’ll encounter in data science SQL interviews:

Common Table Expressions (CTEs)

CTEs, defined using the WITH clause, help you write cleaner and more readable queries by breaking complex logic into reusable parts. They’re particularly useful for analytical pipelines where multiple transformations or aggregations are chained together.

Instead of writing nested subqueries, you can define logical steps in sequence:

WITH monthly_sales AS (

SELECT customer_id, SUM(amount) AS total_sales, DATE_TRUNC('month', order_date) AS month

FROM orders

GROUP BY customer_id, DATE_TRUNC('month', order_date)

)

SELECT month, AVG(total_sales) AS avg_sales_per_customer

FROM monthly_sales

GROUP BY month;

A well-written CTE improves both readability and debugging efficiency, and interviewers appreciate when candidates use them to explain multi-step logic clearly.

Tip: Mention that CTEs can sometimes reduce performance in older databases since they may not be optimized like subqueries. Demonstrating awareness of trade-offs shows maturity.

Window Functions

Window functions let you perform calculations across sets of rows without collapsing them into a single output. They’re indispensable in data science interviews for ranking, running totals, moving averages, and cohort analyses.

Example: calculating the rank of each product by revenue within its category:

SELECT

category_id,

product_id,

SUM(sales) AS total_sales,

RANK() OVER (PARTITION BY category_id ORDER BY SUM(sales) DESC) AS rank_within_category

FROM sales

GROUP BY category_id, product_id;

Interviewers love window function questions because they test both logic and interpretation. A strong answer explains not just how the function works, but why it’s useful, for example, identifying top-performing products or tracking customer behavior over time.

Tip: Clarify the difference between RANK(), DENSE_RANK(), and ROW_NUMBER(), subtle details like handling ties can set your answer apart.

JSON and Semi-Structured Data

Modern databases like PostgreSQL, Snowflake, and BigQuery now support JSON columns, allowing you to query nested or semi-structured data directly. This feature is increasingly common in data science interviews, as real-world datasets often blend structured and unstructured information.

For example, extracting values from a JSON field in PostgreSQL:

SELECT

user_id,

details->>'country' AS country,

details->'preferences'->>'theme' AS user_theme

FROM users;

You might also be asked to filter or aggregate based on JSON attributes, such as counting users by a nested field or flattening arrays. Demonstrating comfort with JSON functions (JSON_EXTRACT, ->, ->>, JSON_VALUE) shows you can adapt SQL skills to modern data architectures.

Tip: Emphasize that querying JSON directly is powerful for exploratory analysis, but for large-scale analytics, normalizing data into relational tables is often more performant.

Stored Procedures and Triggers

Although less common in pure data science interviews, stored procedures and triggers occasionally appear in data engineering or production analytics roles. However, understanding them conceptually (even without writing complex code) shows that you can think about SQL as part of an end-to-end system, not just a query interface.

Stored procedures are precompiled SQL blocks used to automate workflows like ETL jobs, data validation, or scheduled reports. Triggers run automatically when specific database events occur, such as inserts or updates. They’re best for enforcing data integrity or updating summary tables.

Tip: If asked about stored procedures, discuss how they can be used for repeatable ETL logic or automated anomaly detection, connecting it to real business value rather than just syntax.

Error Handling and Performance Tuning

Advanced SQL work also involves diagnosing and improving slow queries. Knowing how to interpret an EXPLAIN or EXPLAIN ANALYZE plan, understanding how indexes affect performance, and spotting unnecessary computations can make a big difference.

You can stand out by describing your step-by-step troubleshooting approach:

- Check join order and push filters early to reduce data volume.

- Review indexing strategy to ensure columns in

WHERE,JOIN, orORDER BYclauses are indexed appropriately. - Replace correlated subqueries with joins or CTEs.

- Use approximate functions (like

APPROX_COUNT_DISTINCT) for large datasets where precision isn’t critical.

Tip: Interviewers appreciate candidates who balance correctness with performance, so explain how you’d measure trade-offs between speed, accuracy, and maintainability.

Interviewing at Meta, Amazon, or Stripe? Use our curated company specific guides (Meta SQL Guide, Amazon SQL Questions, Stripe Interview Questions) to prep like the pros.

Watch Next: Breaking Down an Oracle SQL Interview Question

In this video, watch how Andrew and Jay go through a SQL problem asked by Oracle identifying users who are currently engaged with a campaign while ensuring they have never been bored by that same campaign in the past. The video covers how to structure subqueries, use left joins to isolate past behavior, filter on campaign-level conditions, and reason through temporal logic in event tables. It’s a practical example of how to translate business rules into SQL, making it especially useful for data science candidates preparing for SQL questions.

SQL Dialects and RDBMS: Differences That Matter in Interviews

Not all SQL is created equal. While the core syntax remains consistent across databases, different RDBMS platforms (Relational Database Management Systems) have unique features, functions, and optimizations. In interviews, recruiters often ask which system you’ve worked with, like MySQL, PostgreSQL, SQL Server, or even NoSQL to gauge your practical exposure.

Understanding these differences helps you adapt your answers to the interviewer’s tech stack and avoid confusion when discussing query behavior, indexing, or advanced features.

Here’s a quick comparison of the most common SQL dialects you might encounter:

| Feature / Aspect | MySQL | PostgreSQL | SQL Server | NoSQL (e.g., MongoDB) |

|---|---|---|---|---|

| Use Case | Web apps, startups, OLTP systems | Advanced analytics, enterprise data, research | Enterprise applications, BI systems | Unstructured or semi-structured data (JSON, documents) |

| Query Syntax | Follows ANSI SQL with some limitations | Closest to full SQL standard | Proprietary T-SQL extensions | Uses query objects or APIs instead of SQL |

| Performance | Fast for read-heavy workloads; limited parallelism | Strong with complex queries and large joins | Highly optimized for transactional consistency | High scalability, eventual consistency |

| Joins & CTEs | Supports standard joins; CTE support added later | Robust support for recursive CTEs and window functions | Full support for CTEs and advanced joins | Typically unsupported; joins simulated in application logic |

| Window Functions | Limited compared to PostgreSQL | Comprehensive implementation | Full implementation in recent versions | Not applicable |

| JSON Support | Partial via JSON_EXTRACT() |

Native JSONB type with indexing and operators | JSON functions via OPENJSON() |

Native - data is stored as JSON by default |

| Extensions & Functions | Fewer built-in analytical extensions | Extensive functions (e.g., PostGIS, full-text search) | Integrated analytics and business intelligence features | Flexible schema, no joins or constraints |

| Transactions & Concurrency | Good support, but limited ACID tuning | Excellent ACID compliance and concurrency control | Strong transactional support | Depends on specific database and configuration |

| Popularity in Data Science | Common for ETL pipelines and small datasets | Preferred for analytics, research, and ML integrations | Often used in corporate data warehouses | Used for event data, logs, and flexible schemas |

Tip: When discussing SQL dialects in interviews, don’t just state which one you know, explain how you adapted to it. For example, mention how you used PostgreSQL’s JSONB for flexible analytics or optimized indexes differently in MySQL vs. SQL Server. That practical awareness often makes a stronger impression than technical memorization.

Interview Strategies: Answering SQL Questions Effectively

Cracking SQL interviews isn’t only about writing correct queries. It’s about showing how you analyze a problem, reason through data, and communicate your approach clearly. A thoughtful, step-by-step explanation often matters more than finishing first.

Read more: How to Prepare for Data Science Interviews

Here are practical strategies to help you approach SQL interview questions with confidence and clarity:

Start by restating the question.

Begin by summarizing what the interviewer asked, using your own words. This confirms that you understand the problem and gives you a few seconds to organize your thoughts. For instance, if asked to find top-selling products, say, “So you’d like me to find the top products by total sales amount across all transactions?”

Tip: Restating questions not only prevents misunderstandings but also sets a collaborative tone.

Outline your approach before typing.

Describe your plan step-by-step before jumping into SQL. Mention which tables you’ll use, how you’ll join them, and which filters or aggregates will apply. For example, explain, “I’ll first join the

ordersandproductstables onproduct_id, then group by category to calculate total revenue per category.”Tip: This habit shows structured thinking and mirrors how data professionals discuss logic before implementation.

Handle data assumptions openly.

Interviews often involve incomplete datasets. State what assumptions you’re making, for instance, whether

NULLvalues exist, or if there can be multiple rows per ID. Example: “If there are customers without purchase records, I’ll use a LEFT JOIN so they’re still included in the result.”Tip: Verbalizing assumptions shows that you understand real-world data imperfections and know how to handle them.

Explain edge cases and alternatives.

Mention how your query behaves with ties, duplicates, or missing information. If ranking employees by salary, note what happens when two people earn the same amount. For instance, “Using

DENSE_RANK()will handle ties correctly, ensuring employees with the same salary get the same rank.”Tip: Thinking about corner cases signals attention to detail and a deeper analytical mindset.

Keep queries readable and structured.

Use proper indentation, clear aliases, and spacing between clauses. For example:

SELECT c.name, COUNT(o.order_id) AS total_orders

FROM customers c

LEFT JOIN orders o ON c.customer_id = o.customer_id

GROUP BY c.name;

This instantly communicates your professionalism.

Tip: Interviewers care about clarity because readable SQL translates to maintainable production code.

Validate your output logically.

Don’t just write the query, interpret what the output means. For instance, if your result shows that 80% of sales come from one region, explain why that might be the case or what follow-up queries you’d run.

Tip: Discussing results makes you stand out as someone who connects code with business insight.

Stay calm under pressure.

It’s normal to hit errors or draw a blank. Instead of freezing, talk through what you’re testing or verifying. For example, “I think my join condition might be off; I’ll double-check the foreign key mapping.”

Tip: Interviewers appreciate composure and curiosity, such as showing how you debug is often more valuable than getting it right on the first try.

Struggling with take-home assignments? Get structured practice with Interview Query’s Take-Home Test Prep and learn how to ace real case studies.

Portfolio Presentation for SQL and Data Science Roles

A strong portfolio can make all the difference in standing out from other candidates. It shows not only what you know but how you apply your SQL and data skills to real-world problems. Interviewers want to see evidence of clear thinking, clean execution, and measurable impact, and not just code snippets or dashboards.

Here’s how to present your SQL portfolio effectively:

Show end-to-end projects, not isolated queries.

Instead of uploading random SQL scripts, focus on complete projects that demonstrate the full data workflow of collecting, cleaning, analyzing, and visualizing data. For example, a sales analytics dashboard or customer churn analysis using SQL and Python.

Tip: Briefly describe the goal, dataset, and your key takeaways in a few sentences before showing code.

Highlight SQL’s role in each project.

Interviewers want to see how SQL fits into your analysis, like whether you used it to aggregate data, perform feature engineering, or validate results before modeling.

Tip: Use small visuals or schema diagrams to explain your database design or query flow.

Emphasize clarity, documentation, and readability.

Well-commented queries are easier to understand and review. Even a simple

READMEfile describing your approach adds professional polish.Tip: Explain complex joins, CTEs, or window functions in plain language alongside the code.

Include performance insights or optimizations.

If you optimized a query using indexes, CTEs, or partitioning, call it out. This shows practical knowledge that goes beyond theory.

Tip: A short “Before and After” performance comparison helps your project stand out to technical reviewers.

Host your work on a shareable, organized platform.

Use GitHub, Kaggle, or a personal website to display your portfolio. Include links to datasets, notebooks, and dashboards where possible.

Tip: Organize projects by theme (e.g., Marketing Analytics, Healthcare Data, or ETL Pipelines) for easy navigation.

Salary Negotiation and Career Growth Tips for Data Scientists

Cracking SQL and data science interviews is only part of the journey, the next step is knowing your worth. Data professionals who combine strong SQL foundations with analytical and technical versatility are in high demand, and your ability to translate data into insights directly influences your compensation.

Read more: Data Scientist Salaries

Here’s how to approach salary discussions, evaluate market data, and plan your career growth with confidence.

Understand Typical Salary Ranges

Salary ranges for SQL and data science roles vary depending on experience, industry, and technical specialization.

- Entry-Level Data Scientist: $75K–$95K (U.S.)

- Mid-Level Data Scientist: $100K–$125K

- Senior Data Scientist: $125K–$160K+

- Lead Data Scientist: $150K–$190K+

These numbers fluctuate by region and company size, startups may offer less base pay but more equity or flexibility, while large tech firms offer structured salary bands and bonuses.

Tip: Use tools like Interview Query Salary Explorer, Levels.fyi, and Glassdoor to benchmark your compensation range before entering negotiations.

Know What Drives Your Value

Your market value increases with your ability to connect SQL work to measurable business outcomes. Candidates who combine SQL with Python, R, cloud tools, or machine learning typically command higher pay. Companies value professionals who can design efficient data pipelines, optimize queries, and communicate insights that drive decisions.

Tip: Mention specific results, for example, “I optimized SQL queries that reduced dashboard load time by 40%”, to show measurable impact during salary discussions.

Evaluate the Entire Compensation Package

Salary is just one piece of the puzzle. Evaluate total compensation, including performance bonuses, stock options, insurance, and professional development benefits. Some companies also offer annual learning stipends or reimbursements for certifications in SQL.

Tip: Ask about non-salary benefits like remote flexibility, career progression programs, or mentorship opportunities, these can greatly influence long-term satisfaction.

Time Your Negotiation Right

Negotiate only after you receive a formal offer. At that stage, the company has already decided you’re the right fit. Stay calm, thank them for the offer, and ask if there’s flexibility based on your research and achievements.

Tip: Practice a data-driven negotiation line such as:

“Based on my experience in SQL and data science, and salary benchmarks from Levels.fyi and Interview Query, I was expecting something closer to $130K. Is there flexibility in the offer?”

Track Job Market Trends

As of 2025–2026, demand for SQL-skilled data professionals continues to rise, especially in AI-driven industries like fintech, healthcare, and e-commerce. Many roles now expect hybrid expertise, like querying data efficiently and understanding modeling or dashboarding workflows.

Tip: Follow Interview Query’s job market reports, LinkedIn insights, and Kaggle discussions to track how roles and salary trends evolve across different data domains.

Plan for Long-Term Growth

SQL is your foundation, but long-term career growth comes from expanding into areas that complement it.

Keep advancing by:

- Mastering cloud databases and analytics tools (Snowflake, BigQuery, Redshift).

- Learning Python, R, or dbt for data automation.

- Gaining BI and visualization expertise (Tableau, Power BI, Looker).

- Contributing to open-source or publishing data case studies online.

Tip: Build your career in layers—SQL for access, analytics for interpretation, and AI for innovation. Strength across these tiers helps you move from execution roles to leadership and strategy positions.

Looking for hands-on problem-solving? Test your skills with real-world challenges from top companies. Ideal for sharpening your thinking before interviews and showcasing your SQL ability.

FAQs

What are the most common SQL interview questions for data science roles?

You can expect questions on joins, aggregations, subqueries, and window functions. Many interviews also include scenario-based problems, such as analyzing sales data, finding churned users, or ranking performance metrics. Be ready to explain both how you wrote a query and why you structured it that way.

How do you optimize SQL queries for performance in interviews?

Start by filtering early with WHERE clauses, indexing key columns, and replacing correlated subqueries with joins or CTEs. Use EXPLAIN to analyze query execution and focus on reducing unnecessary computations. Mentioning real-world optimization steps shows that you understand scalability.

What are the differences between SQL dialects, and do they matter in interviews?

Most core syntax works across systems, but small differences exist, such as PostgreSQL supports advanced window functions and JSON queries, while SQL Server uses TOP instead of LIMIT. It’s useful to know the environment used by your target company, but demonstrating conceptual understanding matters most.

How should I prepare for scenario-based SQL questions?

Practice with datasets that mimic business problems, like sales performance, customer retention, or campaign metrics. Focus on translating plain-language goals into SQL logic and explaining your reasoning clearly. Employers want to see that you can turn data into insights, not just code queries.

What advanced SQL topics should I know for senior data science interviews?

Understand CTEs, window functions, indexing, query optimization, and handling semi-structured data like JSON. Being able to explain trade-offs, such as query speed versus readability, signals senior-level thinking.

How do I present my SQL projects or portfolio in an interview?

Show complete, end-to-end projects instead of isolated queries. Highlight datasets, business goals, challenges, and measurable outcomes. Use visuals or GitHub links to make your work easy to follow and show real impact.

What are best practices for answering SQL questions during interviews?

Think aloud while solving problems, restate the question to confirm understanding, and explain edge cases or optimizations. Keep queries clean and readable—proper indentation and aliases make a great impression.

How should I handle mistakes or errors in SQL interview questions?

If something doesn’t run or you spot an issue, explain your debugging process. Check joins, filters, and grouping logic systematically. Interviewers value clear thinking under pressure more than perfect syntax.

Conclusion

Mastering SQL is one of the most practical and rewarding investments you can make as a data professional. Whether you’re preparing for your first data analyst interview or tackling advanced data science challenges, strong SQL skills let you extract, explore, and explain data effectively. Interviews are assessing how you think through data problems, handle uncertainty, and communicate insights clearly. If you can pair sound query logic with business reasoning, you’ll stand out in any interview.

Keep practicing, build real projects, and treat each query as a story that connects data to decisions. With consistent preparation, SQL can quickly become your competitive edge.

Additional Resources for SQL Interview Preparation

Want to master SQL interview questions? Kick off your prep with our 14-Day SQL Study Plan and build hands-on confidence through real interview-style problems curated for quick practice.

Ready to sharpen your SQL for data science interviews? Start with our SQL Learning Path for structured, step-by-step prep from beginner to advanced.

Other resources:

- LeetCode SQL Questions – Excellent for timed query challenges and syntax mastery.

- Mode SQL Tutorial – Interactive lessons with live query execution for hands-on learning.

- Kaggle Datasets – Use public data to design and test your own SQL-driven analytics projects.