Tiger Analytics Data Engineer Interview Questions & Process (2025)

Introduction

Tiger Analytics equips its clients with cutting-edge data solutions, and joining as a Data Engineer means playing a crucial role in designing and maintaining the pipelines behind those solutions. Whether you’re orchestrating ETL workflows in the cloud or ensuring real-time data availability for downstream analytics, you’ll be at the forefront of transforming raw information into strategic business value. To help you prepare, this guide walks through the Tiger Analytics Data Engineer interview questions you’re likely to encounter and the structure of the hiring process itself.

As a Tiger Analytics Data Engineer, you’ll design green-field data-lake architectures for Fortune 500 clients, collaborate on AI/ML pipelines, and present results to client stakeholders. The firm’s “client-first” ethos and rapid-experiment culture give you the autonomy to select tools (Airflow, Spark, or serverless functions) while moving at the pace today’s enterprises demand. With competitive compensation and fast promotion tracks, Tiger Analytics offers a career path that rewards both technical excellence and business impact.

Role Overview & Culture

Data Engineers at Tiger Analytics partner closely with data scientists, product teams, and business stakeholders to architect scalable, fault-tolerant pipelines. Your day-to-day includes designing streaming and batch ETL, optimizing data transformations, and ensuring data quality for high-stakes analytics. The company’s bottom-up decision-making empowers you to innovate: choose the right storage layers, enforce CI/CD practices, and champion observability across the stack. Tiger’s client obsession means you’ll rapidly iterate on prototypes and deploy solutions in weeks, while its emphasis on experimentation encourages you to test new technologies, refine workflows, and share learnings across the organization.

Why This Role at Tiger Analytics?

At Tiger Analytics, Data Engineers tackle real-world problems for global brands—building architectures that handle petabyte-scale datasets and powering machine-learning models that drive decisions in finance, retail, and healthcare. You’ll be entrusted with end-to-end ownership, from initial requirements gathering through production rollout, and you’ll see the direct impact of your work on client outcomes. The firm’s rapid career progression rewards high performers with leadership opportunities and strategic client engagements. Ready to prove your skills? Let’s break down the interview process that leads to this exciting role.

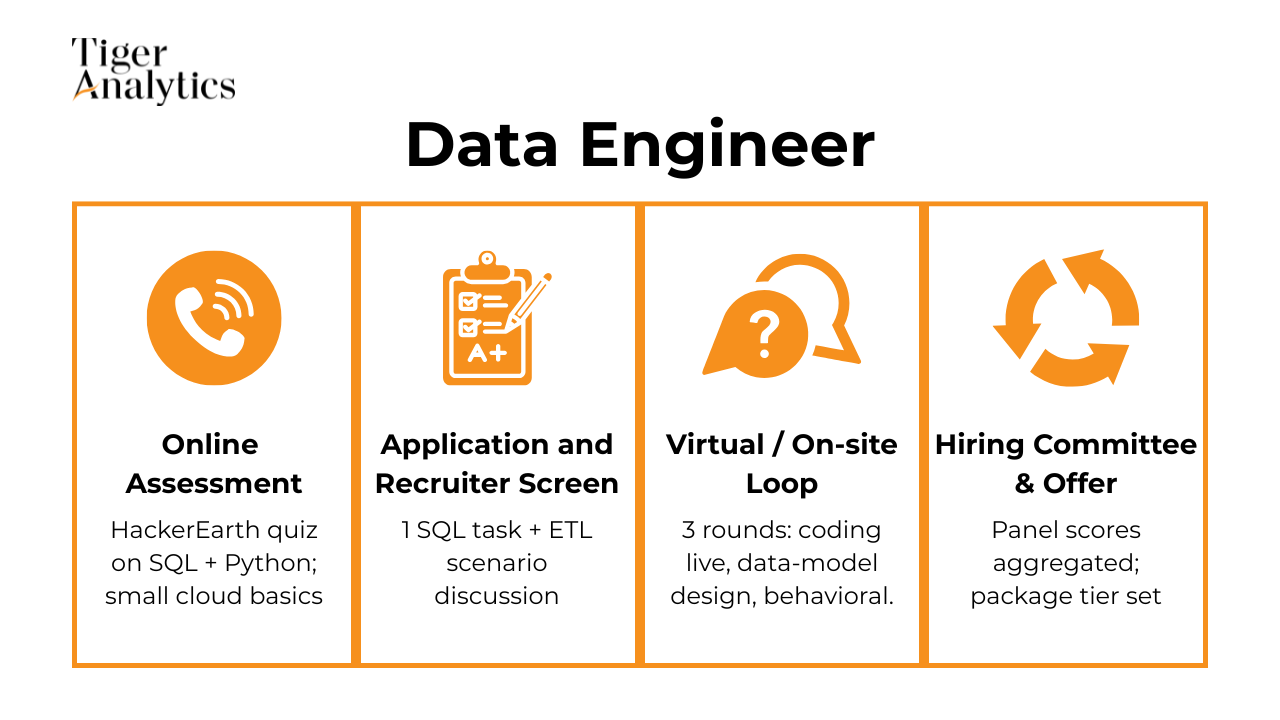

What Is the Interview Process Like for a Data Engineer Role at Tiger Analytics?

The hiring process starts with a rigorous online coding and SQL assessment, which screens data engineering candidates for their ability to manipulate data, write efficient queries, and apply basic machine-learning logic under time pressure. From there, you progress through live technical discussions and case-based exercises designed to mirror the real-world challenges you’ll face on client projects.

Online Assessment (HackerEarth)

The first step is a 60-minute HackerEarth assessment that blends Python scripting, SQL querying, and data-structure challenges. You can expect questions on list comprehensions, Pandas transformations, and writing efficient joins or window functions in SQL. The goal is to gauge your coding fluency and problem-solving approach under time pressure, so clarity and clean syntax are as important as correctness. Make sure to familiarize yourself with common HackerEarth formats—multiple-choice questions, code-typing prompts, and sometimes small debugging tasks.

Technical Phone Screen

Candidates who pass the online assessment (OA) move to a 45-minute phone call with an engineer or data-science leader. This session combines live coding (often in a shared editor) with conceptual questions about data modeling, pipeline orchestration, and system reliability. You might be asked to sketch an end-to-end data flow or explain how you’d implement schema evolution in a streaming context. Interviewers assess not just your technical depth but also your communication: can you clearly articulate trade-offs and justify design choices?

Onsite / Virtual Loop

The core interview loop spans 3–4 rounds of 45–60 minutes each, typically conducted virtually. Rounds include:

- Architecture & System Design: Whiteboard or collaborative doc session to design a scalable ETL or streaming pipeline, addressing fault tolerance, latency, and cost.

- Live Coding: Algorithmic tasks in Python or Java, with an emphasis on readability, testability, and performance.

- Case Study Presentation: You’ll walk through a past project or tackle a hypothetical scenario, showcasing your problem-framing, data-ingestion strategy, and monitoring plan.

- Behavioral & Culture Fit: STAR-based questions explore how you handle ambiguity, stakeholder conflicts, and rapid pivots under tight deadlines.

Hiring Committee & Offer

After interviews, a hiring packet—containing interviewer feedback, code samples, and case-study notes—is reviewed by a committee within 24–48 hours. Compensation bands are calibrated against market data and internal benchmarks, ensuring equity across levels. Final offers include base salary, performance bonus, and potential equity or profit-sharing components.

Behind the Scenes

Tiger Analytics prides itself on a streamlined feedback loop: interviewers submit evaluations within one business day, and the hiring committee meets weekly to keep timelines tight. Reference checks are conducted swiftly, and you can often expect an offer or next-step decision within a week of your final interview.

Differences by Level

Junior Data Engineer candidates focus on core SQL/Python competence and basic pipeline patterns. Mid-level hires tackle more complex design problems and demonstrate ownership of entire data products. Senior engineers are asked to lead architecture discussions, mentor peers, and present a 30-minute deep-dive on a real project, highlighting their strategic vision.

Now that you know each stage, let’s dive into the actual Tiger Analytics Data Engineer interview questions you should practise.

What Questions Are Asked in a Tiger Analytics Data Engineer Interview?

Tiger Analytics Data Engineer interviews begin by evaluating your ability to manipulate and query data at scale. You’ll face a mix of coding exercises, design scenarios, and behavioral probes that assess both technical depth and client focus.

Coding / Technical Questions

The coding rounds typically include SQL and Python tasks. You might implement window functions to compute rolling metrics, write CTEs to aggregate event data, optimize slow-running queries on large tables, or flatten nested JSON with Pandas. Partway through the session, interviewers often revisit these challenges to explore edge-case handling and performance improvements.

Tiger Analytics uses graph-traversal and path-optimisation problems to verify that data engineers can model real-world entities as nodes and edges, then reason about memory and compute trade-offs. Explaining an algorithm such as BFS, Dijkstra, or A*, together with the condition under which it guarantees a shortest path (unit edge costs for BFS, non-negative weights for Dijkstra, an admissible heuristic for A*), demonstrates mastery of complexity analysis and coding discipline. Interviewers also listen for edge-case handling (unreachable exits, large grids) because production pipelines often fail on unexpected inputs. Clear justification of data structures (a FIFO queue for BFS versus a priority heap for Dijkstra) signals you can design efficient ETL workflows that move through many states without bottlenecks.
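A minimal BFS sketch, assuming the common interview framing of a rectangular grid where "#" marks a wall and every move costs one step; the grid encoding and the function name are illustrative, not taken from Tiger's actual question:

```python
from collections import deque
from typing import List, Optional, Tuple

Cell = Tuple[int, int]


def shortest_path_length(grid: List[str], start: Cell, goal: Cell) -> Optional[int]:
    """Return the fewest 4-directional moves from start to goal, or None if unreachable.

    Each row of grid is a string; '#' marks a wall and any other character is open.
    Every move costs 1, so breadth-first search returns a shortest path length.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] == "#" or grid[goal[0]][goal[1]] == "#":
        return None
    queue = deque([(start, 0)])  # FIFO queue of (cell, distance from start)
    visited = {start}            # mark on enqueue so no cell is queued twice
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in visited):
                visited.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return None  # queue exhausted: the goal is walled off


maze = ["..#",
        ".##",
        "..."]
print(shortest_path_length(maze, (0, 0), (2, 2)))  # 4
```

Because every edge has unit cost, the first time BFS dequeues the goal its distance is minimal. With weighted cells you would swap the deque for a heapq-based Dijkstra.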

Although Tiger’s engineers mostly build data systems, they still need comfort with lower-level numerical routines when deploying streaming feature pipelines or Monte-Carlo jobs. Describing the Box-Muller transform or Ziggurat algorithm showcases mathematical grounding and the ability to code performant, dependency-light utilities. Validation via histogram plotting, Kolmogorov-Smirnov tests, or moment checks proves you think about statistical correctness, not just code. The question also hints at performance profiling—important when you’ll be sampling millions of points inside Spark or Flink tasks.
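A dependency-light sketch of the basic (trigonometric) Box-Muller transform using only the standard library, plus a simple moment check; the function names and seed handling are illustrative, and this is not the Ziggurat algorithm:

```python
import math
import random
from typing import List, Tuple


def box_muller(n: int, seed: int = 0) -> List[float]:
    """Return n standard-normal samples (mean 0, variance 1) via Box-Muller."""
    rng = random.Random(seed)
    samples: List[float] = []
    while len(samples) < n:
        u1 = 1.0 - rng.random()  # in (0, 1], so log(u1) is always defined
        u2 = rng.random()        # in [0, 1)
        radius = math.sqrt(-2.0 * math.log(u1))
        theta = 2.0 * math.pi * u2
        # Each pair of uniforms yields two independent normal samples.
        samples.append(radius * math.cos(theta))
        samples.append(radius * math.sin(theta))
    return samples[:n]  # trim the extra sample when n is odd


def sample_moments(xs: List[float]) -> Tuple[float, float]:
    """Return (mean, population variance) as a quick sanity check."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return mean, var
```

On 100,000 samples the mean should land near 0 and the variance near 1. For a stricter check of the whole distribution, a Kolmogorov-Smirnov test such as scipy.stats.kstest(samples, "norm") goes beyond two moments.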

This classic “two-sum” variant measures your fluency with hashing for O(n) lookup versus naive O(n²) scanning. Tiger Analytics data engineers optimise joins and aggregations on terabyte-scale tables, so interviewers want to see that you instinctively reach for linear-time solutions and can state their cost: a hash map runs in O(n) time but needs O(n) extra space, whereas sorting in place and scanning with two pointers avoids that extra memory at O(n log n) time, provided the input may be mutated. Discussing trade-offs such as input mutability, duplicate values, or streaming arrival signals real-world pragmatism. Explaining edge cases (no pair, multiple pairs) mirrors the quirks of production data.
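A sketch of the hash-map approach, assuming the common formulation that asks for the indices of one pair summing to a target (the exact wording of Tiger's variant is not given here):

```python
from typing import Dict, List, Optional, Tuple


def two_sum(nums: List[int], target: int) -> Optional[Tuple[int, int]]:
    """Return indices (i, j) with i < j and nums[i] + nums[j] == target, or None.

    One pass: O(n) time and O(n) extra space for the hash map.
    """
    first_index: Dict[int, int] = {}  # value -> index where it was first seen
    for j, value in enumerate(nums):
        complement = target - value
        if complement in first_index:
            return first_index[complement], j
        first_index.setdefault(value, j)  # keep the earliest index for duplicates
    return None


print(two_sum([2, 7, 11, 15], 9))  # (0, 1)
print(two_sum([3, 3], 6))          # (0, 1)
print(two_sum([1, 2], 10))         # None
```

Checking the complement before storing the current value is what lets a duplicate pair such as [3, 3] succeed without pairing an element with itself.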

How would you generate a list of all prime numbers up to N, returning an empty list if N ≤ 1?

Questions on prime generation test algorithmic thinking around sieves versus per-number checks, highlighting your ability to pick a scalable approach. A well-reasoned answer—e.g., using the Sieve of Eratosthenes and analysing space/time complexity—mirrors decisions you’ll make when designing indexing or compression strategies in columnar stores. Mentioning optimisations like segmenting the sieve shows you can adapt to memory-constrained environments in cloud pipelines. Interviewers also note how you explain primality concepts to stakeholders who may consume your logic downstream.
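A compact Sieve of Eratosthenes sketch in pure Python; the bytearray keeps memory to about one byte per candidate, and the function name is illustrative:

```python
from typing import List


def primes_up_to(n: int) -> List[int]:
    """Return all primes <= n using the Sieve of Eratosthenes; [] when n <= 1."""
    if n <= 1:
        return []
    is_prime = bytearray([1]) * (n + 1)  # one flag byte per integer 0..n
    is_prime[0] = is_prime[1] = 0
    p = 2
    while p * p <= n:
        if is_prime[p]:
            # Start at p*p: smaller multiples were already crossed off by smaller primes.
            is_prime[p * p :: p] = bytes(len(range(p * p, n + 1, p)))
        p += 1
    return [i for i, flag in enumerate(is_prime) if flag]


print(primes_up_to(30))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```

This runs in O(n log log n) time and O(n) memory. A segmented sieve applies the same marking to fixed-size windows when n is too large to hold in memory at once.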

Tiger’s clients frequently ask for nuanced ranking metrics, so engineers must wield window functions, CTEs, or DISTINCT logic confidently. Detailing multiple approaches—ROW_NUMBER(), DENSE_RANK(), etc.—proves you can tailor solutions to different warehouse dialects. Interviewers watch for edge-case awareness (multiple people sharing second place, NULL salaries) to gauge data hygiene instincts. Explaining performance implications of each method shows you think beyond correctness to cluster cost.
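A runnable sketch of the DENSE_RANK() approach using Python's built-in sqlite3 module, so the SQL can be tested locally. The employees table and its columns are hypothetical, and window functions require SQLite 3.25 or later (available in most current Python builds):

```python
import sqlite3
from typing import Iterable, Optional, Tuple


def second_highest_salary(rows: Iterable[Tuple[str, Optional[int]]]) -> Optional[int]:
    """Load (name, salary) rows into a hypothetical employees table and return
    the second-highest distinct salary, or None when it does not exist."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
        conn.executemany("INSERT INTO employees VALUES (?, ?)", rows)
        row = conn.execute(
            """
            WITH ranked AS (
                SELECT salary,
                       DENSE_RANK() OVER (ORDER BY salary DESC) AS rnk  -- ties share a rank
                FROM employees
                WHERE salary IS NOT NULL                              -- NULLs never rank
            )
            SELECT DISTINCT salary FROM ranked WHERE rnk = 2
            """
        ).fetchone()
        return row[0] if row else None
    finally:
        conn.close()


staff = [("ana", 200), ("ben", 200), ("cy", 150), ("dee", 100), ("eve", None)]
print(second_highest_salary(staff))  # 150
```

Swapping DENSE_RANK() for ROW_NUMBER() would return 200 for this data, because the tie at the top occupies positions 1 and 2; that is exactly the shared-second-place edge case interviewers probe.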

This exercise assesses comfort with arithmetic series, XOR tricks, and single-scan algorithms, all staples when reconciling record counts or detecting gaps in event streams. Walking through O(1)-memory solutions shows that you optimise for throughput. Tiger’s data engineers often build validators for CDC pipelines where such discrepancy detection is critical, so communicating failure scenarios and integer-overflow safeguards counts. Showing test-case design underscores a reliability culture.
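Two O(1)-memory sketches, assuming the common variant where nums holds n distinct integers drawn from 0..n with exactly one value missing; the function names are illustrative:

```python
from typing import List


def missing_by_sum(nums: List[int]) -> int:
    """Arithmetic-series approach: expected total of 0..n minus the observed total."""
    n = len(nums)
    return n * (n + 1) // 2 - sum(nums)


def missing_by_xor(nums: List[int]) -> int:
    """XOR approach: x ^ x == 0, so every present value cancels, leaving the missing one.

    It never builds a large intermediate sum, which matters in languages with
    fixed-width integers (Python ints cannot overflow).
    """
    acc = len(nums)  # include n itself, since the indices only cover 0..n-1
    for i, value in enumerate(nums):
        acc ^= i ^ value
    return acc


print(missing_by_sum([3, 0, 1]), missing_by_xor([3, 0, 1]))  # 2 2
```

Both are single scans. In a CDC validator, the same idea compares an expected sequence of offsets with the observed one without materialising either.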

Although the task seems trivial, recruiters use it to gauge coding hygiene: proper input checks, concise iteration, and clear variable naming. Explaining why you avoid Python’s built-in max() during the interview shows that you can implement primitives when constraints forbid library calls (common in embedded UDFs). Discussing complexity (O(n) time, O(1) extra memory) demonstrates analytical completeness. Mentioning unit tests and type hints reflects the production-grade standards Tiger expects.
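A minimal sketch of a max() replacement; returning None for empty input is an assumed convention (raising ValueError, as the built-in does, is an equally valid choice to state up front):

```python
from typing import Iterable, Optional


def find_max(values: Iterable[float]) -> Optional[float]:
    """Return the largest item without calling built-in max(); None for empty input.

    Works on any iterable (including generators) in O(n) time and O(1) extra memory.
    """
    iterator = iter(values)
    try:
        largest = next(iterator)  # seed with the first element, not 0 or -inf
    except StopIteration:
        return None
    for value in iterator:
        if value > largest:
            largest = value
    return largest


print(find_max([3, -1, 7, 2]))  # 7
```

Seeding with the first element rather than 0 keeps all-negative inputs correct, and consuming an iterator avoids copying a large list with values[1:].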

The task probes your ability to sort, scan, and build structured outputs without quadratic blow-ups, skills that mirror deduplication or anomaly-matching jobs in data lakes. Interviewers look for an explanation of why sorting first (O(n log n)) beats comparing every pair (O(n²)) and how you handle duplicate values gracefully. Producing ordered pairs shows attention to deterministic outputs, crucial for reproducible analytics. Suggesting generator patterns or memory-efficient buffers scores extra points for large-array thinking.
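A sketch assuming the common formulation "return every pair whose absolute difference equals the minimum over the array" (the exact question is not reproduced here). The key step is that, after sorting, the minimum difference can only occur between neighbours, so one linear scan suffices:

```python
from typing import List


def min_abs_diff_pairs(arr: List[int]) -> List[List[int]]:
    """Return all pairs [a, b] (a <= b, ascending order) at the minimum absolute difference."""
    nums = sorted(arr)                   # O(n log n); input list is left untouched
    if len(nums) < 2:
        return []
    neighbours = list(zip(nums, nums[1:]))
    best = min(b - a for a, b in neighbours)  # only adjacent gaps can be minimal
    return [[a, b] for a, b in neighbours if b - a == best]


print(min_abs_diff_pairs([3, 8, -10, 23, 19, -4, -14, 27]))
# [[-14, -10], [19, 23], [23, 27]]
```

Because the output is built from the sorted order, the pairs come out deterministic, and duplicates simply produce zero-gap pairs such as [1, 1].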

This tests your capacity to leverage distribution assumptions to shortcut computation—parallel to exploiting data skew for faster aggregations. Recognising that the majority element must occupy the median index (⌊n/2⌋) shows mathematical intuition. Describing scenarios where the guarantee fails demonstrates critical thinking about pre-conditions in production code. Tiger favours engineers who articulate both rapid solutions and guardrails against misuse.
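A sketch of the median-index shortcut with a guardrail, assuming the input is sorted and "majority" means more than len(nums) // 2 occurrences; the verification step uses the standard bisect module so it stays O(log n):

```python
from bisect import bisect_left, bisect_right
from typing import List, Optional


def majority_in_sorted(nums: List[int]) -> Optional[int]:
    """Return the element occurring more than len(nums) // 2 times in a sorted list, else None."""
    if not nums:
        return None
    candidate = nums[len(nums) // 2]  # a true majority must cover the middle index
    # Guardrail: verify the precondition instead of trusting it.
    count = bisect_right(nums, candidate) - bisect_left(nums, candidate)
    return candidate if count > len(nums) // 2 else None


print(majority_in_sorted([1, 2, 2, 2, 3]))  # 2
print(majority_in_sorted([1, 1, 2, 2]))     # None
```

Without the bisect check, the function would silently return nums[n // 2] for inputs that have no majority at all.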

What algorithm would you implement to decide whether a given string is a palindrome?

Palindrome checks showcase familiarity with two-pointer techniques and O(1) space optimisations, echoing patterns used in streaming validations or reverse-scan transformations. Interviewers expect discussion on Unicode handling, case normalisation, and ignoring non-alphanumeric characters—all pointing to real-world data cleansing savvy. Highlighting test coverage for edge cases (empty string, single char) underlines quality engineering culture. Mentioning algorithmic complexity ties the answer back to performance consciousness—essential for Tiger’s high-volume ETL workloads.
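A two-pointer sketch that ignores case and non-alphanumeric characters in O(1) extra space; full Unicode normalisation (for example via unicodedata.normalize) is deliberately left out to keep the example short:

```python
def is_palindrome(text: str) -> bool:
    """Two-pointer check that skips non-alphanumerics and compares case-insensitively."""
    left, right = 0, len(text) - 1
    while left < right:
        if not text[left].isalnum():
            left += 1                      # skip punctuation/whitespace on the left
        elif not text[right].isalnum():
            right -= 1                     # skip punctuation/whitespace on the right
        elif text[left].casefold() != text[right].casefold():
            return False
        else:
            left += 1
            right -= 1
    return True  # empty and single-character strings fall through to here


print(is_palindrome("A man, a plan, a canal: Panama"))  # True
print(is_palindrome("race a car"))                      # False
```

Scanning in place avoids building a cleaned copy of the string, which matters when the check runs per record inside a high-volume transformation.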

Cloud & Architecture Design Questions

Tiger Analytics Azure Data Engineer interview questions assess your cloud architecture expertise early on. You’ll be asked to design a robust Azure Data Factory pipeline for near-real-time data ingestion, choose partitioning strategies in ADLS Gen2, outline Delta Lake schema evolution, and optimize costs using serverless compute. This portion examines your ability to deliver secure, scalable, and maintainable data solutions.

Interviewers want to hear how you balance hot, warm, and cold tiers—e.g., land raw JSON in object storage, catalogue it with Hive/Glue, then roll daily Parquet partitions into Iceberg or Delta Lake for long-term retention. They listen for batch vs. streaming trade-offs (Kinesis ⇄ Spark Structured Streaming), cost controls through compaction and lifecycle rules, and governance features such as schema evolution. Articulating how analysts would hit the data (Athena, BigQuery, Trino) and how partition pruning keeps queries affordable shows that you design for both spend and performance. Calling out GDPR or PII deletion windows proves you consider compliance alongside scale.

Tiger Analytics expects you to diagnose dimension cardinality explosions, missing aggregate tables, stale statistics, and skewed data that bypasses partition elimination. Proposing roll-ups such as snapshot-fact tables, materialised views, or pre-computed cube segments demonstrates experience with aggregate-aware engines (e.g., Redshift Materialized Views or BigQuery BI Engine). Discussion of incremental ETL, late-arriving facts, and job orchestration shows you can maintain freshness without reprocessing terabytes. Stating assumptions about query concurrency, SLA windows, and hardware limits proves a methodical consulting mind-set.

This question blends data engineering and data science: you must outline near-real-time feature capture (restaurant, courier, prep times), feature stores, and online inference endpoints. Interviewers watch for discussion of labelled feedback loops—order-issue tickets—and how you’d retrain with decay on concept drift. Explaining fallback logic and A/B safety nets highlights your production rigor. Mentioning business metrics (refund rate, NPS, courier wait time) proves you translate models into measurable impact.

The exercise tests your ability to normalise event data (vehicles, crossings) while preserving performant time-range queries. You should justify composite primary keys (vehicle_id, crossing_ts) and indexes on date shards to enable “same-day” aggregations. Crafting SQL with window functions or min_by() shows analytical fluency, while mentioning materialised dashboards demonstrates end-user empathy. Flagging data privacy (obfuscated licence plates) earns bonus points for real-world readiness.

Strong answers outline a layered (medallion) design fed by a Lambda- or Kappa-style ingestion path: ingest to Bronze (raw), transform to Silver (sessionised), then aggregate to Gold fact tables with incremental Spark jobs. You should explain watermarking, upserts via MERGE INTO, and the pros and cons of continuous streaming versus micro-batch. Interviewers value commentary on orchestration (Airflow, Dagster) and data quality tests in CI/CD. Cost-aware partitioning and the choice between materialised views and roll-up tables show holistic thinking.

Expect to propose a slowly changing dimension (SCD Type 2) or temporal table with surrogate keys and effective_from / effective_to dates. Explaining how you enforce uniqueness constraints (one “current” row per customer) and handle overlapping date-ranges shows data-modelling maturity. Discussing indexing for point-in-time joins and CDC ingestion demonstrates operational savvy. Highlighting GDPR “right to erase” implications for address history signals compliance awareness.
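A minimal SCD Type 2 sketch in SQLite via Python's sqlite3 module. The table and column names are hypothetical, dates are stored as ISO-8601 strings so they compare correctly as text, and a partial unique index (supported by SQLite and PostgreSQL) enforces the one-current-row rule:

```python
import sqlite3
from typing import Optional

SCHEMA = """
CREATE TABLE dim_customer_address (
    address_sk     INTEGER PRIMARY KEY,   -- surrogate key
    customer_id    INTEGER NOT NULL,      -- natural/business key
    address        TEXT    NOT NULL,
    effective_from TEXT    NOT NULL,      -- ISO-8601 date, inclusive
    effective_to   TEXT                   -- exclusive; NULL marks the current row
);
-- At most one current row per customer.
CREATE UNIQUE INDEX one_current_row
    ON dim_customer_address (customer_id) WHERE effective_to IS NULL;
"""


def apply_address_change(conn: sqlite3.Connection, customer_id: int,
                         new_address: str, change_date: str) -> None:
    """Close the current row and open a new one, unless the address is unchanged."""
    current = conn.execute(
        "SELECT address FROM dim_customer_address "
        "WHERE customer_id = ? AND effective_to IS NULL",
        (customer_id,),
    ).fetchone()
    if current is not None and current[0] == new_address:
        return  # no change: avoid a redundant history row
    with conn:  # one transaction: never zero or two current rows
        conn.execute(
            "UPDATE dim_customer_address SET effective_to = ? "
            "WHERE customer_id = ? AND effective_to IS NULL",
            (change_date, customer_id),
        )
        conn.execute(
            "INSERT INTO dim_customer_address (customer_id, address, effective_from) "
            "VALUES (?, ?, ?)",
            (customer_id, new_address, change_date),
        )


def address_as_of(conn: sqlite3.Connection, customer_id: int, as_of: str) -> Optional[str]:
    """Point-in-time lookup: the row whose [effective_from, effective_to) covers as_of."""
    row = conn.execute(
        "SELECT address FROM dim_customer_address "
        "WHERE customer_id = ? AND effective_from <= ? "
        "AND (effective_to IS NULL OR effective_to > ?)",
        (customer_id, as_of, as_of),
    ).fetchone()
    return row[0] if row else None
```

The half-open [effective_from, effective_to) convention means a change date belongs to exactly one row, which removes the overlapping-range ambiguity at boundaries.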

The interviewer gauges your normalisation chops (splitting embedded friends and likes into junction tables) and your understanding of referential integrity. Detailing dual-write or change-data-capture (CDC) synchronisation strategies, back-fill jobs, and phased cut-overs proves migration experience. Mentioning feature flags, read-repair, and eventual deprecation of the old store shows strategic rollout thinking. Risk assessment around data loss, performance regression, and stakeholder buy-in rounds out a senior-level answer.

Tiger engineers must wield conditional aggregation and date arithmetic elegantly. Your explanation should emphasise how an index on booking_date speeds up date predicates and why you avoid scanning old partitions on every run (e.g., by using incremental snapshots). Highlighting NULL-safety and timezone handling shows production caution. Discussing how the query plugs into dashboards illustrates stakeholder empathy.

Interviewers probe your ability to balance granularity with payload size—capturing user_id, session_id, page_url, element_id, ts, and optional context (A/B bucket, viewport). Explaining how you encode events (JSON vs. Protobuf) and include a schema version for evolution demonstrates forward compatibility planning. You should address privacy (PII redaction), sampling knobs, and how the schema maps to downstream partitions. Mentioning governance through a central schema registry nails enterprise best practice.
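A minimal sketch of a versioned click-event record using the fields listed above. The class name, the SCHEMA_VERSION value, and the dt= partition layout are illustrative assumptions; a production system would typically register the schema (JSON or Protobuf) in a central registry rather than hard-code it:

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional

SCHEMA_VERSION = 2  # hypothetical; bump on any breaking change


@dataclass(frozen=True)
class ClickEvent:
    user_id: str                      # pseudonymous ID; never a raw email or name
    session_id: str
    page_url: str
    element_id: str
    ts: str                           # ISO-8601 UTC timestamp, e.g. "2025-03-01T12:00:00Z"
    ab_bucket: Optional[str] = None   # optional context fields default to None
    viewport: Optional[str] = None
    schema_version: int = SCHEMA_VERSION

    def to_json(self) -> str:
        """Serialise with stable key order so payloads are diff-friendly."""
        return json.dumps(asdict(self), sort_keys=True)


def partition_path(event: ClickEvent) -> str:
    """Hive-style date partition so query engines can prune by day (illustrative layout)."""
    return f"events/clicks/dt={event.ts[:10]}/"


e = ClickEvent("u1", "s1", "/home", "buy-btn", "2025-03-01T12:00:00Z")
print(partition_path(e))  # events/clicks/dt=2025-03-01/
```

Carrying schema_version in every payload lets downstream consumers branch on the version instead of guessing from which fields happen to be present.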

A robust answer covers webhook ingestion, idempotent staging tables, incremental loads keyed on a cursor field, and reconciliation jobs against Stripe’s API to catch late updates. Justifying CDC versus full snapshots, and discussing how you handle chargebacks and multi-currency amounts, shows financial-data expertise. Detailing schema design (fact_payments, dim_customers) and BI refresh cadences ties the pipeline to business needs. Cost control via partitioning and retention policies emphasises operational stewardship.
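A sketch of an idempotent staging load using SQLite's UPSERT (INSERT ... ON CONFLICT DO UPDATE, SQLite 3.24 or later) through Python's sqlite3 module. The stg_payments table and its fields are hypothetical and are not Stripe's API schema; amounts are kept as integer minor units plus a currency code to avoid float rounding:

```python
import sqlite3
from typing import Iterable, Mapping

STAGING_DDL = """
CREATE TABLE stg_payments (
    payment_id   TEXT    PRIMARY KEY,
    amount_cents INTEGER NOT NULL,   -- integer minor units, never floats
    currency     TEXT    NOT NULL,
    status       TEXT    NOT NULL,
    updated_at   INTEGER NOT NULL    -- source change time, used as the cursor
)
"""


def load_batch(conn: sqlite3.Connection, records: Iterable[Mapping]) -> int:
    """Upsert a batch into staging and return the new high-water-mark cursor.

    Replaying the same batch is a no-op, and older versions of a row never
    overwrite newer ones, so retries and out-of-order webhooks are safe.
    """
    with conn:  # the whole batch commits or rolls back together
        conn.executemany(
            """
            INSERT INTO stg_payments (payment_id, amount_cents, currency, status, updated_at)
            VALUES (:payment_id, :amount_cents, :currency, :status, :updated_at)
            ON CONFLICT (payment_id) DO UPDATE SET
                amount_cents = excluded.amount_cents,
                currency     = excluded.currency,
                status       = excluded.status,
                updated_at   = excluded.updated_at
            WHERE excluded.updated_at > stg_payments.updated_at  -- ignore stale versions
            """,
            list(records),
        )
    return conn.execute("SELECT COALESCE(MAX(updated_at), 0) FROM stg_payments").fetchone()[0]
```

The next incremental pull would request only records changed after the returned cursor; a periodic reconciliation job then catches anything the cursor missed.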

You’re expected to outline checksum validation at each hop, schema drift alerts, and bilingual sampling audits against translation accuracy. Mentioning data contracts, Great Expectations tests, and governance metadata (locale, confidence score) proves enterprise-grade discipline. Discussing latency SLAs versus eventual consistency highlights trade-off analysis. Calling out regional compliance (POPIA, GDPR) and encrypted transport shows global readiness.

How would you add and back-fill a column on a 1-billion-row table without degrading user queries?

Candidates should discuss rolling schema migrations, online DDL (e.g., PostgreSQL’s ADD COLUMN DEFAULT NULL, which updates only the catalog and avoids rewriting the table), and phased back-fills via chunked jobs with throttling. Proposing blue-green replicas or shadow tables to avoid lock contention demonstrates operational acumen. Explaining monitoring (replication lag, query latency) and rollback plans signals production vigilance. Highlighting cost implications (IOPS, maintenance windows) rounds out a senior engineer’s perspective.
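A small-scale sketch of the chunked, throttled back-fill using SQLite via Python's sqlite3 module; the orders table, the amount_usd column, and the chunk and pause values are hypothetical, and a real PostgreSQL job would add lag monitoring between chunks:

```python
import sqlite3
import time


def backfill_in_chunks(conn: sqlite3.Connection, chunk_size: int = 1000,
                       pause_s: float = 0.0) -> int:
    """Populate orders.amount_usd in primary-key ranges; return the number of rows updated.

    Short per-chunk transactions keep locks brief, and the IS NULL filter makes
    the job resumable: rerunning it after a crash only touches unfilled rows.
    """
    max_id = conn.execute("SELECT COALESCE(MAX(id), 0) FROM orders").fetchone()[0]
    updated = 0
    start = 1
    while start <= max_id:
        end = start + chunk_size - 1
        with conn:  # commit each chunk separately
            cur = conn.execute(
                "UPDATE orders SET amount_usd = amount_cents / 100.0 "
                "WHERE id BETWEEN ? AND ? AND amount_usd IS NULL",
                (start, end),
            )
            updated += cur.rowcount
        start = end + 1
        time.sleep(pause_s)  # throttle to leave headroom for user queries and replicas
    return updated


# Demo: step 1 adds a nullable column (cheap DDL), step 2 back-fills it in chunks.
demo_conn = sqlite3.connect(":memory:")
demo_conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount_cents INTEGER NOT NULL)")
demo_conn.executemany("INSERT INTO orders (id, amount_cents) VALUES (?, ?)",
                      [(i, i * 100) for i in range(1, 2501)])
demo_conn.commit()
demo_conn.execute("ALTER TABLE orders ADD COLUMN amount_usd REAL")
print(backfill_in_chunks(demo_conn, chunk_size=1000))  # 2500
```

Ranging over the primary key rather than using OFFSET keeps each chunk an index range scan, so later chunks cost no more than early ones.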

Behavioral / Culture-Fit Questions

Candidates then discuss past experiences that demonstrate ownership, collaboration, and rapid experimentation. Expect STAR-based prompts about managing production incidents, incorporating stakeholder feedback into pipeline iterations, or delivering client-ready solutions under tight deadlines. Strong answers highlight clear communication, empathy for business needs, and a commitment to continuous improvement.

Tiger Analytics DEs are dropped into messy client environments, so interviewers want proof you can push through ambiguous requirements, brittle pipelines, or legacy tech. Your answer lets them gauge your root-cause skills, stakeholder communication, and ability to balance speed with robustness. They’ll listen for how you decomposed the problem, rallied cross-functional teams, and measured success after the fix. Highlight post-mortems and preventive actions to show you create durable solutions, not one-off patches.

How have you made large datasets more accessible to business users who can’t write SQL or Python?

DEs often build self-serve layers for non-technical audiences; this question checks your empathy and product mindset. Interviewers want to hear about semantic models, governed BI cubes, Looker Explores, or well-documented APIs that reduced ad-hoc requests. Explaining how you balanced flexibility with data-quality controls (row-level security, lineage dashboards) shows mature thinking. Measuring adoption—e.g., drop in Jira ticket volume or increase in dashboard sessions—demonstrates impact.

Tiger looks for self-awareness because DEs must adapt to new clients quickly. Framing feedback as a growth narrative shows emotional intelligence and coachability. They’ll note whether your strengths map to the role (e.g., pipeline design, stakeholder rapport) and whether your weaknesses have concrete mitigation plans. Evidence of training, mentoring, or process changes signals a bias toward continuous improvement.

Success in client services hinges on translating jargon into business value. Interviewers want details on the audience’s context, the conflict (budget, timeline, risk), and the storytelling tools you used—data viz, analogies, or prototypes. They’ll assess how you listened to the stakeholder’s goals, negotiated scope, and documented decisions to prevent future churn. Showing empathy and follow-through proves you can be the trusted technical advisor Tiger bills for.

The firm wants candidates who thrive in fast-paced, multi-domain projects rather than a single product environment. A good answer links Tiger’s data-driven consulting ethos and industry variety to your desire for accelerated learning and impact. Referencing recent Tiger case studies or awards shows diligence. Finally, tying your goals—like leading cloud migrations or building ML platforms—to Tiger’s growth paths convinces them you’ll stay and scale.

Engineers at Tiger often run several client deliverables in parallel; they need disciplined prioritization. Interviewers look for structured frameworks—RICE, Eisenhower matrix, or SLA tiers—and tooling (Kanban, Airflow DAG tagging) that make your workload transparent. Mentioning proactive stakeholder updates and buffers for unknowns shows maturity. Metrics like on-time delivery rate or reduction in failed jobs quantify effectiveness.

How did you mentor a junior engineer to own a complex pipeline, and what was the outcome for the project?

Senior DEs are expected to upskill client teams as part of the engagement. Interviewers test your coaching style: breaking tasks into learning milestones, pairing on code reviews, and giving constructive feedback. Detailing the mentee’s growth—fewer defects, independent on-call rotations—demonstrates leadership ROI. Emphasize patience and adaptability to different learning styles.

Tell me about a situation where you had to push back on a client’s unrealistic timeline—how did you negotiate scope without damaging the relationship?

This probes your diplomacy under pressure, a core consulting skill. Explain how you used data (throughput metrics, capacity charts) to justify constraints, proposed phased roll-outs, and secured written agreement. Highlight maintaining trust via transparent communication and delivering quick wins to offset delays. The story should show you protect technical integrity while remaining client-centric.

How to Prepare for a Data Engineer Role at Tiger Analytics

Tiger Analytics data engineer interview questions should guide your study plan, but a structured approach ensures you cover coding skills, cloud design, and behavioral readiness.

Study the Role & Culture

Map your previous ETL and pipeline projects to Tiger’s client-first, rapid-experiment model. Prepare concise examples that showcase how you selected tools and architectures to meet business objectives quickly and reliably.

Practice Common Question Types

Allocate roughly 50% of your prep to SQL/Python coding, 30% to cloud and system-design scenarios, and 20% to behavioral interviews. Use platforms like HackerEarth and Interview Query to simulate timed, real-world assessments.

Think Out Loud & Ask Clarifying Questions

During mock sessions, verbalize your reasoning and trade-off analysis—whether you’re choosing partition keys, designing failover mechanisms, or selecting between batch and streaming approaches. Clear, structured thinking is key.

Brute Force, Then Optimize

Start by crafting a working solution under time pressure, then iteratively refine it for performance and readability. Demonstrate how you’d tune indexes, parallelize operations, or refactor for maintainability.

Mock Interviews & Feedback

Partner with peers or mentors for full mock loops using Interview Query’s mock interview services. Time your SQL exercises to under 20 minutes and your design discussions to 30 minutes. Solicit feedback on both technical accuracy and communication clarity to polish your delivery.

FAQs

What Is the Average Salary for a Data Engineer at Tiger Analytics?

Data Engineer compensation at Tiger Analytics combines base pay, performance bonuses, and potential equity components, with the mix varying by location and seniority. Salary bands are calibrated against market data to stay competitive, and senior Data Engineer packages reflect the additional leadership scope and expertise those roles require.

Does Tiger Analytics Use Azure-Specific Questions for Data Engineers?

Yes. Expect Azure-focused questions covering Azure Data Factory orchestration, ADLS Gen2 partitioning strategies, and cost-optimization patterns using serverless compute.

How Many Interview Rounds Should I Expect?

Most candidates complete four stages: an online assessment, a technical phone screen, an onsite or virtual loop, and a hiring committee review. Entry-level candidates may skip the architecture deep-dive, but coding and behavioral interviews remain standard.

Conclusion

Mastering the Tiger Analytics Data Engineer interview questions requires balanced preparation across SQL fluency, cloud architecture, and client-focused communication. By practicing the scenarios in this guide, conducting mock interviews, and embracing Tiger’s rapid-experiment culture, you’ll enter each stage with confidence.

For further support, explore our Data Engineering Interview Learning Path, schedule a mock interview, and read how Hanna Lee secured her role.