Tiger Analytics Data Scientist Interview Questions & Process (2025 Guide)

Introduction

Tiger Analytics is a global analytics and AI consultancy that helps Fortune 500 companies translate complex data into actionable insights. Its teams move rapidly from hypothesis to production, delivering end-to-end machine learning solutions across industries such as retail, healthcare, and financial services. In this guide, we’ll walk through the Tiger Analytics Data Scientist interview process, highlight common question types, and share preparation strategies to help you shine.

Role Overview & Culture

At Tiger Analytics, a Data Scientist owns the full lifecycle of analytical products, from data ingestion and feature engineering to model deployment and monitoring. Interview questions often probe your experience with scalable architectures, algorithm selection, and real-world troubleshooting. You’ll collaborate closely with product managers and engineers to align technical approaches with business objectives, while also presenting findings to client stakeholders. The firm’s culture of rapid experimentation and client obsession means you’ll be encouraged to iterate quickly, test boldly, and share learnings transparently. Interviewers may also explore your familiarity with deployment pipelines and post-production model maintenance, both recurring topics across the interview loop.

Why This Role at Tiger Analytics?

If you thrive on seeing models go live within weeks and enjoy tackling fresh challenges across diverse domains, this position is for you. During the data scientist interview rounds, you’ll demonstrate your ability to adapt analytical methods to new industries and datasets. The company offers competitive compensation and clear career progression paths, from individual contributor roles to leadership positions, rewarding both technical mastery and client impact. Understanding each step of the interview process is your first advantage in securing this high-velocity role.

What Is the Interview Process Like for a Data Scientist Role at Tiger Analytics?

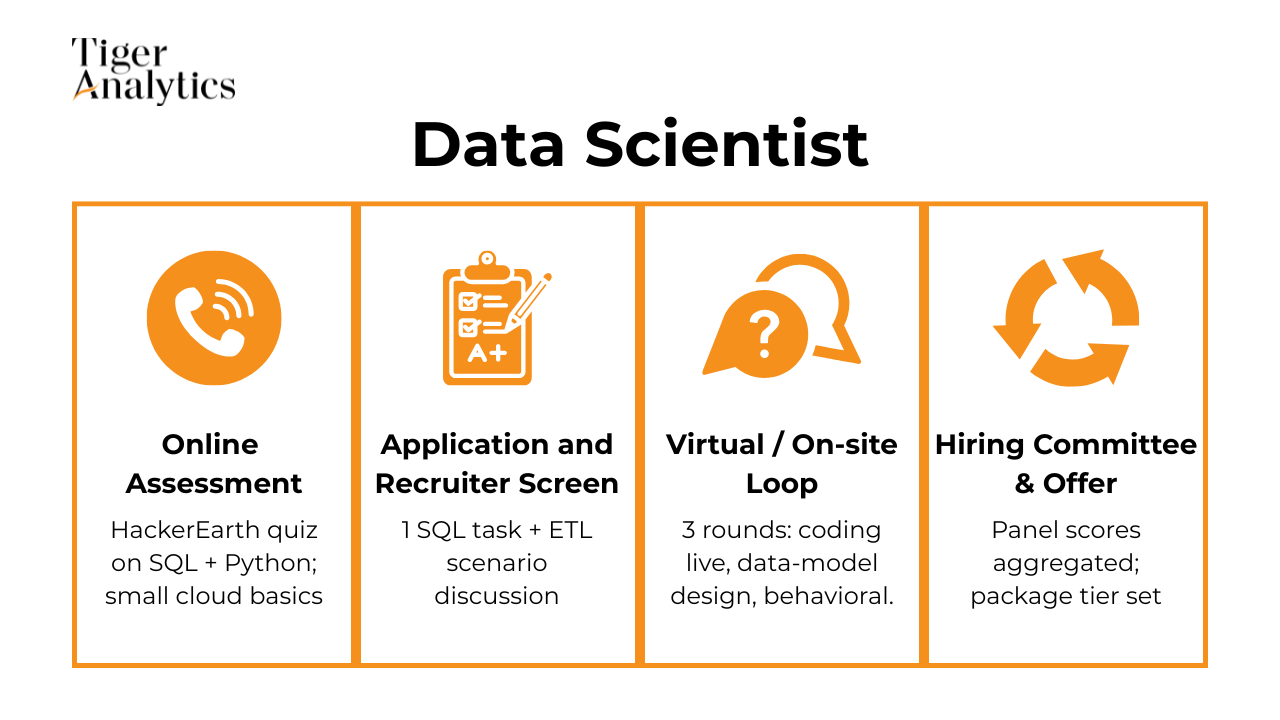

Tiger Analytics takes a structured yet fast-paced approach to evaluating candidates, starting with a rigorous online coding and SQL quiz. This assessment screens your ability to manipulate data, write efficient queries, and apply basic machine learning logic under time pressure. From there, you progress through live technical discussions and case-based exercises designed to mirror the real-world challenges you’ll face on client projects.

Online Assessment (HackerEarth)

The first hurdle is a 60-minute HackerEarth assessment that mixes Python scripting and SQL queries with short ML theory questions. Candidates will encounter problems ranging from DataFrame manipulations to join-heavy queries and basic algorithmic puzzles. Interviewers use this stage to gauge your coding fluency, problem-solving speed, and familiarity with libraries such as pandas and NumPy. The assessment also identifies candidates who are ready for deeper technical conversations, especially around topics like feature engineering and pipeline automation. To prepare, review common patterns and practice HackerEarth-style Python and SQL problems so you can complete tasks accurately within the time limit.

Technical Phone Screen

Successful test-takers move on to a 45-minute phone screen with a Tiger Analytics data scientist. This call typically begins with live coding—often on a shared editor—where you’ll tackle a medium-difficulty Python or SQL problem. Afterward, the conversation shifts to machine learning fundamentals: you may be asked to explain regularization techniques, evaluation metrics for classification models, or how you’d design an experiment to measure uplift. Expect follow-up questions probing your assumptions and how you’d productionize a model. This round assesses both your technical depth and your ability to articulate your approach clearly to stakeholders who may not have a data science background.

Onsite / Virtual Loop

The core of the process is a loop of 3–4 interviews, either onsite or virtually. One session will center on a case-study exercise: you’ll analyze a business problem—such as customer churn prediction or marketing uplift—and propose an end-to-end solution, including data requirements, modeling approach, and deployment considerations. Another round focuses on live coding, where you’ll solve algorithmic challenges on a whiteboard or collaborative tool. A third session dives into ML system design, asking you to architect scalable pipelines or discuss monitoring strategies. Finally, a behavioral interview examines culture fit, client communication stories, and examples of rapid iteration. Together, these interviews evaluate your holistic readiness to deliver analytic solutions in a consulting context.

Hiring Committee & Offer

After the loop, feedback from all interviewers is collated and reviewed by a hiring committee within 48 hours. This group balances technical scores, cultural alignment, and business impact potential to make a hiring decision. Compensation and level are calibrated based on your experience, prior outcomes, and the role’s scope. Tiger Analytics prides itself on a transparent offer process—candidates receive detailed feedback and can discuss any open questions about the team match or project assignments before accepting.

Behind the Scenes

Although the process moves quickly, Tiger Analytics ensures fairness through panel calibration sessions. Interviewers undergo training to standardize scoring rubrics and minimize bias. You can expect follow-up communications within a business day of each stage, with recruiters proactively checking in to guide you on next steps. This level of responsiveness reflects the firm’s client-obsessed culture—keeping you informed mirrors how they maintain transparency with their own stakeholders.

Differences by Level

Junior data scientist candidates are evaluated more heavily on coding accuracy, SQL fluency, and core ML concepts, while senior hires face an additional architecture deep-dive. Senior rounds often include a 30-minute presentation of a prior project, where you defend design choices and discuss trade-offs under questioning. Leadership potential is also assessed through scenario questions about mentoring peers and driving innovation within client teams. This tiered approach ensures that each candidate is matched to the right level of client engagement and technical responsibility.

What Questions Are Asked in a Tiger Analytics Data Scientist Interview?

Tiger Analytics interviews blend coding challenges, modeling case studies, and behavioral discussions to reflect the consulting environment you’ll enter.

Coding / Technical Questions

In the technical rounds, you’ll tackle problems designed around real data patterns. The opening discussion usually sets expectations on question style and complexity. You may be asked to write a SQL window function that computes rolling aggregates, implement a Python function to clean and transform JSON logs, or optimize an algorithm for large-scale data processing. Interviewers look for clear, efficient code, thoughtful handling of edge cases, and the ability to explain your logic step by step.

How would you rotate a 2-D array 90° clockwise, in-place?

Interviewers use this to verify you can reason about 2-D coordinates, boundary indices, and in-place element swaps without corrupting data. A strong answer walks through layer-by-layer swapping, explains the O(n²) time and O(1) extra space, and covers edge cases such as odd-dimension matrices, whose center element never moves. Discussing the alternative transpose-and-reverse approach or cache-efficiency considerations demonstrates additional depth.
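A minimal sketch of the layer-by-layer approach, assuming a square list-of-lists matrix. Each iteration moves four cells around one ring, so only a single temporary value is held at a time. The function name is illustrative.

```python
from typing import List


def rotate_clockwise(matrix: List[List[int]]) -> None:
    """Rotate a square matrix 90 degrees clockwise in place, one ring at a time."""
    n = len(matrix)
    for layer in range(n // 2):  # an odd-size matrix's center cell never moves
        first, last = layer, n - 1 - layer
        for i in range(first, last):
            offset = i - first
            top = matrix[first][i]                                      # save top
            matrix[first][i] = matrix[last - offset][first]             # left -> top
            matrix[last - offset][first] = matrix[last][last - offset]  # bottom -> left
            matrix[last][last - offset] = matrix[i][last]               # right -> bottom
            matrix[i][last] = top                                       # saved top -> right


grid = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
rotate_clockwise(grid)
print(grid)  # [[7, 4, 1], [8, 5, 2], [9, 6, 3]]
```

The transpose-and-reverse alternative, `matrix[:] = [list(row) for row in zip(*matrix[::-1])]`, is shorter. However, it builds new row lists, so it does not meet the O(1) extra-space requirement.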

How can you return the second-highest salary in Engineering when the top value may be shared?
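A minimal sketch of one answer using DENSE_RANK. It runs through Python's built-in sqlite3 module so it is self-contained, and it assumes SQLite 3.25 or later, the first release with window functions. The employees table, its columns, and the sample rows are hypothetical.

```python
import sqlite3

# Hypothetical schema and data: employees(name, department, salary).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, department TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [
        ("Ana", "Engineering", 200),
        ("Bo", "Engineering", 200),  # tie for the top salary
        ("Cy", "Engineering", 150),
        ("Di", "Engineering", 120),
        ("Ed", "Sales", 180),        # excluded by the department filter
    ],
)

# DENSE_RANK gives tied salaries the same rank, so rank 2 is the next distinct value.
SECOND_HIGHEST = """
SELECT DISTINCT salary
FROM (
    SELECT salary,
           DENSE_RANK() OVER (ORDER BY salary DESC) AS salary_rank
    FROM employees
    WHERE department = 'Engineering'
)
WHERE salary_rank = 2
"""

row = conn.execute(SECOND_HIGHEST).fetchone()
print(row[0] if row else None)  # 150
```

Both 200 rows share rank 1, so 150 receives rank 2. ROW_NUMBER would instead assign rank 2 to the second 200 row. If no second distinct salary exists, the query returns no rows.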

This SQL challenge gauges your proficiency with ranking functions such as DENSE_RANK or ROW_NUMBER, or with sub-query filtering. You must handle duplicates gracefully: if multiple people tie for first, the next distinct salary is the answer, which is why DENSE_RANK fits more naturally than ROW_NUMBER. Interviewers listen for commentary on indexes, execution-plan performance, and how a window function compares with a naive MAX() plus <> exclusion sub-query on large tables.

Given 0 … n with one missing value, how do you find the missing number in O(n) time/O(1) space?

This staple algorithmic warm-up exposes your comfort with arithmetic-series formulas or incremental XOR logic. The best solutions avoid extra memory. In fixed-width languages they also prefer XOR, because the n(n+1)/2 sum overflows a signed 32-bit integer once n exceeds 65,535. Explaining the pros and cons of each approach and how you’d test edge cases (empty or length-1 arrays) shows thoroughness.
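Both approaches fit in a few lines. This sketch assumes the input holds n distinct values drawn from 0..n. Python integers never overflow, so the sum version is safe here; the overflow concern applies to fixed-width languages.

```python
from typing import List


def missing_number_xor(nums: List[int]) -> int:
    """XOR every index 0..n with every value; matched numbers cancel, leaving the gap."""
    result = len(nums)  # start with n, since the indices only cover 0..n-1
    for i, value in enumerate(nums):
        result ^= i ^ value
    return result


def missing_number_sum(nums: List[int]) -> int:
    """Arithmetic-series version: expected total minus actual total."""
    n = len(nums)
    return n * (n + 1) // 2 - sum(nums)


print(missing_number_xor([3, 0, 1]), missing_number_sum([3, 0, 1]))  # 2 2
```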

How would you generate a sample from a standard normal distribution without high-level libraries?

Tiger Analytics looks for statistical foundations: Box–Muller transform, Marsaglia polar, or inverse-CDF with Sobol sequences. You should discuss uniform RNG requirements, floating-point precision, and why rejection sampling can be more efficient than inverse methods in certain languages. Mentioning vectorization and seeding for reproducibility demonstrates production-ready thinking.
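A minimal Box–Muller sketch. It assumes a uniform generator is still allowed, here Python's `random.Random`, seeded for reproducibility. Every step after the two uniform draws uses only `math`.

```python
import math
import random
from typing import Tuple


def box_muller(rng: random.Random) -> Tuple[float, float]:
    """Turn two uniform draws into two independent N(0, 1) samples."""
    u1 = 1.0 - rng.random()  # shift to (0, 1] so log(u1) is always defined
    u2 = rng.random()
    radius = math.sqrt(-2.0 * math.log(u1))
    theta = 2.0 * math.pi * u2
    return radius * math.cos(theta), radius * math.sin(theta)


rng = random.Random(42)  # fixed seed for reproducible samples
samples = [z for _ in range(50_000) for z in box_muller(rng)]
mean = sum(samples) / len(samples)
var = sum((z - mean) ** 2 for z in samples) / len(samples)
print(round(mean, 3), round(var, 3))  # should land close to 0 and 1
```

The Marsaglia polar method replaces the `cos`/`sin` calls with a rejection step inside the unit circle, which is the trade-off the question invites you to discuss.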

Write a query to return the two students with the closest SAT scores, breaking ties alphabetically.
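A minimal sketch of the LAG-based approach, run through Python's built-in sqlite3 module. It assumes SQLite 3.25 or later for window functions. The scores table, its columns, and the sample rows are hypothetical. In score order, the closest pair is always adjacent, so comparing each row with its predecessor covers every candidate.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE scores (student TEXT, score INTEGER)")
conn.executemany(
    "INSERT INTO scores VALUES (?, ?)",
    [("Ann", 1200), ("Bob", 1250), ("Cat", 1240), ("Dan", 1290), ("Eve", 1300)],
)

CLOSEST_PAIR = """
WITH ordered AS (
    SELECT student,
           score,
           LAG(student) OVER (ORDER BY score, student) AS prev_student,
           LAG(score)   OVER (ORDER BY score, student) AS prev_score
    FROM scores
)
SELECT MIN(prev_student, student) AS one_student,   -- scalar two-argument MIN/MAX
       MAX(prev_student, student) AS other_student, -- put each pair in name order
       score - prev_score         AS score_diff
FROM ordered
WHERE prev_score IS NOT NULL
ORDER BY score_diff, one_student, other_student
LIMIT 1
"""

print(conn.execute(CLOSEST_PAIR).fetchone())  # ('Bob', 'Cat', 10)
```

Both Bob/Cat and Dan/Eve differ by 10, so the alphabetical tie-break decides the result.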

This exercise tests your mastery of self-joins or LAG/LEAD window functions to compute score deltas row by row. You need to capture absolute differences, filter to the global minimum, and then apply the alphabetical tie-break. Discussing how the query scales on millions of rows, or why an index on score speeds up the window, earns bonus points.

What algorithm finds the maximum number in a list, returning None for empty input?
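A minimal sketch with type hints, an early exit, and a single linear scan. The function name is illustrative.

```python
from typing import Optional, Sequence


def find_max(values: Sequence[float]) -> Optional[float]:
    """Return the largest element with one linear scan, or None for empty input."""
    if not values:  # early exit: an empty sequence has no maximum
        return None
    best = values[0]
    for i in range(1, len(values)):
        if values[i] > best:
            best = values[i]
    return best


data = [-7, -3, -12]
print(find_max(data), find_max([]))  # -3 None
# Built-in equivalent: default= handles the empty case instead of raising ValueError.
print(max(data, default=None))  # -3
```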

Although trivial, the prompt spotlights coding hygiene: type hints, early exits, and guardrails against heterogeneous lists. Explaining why a single linear scan is optimal and how you’d unit-test empty arrays or negative-only sets shows professional discipline. Interviewers may follow up on Python’s built-in max versus manual loops to assess language familiarity.

How would you return indices of two numbers that add to a target, or an empty list if none exist?

The classic Two-Sum problem reveals whether you instinctively choose a hash-map for O(n) time and O(n) space. Detailing duplicate handling, negative values, and one-pass versus two-pass strategies highlights nuanced understanding. Many candidates stop there; you can stand out by discussing memory-constrained variants that trade speed for O(1) space.
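A minimal one-pass hash-map sketch. Checking for the complement before storing the current value prevents an element from pairing with itself. Duplicates keep the index of their first occurrence.

```python
from typing import Dict, List


def two_sum(nums: List[int], target: int) -> List[int]:
    """One pass with a hash map: O(n) time, O(n) extra space."""
    seen: Dict[int, int] = {}  # value -> index of its first occurrence
    for i, value in enumerate(nums):
        complement = target - value
        if complement in seen:  # checked before inserting value, so no self-pairing
            return [seen[complement], i]
        seen.setdefault(value, i)
    return []


print(two_sum([2, 7, 11, 15], 9))  # [0, 1]
```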

How do you return all element pairs whose absolute difference equals the minimum possible?

Sorting followed by a linear sweep is the idiomatic approach, proving you know how to lower a quadratic brute-force to O(n log n). The interviewer wants careful pair construction in ascending order and thoughtful mention of identical values. Adding commentary on stability, memory, and when a counting sort could outperform demonstrates algorithmic maturity.
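A minimal sort-then-sweep sketch. After sorting, the minimum gap must occur between neighbors, so two linear passes over adjacent pairs are enough. Pairs come out in ascending order, and identical values yield a gap of 0.

```python
from typing import List


def min_abs_diff_pairs(nums: List[int]) -> List[List[int]]:
    """Return every ascending pair whose difference equals the global minimum."""
    ordered = sorted(nums)  # O(n log n); the input list is left untouched
    if len(ordered) < 2:
        return []
    neighbors = list(zip(ordered, ordered[1:]))
    best = min(b - a for a, b in neighbors)
    return [[a, b] for a, b in neighbors if b - a == best]


print(min_abs_diff_pairs([4, 2, 1, 3]))  # [[1, 2], [2, 3], [3, 4]]
```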

Describe an efficient way to list every prime ≤ N and analyse its complexity.

Recounting the sieve of Eratosthenes with space optimizations (bit arrays or skipping even numbers) exhibits strength in number-theory algorithms. You should articulate why the sieve runs in roughly O(N log log N) and how segmented sieves extend beyond memory limits. Discussing real-world uses like cryptography strengthens the narrative.
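A minimal sieve sketch that stores one byte per number in a `bytearray`. A true bit array, or storing odd numbers only, would shrink memory further. Crossing off starts at p*p because smaller multiples of p were already removed by smaller primes.

```python
from typing import List


def primes_up_to(n: int) -> List[int]:
    """Sieve of Eratosthenes: O(N log log N) time, O(N) bytes of memory."""
    if n < 2:
        return []
    is_prime = bytearray([1]) * (n + 1)
    is_prime[0] = is_prime[1] = 0
    p = 2
    while p * p <= n:
        if is_prime[p]:
            # Zero out p*p, p*p + p, ... in one slice assignment.
            is_prime[p * p :: p] = bytes(len(range(p * p, n + 1, p)))
        p += 1
    return [i for i, flag in enumerate(is_prime) if flag]


print(primes_up_to(30))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```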

Given a numeric string (with decimals), how would you sum every digit it contains?

Parsing digits inside a float-like string tests regex skills, list comprehensions, and awareness of Unicode digit classes. Explaining why you ignore the decimal point and sign characters avoids off-by-one slips. A brief complexity analysis—O(len(s)) time and O(1) extra space—rounds out a complete answer.
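A one-line sketch, assuming that only decimal digit characters count and that signs and the decimal point are skipped. `str.isdecimal()` is used rather than `isdigit()` because `isdigit()` also accepts characters such as superscript "²", which `int()` rejects.

```python
def digit_sum(text: str) -> int:
    """Sum every decimal digit in the string: O(len(text)) time, O(1) extra space."""
    return sum(int(ch) for ch in text if ch.isdecimal())


print(digit_sum("-12.34"))  # 10
```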

How would you return the top N frequent words in a paragraph and state your run-time?
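A minimal sketch of one answer. The tokenization rule (case-insensitive ASCII words with apostrophes) and the alphabetical tie-break are assumptions made for illustration. `heapq.nsmallest` keeps a heap of size N rather than sorting the whole vocabulary.

```python
import heapq
import re
from collections import Counter
from typing import List, Tuple


def top_n_words(paragraph: str, n: int) -> List[Tuple[str, int]]:
    """Return the n most frequent words, most frequent first, ties broken alphabetically."""
    # Assumed tokenization: lowercase, then runs of ASCII letters, digits, or apostrophes.
    tokens = re.findall(r"[a-z0-9']+", paragraph.lower())
    counts = Counter(tokens)  # O(W) time for W tokens, O(m) space for m distinct words
    # A size-n heap over the m (word, count) items: O(m log n).
    return heapq.nsmallest(n, counts.items(), key=lambda item: (-item[1], item[0]))


print(top_n_words("The cat and the hat and the bat", 3))
# [('the', 3), ('and', 2), ('bat', 1)]
```

`Counter.most_common(n)` is the one-liner alternative. It breaks ties by first appearance rather than alphabetically.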

Counting with a hash map and then using a min-heap or Counter.most_common shows fluency with Python collections. Clarify tokenization rules, stop-word removal, and case normalization to demonstrate NLP awareness. Interviewers care that you explicitly derive the cost: O(W) time to count W tokens, O(m log N) time to select the top N with a size-N heap, and O(m) space, where m is the vocabulary size.

If more than 50% of a sorted list is one repeating integer, how can you return the median in O(1)?

Recognising that the majority element must occupy the median position lets you answer without scanning, highlighting the value of problem constraints. You’ll impress by proving this property formally and discussing how to retrieve the element in O(1) when the list is stored externally. Mentioning alternative median-of-medians methods shows breadth.
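The argument reduces to one index lookup. A run longer than n/2 positions must cover the middle index, and when n is even it covers both middle indices. This sketch assumes a non-empty, sorted input that satisfies the majority precondition.

```python
from typing import Sequence


def majority_median(sorted_values: Sequence[int]) -> int:
    """Median of a sorted list in which one value fills more than half of the positions."""
    # The majority run covers the middle index (both middle indices for even n),
    # so the element there equals the median. No scan is needed.
    return sorted_values[len(sorted_values) // 2]


print(majority_median([1, 2, 2, 2, 3]))  # 2
```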

How would you test whether a Unicode string is a palindrome?
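A minimal sketch of one robust answer. It assumes accents should be ignored, so "é" matches "e", and that only letters and digits matter. Note that it works on code points after NFKD decomposition, not on full grapheme clusters; scripts such as emoji sequences would need a grapheme-aware library.

```python
import unicodedata


def is_unicode_palindrome(text: str) -> bool:
    """Two-pointer palindrome check after case folding and accent stripping."""
    # casefold() is a stronger lower() (e.g. "ß" -> "ss"); NFKD splits "é" into "e" + accent.
    decomposed = unicodedata.normalize("NFKD", text.casefold())
    # Keep letters and digits; combining accents, punctuation, and spaces are dropped.
    chars = [ch for ch in decomposed if ch.isalnum()]
    left, right = 0, len(chars) - 1
    while left < right:
        if chars[left] != chars[right]:
            return False
        left += 1
        right -= 1
    return True


print(is_unicode_palindrome("Ésope reste ici et se repose"))  # True
```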

Beyond reversing the string, you must address case folding, diacritics, and grapheme clusters to handle international text. A strong response contrasts simple slicing with two-pointer techniques that skip punctuation and spaces. Interviewers notice robustness, such as using unicodedata.normalize(), and attention to O(n) algorithmic guarantees.

How many distinct triangles can be formed from a multiset of side lengths?

This counting variant checks combinatorial reasoning: after sorting, employ a fixed-third-side two-pointer to count valid (i,j,k) in O(n²). You should mention the triangle inequality, handle duplicates as distinct “sticks,” and analyse worst-case counts. Edge-case discussion (zero or negative lengths) demonstrates thoroughness.
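A minimal sketch of the fixed-largest-side, two-pointer count. It treats duplicate lengths as distinct sticks and assumes non-positive lengths are simply discarded. Once s[i] + s[j] > s[k] holds, every index between i and j also works with j, so those pairs are counted in one step.

```python
from typing import List


def count_triangles(sides: List[int]) -> int:
    """Count index triples that satisfy the triangle inequality, in O(n^2) time."""
    s = sorted(x for x in sides if x > 0)  # non-positive lengths can never form a side
    count = 0
    for k in range(len(s) - 1, 1, -1):  # s[k] is the largest side of the triple
        i, j = 0, k - 1
        while i < j:
            if s[i] + s[j] > s[k]:
                count += j - i  # s[i..j-1] each pair validly with s[j]
                j -= 1
            else:
                i += 1
    return count


print(count_triangles([2, 2, 3, 4]))  # 3
```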

Implement k-Nearest Neighbors from scratch using Euclidean distance and tie-break by k-1.

Building KNN without scikit-learn proves you understand the algorithm’s mechanics: broadcasting in NumPy, vectorised distance matrices, and majority voting. You’ll need to explain the O(n·d) runtime per query, memory concerns, and how you’d accelerate lookups with KD-trees or FAISS in production. Handling the tie-break rule (on a tied vote, retry with k-1 neighbors) shows attention to spec details.
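A minimal NumPy sketch for a single query point. It reads the tie-break rule as: if the top vote is tied, drop the farthest neighbor and vote again with k-1, repeating until one label wins. The function name and signature are hypothetical.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train: np.ndarray, y_train: np.ndarray, query: np.ndarray, k: int):
    """Majority vote of the k nearest points by Euclidean distance; on a tie, retry with k - 1."""
    # Broadcasting subtracts the query from every training row at once: O(n * d).
    distances = np.sqrt(((X_train - query) ** 2).sum(axis=1))
    order = np.argsort(distances, kind="stable")  # nearest first
    while k >= 1:
        ranked = Counter(y_train[order[:k]].tolist()).most_common()
        if len(ranked) == 1 or ranked[0][1] > ranked[1][1]:
            return ranked[0][0]  # unique winner
        k -= 1  # tied vote: drop the farthest of the current neighbors
    raise ValueError("k must be at least 1")


X = np.array([[0.0, 0.0], [1.0, 0.0], [3.0, 0.0], [4.0, 0.0]])
y = np.array(["a", "b", "a", "b"])
print(knn_predict(X, y, np.array([0.4, 0.0]), k=2))  # 'a' after falling back to k=1
```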

Given a building grid where each room has one unlocked door, what is the minimum time from NW to SE?

Breadth-first search on a directed graph is required; describing queue operations, visited-state marking, and early exit criteria demonstrates graph fluency. Interviewers appreciate clarity on why BFS guarantees the shortest path in unweighted graphs. Discussing fallback to −1 when cycles trap you, plus potential heuristic A* tweaks, shows depth.
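A minimal BFS sketch under an assumed encoding. The building is a list of strings, each room holds "N", "E", "S", or "W" for the direction of its single unlocked door, and each move takes one time unit. Rooms are (row, col) pairs, with NW at (0, 0) and SE at the bottom-right. Because every room has exactly one outgoing door, the search degenerates to following a single path, but the BFS structure carries over to rooms with several doors.

```python
from collections import deque
from typing import List

# Assumed encoding: each letter points to the neighbor its unlocked door opens onto.
MOVES = {"N": (-1, 0), "S": (1, 0), "E": (0, 1), "W": (0, -1)}


def min_time_nw_to_se(grid: List[str]) -> int:
    """Shortest time from the NW room to the SE room, or -1 if it is unreachable."""
    rows, cols = len(grid), len(grid[0])
    target = (rows - 1, cols - 1)
    queue = deque([((0, 0), 0)])
    visited = {(0, 0)}
    while queue:
        (r, c), t = queue.popleft()
        if (r, c) == target:
            return t  # BFS dequeues rooms in distance order, so the first hit is shortest
        dr, dc = MOVES[grid[r][c]]
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in visited:
            visited.add((nr, nc))
            queue.append(((nr, nc), t + 1))
    return -1  # the door path left the grid or looped back on itself


print(min_time_nw_to_se(["ESW", "NSW", "NEE"]))  # 4
```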

How would you find the longest common substring of two strings?

You can propose dynamic programming (O(m n) time), suffix automaton, or suffix array + LCP approaches for O(m + n) preprocessing. Explaining memory trimming with rolling rows or banded DP reveals optimisation skill. The ability to compare trade-offs—implementation complexity versus performance—signals senior-level insight.
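A minimal sketch of the O(m·n) dynamic-programming approach with memory trimmed to two rolling rows. Each cell holds the length of the longest common substring that ends exactly at a[i-1] and b[j-1]. When several substrings share the maximum length, this version keeps the first one found.

```python
def longest_common_substring(a: str, b: str) -> str:
    """DP over suffix lengths: O(len(a) * len(b)) time, O(len(b)) extra space."""
    best_len, best_end = 0, 0  # best_end is the exclusive end index of the match in a
    prev = [0] * (len(b) + 1)  # previous DP row
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                # Extend the common substring ending at a[i-2] and b[j-2].
                curr[j] = prev[j - 1] + 1
                if curr[j] > best_len:
                    best_len, best_end = curr[j], i
        prev = curr
    return a[best_end - best_len:best_end]


print(longest_common_substring("abcdxyz", "xyzabcd"))  # abcd
```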

Machine-Learning & Case Study Questions

These sessions explore how you apply ML in business contexts. You might design a feature uplift experiment to measure the impact of a promotional campaign or outline an approach for churn prediction using survival analysis. Interviewers probe your understanding of model selection, hyperparameter tuning, and validation strategies—especially in scenarios with limited data or class imbalance. They also assess your ability to frame the problem in terms of key performance indicators and stakeholder requirements, ensuring your solution drives measurable value.

What key statistical assumptions must hold true for a linear-regression model to be trustworthy?

Interviewers want to hear that you know all five classics—linearity, independence, homoscedasticity, normality of errors, and lack of multicollinearity—and can diagnose them with residual plots or VIF scores. They also probe whether you understand why violations matter (e.g., biased coefficients, unreliable CIs) and how to remediate them through transformations or robust regressors. Bringing up influential-point checks (Cook’s distance) or heteroskedasticity-robust errors shows mature, production-grade thinking.
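To make the multicollinearity check concrete, here is a NumPy sketch of the variance inflation factor, VIF_j = 1 / (1 − R²_j). R²_j comes from regressing feature j on the remaining features plus an intercept. The sketch is written from the definition. statsmodels provides a packaged `variance_inflation_factor` in `statsmodels.stats.outliers_influence`; the data below is synthetic.

```python
import numpy as np


def variance_inflation_factors(X: np.ndarray) -> np.ndarray:
    """VIF for each column of X (rows are observations, columns are features)."""
    n, p = X.shape
    vifs = np.empty(p)
    for j in range(p):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        design = np.column_stack([np.ones(n), others])  # intercept + other features
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        residuals = target - design @ coef
        r_squared = 1.0 - residuals.var() / target.var()
        vifs[j] = 1.0 / (1.0 - r_squared)
    return vifs


# Synthetic data: x3 is nearly a copy of x1, while x2 is independent.
rng = np.random.default_rng(0)
x1, x2 = rng.normal(size=500), rng.normal(size=500)
x3 = x1 + 0.1 * rng.normal(size=500)
vifs = variance_inflation_factors(np.column_stack([x1, x2, x3]))
print(np.round(vifs, 1))
```

A common rule of thumb treats VIFs above roughly 5 to 10 as a warning sign. Here x1 and x3 score far above that, while x2 stays near 1.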

How would you prove a new food-delivery ETA model outperforms the current one?

The core skill tested is experimental design for predictive models: define ground-truth arrival timestamps, pick evaluation windows, and choose error metrics such as MAE, P90, or calibration curves. You should describe slicing performance by distance, weather, and restaurant latency to surface regressions on edge cases. Interviewers also appreciate a rollout plan (offline back-test → shadow mode → A/B holdout) and discussion of real-time constraints that affect inference latency.
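A sketch of the offline back-test step. It assumes both models have scored the same historical orders and that each order already carries a segment label such as a distance bucket. The function names, labels, and toy numbers are hypothetical. P90 here is the 90th percentile of absolute error.

```python
import numpy as np


def summarize(errors: np.ndarray) -> dict:
    """MAE and 90th-percentile absolute error for one slice of orders."""
    return {"mae": float(errors.mean()), "p90": float(np.percentile(errors, 90))}


def eta_report(actual, predicted, segment) -> dict:
    """Absolute-error summary overall and per segment (e.g. a distance bucket)."""
    actual, predicted, segment = (np.asarray(v) for v in (actual, predicted, segment))
    errors = np.abs(predicted - actual)
    report = {"overall": summarize(errors)}
    for label in np.unique(segment):
        report[str(label)] = summarize(errors[segment == label])
    return report


# Hypothetical back-test: four delivered orders, actual minutes vs. each model's ETA.
actual_min = [10, 20, 30, 40]
bucket = ["short", "short", "long", "long"]
current = eta_report(actual_min, [12, 18, 33, 40], bucket)
candidate = eta_report(actual_min, [11, 19, 36, 41], bucket)
for name in ("overall", "short", "long"):
    print(name, current[name], candidate[name])
```

In this toy data the candidate model is better on short trips (MAE 1.0 vs 2.0) but worse on long ones (MAE 3.5 vs 1.5). Slicing is meant to surface exactly this kind of regression before shadow mode or an A/B holdout.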

How would you give rejected loan applicants the reasons behind an automated credit decision?

This question explores model explainability and regulatory compliance (e.g., ECOA adverse-action notices). Strong answers mention post-hoc techniques like SHAP, LIME, or counterfactual explanation libraries that rank the top drivers for each individual prediction. Highlighting fairness audits (ensuring reasons aren’t proxies for protected classes) and logging explanations for audit trails demonstrates enterprise-grade rigor.

How do you interpret logistic-regression coefficients for categorical and Boolean predictors?

The interviewer checks that you translate log-odds into intuitive odds ratios and can explain reference categories, dummy coding, and interaction terms. You should clarify that a one-unit increase in a predictor, or a switch from the reference category to a given level, multiplies the odds by e^β, so a positive coefficient raises the odds. For Boolean flags, this compares presence with absence. Discussing confidence intervals and why large standard errors signal sparse categories shows statistical maturity.
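The conversion is simple arithmetic. This sketch uses hypothetical coefficient and standard-error values for a Boolean flag and a normal-approximation 95% interval, exp(β ± 1.96·SE).

```python
import math
from typing import Tuple


def odds_ratio_with_ci(beta: float, std_error: float, z: float = 1.96) -> Tuple[float, float, float]:
    """Map a log-odds coefficient and its standard error to an odds ratio and 95% CI."""
    return math.exp(beta), math.exp(beta - z * std_error), math.exp(beta + z * std_error)


# Hypothetical fitted values for a Boolean flag such as has_prior_purchase.
ratio, low, high = odds_ratio_with_ci(beta=0.7, std_error=0.2)
print(f"odds x{ratio:.2f} (95% CI {low:.2f} to {high:.2f})")
```

A coefficient of 0.7 means the flag multiplies the odds by about 2.01. A much larger standard error, typical of a sparse category, would stretch the interval across 1, leaving the direction of the effect unclear.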

How would you evaluate a model that flags relevant news in a social media feed?

Because relevance is often subjective and imbalanced, you must justify precision-recall curves, F1, or average precision over mere accuracy. Explain holding out temporally coherent test sets to avoid information leakage from retweets and trending topics. The best answers add human-in-the-loop re-label checks and cost-sensitive thresholds if irrelevant news surfacing has reputational risk.

How would you build a model to predict whether a rideshare driver will accept a ride request?

You should compare gradient-boosted trees (tabular strength, interpretability via SHAP) against deep CTR models (capturing sparse city/driver embeddings). Trade-offs revolve around latency, feature engineering effort, and robustness to concept drift. Discussing features like driver idle time, surge multiplier, historical acceptance rate, and geospatial buckets shows you can craft a thoughtful feature set.

How would you design a model that maps legal first names to likely nicknames on a social media app?

This tests creativity with noisy text data: you might propose a character-level seq2seq, a transliteration model, or a probabilistic alias graph mined from co-occurrence in user profiles. Important points include handling multilingual variants, nicknames that shorten and lengthen names (Liz ↔ Elizabeth), and privacy safeguards. Discussing evaluation via precision@k on held-out alias pairs or A/B impact on friend-finder recall shows real-world grounding.

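How would corrupted values in one feature affect a logistic regression model, and how would you respond?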

The interviewer wants you to reason through scale sensitivity: logistic regression coefficients will be wildly off because the model assumes the original feature distribution. A good answer proposes data-quality monitoring, zero-sum checks, and retraining after cleaning or winsorizing the bad values. Detailing how you would add robust scaling, feature guards, or fallback models in production highlights operational awareness.

When should you rely on regularization versus cross-validation to improve generalization?

They’re probing conceptual clarity: cross-validation is a procedure for model selection and hyperparameter tuning, while regularization is a penalty that shrinks parameters to control variance. You should explain that CV can choose the optimal regularization strength but cannot by itself reduce variance without such a penalty. Conversely, regularization without CV risks under- or over-penalizing. Bringing up nested CV for unbiased error estimates and elastic net when predictors are correlated rounds out a nuanced answer.
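The division of labor can be shown in a few lines of NumPy. Ridge regression supplies the penalty, and k-fold CV picks its strength. This sketch assumes centered data (no intercept) and uses synthetic data and hypothetical helper names. Every penalty is scored on the same folds, so the comparison is fair.

```python
import numpy as np


def ridge_fit(X: np.ndarray, y: np.ndarray, alpha: float) -> np.ndarray:
    """Closed-form ridge without an intercept: solve (X'X + alpha*I) b = X'y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)


def cv_mse(X: np.ndarray, y: np.ndarray, alpha: float, n_folds: int = 5, seed: int = 0) -> float:
    """Mean held-out squared error across k folds for one penalty strength."""
    folds = np.array_split(np.random.default_rng(seed).permutation(len(y)), n_folds)
    fold_errors = []
    for held_out in folds:
        train = np.setdiff1d(np.arange(len(y)), held_out)
        beta = ridge_fit(X[train], y[train], alpha)
        fold_errors.append(np.mean((X[held_out] @ beta - y[held_out]) ** 2))
    return float(np.mean(fold_errors))


# Synthetic data: 60 rows, 40 features, only the first 3 carry signal.
rng = np.random.default_rng(1)
X = rng.normal(size=(60, 40))
true_beta = np.zeros(40)
true_beta[:3] = [2.0, -1.5, 1.0]
y = X @ true_beta + rng.normal(scale=1.0, size=60)

alphas = [0.01, 0.1, 1.0, 10.0, 100.0]
scores = {a: cv_mse(X, y, a) for a in alphas}
best_alpha = min(scores, key=scores.get)  # CV chooses the strength; ridge applies it
print(best_alpha, round(scores[best_alpha], 3))
```

For an unbiased estimate of the tuned model's error, wrap this whole selection loop inside an outer CV loop (nested CV).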

Behavioral / Culture-Fit Questions

Behavioral rounds examine how you collaborate under tight deadlines and interact with non-technical clients. Expect prompts about a time you had to present difficult findings, resolve conflicting requirements between stakeholders, or recover from a model deployment failure. Tiger Analytics values adaptability and a learning mindset, so highlight experiences where you iterated quickly, solicited feedback, and improved processes. Your storytelling should demonstrate empathy, ownership, and the ability to translate complex insights into actionable recommendations.

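Describe a challenging data project you delivered. What obstacles did you face, and how did you overcome them?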

Tiger Analytics consultants are parachuted into messy, high-stakes client environments, so interviewers need proof you can deliver under ambiguity. They listen for how you scoped the business question, debugged data-quality or modeling issues, and managed timeline or stakeholder friction. Emphasize concrete actions—creative feature engineering, clever experimentation, escalation tactics—and the eventual business outcome to show end-to-end ownership. Framing the story with the STAR method signals clear, client-friendly communication.

What tactics have you used to make complex data insights accessible to non-technical business users?

Because Tiger often embeds analysts alongside marketing, supply-chain, or risk teams, the firm prizes an ability to translate models into decisions. Strong answers mention tailored visualizations (story-point dashboards, executive one-pagers), data-literacy training sessions, or building self-serve semantic layers. Interviewers also value discussion of measuring adoption, for example tracking dashboard views or drops in decision latency, to show that “accessibility” is tied to usage. Highlight any feedback loops you set up to iteratively refine the artifacts.

What are your greatest strengths and weaknesses?

Tiger looks for self-aware consultants who embrace continuous improvement. Articulate strengths that map to their values—analytical rigor, client empathy, or mentoring junior staff—then pair each weakness with a concrete mitigation plan (peer code reviews, public-speaking courses, etc.). Framing weaknesses as growth stories shows maturity instead of defensiveness. Finish by tying the self-reflection back to how you’ll add value on multi-disciplinary client engagements.

Tell us about a time you had trouble communicating with stakeholders. How did you overcome it?

Consulting success hinges on influencing without authority, so interviewers probe for diplomacy and persistence. Outline the initial disconnect—misaligned KPIs, tech jargon, conflicting incentives—then detail how you adapted: translating to dollar impact, using prototypes, or looping in a champion. Conclude with the measurable change (budget approval, model adoption) to prove you can convert insights into outcomes. Mentioning retrospective lessons learned signals a habit of continuous improvement.

Why do you want to work at Tiger Analytics?

Recruiters want evidence you’ve researched Tiger’s domain focus—advanced analytics in CPG, BFSI, healthcare—and aren’t just shotgun-applying. Link your passion for solving open-ended client problems to Tiger’s consulting model and rapid-growth trajectory. Referencing recent case studies or the firm’s “AI for social good” initiatives shows genuine interest. Close by explaining how the diverse project portfolio aligns with your goal to become a full-stack data-science leader.

How do you prioritize multiple deadlines across concurrent client engagements?

The firm juggles parallel engagements, so they assess your project-management chops. Discuss frameworks such as impact-versus-effort matrices, daily stand-ups, or Kanban boards to reorder work transparently. Stress proactive communication—flagging risks early to account managers—and building reusable analytics pipelines to prevent last-minute scrambles. Showing you can hit deadlines without sacrificing quality reassures interviewers you’ll protect client trust.

Describe a situation in which you had to persuade a skeptical client executive to trust your model’s recommendations.

Tiger’s consultants often face stakeholders with gut-driven instincts. Explain how you blended technical explainability (e.g., SHAP plots) with business narratives, piloted a low-risk proof-of-concept, or aligned incentives through KPI dashboards. Emphasize the balance between humility and confidence that ultimately won buy-in and led to measurable value creation.

Tell us about a time you mentored a junior analyst or engineer—what approach did you take, and what was the impact?

Senior data scientists at Tiger are expected to scale themselves through coaching. Detail how you diagnosed skill gaps, provided hands-on code reviews or learning road-maps, and measured improvement (faster sprint velocity, higher code-quality scores). This shows leadership potential and your ability to uplift team capacity on demanding client timelines.

How to Prepare for a Data Scientist Role at Tiger Analytics

To excel in the Tiger Analytics Data Scientist interview, you’ll need a blend of technical mastery, business acumen, and clear communication. Focus on understanding the company’s fast-paced, client-focused culture and the types of problems you’ll solve on day one.

Study the Role & Culture

Align your STAR stories with Tiger Analytics’ values of rapid experimentation, client obsession, and bottom‐up innovation. Demonstrate familiarity with their clientele—Fortune 500 companies—and emphasize how you’ve driven insights under tight deadlines.

Master Python & SQL Foundations

Hone your skills by practicing problems similar to those on the HackerEarth assessment: Python data manipulation, SQL window functions, and basic algorithm challenges. Building speed and accuracy here will pay dividends in the technical screens.

Mock Case Studies

Simulate the case‐study rounds by framing problems end‐to‐end: define the business hypothesis, select modeling approaches, and articulate the impact. Rehearse articulating your thought process, from feature selection to performance metrics.

Think Out Loud & Ask Clarifying Questions

Interviewers value structured reasoning. Verbalize your assumptions, trade‐offs, and next steps as you work through problems. This transparency shows you can collaborate effectively on cross‐functional projects.

Seek Feedback Early

Arrange peer mock interviews or connect with former Tiger Analytics data scientists. Incorporate their feedback to refine your technical explanations and business narratives, and consider rehearsing with Interview Query’s mock interview services.

FAQs

What Is the Average Salary for a Data Scientist at Tiger Analytics?

Data scientist and senior data scientist packages at Tiger Analytics typically combine base, bonus, and equity components. Junior roles focus more on base and performance bonus, while senior positions include larger stock grants. Negotiation tip: anchor to market benchmarks and emphasize your track record of delivering client value.

Does Tiger Analytics Use a HackerEarth Assessment for Data Scientists?

Yes, candidates begin with a HackerEarth assessment that tests Python, SQL, and problem‐solving skills. Reviewing common HackerEarth-style question patterns will familiarize you with the question formats and time constraints.

How Many Rounds Are in the Interview?

The loop is generally 4–5 stages: HackerEarth OA, technical phone screen, virtual/on‐site case study & coding rounds, and finally a behavioral discussion. Fresh graduates may skip the case round, while experienced hires often face an extra system‐design deep‐dive.

Conclusion

By mastering the Tiger Analytics Data Scientist interview questions and familiarizing yourself with each stage of the process—from the HackerEarth assessment through the on-site loop—you’ll walk into your interviews with confidence and clarity.

To deepen your preparation, download our comprehensive question bank, explore the Data Science Learning Path, and schedule a mock interview to rehearse under realistic conditions. For inspiration, check out how Dhiraj Hinduja leveraged structured prep to land his role in our success story. Good luck!