Tiger Analytics Machine Learning Engineer Interview Guide (2025)

Introduction

The Tiger Analytics machine learning engineer interview questions you’ll face are designed to assess both your coding chops and your ability to architect end-to-end ML systems under real-world constraints. In this role, you’ll productionize models, own feature pipelines, and partner with Data Scientists and DevOps teams to deploy solutions on cloud platforms. Tiger Analytics’ client-first, rapid-prototype culture means you’ll work in flat, autonomous squads, driving high-impact ML initiatives across diverse domains.

Role Overview & Culture

As a Machine Learning Engineer at Tiger Analytics, your day-to-day revolves around taking predictive models from proof-of-concept to production at scale. You’ll design and optimize feature-extraction pipelines, ensure model reliability, and integrate monitoring for drift and performance degradation. The firm’s “client-first, rapid-prototype” ethos empowers you to experiment freely and iterate quickly, while its global delivery model exposes you to best practices across industries—from retail and BFSI to healthcare.

Why This Role at Tiger Analytics?

Joining Tiger Analytics places you at the heart of the ML lifecycle: data ingestion, feature engineering, model training, and continuous deployment. You’ll tackle a variety of business problems, honing your skills across multiple tech stacks and cloud environments. Career progression is clear—from Machine Learning Engineer to ML Architect—rewarding both technical depth and leadership. Mastering the Tiger Analytics interview process is your first step toward landing this high-velocity role.

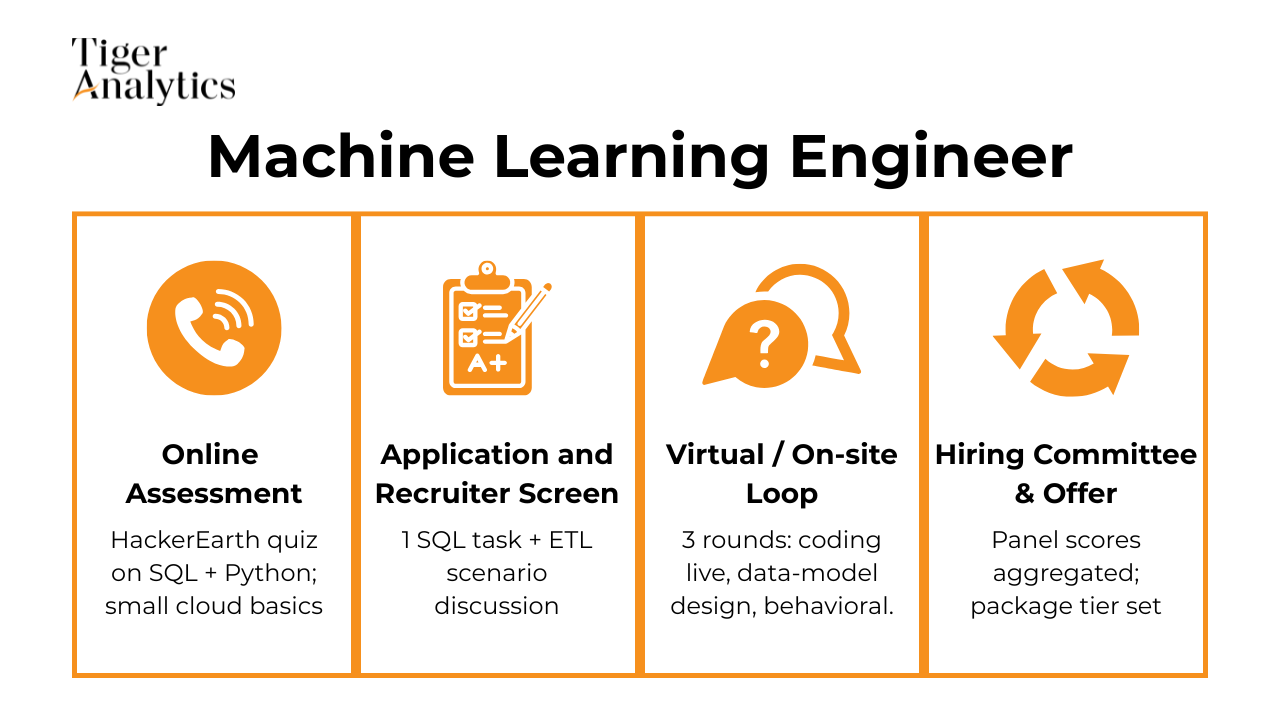

What Is the Interview Process Like for a Machine Learning Engineer Role at Tiger Analytics?

Before diving into practice questions, it helps to understand each stage of the Tiger Analytics interview process for MLEs. This roadmap will let you tailor your preparation to every round.

Application & Recruiter Screen

Your journey begins with a recruiter call focused on your résumé, ML background, and motivation for joining Tiger Analytics. Expect questions about your prior end-to-end ML projects and client engagements.

Online Assessment (HackerEarth)

Next is a 60-minute HackerEarth quiz containing mixed Python and SQL challenges, alongside ML theory questions. This test evaluates your ability to manipulate data, implement algorithms, and reason about model performance.

Technical Phone Screen

In a 45-minute session, you’ll tackle live coding problems and discuss ML fundamentals—such as feature selection methods, evaluation metrics, and overfitting prevention. Be ready to walk through your code and explain design decisions clearly.

Virtual or On-Site Loop

This multi-round loop typically includes:

- Case Study: You’ll present an ML solution to a business problem, discussing trade-offs and impact.

- Live Coding: Hands-on Python or pseudocode exercises.

- ML System Design: Architecting a scalable training and serving pipeline.

- Behavioral: STAR-style questions exploring teamwork, client communication, and rapid iteration.

Hiring Committee & Offer

Interviewers submit feedback within 48 hours, and the hiring committee calibrates scores across levels. Successful candidates receive an offer aligned to their experience and Tiger Analytics’ competitive compensation bands.

Behind the Scenes: All panelists’ feedback is reviewed collectively to ensure consistency and fairness. You’ll typically hear back on next steps within 48 hours of your final interview.

Differences by Level: Senior MLEs (with 5+ years of experience) will face an additional architecture whiteboard session focused on system scalability and production monitoring; juniors often skip this deep-dive.

Now that you know the stages, let’s dive into the actual Tiger Analytics machine learning engineer interview questions you should practice.

What Questions Are Asked in a Tiger Analytics Machine Learning Engineer Interview?

This section outlines the types of prompts you’ll encounter, from coding exercises to system design and culture-fit discussions.

Coding / Technical Questions

These assess your ability to manipulate data pipelines and write production-quality Python. Expect tasks like optimizing in-memory data structures, enforcing object-oriented best practices, and building end-to-end ETL processes. For each exercise, interviewers look for clean, efficient code, clear explanations of algorithmic choices, and thoughtful trade-off analysis. Be prepared to explain why particular data structures or patterns are most appropriate for the problem at hand.

How would you rotate an N × N matrix of random values 90 degrees clockwise in-place?

This coding prompt checks whether you grasp 2-D array indexing, in-place swaps, and time/space complexity trade-offs—skills vital when transforming tensors inside deep-learning pipelines. Interviewers expect you to articulate the layer-by-layer or transpose-and-reverse strategy and analyze why both run in O(N²) time but differ in memory overhead. A crisp explanation of edge cases (non-square input, integer overflow) demonstrates production-mindset. Finally, outlining how you’d unit-test on small matrices shows engineering rigor.

-

Tiger Analytics MLEs often optimize data-prep steps that feed models; this problem gauges comfort with arithmetic series formulas or XOR tricks that avoid extra memory. Explaining both methods—and why they scale for billion-row logs—shows you can choose the simplest performant approach in ETL code. Discussing overflow-safe implementations and how you’d embed the check in Spark or Pandas pipelines highlights practical experience. Clear reasoning around complexity proves you keep latency budgets in mind.

How would you write digit_accumulator to sum every numeric character inside a floating-point string?

Although simple, the task tests string parsing, Unicode awareness, and clean functional style—skills MLEs need when feature-engineering messy text fields. Interviewers listen for attention to corner cases like signs, multiple decimals, or scientific notation. Mentioning regex vs. ordinal checks and benchmarking trade-offs shows depth. Concluding with how you’d wrap the function in a data validation step or integrate it into a preprocessing pipeline demonstrates end-to-end thinking.

-

This mirrors real-world NLP token-frequency tasks. A strong answer walks through normalization (case-folding, punctuation stripping), a hash-map frequency table, and a min-heap or Counter.most_common selection approach. You should articulate the O(M + V log N) complexity (M = tokens, V = unique words) and memory footprint. Citing how you’d stream large corpora or shard counts with MapReduce showcases readiness for production-scale text analytics at Tiger clients.

What logic would you implement to check whether a given string is a palindrome?

Beyond the two-pointer scan, interviewers care about how you handle Unicode, casing, and non-alphanumeric characters—crucial in multilingual consumer data. Comparing slicing, iterative, and deque methods reveals performance intuition. You should also discuss testing strategy and the role such utilities play in data cleaning for NLP tasks. Demonstrating defensive coding (returning False for None, trimming whitespace) underscores your engineering discipline.

-

Tiger values engineers who understand algorithms beneath libraries. Outline data normalization, Euclidean distance computation, vectorization with NumPy, and optimizations like KD-trees for large k. Explain how you’d break ties by recursively reducing k, and how to unit-test correctness against scikit-learn. Finally, reflect on KNN’s scalability limits and where you’d switch to approximate nearest neighbors, showing architectural judgment.

-

This problem probes graph modeling and shortest-path algorithms (BFS) under constrained movement rules, akin to navigating state spaces in reinforcement-learning environments. Interviewers look for a clear mapping of rooms to nodes, doors to directed edges, and queue-based exploration guaranteeing optimality. Discussing time/space complexity (O(R × C)) and pruning strategies demonstrates efficiency awareness. Relating the solution to robot-path planning or game-AI scenarios ties it back to Tiger’s applied ML projects.

ML System-Design Questions

You’ll be asked to architect scalable model-serving solutions on cloud platforms such as AWS or Azure. Topics include designing a feature store, orchestrating retraining pipelines, and implementing A/B or canary deployments to minimize production risk. Interviewers will probe your understanding of latency vs. retraining cadence trade-offs, monitoring strategies for model drift, and how to ensure repeatable, reliable performance at scale.

-

Interviewers want to hear how you chain multiple signals—keyword dictionaries, image-hash matching against known gun shapes, optical-character-recognition on photos, and graph features such as seller history—to create a multi-modal classifier. Discuss building a human-in-the-loop review queue to minimize false positives, plus regular retraining to keep pace with code-word drift. Explain how you’d gather labelled data (partnerships with trust-and-safety teams, synthetic augmentation), handle model explainability for policy auditors, and instrument precision / recall dashboards tuned toward extremely low false-negative rates. Touch on legal privacy limits and the need for regional rule variants to show product-minded awareness.

-

The question probes your end-to-end thinking—from real-time ingestion, sharded text + vision models (e.g., Transformer NER for self-harm phrases, CLIP-based computer vision for graphic imagery), to streaming feature stores and on-call monitoring. You should justify a tiered approach: lightweight keyword and hash filtering first, followed by heavier model inference, and finally a manual escalation layer. Elaborate on policy constraints (child-safety, terrorism lists), data governance, and regional opt-outs to demonstrate regulatory literacy. Mention feedback loops for continuous improvement and A/B testing mitigations to keep latency under user-experience SLAs.

-

A strong answer blends collaborative-filtering embeddings with sequence-aware deep models (e.g., MIND or YouTube DNN) while highlighting scalability tricks like two-stage retrieval + ranking and vector-search acceleration. Discuss user signals (dwell time, likes, freshness, “not interested”), video signals (topic/length), and context (device, time of day) that feed into multi-task loss functions balancing watch time with creator diversity and policy health. Interviewers expect remarks on cold-start handling, feedback delay bias, and mitigating filter bubbles. Finally, outline online/offline evaluation—precision@k, novelty, churn—and guardrails for misinformation or extremist content.

-

Start by describing robust feature engineering—transaction amount, velocity, merchant risk scores—plus temporal cross-validation to respect concept drift across ten years of data. Explain resampling or cost-sensitive learning to counter the % fraud rate, then compare tree ensembles, gradient-boosted models, and deep tabular nets, noting interpretability vs. performance. Tie bias-variance to hyper-parameter tuning: deeper trees reduce bias but raise variance, demanding regularization and out-of-time testing. Finish with real-time inference constraints, feedback loops from charge-backs, and adversarial adaptation by fraudsters to demonstrate operational savvy.

-

This design question looks for segmentation of the problem into forecast → optimization → experimentation layers. Outline an LSTM or Prophet model to predict occupancy and booking horizons per micro-market, then a revenue-management solver that maximizes long-run revenue subject to host constraints and minimum-stay rules. Discuss seasonality, competitor scraping, and elasticity estimation to set price bounds. You must mention fairness (avoiding discriminatory surges), host override UX, and a shadow-pricing A/B test to measure lift. Highlight latency and explainability so hosts trust automated suggestions.

-

Interviewers assess your knowledge of edge-device inference, privacy, and ID management. Propose on-device face embedding computation with secure transmission to a central identity service backed by a feature-store and PKI. Explain role-based access tokens with TTLs for contractors, audit logging, and revocation paths. Cover accuracy tuning (ROC curves, lighting augmentation), spoof-detection, GDPR consent flows, and fallback mechanisms (badge swipe) for model failures. Emphasize scalable enrollment queues and blue-green deployment to minimize downtime.

-

You should outline sources—check-ins, posts, Instagram photos, third-party menus—and how to assemble user × venue embeddings via graph-SAGE or dual-tower models combining text, image, and location signals. Mention contextual re-rankers for time of day and friend preferences. Address experimentation (geo-split roll-outs) and metrics (incremental orders, session length). Flag downsides: privacy perceptions around location inference, filter-bubble cuisine exposure, and potential for unfair small-business ranking. Proposing mitigation—explanations and opt-outs—shows product sensitivity.

What model would you build to power a type-ahead search suggestions?

Explain an inverted-index candidate generator with prefix trees backed by n-gram or neural autocomplete (e.g., Trie-GRU) rerankers. Detail features: query popularity, embeddings of title/genre, personalization via watch-history, and language locale handling. Discuss latency budgets (<50 ms), cache sharding, and stale-ranking refresh cadences. Address evaluation via offline MAP @ K and live acceptance-rate uplift, plus guardrails against spoiler or age-inappropriate suggestions.

-

A complete response covers multimodal feature extraction—scene-change detection, dialogue sentiment, viewer drop-off curves—and labels derived from historical skip-rates or manual annotations. Detail a two-phase approach: candidate spotting (rule-based, low latency) followed by neural ranking (e.g., temporal CNN). Talk about balancing revenue (ad impressions) against engagement (post-break retention) in the loss function. Include edge deployment considerations for streaming devices and an online-learning loop to adjust to new content genres.

If you build a WallStreetBets sentiment-analysis model, what post-deployment issues could surface?

The interviewer is probing model governance. Discuss domain drift (slang evolves), sarcasm misclassification, coordinated manipulation, and exposure to toxic language requiring content filters. Address feedback loops affecting trading volumes, regulatory scrutiny (SEC), and potential amplification of market volatility. Mitigation includes continuous active learning, bias audits, and human oversight on trade-trigger thresholds. Highlight ethical and legal responsibilities when sentiment models may influence financial decisions.

How would you predict numeric movie ratings (1-10) from review text?

Propose a supervised NLP pipeline: text cleaning, sub-word tokenization, and a pretrained transformer fine-tuned with regression head. Explain handling class imbalance, review sarcasm, and multi-sentence context aggregation (CLS token vs. pooling). Cover evaluation (MAE, Spearman rank), inference cost vs. batch scoring, and explainability via SHAP or attention heat-maps to justify scores to content teams. Mention governance for hate-speech or spoilers embedded in reviews.

-

Outline feature engineering (one-hot amenities, geo-hash embeddings, price buckets) and a hybrid model that blends collaborative filtering with content-based similarity. Explain cold-start handling via nearest-neighbor in embedding space and feedback loops from click and inquiry actions. Discuss fairness (avoid steering vulnerable groups), diversity constraints, and ranking metrics like nDCG and long-term conversion. Bringing up explainable filters (pet-friendly, school ratings) shows product-market empathy.

How would you generate Spotify’s “Discover Weekly” playlist for each user?

A good answer combines audio-signal embeddings (MFCC, VGGish), collaborative signals (co-listens), and contextual filters (release recency, genre quotas) inside a multi-objective ranking framework balancing novelty and familiarity. You should describe candidate generation from nearest-neighbor search in embedding space, followed by a learning-to-rank model trained on skip vs. save actions. Cover serving architecture—batch ETL into Redis, fallback rules for new users—and A/B test metrics like long-term retention and artist diversity.

Behavioral / Culture-Fit Questions

Tiger Analytics values client obsession and rapid experimentation. In these rounds, share STAR stories that highlight your collaboration with cross-functional teams, how you iterated quickly on proof-of-concepts, and how you handled feedback under tight deadlines. Demonstrating clear communication, a growth mindset, and a focus on delivering business impact will set you apart.

Describe a data-science project you led end-to-end and the biggest hurdles you faced along the way.

Tiger Analytics looks for MLEs who can shepherd messy, ambiguous business ideas into production models. By asking about obstacles—data sparsity, shifting requirements, pipeline failures—interviewers gauge your resourcefulness, stakeholder management, and grit. Share a concise STAR story that highlights how you diagnosed root causes, balanced model accuracy with delivery timelines, and drove a measurable outcome. Emphasize any creative tooling or automation you introduced, because the firm prizes engineers who raise team velocity.

What techniques have you used to make complex analytics accessible to non-technical stakeholders?

Clients hire Tiger Analytics to unlock value from data, so the firm needs MLEs who translate model outputs into business-friendly insights. Discuss concrete tactics: interactive dashboards, SHAP visualizations, scenario simulators, and plain-language executive summaries. Mention how you adapt explanations to the audience’s statistical literacy and use A/B demos or pilot pilots to build trust. Showing you “close the last mile” between ML and decisions reassures interviewers you can drive adoption, not just code.

If we asked your current manager, what strengths and weaknesses would they highlight?

This question tests self-awareness and coachability—traits Tiger prioritizes in its flat, consulting culture. Offer two or three strengths that map to the MLE role (e.g., feature-engineering creativity, scalable pipeline design, mentoring juniors) and one genuine growth area paired with a concrete improvement plan. Keep the tone balanced and factual; avoid humble-bragging or fatal flaws. Demonstrating reflective learning signals you’ll thrive amid rapid project rotations.

Tell us about a time you struggled to communicate with a stakeholder. How did you bridge the gap?

Consulting work often means aligning data solutions with diverse business owners who may be skeptical or technical novices. Interviewers want evidence you can adjust vocabulary, negotiate scope, and establish shared KPIs. Walk through a scenario where misalignment threatened a deliverable, the listening and reframing tactics you used, and the positive impact on project momentum. Highlight any artifacts you produced—mock-ups, one-pagers, pilot dashboards—to illustrate proactive communication.

Why are you interested in Tiger Analytics and how does this role fit your career path?

The firm expects consultants to articulate a clear motive beyond “I like data.” Show you’ve researched their industry mix (CPG, retail, BFSI), open-source contributions, and culture of rapid prototyping. Tie your answer to the kinds of ML problems they tackle—forecasting, personalization, responsible AI—and how the breadth of client work aligns with your growth goals. A thoughtful response indicates you’ll be motivated across varied engagements.

How do you juggle multiple concurrent deadlines while keeping model quality high?

Tiger projects often overlap and pivot quickly. Explain your prioritization framework (impact vs. effort matrices, sprint planning, time-boxed research spikes) and the automation you rely on—CI/CD, data-quality tests, reusable feature stores—to avoid regression. Mention communication cadences (daily stand-ups, client demos) that surface risks early. The goal is to reassure interviewers you can deliver reliable models without burning out or dropping client commitments.

Describe a situation where model bias emerged after deployment and how you remediated it.

Because Tiger serves regulated domains, leaders want MLEs who embed fairness monitoring. Discuss how you detected drift or disparate impact, the root-cause analysis you ran, and the mitigation—re-sampling, post-processing, feature constraint, or stakeholder education. Close with lessons you built into subsequent projects (e.g., bias dashboards).

Tell us about a time you championed a tooling or process change (e.g., moving to MLflow or adopting feature stores). How did you win adoption across the team?

This probes your influence skills and vision for scalable MLOps. Outline the pain points you spotted, the cost-benefit case you made, pilot metrics you tracked, and how you trained peers. Emphasize measurable improvements—faster experiment turnaround, reduced deployment bugs—that demonstrate leadership beyond individual contributions.

How to Prepare for a Machine Learning Engineer Role at Tiger Analytics

To excel, you should rehearse authentic Tiger Analytics machine learning engineer interview questions while aligning your responses with the firm’s client-centric values.

Study Role & Culture

Begin by mapping past projects to Tiger Analytics’ consulting model. Reflect on times you delivered rapid prototypes and worked closely with clients to gather requirements, then highlight how those experiences translate to their “client-first, rapid-prototype” culture.

Practice Core Question Types

Allocate your preparation time roughly as 40 % coding, 35 % ML system-design, and 25 % behavioral. Drill SQL/Python quizzes, sketch end-to-end training and serving pipelines on a whiteboard, and rehearse STAR narratives to ensure balanced readiness across all rounds.

Think Out Loud

During mock exercises, verbalize your assumptions, ask clarifying questions, and walk interviewers through your reasoning. This transparency helps demonstrate structured problem solving and aligns with Tiger’s emphasis on collaborative innovation.

Brute Force → Optimize

For coding and design prompts, always start with a straightforward solution before iterating to a more optimized, production-ready approach. Outline your baseline, then discuss how you’d improve performance, fault tolerance, or maintainability.

Mock Interviews & Feedback

Partner with peers or former Tiger Analytics engineers to simulate each stage: the coding screen, the system-design whiteboard, and the behavioral loop. Solicit candid feedback on clarity, depth, and pacing to refine your delivery before the real interviews.

Conclusion

Mastering tiger analytics machine learning engineer interview questions by combining technical rigor with client-first storytelling will position you for success. For a deeper dive into Tiger Analytics’ full interview suite, explore our company-wide interview guide, and strengthen your skills through the Modeling & Machine Learning Interview Learning Path.

When you’re ready for realistic practice, book a mock interview with our coaches. Good luck—from everyone at Interview Query, and congratulations on joining the ranks of successful candidates like Keerthan Reddy.