Tiger Analytics Data Analyst Interview Guide (2025)

Introduction

Tiger Analytics data analyst interview questions are designed to evaluate your ability to turn complex datasets into actionable insights that drive business outcomes. In this guide, we’ll walk through each stage of the hiring journey, sample question types, and tips to help you stand out. You’ll learn what to expect—from SQL and Excel assessments to client-facing case studies—and how to showcase both technical acumen and stakeholder empathy.

As a Tiger Analytics data analyst, you’ll partner closely with data engineers and scientists to build dashboards, perform root-cause analyses, and present recommendations to Fortune 500 clients. Tiger Analytics’ culture of “client-first innovation” and flat, autonomous teams empowers analysts to own end-to-end projects and iterate rapidly. With hands-on exposure to real-world data challenges, this role offers a unique blend of technical growth and high-impact delivery.

Role Overview & Culture

A Data Analyst at Tiger Analytics spends their day ingesting raw data from diverse sources, crafting SQL queries and Excel models, and translating findings into clear, visually engaging dashboards. You’ll work in small, cross-functional pods that embrace rapid experimentation, enabling you to pilot new analytical techniques and tools. Regular client interactions ensure your insights directly inform strategic decisions and measurable business improvements. This environment rewards proactive problem-solving and continuous learning.

Why This Role at Tiger Analytics?

Joining Tiger Analytics means deploying analytics solutions to production within weeks, rather than months. You’ll tackle large-scale datasets across industries (retail, healthcare, finance) while benefiting from transparent career paths and competitive compensation packages. The firm’s emphasis on bottom-up innovation allows you to propose and implement process improvements that accelerate client value. Before diving into your prep, let’s explore what the interview process is like for a data analyst role at Tiger Analytics.

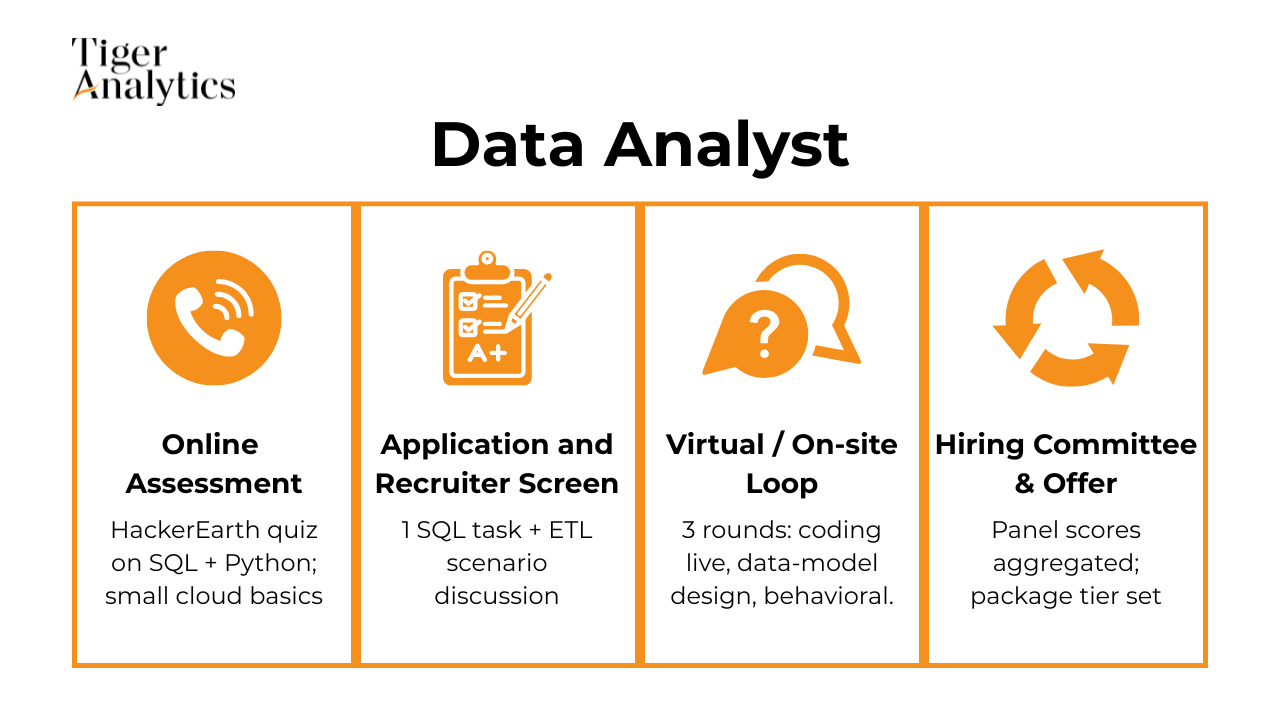

What Is the Interview Process Like for a Data Analyst Role at Tiger Analytics?

A successful candidate navigates a multi-step process designed to assess both technical proficiency and client-facing savvy. Below is an overview of each stage, along with behind-the-scenes insights and level-based variations.

Application & Recruiter Screen

Your resume is reviewed for relevant analytical experience and domain fit, followed by a brief recruiter call to discuss your background, motivations, and salary expectations.

Technical Assessment

This 45-minute online test combines SQL and Excel challenges, focusing on data cleaning, aggregation, and dashboard-style problem solving under time constraints.

Virtual Onsite / Panel

You’ll join a three-round virtual loop: a live SQL coding exercise, a case study where you analyze business metrics and recommend actions, and a behavioral discussion exploring your client engagement and problem-solving approach.

Hiring Committee & Offer

Interview feedback is consolidated within 24 hours and reviewed by a committee. Once approved, you receive a formal offer that includes role details and compensation.

Behind the Scenes

Tiger Analytics prides itself on rapid feedback cycles—most candidates hear back within a day of each interview. The hiring committee calibrates scores against role expectations and ensures consistency across teams before extending offers.

Differences by Level

Junior analysts focus primarily on SQL and Excel proficiency, while senior candidates are expected to present prior work (e.g., dashboards or client presentations) and may face an additional round on stakeholder management and project leadership.

Transitioning into focused practice will help you master the Tiger Analytics data analyst interview questions you’ll encounter at each stage.

What Questions Are Asked in a Tiger Analytics Data Analyst Interview?

In this section, you’ll discover the core question types used to assess both your technical chops and your client-facing analytics skills.

Coding / Technical Questions

To start, candidates are presented with Tiger Analytics data analyst interview questions focused on data manipulation and interpretation. Typical tasks include writing complex SQL queries with multiple joins and window functions, performing quick calculations in Excel or Pandas, and interpreting visualizations to spot trends or anomalies. For each, be prepared to explain why the problem matters (e.g., business impact), how you structured your solution (logic and tools), and walk through a concise example demonstrating your approach.

How would you rotate a square matrix 90° clockwise in-place?

Tiger Analytics likes this classic array-manipulation prompt because it surfaces how you reason about coordinate transforms, index arithmetic, and memory constraints. Interviewers watch for an O(n²) one-pass solution that swaps layers from the outside in, rather than building a second matrix. Explaining edge-case testing—e.g., 1×1 or non-square inputs—demonstrates defensive thinking. Clear variable names and a brief complexity analysis round out a production-ready answer.
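
A minimal Python sketch of the layer-by-layer approach, assuming the matrix arrives as a list of equal-length lists (the function name rotate_clockwise is illustrative). Each layer is rotated with four-way swaps, so extra memory stays O(1) and every cell is touched once.

```python
def rotate_clockwise(matrix: list[list[int]]) -> None:
    """Rotate an n x n matrix 90 degrees clockwise, in place."""
    n = len(matrix)
    # Defensive check: in-place rotation only makes sense for square input.
    if any(len(row) != n for row in matrix):
        raise ValueError("matrix must be square")
    # Walk concentric layers from the outside in.
    for layer in range(n // 2):
        first, last = layer, n - 1 - layer
        for i in range(first, last):
            offset = i - first
            top = matrix[first][i]                                      # save top
            matrix[first][i] = matrix[last - offset][first]             # left -> top
            matrix[last - offset][first] = matrix[last][last - offset]  # bottom -> left
            matrix[last][last - offset] = matrix[i][last]               # right -> bottom
            matrix[i][last] = top                                       # top -> right
```

Comparing the result against a reference such as [list(row) for row in zip(*original[::-1])] makes a quick unit test for 1×1, 2×2, and larger inputs.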

This exercise (finding the second-highest salary in each department) checks your window-function fluency and your understanding of the NULL and tie scenarios that break naïve nested MAX() subqueries. Tiger projects often require ranked roll-ups, so interviewers look for DENSE_RANK() or ROW_NUMBER() with a filter on rank = 2, plus an explanation of why DENSE_RANK() is safer when the top salary is tied. Discussing an index on (department_id, salary DESC) shows you think about performance. Mention how you’d adapt the pattern for the N-th highest salary to hint at reusability.
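
To make the ranking pattern concrete, here is a sketch that runs the query through Python’s built-in sqlite3 module (SQLite supports window functions from version 3.25). The employees table, its columns, and the helper name nth_highest_salaries are hypothetical. Because DENSE_RANK() gives tied salaries the same rank, filtering on rank n returns the n-th highest distinct salary in each department, and departments with fewer distinct salaries simply drop out.

```python
import sqlite3

# Rank salaries within each department; ties share a rank under DENSE_RANK().
QUERY = """
WITH ranked AS (
    SELECT department_id,
           name,
           salary,
           DENSE_RANK() OVER (
               PARTITION BY department_id ORDER BY salary DESC
           ) AS rnk
    FROM employees
)
SELECT department_id, name, salary
FROM ranked
WHERE rnk = ?
ORDER BY department_id, name
"""


def nth_highest_salaries(rows, n=2):
    """rows: (name, department_id, salary) tuples; returns matches for rank n."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE employees (name TEXT, department_id INTEGER, salary INTEGER)"
        )
        # Composite index mirroring the PARTITION BY / ORDER BY columns.
        conn.execute(
            "CREATE INDEX idx_dept_salary ON employees (department_id, salary DESC)"
        )
        conn.executemany("INSERT INTO employees VALUES (?, ?, ?)", rows)
        return conn.execute(QUERY, (n,)).fetchall()
    finally:
        conn.close()
```

With rows ("Ana", 1, 100), ("Ben", 1, 100), and ("Cy", 1, 90), department 1 returns Cy for n = 2; ROW_NUMBER() would instead return whichever of Ana or Ben happened to sort second, which is exactly the tie trap described above.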

This seemingly simple puzzle, finding the one number missing from a sequence, gauges your grasp of arithmetic series versus XOR tricks, both useful for checksum validation in streaming ETL jobs. Interviewers note whether you derive the formula n*(n+1)/2 - sum(nums) and discuss integer-overflow safeguards. If you bring up bitwise methods and compare trade-offs, you score “depth” points. Relating the idea to data-integrity audits shows domain relevance.
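
A small sketch of both approaches, assuming the common framing where nums holds the distinct integers 0 to n with exactly one value absent (so len(nums) == n); the function names are illustrative. Python integers don’t overflow, so the overflow discussion matters mainly when porting to fixed-width types, and the XOR variant sidesteps it entirely.

```python
from functools import reduce
from operator import xor


def missing_by_sum(nums: list[int]) -> int:
    """Arithmetic-series approach: expected total minus actual total."""
    n = len(nums)                      # values span 0..n with one gap
    return n * (n + 1) // 2 - sum(nums)


def missing_by_xor(nums: list[int]) -> int:
    """Bitwise approach: x ^ x == 0, so every present value cancels out."""
    n = len(nums)
    return reduce(xor, range(n + 1), 0) ^ reduce(xor, nums, 0)
```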

Consultants sometimes must implement statistical primitives, such as turning uniform random numbers into standard-normal samples, inside constrained client stacks. Expect to lay out the Box–Muller or Ziggurat method and defend your choice in terms of speed and tail accuracy. The follow-up often probes how performance holds up as sample counts grow and how you’d unit-test distributional properties (e.g., with a Kolmogorov–Smirnov test). Mention seeding for reproducibility to score craftsmanship points.
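
A minimal Box–Muller sketch, assuming only a uniform generator is available (here random.Random, seeded for reproducibility); the function name box_muller is illustrative. In everyday code, random.gauss or NumPy’s Generator.standard_normal would normally be preferred.

```python
import math
import random


def box_muller(n: int, seed: int = 0) -> list[float]:
    """Draw n standard-normal samples from pairs of uniform draws."""
    rng = random.Random(seed)          # seeded so results are reproducible
    samples: list[float] = []
    while len(samples) < n:
        u1 = 1.0 - rng.random()        # in (0, 1], so log(u1) is always defined
        u2 = rng.random()
        r = math.sqrt(-2.0 * math.log(u1))
        theta = 2.0 * math.pi * u2
        # Each pair of uniforms yields two independent N(0, 1) values.
        samples.append(r * math.cos(theta))
        samples.append(r * math.sin(theta))
    return samples[:n]
```

A quick sanity check is that the sample mean and variance land near 0 and 1; a formal test such as Kolmogorov–Smirnov (for example scipy.stats.kstest against "norm") is the stronger check.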

This window-function brain-teaser (finding the pair of records with the closest scores) stresses ordered comparisons and tie-breaking logic, skills vital for cohort analyses. A clean answer uses LAG() to compute adjacent differences after sorting, then a second step to filter on the MIN(diff). Detailing composite indexes on (score, name) and why they matter for million-row tables shows you’re performance-minded. Always explain how you’d validate correctness with a small, hand-built test dataset.

Write a function that returns the maximum value in a list, or None if the list is empty.

The prompt is trivial, but hiring managers watch for edge-case discipline (empty list, non-numeric values) and linear-time logic. They may pivot into “how would you parallelize this over distributed shards?” to test scalability awareness. Bring up Python’s max() versus a manual scan to discuss trade-offs in clarity versus control. Treat the prompt as an opening to reveal your coding hygiene.
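
A sketch of the manual scan plus the shard-level variant the follow-up hints at; max_or_none and sharded_max are hypothetical names, and the “shards” are plain Python lists standing in for distributed partitions.

```python
from typing import Iterable, Optional, Union

Number = Union[int, float]


def max_or_none(values: Iterable[Number]) -> Optional[Number]:
    """Single linear scan; returns None when there is nothing to compare."""
    best: Optional[Number] = None
    for v in values:
        # Fail loudly on non-numeric input instead of comparing mixed types.
        if not isinstance(v, (int, float)):
            raise TypeError(f"non-numeric value: {v!r}")
        if best is None or v > best:
            best = v
    return best


def sharded_max(shards: Iterable[Iterable[Number]]) -> Optional[Number]:
    """Map step: max of each shard. Reduce step: max of the non-empty partials."""
    partials = (max_or_none(shard) for shard in shards)
    return max_or_none(p for p in partials if p is not None)
```

Python’s built-in max(values, default=None) covers the same contract in one call; the manual version earns its keep when you need custom validation or want to show the map/reduce split.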

Tiger engagements frequently require hash-based dedup and match operations; this question measures your instinct to trade space for speed via a dictionary lookup. Interviewers verify you avoid nested loops and handle duplicates or self-pairing correctly. Discussing memory ceilings and streaming adaptations shows system-design maturity. Annotate complexity (time O(n), space O(n)) to demonstrate rigor.
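
Assuming the prompt is the classic pair-sum (“two-sum”) task, returning the indices of two distinct elements that add up to a target, a single-pass dictionary sketch looks like this; the name and the return convention are illustrative.

```python
from typing import Optional


def two_sum(nums: list[int], target: int) -> Optional[tuple[int, int]]:
    """Return indices (i, j), i < j, with nums[i] + nums[j] == target, else None."""
    seen: dict[int, int] = {}          # value -> index of its first occurrence
    for j, x in enumerate(nums):
        complement = target - x
        # Look up before inserting, so an element never pairs with itself.
        if complement in seen:
            return seen[complement], j
        seen.setdefault(x, j)
    return None
```

Duplicates are handled naturally: for [3, 3] with target 6, the second 3 finds the first one already in the dictionary.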

The optimal approach, sorting followed by a single pass over adjacent elements, tests your algorithmic efficiency and sorting-cost intuition. You’ll earn extra credit by clarifying how ties are handled when several pairs share the minimum difference and by articulating memory usage. Real-world parallel: finding the closest sensor readings in time-series ETL. Explaining why a brute-force O(n²) scan becomes impractical on large inputs cements your senior-level perspective.
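
A sort-then-scan sketch, assuming the task is to return every pair whose absolute difference equals the minimum (keeping all tied pairs, in ascending order), which is one way to settle the tie question; closest_pairs is an illustrative name.

```python
def closest_pairs(nums: list[int]) -> list[tuple[int, int]]:
    """All pairs (a, b), a <= b, whose difference equals the minimum gap."""
    if len(nums) < 2:
        return []
    ordered = sorted(nums)             # O(n log n); dominates the runtime
    # After sorting, the closest pair must be adjacent, so one pass suffices.
    gaps = [b - a for a, b in zip(ordered, ordered[1:])]
    best = min(gaps)
    return [(ordered[i], ordered[i + 1]) for i, g in enumerate(gaps) if g == best]
```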

List all prime numbers ≤ N; return an empty list if N < 2.

Tiger teams working on analytics platforms often need one-off math utilities. Interviewers expect you to cite the Sieve of Eratosthenes, which runs in O(N log log N), and to mention optimizations such as skipping even numbers. Discuss memory trade-offs for large N and how you’d stream results (for example, with a segmented sieve) without holding the full sieve in memory. Touch on unit tests, such as checking prime counts against known values (there are 25 primes below 100), to show a focus on reliability.
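
A compact sieve sketch; primes_up_to is an illustrative name, and the even-skipping and segmented variants mentioned above are left out to keep the core idea visible.

```python
def primes_up_to(n: int) -> list[int]:
    """Sieve of Eratosthenes: all primes <= n, or [] when n < 2."""
    if n < 2:
        return []
    is_prime = bytearray([1]) * (n + 1)    # one byte per candidate
    is_prime[0] = is_prime[1] = 0
    p = 2
    while p * p <= n:
        if is_prime[p]:
            # Start at p*p: smaller multiples were crossed off by smaller primes.
            is_prime[p * p :: p] = bytes(len(range(p * p, n + 1, p)))
        p += 1
    return [i for i, flag in enumerate(is_prime) if flag]
```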

Create a digit_accumulator function that sums every digit (ignoring “.”) in a numeric string.

They’re testing string-parsing agility and input validation (minus signs, scientific notation). Mention that a generator expression or list comprehension keeps the code concise and readable. Tie the exercise to checksum calculations on ingested CSV columns to illustrate practical relevance. Complexity is O(len(s)), which is worth noting for completeness.
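
A sketch under one possible validation policy (an assumption, not the only reasonable one): a leading minus sign and “.” are skipped, and any other non-digit character, including the “e” of scientific notation, raises an error.

```python
def digit_accumulator(s: str) -> int:
    """Sum the decimal digits of a numeric string, ignoring '.' and a leading '-'."""
    body = s[1:] if s.startswith("-") else s   # assumed policy: sign is ignored
    total = 0
    for ch in body:
        if ch == ".":
            continue                           # decimal point contributes nothing
        # Explicit ASCII check; str.isdigit() would also accept characters like '²'.
        if ch not in "0123456789":
            raise ValueError(f"unexpected character {ch!r} in {s!r}")
        total += int(ch)
    return total
```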

Return the N most frequent words in a paragraph and their counts; what’s your algorithm’s Big-O?

Data engineers at Tiger routinely build word-level aggregations; this question blends data structures with runtime analysis. The ideal solution uses a hash map for counts, then a heap (or Counter.most_common) for the top-N selection, yielding O(W + M log N) time, where W is the total number of words and M the number of distinct words. Interviewers look for lowercase normalization, punctuation stripping, and tie-breaking rules. Calling out memory versus speed trade-offs shows system sense.
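
A sketch of the count-then-heap approach; the tokenizing regex and the alphabetical tie-break are assumptions worth confirming with the interviewer, and top_words is an illustrative name.

```python
import heapq
import re
from collections import Counter

# Lowercase words, keeping internal apostrophes ("don't") and dropping punctuation.
WORD_RE = re.compile(r"[a-z0-9]+(?:'[a-z0-9]+)*")


def top_words(paragraph: str, n: int) -> list[tuple[str, int]]:
    """Return the n most frequent words and their counts.

    Ties are broken alphabetically, an assumed rule worth confirming.
    """
    counts = Counter(WORD_RE.findall(paragraph.lower()))   # O(W) for W words
    # Heap-based selection over the M distinct words: O(M log n).
    return heapq.nsmallest(n, counts.items(), key=lambda kv: (-kv[1], kv[0]))
```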

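This puzzle (finding the median of a list in which one value makes up more than half of the entries) gauges creative reasoning under constraints, which is common when optimizing aggregations on large fact tables. Candidates typically reason that the majority element must be the median: once the list is sorted, a value occupying more than half the positions necessarily covers the middle position. A single Boyer-Moore voting pass to find that element therefore suffices, with no sort required. Demonstrating the proof by contradiction impresses interviewers. Relating the trick to finding dominant event types in log streams connects the dots to real projects.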
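
A Boyer-Moore voting sketch, assuming the more-than-half guarantee holds (majority_median is an illustrative name). For even-length lists both middle values equal the majority value, so returning it is still the median.

```python
def majority_median(nums: list[int]) -> int:
    """Median of a list where one value fills more than half of the positions.

    Boyer-Moore voting: O(n) time, O(1) extra space, no sorting.
    """
    if not nums:
        raise ValueError("empty input has no median")
    candidate, count = nums[0], 0
    for x in nums:
        if count == 0:
            candidate = x              # adopt a new candidate when votes cancel out
        count += 1 if x == candidate else -1
    # The >50% guarantee is assumed; without it, verify with a second counting pass.
    return candidate
```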

Counting how many triplets in an array can form a triangle probes combinatorial reasoning and two-pointer optimization (sorting plus the triangle inequality). Explain why naive triple-nested loops are O(n³) and how sorting lets you achieve O(n²). Discuss treating duplicate values as distinct sides when the prompt calls for it, and potential integer-overflow checks. Mapping this to manufacturing tolerance checks shows business empathy.
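
A sort-plus-two-pointers sketch; it counts index triplets, so equal values at different positions are treated as distinct sides (an assumption about the prompt), and count_triangles is an illustrative name.

```python
def count_triangles(sides: list[int]) -> int:
    """Count index triplets satisfying the triangle inequality, in O(n^2)."""
    a = sorted(sides)
    count = 0
    # Fix the largest side a[k]; two pointers search pairs among a[0..k-1].
    for k in range(len(a) - 1, 1, -1):
        lo, hi = 0, k - 1
        while lo < hi:
            if a[lo] + a[hi] > a[k]:
                # Every index in [lo, hi) also pairs with a[hi], since a is sorted.
                count += hi - lo
                hi -= 1
            else:
                lo += 1
    return count
```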

Implementing k-nearest neighbors from scratch reflects how Tiger projects sometimes embed custom models in production codebases without heavy libraries. Interviewers assess your understanding of vector math, efficient distance computation, and careful tie-breaking logic. Articulate the O(N·D) cost per prediction (N training points, D features) and memory considerations for large datasets. Mention acceleration strategies (KD-trees, approximate nearest neighbors) to hint at scalability know-how.
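
A from-scratch kNN classifier sketch using only the standard library; knn_predict is a hypothetical name, Euclidean distance is assumed, and the tie rule (prefer the tied label with the nearest member) is one choice among several.

```python
import heapq
import math
from collections import Counter
from typing import Hashable, Sequence


def knn_predict(
    train_X: Sequence[Sequence[float]],
    train_y: Sequence[Hashable],
    query: Sequence[float],
    k: int = 3,
) -> Hashable:
    """Classify `query` by majority vote among its k nearest training rows."""
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training rows")
    # Distance to every row is O(N * D); nsmallest keeps selection at O(N log k).
    neighbors = heapq.nsmallest(
        k,
        ((math.dist(x, query), label) for x, label in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )
    votes = Counter(label for _, label in neighbors)
    top = max(votes.values())
    # Assumed tie rule: among tied labels, prefer the one with the closest member.
    for _, label in neighbors:          # neighbors are sorted nearest-first
        if votes[label] == top:
            return label
```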

This grid path-finding task measures your grasp of BFS for unweighted graphs and handling dead-ends. Interviewers expect you to justify BFS over DFS for shortest paths and to discuss visitation marking to avoid cycles. Explaining how you’d serialize huge grids for memory efficiency and test impossible cases displays production readiness.
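
A BFS sketch, assuming the grid is a list of strings where "#" marks a wall and moves are 4-directional (shortest_path_length is an illustrative name). Because BFS explores in order of distance, the first time the goal is dequeued is the shortest path.

```python
from collections import deque


def shortest_path_length(grid: list[str], start: tuple[int, int],
                         goal: tuple[int, int]) -> int:
    """Fewest 4-directional moves from start to goal; '#' marks a wall.

    Returns -1 when the goal is unreachable (the impossible-case test).
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] == "#" or grid[goal[0]][goal[1]] == "#":
        return -1
    queue = deque([(start, 0)])
    visited = {start}                   # mark on enqueue so no cell is queued twice
    while queue:
        (r, c), dist = queue.popleft()  # FIFO order: first arrival is shortest
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in visited):
                visited.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return -1
```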

Metrics & Case-Study Questions

Next, interviewees tackle metrics-driven case studies: building and explaining funnel metrics, designing A/B-test readouts, or calculating ROI for a marketing campaign. Strong candidates use prioritization frameworks like ICE (Impact, Confidence, Ease) or PACE (Prioritize, Act, Check, Evaluate) to justify which metrics to track and how to interpret results for stakeholders.

Start by checking that the experiment met its sample-size requirement and ran for the full planned duration; peeking or early stopping can inflate false-positive risk. Validate instrumentation (e.g., bot traffic, duplicate cookies) and confirm that users were randomized cleanly with balanced covariates. Examine the multiple-testing context (were other variants or metrics also queried?) and compute confidence intervals to gauge practical rather than just statistical significance. Finally, replicate the test or run a hold-back to make sure the borderline p = 0.04 result isn’t a fluke before rollout.

A difference-in-differences or stratified A/B rollout lets you track adoption, trade frequency, and account-funding growth versus matched controls. You’d monitor unit economics such as order-routing cost per trade, average revenue per user, and portfolio diversification indices. Cohort retention curves reveal whether fractional buyers stay more active or simply shift volume from whole-share orders. Because feature uptake is optional, instrumentation must separate causal uplift from self-selection by propagating treatment flags through the event pipeline.

Use a stepped-wedge or region-stagger rollout to create quasi-experimental contrasts over time. Apply causal-impact or synthetic-control models that predict what engagement would have been absent the feature, controlling for seasonality and traffic trends. Segment by sender/recipient activity level to check heterogeneous effects—does the dot spur replies from dormant users? Complement quantitative analysis with funnel event logging (hover, message started) to attribute lift specifically to visibility of the indicator.

Frame hypotheses around retention, daily sessions, and incremental ad or interchange revenue. Model the TAM by estimating overlap with Venmo/Cash App users and latent demand in unbanked segments. Prototype in a pilot market, then compare cohort stickiness, cross-sell into Marketplace, and uplift in social interactions versus a matched geo without the feature. Be sure to factor in fraud-loss forecasts and regulatory compliance costs, because the feature only merits launch if its NPV stays positive once that operational risk is priced in.

You’ll bucket results by rating (1–5), compute CTR aggregates per bucket, and attach confidence intervals using Wilson or Agresti–Coull formulas. Including position as a covariate in a regression or stratifying by top-N slots guards against rank bias. Interviewers expect a discussion on Simpson’s paradox: high-rated results might cluster lower in the list, obscuring the true relationship unless position is controlled. Explaining how to surface actionable cut-offs (e.g., ratings < 3 hurt CTR) shows product intuition.
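
A sketch of the bucketing step with Wilson intervals; the event format of (rating, clicked) pairs and the helper names are assumptions, and position is deliberately not controlled here, so stratifying by slot or adding a regression would sit on top of this.

```python
import math
from collections import defaultdict


def wilson_interval(clicks: int, impressions: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion; z = 1.96 gives roughly 95% coverage."""
    if impressions == 0:
        return (0.0, 1.0)               # no data: completely uninformative
    p = clicks / impressions
    denom = 1 + z**2 / impressions
    center = (p + z**2 / (2 * impressions)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / impressions + z**2 / (4 * impressions**2))
    return (center - half, center + half)


def ctr_by_rating(events):
    """events: iterable of (rating, clicked) pairs with clicked in {0, 1}.

    Returns {rating: (ctr, (low, high))}. Position is NOT controlled here.
    """
    totals = defaultdict(lambda: [0, 0])  # rating -> [clicks, impressions]
    for rating, clicked in events:
        totals[rating][0] += clicked
        totals[rating][1] += 1
    return {r: (c / n, wilson_interval(c, n)) for r, (c, n) in sorted(totals.items())}
```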

For skewed or heavy-tailed metrics (revenue per fleet), switch to non-parametric tests like Mann-Whitney U or bootstrap the mean/median difference with thousands of resamples. Quantile regression can capture impact on the typical fleet while being robust to outliers. Bayesian credible intervals on the uplift provide an intuitive probability of superiority. You should also comment on transforming the metric (e.g., log-scale) and verifying equal variance assumptions before drawing conclusions.
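
A percentile-bootstrap sketch for the difference in medians, assuming each arm is a plain list of per-fleet revenue values; the function name, the default resample count, and the seed are illustrative choices.

```python
import random
import statistics


def bootstrap_median_diff(control, treatment, n_resamples=5000, alpha=0.05, seed=0):
    """Percentile-bootstrap CI for median(treatment) - median(control)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_resamples):
        # Resample each arm with replacement, preserving its own size.
        c = rng.choices(control, k=len(control))
        t = rng.choices(treatment, k=len(treatment))
        diffs.append(statistics.median(t) - statistics.median(c))
    diffs.sort()
    lo = diffs[int((alpha / 2) * n_resamples)]
    hi = diffs[int((1 - alpha / 2) * n_resamples) - 1]
    observed = statistics.median(treatment) - statistics.median(control)
    return observed, (lo, hi)
```

If the interval excludes zero, the uplift in the typical fleet is unlikely to be noise; swapping statistics.median for statistics.mean gives the mean-difference variant.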

Adopt cluster randomization at the social-graph level—e.g., ego-networks or strongly connected components—to minimize spill-over when one user’s story tags friends in another bucket. Measure both direct effects (engagement by treated users) and indirect effects (their friends’ viewing time). Include guardrail metrics like overall feed load to catch negative externalities. If clusters become too large, consider two-stage randomization with exposure modeling rather than simple assignment.

Which analyses would you run to judge whether opening DMs to third-party businesses is beneficial?

First, quantify potential lift in time-spent and retention against risks of spam or user churn. Pilot with a handful of vetted partners, logging opt-in rates, block/unfollow events, purchase conversions, and response latency. Compute net-promoter-score deltas via surveys to capture sentiment. Scenario-plan server-load costs and content-moderation overhead; if incremental revenue per active user exceeds these, the idea merits scaling.

How do you evaluate a platform’s feed-ranking when some engagement metrics rise but others fall?

Define a north-star composite—quality session time or downstream job applies—then instrument a causal DAG to see how likes, dwell, and shares contribute. Use a multi-objective optimization framework (Pareto frontier) to visualize trade-offs and pick an operating point. A/B test ranker tweaks while running regression TMLE to de-bias from presentation order. If metrics conflict, dig into content-type segments; rising clicks yet falling comments might indicate click-bait.

Recommend a multi-arm bandit or Thompson-sampling scheme that dynamically shifts spend from under-performing channels to winners while preserving exploration. Set conversion value (LTV) as the reward and use hierarchical priors to share strength across similar ad groups. Impose spend-saturation caps and latency windows since some channels (mail) have delayed conversions. Present how you’d monitor incremental lift versus last-touch attribution to convince finance of ROI validity.
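
A simplified sketch of a batched Thompson-sampling allocation: each channel’s budget share approximates the posterior probability that it has the best conversion rate. It assumes binary conversions with independent Beta(1, 1) priors rather than the LTV reward and hierarchical priors described above, and the function name and channel data are hypothetical.

```python
import random


def thompson_allocate(stats, budget, draws=1000, seed=0):
    """Split `budget` across channels in proportion to Thompson-sampling wins.

    stats: {channel: (conversions, trials)}. Each round samples a plausible
    conversion rate per channel from its Beta posterior and credits the winner.
    """
    rng = random.Random(seed)
    wins = {ch: 0 for ch in stats}
    for _ in range(draws):
        sampled = {
            ch: rng.betavariate(1 + conv, 1 + trials - conv)  # Beta(1, 1) prior
            for ch, (conv, trials) in stats.items()
        }
        wins[max(sampled, key=sampled.get)] += 1
    # Share of wins approximates P(channel is best); exploration stays alive
    # because uncertain channels still win some draws.
    return {ch: budget * w / draws for ch, w in wins.items()}
```

Spend caps and delayed-conversion windows would be applied on top of these raw shares before any money moves.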

Build a bottom-up TAM and funnel model: acquisition enlarges the top, ranking optimizes mid-funnel retention, and creator tools enrich content supply. Simulate DAU uplift by applying historical elasticities (e.g., 1 % more quality videos raises session time X%). Estimate engineering cost, risk, and time-to-impact to compute marginal DAU per dev-week. Prioritize the initiative with the highest ROI, validating with small-scale experiments or counterfactual modeling.

Hypotheses include an influx of lurker users diluting averages, a shift toward passive media (video views), or spam filtering removing low-quality comments. Pull cohort curves to see whether legacy users are becoming less engaged or new users comment less from the outset. Check the ratio of posts to comments, comment length, and device mix (mobile vs. desktop). A decomposition analysis helps pinpoint whether the issue is behavioral or demographic.

Which live metrics reveal ride-sharing demand and how do you decide when demand exceeds supply?

Track real-time request-to-supply ratio, surge-price multipliers, pickup-ETA distributions, and abandonment rates. High unfulfilled-request percentage and sharply rising ETAs serve as supply-gap signals. Set thresholds through historical analysis of rider churn versus driver utilization—e.g., if median ETA > 12 minutes, satisfaction drops 15 %. Feed these triggers into dynamic incentives for driver dispatch or pricing algorithms.

Explain two perspectives. Using observed tenure, LTV = monthly ARPU × average lifespan = $100 × 3.5 = $350. Using churn, the expected lifespan is ≈ 1/churn = 10 months, which implies a much higher LTV; the gap versus the observed 3.5-month tenure highlights why steady-state assumptions matter. Discuss gross-margin adjustments and discount rates for a fuller formula. Clarify how improving retention by one percentage point outperforms an equivalent price hike, which motivates retention work.
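
The two perspectives written out, using the figures implied above ($100 monthly ARPU, 10 % monthly churn c, 3.5-month observed tenure T̄). The third line is a common fuller form, assuming a gross margin m, a monthly discount rate d, revenue booked at the end of each month, and survival to month t of (1 − c)^(t−1):

```latex
\begin{aligned}
\text{LTV}_{\text{tenure}} &= \text{ARPU} \times \bar{T} = \$100 \times 3.5 = \$350 \\
\text{LTV}_{\text{churn}} &= \text{ARPU} \times \frac{1}{c} = \frac{\$100}{0.10} = \$1{,}000 \\
\text{LTV}_{\text{margin, discount}} &= \sum_{t=1}^{\infty} \frac{m \cdot \text{ARPU}\,(1-c)^{t-1}}{(1+d)^{t}} = \frac{m \cdot \text{ARPU}}{c + d}
\end{aligned}
```

With m = 1 and d = 0 the third line reduces to the second. Under the churn model, cutting churn from 10 % to 9 % lifts LTV from $1,000 to about $1,111 (roughly 11 %), while a 1 % price increase lifts it by only 1 %, which is the retention argument in numbers.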

Behavioral / Culture-Fit Questions

Finally, expect questions probing your client-communication narratives, ownership mindset, and iterative delivery examples. Use the STAR method—Situation, Task, Action, Result—to structure stories that highlight how you collaborated with clients, drove insights to action, and refined analyses based on feedback.

Hiring managers want evidence that you can keep momentum when datasets are dirty, stakeholders go dark, or scope suddenly shifts. Walk through the situation, the task, the stubborn obstacles, and the specific analysis, automation, or relationship-building you used to unblock progress. Quantify the project’s impact so the panel sees you can rescue value, not just complete tasks. Tiger Analytics values consultants who stay calm under ambiguity and guide clients to solutions, so emphasize resilience and proactive communication.

A core part of the analyst role is translating statistical outputs into decisions executives can act on. Interviewers listen for layered storytelling: choosing the right grain of detail, using interactive dashboards, analogies, or data-walk sessions instead of dense SQL. Mention moments when clearer visuals or stakeholder workshops flipped skepticism into buy-in. Showing you can adapt your narrative style to audiences of varying literacy signals the client-facing polish Tiger expects.

The team wants honest self-reflection paired with a growth mindset, not perfection. Choose strengths that fit consulting (e.g., speed to insight, facilitation) and weaknesses you’ve already begun to mitigate through courses, peer reviews, or new habits. Connecting feedback loops to measurable improvement proves you’re coachable in Tiger’s flat, feedback-heavy culture. Avoid generic clichés; instead anchor each point with a concrete example.

Analysts at Tiger juggle clients in different time zones and business functions, so misalignment happens. Interviewers want to hear that you diagnose root causes (conflicting KPIs, unmet expectations) and reopen channels through structured updates, empathy, and data demos. Emphasize how you verified shared definitions and secured renewed commitment, then note the positive project outcome. This showcases both interpersonal savvy and project-management discipline.

Expect follow-ups on your knowledge of Tiger’s domain focus (CPG, retail, BFSI) and its mix of advanced analytics plus engineering. Craft an answer that links your past wins to their consulting model — perhaps rapid prototype-to-production cycles or storytelling for C-suite workshops. Interviewers gauge how much homework you’ve done and whether your motivations align with their entrepreneurial, high-ownership environment. Concrete examples of past consulting or cross-industry adaptability strengthen credibility.

How do you keep multiple ad-hoc requests and long-term dashboards on track without missing deadlines?

Detail your prioritization framework: effort-versus-impact matrices, stakeholder SLAs, or agile sprint planning. Discuss tooling such as Jira, Kanban boards, or automated data-quality alerts that free cognitive load. Closing with an anecdote where you renegotiated scope, delegated, or built reusable code shows you protect delivery quality under pressure. Tiger looks for analysts who self-manage in fast-changing client environments.

Describe a situation where you challenged a senior client’s preferred metric and successfully shifted the discussion to a more reliable KPI.

Consultants often encounter vanity metrics; Tiger wants proof you can diplomatically push back with statistical rigor. Explain the context, the alternative metric you proposed, how you modeled its business relevance, and the resulting decision improvement. Demonstrating principled persuasion shows leadership potential even in an individual-contributor role.

Tell us about a time you spotted an ethical or privacy risk in a dataset or analysis and what steps you took to mitigate it.

With clients spanning healthcare, finance, and retail, Tiger emphasizes responsible data use. Share how you identified the risk (e.g., re-identification, proxy bias), involved legal or governance teams, and adjusted the pipeline or model. Highlighting proactive stewardship convinces interviewers you’ll safeguard both client trust and Tiger’s reputation.

How to Prepare for a Data Analyst Role at Tiger Analytics

Preparation is key to standing out in the Tiger Analytics process. Here are targeted strategies to help you excel:

Study the Role & Culture

Familiarize yourself with Tiger’s consulting model by mapping past projects to the end-to-end analytics lifecycle they value—data ingestion, analysis, and client presentation—demonstrating a client-first mindset.

Practice Common Question Types

Allocate your practice time roughly as: 50 % SQL coding challenges, 30 % analytics case studies (funnel analysis, A/B tests), and 20 % behavioral stories centered on client impact.

Think Out Loud & Ask Clarifying Questions

Verbalize your thought process clearly and ask questions to ensure you understand business context and requirements before diving into the solution.

Brute Force, Then Optimize

Start with a working solution—like a straightforward SQL query—then iterate to improve performance or readability, showcasing your optimization skills and clarity in data storytelling.

Mock Interviews & Feedback

Time-box SQL exercises to under 20 minutes and run through case studies with peers or mentors, capturing feedback on both your technical answers and how you communicate insights.

FAQs

What Is the Average Salary for a Data Analyst at Tiger Analytics?

Salaries for data analysts (and data engineers) at Tiger Analytics vary by level and location. Base, bonus, and stock components scale from entry-level through senior positions, with a premium for US-based roles. When negotiating, highlight your detailed technical and client work.

How Many Rounds Are in the Interview?

Most candidates go through 3–4 stages: recruiter screen, technical assessment, virtual panel, and committee review. Senior roles may add a deeper case-study presentation or leadership interview.

Where Can I Read More Discussion Posts on Tiger Analytics Data Analyst Roles?

Check out our Interview Query community threads for firsthand experiences and tips from candidates who’ve walked this path.

Are Data Analyst Job Postings for Tiger Analytics Available on Interview Query?

You can view current openings and set up alerts on our job board to be notified the moment new roles are posted.

Conclusion

Mastering Tiger Analytics data analyst interview questions and aligning your preparation with the firm’s client-centric culture will give you the confidence to navigate each stage. For a comprehensive view, explore our broader Tiger Analytics interview overview and related analyst guides. To sharpen your skills further, consider scheduling a mock interview, joining our newsletter or webinar series, and diving into the Data Analytics Learning Path.

Read how Simran Singh prepared and landed her role. Good luck—your next opportunity to impact Fortune 500 clients awaits!