Tiger Analytics Software Engineer Interview Questions (2025 Guide)

Introduction

Tiger Analytics software engineer interview questions are designed to evaluate your full-stack development skills, algorithmic problem solving, and system-design acumen. In the role, you’ll collaborate with data scientists to deploy ML pipelines and build production-grade micro-services that power analytics platforms for Fortune 500 clients.

Mastering these Tiger Analytics software engineer interview questions means understanding Tiger’s rapid-prototyping culture, client-first mindset, and distributed-team model. With the right preparation, you’ll be ready to showcase both your technical chops and your ability to drive business impact.

Role Overview & Culture

As a Software Engineer at Tiger Analytics, you’ll spend your days architecting and maintaining scalable data platforms, authoring micro-services, and crafting client-facing applications. You’ll partner closely with data scientists to operationalize machine-learning models, ensuring that advanced analytics move swiftly into production. Tiger Analytics prizes ownership and bottom-up innovation: you’ll be empowered to select your own tools, iterate rapidly, and deliver solutions that directly affect client outcomes.

Why This Role at Tiger Analytics?

This position offers full-stack exposure—one day you might optimize a Spark job, the next you’re designing RESTful APIs for real-time dashboards. You’ll work on high-visibility projects for leading enterprises, enjoy competitive compensation, and follow a clear path toward Tech Lead responsibilities. Up next, let’s look at the Tiger Analytics software engineer interview process so you can target your preparation effectively.

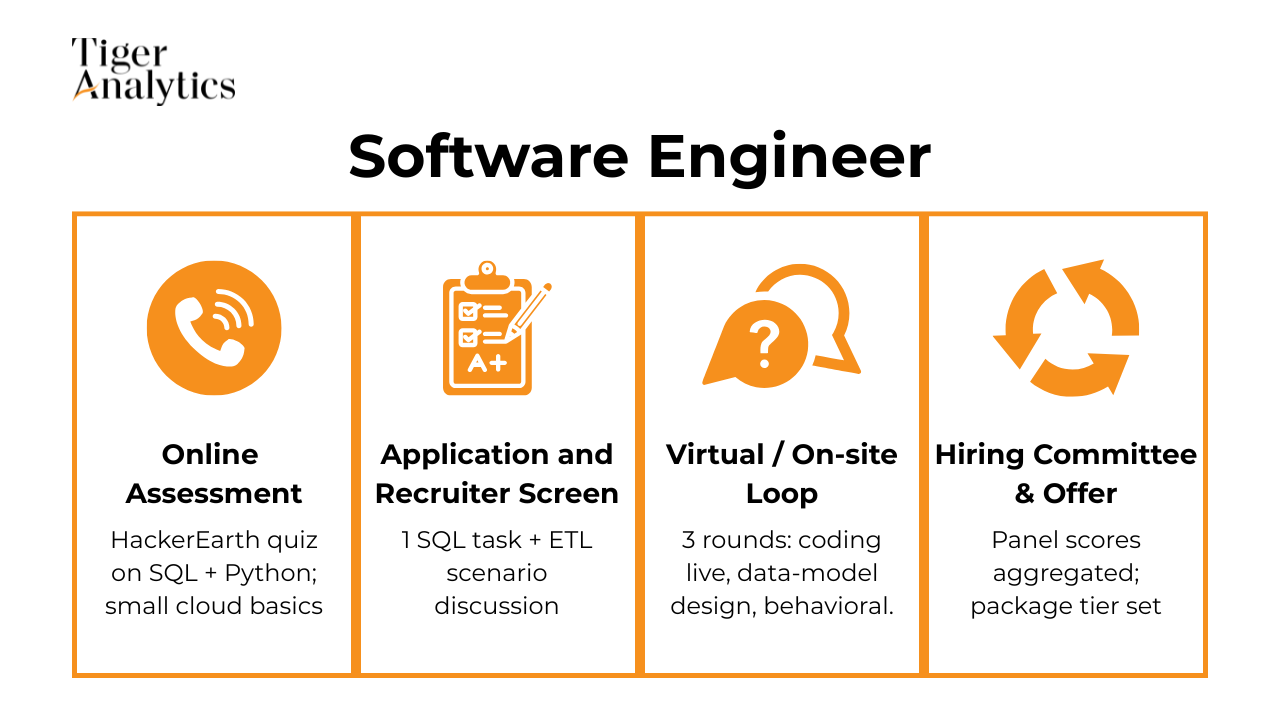

What Is the Interview Process Like for a Software Engineer Role at Tiger Analytics?

The Tiger Analytics hiring process for Software Engineers unfolds in four main stages, each designed to assess a different facet of your skills and fit.

Online Coding Assessment

The process begins with a 60-minute HackerEarth test featuring Tiger Analytics coding questions in Python or Java. You’ll solve algorithmic and data-structure problems under time pressure, demonstrating both correctness and code clarity. This screen ensures you can write clean, efficient code before moving on to live interactions.

Technical Phone Screen

Next, you’ll join a 45-minute pair-programming session, where you’ll tackle coding challenges collaboratively and discuss past projects. Interviewers probe your problem-solving approach, coding style, and ability to explain design decisions—all while simulating the real-time collaboration you’ll experience on the job.

Virtual Onsite / Loop

During the virtual onsite loop, you’ll face 3–4 rounds covering data structures and algorithms, system design, and behavioral fit. These sessions will test your mastery of the Tiger Analytics software engineer interview questions you’ve been practicing, from designing scalable services to articulating trade-offs in architecture and implementation.

Hiring Committee & Offer

Finally, your feedback packet—including coding results, live-coding performance, and cultural alignment—is reviewed by a hiring committee. Within 24–48 hours you’ll learn the outcome and, if successful, discuss compensation and team match.

Behind the Scenes

Interviewers submit detailed feedback within 24 hours, and a calibration meeting ensures consistency across candidates and levels. This rapid cycle reflects Tiger’s bias for action and commitment to keeping momentum in the hiring process.

Differences by Level

Junior engineers focus on core coding and problem solving, whereas seniors face an additional high-level architecture whiteboard exercise and deeper behavioral questions around mentorship and project leadership.

Understanding each stage helps you target the right Tiger Analytics software engineer interview questions and shine at every step.

What Questions Are Asked in a Tiger Analytics Software Engineer Interview?

Before diving into specifics, note that you’ll encounter a mix of coding, design, and behavioral prompts crafted to mirror the problems you’ll solve on the job. These questions test not only your technical proficiency but also your ability to communicate effectively and partner with clients.

Coding / Technical Questions

Among the Tiger Analytics software engineer interview questions, expect 3–4 coding problems drawing on arrays, graph algorithms, and concurrency challenges. Interviewers probe the depth of your data-structure knowledge and your problem-solving speed. The ideal response clarifies edge cases, proposes a brute-force solution, then optimizes for performance. The Tiger Analytics Python coding questions also assess your fluency in scripting and libraries without overshadowing the core algorithms.

Write a function that rotates an n × n matrix 90 degrees clockwise in place.

Tiger Analytics wants SWE candidates who can reason about in-memory data transformations and constant-space optimizations. Correctly handling indices, layer-by-layer swaps, and corner cases (non-square or single-row matrices) shows mastery of array arithmetic and time/space complexity trade-offs. Walk through how you would test the routine against both even- and odd-sized matrices and why an O(1)–space, O(n²)–time approach is preferable to allocating a copy.
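
The layer-by-layer swap described above can be sketched as follows. This is a minimal illustration, not a reference solution; the function name rotate_in_place and the choice to raise ValueError on non-square input are assumptions.

```python
def rotate_in_place(matrix):
    """Rotate a square matrix 90 degrees clockwise without allocating a copy."""
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        raise ValueError("expected an n x n matrix")
    # Walk the concentric layers from the outside in.
    for layer in range(n // 2):
        first, last = layer, n - 1 - layer
        for i in range(first, last):
            offset = i - first
            top = matrix[first][i]                                      # save top
            matrix[first][i] = matrix[last - offset][first]             # left -> top
            matrix[last - offset][first] = matrix[last][last - offset]  # bottom -> left
            matrix[last][last - offset] = matrix[i][last]               # right -> bottom
            matrix[i][last] = top                                       # saved top -> right
    return matrix
```

Each element is moved exactly once, so the work is O(n²) with O(1) extra space. Odd-sized matrices leave the center cell untouched, which is why testing both even and odd n is worthwhile.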

-

This prompt checks your fluency with window functions (e.g., DENSE_RANK), sub-queries, and NULL-safe comparisons. Tiger analysts frequently distill messy HR or finance tables into executive dashboards, so precision around edge-cases like ties is critical. Be prepared to justify index choices and describe how the query would scale on millions of rows.

-

Interviewers are probing for your comfort with bit-vector or arithmetic tricks (XOR sweep, Gauss formula) that optimize both memory and speed. Explaining why a hash-set scan is sub-optimal in space-bound environments demonstrates systems thinking—essential when Tiger deploys code to memory-constrained data pipelines. Outline unit tests for edge inputs (empty, length 1, missing 0 or n).
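
A minimal sketch of the two arithmetic tricks named above, assuming the input holds n distinct integers drawn from the range 0..n with exactly one value absent. That input contract and both function names are assumptions made for illustration.

```python
def missing_number_xor(nums):
    """XOR sweep: every index and value cancels out except the missing one."""
    acc = len(nums)  # start with n, the only index the loop never visits
    for index, value in enumerate(nums):
        acc ^= index ^ value
    return acc


def missing_number_sum(nums):
    """Gauss formula: expected sum of 0..n minus the actual sum."""
    n = len(nums)
    return n * (n + 1) // 2 - sum(nums)
```

Both run in O(n) time with O(1) extra space, whereas a hash-set scan needs O(n) memory. In languages with fixed-width integers the XOR form also avoids overflow in the running sum.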

Implement a sampler that returns one draw from a standard normal distribution.
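
One textbook way to approach this prompt is the Box-Muller transform. The sketch below uses only the standard library; the function name standard_normal and the optional rng parameter are illustrative assumptions, not part of the original prompt.

```python
import math
import random


def standard_normal(rng=random):
    """Return one draw from N(0, 1) using the basic Box-Muller transform."""
    # rng.random() lies in [0, 1); flipping it to (0, 1] keeps log(u1) finite.
    u1 = 1.0 - rng.random()
    u2 = rng.random()
    radius = math.sqrt(-2.0 * math.log(u1))
    return radius * math.cos(2.0 * math.pi * u2)
```

Passing a seeded random.Random instance as rng makes the draws reproducible in tests. The transform also yields a second independent draw (using sin instead of cos), which a production version would typically cache rather than discard.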

Beyond calling numpy.random.randn, Tiger wants to see whether you understand the math behind Box-Muller, Marsaglia, or Ziggurat techniques. Detailing numerical-stability concerns and vectorizing the routine for millions of draws shows readiness to embed statistical primitives inside production ML services. Mention how you would validate the output via QQ plots or Kolmogorov–Smirnov tests.

Find the two students whose SAT scores are closest together and report the score difference.

The task measures your ability to sort, apply window functions, and resolve tie-breaking rules lexicographically. Tiger projects often include ranking items by similarity and surfacing “top-k nearest” results to business users. Discuss indexing on the numeric column and ensuring deterministic output when multiple pairs share the same minimal gap.
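
The sort-then-compare-neighbors idea can be sketched in plain Python. The input shape (a list of (name, score) tuples) and the tie-break rule (prefer the alphabetically smallest pair of names) are assumptions chosen for illustration; the actual prompt may specify a different rule.

```python
def closest_scores(students):
    """students: list of (name, score). Returns (name_a, name_b, diff) or None."""
    if len(students) < 2:
        return None
    # After sorting by score, the closest pair must sit in adjacent positions.
    ranked = sorted(students, key=lambda s: (s[1], s[0]))
    best = None
    for (name_a, score_a), (name_b, score_b) in zip(ranked, ranked[1:]):
        pair = tuple(sorted((name_a, name_b)))
        candidate = (score_b - score_a, pair)
        # Tuple comparison picks the smaller gap first, then the smaller names.
        if best is None or candidate < best:
            best = candidate
    diff, (first, second) = best
    return first, second, diff
```

Comparing (gap, names) tuples keeps the output deterministic when several pairs share the same minimal gap.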

Write a function that returns the maximum number in a list (or None for an empty list).

Although simple, the exercise lets interviewers watch your coding hygiene: input validation, doc-strings, and linear-time guarantees. Show how you handle large lists without Python’s built-in max, emphasizing readability plus early exit conditions if the first element is already math.inf. Small snippets like this reveal your baseline craftsmanship.

Return the indices of two numbers in an array that add up to a target, in O(n) time.

Expect to discuss hash-table lookups versus two-pointer techniques and why constant-factor memory overhead is acceptable. Tiger appreciates engineers who can articulate trade-offs between clarity and performance—especially when arrays exceed cache size. Cover duplicate-value handling and why returning any valid pair satisfies ambiguous requirements.
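
A minimal sketch of the hash-table approach, assuming that returning the first valid pair found is acceptable and that None signals no solution (both are assumptions, as is the function name):

```python
def two_sum(nums, target):
    """Return indices (i, j) with i < j and nums[i] + nums[j] == target, else None."""
    seen = {}  # value -> index of an earlier occurrence
    for j, value in enumerate(nums):
        i = seen.get(target - value)
        if i is not None:
            return i, j
        seen[value] = j
    return None
```

Checking the dictionary before inserting the current value is what makes duplicates such as [3, 3] with target 6 work without pairing an element with itself.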

Generate all prime numbers up to N, or an empty list if none exist.

Demonstrating an efficient Sieve of Eratosthenes (O(n log log n)) showcases algorithmic depth beyond naïve trial division. Relate how you might parallelize the sieve, stream primes, or cache results across API calls—skills valuable for analytics workloads that preprocess numeric ranges repeatedly. Highlight boundary cases such as N < 2.
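
A compact sketch of the Sieve of Eratosthenes, using a bytearray as the flag table to keep memory low. The function name primes_up_to is an illustrative choice.

```python
def primes_up_to(n):
    """Return all primes <= n using the Sieve of Eratosthenes."""
    if n < 2:
        return []
    is_prime = bytearray([1]) * (n + 1)
    is_prime[0] = is_prime[1] = 0
    p = 2
    while p * p <= n:
        if is_prime[p]:
            # Multiples below p*p were already crossed out by smaller primes.
            count = len(range(p * p, n + 1, p))
            is_prime[p * p::p] = bytearray(count)
        p += 1
    return [i for i, flag in enumerate(is_prime) if flag]
```

Starting each crossing-out pass at p*p rather than 2p avoids redundant work and is part of what gives the O(n log log n) bound.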

-

This problem fuses text parsing, hashmap aggregation, and heap-based selection, all common in log-analytics pipelines Tiger delivers. Explain tokenization choices (regex vs. nltk), lowercase normalization, and why a min-heap maintains O(n log N) performance. Discuss memory pressure for very large corpora and potential streaming approximations like Count-Min Sketch.

In a list where >50 % of values are identical, return the median in O(1) time and space.

The interviewer is testing logical reasoning: realizing that if a majority element exists, it must also be the median. Articulating this proof and coding a linear Boyer-Moore majority pass (versus sorting) evidences both theoretical insight and practical optimization—traits Tiger counts on for bespoke data-processing kernels.
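
The reasoning is that a value occupying more than half of the positions must cover the middle index once the list is sorted, so the majority element is the median. A minimal sketch of the Boyer-Moore voting pass follows, assuming the majority guarantee holds (the function does not verify it) and using an illustrative name. It takes one linear pass and constant extra space.

```python
def majority_median(values):
    """Return the majority element, which equals the median when one value
    fills more than half of the list. Assumes such a value exists."""
    candidate, count = None, 0
    for value in values:
        if count == 0:
            candidate, count = value, 1   # adopt a new candidate
        elif value == candidate:
            count += 1                    # a vote for the candidate
        else:
            count -= 1                    # a vote against cancels one vote for
    return candidate
```

If the guarantee might not hold, a second pass counting occurrences of the candidate would be needed to confirm it.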

Determine whether a given string is a palindrome.

You’ll reveal attention to Unicode, punctuation stripping, and case folding, which is crucial when Tiger internationalizes products. Compare slicing (s==s[::-1]) to two-pointer scans for large strings, and mention unit tests on emojis or combining characters. Interviewers also watch for graceful handling of empty or single-character inputs.

-

Efficient two-pointer or binary-search solutions after sorting (O(n²)) highlight your algorithmic sophistication. You should articulate the triangle inequality and performance trade-offs compared with brute-force O(n³) enumeration. Tiger’s analytics often involve combinatorial counting of entities under constraints, making this reasoning directly relevant.
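
A sketch of the sort-plus-two-pointer approach, assuming the task is to count the triplets of side lengths in a list that can form a triangle with positive area. That reading of the prompt and the function name are assumptions.

```python
def count_triangles(sides):
    """Count index triplets whose lengths satisfy the triangle inequality."""
    a = sorted(s for s in sides if s > 0)
    total = 0
    # Fix the longest side a[k]; only a[lo] + a[hi] > a[k] needs checking.
    for k in range(len(a) - 1, 1, -1):
        lo, hi = 0, k - 1
        while lo < hi:
            if a[lo] + a[hi] > a[k]:
                # Every index from lo to hi - 1 also pairs with hi.
                total += hi - lo
                hi -= 1
            else:
                lo += 1
    return total
```

Because the array is sorted, the other two inequalities hold automatically once the longest side is fixed, which is what reduces the O(n³) enumeration to O(n²).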

-

The prompt assesses your grasp of distance metrics, vectorization with NumPy, and algorithmic efficiency for large datasets. Discuss how you’d preprocess sparse one-hot data, speed up neighbor search with KD-trees (in low dimensions) or Ball trees (as dimensionality grows), and log prediction latency. Tiger values engineers who can implement ML algorithms bottom-up when libraries are not an option.
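
Assuming the prompt asks for a k-nearest-neighbors classifier built without ML libraries, a brute-force baseline might look like the sketch below. It uses only the standard library to stay dependency-free; a NumPy version would vectorize the distance computation. The function name and signature are illustrative.

```python
import heapq
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify query by majority vote among its k nearest training points."""
    if not 1 <= k <= len(train_points):
        raise ValueError("k must be between 1 and the number of training points")
    # nsmallest keeps only k candidates in memory while scanning all points.
    nearest = heapq.nsmallest(
        k,
        zip(train_points, train_labels),
        key=lambda pair: math.dist(pair[0], query),  # Euclidean distance
    )
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

Each prediction costs O(n log k) distance comparisons for n training points, which is the baseline that tree-based indexes aim to beat.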

-

Present a dynamic-programming O(n m) solution and, if asked, a suffix-array or rolling-hash approach for large inputs. Explaining memory-saving tricks (keeping only two DP rows) shows you can adapt algorithms to production constraints. Such string-similarity logic underpins many text-matching and deduplication tasks Tiger delivers to clients.
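
As one illustration of the two-row trick, the sketch below assumes the task is to find the longest common substring of two strings. That is an assumption; the same memory-saving idea applies to other O(n m) string DPs such as edit distance. The function name is illustrative.

```python
def longest_common_substring(a, b):
    """Return one longest substring shared by a and b (first found in a)."""
    if len(b) > len(a):
        a, b = b, a  # keep each DP row as short as the shorter string
    prev = [0] * (len(b) + 1)
    best_len, best_end = 0, 0  # best_end is an exclusive index into a
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                # Extend the common suffix ending at the previous characters.
                curr[j] = prev[j - 1] + 1
                if curr[j] > best_len:
                    best_len, best_end = curr[j], i
        prev = curr  # only the previous row is ever needed
    return a[best_end - best_len:best_end]
```

Time stays O(n m), while memory drops from O(n m) to O(min(n, m)).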

System / Product Design Questions

You’ll be asked to architect services such as a scalable feature-store API or a high-traffic log-ingestion pipeline. Be prepared to discuss trade-offs around data consistency, sharding strategy, observability instrumentation, and failure recovery. Outline key components, draw clear diagrams, and articulate why you chose particular technologies or patterns.

How would you design a relational schema that powers a Yelp-style restaurant-review platform?

Tiger Analytics looks for engineers who can translate product requirements (users, restaurants, one-review-per-user-per-restaurant, image storage) into a normalized, performant data model. Interviewers want to hear how you’d separate entities such as users, restaurants, reviews, photos, and perhaps a review_edits history table, plus text-search indexes for fast retrieval. They’ll probe your reasoning around unique constraints (one review per user), foreign-key cascades when eateries are deleted, and how you’d shard or cache popular venues. Mention trade-offs between storing images in the DB vs. object storage and how you’d expose aggregated ratings for feed queries.

-

The question gauges your understanding of data integrity in high-volume OLTP systems. Explaining how FK constraints prevent orphaned rows, enable cost-based optimizers, and document entity relationships shows architectural rigor. Interviewers expect you to weigh cascading deletes (great for tight parent–child lifecycles) against nullable FKs when historical child records must persist after a parent disappears. You should also address potential performance hits and mitigation strategies such as deferred constraint checks or partitioning.
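
The contrast between cascading deletes and nullable foreign keys can be demonstrated with Python's built-in sqlite3 module. The tables below (customers, carts, invoices) are hypothetical and chosen only for illustration: carts share the customer's lifecycle, while invoices must outlive it.

```python
import sqlite3


def fk_demo():
    """Show ON DELETE CASCADE vs. ON DELETE SET NULL on hypothetical tables."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce FKs by default
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE carts (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL
                REFERENCES customers(id) ON DELETE CASCADE
        );
        CREATE TABLE invoices (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER
                REFERENCES customers(id) ON DELETE SET NULL
        );
        INSERT INTO customers VALUES (1, 'Acme');
        INSERT INTO carts VALUES (1, 1);
        INSERT INTO invoices VALUES (1, 1);
    """)
    conn.execute("DELETE FROM customers WHERE id = 1")
    carts_left = conn.execute("SELECT COUNT(*) FROM carts").fetchone()[0]
    invoice_owner = conn.execute(
        "SELECT customer_id FROM invoices WHERE id = 1"
    ).fetchone()[0]
    try:
        # A child row pointing at a non-existent parent should be rejected.
        conn.execute("INSERT INTO carts (id, customer_id) VALUES (2, 999)")
        orphan_rejected = False
    except sqlite3.IntegrityError:
        orphan_rejected = True
    conn.close()
    return carts_left, invoice_owner, orphan_rejected
```

After the parent is deleted, the cart row is gone, the invoice row survives with a NULL customer_id, and the attempt to insert an orphaned cart is rejected.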

-

Tiger’s SWE roles often straddle engineering and analytics, so they test your ability to gather requirements (latency SLAs, GDPR locality, currency support) before committing to a design. Outline an ingestion layer (Kafka → Spark/Flink ETL), a cloud object store for raw data, a dimensional warehouse in Snowflake/BigQuery, and vendor dashboards backed by aggregate tables. Discuss multi-region replication, timezone normalization, SCD-2 for inventory, and how near-real-time metrics flow to the vendor portal. They’ll listen for cost controls (cold vs. hot storage) and monitoring hooks.

-

This scenario tests schema creativity (separate crossings vs. vehicles), timestamp precision, and partitioning for high-ingest IoT data. You’re expected to craft analytic SQL using WHERE date_trunc('day', ts) = current_date, ORDER BY/LIMIT, and model-level GROUP BY with AVG plus window functions. Explaining how you’d handle late-arriving telemetry or duplicate pings shows attention to data quality. Finally, mention indexing on the date column and clustering by vehicle_id to keep queries snappy.

-

Interviewers want to see ER-thinking (orders, order_items, menu_items, payments) and how you’d precompute daily KPIs. Your SQL should use date filters, SUM(price*qty), ranking (ROW_NUMBER or LIMIT 3) and a conditional drink flag inside an aggregate ratio. Explaining indexing on order_date, surrogate keys vs. natural SKUs, and how the schema supports combo meals demonstrates practical judgment. Touch on extensibility for mobile ordering or loyalty programs.

-

Tiger expects SWEs to marry systems engineering with ML. Outline a prefix-tree or n-gram index backed by Redis/ElasticSearch for sub-100 ms suggestions, enriched with personalization signals (watch history, locale). Describe offline pipelines that compute popularity scores and an online ranking service that blends lexical match with collaborative filtering. Discuss A/B metrics (mean reciprocal rank, latency) and fallbacks for cold-start titles.
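
The prefix-tree portion of such a design can be sketched in memory as below. This is a toy illustration, assuming each title carries a single precomputed popularity score; the class names, the scoring input, and the sample titles in the usage note are all hypothetical. A real service would add personalization and an external store.

```python
import heapq


class TrieNode:
    __slots__ = ("children", "score")

    def __init__(self):
        self.children = {}
        self.score = None  # popularity score if a title ends at this node


class Autocomplete:
    def __init__(self):
        self.root = TrieNode()

    def add(self, title, score):
        node = self.root
        for ch in title.lower():
            node = node.children.setdefault(ch, TrieNode())
        node.score = score

    def suggest(self, prefix, k=3):
        """Return up to k titles starting with prefix, most popular first."""
        prefix = prefix.lower()
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return []
        # Depth-first walk of the subtree below the prefix node.
        matches, stack = [], [(node, prefix)]
        while stack:
            current, text = stack.pop()
            if current.score is not None:
                matches.append((current.score, text))
            for ch, child in current.children.items():
                stack.append((child, text + ch))
        return [text for _, text in heapq.nlargest(k, matches)]
```

For example, after adding "Stranger Things" (score 90), "Star Trek" (70), and "Stargate" (50), calling suggest("st", 2) returns the two most popular matches in lowercase form. Walking the whole subtree per query is fine for a sketch; caching the top-k at each node is a common way to hit tight latency targets.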

-

You’ll be evaluated on streaming ingestion (POS → Kafka), a time-series store or materialized views in ClickHouse/Druid, and a denormalized branch_sales_hourly table to power the dashboard. Explain how you’d handle high-cardinality branch IDs, late sales corrections, and idempotent upserts. Mention WebSocket or GraphQL push mechanisms for live updates and how aggregation windows (5 min, 1 hr) keep queries cheap.

-

This quirky prompt probes set-based SQL reasoning and your ability to encode finite combinations. Describe cards(suit, rank), hands(player_id, card_id), plus helper views for hand strength with ranked values and flush detection. Outline a scoring UDF or CTE that assigns numeric strength, then choose the max per game. Discuss transaction boundaries to avoid duplicate card deals.

Propose a database design for a Tinder-like swiping app and highlight scaling optimizations.

You’ll need user, profile, swipe, and match tables, plus denormalized caches (Bloom filters, Redis sets) to prevent re-surfacing seen profiles. Talk about geo-hash partitioning, fan-out write vs. read strategies, and how you’d store ephemeral media. Mention GDPR deletions, rate-limiting rows per user, and candidate-generation pipelines that mix heuristics with embeddings.

Architect a notification back-end and data model for a Reddit-style discussion platform.

Highlight an events queue (Kafka/PubSub) emitting comment/upvote actions, a worker that materializes per-user inbox rows, and a notification preferences table. Explain why you’d batch or debounce events, ensure idempotency with composite keys, and index on (user_id, is_read) for fast badge counts. Discuss mobile push vs. in-site alerts, TTL policies, and backfilling historical notifications.

-

Your answer should separate static airports from routes and include hub flags plus spatial indexes for proximity search. Tiger values mention of ISO codes, multicolumn primary keys (origin, destination), and surrogate route IDs for schedule joins. Describe maintaining SCD-2 history on hub status and partitioning flight tables by fiscal year for maintenance.

-

This end-to-end system question probes your ability to coordinate batch OCR (Tesseract/AWS Textract), asynchronous processing queues, and data contracts between storage layers. Detail how raw PDFs land in S3 → Lambda triggers OCR → text lands in a staging lake → Airflow DAG tokenizes and writes to Snowflake (mart) and ElasticSearch (search API). Cover PII redaction, schema evolution, and monitoring precision/recall of keyword extraction.

Behavioral & Culture-Fit Questions

Tiger Analytics values client obsession and rapid iteration. Prepare STAR-style stories showcasing how you’ve communicated complex technical ideas to non-technical stakeholders, owned a project end-to-end under tight timelines, and iterated on solutions based on feedback. Emphasize outcomes and learnings that reflect their bottom-up innovation ethos.

-

Tiger Analytics wants to hear how a SWE balances coding excellence with messy real-world constraints. Detailing obstacles—say, late-arriving data, scaling pains, or shifting specs—and the trade-offs you made shows mature problem-solving. Be ready to quantify impact, cite your debugging toolkit, and highlight lessons that changed your engineering practice. Interviewers will probe for ownership and your ability to rally cross-functional teams when blockers surface.

-

Consulting-focused firms prize engineers who translate complexity into clarity. Explain choices such as building self-serve dashboards, crafting concise APIs, adding data dictionaries, or embedding visual cues in UI components. Discuss how you gathered user feedback to iterate on usability and how this drove adoption. Expect follow-ups on balancing security, governance, and performance while keeping the experience intuitive.

-

This question tests self-awareness and coachability, two attributes Tiger emphasizes in its performance culture. Interviewers expect honest reflection paired with concrete improvement plans (e.g., pairing sessions, architecture reviews). Showing measurable progress—say, latency reductions after learning advanced profiling—demonstrates growth mindset. Avoid generic traits; tie each point to real deliverables and team outcomes.

-

Tiger’s consultants often juggle clients, PMs, and offshore teams, so clear communication is critical. They look for a structured approach—early signal detection, root-cause analysis, and action plans (status cadences, visual prototypes). Emphasize empathy, negotiation tactics, and how you documented decisions to prevent scope creep. Highlight the business impact of restoring trust, not just the technical fix.

-

The firm screens for genuine motivation beyond “interesting projects.” Connect Tiger’s data-first consulting model and domain diversity to your passion for solving ambiguous problems at scale. Reference specific case studies or thought-leadership articles that resonated with you. Finally, outline how the company’s mentorship structure or global delivery model aligns with your ambition to become a tech lead or solution architect.

With multiple sprint commitments colliding, how do you triage tasks and keep yourself—and your team—organized so deadlines are met without sacrificing code quality?

Tiger’s delivery timelines can be tight, so they value disciplined planning. Discuss techniques such as story-point re-estimation, risk matrices, and explicit “definition of done” criteria. Mention tooling (Jira filters, automated tests, CI gates) that keep work visible and quality measurable. They’ll probe how you escalate when trade-offs become unavoidable and how you communicate impacts to clients.

Describe a situation where you championed automated testing or DevOps practices that initially faced resistance. How did you persuade the team, implement the change, and measure its success?

This question tests your ability to drive engineering excellence in consulting engagements where process maturity varies. Interviewers want evidence of influence skills, ROI storytelling, and the technical chops to roll out pipelines or test frameworks that stick. Share metrics like deployment frequency or defect rate improvements to prove the initiative’s value.

Tell us about a time you inherited a legacy codebase with significant technical debt. What systematic approach did you take to refactor safely while still delivering new features to the client?

Tiger Analytics’ projects often involve modernizing existing systems. They’ll gauge your balance between refactor and roadmap, use of metrics (cyclomatic complexity, coverage), and stakeholder negotiation for phased debt pay-down. Highlight tooling (static analysis, feature flags) and how you measured risk reduction or performance gains after refactoring.

How to Prepare for a Software Engineer Role at Tiger Analytics

Successful candidates blend rigorous technical practice with deep company and client understanding. Here’s how to structure your prep:

Study Role & Culture

Map past projects to Tiger Analytics’s consulting model—highlighting how you drove client impact through rapid prototyping and clear communication.

Practice Core Question Types

Allocate roughly 50 % of your time to coding problems, 30 % to system-design scenarios, and 20 % to behavioral rehearsals. Splitting your practice across the Tiger Analytics software engineer interview questions this way will keep you focused on the real hurdles ahead.

Think Out Loud

Verbalize your assumptions, ask clarifying questions when requirements are vague, and narrate trade-off reasoning to mirror on-the-job collaboration.

Brute Force → Optimize

Demonstrate a baseline solution first—then iterate to improve memory usage, latency, or scalability. Walk interviewers through the evolution of your approach.

Mock Interviews & Feedback

Pair with peers or former Tiger Analytics engineers to simulate real interview loops, record sessions, and refine both technical and communication skills. Utilize Interview Query’s mock interview services.

Conclusion

Practicing authentic Tiger Analytics software engineer interview questions while aligning your examples with the firm’s client-first values will set you apart. For a deep dive into Tiger Analytics’s culture and broader role guides, check out the company overview and explore our mock-interview services.

Ready to elevate your prep? Explore our learning paths, or see how candidates like Jeffrey Li cracked the code.