Scale AI Business Analyst Interview Guide (2026): Process, Questions & Cases

Introduction

The business analyst role is expected to continue growing in the next ten years, with over 100,000 new openings and a job market expansion of 9.7%. Scale AI contributes to this trend, as it needs analysts who can help meet the growing need for reliable AI performance and evaluation. Scale AI business analysts contribute to the company by translating complex data into clear business decisions across research labs and enterprises.

Also worth noting is how Scale AI’s growing reputation in model development and the generative AI ecosystem mirrors how it approaches analytics in practice. Instead of limiting the role to reporting or static dashboards, the Scale AI business analyst interview covers your ability to evaluate data quality, model performance, and customer outcomes while also working across product, operations, and strategy.

This interview guide is designed to help you meet these holistic expectations. We break down how the business analyst interview process works, the types of interview questions candidates consistently face, and how your analytical thinking, communication, and decision-making are assessed. We will also share practical preparation strategies designed specifically for case-heavy, open-ended interviews common at Scale AI.

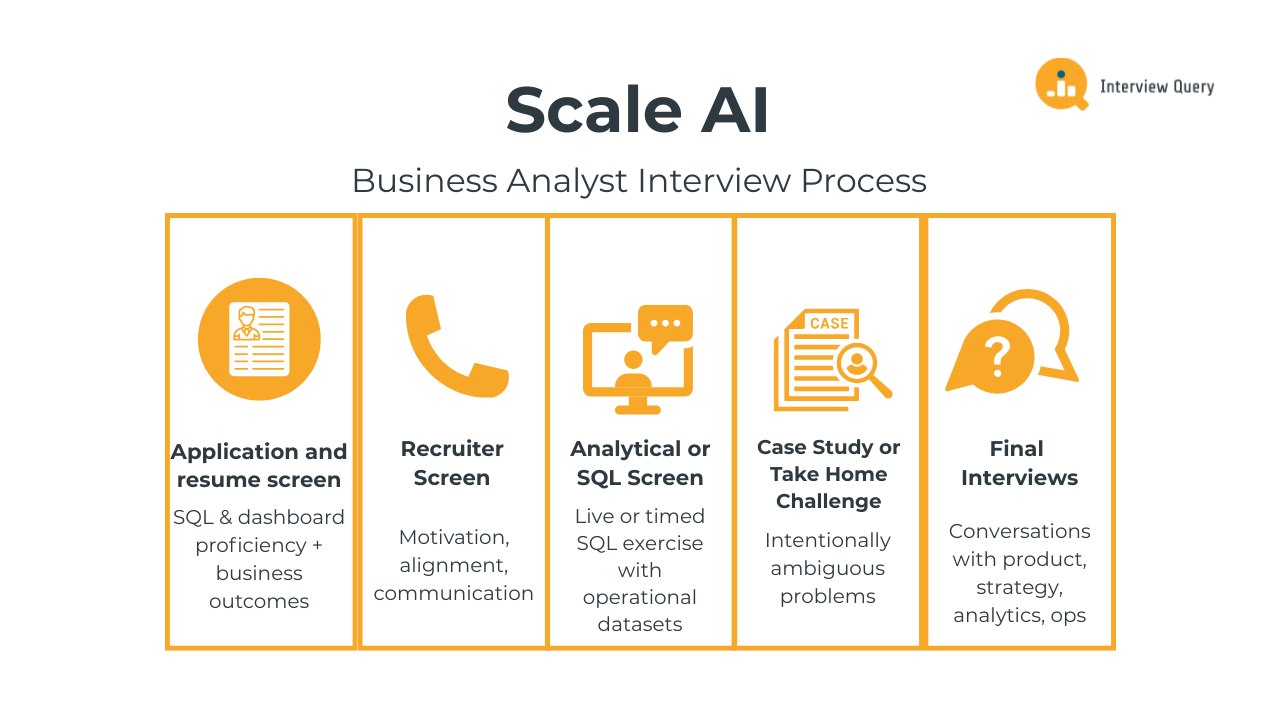

Scale AI Business Analyst Interview Process

Scale AI’s business analyst interview process is grounded in real, day-to-day work, unlike traditional analytics interviews that test isolated technical skills. At each stage, you’re expected to navigate incomplete data, make judgment calls without perfect information, and translate analysis into decisions that directly affect product, operations, and customers. While the exact sequence can vary by team, most candidates move through a multi-stage funnel that consistently tests analytical depth, decision-making under ambiguity, and communication clarity.

Application and resume screen

The first filter is a resume review conducted by recruiters and hiring managers. At this stage, Scale AI is not looking for a laundry list of tools or coursework, but scanning for evidence that your work materially changed outcomes. SQL proficiency is assumed, but it is rarely the differentiator. What immediately stands out internally is whether you’ve operated close to the decision surface: pricing, quality thresholds, capacity planning, workflow design, or customer-facing metrics.

Instead of listing dashboards, describe how insights led to operational improvements, pricing changes, workflow redesigns, or strategic decisions. Experience in fast-moving environments like startups, operations-heavy teams, or platform businesses is also a positive signal, as it aligns you with the pace and ambiguity that define Scale’s work.

Tip: Write bullets that make it obvious what decision existed before you, and what decision existed after you. Use language like “recommended,” “influenced,” or “changed direction,” and quantify outcomes where possible (cost savings, throughput gains, latency reduction, etc.).

Recruiter screen

The recruiter screen is typically a 25–30 minute conversation focused on motivation, alignment, and communication. Expect questions about your background, what draws you to Scale AI, and how you approach open-ended analytical problems.

This conversation also tests whether you understand Scale’s position in the AI ecosystem. Recruiters want to see that you grasp how data labeling, model evaluation, and enterprise AI deployments differ from traditional SaaS or analytics roles. You may also be asked about your preferred working style, experience collaborating with product or operations teams, and how you handle shifting priorities.

Tip: When answering “Why Scale AI?”, anchor your response in problems by talking about enabling reliable AI in high-stakes settings, or about the challenge of operationalizing ML at scale. Book a coaching session with one of Interview Query’s AI industry experts, who can provide insider tips and help you make a positive impression at this round.

Analytical or SQL screen

The analytical screen usually involves a live or timed SQL exercise grounded in realistic business scenarios. These problems often resemble operational datasets: task throughput, customer usage, quality metrics, or workflow performance.

Interviewers care less about perfect syntax and more about how you think. You’ll be evaluated on how you clarify the question, choose the right metrics, sanity-check results, and explain what the numbers actually mean for the business. Expect follow-ups like: “Is this metric actually actionable?” or “What would you do if leadership pushed back on this conclusion?”

Tip: Explore Interview Query’s SQL learning path and practice narrating your thinking as if you were talking to a product or ops partner, not another analyst. For each question, explicitly state why you chose a metric and what decision it would inform, then summarize the insight in a sentence or two.

Case study or take-home challenge

Many candidates encounter a case study or take-home assignment designed to simulate real work at Scale AI. These challenges are intentionally under-specified; you may receive a rough dataset, a loosely defined goal, or conflicting signals that force trade-offs.

Evaluation centers on how you structure the problem, prioritize analyses, and communicate conclusions—not whether you find a single “correct” answer. How you present your work also matters as much as the analysis itself. Clear charts, concise writing, and a strong executive summary go a long way, because Scale analysts frequently influence decisions through docs instead of meetings.

Tip: Start with a clear recommendation even if it’s provisional, and explicitly state what you would not analyze given time constraints. Using our Take-Home Review, you can leverage AI-powered feedback to help you structure your analysis like an internal memo and support your recommendation with evidence.

Final interviews

Final-round interviews typically include multiple conversations with leaders from product, strategy, analytics, or operations. These sessions blend behavioral questions, deep dives into past projects, and live case discussions.

Interviewers are assessing ownership and judgment. They want to see whether you can push back thoughtfully, adapt when new information emerges, and explain complex analysis in a way that drives alignment. Expect probing questions about trade-offs you’ve made, mistakes you’ve learned from, and times you influenced decisions without formal authority.

Tip: Practice explaining not just how you would evaluate success, but also how you’d work around trade-offs and second-order effects. When deciding which stakeholders to involve, think like the owner of AI infrastructure, not an analyst simply delivering a report.

Overall, Scale AI’s interview loop rewards deliberate practice. Schedule mock interviews with Interview Query to help build the analytical fluency and communication clarity needed to perform consistently, from SQL challenges to case drills and take-home simulations.

Scale AI Business Analyst Interview Questions

Scale AI’s interview questions aim to uncover how you think when the answers aren’t obvious. Instead of leaning on formulaic BI prompts or textbook questions, the interview stages focus on real operational problems, open-ended case studies, and behavioral scenarios reflective of the company’s culture and operations. When encountering a mix of analytics and SQL questions, case studies, and behavioral prompts, remember that you’re being evaluated not just on technical correctness but also on structure, judgment, and impact.

Watch Next: Business Analyst Interview Questions ANSWERED

Before diving into sample questions, check out this video on business analyst interview questions and how to answer them.

In this video, data scientist and Interview Query co-founder Jay Feng helps you learn practical analytics frameworks for structuring responses, common pitfalls to avoid, and real examples of strong answers you can adapt for your own prep. The insights, like breaking problems into clear components and linking your analysis back to business impact, are directly applicable to Scale AI’s interview style and will help you think more confidently through representative questions, like those outlined below.

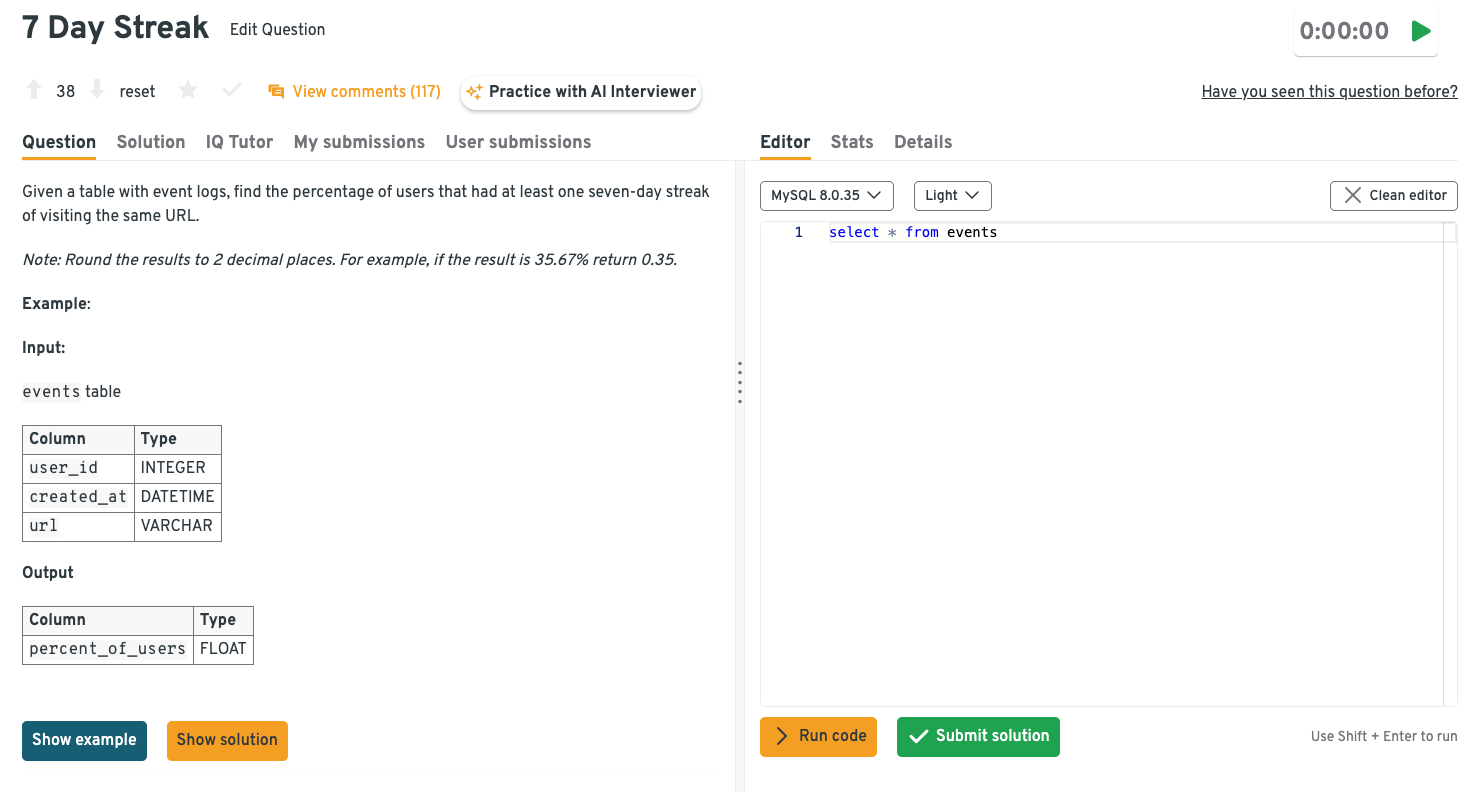

Analytics and SQL interview questions

Analytics and SQL interviews at Scale AI are grounded in real operational and customer-facing challenges. SQL is treated as a means to an end: generating insight that informs decisions around efficiency, quality, and scale. Be prepared to define success metrics, reason through edge cases, and interpret results in the context of teams building, training, and evaluating AI models.

-

Here, interviewers are testing time-series analysis, window functions, and behavioral reasoning. The solution involves grouping user activity by workflow and date, then identifying sequences of seven consecutive days using ranking or lag logic. Once qualifying users are flagged, calculating the percentage requires dividing by the total active user population and rounding per the specification.

Tip: Call out how you’d align “day” definitions to the business reality, e.g., normalizing to a single timezone or model-run cadence. Then, explicitly mention filtering out internal QA or stress-test traffic, which is common at Scale and can silently distort streak metrics.
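The streak logic described above can be sketched with a gaps-and-islands query. This is only an illustration under stated assumptions: the `activity` table, its columns, the sample rows, and the two-decimal rounding are all hypothetical, and the query relies on window functions, which SQLite supports from version 3.25.

```python
import sqlite3

# Hypothetical schema and data: table and column names are assumptions.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE activity (user_id INTEGER, workflow TEXT, activity_date TEXT)")

rows = []
# User 1: seven consecutive days on one workflow (qualifies).
rows += [(1, "labeling", f"2026-01-{d:02d}") for d in range(1, 8)]
# User 2: seven active days with a gap on Jan 4 (does not qualify).
rows += [(2, "labeling", f"2026-01-{d:02d}") for d in (1, 2, 3, 5, 6, 7, 8)]
# User 3: a single active day.
rows += [(3, "review", "2026-01-03")]
# User 4: seven events on the same day must not count as a streak.
rows += [(4, "review", "2026-01-01")] * 7
conn.executemany("INSERT INTO activity VALUES (?, ?, ?)", rows)

STREAK_SQL = """
WITH days AS (       -- one row per user, workflow, and calendar day
    SELECT DISTINCT user_id, workflow, activity_date FROM activity
),
islands AS (         -- consecutive days share the same (day number - row number)
    SELECT user_id, workflow,
           julianday(activity_date)
             - ROW_NUMBER() OVER (PARTITION BY user_id, workflow
                                  ORDER BY activity_date) AS grp
    FROM days
),
streakers AS (       -- users with at least one run of 7+ consecutive days
    SELECT DISTINCT user_id
    FROM islands
    GROUP BY user_id, workflow, grp
    HAVING COUNT(*) >= 7
)
SELECT ROUND(100.0 * (SELECT COUNT(*) FROM streakers)
             / (SELECT COUNT(DISTINCT user_id) FROM activity), 2)
"""
streak_pct = conn.execute(STREAK_SQL).fetchone()[0]
print(streak_pct)
```

In an interview, the deduplication step (`days`) and the choice of denominator are worth saying out loud, since both change the answer.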

Practice questions like this hands-on by heading to the Interview Query dashboard. Solve SQL and analytics problems in a built-in editor, then compare your approach with step-by-step solutions to sharpen both correctness and structure.

Which Scale AI projects deliver the highest budget per active contributor?

This prompt focuses on SQL aggregation, ratio-based metrics, and resource efficiency analysis. The approach combines project budgets with employee assignments, filtering out inactive projects before computing a budget-to-employee ratio. Ranking those results surfaces which initiatives are most capital-intensive per contributor, a useful signal for operational planning.

Tip: Frame the metric as a decision tool by explaining when leadership should lean into a high ratio (early-stage, high-risk AI work) versus when it signals poor capacity allocation. Note what additional context you’d request before acting.
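A minimal sketch of the budget-per-contributor ranking, assuming hypothetical `projects` and `assignments` tables (the real prompt's schema may differ). It filters out inactive projects, counts distinct contributors so duplicate assignment rows do not deflate the ratio, and ranks the result.

```python
import sqlite3

# Hypothetical schema and sample data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE projects (id INTEGER PRIMARY KEY, name TEXT, budget REAL, is_active INTEGER);
CREATE TABLE assignments (project_id INTEGER, employee_id INTEGER);
INSERT INTO projects VALUES
    (1, 'eval-suite', 120000, 1),
    (2, 'rlhf-pilot',  90000, 1),
    (3, 'legacy-ocr', 500000, 0),
    (4, 'unstaffed',   40000, 1);
INSERT INTO assignments VALUES (1, 10), (1, 11), (1, 12), (2, 10), (2, 10), (3, 13);
""")

RATIO_SQL = """
SELECT p.name,
       p.budget / COUNT(DISTINCT a.employee_id) AS budget_per_contributor
FROM projects AS p
JOIN assignments AS a ON a.project_id = p.id   -- inner join drops unstaffed projects
WHERE p.is_active = 1                          -- drop inactive projects
GROUP BY p.id, p.name, p.budget
ORDER BY budget_per_contributor DESC
"""
ranking = conn.execute(RATIO_SQL).fetchall()
for name, ratio in ranking:
    print(name, ratio)
```

Whether unstaffed projects should be dropped or reported separately is a design choice worth raising with the interviewer; the inner join here silently excludes them.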

How would you measure data labeling efficiency across teams?

This question evaluates metric design, operational analytics, and your ability to translate raw activity data into performance insights. Define efficiency in context, such as labels completed per hour or per worker, while normalizing for task complexity and quality thresholds. From there, you would segment by team, time period, and task type to identify structural differences rather than one-off fluctuations.

Tip: Explain how you’d adjust for task difficulty and quality acceptance rates, and how you’d handle edge cases like onboarding ramp-up periods or mixed task queues. This way, teams aren’t incentivized to trade accuracy for speed.
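One way to make the normalization concrete is to count only accepted labels and weight them by task complexity. The sketch below uses invented records and assumed complexity weights; it is not a metric Scale AI is known to use.

```python
from collections import defaultdict

# Hypothetical records: (team, task_type, labels_submitted, labels_accepted, hours)
records = [
    ("team_a", "bbox", 1000, 950, 10.0),
    ("team_a", "segmentation", 200, 180, 10.0),
    ("team_b", "bbox", 1200, 900, 10.0),
    ("team_b", "segmentation", 150, 150, 10.0),
]
# Assumed complexity weights, e.g. relative expected effort per label.
COMPLEXITY = {"bbox": 1.0, "segmentation": 5.0}

def raw_efficiency(rows):
    """Submitted labels per hour for each team, ignoring quality and complexity."""
    labels, hours = defaultdict(float), defaultdict(float)
    for team, _task_type, submitted, _accepted, hrs in rows:
        labels[team] += submitted
        hours[team] += hrs
    return {team: labels[team] / hours[team] for team in labels}

def adjusted_efficiency(rows, weights):
    """Accepted, complexity-weighted labels per hour for each team."""
    weighted, hours = defaultdict(float), defaultdict(float)
    for team, task_type, _submitted, accepted, hrs in rows:
        weighted[team] += accepted * weights[task_type]
        hours[team] += hrs
    return {team: weighted[team] / hours[team] for team in weighted}

raw = raw_efficiency(records)
efficiency = adjusted_efficiency(records, COMPLEXITY)
print(raw, efficiency)
```

With this sample data the raw metric favors `team_b`, while the adjusted metric favors `team_a`, which is the kind of reversal the normalization is meant to expose.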

How would you analyze a sudden drop in labeling throughput?

This question probes diagnostic thinking, data slicing, and comfort with ambiguous operational issues. A thoughtful response begins by validating the drop and pinpointing when it started, then breaking throughput down by team, task type, geography, or tooling changes. From there, you’d correlate trends with known events like model launches, policy shifts, or workforce changes to isolate the most likely root cause.

Tip: Describe starting with a fast, hypothesis-driven slice (by task type or tool change) to localize the issue within minutes, since Scale decisions often need directional answers before a fully robust analysis is possible.
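The fast slicing step can be sketched as a before/after comparison along one dimension at a time. The rows, dimension names, and column layout below are hypothetical.

```python
from collections import defaultdict

# Hypothetical task counts: (period, task_type, tool_version, tasks_completed)
rows = [
    ("before", "bbox", "v1", 500), ("before", "bbox", "v2", 500),
    ("before", "text", "v1", 400), ("before", "text", "v2", 400),
    ("after",  "bbox", "v1", 490), ("after",  "bbox", "v2", 250),
    ("after",  "text", "v1", 410), ("after",  "text", "v2", 200),
]
DIMENSIONS = {"task_type": 1, "tool_version": 2}  # column index of each dimension

def change_by_slice(data, dim_index):
    """Change in completed tasks (after minus before) per value of one dimension."""
    delta = defaultdict(int)
    for row in data:
        sign = 1 if row[0] == "after" else -1
        delta[row[dim_index]] += sign * row[3]
    return dict(delta)

deltas = {name: change_by_slice(rows, idx) for name, idx in DIMENSIONS.items()}
for name, delta in deltas.items():
    print(name, delta)
```

Here both task types decline, but only one tool version does, so the tool change is the more promising hypothesis to investigate first.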

Design a dashboard for monitoring customer onboarding performance.

Guided by metric prioritization and stakeholder empathy, a strong approach defines the onboarding funnel end to end and tracks conversion, time-to-value, and drop-off points at each stage. The final step is tailoring views for different audiences. Executives may need high-level trends, while ops teams benefit from real-time alerts and segmented drill-downs.

Tip: Show Scale awareness by noting how you’d instrument early signals of long-term success, e.g., first successful model run or first labeled batch, rather than relying only on surface-level signup completion metrics.

Tip: Anchor the dashboard around operational “first value” milestones like the first production-ready labeled batch. This is important because at Scale, onboarding success is defined by model readiness instead of just account activation.
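As a rough sketch of the funnel behind such a dashboard, the snippet below computes step-to-step conversion and finds the weakest step. The stage names and counts are invented for illustration.

```python
# Hypothetical onboarding funnel: (stage, customers reaching that stage)
funnel = [
    ("signed_contract", 200),
    ("data_connected", 160),
    ("first_labeled_batch", 120),
    ("first_model_run", 60),
]

def stage_conversion(stages):
    """Conversion rate between each pair of adjacent stages."""
    return [
        (prev_name, name, count / prev_count)
        for (prev_name, prev_count), (name, count) in zip(stages, stages[1:])
    ]

steps = stage_conversion(funnel)
worst = min(steps, key=lambda step: step[2])  # step with the largest drop-off
print(steps)
print(worst)
```

The same table could feed both audiences: executives see the overall trend, while ops teams drill into the weakest step by segment.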

Case study and scenario-based interview questions

Case interviews at Scale AI are deliberately under-defined and closely resemble executive-style problem solving. You’re often given partial data, competing priorities, or unclear goals, then asked to chart a path forward. There is rarely a single correct answer. Evaluation focuses on clarity of thought, prioritization, and communication.

-

Start your approach by benchmarking the new geography against mature regions on throughput, quality, and turnaround time, then drilling into differences by task type, workforce experience, tooling, and local constraints. Pairing quantitative findings with launch timelines, training changes, or policy differences helps isolate whether the issue is ramp-related, structural, or execution-driven.

Tip: Explicitly call out which metrics you’d trust earliest, such as task pickup latency and first-pass quality. Contrast this with metrics you’d treat cautiously (e.g., throughput per worker) to proactively validate data reliability in a new market.

A major customer reports lower-than-expected model performance. How do you investigate?

Here, interviewers are assessing analytical debugging, cross-functional thinking, and customer-facing judgment. The investigation should trace the full data pipeline, i.e., from task specifications and labeling quality to data distribution shifts and evaluation metrics, while comparing current outputs to historical baselines. Collaborating with product, ML, and ops teams ensures you distinguish data issues from modeling or expectation-setting gaps.

Tip: Walk through a concrete triage path: compare recent labeled data against the model’s training distribution, audit quality acceptance rates by labeler cohort, and check whether task instructions or evaluation metrics changed.

-

This prompt evaluates metric design, platform analytics, and systems-level thinking. A thoughtful response defines health across acquisition, engagement, productivity, and retention, using signals like task acceptance rates, session frequency, and repeat contribution. Tracking trends over time and segmenting by cohort allows you to detect early warning signs before they surface in customer-facing outcomes.

Tip: Anchor your framework around leading indicators (task acceptance rate, time-to-first-task, repeat contribution within 7 days) and explicitly state which metric movements would trigger intervention versus monitoring.

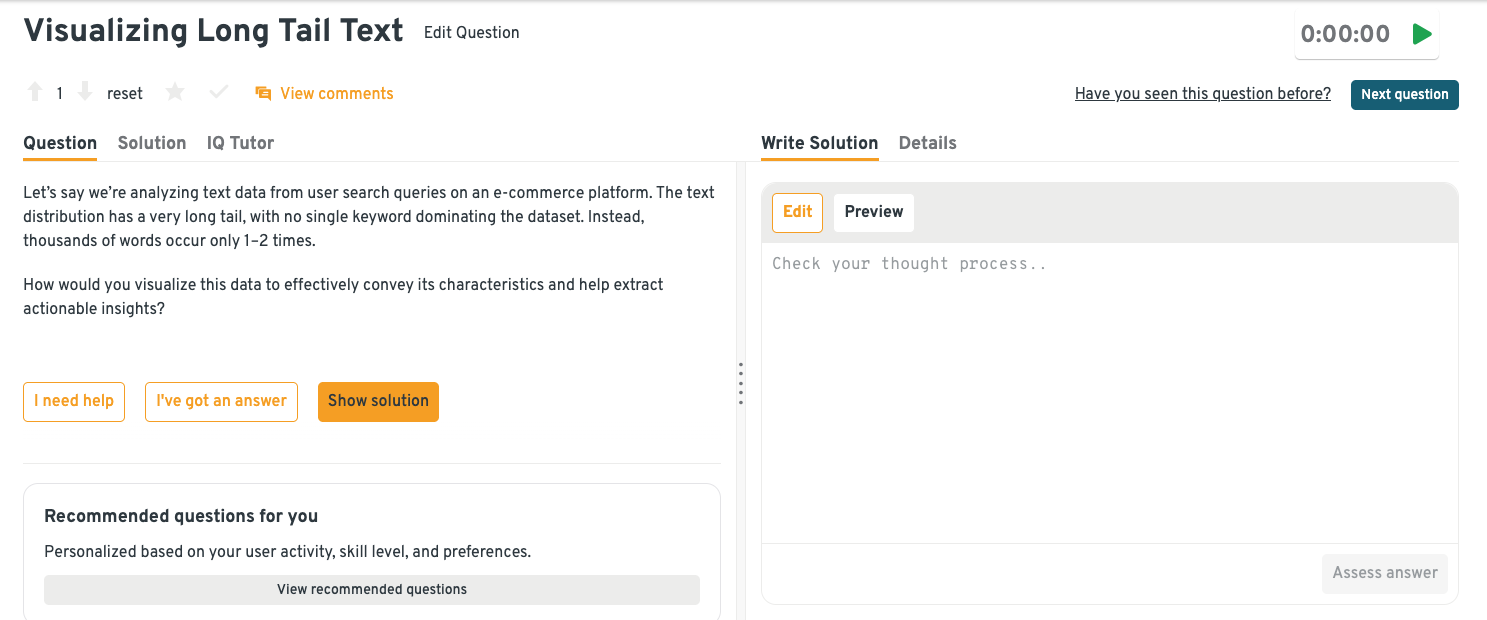

-

This question targets exploratory data analysis skills and comfort working with unstructured data. An effective approach combines clustering or topic modeling with frequency and severity analysis to surface recurring edge cases that don’t appear in top-line metrics. Translating those findings into clear themes helps prioritize workflow improvements, tooling changes, or guideline updates.

Tip: Describe how you’d rank themes by both frequency and operational cost, such as rework hours or reviewer escalations. Explain that by doing so, leadership can prioritize fixes that meaningfully move quality or throughput.
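Ranking by frequency and operational cost together can be as simple as multiplying the two. The theme names, counts, and rework hours below are hypothetical, and the clustering step that would produce the themes is out of scope for this sketch.

```python
# Hypothetical themes: (theme, occurrences, average rework hours per occurrence)
themes = [
    ("ambiguous_guidelines", 120, 0.5),
    ("occluded_objects", 40, 2.0),
    ("tool_timeout", 300, 0.1),
]

def total_cost(theme):
    """Total rework hours attributable to a theme."""
    _name, occurrences, hours_each = theme
    return occurrences * hours_each

by_frequency = [t[0] for t in sorted(themes, key=lambda t: t[1], reverse=True)]
by_cost = [t[0] for t in sorted(themes, key=total_cost, reverse=True)]
print(by_frequency)
print(by_cost)
```

In this sample the most frequent theme is the cheapest in total rework hours, which is why frequency alone can point leadership at the wrong fix.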

If you want guidance while working through case-based questions like this, try using IQ Tutor in the Interview Query dashboard to get AI-powered hints, logic checks, and feedback as you explore different analytical approaches.

Leadership wants to optimize cost, but quality metrics are declining. What do you recommend?

The focus here is on tradeoff analysis, stakeholder communication, and decision-making under constraints. Rather than treating spend as uniform, frame the problem by identifying where cost reductions are impacting quality, such as task complexity, reviewer coverage, or worker experience. From there, you’d propose targeted optimizations, like reallocating budget to high-impact tasks or introducing quality tiers, to protect outcomes while improving efficiency.

Tip: Highlight how you’d quantify the downstream cost of quality regressions, like rework or customer churn, to reframe the discussion beyond short-term savings.
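A back-of-the-envelope version of that reframing: net savings equal direct savings minus the added rework cost and the expected revenue lost to churn. Every number and parameter name below is an assumption chosen for illustration.

```python
def net_savings(direct_savings, extra_rework_tasks, cost_per_rework,
                churn_prob_increase, revenue_at_risk):
    """Direct savings minus added rework cost and expected churn loss."""
    rework_cost = extra_rework_tasks * cost_per_rework
    expected_churn_loss = churn_prob_increase * revenue_at_risk
    return direct_savings - rework_cost - expected_churn_loss

# Hypothetical scenario: $50K saved by cutting reviewer coverage, but
# 4,000 extra rework tasks at $5 each and a 2-point rise in churn
# probability on $2M of annual revenue.
result = net_savings(
    direct_savings=50_000,
    extra_rework_tasks=4_000,
    cost_per_rework=5.0,
    churn_prob_increase=0.02,
    revenue_at_risk=2_000_000,
)
print(result)
```

Under these assumptions the cut loses money on net, which gives leadership a concrete reason to target savings elsewhere.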

Want to practice tackling real-world problems like these? Check out Interview Query’s Challenges, where you can work through product and business case studies designed to mirror the complexity and ambiguity of actual business analyst interviews.

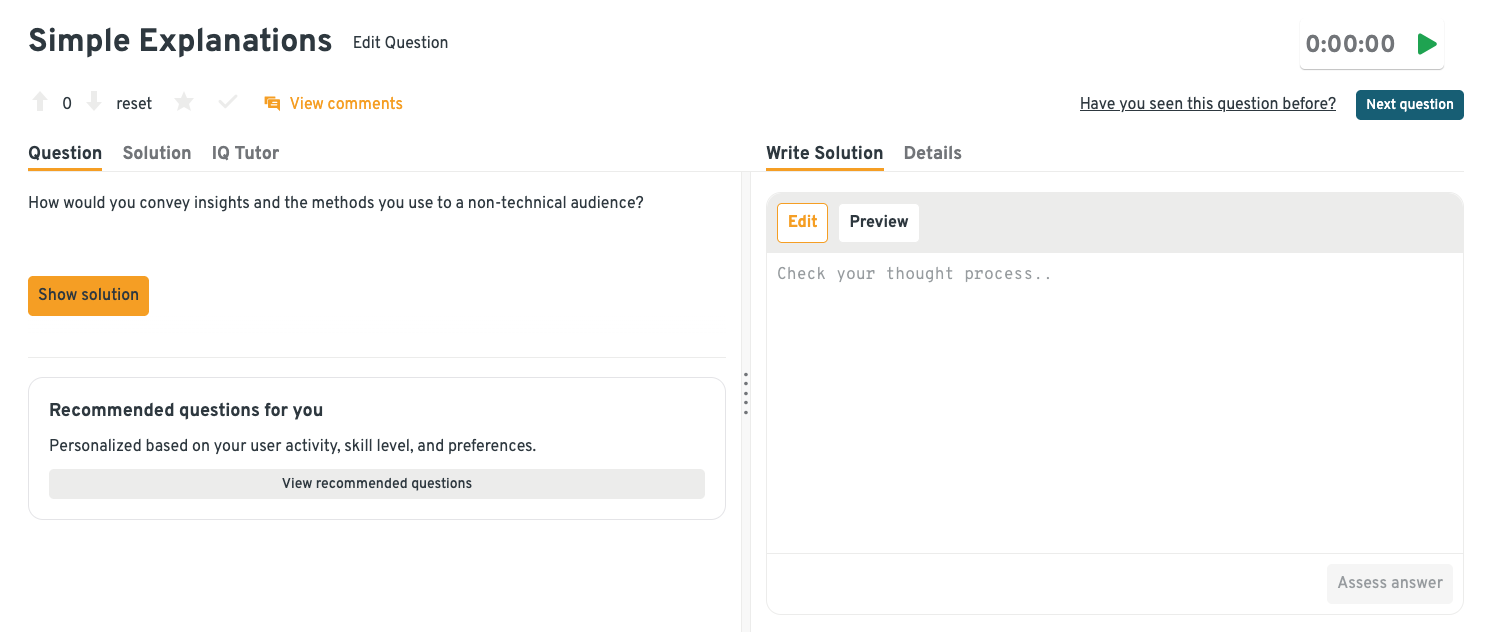

Behavioral interview questions

Behavioral interviews at Scale AI focus on ownership, speed, and accountability. Expect questions that probe how you’ve influenced decisions without authority, handled conflicting priorities, or learned from decisions that didn’t go as planned.

Describe a time you worked with incomplete data.

This question evaluates comfort with ambiguity and judgment under imperfect conditions. In addition to showing how you assessed risk and communicated uncertainty, quantify the size of the gap and the confidence range of your estimate when making reasonable assumptions. This shows that you understand operational risk, not just statistical correctness.

Sample Answer: While analyzing labeling throughput for a new task type, I found that several days of event data were missing due to a logging issue. I estimated throughput using the prior two weeks of comparable tasks, which covered ~80% of expected volume. I also flagged a ±10% confidence range so ops leaders could plan capacity while logging was fixed. Once the full data was available, I validated that the conclusions still held.

-

To show communication clarity and empathy for non-technical audiences, emphasize simplifying concepts, focusing on impact, and tailoring the explanation to the listener’s goals. Tie the explanation to a measurable operational action, i.e., what someone should do differently and what changes as a result.

Sample Answer: I start by anchoring the explanation to the decision the metric supports rather than the math behind it. For example, instead of describing a weighted quality score, I explain what actions improve it and what happens when it drops. I use simple visuals or concrete examples to make the concept intuitive. By reframing the metric around actions, operators were able to adjust workflows, and we saw quality flags drop by ~20% over the next two weeks.

When answering prompts like this on the Interview Query dashboard, the AI Tutor and community comments can be especially helpful. Use them to refine your storytelling, pressure-test how your explanation sounds to others, and see how different candidates frame similar behavioral responses.

-

This question tests collaboration, influence, and conflict resolution in cross-functional environments like Scale’s product, ops, and ML teams. Candidates should not only highlight listening, but also show how data reframing resolved conflict by connecting trade-offs to end-to-end operational outcomes.

Sample Answer: I once worked with product and operations teams that had conflicting priorities around task speed versus quality. I organized a working session to align on shared goals, and the analysis showed that a 5% drop in quality increased rework enough to add nearly a full day to turnaround time. Framing the tradeoff in terms of end customer impact helped both teams agree on revised speed and quality thresholds.

Tell me about a time your analysis led to an unexpected outcome.

This prompt evaluates intellectual honesty, adaptability, and the ability to revise assumptions. It’s not enough to show curiosity; quantify the delta between expectation and reality, then show the improvement after course correction.

Sample Answer: I analyzed a workflow change that was expected to increase labeling speed by 10%. Instead, the data showed that throughput had dropped by ~8%. I shared this insight with the team and proposed a revised workflow. As a result, we recovered speed while improving contributor satisfaction scores.

Describe a situation where speed mattered more than precision.

Scale AI values timely insights that unblock decisions, especially in operational or customer-facing contexts. On top of explaining how you scoped a lightweight analysis, specify how quickly the insight was delivered, what decision it enabled, and how you followed up with more rigorous validation.

Sample Answer: During a customer escalation, leadership needed quick insight into whether a quality issue was isolated or systemic. Within a few hours, I analyzed a sample of ~200 recent tasks and showed the issue was isolated to one workflow. That allowed the team to intervene the same day, instead of pausing the broader pipeline while a deeper analysis was being completed.

To go deeper and build true interview readiness, explore Interview Query’s question bank, where you’ll find a comprehensive set of SQL, case, and behavioral questions with guided solutions—all curated specifically for business analyst candidates like you.

How to Prepare for a Scale AI Business Analyst Interview

Preparing for a Scale AI business analyst interview looks very different from studying for a traditional business intelligence role. These interviews are intentionally ambiguous, closer to how real decisions get made inside an AI infrastructure company than to textbook analytics problems. What interviewers are really testing is whether you can impose structure on messy situations, reason through trade-offs, and explain why a recommendation makes sense even when the data is incomplete. Integrating the following steps into your interview prep can help you become a successful candidate.

Practice ambiguous SQL and analytics scenarios: At Scale, SQL is a baseline skill; nearly every qualified candidate can write joins and aggregations. Be comfortable querying messy operational data, validating assumptions, and explaining what the results imply for product, operations, or customers. Focus on questions where the answer is not obvious and where multiple interpretations are possible.

Tip: Use Interview Query’s SQL learning path to brush up on fundamentals and apply them to interview settings. After solving a problem from the course, force yourself to write a one-paragraph “exec summary” stating the decision you’d recommend.

Build fluency in AI and data quality metrics: Understand how labeling accuracy, throughput, reviewer coverage, and evaluation metrics affect model performance and customer trust. Review concepts such as precision and recall trade-offs, quality versus cost dynamics, and how evaluation metrics translate into customer trust and enterprise adoption.

Tip: Review important AI & ML news and announcements from Scale AI’s website. Then, create a simple one-page glossary translating common ML and data quality metrics into business outcomes.
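For the glossary, it helps to have the precision and recall definitions at hand. The sketch below applies them to a hypothetical QA reviewer that flags defective labels, with two invented threshold settings.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return precision, recall

# Hypothetical counts for 100 truly defective labels.
# Strict threshold: fewer flags, fewer false alarms, more misses.
strict = precision_recall(true_pos=80, false_pos=20, false_neg=20)
# Lenient threshold: catches more defects but raises many false alarms.
lenient = precision_recall(true_pos=95, false_pos=95, false_neg=5)
print(strict, lenient)
```

In business terms, the lenient setting misses fewer defects (higher recall, better for customer trust) but sends far more good labels to rework (lower precision, higher cost).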

Don’t forget behavioral prep: Prepare stories that show how you moved forward without perfect data, influenced cross-functional teams, or made a call under time pressure. Keep your answers tight and impact-driven: what problem you faced, what decision you made, and what changed as a result. Avoid over-indexing on effort; outcomes and learning matter more.

Tip: When rehearsing your stories using Interview Query’s behavioral question bank, set the built-in timer to 90 seconds to ensure you can deliver it clearly and confidently under interview pressure.

Practice decision framing, not just analysis: Before you dive into metrics or SQL, make it a habit to open answers with a short framing statement like, “The decision here is whether we should optimize for cost or quality in the short term,” or “This analysis helps determine if the issue is systemic or isolated.” Think like an internal advisor rather than a task executor to signal seniority, business judgment, and readiness.

Tip: Practice rewriting past analyses with a structured approach that includes a recommendation, risks, and next steps—even if the original work didn’t require it.

Simulate the interview environment: Open-ended case prompts, live SQL exercises, and executive-style discussions are hard to fake if you’ve only studied in isolation. Mock interviews and realistic case practice force you to articulate structure, defend assumptions, and adjust when challenged.

Tip: Make use of Interview Query’s mock interviews, which are built to mirror this exact style and help you practice communicating your responses the way Scale AI expects on interview day.

If you want more personalized feedback on your structure, decision framing, and communication style, Interview Query’s coaching sessions offer targeted, one-on-one prep tailored to the exact gaps interviewers look for.

Role Overview and Culture at Scale AI

Since the business analyst role at Scale AI sits at the intersection of analytics, operations, and strategy, tasks go beyond mere maintenance of static dashboards and routine reports. Instead, analysts transform signals from data quality, model performance, and customer usage into decisions that shape AI systems end to end, from how they are built to how they are evaluated and deployed at scale.

On a day-to-day basis, business analysts work closely with product managers, engineers, operations leaders, and strategic initiatives teams. Typical responsibilities include:

- Analyzing data labeling efficiency, throughput, and quality to surface operational bottlenecks

- Defining and refining metrics for AI evaluation and data quality products

- Investigating issues in enterprise customer deployments and translating findings into recommendations

- Partnering with cross-functional teams to assess trade-offs around cost, quality, and speed

- Communicating insights clearly to both technical and non-technical stakeholders

Because Scale AI supports frontier research labs and large enterprises, analysts are exposed to cutting-edge AI use cases and work on problems that directly influence some of the most advanced models in production. The fast-paced, high-ownership environment creates visibility with leadership and accelerates overall growth, but also requires comfort with ambiguity and imperfect information.

Learn more about how business analysts operate in the company’s AI ecosystem by reading our full Scale AI interview guide.

Average Scale AI Business Analyst Salary

At Scale AI, business analyst compensation reflects both the technical demands of the role and the company’s position in the competitive AI infrastructure market. Total compensation varies based on seniority, location, and team scope, with meaningful differences between entry-level and senior analyst roles.

According to Levels.fyi, reported total compensation for business analysts in the United States typically ranges from around $117K at entry-level (L3) to about $252K for more senior individual contributors (L5). Median pay sits comfortably above traditional business intelligence roles with a total package near $220K, driven in part by the impact analysts have on product strategy, operations, and enterprise customers.

Below is the salary breakdown using aggregated data from sources like Levels.fyi, consisting of base salary, equity, and bonuses.

| Level | Total / Year | Base / Year | Stock / Year | Bonus / Year |
|---|---|---|---|---|
| Business Analyst | ~$155K | ~$130K | ~$20K | ~$5K |
| Senior Business Analyst | ~$190K | ~$155K | ~$30K | ~$5K |
| Lead / Principal Analyst | ~$225K | ~$175K | ~$45K | ~$5K |

Compensation typically increases meaningfully after equity vesting begins in year two.

Regional salary comparison

| Region | Salary Range | Notes |
|---|---|---|
| United States (Bay Area) | $180K–$225K | Highest compensation driven by cost of living and role scope |
| United States (Remote) | $155K–$200K | Adjusted for location with similar equity structure |
| International | Varies | Compensation aligned to local market benchmarks |

Overall, Scale AI compensation reflects the premium placed on analytics talent that can operate effectively in ambiguous, high-impact AI environments.

Explore Interview Query’s salary guides to better benchmark your offer based on industry trends and influential factors like seniority, education, and location.

FAQs

How is the Scale AI business analyst role different from other analytics roles?

Scale AI business analysts operate in an AI infrastructure environment rather than a traditional software-as-a-service setting. The role emphasizes decision-making under uncertainty, exposure to model evaluation and data quality metrics, and close collaboration with product and engineering leaders. This makes interviews more case-driven and less focused on static reporting.

Is there always a take-home challenge in the Scale AI interview process?

Not every candidate completes a take-home challenge, but many business analyst interview loops include either a take-home case or a live case study. Teams use these exercises to evaluate how candidates structure ambiguous problems, communicate assumptions, and make recommendations with incomplete data. Even when a take-home is not required, candidates should expect case-style questions that simulate real internal analyses.

How technical is the SQL round at Scale AI?

The SQL round is moderately technical but intentionally practical. Interviewers are less concerned with advanced syntax and more focused on how you use SQL to generate insight. Candidates are expected to write clear, correct queries, validate data quality, and explain what the results mean for the business. Strong performance comes from pairing solid SQL fundamentals with thoughtful interpretation.

How long does the Scale AI business analyst interview process take?

The full interview process typically takes three to five weeks from initial recruiter screen to final decision. Timing varies based on team availability and whether a take-home challenge is included. Candidates who prepare early for SQL, case studies, and behavioral interviews tend to move more smoothly through the process.

How should I prepare if I come from a traditional business intelligence background?

Candidates from business intelligence roles should focus on building comfort with ambiguity and AI-adjacent metrics. Practicing open-ended case studies and operational analytics scenarios is more valuable than memorizing SQL patterns.

Begin Your Scale AI Business Analyst Journey with Interview Query

Acing the Scale AI business analyst interview takes more than textbook SQL or canned case answers. Focus your effort on mastering structured reasoning, open-ended analytics, and clear decision-oriented communication. Scale AI interviewers look for candidates who think like internal consultants: framing decisions early, navigating ambiguity confidently, and tying data back to business outcomes.

To build well-rounded readiness, leverage Interview Query’s question bank for deep practice with SQL and analytics problems, explore tailored learning paths to shore up both technical fundamentals and business judgment, and work through real-world Challenges that mirror the complexity of actual interview scenarios. Then, refine your strategy and gain personalized feedback on your structure, storytelling, and impact through 1:1 coaching sessions.