Scale AI Research Scientist Interview Guide: Process, Questions & Prep (2025)

Introduction

As Scale AI grows its footprint in training data, alignment, and model reliability, the research scientist role has become central to building systems that evaluate, stress test, and refine large language models. Preparing for a Scale AI research scientist interview means understanding how the company’s rapid expansion into enterprise model evaluation, AI agents, and government partnerships is reshaping expectations for technical talent.

Within the first stages of the Scale AI research scientist interview, candidates are assessed not only on modeling depth, but also on their ability to reason quickly, debug ambiguous failure cases, and design clear evaluation frameworks. The Scale AI interview process places substantial weight on experimentation quality and analytical rigor, so strong candidates are expected show both research fluency and practical engineering judgment.

What does a Scale AI research scientist do?

A Scale AI Research Scientist operates at the center of large scale evaluation, alignment, and experimentation, shaping how Scale AI Research advances enterprise model assessment, multimodal systems, and AI agent reliability. The role blends scientific investigation with practical engineering judgment, ensuring that models behave reliably in real world settings rather than controlled academic environments.

Core responsibilities include:

- Building and refining evaluation pipelines that measure reasoning quality, safety, and robustness.

- Designing metrics that expose failure modes across hallucinations, preference modeling, and RLHF workflows.

- Analyzing model behavior across edge cases using structured, repeatable experimentation.

- Partnering with engineering teams to enhance data generation, labeling quality, and structured output reliability.

- Debugging unpredictable model behavior and translating findings into improvements for production systems.

Compared with a Scale AI Machine Learning Research Engineer, the Scale AI Research Scientist Role focuses less on algorithm implementation and more on measurement design, statistical soundness, and failure mode analysis. Across all projects, researchers ensure that Scale AI’s models, pipelines, and agent systems behave consistently, safely, and accurately at scale.

Why work at Scale AI with this role?

Joining Scale AI as a Research Scientist means contributing directly to fast moving work in evaluation, alignment, and real world model reliability for enterprise clients, OpenAI-level partners, and expanding government programs. Scale AI Research drives rapid product iteration by defining benchmarks, safety metrics, and alignment standards that influence how large language models and multimodal agents behave in production. Throughout the Scale AI interview, this emphasis on measurable impact, fast experimentation, and clear scientific reasoning is the core theme being evaluated.

Scale AI Research Scientist Interview Process

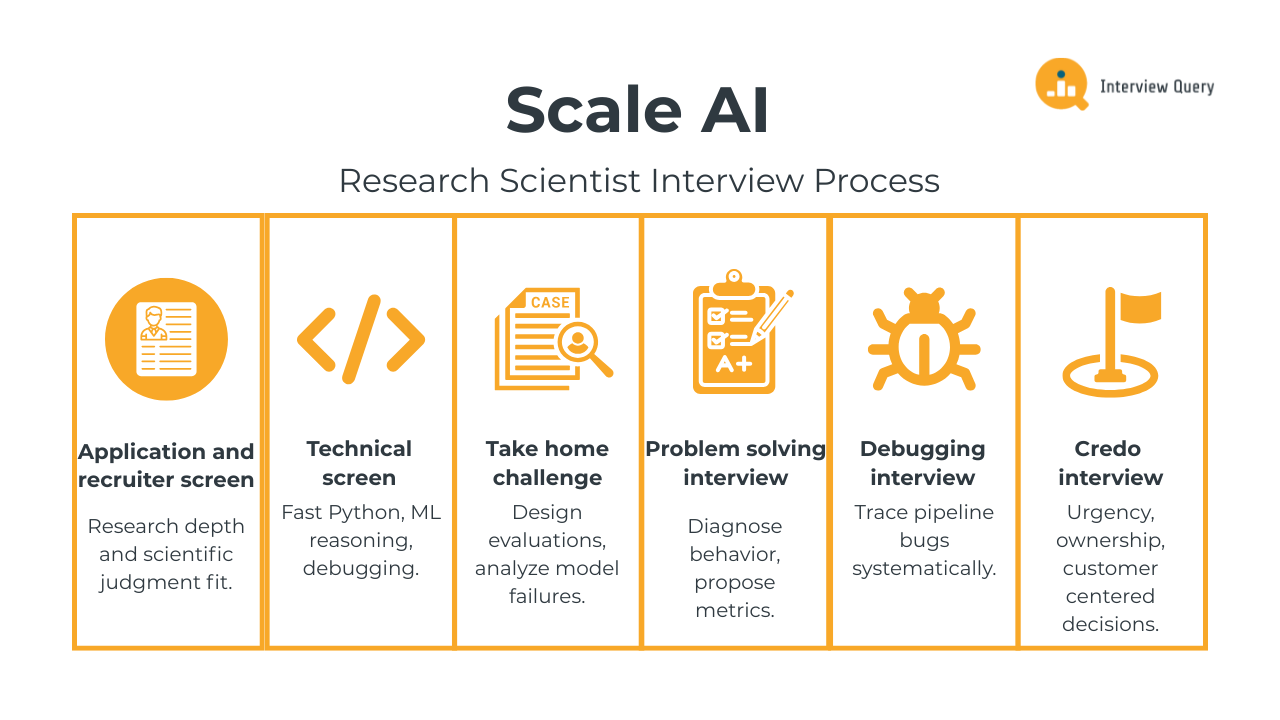

The Scale AI Interview Process evaluates how quickly and rigorously candidates reason about model behavior, experimentation quality, and scientific decision making. Across the loop, you will be assessed on technical depth, research intuition, structured debugging, problem solving pace, and alignment with Scale AI’s Credo. The process typically includes four to six stages: the application review and recruiter conversation, the Scale AI Technical Interview, the take home challenge, the Scale AI Problem Solving Interview, the Scale AI Debugging Interview, and finally the Credo interview.

Each stage emphasizes clarity, reproducibility, and the ability to work with real model failures that appear at production scale. Repetition of the exact phrase Scale AI Interview Process throughout the loop reflects how consistently candidates must demonstrate depth, speed, and judgment at every step.

Stage 1: Application and recruiter screen

The Scale AI Recruiting Process begins with a review of your research background, including publications, evaluation work, and hands on experimentation with language or multimodal systems. During the recruiter screen, you will discuss how you handle ambiguity, your research interests, and how you move quickly in high throughput environments.

The Scale AI Hiring Process favors candidates who can communicate measurable results, such as evaluation methods they created or insights that improved model behavior. Recruiters also look for signs of ownership and clear scientific judgment.

Stage 2: Technical screen

The Scale AI Technical Interview is one of the most variable parts of the loop. It typically includes live Python exercises involving algorithms, debugging, vector operations, or probability based reasoning. Recent interview experiences show that candidates may be asked to implement nucleus sampling, explain masking in multihead attention, or reason through subtle failures in an evaluation pipeline. Tasks can include preprocessing JSON files, performing text normalization at speed, or analyzing where an inference step produces incorrect outputs.

Because the Scale AI Coding Interview is fast paced, interviewers pay close attention to how you handle uncertainty, verify assumptions, and articulate each decision out loud. Many candidates also see targeted Scale AI Coding Interview Questions related to evaluation metrics, structured prediction, or alignment methods. For research oriented roles, an extended Scale AI ML Coding Interview may include reasoning about multimodal models, reward shaping, or reliability metrics.

Stage 3: Take home challenge

The take home assignment reflects the real nature of work inside Scale AI Research. The Scale AI Take Home Challenge usually involves designing an evaluation pipeline, analyzing model failure cases, or conducting a small controlled experiment. In some cases, tasks resemble the responsibilities seen in the Scale AI ML Take Home, requiring structured data cleaning, concise experiment framing, and actionable insights.

While candidates sometimes search online for terms like Scale AI Take-Home Challenge GitHub, each assignment is unique and emphasizes clarity, reproducibility, and practical relevance. Interviewers look for clean reasoning, transparent assumptions, and findings that could influence production model behavior.

Stage 4: Problem solving interview

The Scale AI Problem Solving Interview evaluates how you navigate ambiguity and reason about model behavior at scale. You may be asked to diagnose hallucinations, evaluate preference inconsistencies, identify data quality failures, or propose new metrics for reasoning.

These discussions reveal how you compare tradeoffs, isolate root causes, and think systematically under pressure. Interviewers look for structured logic and a strong ability to translate model observations into scientific hypotheses.

Stage 5: Debugging interview

The Scale AI Debugging Interview is a deep test of your ability to trace, isolate, and resolve failures in pipelines, preprocessing, model evaluation, or internal logic. You may encounter issues related to incorrect masking, token misalignment, data drift, silent normalization bugs, or malformed JSON structures. This round evaluates whether you can reason systematically, maintain clear hypotheses, and converge quickly toward a validated fix. The ability to debug under uncertainty is a core requirement for Research Scientists.

Stage 6: Credo interview

The Scale AI Credo Interview examines your ability to operate within Scale AI’s core values, including urgency, ownership, and customer centered decision making. Expect behavioral questions about navigating disagreements, making fast decisions with incomplete information, or driving a research outcome under tight constraints. Successful candidates show clarity, resilience, and ownership of both failures and successes.

What questions are asked in a Scale AI research scientist interview?

Preparing for Scale AI Interview Questions requires understanding how the company evaluates research depth, coding fluency, debugging structure, and scientific reasoning. The loop typically tests four areas: coding and debugging fundamentals, machine learning and modeling intuition, experiment design and research reasoning, and alignment with Scale AI’s Credo.

These categories show up consistently across ScaleAI Interview Questions and Scale AI Data Science Skill Test Questions, and they reflect the real challenges Research Scientists solve when assessing model reliability at scale. Across all categories, interviewers look for structured thinking, clarity under time pressure, and the ability to diagnose issues using measurable evidence rather than intuition alone.

Coding and debugging questions

Coding and debugging tasks assess how well you isolate errors, validate assumptions, and reason through pipeline behavior. Interviewers expect structured hypothesis testing and clean implementation practices, since these skills translate directly to daily work in evaluation and alignment.

Implement a Regex Matcher Supporting Dot and Star Operators

This question tests your ability to model sequence alignment and control flow using recursion or dynamic programming to handle ambiguous pattern matching across an entire input string. For a Scale AI Research Scientist, interviewers use this to assess how you reason about symbolic rule systems, manage combinatorial explosion, and build reliable evaluation logic that mirrors how model outputs are validated against flexible but strict specifications.

Simulate Normal Samples and Estimate an Order-Statistic Gap

This question tests your ability to combine stochastic simulation, order statistics, and aggregation to estimate a stable expected value from repeated random sampling. For a Scale AI Research Scientist, this evaluates how well you reason about sampling behavior, distributional properties, and empirical validation, which directly maps to how model behavior, evaluator variance, and metric stability are tested at scale.

How Would You Adapt a CNN to Handle Systematic Label Noise Between Two Classes

This question is about designing learning strategies that remain stable when labels are systematically corrupted, especially under distribution shift and poor observation conditions. For a Scale AI Research Scientist, this evaluates your ability to reason about data quality, label noise modeling, and evaluation robustness, which directly impacts how large scale training and alignment datasets are cleaned, audited, and trusted.

Design an Algorithm to Quantify Text Reading Difficulty for Non-Fluent Speakers

This question is about modeling text difficulty using NLP, feature engineering, and possibly supervised or self-supervised learning to map raw text to a continuous notion of readability for learners. For a Scale AI Research Scientist, it tests how you design measurable language quality signals, choose and validate difficulty metrics, and connect them to real users, which mirrors how Scale evaluates and routes model outputs to the right annotators, tasks, or end users based on fluency and comprehension levels.

Design a Real-Time Streaming System for High-Volume Transaction Data

This question is about designing a low-latency, fault-tolerant streaming architecture that replaces batch ingestion with continuous event processing for time-sensitive decisions. For a Scale AI Research Scientist, this tests how you think about real-time data reliability, evaluation latency, and signal integrity at scale, which directly mirrors how model outputs, human feedback, and safety signals must flow through Scale’s infrastructure without breaking consistency or trust.

Design an Anomaly Detection Model for High Volume Semi Structured Logs

This question is about building and validating an unsupervised or weakly supervised anomaly detection system for noisy, semi structured log streams at scale, including representation learning, sequence modeling, and drift aware monitoring. For a Scale AI Research Scientist, it tests how you translate messy operational telemetry into reliable statistical signals for rare failure modes, and how you design evaluation frameworks that keep anomaly models trustworthy while the underlying infrastructure, customers, and workloads are constantly changing.

Machine learning and modeling questions

These questions resemble those asked in a Scale AI Machine Learning Research Engineer Interview and focus on reliability, modeling intuition, and evaluation tradeoffs. They require clear articulation of how model behavior changes under different setups.

Design an Experiment and Metrics to Compare Two Search Engines

This question is about setting up an online experiment and defining robust relevance and engagement metrics to decide which of two search systems provides higher quality results for users. For a Scale AI Research Scientist, it tests how you think about evaluation design, metric choice, and human-in-the-loop judgment when comparing ranking or retrieval models, which maps directly to how Scale measures improvements in LLM evaluation systems, search over datasets, and enterprise tooling for model quality.

Design a Text to Image Retrieval System for Large Product Catalogs

This question is about building an end to end multimodal retrieval system that maps free form text queries into a joint embedding space with images so that relevant products can be efficiently retrieved at scale. For a Scale AI Research Scientist, it tests how you think about representation learning, large scale retrieval, and evaluation frameworks for multimodal models, which are central to how Scale builds and benchmarks systems that connect natural language instructions to visual data for enterprise and agentic use cases.

Design and Evaluate a High Stakes Bomb Detection Model

This question is about framing a rare event classification problem end to end, including how you choose model inputs and outputs, define labels, and design accuracy and robustness measurements for a security critical task. For a Scale AI Research Scientist, it tests how you think about safety sensitive classifiers under extreme class imbalance, how you trade off false positives and false negatives, and how you would validate such a system with human oversight and realistic evaluation pipelines before deployment.

Explain Common Failure Modes in Reinforcement Learning from Human Feedback Pipelines

This question is about identifying where RLHF systems can break down across data collection, reward modeling, policy optimization, and evaluation due to bias, misalignment, reward hacking, or distribution shift. For a Scale AI Research Scientist, interviewers ask this to assess how deeply you understand human-in-the-loop training at scale, since Scale’s core work depends on building RLHF pipelines that remain stable, interpretable, and trustworthy under real-world annotation noise and model misuse risks.

Differentiate Reward Modeling and Preference Modeling in Human Feedback Systems

This question is about clarifying the conceptual and practical differences between learning a scalar reward function versus learning relative preferences from pairwise or ranked human judgments. For a Scale AI Research Scientist, this tests whether you understand how different supervision signals shape RLHF pipelines in practice, since Scale’s work depends on choosing the right feedback representation to balance annotation efficiency, alignment fidelity, and downstream policy behavior.

Research reasoning and experiment design

These questions evaluate how you construct hypotheses, design sound experiments, and diagnose model inconsistencies. Strong answers show systematic thinking and a commitment to reproducibility.

Identify and Mitigate Size-Based Bias in Generative Financial Summarization Models

This question is about diagnosing and correcting systematic performance bias in a generative model by analyzing data imbalance, representation gaps, and model behavior across different subpopulations. For a Scale AI Research Scientist, this evaluates how you audit model fairness, trace bias back to data and training dynamics, and validate mitigation strategies using rigorous, human grounded evaluation pipelines that Scale relies on for trusted enterprise and regulatory use cases.

How Would You Choose Between a Fast Baseline Recommender and a More Accurate Deep Model

This question is about making a principled tradeoff between time to deployment, expected performance gains, engineering cost, and experimental risk when choosing between two modeling approaches. For a Scale AI Research Scientist, this evaluates how you prioritize research investment versus iteration speed, and how you justify added model complexity based on measurable improvements in evaluation quality, downstream alignment outcomes, and resource constraints across large scale ML systems.

Decide Whether an A/B Test Lift in Click Through Rate Is Statistically Significant

This question is about applying hypothesis testing to compare click through rates between two variants, choosing an appropriate test, and interpreting p values or confidence intervals to decide if the observed lift is due to chance. For a Scale AI Research Scientist, it tests how rigorously you design and read experiments on model changes or evaluation policies, ensuring that claimed improvements in quality metrics are statistically sound before those changes influence large scale deployments and customer decisions.

Explain Whether Unequal Sample Sizes Bias an A/B Test and Why It Matters

This question is about understanding how unbalanced sample sizes affect variance, power, and statistical inference in A/B tests, and when imbalance does or does not introduce bias into estimated treatment effects. For a Scale AI Research Scientist, it tests whether you can reason precisely about experimental design and the reliability of evaluation results, which is critical when judging improvements to model quality, reward models, or RLHF policies from large scale but imperfectly balanced experiments.

Analyze A/B Tests With Non Normal Metrics and Limited Data

This question is about choosing appropriate statistical methods for an A/B test when the outcome metric is non normal and sample sizes are small, including when to use nonparametric tests, transformations, or bootstrapping. For a Scale AI Research Scientist, it tests whether you can still draw reliable conclusions about model or policy changes when standard Gaussian assumptions fail, which is critical for evaluating RLHF tweaks, new reward models, or safety interventions on messy, heavy tailed quality metrics.

Behavioral and Credo questions

These questions assess ownership, urgency, communication clarity, and values alignment. Strong answers include measurable outcomes and demonstrate scientific discipline.

Tell me about a high urgency project where you delivered impact quickly

This evaluates how you prioritize, make tradeoffs, and execute under time pressure without sacrificing scientific rigor. Interviewers want to see whether you can scope problems fast, cut through noise, and still produce results that matter.

Describe a time you debugged a difficult research issue

This tests your technical depth, persistence, and debugging methodology when experiments fail in non-obvious ways. The goal is to understand how you reason through uncertainty in complex systems rather than relying on trial and error.

How do you handle ambiguity in research direction?

This assesses your ability to self-direct when goals, data, or constraints are unclear. Strong answers show how you form hypotheses, choose what to test first, and reduce uncertainty through disciplined experimentation.

Give an example of disagreeing with a research lead

This evaluates your ability to challenge ideas with evidence while maintaining trust and alignment. Interviewers look for scientific maturity, not just confidence, especially in high-stakes research decisions.

Describe a failure that improved your experimentation approach

This reveals how you learn from mistakes and improve your experimental design, validation strategy, or evaluation methods. Strong answers show concrete changes in how you now run experiments, not just lessons in hindsight.

How to prepare for the Scale AI research scientist interview

Effective Scale AI Interview Prep means focusing on the skills that matter most: clean coding, structured debugging, scientific reasoning, and strong evaluation intuition. Because Scale AI Research emphasizes real world reliability, preparation should center on how large models fail, how to measure those failures, and how to design experiments that reveal useful patterns.

- Strengthen your coding fundamentals Practice Python problems involving tokenization, probability transforms, JSON parsing, and pipeline debugging. Use a plain editor without autocomplete to simulate interview conditions.

- Build a repeatable debugging framework Effective Scale AI Interview Preparation requires a disciplined method: verify assumptions, reproduce the failure on a minimal example, Isolate components, and test hypotheses step by step. Focus on common LLM failure modes like masking issues, normalization errors, reward drift, and subtle data quality problems.

- Deepen your evaluation and alignment intuition Read recent papers on alignment, preference modeling, and evaluation metrics. Recreate small experiments: compare model outputs, design simple rubrics, or probe hallucinations with adversarial prompts. These “mini pipelines” closely mirror take home and onsite tasks.

- Refine your scientific reasoning Practice articulating tradeoffs, ranking hypotheses, and explaining why a metric or experiment design is appropriate. Use examples from real projects to show how you navigate ambiguity and diagnose complex research issues.

- Mock Interviews Improve your reasoning under pressure by booking an Interview Query Mock Interview. These sessions simulate real debugging and evaluation challenges and help you sharpen both clarity and depth.

By preparing this way, you enter the interview with stronger intuition, clearer reasoning, and a deeper understanding of how Scale AI evaluates research impact.

Average Scale AI research scientist salary

Scale AI Research Scientists earn competitive compensation across levels, and the figures below use publicly available Machine Learning Engineer data from Levels.fyi as a conservative proxy, since Levels.fyi does not currently list verified Research Scientist submissions for Scale AI. Total compensation varies by seniority, scope, and location, and packages tend to be stock heavy given the company’s growth stage and equity philosophy.

| Level | Total / Year | Base / Year | Stock / Year | Bonus / Year |

|---|---|---|---|---|

| Research Scientist (est.) | ~$240K–$300K | ~$170K–$185K | ~$50K–$90K | ~$10K–$20K |

| Senior Research Scientist (est.) | ~$300K–$380K | ~$180K–$200K | ~$80K–$150K | ~$15K–$30K |

| Staff Research Scientist (est.) | ~$380K–$500K+ | ~$195K–$220K | ~$150K–$250K | ~$20K–$40K |

Compensation often rises significantly after year two once stock vesting accelerates.

Regional salary comparison

| Region | Salary Range | Notes | Source |

|---|---|---|---|

| San Francisco | ~$300K–$500K | Highest equity and cash | Levels.fyi (proxy role) |

| New York | ~$260K–$400K | Lower equity relative to SF | Levels.fyi (proxy role) |

| Remote US | ~$220K–$330K | Broad variation by state | Levels.fyi (proxy role) |

Disclaimer: These ranges are estimates based on Scale AI Machine Learning Engineer entries from Levels.fyi and may differ from actual Research Scientist compensation.

FAQs

How hard is the Scale AI research scientist interview?

The Scale AI interview is challenging because it tests depth, speed, and applied reasoning simultaneously. Coding problems are fast paced, debugging tasks mirror real evaluation failures, and research discussions require structured scientific thinking. The process rewards candidates who can work through ambiguity with discipline and communicate clear, testable insights.

How technical is the debugging round?

The debugging round is highly technical and reflects real issues found inside Scale AI Research. You may encounter masking problems, data drift, malformed JSON structures, inconsistent normalization, or incorrect metric implementations. Interviewers look for systematic reasoning with a repeatable workflow: reproduce the issue, isolate components, form hypotheses, and test them step by step.

How much research depth is required for this role?

You are expected to understand experimental design, evaluation methodology, preference modeling, and how large language models fail in both structured and open ended settings. Depth matters, but interviewers prioritize clarity and reasoning over niche specialization. Strong candidates can explain tradeoffs, interpret results, and propose measurable improvements to model behavior.

What distinguishes the Research Scientist role from the ML Engineer role at Scale AI?

Research Scientists focus on evaluation design, model reliability, experiment structure, and failure analysis. ML Engineers focus more on scalable implementation, optimization, and deploying model improvements into production. Both roles require strong coding skills, but the Research Scientist role prioritizes scientific exploration and measurement design.

How should I practice for the take home challenge?

Practice designing small evaluation pipelines, interpreting model outputs, and writing concise experiment summaries. Focus on clarity, transparency, and actionable insights rather than overengineering. Create mini experiments where you compare outputs, design rubrics, or analyze hallucinations. Time boxed practice sessions help simulate real conditions.

How long does the Scale AI interview process take?

Most candidates complete the process within two to four weeks, depending on scheduling and the complexity of the take home challenge. Faster loops often involve back to back technical interviews and aligned availability across teams.

What helps candidates stand out?

Consistency across interviews matters most. Candidates who reason aloud, validate assumptions, work systematically through failures, and present insights with precision tend to perform well. Scale AI values speed, clarity, and rigor, so concise communication is a meaningful advantage.

Conclusion

To deepen your preparation, explore Interview Query’s full set of research focused interview guides, practice questions, and hands on challenges. Strengthen your evaluation intuition, debugging discipline, and scientific reasoning with structured practice designed for high performance technical roles. Start preparing with Interview Query to build confidence and stand out in every stage of the Scale AI Research Scientist interview.