Scale AI Software Engineer Interview Guide: Process, Questions & Prep (2025)

Introduction

Preparing for the Scale AI Software Engineer Interview means understanding how Scale builds the data infrastructure and evaluation systems powering today’s rapid growth in large language models. As companies deploy AI at unprecedented scale, engineers at Scale ship fast, own complex systems end to end, and maintain reliability under constant iteration. The Scale AI Interview reflects this pace by focusing on practical coding, debugging, and system design that mirrors real production challenges.

You will be evaluated on clarity of thought, technical depth, and your ability to build tools that support high-volume annotation and model evaluation. Each stage highlights how you approach problems, handle ambiguity, and deliver customer-focused solutions. This guide outlines each stage of the Scale AI Software Engineer Interview, highlights common questions, and shares proven strategies to help you stand out and prepare effectively with Interview Query.

What does a Scale AI software engineer do?

A Scale AI software engineer builds the systems that support large language model pipelines, real-time annotation operations, and evaluation workflows. Scale AI engineering focuses on backend development, platform reliability, and infrastructure that keeps high-throughput data processes fast and dependable. Engineers design APIs, manage distributed services, and optimize storage and retrieval so annotation platforms remain performant at scale. They work closely with machine learning teams, data operations, and product partners to turn model requirements into production-ready tools while moving quickly and owning features end to end.

Core responsibilities include:

- Developing backend services for annotation, data ingestion, and evaluation

- Improving reliability across distributed systems and internal platforms

- Automating infrastructure to support high-volume, low-latency workloads

- Collaborating with machine learning engineers and product teams

- Iterating rapidly based on customer needs and system performance

For hands-on practice, explore Interview Query Challenges to strengthen your coding and system design skills.

Why this role at Scale AI?

The case for working at Scale AI starts with the company’s outsized impact on enterprise AI: its data pipelines, evaluation systems, and high-reliability tooling support the rapid growth of partners deploying models like ChatGPT, Claude, and Gemini. Engineers here influence LLM quality directly by building infrastructure that shapes how models behave in production and how customers adopt AI at scale.

The fast-moving Scale AI culture emphasizes ownership, cross-functional problem solving, and delivering value quickly, giving software engineers the opportunity to work across data infrastructure, large-scale evaluation workflows, and distributed systems that operate at industry-leading volumes. This role offers uncommon exposure to both the engineering depth and product context behind modern AI systems, making it a strong fit for anyone seeking meaningful impact and accelerated growth in the generative AI ecosystem.

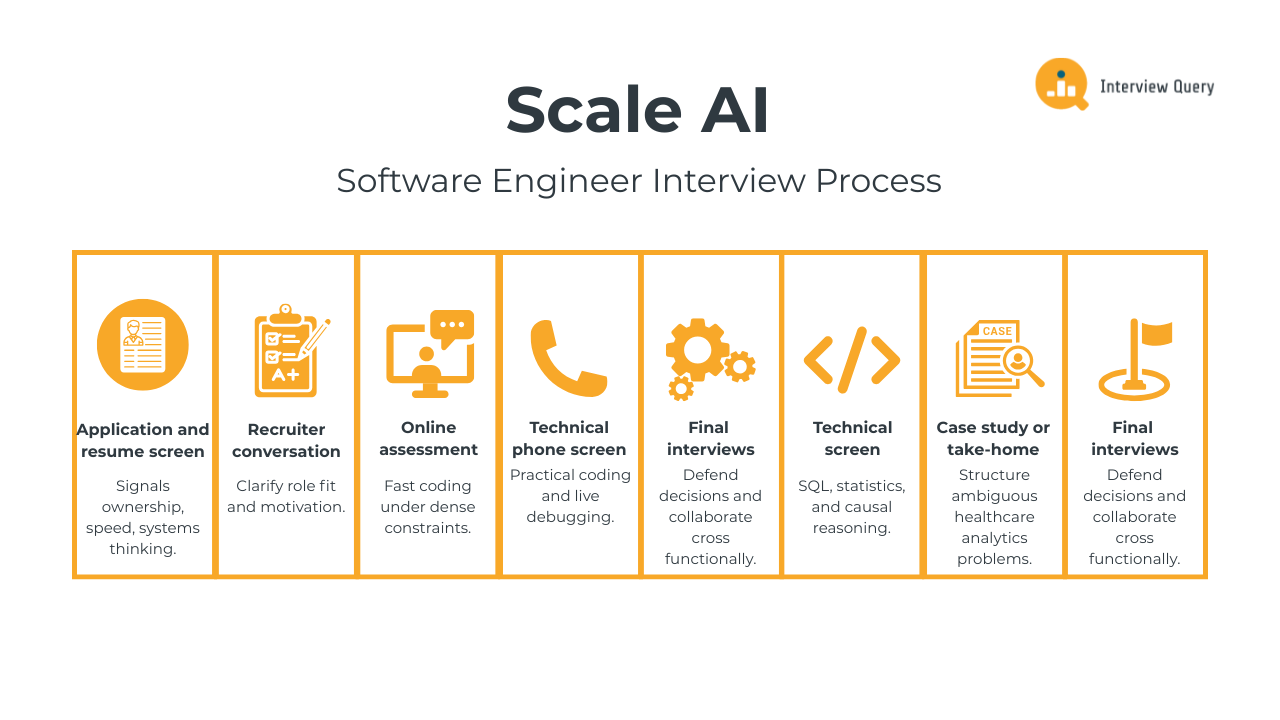

Scale AI Software Engineer Interview Process

The Scale AI Software Engineer Interview Process evaluates your ability to code quickly, debug accurately, and reason about real production systems. Candidates typically move through an application review, recruiter call, a technical screen or Scale AI HackerRank assessment, and then system design, Credo, and onsite rounds.

Early interviews focus on assessing implementation speed and problem-solving depth, while later rounds evaluate architectural thinking, ownership, and communication. This structure reflects the broader Scale AI Interview Process, designed to identify engineers who can operate effectively in a high-velocity environment.

Application and resume screen

The application review emphasizes signals aligned with Scale’s engineering culture: ownership, speed, and systems thinking. Recruiters look for backend, infrastructure, distributed systems, or real-time data experience, along with evidence of debugging strength and product awareness. Many candidates described resume discussions that centered on concrete accomplishments rather than theoretical concepts, which reinforces the importance of strong, quantifiable impact statements. On your resume, highlight experience with system design, data-intensive workloads, or components that supported large-scale operations. Demonstrating end-to-end ownership and the ability to ship quickly increases the likelihood of progressing.

Recruiter conversation

The Scale AI Recruiter Screen is usually a short call focused on your background, motivation, communication style, and alignment with the role. Candidates frequently noted that these calls were transparent and helpful, outlining upcoming interview stages and compensation expectations. This conversation rarely includes technical questions and instead focuses on how clearly you can explain your experience and how well you understand the role’s responsibilities. Be prepared to articulate your impact concisely and connect your past work to Scale’s mission and engineering challenges.

Online assessment (HackerRank)

The Scale AI HackerRank assessment is one of the most variable yet defining stages. Some candidates receive algorithmic problems that resemble LeetCode medium or hard difficulty. Others report custom exercises requiring object-oriented design, parsing logic, or fast implementation of complex rules. Several reviewers described the Scale AI Coding Interview portion as dense, with long prompts that require careful reading and rapid execution under tight time limits. The assessment measures correctness, speed, and your ability to reason independently without scaffolding. Strong performers manage edge cases, test their code proactively, and communicate their approach cleanly.

Technical phone screen

The phone screen introduces the first set of Scale AI Coding Interview Questions, often built around practical engineering tasks rather than abstract puzzles. Candidates reported questions involving JSON parsing, interval manipulation, multi-part logical constraints, or object-oriented design. Interviewers often ask candidates to walk through their code, justify decisions, and correct mistakes live. This stage evaluates structured thinking, error handling, and clarity. Success requires both implementation accuracy and the ability to verbalize your reasoning under time pressure.

System design interview

The Scale AI System Design Interview evaluates your ability to architect systems that support real-time annotation, high-volume request throughput, distributed services, and data integrity. Candidate reports describe design prompts involving routing pipelines, message reliability, rate limiting, and mechanisms to maintain consistency across concurrent operations. Interviewers look for a clear breakdown of components, thoughtful trade-offs, and an understanding of scalability and fault tolerance. Demonstrating awareness of monitoring, backpressure, replication, and performance tuning is particularly valuable.
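Rate limiting, one of the design prompts candidates report, is often discussed in terms of a token bucket. The following is a minimal single-process sketch under stated assumptions, not a description of how Scale implements it: the class name `TokenBucket`, its parameters, and the injectable `clock` are hypothetical and chosen for illustration.

```python
import time


class TokenBucket:
    """Illustrative token-bucket rate limiter (hypothetical names throughout)."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec      # tokens added per second
        self.capacity = capacity      # maximum burst size
        self.clock = clock            # injectable so the limiter can be tested
        self.tokens = capacity        # start with a full bucket
        self.last = clock()

    def allow(self, cost=1.0):
        """Return True and spend `cost` tokens if the request fits, else False."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

In an interview, a sketch like this is mainly a starting point for discussing where the state would live in a distributed deployment and what happens to rejected requests.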

The Credo interview

The Scale AI Credo Interview focuses on values critical to the company: ownership, urgency, customer focus, and clarity. Candidates on Glassdoor described behavioral questions centered on delivering under pressure, navigating ambiguity, resolving conflicts, and taking responsibility for outcomes. Interviewers expect structured, specific stories that demonstrate not only what you achieved but how you made decisions. Clear articulation of impact, learnings, and customer outcomes is essential.

The Scale AI card game (optional round)

Some candidates encounter the Scale AI Card Game Interview, a fast-paced implementation challenge built around a set of evolving rules or constraints. Many reviewers noted that it is less about algorithms and more about coding speed, accuracy, and the ability to translate a specification into a working system while thinking aloud. This round also surfaces how candidates prioritize, handle ambiguity, and reason about product-like logic. It is especially common in technical screening phases for mid-level roles.

Onsite engineering loop

The onsite loop typically consists of four to five interviews that combine deeper coding challenges, debugging tasks, backend engineering problems, system design, and another Credo session. Candidate feedback highlights LeetCode-hard problems, multi-part questions, and system design scenarios that examine both architectural thinking and execution detail. This stage verifies technical depth and assesses how effectively you collaborate, communicate trade-offs, and reason through incomplete information.

What questions are asked in a Scale AI software engineer interview?

Coding questions

Coding questions in the Scale AI software engineer interview focus on practical data manipulation, metric computation, and reasoning over real-world datasets. These problems test whether you can write clean, efficient logic that mirrors how Scale AI engineers analyze usage, revenue, and engagement signals at production scale.

Find the Top Five Frequently Bought Together Products

At its core, this evaluates how you identify relational patterns in transactional datasets using joins and aggregations. Scale AI uses this to gauge whether engineers can surface co-occurring signals similar to task grouping or dataset usage patterns at production scale.
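This question is usually posed against transaction tables, but the same join-and-aggregate idea can be sketched in plain Python. The snippet below is an illustration only: the `(order_id, product_id)` row shape and the function name `top_product_pairs` are assumptions, not the actual prompt's schema.

```python
from collections import Counter
from itertools import combinations


def top_product_pairs(orders, k=5):
    """Return the k most frequently co-purchased product pairs.

    `orders` is an iterable of (order_id, product_id) rows (hypothetical schema).
    """
    # Group products by order, using sets so duplicates within an order count once.
    baskets = {}
    for order_id, product_id in orders:
        baskets.setdefault(order_id, set()).add(product_id)

    pair_counts = Counter()
    for products in baskets.values():
        # Sorting makes (a, b) and (b, a) the same key.
        for pair in combinations(sorted(products), 2):
            pair_counts[pair] += 1

    # Order by count descending, then by pair for deterministic tie-breaking.
    return sorted(pair_counts.items(), key=lambda kv: (-kv[1], kv[0]))[:k]
```

The explicit tie-break is worth mentioning aloud, since "top five" is ambiguous when counts are equal.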

Count January 2020 Users With Over $100 in Successful Payments

This problem centers on converting raw payment events into a clearly defined user-level metric under strict filtering rules. For Scale AI, it mirrors the kind of revenue attribution and usage tracking required for enterprise AI customers.
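A rough sketch of the filter-then-aggregate logic follows. The row shape `(user_id, amount, status, paid_on)`, the `"success"` status value, and the function name are assumptions for illustration; the real prompt defines its own columns.

```python
from collections import defaultdict
from datetime import date


def count_big_spenders(payments, threshold=100.0):
    """Count users whose successful January 2020 payments total more than threshold.

    `payments` is an iterable of (user_id, amount, status, paid_on) rows
    (hypothetical schema; paid_on is a datetime.date).
    """
    start, end = date(2020, 1, 1), date(2020, 2, 1)  # half-open window [start, end)
    totals = defaultdict(float)
    for user_id, amount, status, paid_on in payments:
        if status == "success" and start <= paid_on < end:
            totals[user_id] += amount
    # "Over $100" is read here as strictly greater than the threshold.
    return sum(1 for total in totals.values() if total > threshold)
```

The half-open date window avoids off-by-one mistakes at the month boundary, which is the kind of filtering detail this question tends to probe.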

Compute Yearly Retention Rates for Annual Subscriptions

Here, the emphasis is on structuring time-based cohorts and measuring continuity across long subscription windows. Scale AI asks this because retention directly affects long-running labeling programs and multi-year AI contracts.

Detect Subscription Date Overlaps per User

This prompt targets your ability to reason about temporal overlaps and uncover logical conflicts in subscription data. At Scale AI, catching these issues is essential for maintaining accurate access control, billing, and entitlement systems.
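One way to reason about the overlap check is to sort each user's subscriptions by start date and track the latest end date seen so far. The sketch below assumes rows of `(user_id, start_date, end_date)` where a missing end date means the subscription is still active, and it treats a subscription that starts on the day another ends as non-overlapping. Both are assumptions to confirm with the interviewer.

```python
from collections import defaultdict
from datetime import date


def users_with_overlaps(subscriptions):
    """Return the set of user_ids that have at least one overlapping pair.

    `subscriptions` is an iterable of (user_id, start_date, end_date) rows
    (hypothetical schema); end_date may be None for an open-ended subscription.
    """
    by_user = defaultdict(list)
    for user_id, start, end in subscriptions:
        by_user[user_id].append((start, end or date.max))

    overlapping = set()
    for user_id, spans in by_user.items():
        spans.sort()
        latest_end = spans[0][1]
        for start, end in spans[1:]:
            # Assumption: touching intervals (start == latest_end) do not overlap.
            if start < latest_end:
                overlapping.add(user_id)
                break
            latest_end = max(latest_end, end)
    return overlapping
```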

Identify Age Groups With the Highest Clickthrough Rate in 2021

Rather than simple aggregation, this tests whether you can derive comparative performance metrics from interaction data. Scale AI relies on this skill to evaluate user engagement patterns and inform product experimentation.

Data Structure and Algorithms questions

Data structures and algorithms questions assess your fundamentals in traversal, optimization, and constraint-based reasoning. At Scale AI, these interviews confirm that software engineers can implement reliable, performant logic that underpins large-scale annotation pipelines, scheduling systems, and core infrastructure.

Search for a Value in a Linked List

This question checks your understanding of pointer-based data structures and your ability to traverse linear collections safely and efficiently. Scale AI asks this to confirm engineers are comfortable working close to core data representations that underpin performance-critical systems.
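A minimal sketch of the traversal, using the `search_list(target, linked_list)` signature from this question's example. The `Node` class is an assumption, since the prompt does not specify how the list is represented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Singly linked list node (hypothetical representation)."""
    value: int
    next: Optional["Node"] = None


def search_list(target, head):
    """Return True if any node in the list starting at head holds target."""
    node = head
    while node is not None:  # also handles an empty list (head is None)
        if node.value == target:
            return True
        node = node.next
    return False
```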

Example:

Input:

target = 2

linked_list = 3 -> 2 -> 5 -> 6 -> 8 -> None

Output:

search_list(target, linked_list) -> True

Determine Whether One String Is a Subsequence of Another

This problem focuses on sequential comparison logic and state tracking across iterations. For Scale AI, it reflects real-world needs such as validating ordered signals or ensuring data integrity in streamed or preprocessed inputs.
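The state tracking described here can be sketched with a single index into the shorter string. The parameter names `needle` and `haystack` are illustrative; only the function name `is_subsequence` comes from this question's example.

```python
def is_subsequence(needle, haystack):
    """Return True if needle's characters appear in haystack in the same order."""
    matched = 0  # how many characters of needle have been found so far
    for ch in haystack:
        if matched < len(needle) and ch == needle[matched]:
            matched += 1
    return matched == len(needle)
```

This runs in one pass over `haystack`, and an empty `needle` is treated as a subsequence of anything.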

Example:

Input:

string1 = 'abc'

string2 = 'asbsc'

string3 = 'acedb'

Output:

is_subsequence(string1, string2) -> True

is_subsequence(string1, string3) -> False

Compute the Greatest Common Divisor of Two Integers

This question evaluates your grasp of fundamental algorithmic reasoning and numerical edge cases. Scale AI uses this to assess whether candidates can implement reliable low-level logic that often appears inside larger optimization or validation routines.
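A short sketch using the Euclidean algorithm, assuming (as this question's example suggests) that the input arrives as a list of integers. The helper name `gcd_pair` and the choice to return 0 for an empty list are assumptions.

```python
from functools import reduce


def gcd_pair(a, b):
    """Euclidean algorithm for two integers; signs are ignored."""
    a, b = abs(a), abs(b)
    while b:
        a, b = b, a % b
    return a


def gcd(int_list):
    """Fold gcd_pair across the list; an empty list yields 0 by convention here."""
    return reduce(gcd_pair, int_list, 0)
```

Starting the fold at 0 works because the greatest common divisor of 0 and any integer n is the absolute value of n.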

Example:

Input:

int_list = [8, 16, 24]

Output:

gcd(int_list) -> 8

Count the Number of Valid Triangles From an Array

This prompt tests your ability to combine mathematical constraints with efficient iteration strategies. At Scale AI, this signals whether you can reason about feasibility conditions and performance when working with combinatorial data.
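One common approach, shown here as a sketch and not necessarily the expected answer, sorts the array and uses two pointers so that only the single inequality a + b > c needs checking. The function name `count_triangles` and the decision to ignore non-positive lengths are assumptions.

```python
def count_triangles(sides):
    """Count index triples whose values can form a non-degenerate triangle."""
    nums = sorted(s for s in sides if s > 0)  # non-positive lengths cannot form a triangle
    count = 0
    # Fix the longest side nums[k], then search the smaller two with two pointers.
    for k in range(len(nums) - 1, 1, -1):
        lo, hi = 0, k - 1
        while lo < hi:
            if nums[lo] + nums[hi] > nums[k]:
                # Every index in [lo, hi) also works when paired with hi.
                count += hi - lo
                hi -= 1
            else:
                lo += 1
    return count
```

After sorting, the scan is quadratic in the array length, compared with the cubic cost of checking every triple.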

Choose the Optimal Host Based on Availability Intervals

This question centers on interval reasoning and selecting optimal candidates under constraints. Scale AI values this skill because scheduling and resource allocation problems frequently arise in large-scale annotation and compute workflows.

System design interview questions

System design questions in the Scale AI software engineer interview evaluate how you architect scalable, fault-tolerant systems under real operational constraints. Interviewers look for clear data modeling, API design, and tradeoff analysis that reflect Scale AI’s need to support high-volume workflows and enterprise-grade reliability.

Design an Address Storage Schema

This question evaluates how you model flexible, real-world entities that vary across regions while remaining queryable and consistent. Scale AI asks this to assess whether engineers can design schemas that support global customers, messy inputs, and evolving data requirements.

Design a Type-Ahead Search System

This problem focuses on low-latency system design, ranking logic, and efficient data retrieval under real-time constraints. At Scale AI, this reflects the need to build fast feedback systems for internal tools and user-facing annotation or search workflows.
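The retrieval core of a type-ahead system is often explained with a prefix tree. The sketch below is a small in-memory illustration under stated assumptions: the class and method names are hypothetical, ranking is by a simple popularity count, and a production design would add caching, sharding, and precomputed top results per node.

```python
import heapq


class _Node:
    """Trie node: child links plus a popularity count for phrases ending here."""
    __slots__ = ("children", "count")

    def __init__(self):
        self.children = {}
        self.count = 0


class TypeAhead:
    """Illustrative prefix index (hypothetical API)."""

    def __init__(self):
        self.root = _Node()

    def add(self, phrase, count=1):
        node = self.root
        for ch in phrase:
            node = node.children.setdefault(ch, _Node())
        node.count += count

    def suggest(self, prefix, k=5):
        # Walk down to the node that represents the prefix.
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return []
        # Collect every completed phrase below that node.
        found, stack = [], [(node, prefix)]
        while stack:
            cur, text = stack.pop()
            if cur.count:
                found.append((cur.count, text))
            for ch, child in cur.children.items():
                stack.append((child, text + ch))
        # Highest count first; ties broken alphabetically.
        best = heapq.nsmallest(k, found, key=lambda item: (-item[0], item[1]))
        return [text for _, text in best]
```

Walking the whole subtree on every query is the obvious bottleneck, which makes it a natural place to discuss latency trade-offs.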

Design a Click Tracking Data Schema

This question examines how you structure high-volume event data for analysis, experimentation, and downstream consumption. Scale AI uses this to gauge whether candidates can support large-scale behavioral logging that feeds model training and product analytics.

Design a Swipe-Based Payment API

This prompt tests your ability to define clear API contracts, handle failure states, and ensure data consistency across distributed systems. Scale AI values this skill because reliable payment and usage tracking is essential for enterprise customers and long-running contracts.

Design a Yelp-Like Review and Recommendation System

This question assesses end-to-end system thinking, including data modeling, ranking, scalability, and user interaction patterns. For Scale AI, it demonstrates whether an engineer can design complex platforms that combine user-generated data with intelligent ranking and retrieval.

Behavioral and Credo questions

Behavioral and Credo questions assess how you communicate, make decisions, and align with Scale AI’s mission of building real-world AI infrastructure. These interviews focus on ownership, clarity, and judgment, especially when working across product, research, and operations teams under tight timelines.

Present Data Insights to a Non-Technical Audience

This question focuses on how effectively you translate complex analysis into clear narratives that drive understanding and action. Scale AI asks this because engineers frequently need to communicate model behavior, data quality issues, or system tradeoffs to operators, clients, and leadership.

Handle Conflicting Feedback From Multiple Stakeholders

This question evaluates your ability to navigate ambiguity, prioritize inputs, and maintain alignment across teams with different incentives. At Scale AI, this skill is critical when balancing the needs of product, research, operations, and enterprise customers.

Explain a Complex Technical Concept in Simple Terms

This prompt tests whether you truly understand a system well enough to simplify it without losing accuracy. Scale AI values this because engineers often work with non-technical partners in labeling operations and customer-facing workflows.

Respond to a Delayed Product or Feature Launch

This question examines how you take ownership, communicate setbacks, and make decisions under pressure. Scale AI asks this to assess accountability and judgment in environments where delivery timelines directly affect customer trust and model deployment.

Explain Why You Want to Work at Scale AI

This question assesses alignment with the company’s mission, pace, and responsibility level. Scale AI uses it to understand whether candidates are motivated by building real-world AI infrastructure rather than purely theoretical or academic work.

Scale AI Software Engineer interview tips

Preparing for the Scale AI software engineer interview requires a slightly different mindset than traditional big tech prep. While strong algorithms matter, Scale places far more weight on production realism, debugging skill, and your ability to reason through messy, incomplete systems. Your preparation should mirror that reality.

1. Prioritize debugging and production-style coding

Many candidates are surprised by how often Scale interview questions resemble real bugs, partial failures, or ambiguous feature behavior rather than clean textbook problems. Instead of only grinding standard LeetCode patterns, spend time practicing:

- Tracing failing logic through existing code

- Handling edge cases in long problem statements

- Writing defensive code with input validation, retries, and clear error handling

Practice explaining not just what your code does, but how it behaves under load, failure, and unexpected input. Interviewers care just as much about how you reason through uncertainty as they do about final correctness.
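As one illustration of the defensive habits listed above, here is a small retry helper with input validation, exponential backoff, and jitter. Everything in it is an assumption for practice purposes: the function name, the default exception types treated as transient, and the injectable `sleep` used to keep it testable.

```python
import random
import time


def call_with_retries(fn, attempts=3, base_delay=0.1,
                      retry_on=(TimeoutError, ConnectionError), sleep=time.sleep):
    """Call fn(), retrying transient failures with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")  # validate input up front
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == attempts:
                raise  # out of retries: surface the original error
            # Full jitter: wait a random time up to base_delay * 2^(attempt - 1).
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
```

Exceptions outside `retry_on` propagate immediately, which keeps genuine bugs from being hidden behind retries.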

2. Master system design fundamentals with Scale-specific context

Scale’s system design interviews focus heavily on data pipelines, annotation workflows, evaluation infrastructure, and reliability at high throughput. Your preparation should emphasize:

- Designing for concurrency, retries, and backpressure

- Trade-offs between real-time and batch systems

- Data consistency across distributed services

- Observability through logging, metrics, and alerting

Practice designing systems like streaming ingestion pipelines, job schedulers, or evaluation platforms for LLMs. Be ready to clearly articulate bottlenecks, scaling limits, and failure recovery strategies.

3. Build product awareness around AI data and evaluation

Scale engineers sit at the intersection of infrastructure and product. Interviewers often test whether you understand how annotation, model evaluation, and enterprise AI workflows actually operate. Spend time learning:

- How labeling pipelines work end to end

- Where data quality breaks down in production

- How evaluation datasets evolve and version over time

- What reliability means when customers depend on your system for model performance

Being able to connect technical design decisions to downstream model behavior and customer outcomes is a major differentiator.

4. Prepare behavioral answers around ownership and urgency

The Credo interview is not a formality. Scale strongly values speed, accountability, and clear decision-making under pressure. Prepare stories that show:

- You owned a system end to end, not just a small piece

- You shipped under time pressure with incomplete information

- You made a hard trade-off for customer impact

- You took responsibility for failures and fixed them

Structure your answers around the problem, the constraints, your decision, and the measurable outcome. Vague teamwork stories will not carry much weight here.

5. Simulate real interview conditions

Practice coding without heavy scaffolding. Read long prompts in one pass. Speak your thought process while implementing. Force yourself to test aggressively and debug live. This mirrors the cognitive load of Scale interviews far better than slow, scripted practice.

For hands-on preparation that closely matches real interview difficulty, many candidates use Interview Query to sharpen debugging, system design, and production-focused coding skills in a structured way.

Strong preparation for Scale AI is less about memorizing patterns and more about training your engineering judgment. If you can build clearly, debug calmly, and explain trade-offs under pressure, you will be operating exactly the way Scale engineers are expected to operate on the job.

Average Scale AI software engineer salary


Scale AI software engineers earn competitive compensation across levels, combining a solid base salary with meaningful stock grants and bonuses, making total compensation packages considerably larger than base pay alone.

| Level | Total / Year | Base / Year | Stock / Year | Bonus / Year |
|---|---|---|---|---|
| L3 Software Engineer | ~US$180K–190K | ~US$145K | ~US$38K–41K | ~US$0.4K–0.5K |
| L4 Software Engineer | ~US$310K–330K | ~US$197K–200K | ~US$115K–124K | ~US$1.2K–1.3K |
| Senior / L5 Software Engineer | ~US$430K–440K | ~US$216K | ~US$222K | ~US$0.7K |

Source: Levels.fyi

Compensation varies by region, seniority, and equity vesting, and stock grants become a substantial part of total pay at higher levels, which makes long-term upside especially meaningful.

Note: Compensation rises significantly after stock vesting begins in year two.

Regional compensation context

In the United States, where most publicly reported salaries come from, total packages range from roughly US$180K to over US$430K depending on level and equity awards.

Keep in mind that cost-of-living and local market conditions greatly affect the real value of these compensation packages, especially outside major tech hubs.

FAQs

Is Scale AI hard to interview for?

Yes, the Scale AI interview is considered challenging, but for different reasons than traditional big tech companies. Instead of relying only on abstract algorithm puzzles, Scale emphasizes real-world engineering judgment, debugging skill, and system design under ambiguity. Candidates who are strong at shipping production code, reasoning about failures, and explaining trade-offs clearly tend to perform best. The difficulty comes from the realism of the problems and the speed expected, not just raw algorithm depth.

Does Scale AI use LeetCode-style questions?

Scale AI does use LeetCode-style questions in some early rounds, especially during the HackerRank and phone screens. However, these problems often feel more applied than theoretical. Many candidates report multi-part questions with long prompts, custom constraints, or object-oriented design requirements. You should still prepare standard data structures and algorithms, but expect to apply them in less polished, more production-like scenarios.

What is the Credo interview really evaluating?

The Credo interview evaluates ownership, urgency, accountability, and customer focus. Interviewers are not looking for abstract leadership principles. They want concrete examples where you took responsibility for outcomes, made difficult trade-offs under pressure, and delivered impact with imperfect information. Strong answers show decision-making under constraints, not just collaboration or good intentions.

What languages does Scale AI use?

Scale AI primarily hires engineers working in Python, Go, and JavaScript or TypeScript, especially across backend services, infrastructure, and platform tooling. Some teams also use Java and C++ depending on system requirements. More important than any single language is your ability to write clean, production-ready code and reason across services.

How long is the Scale AI hiring process?

The full Scale AI hiring process usually takes three to six weeks, depending on scheduling speed and role urgency. This includes the resume screen, recruiter call, technical assessment, system design and Credo interviews, and the onsite loop. Delays typically occur around onsite scheduling and final team matching.

How can interns or new grads prepare?

Interns and new grads should focus heavily on debugging fundamentals, clean coding habits, and system design basics. Strong preparation includes practicing real implementation problems, learning how distributed systems fail, and developing the ability to communicate clearly while coding. Demonstrating learning velocity, ownership in projects, and strong fundamentals matters more than having deep industry experience.

Does Scale AI focus more on speed or correctness?

Scale values both, but speed under realistic constraints is critical. Interviewers expect you to move quickly, test aggressively, and adjust when things break. Perfect code written too slowly is often less impressive than solid, well-tested code delivered with urgency and clarity.

Conclusion

The Scale AI software engineer interview rewards candidates who think like real production engineers: fast, methodical, and customer-focused. With the right balance of coding realism, system design depth, and ownership-driven behavioral prep, you can approach each stage with confidence. For deeper preparation across data engineering, machine learning systems, and platform roles, explore Interview Query’s broader engineering interview guides.