Optiver Machine Learning Engineer Interview Guide (2026): Process, Questions & Prep

Introduction

Optiver sits at the center of modern quantitative trading, where machine learning models operate under extreme performance constraints and directly influence real capital decisions. As one of the world’s leading proprietary trading firms, Optiver applies machine learning to pricing, execution, and market-making problems that demand mathematical rigor, clean engineering, and fast judgment. Preparing for an Optiver machine learning engineer interview means going far beyond standard model theory and into applied decision-making, numerical reasoning, and systems thinking under pressure.

This guide walks through the Optiver machine learning engineer interview process step by step, explains the types of technical and behavioral questions you can expect, and shows how interviewers evaluate modeling trade-offs, coding quality, and real-world impact. You will also learn how Optiver’s expectations differ from other quant firms, which Optiver-style questions come up most often, and how to prepare for each stage of the process, from online assessments to final interviews, with Interview Query.

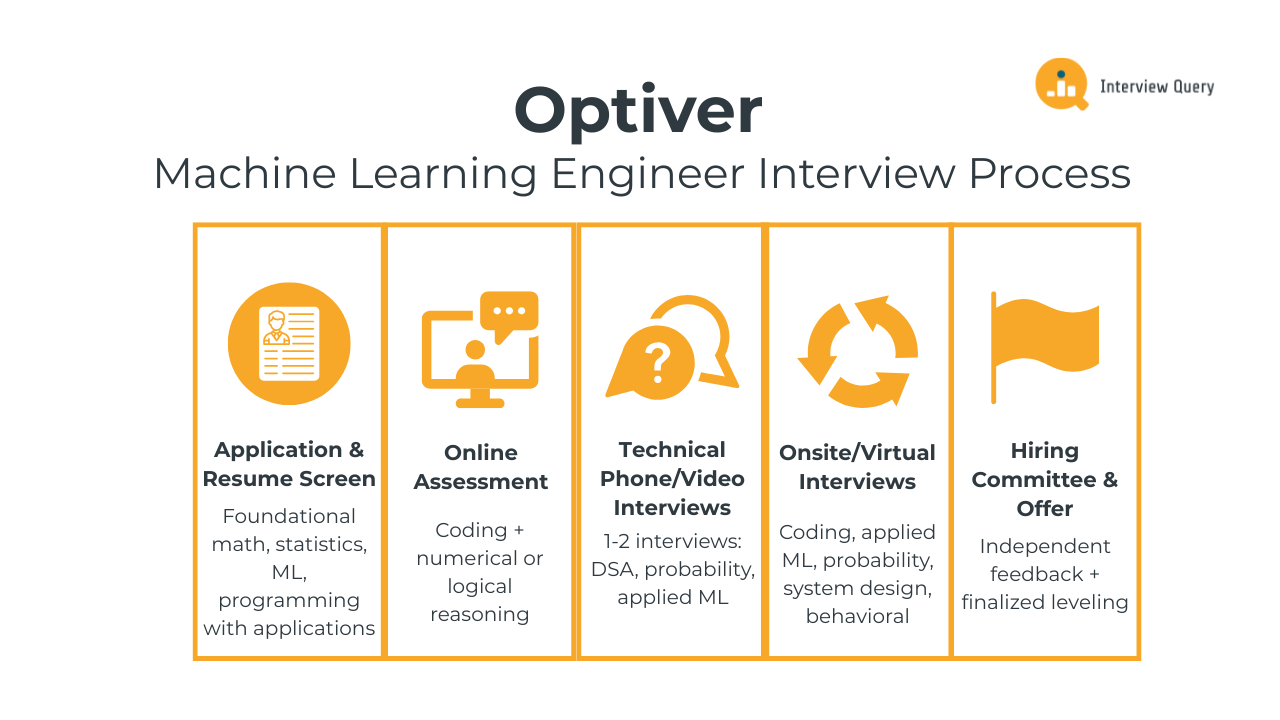

Optiver Machine Learning Engineer Interview Process

The Optiver machine learning engineer interview process is designed to test how well you apply mathematical reasoning, machine learning fundamentals, and software engineering principles in fast-moving, high-stakes environments. Optiver interviewers focus on clarity of thought, speed and accuracy under pressure, and your ability to make sound trade-offs when working with noisy data and strict performance constraints. The full process typically spans several weeks and includes multiple technical and behavioral touchpoints, each evaluating a distinct skill set that is critical to success at a top quantitative trading firm.

Application and Resume Screen

The process begins with a resume review by Optiver recruiters and engineers. At this stage, they look for strong foundations in mathematics, statistics, machine learning, and programming, along with evidence that you have applied these skills to real problems. Resumes that stand out usually highlight performance improvements, modeling impact, or system efficiency rather than generic project descriptions. Experience with time series data, optimization problems, competitive programming, or performance critical systems is especially relevant.

Tip: Highlight measurable outcomes such as latency reductions or predictive improvements to show strong ownership and an ability to evaluate real world impact.

Online Assessment and Numerical Reasoning Test

Many candidates are asked to complete an online assessment early in the process. This typically includes coding problems and numerical or logical reasoning questions that test speed, accuracy, and mental math. The questions are designed to evaluate how quickly you can reason through unfamiliar problems and implement correct solutions under time constraints. Precision matters, as small mistakes can compound in trading environments.

Tip: Practicing timed problem solving demonstrates strong execution speed and attention to detail, both of which are critical signals for performance driven roles.

Technical Phone or Video Interviews

The next stage usually consists of one or more technical interviews conducted over video. These interviews focus on algorithms, data structures, probability, and applied machine learning concepts. You may be asked to write code in a shared document, walk through a modeling decision, or analyze a simplified trading or data scenario. Interviewers pay close attention to how you structure your thinking and explain assumptions as you work through problems.

Tip: Explaining your reasoning clearly as you code shows strong communication skills and the ability to collaborate effectively in high pressure discussions.

Onsite or Virtual Onsite Interviews

The onsite or virtual onsite stage is the most in-depth part of the Optiver machine learning engineer interview process. It usually consists of four to five interviews, each lasting around 45 to 60 minutes. These rounds are designed to simulate the types of technical and decision-making challenges you would face on the job, with a strong emphasis on reasoning under uncertainty, performance constraints, and collaboration with traders and engineers.

Coding and algorithms round: This round focuses on writing clean, efficient code to solve time-constrained problems. You may be asked to implement algorithms, work with data streams, or reason about performance trade-offs related to memory usage and latency. Interviewers care as much about how you structure your solution and handle edge cases as they do about correctness.

Tip: Talking through time and space complexity shows strong engineering judgment and signals that you can reason about performance critical systems.

Applied machine learning round: In this interview, you will discuss machine learning problems in a trading context. Questions often cover feature selection for noisy time series data, model evaluation under non-stationary conditions, and trade-offs between model complexity and stability. You may also be asked to critique a modeling approach or explain how you would monitor a model in production.

Tip: Grounding your answers in real constraints like latency, robustness, and interpretability demonstrates practical machine learning maturity.

Probability and numerical reasoning round: This round tests your ability to reason quantitatively under pressure. You may solve probability puzzles, expected value problems, or estimation questions that resemble trading decisions. The goal is to assess how quickly and accurately you can reason through uncertainty and arrive at defensible conclusions.

Tip: Clearly stating assumptions before solving the problem highlights disciplined thinking and reduces the risk of logical errors.

System design or modeling discussion: Depending on the team and level, you may be asked to design a simplified system or modeling pipeline. This could involve outlining how data flows from ingestion to inference, how models are updated, or how failures are detected. Interviewers look for clarity, simplicity, and awareness of operational risks.

Tip: Prioritizing reliability and ease of debugging shows strong ownership and readiness for production responsibilities.

Behavioral and collaboration round: This interview evaluates how you work with others in a fast paced environment. Expect questions about handling disagreements, responding to failed ideas, or collaborating with traders who rely on your models. Optiver values clear communication, accountability, and the ability to learn quickly from feedback.

Tip: Using concrete examples with clear outcomes demonstrates strong collaboration skills and emotional composure under pressure.

Hiring Committee and Offer

After the interviews, each interviewer submits independent feedback that is reviewed by a hiring committee. The committee evaluates your performance holistically, considering technical strength, problem solving approach, communication, and overall fit with Optiver’s culture. If you pass the bar, the team finalizes leveling and extends an offer that reflects both your experience and performance across the process.

Tip: Consistency across interviews signals reliability, which is a highly valued trait in environments where decisions have immediate financial consequences.

Want to build up your Optiver interview skills? Practice real hands-on machine learning engineering problems on the Interview Query Dashboard and start getting interview-ready for Optiver today.

Optiver Machine Learning Engineer Interview Questions

Optiver’s machine learning engineer interview questions focus on how well you apply fundamentals in fast-moving, high-pressure environments where models directly influence trading decisions. Interviewers are evaluating not only technical depth, but also how you reason through uncertainty, justify trade-offs, and communicate clearly with engineers and traders. Questions tend to blend machine learning theory, applied modeling, numerical reasoning, and system-level thinking, all grounded in performance, robustness, and real-world impact.

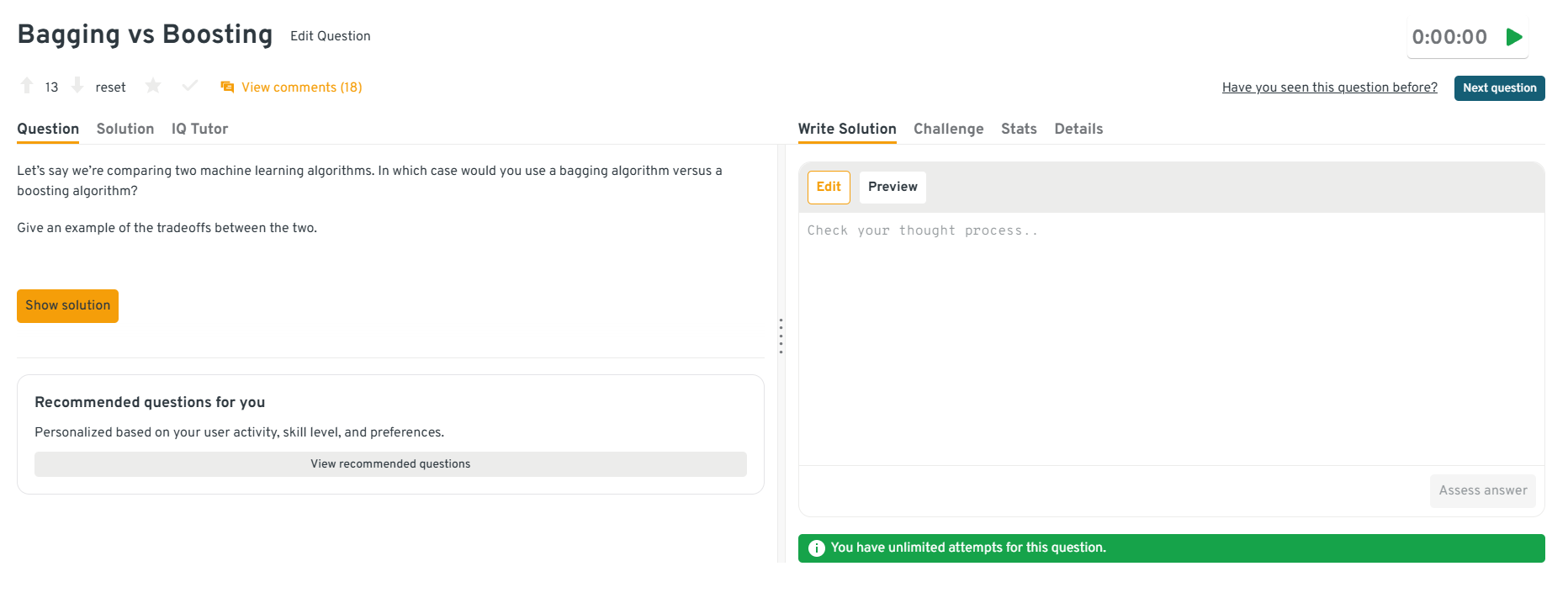

Machine Learning Fundamentals and Modeling Questions

Machine learning questions at Optiver emphasize judgment over complexity. Interviewers want to see whether you can choose the right model for noisy, non-stationary market data and explain why that choice makes sense under latency and risk constraints.

-

This question tests whether you understand trade-offs between model expressiveness, sparsity, and computational cost, which matter in latency-sensitive trading systems. At Optiver, high-cardinality categories can introduce noise and instability if handled poorly. A strong answer explains why one-hot encoding may be impractical and discusses alternatives like target encoding, hashing, or learned embeddings, including their risks. You should emphasize preventing leakage, handling rare categories, and monitoring stability over time in non-stationary data.

Tip: Explaining how you balance information retention with noise control signals strong modeling judgment, not just technical knowledge.
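One of the alternatives mentioned above, target encoding, can be sketched in a few lines. This is a minimal illustration, not a production recipe: the function names and the `smoothing` parameter are assumptions made for the example. The mapping is fitted on training data only, which is one way to limit leakage, and rare or unseen categories are pulled toward the global mean.

```python
from collections import defaultdict


def fit_target_encoding(categories, targets, smoothing=10.0):
    """Return (mapping, global_mean) of smoothed per-category target means."""
    global_mean = sum(targets) / len(targets)
    sums = defaultdict(float)
    counts = defaultdict(int)
    for category, target in zip(categories, targets):
        sums[category] += target
        counts[category] += 1
    # Shrink each category mean toward the global mean. Categories with few
    # observations stay close to the global mean, which limits noise.
    mapping = {
        category: (sums[category] + smoothing * global_mean)
        / (counts[category] + smoothing)
        for category in counts
    }
    return mapping, global_mean


def apply_target_encoding(categories, mapping, global_mean):
    """Encode categories; unseen categories fall back to the global mean."""
    return [mapping.get(category, global_mean) for category in categories]


# Fit on (hypothetical) training data only, then encode new observations.
train_cats = ["A", "A", "A", "A", "B", "C"]
train_y = [1, 1, 1, 0, 1, 0]
mapping, prior = fit_target_encoding(train_cats, train_y, smoothing=2.0)
encoded = apply_target_encoding(["A", "B", "Z"], mapping, prior)
```

With time-ordered data, the same idea would normally be applied so that each row is encoded using only earlier observations.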

-

This question evaluates your understanding of bias-variance trade-offs and robustness, which are critical when market behavior shifts. Optiver asks this to see whether you can reason about model stability under noisy signals. A good answer explains that bagging reduces variance and is more robust to noise, while boosting reduces bias but can overfit unstable patterns. You should frame the choice around market volatility, data quality, and tolerance for model risk.

Tip: Connecting algorithm choice to risk control demonstrates decision-making maturity that Optiver values in production models.

Head to the Interview Query dashboard to practice the full set of machine learning engineer interview questions. With hands-on coding challenges, applied machine learning problems, probability drills, and system design scenarios, along with built-in code testing and AI-guided feedback, it is one of the most effective ways to sharpen your reasoning, judgment, and decision-making skills for the Optiver machine learning engineer interview.

-

This question tests your ability to translate an abstract concept into measurable signals, a core skill in trading analytics. Optiver cares less about the exact definition and more about how you reason through ambiguity. A strong answer defines “good” using risk-adjusted returns, consistency, and behavior under drawdowns, then outlines a pipeline for feature engineering, labeling, validation, and monitoring. You should emphasize avoiding hindsight bias and ensuring definitions remain stable over time.

Tip: Showing how you guard against label leakage highlights strong causal and statistical awareness.

-

This question evaluates whether you understand metrics beyond surface-level interpretation. At Optiver, small metric differences can mask meaningful risk trade-offs. A strong answer explains how ROC AUC measures ranking quality, why it may be insufficient alone, and how you would compare models using confidence intervals, stability across regimes, and operating points. You should also mention aligning the final choice with business costs rather than blindly maximizing AUC.

Tip: Discussing metric uncertainty signals disciplined evaluation, which is critical in high-stakes decision systems.
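To make the idea of metric uncertainty concrete, here is a hedged sketch that computes ROC AUC from its ranking definition and bootstraps a confidence interval for the AUC difference between two models. The function names and the synthetic scores are illustrative assumptions. The resampling treats observations as independent, which is a simplification for time-ordered market data, where a block bootstrap is usually more appropriate.

```python
import numpy as np


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outranks a random negative."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos = scores[y_true == 1]
    neg = scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]
    # Ties between a positive and a negative count as half a win.
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return float(wins / (len(pos) * len(neg)))


def bootstrap_auc_diff(y_true, scores_a, scores_b, n_boot=1000, seed=0):
    """95% percentile interval for AUC(a) - AUC(b) using paired resampling."""
    rng = np.random.default_rng(seed)
    y_true = np.asarray(y_true)
    scores_a = np.asarray(scores_a, dtype=float)
    scores_b = np.asarray(scores_b, dtype=float)
    n = len(y_true)
    diffs = []
    while len(diffs) < n_boot:
        idx = rng.integers(0, n, size=n)
        y = y_true[idx]
        if y.min() == y.max():
            continue  # a resample needs both classes for AUC to be defined
        diffs.append(roc_auc(y, scores_a[idx]) - roc_auc(y, scores_b[idx]))
    low, high = np.percentile(diffs, [2.5, 97.5])
    return float(low), float(high)


# Synthetic labels and scores, purely for illustration.
rng = np.random.default_rng(42)
y = rng.integers(0, 2, size=300)
model_a = y + rng.normal(scale=1.0, size=300)
model_b = y + rng.normal(scale=2.0, size=300)
ci_low, ci_high = bootstrap_auc_diff(y, model_a, model_b, n_boot=500)
```

If the interval comfortably includes zero, the observed AUC gap is not strong evidence that one model ranks better than the other.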

-

This question tests whether you understand foundational modeling mechanics rather than memorized definitions. Optiver asks it to assess clarity of reasoning and probability interpretation. A strong answer explains that the logistic (sigmoid) function maps a single score to one probability for a binary outcome, while softmax turns a vector of scores into a normalized probability distribution across classes. You should connect their usefulness to interpretability, calibration, and stability, especially when probabilities directly inform trading or risk decisions.

Tip: Linking activation functions to probability calibration demonstrates conceptual depth beyond formula recall.
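The relationship between the two functions can be checked numerically. The short sketch below uses arbitrary example logits and shows that a two-class softmax gives the same probability as a sigmoid applied to the difference of the two logits.

```python
import numpy as np


def sigmoid(z):
    """Map a single real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def softmax(logits):
    """Map a vector of scores to probabilities that sum to one."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


logits = np.array([2.0, 0.5])  # arbitrary example scores for two classes
probs = softmax(logits)

# With two classes, P(class 0) equals sigmoid(logit_0 - logit_1).
binary = sigmoid(logits[0] - logits[1])
```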

Watch Next: How to Ace a Machine Learning Mock Interview - Design a recommendation engine

In this mock interview session, Ved, a PhD student and ML research scientist intern at LinkedIn, walks through a real-world ML challenge prompt showing how he breaks down the problem into features, modeling strategy, evaluation metrics, and delivery-ready presentation, giving you an interview-ready template you can apply in your own prep.

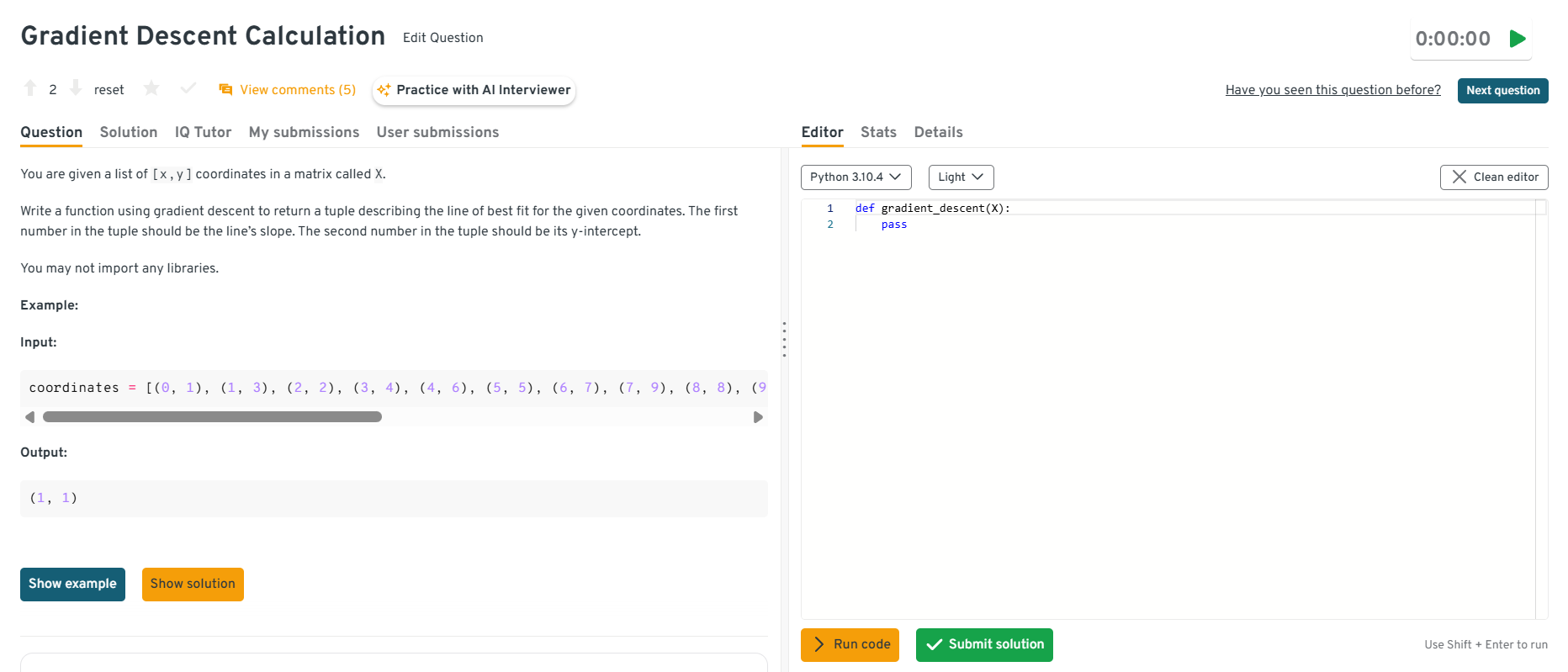

Coding and Algorithms Questions

Coding questions at Optiver assess correctness, speed, and clarity. Interviewers care deeply about how you structure solutions, reason about performance, and handle edge cases under time pressure.

How would you implement an efficient rolling statistic on a data stream?

This question tests whether you can design algorithms that work incrementally under strict latency and memory constraints, which is essential at Optiver where data arrives continuously. A strong answer explains maintaining running aggregates such as sums, counts, or deques to update statistics in constant time as new data arrives and old data expires. You should emphasize avoiding full recomputation, handling edge cases at window boundaries, and ensuring numerical stability in long-running systems.

Tip: Calling out constant-time updates and boundary handling shows interviewers that you think in terms of production performance and long-running system behavior, not just algorithmic correctness.
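A minimal sketch of the approach described above follows, assuming a fixed-size window measured in number of observations. The class name `RollingStats` is hypothetical. It keeps a deque of the current window and updates the mean and sum of squared deviations incrementally (a Welford-style update with removal), rather than recomputing over the whole window or tracking raw sums of squares, which can lose precision in long-running processes.

```python
from collections import deque


class RollingStats:
    """Rolling mean and sample variance over the last `window` values."""

    def __init__(self, window):
        if window < 1:
            raise ValueError("window must be at least 1")
        self.window = window
        self.values = deque()
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the current mean

    def update(self, x):
        # Expire the oldest value first once the window is full.
        if len(self.values) == self.window:
            self._remove(self.values.popleft())
        self.values.append(x)
        n = len(self.values)
        delta = x - self.mean
        self.mean += delta / n
        self.m2 += delta * (x - self.mean)

    def _remove(self, x):
        n = len(self.values)  # count after the oldest value was popped
        if n == 0:
            self.mean = 0.0
            self.m2 = 0.0
            return
        old_mean = self.mean
        self.mean = old_mean - (x - old_mean) / n
        self.m2 -= (x - old_mean) * (x - self.mean)

    @property
    def variance(self):
        n = len(self.values)
        # Clamp at zero to absorb tiny negative values from rounding.
        return max(self.m2, 0.0) / (n - 1) if n > 1 else 0.0


stats = RollingStats(window=3)
for price in [1.0, 2.0, 3.0, 4.0, 5.0]:
    stats.update(price)
```

Each update does a constant amount of work regardless of window size. For rolling minimum or maximum, a monotonic deque plays the same role.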

How would you detect anomalies in real-time data?

Optiver asks this to evaluate how you balance responsiveness with robustness in noisy environments. A strong answer discusses simple, fast methods such as rolling z-scores, adaptive thresholds, or exponentially weighted statistics that can flag unusual behavior without overreacting to noise. You should also explain how you would tune sensitivity and incorporate safeguards to reduce false positives that could trigger unnecessary interventions.

Tip: Explaining how you control false positives signals that you understand the operational cost of alerts in high-risk trading systems.
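One of the simple methods named above, an exponentially weighted z-score, might look like the following sketch. The class name, `alpha`, `threshold`, and `warmup` values are illustrative assumptions rather than recommended settings. The warm-up period is one basic safeguard against false positives while the estimates are still unstable.

```python
class EwmaAnomalyDetector:
    """Flag values far from an exponentially weighted mean, in units of EW std."""

    def __init__(self, alpha=0.1, threshold=4.0, warmup=20):
        self.alpha = alpha          # weight given to the newest observation
        self.threshold = threshold  # number of standard deviations to flag
        self.warmup = warmup        # observations to see before flagging
        self.mean = None
        self.var = 0.0
        self.count = 0

    def update(self, x):
        """Return True if x looks anomalous, then fold x into the state."""
        self.count += 1
        if self.mean is None:
            self.mean = x
            return False
        deviation = x - self.mean
        std = self.var ** 0.5
        is_anomaly = (
            self.count > self.warmup
            and std > 0
            and abs(deviation) > self.threshold * std
        )
        # Incremental exponentially weighted mean and variance update.
        self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        self.mean += self.alpha * deviation
        return is_anomaly


# Hypothetical stream: small oscillation around 10, then a jump to 20.
stream = [10.0 + (0.1 if i % 2 else -0.1) for i in range(50)] + [20.0]
detector = EwmaAnomalyDetector()
flags = [detector.update(x) for x in stream]
```

This version always folds the new value into its state, so it adapts to regime shifts but lets a spike inflate the variance for a while. Skipping or down-weighting flagged values is the opposite trade-off.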

-

This question tests whether you understand optimization mechanics rather than relying on libraries. At Optiver, this matters because you must reason about convergence, step sizes, and numerical behavior. A strong answer walks through defining a loss function, computing gradients for slope and intercept, iteratively updating parameters, and stopping based on convergence criteria. You should also mention learning rate choice and the risk of divergence.

Tip: Discussing learning rate tuning and convergence criteria shows mathematical maturity and awareness of how small choices affect model stability.
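The steps described above (loss, gradients for slope and intercept, iterative updates, a stopping rule) can be sketched as follows. The learning rate, tolerance, and toy data are assumptions chosen so the example converges. A learning rate that is too large for the data's scale would make the updates diverge.

```python
def fit_line_gd(xs, ys, lr=0.05, max_iters=10_000, tol=1e-10):
    """Fit y = slope * x + intercept by gradient descent on mean squared error."""
    n = len(xs)
    slope, intercept = 0.0, 0.0
    prev_loss = float("inf")
    for _ in range(max_iters):
        residuals = [slope * x + intercept - y for x, y in zip(xs, ys)]
        loss = sum(r * r for r in residuals) / n
        # Stop when the loss has effectively stopped improving.
        if abs(prev_loss - loss) < tol:
            break
        prev_loss = loss
        # Gradients of the mean squared error.
        grad_slope = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_intercept = 2.0 / n * sum(residuals)
        slope -= lr * grad_slope
        intercept -= lr * grad_intercept
    return slope, intercept


# Toy data generated from y = 2x + 1 with no noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line_gd(xs, ys)
```

Standardizing the inputs before fitting typically allows a larger learning rate and faster convergence.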

-

This question evaluates your comfort with linear algebra and implementing core algorithms cleanly. A strong answer explains transposing the design matrix, computing normal equation components, and solving for parameters while noting numerical issues such as matrix invertibility. You should also mention when this approach breaks down at scale and why iterative methods may be preferred in practice.

Tip: Acknowledging numerical instability and scalability limits demonstrates that you think beyond textbook solutions and toward real system constraints.
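A compact sketch of the normal-equation approach, assuming NumPy is available; the function name `fit_ols` is hypothetical. It solves the linear system rather than explicitly inverting the matrix, which is the more stable choice.

```python
import numpy as np


def fit_ols(X, y):
    """Return [intercept, coefficients...] from the normal equations."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so the first parameter is the intercept.
    design = np.column_stack([np.ones(len(X)), X])
    gram = design.T @ design      # X^T X
    moment = design.T @ y         # X^T y
    # Solve (X^T X) beta = X^T y without forming the inverse explicitly.
    # Raises numpy.linalg.LinAlgError if X^T X is singular.
    return np.linalg.solve(gram, moment)


# Toy data generated from y = 2x + 1.
coef = fit_ols([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
```

When features are collinear or nearly so, `np.linalg.lstsq` on the design matrix is a more robust alternative, and for very wide or very large problems an iterative method avoids forming the Gram matrix at all.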

-

Optiver asks this to test your understanding of randomness and reproducibility. A strong answer explains sources of variability such as random initialization, data shuffling, stochastic optimization, and non-deterministic execution. You should also describe how to control variance through fixed seeds, repeated runs, and statistical comparison rather than trusting a single result, especially when evaluating trading models.

Tip: Emphasizing reproducibility practices shows interviewers that you are skeptical of noisy results and capable of running disciplined evaluations.
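The practices above can be illustrated with a toy experiment, where `run_experiment` is a hypothetical stand-in for a real training and evaluation routine. Fixing the seed makes a single run repeatable, and repeating across seeds shows how much of a reported score is noise.

```python
import numpy as np


def run_experiment(seed):
    """Hypothetical experiment: fit a slope on a random half, score on the rest."""
    rng = np.random.default_rng(seed)  # all randomness flows from this seed
    x = rng.normal(size=200)
    y = 0.5 * x + rng.normal(size=200)
    idx = rng.permutation(200)
    train, test = idx[:100], idx[100:]
    slope = (x[train] @ y[train]) / (x[train] @ x[train])
    return float(np.mean((y[test] - slope * x[test]) ** 2))


# Same seed, same result; different seeds show the run-to-run spread.
scores = [run_experiment(seed) for seed in range(20)]
mean_score = float(np.mean(scores))
std_error = float(np.std(scores, ddof=1) / np.sqrt(len(scores)))
```

Comparing two models on `mean_score` together with `std_error` is more defensible than comparing one run of each.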

Want to master the entire ML pipeline? Explore our ML Engineering 50 learning path to practice a curated set of machine learning questions designed to strengthen your modeling, coding, and system design skills.

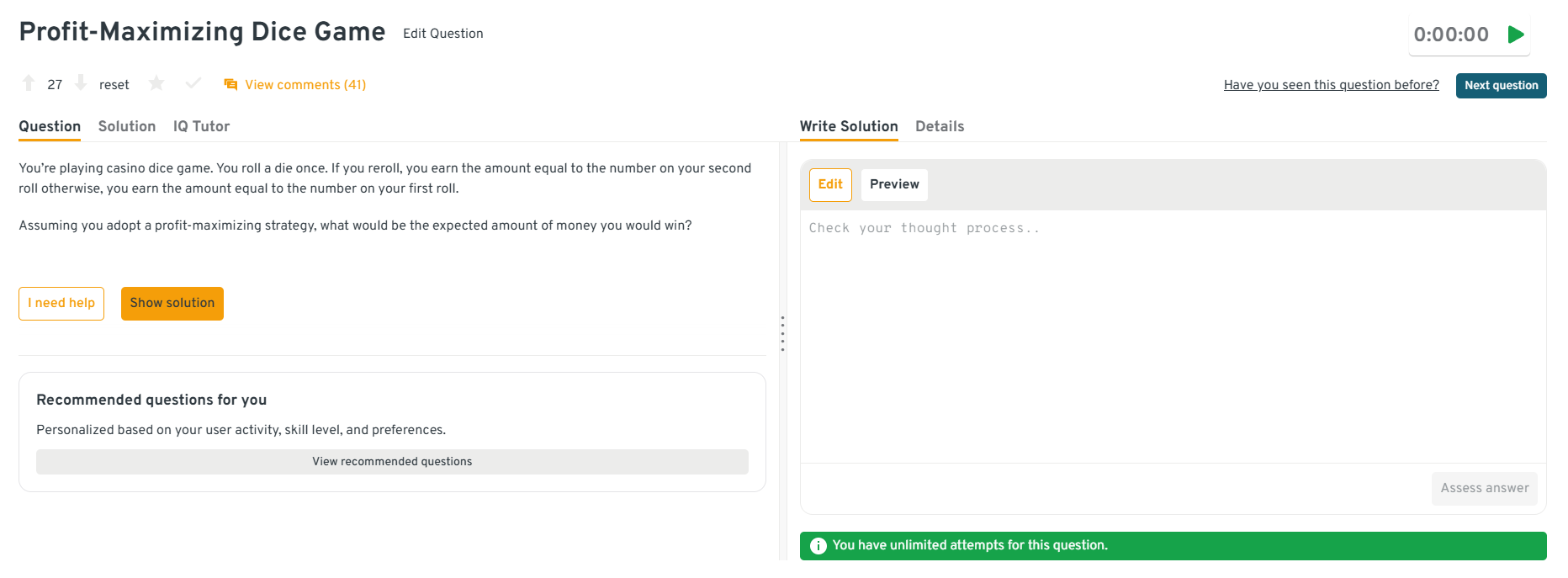

Probability and Numerical Reasoning Questions

Probability and numerical reasoning are core to Optiver’s interviews. These questions assess how quickly and accurately you can reason about uncertainty, expected value, and risk, all of which directly mirror how trading decisions are evaluated in real time.

-

This question tests whether you understand decision risk, not just statistical definitions. At Optiver, acting on a false signal can be just as costly as missing a real one. A strong answer explains that a type I error is a false positive while a type II error is a false negative, then connects each to real consequences such as entering a losing trade versus missing a profitable opportunity. You should emphasize that the acceptable balance depends on the cost asymmetry of the decision.

Tip: Mapping statistical errors to trading consequences shows risk-aware thinking, which is central to Optiver’s decision-making culture.

What is the difference between covariance and correlation?

Optiver asks this to assess whether you can reason about relationships between variables at different scales. A strong answer explains that covariance measures joint variability in absolute terms, while correlation normalizes this relationship to a unit-free scale between minus one and one. You should highlight why correlation is often more interpretable when comparing signals, but also why covariance matters for portfolio-level risk and variance calculations.

Tip: Explaining when scale matters signals that you understand how dependencies affect real financial systems.
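A quick numerical check of the scale point, using synthetic data generated only for illustration: rescaling one variable changes the covariance by the same factor but leaves the correlation unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=1000)
y = 0.8 * x + 0.6 * rng.normal(size=1000)

cov_xy = np.cov(x, y)[0, 1]
corr_xy = np.corrcoef(x, y)[0, 1]

# Express x in different units (for example, a factor of 100).
cov_scaled = np.cov(100 * x, y)[0, 1]
corr_scaled = np.corrcoef(100 * x, y)[0, 1]

# Correlation is covariance divided by the product of standard deviations.
corr_from_cov = cov_xy / (x.std(ddof=1) * y.std(ddof=1))
```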

-

This question tests structured probability reasoning under uncertainty. Optiver interviewers care about how you break the problem down rather than how fast you compute it. A strong answer explains conditioning on which coin is chosen, computing the probability of matching outcomes for each case, and then combining them using total probability. Clear step-by-step logic matters more than memorized formulas.

Tip: Clearly structuring conditional reasoning demonstrates calm, disciplined thinking under pressure.

-

This question evaluates your ability to reason about expected value and optimal decision thresholds. At Optiver, similar logic applies when deciding whether to hold or adjust positions. A strong answer explains computing expected values for keeping versus rerolling outcomes, identifying the cutoff where rerolling is favorable, and maximizing long-term gain rather than short-term outcomes.

Tip: Focusing on expected value over individual outcomes shows trading-relevant intuition.
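The exact game is not spelled out here, so the sketch below assumes a common version: you roll a fair six-sided die, the payoff is the face shown, and you may reroll a limited number of times, keeping only the last roll. The function name is hypothetical. The cutoff falls out of comparing each face with the expected value of continuing.

```python
from fractions import Fraction


def value_with_rerolls(rerolls_left, sides=6):
    """Expected payoff under optimal play with optional rerolls remaining."""
    faces = range(1, sides + 1)
    # With no rerolls left, the value is the plain expected face.
    value = Fraction(sum(faces), sides)
    for _ in range(rerolls_left):
        # Keep a face only if it is at least as good as the expected value
        # of rolling again; otherwise reroll and receive that value.
        value = sum(max(Fraction(face), value) for face in faces) / sides
    return value


no_reroll = value_with_rerolls(0)   # 7/2
one_reroll = value_with_rerolls(1)  # 17/4: keep 4, 5, 6 and reroll 1, 2, 3
two_rerolls = value_with_rerolls(2) # 14/3: on the first roll keep only 5 or 6
```

The threshold rises as more rerolls remain, because the option to continue becomes more valuable.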

-

This question tests whether you understand statistical assumptions and when they matter. A strong answer explains that both tests assess mean differences: the Z test assumes the population variance is known, or that the sample is large enough for the sample variance to stand in for it, while the t test accounts for the extra uncertainty of estimating the variance from a small sample. You should connect this to Optiver’s preference for choosing tools based on data reliability rather than habit.

Tip: Explaining why assumptions matter shows statistical discipline and resistance to blind formula usage.

Struggling with take-home assignments? Get structured practice with Interview Query’s Take-Home Test Prep and learn how to ace real case studies.

Behavioral and Collaboration Questions

Behavioral questions at Optiver focus on ownership, learning speed, and collaboration under pressure. Interviewers want evidence that you can communicate clearly, adapt quickly, and make sound decisions in environments where mistakes have immediate consequences.

How would you convey insights and the methods you use to a non-technical audience?

This question assesses your ability to translate complex analysis into actionable insight, a core skill at Optiver where machine learning engineers work closely with traders and decision-makers. Interviewers want to see whether you can strip away unnecessary technical detail while preserving what matters for decisions and risk.

Sample answer: “When sharing insights with non-technical stakeholders, I focus first on the decision being made and the key takeaway. I explain results using intuition, comparisons, or simple visuals before discussing assumptions or limitations. If needed, I keep technical details available as backup rather than leading with them.”

Tip: Showing that you lead with decisions rather than methods demonstrates strong communication judgment and business awareness.

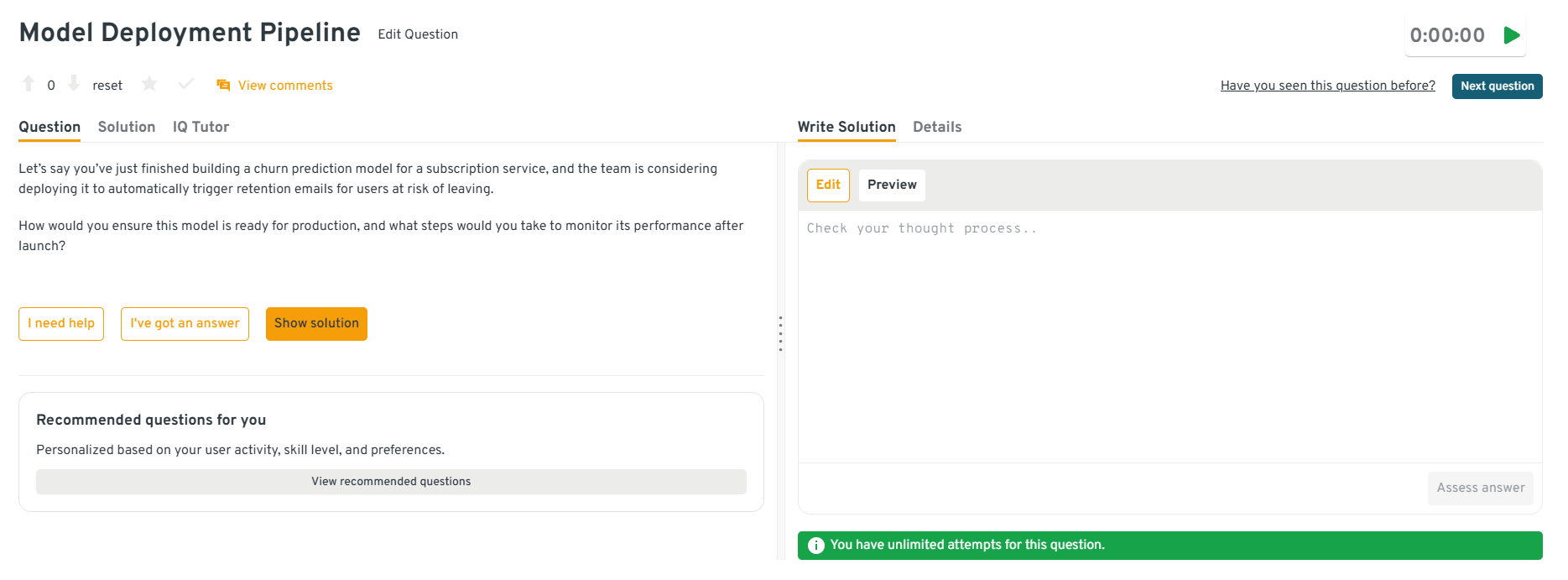

-

This question tests ownership beyond model training. Optiver values engineers who think about lifecycle risk, monitoring, and failure modes. Interviewers want to see how you ensure models remain reliable once real money or decisions depend on them.

Sample answer: “I would validate the model using out-of-sample and time-based splits, then define clear success and failure metrics before deployment. After launch, I would monitor performance decay, input distribution shifts, and downstream impact, with alerts and fallback strategies if behavior changes unexpectedly.”

Tip: Emphasizing monitoring and rollback plans signals production maturity and risk-aware ownership.
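The time-based splits mentioned in the sample answer can be sketched as a walk-forward scheme in which each test block comes strictly after its training data. The function name and parameters are assumptions for illustration, and observations are assumed to be sorted by time.

```python
def walk_forward_splits(n_samples, n_folds, min_train):
    """Yield (train_indices, test_indices) with training strictly before testing."""
    test_size = (n_samples - min_train) // n_folds
    if test_size < 1:
        raise ValueError("not enough samples for the requested folds")
    for fold in range(n_folds):
        train_end = min_train + fold * test_size
        test_end = train_end + test_size
        # Expanding training window, followed by the next unseen block.
        yield range(0, train_end), range(train_end, test_end)


splits = list(walk_forward_splits(n_samples=10, n_folds=3, min_train=4))
test_blocks = [list(test) for _, test in splits]
```

Because no test observation precedes its training data, the evaluation cannot borrow information from the future. A gap between the two ranges can be added when labels overlap in time.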

-

This question assesses adaptability and trust building. At Optiver, misalignment between engineers and traders can lead to costly misunderstandings. Interviewers want to know how you adjust when communication breaks down.

Sample answer: “I once shared model results that stakeholders felt were difficult to act on. I followed up by asking what decisions they were trying to make, then reframed the analysis around those choices instead of the model details. Once aligned, the discussion became much more productive.”

Tip: Demonstrating that you changed your approach shows humility and a results-oriented mindset.

How do you handle disagreements on modeling choices?

At Optiver, this question evaluates how you reason through uncertainty while maintaining productive collaboration in high-stakes environments. Interviewers want to see that you can challenge ideas without becoming rigid, and that you prioritize outcomes over personal preferences.

Sample answer: “When there is disagreement on a modeling approach, I start by making sure we are aligned on the objective and constraints, such as latency, risk tolerance, and stability. I then surface the assumptions behind each option and suggest concrete ways to test them, whether through offline validation, simulations, or controlled experiments. If results are inconclusive, I default to the simpler or safer approach, especially when the cost of failure is high. Throughout the discussion, I focus on evidence and trade-offs rather than defending a particular solution.”

Tip: Demonstrating that you resolve disagreements through shared goals and data shows strong judgment and the ability to collaborate effectively under pressure.

Want realistic ML interview practice without scheduling or pressure? Try Interview Query’s AI Interviewer to simulate Optiver style coding, modeling, and system design questions and get instant, targeted feedback.

What Does an Optiver Machine Learning Engineer Do?

An Optiver machine learning engineer builds models and systems that directly support real-time trading decisions in highly competitive financial markets. The role sits at the intersection of machine learning, mathematics, and performance-critical engineering, where models must be robust, interpretable, and fast enough to operate under strict latency constraints. Unlike research-focused roles, this position emphasizes end-to-end ownership, from data analysis and modeling to production deployment and ongoing performance monitoring.

Common responsibilities include:

- Designing and implementing predictive models for pricing, execution, and risk management using time-series data and probabilistic techniques.

- Developing high-performance machine learning pipelines in Python and C++, with careful attention to numerical stability, latency, and memory efficiency.

- Partnering closely with traders and researchers to translate market hypotheses into testable models and measurable signals.

- Evaluating models using online metrics, simulation frameworks, and live trading feedback rather than offline accuracy alone.

- Maintaining and improving production systems by monitoring model drift, handling non-stationary data, and iterating quickly based on market behavior.

Tip: Emphasizing model simplicity and operational reliability demonstrates strong judgment and shows interviewers you can make sound decisions under real trading constraints.

Need customized guidance on your Optiver interview strategy? Explore Interview Query’s Coaching Program, where you are paired with experienced mentors to refine your preparation, sharpen decision-making under pressure, and build confidence for Optiver’s machine learning engineer interviews.

How to Prepare for an Optiver Machine Learning Engineer Interview

Preparing for an Optiver machine learning engineer interview means training yourself to think clearly under pressure and make high quality decisions with incomplete information. Optiver interviews reward candidates who demonstrate strong judgment, numerical intuition, and ownership rather than those who simply recite theory. Your preparation should mirror how problems are approached in live trading environments, where speed, discipline, and clarity matter.

Train decision making under time pressure: Optiver interviews are intentionally fast paced. Practice solving problems with strict time limits and commit to an answer rather than endlessly refining it. Focus on making reasonable assumptions quickly and moving forward with a defensible approach, since this mirrors how real trading decisions are made.

Tip: Making confident, time bounded decisions shows decisiveness and mental composure, which interviewers associate with strong trading floor readiness.

Build strong numerical intuition: Many Optiver questions rely on estimation, probability, and expected value rather than formulas. Practice mental math, quick approximations, and reasoning about magnitudes. You should be comfortable sanity checking results without relying on calculators or detailed derivations.

Tip: Fast and accurate numerical reasoning signals quantitative fluency and reliability under pressure.

Practice explaining trade-offs clearly: Optiver cares deeply about why you chose one approach over another. In your preparation, force yourself to articulate trade-offs around simplicity, risk, latency, and robustness in plain language. Avoid defaulting to “more complex is better” and focus on explaining the cost of each decision.

Tip: Clear trade-off articulation demonstrates judgment and the ability to collaborate with traders and engineers.

Develop intuition for non-stationary systems: Markets change constantly, and Optiver values candidates who anticipate that. Review your past projects and think about how your solutions would behave if assumptions broke or data distributions shifted. Practice discussing monitoring strategies and failure modes rather than only success cases.

Tip: Anticipating failure modes highlights ownership and long term thinking.

Refine how you tell technical stories: Many interviews involve walking through past work. Practice structuring these explanations around the problem, constraints, decision points, and outcomes. Keep explanations concise and focused on impact rather than implementation details.

Tip: Structured storytelling shows strong communication skills and makes complex work easy to trust.

Simulate full interview loops: Run mock interviews that combine problem solving, reasoning aloud, and behavioral discussion without breaks. Review not just correctness, but how often you hesitated, over explained, or lost structure. Treat each mock like a data point and adjust deliberately.

Tip: Tracking reasoning patterns across mocks shows self-awareness and continuous improvement, traits Optiver values in long-term hires.

Looking for hands-on problem-solving? Test your skills with real-world challenges from top companies. Ideal for sharpening your thinking before interviews and showcasing your problem solving ability.

Salary and Compensation for Optiver Machine Learning Engineers

Optiver’s compensation structure is built to reward engineers who can deliver consistent performance in high-impact, low-latency trading environments. Machine learning engineers receive a mix of competitive base salary, performance-based bonuses, and, in some regions, additional long-term incentives. Total compensation varies significantly based on level, location, and scope, with senior engineers and those working closest to live trading systems typically earning at the top of the range among quantitative trading firms.

Read more: Machine Learning Engineer Salary

Tip: Clarifying your target level early signals seniority awareness and helps interviewers align expectations around scope, autonomy, and compensation.

Optiver Machine Learning Engineer Compensation Overview (2025-2026)

| Level | Role Title | Total Compensation (USD) | Base Salary (USD) | Bonus | Equity / Long-Term Incentives | Signing / Relocation |
|---|---|---|---|---|---|---|
| Junior | Machine Learning Engineer | $180K–$260K | $140K–$180K | Performance based | Limited or none | Offered selectively |
| Mid Level | Machine Learning Engineer II | $230K–$350K | $160K–$200K | Large performance bonus | Possible long-term incentives | Common for global hires |
| Senior | Senior Machine Learning Engineer | $300K–$500K+ | $180K–$230K | Very high bonus upside | Long-term incentives | Frequently offered |
| Staff+ | Lead / Principal ML Engineer | $400K–$700K+ | $200K–$260K | Exceptional bonus leverage | Significant long-term incentives | Negotiated case by case |

Note: These estimates are aggregated from data on Levels.fyi, public role disclosures, and Interview Query’s internal compensation research. Optiver compensation is highly performance driven, so upside can vary meaningfully year to year.

Tip: Understanding bonus variability shows financial literacy and signals you are evaluating risk and reward like a trader.

Negotiation Tips that Work for Optiver

Compensation conversations at Optiver are most effective when grounded in performance, level clarity, and market benchmarks rather than rigid salary expectations. Recruiters expect candidates to understand how quant firm compensation differs from traditional tech roles.

- Confirm role scope and level first: At Optiver, level influences bonus leverage more directly than it influences base salary. Clarifying whether you are being evaluated as mid-level, senior, or staff materially changes the compensation conversation.

- Anchor discussions with relevant benchmarks: Use data from Levels.fyi and comparable quant firms rather than big tech averages. Optiver expects candidates to compare offers across trading firms, not general software roles.

- Account for location and desk impact: Compensation differs across the Amsterdam, Chicago, Sydney, and other Asia-Pacific offices. Teams closer to core trading systems often carry higher bonus upside.

Tip: Asking for a full breakdown of base, bonus structure, and long-term incentives demonstrates commercial awareness and helps you negotiate from a position of clarity.

FAQs

How long does the Optiver machine learning engineer interview process take?

Most candidates complete the Optiver interview process within three to five weeks. Timelines depend on interview availability and whether you are evaluated by multiple teams. Recruiters typically share next steps quickly after each round, and the process tends to move faster than traditional big tech hiring loops.

Does Optiver use online assessments or numerical tests?

Yes, many candidates complete an online assessment that includes coding and numerical reasoning. These tests are designed to evaluate speed, accuracy, and structured thinking under time pressure rather than deep system design. Performance on this step strongly influences progression to technical interviews.

How important is a mathematics or quantitative background for Optiver?

A strong mathematical foundation is very important, even for machine learning engineers. Optiver values probability, statistics, and numerical intuition because models directly inform trading decisions. While a formal finance background is not required, comfort with quantitative reasoning is essential.

Does Optiver expect prior trading or finance experience?

Prior trading or finance experience is helpful but not mandatory. Candidates with strong machine learning judgment, engineering discipline, and problem-solving skills can ramp up quickly. Interviewers focus more on how you reason through uncertainty than on domain-specific knowledge.

What programming languages should I be comfortable with for Optiver interviews?

Python is commonly used during interviews, especially for modeling and problem-solving discussions. Familiarity with performance-oriented programming, often in C++ or similar languages, is also valued since many production systems operate under strict latency constraints.

How deeply do interviewers probe machine learning theory at Optiver?

Interviewers expect you to understand core machine learning concepts, but the emphasis is on application rather than memorization. You should be able to explain why a model works, when it breaks, and how it behaves under real constraints like noise and latency. Depth is tested through follow-up questions rather than abstract theory.

What distinguishes strong Optiver candidates from average ones?

Strong candidates consistently show clear reasoning under time pressure, make sensible simplifying assumptions, and explain trade-offs confidently. They focus on robustness and decision quality rather than perfect answers. This signals reliability, judgment, and readiness to operate in high-stakes environments.

Become an Optiver Machine Learning Engineer with Interview Query

Preparing for the Optiver machine learning engineer interview means sharpening your decision-making under pressure, strengthening numerical intuition, and learning how to justify modeling and engineering trade-offs in performance-critical environments. By understanding Optiver’s interview structure, practicing real-world problem solving, and refining how you explain assumptions and outcomes, you can approach each stage with confidence.

For targeted preparation, explore Interview Query’s full question bank, practice live with the AI Interviewer, or work one-on-one with a mentor through Interview Query’s Coaching Program to refine your approach and position yourself to stand out in Optiver’s highly competitive machine learning hiring process.