Netflix Data Scientist Interview Guide (2025) – Process, Questions & Salary

Introduction

Netflix’s data-scientist teams power personalized experiences and experimentation for over 260 million members worldwide. Whether you’re designing causal models for recommendation algorithms or optimizing A/B frameworks for new features, the interview process is both rigorous and rewarding. In this guide, you’ll learn how the Netflix data scientist interview is structured, the question formats to expect, and actionable strategies to excel at each stage.

Role Overview & Culture

Netflix data scientist roles immerse you in end-to-end analytics: you’ll design and analyze randomized experiments, build causal-inference pipelines, and inform high-stakes studio and product decisions. As a Netflix data scientist, you’ll leverage petabyte-scale streaming data to guide content greenlighting, personalization, and user growth initiatives. Operating in a “Freedom & Responsibility” culture, you have autonomy to choose tools and methods while engaging in healthy debate with cross-functional peers, driving impact at every turn.

Why This Role at Netflix?

Joining Netflix as a data scientist means your models go live directly to 260 million+ viewers, influencing recommendations, content strategy, and engagement metrics. Competitive compensation, generous equity grants, and fast career progression reward measurable impact and innovation. To secure this position, you’ll navigate the Netflix data-scientist interview process, a multi-round loop designed to evaluate your technical depth, problem-solving approach, and strategic insight.

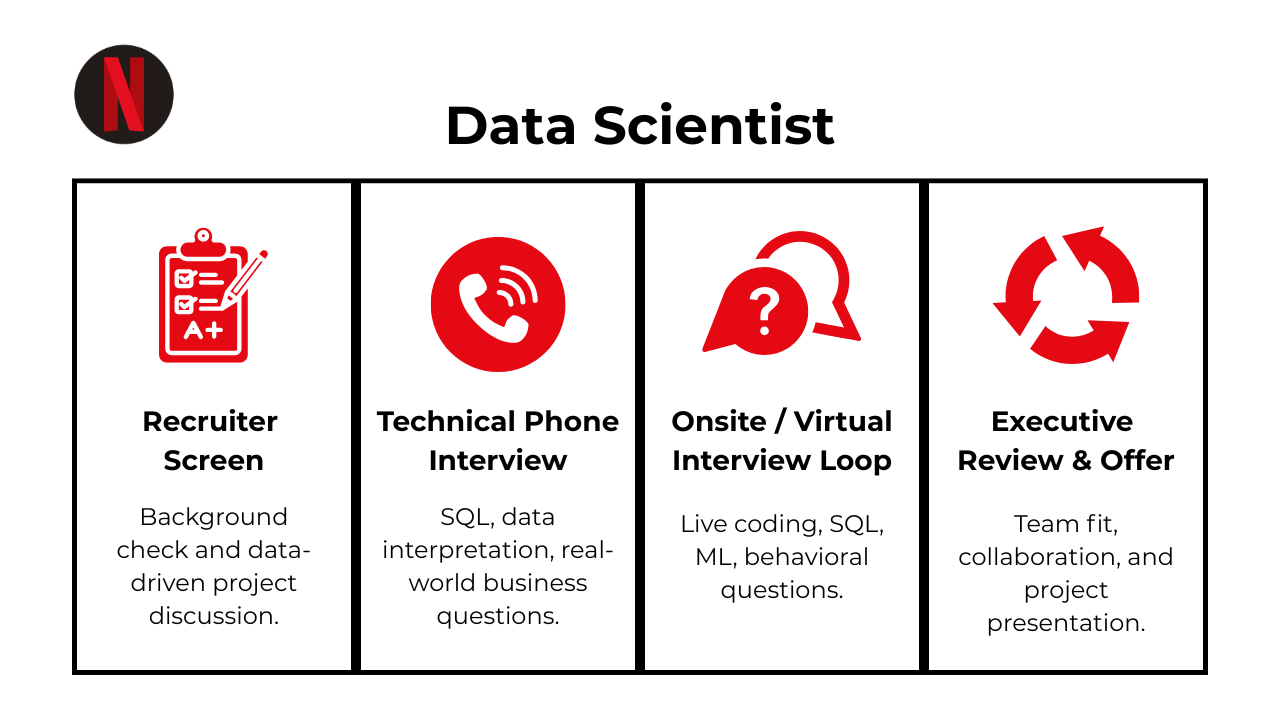

What Is the Interview Process Like for a Data Scientist Role at Netflix?

The Netflix data scientist interview process is designed to evaluate both your technical expertise and your ability to drive business impact through data. It typically spans four main stages, from an initial recruiter conversation through a rigorous onsite loop, each focused on different core competencies. Below is a breakdown of what to expect at each phase.

Recruiter Screen

In this first stage, a recruiter will assess your resume fit and motivation for the role. Expect questions about your background in statistics, machine learning, and Netflix-specific use cases (e.g., personalization or experimentation). This call also covers logistical details like preferred locations, compensation expectations, and interview timing.

Technical Screen

This round often takes the form of a live coding or SQL challenge combined with a short statistics/ML quiz. You may be asked to write queries that analyze retention metrics or to solve algorithmic problems in Python or R. Strong performance here demonstrates your ability to manipulate data and apply statistical reasoning under time constraints.

Virtual On-Site

The core of the loop consists of three interviews:

- Coding & SQL: Implement data transformations or algorithms on a shared editor.

- Experimental Design: Design and critique A/B tests, define metrics, and discuss power analysis.

- Behavioral: Share STAR-format stories that illustrate ownership, collaboration, and decision-making.

Interviewers look for clear thought processes, communication skills, and alignment with Netflix’s culture of freedom and responsibility.

Hiring Committee & Offer

After the onsite, feedback from all interviewers is compiled and reviewed by a cross-functional committee. This group evaluates your performance against bar-raising standards, considering both technical rigor and business impact. If approved, you’ll receive an offer package and have the opportunity to negotiate details before joining the team.

Behind the Scenes

Interviewers are expected to submit feedback within 24 hours, ensuring a fast-moving process. The hiring committee places particular weight on the depth of your experimental-design discussions and the real-world applicability of your technical solutions, reflecting Netflix’s data-centric decision-making.

Differences by Level

- Data Scientist (Mid-Level): Focus on coding fluency, statistical rigor, and individual project ownership.

- Senior Data Scientist: Adds a roadmap or strategic-impact discussion and evaluates your mentorship and cross-team leadership experience.

What Questions Are Asked in a Netflix Data Scientist Interview?

Netflix’s data scientist interviews probe both your technical chops and your capacity to turn insights into strategic decisions. In each round, you’ll tackle real-world problems—from large-scale data pipelines to rigorous experimentation scenarios—while also demonstrating alignment with Netflix’s culture of Freedom & Responsibility. Below is what you can expect across the main question types.

Coding / Technical Questions

In this portion, you’ll face Netflix data scientist interview questions that test your ability to manipulate and analyze massive datasets efficiently. Expect to write SQL queries that compute rolling metrics or confidence intervals and optimize Python or R code to process millions of rows per minute. Interviewers look for clean, production-ready solutions, thoughtful handling of edge cases, and clear explanations of algorithmic trade-offs. Demonstrating proficiency with Netflix’s tech stack—whether that’s Apache Spark, Flink, or in-house tools—will set you apart.

Implement a priority-queue API using a linked list.

Build insert(element, priority), delete(), and peek() so that higher-priority elements surface first and FIFO order breaks ties. A singly linked list kept in descending-priority order lets insert run in O(n) and both delete and peek run in O(1) while using O(1) extra space. Edge-case handling (empty queue, duplicate priorities, or very large priority ranges) shows discipline in defensive coding. Because heaps are the usual choice, articulating why a list is acceptable for small queues (lower constant factors, simpler memory layout) demonstrates engineering trade-off awareness.

Create a friend-based page-recommendation metric.

Join friends to page_likes twice: once for each friend’s likes and once for the target user’s existing likes. Aggregate COUNT(*) over (user_id, page_id) where the page is liked by friends but not by the user, then rank pages per user to surface candidates. Including the fraction of friends who like the page yields a normalized “score” that is useful for offline evaluation. This pattern mirrors the collaborative-filtering seeds that kick-start more sophisticated recommenders.

Recommend a “new best friend” for John using point weights.

Build CTEs for mutual-friend counts and shared page-like counts, LEFT JOIN them onto the candidate users, then compute 3*mutual_friends + 2*shared_pages as the composite score. Exclude anyone in block_list or friends via anti-joins. Ordering by score DESC, with the lowest user_id as the tie-breaker, returns a deterministic pick. The exercise checks your ability to codify business rules, protect against disqualifiers, and surface a single recommendation in pure SQL.

Write isMatch(s, p) that supports . and * in a full-string regex.

Use dynamic programming: dp[i][j] is True if s[:i] matches p[:j]. When p[j-1] != '*', transition on a direct character or . match; when * appears, inherit from dp[i][j-2] (zero repeats) or, if the preceding pattern character matches s[i-1], from dp[i-1][j] (consume one more character). Proper initialization of the first row, where patterns such as a*b* can still match the empty string, avoids off-by-two errors. A clean O(|s|×|p|) solution proves comfort with classical DP interview staples.

Generate a friendship timeline combining additions and removals.

Index each pair as (min(u1,u2), max(u1,u2)) so pairs are treated as undirected, sort both lists by timestamp, and step through them to match each add with its next remove. Store tuples (user_a, user_b, start_ts, end_ts) when both events exist; ignore dangling starts. Handling multiple on-off cycles per pair requires updating a hash map of “open” friendships. This problem spotlights time-series reasoning and careful state bookkeeping.

rain_days(n): probability it rains on day n given Markov-style dependencies.

Model the four possible two-day histories (RR, RN, NR, NN) as states, encode the given transition probabilities into a 4×4 matrix, and raise it to the (n-1) power (or iterate) to read off the probability of rain on day n. Returning the scalar probability with floating-point precision demonstrates applied Markov chains and iterative DP, skills useful for sequence-modeling tasks.
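A minimal sketch of the iterative version, under loudly stated assumptions: the transition probabilities below (20% chance of rain after two rainy days, 40% after exactly one, 25% after none) and the starting condition (rain on days 0 and 1) are hypothetical placeholders, since the guide does not give the problem's actual numbers. The state is the (yesterday, today) pair, so the four states correspond to RR, RN, NR, and NN.

```python
from collections import defaultdict
from typing import Dict, Tuple

# State = (rained yesterday, rained today); the four combinations are RR, RN, NR, NN.
State = Tuple[bool, bool]

# HYPOTHETICAL transition rule: P(rain tomorrow) depends on how many of the
# last two days were rainy. Replace with the probabilities given in the prompt.
P_RAIN_GIVEN_RAINY_DAYS = {2: 0.20, 1: 0.40, 0: 0.25}


def rain_days(n: int, start: State = (True, True)) -> float:
    """Probability of rain on day n, given the weather on days 0 and 1 (`start`)."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n < 2:
        return float(start[n])
    dist: Dict[State, float] = {start: 1.0}
    # Each step slides the (day k-1, day k) window forward by one day.
    for _ in range(n - 1):
        nxt: Dict[State, float] = defaultdict(float)
        for (yesterday, today), prob in dist.items():
            p_rain = P_RAIN_GIVEN_RAINY_DAYS[int(yesterday) + int(today)]
            nxt[(today, True)] += prob * p_rain
            nxt[(today, False)] += prob * (1 - p_rain)
        dist = nxt
    # Sum the probability mass of every state in which day n is rainy.
    return sum(prob for (_, today), prob in dist.items() if today)
```

Tracking the whole state distribution rather than a single path is what makes this a Markov-chain computation; the loop is equivalent to multiplying by a 4×4 transition matrix n-1 times.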

Calculate first-touch attribution channel per converted user.

Join user_sessions to attribution, keep only users who have at least one session with conversion = TRUE, and within each user_id use FIRST_VALUE(channel) OVER (PARTITION BY user_id ORDER BY session_ts) across all of that user’s sessions to pick the channel of their earliest touch. Deduplicate with DISTINCT user_id. Explaining why first-touch guides upper-funnel spend shows marketing-analytics intuition.

Implement reservoir sampling of size 1 for an unbounded stream.

Track (choice, count); on element k, generate randint(1, k) and overwrite the choice only if the draw equals k. Proving that after k elements every item is the current choice with equal 1/k probability, while using O(1) space, satisfies the streaming-data constraints common in telemetry services.

Write random_key(weights) to sample by weight.

Build a cumulative-weight array, draw a uniform random float in [0, total), and binary-search to find the bucket. Explain how alias tables (for example, Vose’s method) drop lookup to O(1) for very large dictionaries, showing algorithmic depth.

Rotate an n × n matrix 90° clockwise in place.

Swap across the main diagonal, then reverse each row, achieving O(1) extra space. Edge-case tests (1×1, even/odd dimensions) and clear index math reflect robustness—crucial in production data-transforms.
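A short sketch of the transpose-then-reverse approach described above; the function name is illustrative.

```python
from typing import List


def rotate_clockwise(matrix: List[List[int]]) -> None:
    """Rotate a square matrix 90 degrees clockwise in place (O(1) extra space)."""
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        raise ValueError("matrix must be n x n")
    # Step 1: transpose by swapping each element across the main diagonal.
    for i in range(n):
        for j in range(i + 1, n):
            matrix[i][j], matrix[j][i] = matrix[j][i], matrix[i][j]
    # Step 2: reverse each row, turning the transpose into a clockwise rotation.
    for row in matrix:
        row.reverse()


m = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
rotate_clockwise(m)
print(m)  # [[7, 4, 1], [8, 5, 2], [9, 6, 3]]
```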

Find the 2nd-highest salary in engineering.

Use DENSE_RANK() partitioned by department and ordered by salary DESC, then filter where rank = 2; or select MAX(salary) where salary < (SELECT MAX(salary) …). Mention handling NULLs and salary ties to show thoroughness.

Return the last node of a singly linked list (or null if empty).

Traverse with a pointer until node.next is None: O(n) time, O(1) space. Discuss why maintaining a tail pointer during list mutations drops lookup to O(1), reflecting performance awareness in data-structure design.
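A minimal sketch contrasting the O(n) traversal with a list that maintains a tail pointer; the Node and TailedList names are illustrative, not part of the original question.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Node:
    value: Any
    next: Optional["Node"] = None


def last_node(head: Optional[Node]) -> Optional[Node]:
    """Walk to the final node: O(n) time, O(1) space; None for an empty list."""
    node = head
    while node is not None and node.next is not None:
        node = node.next
    return node


class TailedList:
    """Singly linked list that keeps a tail pointer, so the last node is O(1)."""

    def __init__(self) -> None:
        self.head: Optional[Node] = None
        self.tail: Optional[Node] = None

    def append(self, value: Any) -> None:
        node = Node(value)
        if self.tail is None:  # empty list: the new node is both head and tail
            self.head = self.tail = node
        else:
            self.tail.next = node
            self.tail = node
```

The trade-off is bookkeeping: any deletion method you add to TailedList must also keep tail in sync, which is the price of O(1) lookup.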

Experiment / Product-Inference Design Questions

This segment evaluates your understanding of causal inference and experimentation at scale. You might be asked to design an A/B test for a new recommendation model or outline approaches to mitigate interference when multiple users share an account. The goal is to see how you select appropriate metrics, calculate required sample sizes, and address real-world complications like carryover effects. Use Netflix-specific scenarios to illustrate how your frameworks translate into actionable product insights.
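To make the sample-size step concrete, here is a sketch of the standard normal-approximation formula for comparing two conversion rates: n per arm = (z₁₋α/₂ + z₁₋β)² · [p₁(1 − p₁) + p₂(1 − p₂)] / (p₁ − p₂)². The example plugs in the 40% → 43% email-conversion lift from later in this section purely for illustration; α = 0.05 and 80% power are conventional defaults, not Netflix-specific values.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control: float, p_treatment: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Users per arm for a two-sided, two-proportion z-test (normal approximation)."""
    if p_control == p_treatment:
        raise ValueError("effect size must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    n = (z_alpha + z_beta) ** 2 * variance / (p_control - p_treatment) ** 2
    return math.ceil(n)  # round up so the test is not under-powered


print(sample_size_per_arm(0.40, 0.43))  # 4231
```

Halving the detectable effect roughly quadruples the required sample, which is why the choice of minimum detectable effect usually dominates the conversation.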

Compute variant-level conversion rates for a trial experiment.

Join ab_tests to subscriptions, then flag conversions per variant using the provided rules: a simple EXISTS for control, plus an additional (cancel_dt IS NULL OR cancel_dt - sub_dt ≥ 7) condition for trial. Group by variant, calculate 100*SUM(conv)::numeric/COUNT(*), and round to two decimals for presentation. Calling out why “trial” needs the stricter definition shows a grasp of retention nuance in subscription funnels.

Explain Z-tests vs. t-tests: when, why, and how to choose.

Both tests compare a sample mean with a population mean (or two sample means) but rely on different assumptions. A Z-test uses the normal distribution and requires knowing the population standard deviation, or working with samples large enough (a common rule of thumb is n ≥ 30) that the sample SD is a solid proxy. A t-test relies on the Student-t distribution, which has fatter tails and is appropriate when the population SD is unknown and sample sizes are modest. Rule of thumb: use Z when σ is known or n is large enough for the central-limit theorem to apply; use t when σ is unknown and n is small, reporting degrees of freedom to reflect the extra uncertainty.
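For reference, the one-sample versions of the two statistics differ only in the denominator and the reference distribution under the null hypothesis (x̄ is the sample mean, μ₀ the hypothesized mean, σ the population SD, s the sample SD, and n the sample size; this notation is standard rather than taken from the question):

```latex
z = \frac{\bar{x} - \mu_0}{\sigma / \sqrt{n}} \sim \mathcal{N}(0, 1)
\qquad \text{vs.} \qquad
t = \frac{\bar{x} - \mu_0}{s / \sqrt{n}} \sim t_{n-1}
```

As n grows, the t distribution with n − 1 degrees of freedom approaches the standard normal, which is why the two tests give nearly identical answers for large samples.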

Diagnose whether a redesigned email journey truly lifted conversion from 40 → 43 %.

First chart the long-run conversion time series and fit an interrupted-time-series or difference-in-differences model to see whether the post-launch trend departs from the pre-launch baseline. Include control cohorts (e.g., geos or acquisition channels that did not receive the new flow) to separate the treatment from external factors like seasonality. Check for sample-mix shifts and run a logistic regression with covariates to isolate the “design” coefficient. Finally, back-test the model on the earlier stretch when conversion slid from 45% to 40% to verify it flags no false positives, which gives you more confidence that the observed uptick is causal.
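A minimal difference-in-differences sketch on conversion counts, assuming four independent groups of users (treated and control cohorts, before and after launch) and using made-up counts; a real analysis would use the regression version with covariates described above.

```python
import math
from typing import Tuple

Counts = Tuple[int, int]  # (conversions, users)


def rate(c: Counts) -> float:
    return c[0] / c[1]


def did_estimate(treat_pre: Counts, treat_post: Counts,
                 ctrl_pre: Counts, ctrl_post: Counts) -> Tuple[float, float]:
    """Return (DiD lift, standard error), treating the four groups as independent."""
    groups = (treat_pre, treat_post, ctrl_pre, ctrl_post)
    lift = (rate(treat_post) - rate(treat_pre)) - (rate(ctrl_post) - rate(ctrl_pre))
    # The variance of a sum/difference of independent proportions adds p(1-p)/n terms.
    se = math.sqrt(sum(rate(g) * (1 - rate(g)) / g[1] for g in groups))
    return lift, se


# Hypothetical counts: treated cohort moves 40% -> 43%, control drifts 40% -> 41%.
lift, se = did_estimate((4000, 10000), (4300, 10000), (4000, 10000), (4100, 10000))
print(f"lift = {lift:.3f}, 95% CI = ({lift - 1.96 * se:.3f}, {lift + 1.96 * se:.3f})")
```

In this toy example the control cohort's 1-point drift (seasonality, mix shift) is netted out, so only 2 of the 3 raw points are attributed to the redesign.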

Choose a winner when A/B-test data are non-normal and sample-constrained.

When metrics are skewed (e.g., revenue per user) and n is small, non-parametric approaches like the Mann-Whitney U test or permutation testing avoid normality assumptions. You can also bootstrap the mean or median treatment-effect to get robust confidence intervals. If you must compare proportions, use Fisher’s exact test instead of χ². Report effect sizes with percentile bands rather than relying solely on p-values, and pre-register which statistic you’ll use to prevent cherry-picking.
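A minimal permutation test for a difference in means, written in plain Python so no normality assumption is needed; the function name, the two-sided convention, and the default of 10,000 reshuffles are illustrative choices.

```python
import random
from statistics import mean
from typing import Sequence, Tuple


def permutation_test(control: Sequence[float], treatment: Sequence[float],
                     n_perm: int = 10_000, seed: int = 0) -> Tuple[float, float]:
    """Two-sided permutation test on mean(treatment) - mean(control).

    Returns (observed difference, p-value). Under the null hypothesis the arm labels
    are exchangeable, so we reshuffle them and count differences at least as extreme.
    """
    rng = random.Random(seed)
    observed = mean(treatment) - mean(control)
    pooled = list(control) + list(treatment)
    n_control = len(control)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = mean(pooled[n_control:]) - mean(pooled[:n_control])
        if abs(diff) >= abs(observed):
            extreme += 1
    # The +1 terms count the observed labeling itself and keep p strictly positive.
    return observed, (extreme + 1) / (n_perm + 1)
```

Swapping mean for median (also in the statistics module) turns this into a median-difference test, which is often more robust for skewed revenue data.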

Design a social media “close-friends” experiment that accounts for network effects.

Randomly assigning individual users risks spill-over because a treated user’s story surfaces to control friends. Instead adopt cluster randomization: bucket users by densely connected ego-networks and assign whole clusters to control or test. Alternatively, test at the country or region level and use difference-in-differences to absorb macro noise. Measure both direct creator metrics (stories shared) and indirect consumer metrics (views, engagement) to quantify network externalities. Guardrails such as total story volume prevent platform-wide regressions.
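A sketch of the assignment step only, assuming clusters (for example, ego-networks from an upstream graph-partitioning job) have already been computed; the user-to-cluster mapping and the salt string are hypothetical. Hashing the cluster ID rather than the user ID guarantees that every member of a cluster lands in the same arm.

```python
import hashlib
from typing import Dict


def arm_for_cluster(cluster_id: str, salt: str = "close_friends_exp_v1") -> str:
    """Deterministically map a cluster to 'test' or 'control' via a salted hash."""
    digest = hashlib.sha256(f"{salt}:{cluster_id}".encode("utf-8")).hexdigest()
    return "test" if int(digest, 16) % 2 == 0 else "control"


def assign_users(user_to_cluster: Dict[str, str],
                 salt: str = "close_friends_exp_v1") -> Dict[str, str]:
    """Every user inherits the arm of their cluster, so friends share a treatment."""
    return {user: arm_for_cluster(cluster, salt) for user, cluster in user_to_cluster.items()}


# Hypothetical clustering output: users u1-u3 form one dense friend group.
assignments = assign_users({"u1": "c1", "u2": "c1", "u3": "c1", "u4": "c2"})
```

Because the cluster is now the unit of randomization, the analysis should also work at the cluster level (or use cluster-robust standard errors); the effective sample size is the number of clusters, not users.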

Verify that A/B bucket assignment is truly random.

Compare pre-experiment covariates (device, OS, tenure, past spend) across buckets using χ² or KS tests; p-values should not cluster near zero, and standardized mean differences should stay below 0.1. Plot bucket sizes over assignment time to spot seeding bugs, and check that hashed user IDs (with the experiment’s salt) split evenly across buckets. Run a placebo test: pretend the test started earlier and confirm the estimated “effect” is about 0. Any systematic imbalance signals leakage in the bucketing logic.
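Two of these checks sketched with only the standard library: a standardized mean difference for one covariate, and a chi-square sample-ratio-mismatch (SRM) test on bucket counts, assuming an intended 50/50 split. The function names are illustrative.

```python
import math
from statistics import mean, variance
from typing import Sequence


def standardized_mean_difference(a: Sequence[float], b: Sequence[float]) -> float:
    """(mean_a - mean_b) / pooled SD; |SMD| < 0.1 is a common balance threshold."""
    pooled_sd = math.sqrt((variance(a) + variance(b)) / 2)
    return (mean(a) - mean(b)) / pooled_sd


def srm_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) on bucket sizes."""
    total = n_a + n_b
    exp_a = total * expected_share_a
    exp_b = total - exp_a
    chi2 = (n_a - exp_a) ** 2 / exp_a + (n_b - exp_b) ** 2 / exp_b
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


print(srm_p_value(50_000, 51_000))  # well below 0.01: investigate the bucketing logic
```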

Does unequal sample size create bias toward the smaller A/B group?

Unbalanced groups don’t bias the point estimate, but they inflate variance for the smaller arm, widening its confidence interval and lowering power. As long as assignment is random, estimates remain unbiased; use Welch’s t-test (the pooled-variance t-test is only reliable when the arm variances are similar) and adjust power calculations for the actual split. Remedy by re-balancing traffic mid-test or running CUPED/covariate-adjusted analyses to reclaim precision without extending test length.
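A standard-library sketch of Welch's t statistic and the Welch-Satterthwaite degrees of freedom, which is what makes the test robust to unequal arm sizes and variances. Converting t into a p-value needs a Student-t CDF, which is left out here to keep the example dependency-free.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(treatment: Sequence[float], control: Sequence[float]) -> Tuple[float, float]:
    """Return (t statistic, Welch-Satterthwaite degrees of freedom)."""
    n_t, n_c = len(treatment), len(control)
    se2_t = variance(treatment) / n_t  # squared standard error of each arm's mean
    se2_c = variance(control) / n_c
    se = math.sqrt(se2_t + se2_c)      # no pooled variance: each arm keeps its own
    t = (mean(treatment) - mean(control)) / se
    df = (se2_t + se2_c) ** 2 / (se2_t ** 2 / (n_t - 1) + se2_c ** 2 / (n_c - 1))
    return t, df
```

If SciPy is available, 2 * scipy.stats.t.sf(abs(t), df) gives the two-sided p-value, and scipy.stats.ttest_ind(treatment, control, equal_var=False) runs the whole Welch test in one call.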

Select 10 k customers for a limited TV-show pre-launch and measure performance.

Use stratified sampling to mirror the full subscriber base on dimensions that drive viewing (device, region, engagement tier). Exclude churn-risk cohorts if the content is edgy. After launch, track primary KPIs (completion rate, incremental watch-time, retention uplift) versus a matched control. Use a causal-impact analysis built on Bayesian structural time-series to estimate counterfactual viewing. A phased rollout plan (10 k → 100 k → global) lets you halt quickly if metrics underperform.
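A sketch of proportional stratified sampling with exact quotas, using largest-remainder rounding so the strata add up to exactly k. The stratum function (for example, one returning a (device, region, engagement tier) tuple) is a hypothetical input; the guide does not specify how strata are defined.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, Sequence, TypeVar

T = TypeVar("T")


def stratified_sample(users: Sequence[T], stratum_of: Callable[[T], Hashable],
                      k: int, seed: int = 0) -> List[T]:
    """Draw k users so each stratum's share of the sample matches its share of the base."""
    if not 0 <= k <= len(users):
        raise ValueError("k must be between 0 and len(users)")
    rng = random.Random(seed)
    strata: Dict[Hashable, List[T]] = defaultdict(list)
    for user in users:
        strata[stratum_of(user)].append(user)

    total = len(users)
    # Integer arithmetic avoids floating-point drift in the proportional quotas.
    quota = {s: k * len(m) // total for s, m in strata.items()}
    remainder = {s: k * len(m) % total for s, m in strata.items()}
    leftover = k - sum(quota.values())
    # Hand the remaining slots to the strata with the largest fractional parts.
    for s in sorted(remainder, key=remainder.get, reverse=True)[:leftover]:
        quota[s] += 1

    sample: List[T] = []
    for s, members in strata.items():
        sample.extend(rng.sample(members, quota[s]))
    return sample
```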

How would you define and measure acquisition success for Netflix’s 30-day free-trial offer?

This question assesses your ability to design metrics and your understanding of customer acquisition funnels. A key way to measure success is by tracking conversion rates from free-trial to paid subscriptions, as well as customer retention after the trial period ends. Additionally, segmenting the data by demographics, device usage, and engagement levels can help identify high-value users and refine marketing strategies.
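A small sketch of the two headline metrics, assuming a hypothetical per-user record with a converted-to-paid flag and a count of paid months; real pipelines would compute these in SQL over subscription tables, and the field names here are illustrative only.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass
class TrialUser:
    segment: str          # hypothetical field, e.g. device type or acquisition channel
    converted: bool       # paid at least once after the trial ended
    paid_months: int = 0  # consecutive paid months after conversion


def trial_metrics(users: Iterable[TrialUser], retention_month: int = 3
                  ) -> Dict[str, Tuple[float, float]]:
    """Per segment: (trial-to-paid conversion rate, month-N retention among converters)."""
    counts = defaultdict(lambda: [0, 0, 0])  # [trial starts, conversions, retained]
    for u in users:
        c = counts[u.segment]
        c[0] += 1
        if u.converted:
            c[1] += 1
            if u.paid_months >= retention_month:
                c[2] += 1
    return {
        seg: (conv / starts, retained / conv if conv else 0.0)
        for seg, (starts, conv, retained) in counts.items()
    }
```

Reporting retention only among converters keeps the two numbers separable: a segment can convert well yet churn quickly, which is exactly the high-value versus low-value distinction the answer above calls for.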

Watch Next: Netflix Data Scientist Interview Question: Measure Free Trial Success with A/B Testing

Understanding how to measure the success of a free trial is essential for evaluating customer acquisition and retention strategies. In this video, Priya Singla, product data scientist at Meta, dives deeper into effective metrics and approaches used in Netflix-style product analysis. By watching the video, you can learn how to set up a comprehensive funnel analysis and identify key performance indicators that can reveal the long-term value of your free trial subscribers.

Behavioral & Culture-Fit Questions

Behavioral interviews at Netflix are designed to gauge how you embody the company’s values under ambiguity. You’ll share STAR-formatted stories about moments when you recommended killing your own model due to unexpected bias or when you delivered candid feedback to senior leadership. Interviewers look for instances where you took ownership, collaborated across functions, and balanced speed with rigor. Reflecting on these experiences shows you can thrive in Netflix’s fast-paced, high-autonomy environment.

Describe a data project you worked on. What were some of the challenges you faced?

Pick a project that showcases end-to-end ownership—e.g., building a personalization model, revamping a reporting pipeline, or launching a new experiment framework. Explain the toughest hurdles (data sparsity, skewed labels, shifting schemas, stakeholder alignment) and how you unblocked them with creative feature engineering, robust monitoring, or cross-team collaboration. Finish with the measurable impact (lift, hours saved, latency cut) to show you can translate adversity into business value—crucial in Netflix’s high-autonomy culture.

What are some effective ways to make data more accessible to non-technical people?

Discuss layered dashboards that surface a “north-star” metric first, with drill-downs for power users; scheduled plain-English email digests; and annotated notebooks that combine narrative with SQL/Python snippets. Mention self-serve experimentation portals, governed metric definitions, and office-hours that de-jargonize statistical concepts. Netflix values a culture of informed decision-making—your answer should highlight empathy for non-technical partners and a bias toward self-service tooling over ad-hoc one-offs.

What would your current manager say about you? What constructive criticisms might they give?

Choose strengths that resonate with Netflix principles—such as intellectual curiosity, candor, and delivering context-rich insights at speed. For weaknesses, pick a real but non-fatal trait (e.g., over-indexing on perfection in exploratory work) and outline concrete steps you’ve taken to improve (time-boxed analyses, peer reviews). Framing feedback as an ongoing growth narrative signals self-awareness and coachability without undermining confidence.

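Talk about a time when you had trouble communicating with stakeholders. How were you able to overcome it?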

Select a scenario where technical nuance clashed with business urgency—perhaps an experiment readout that contradicted intuition. Explain how you diagnosed the gap (asking clarifying questions, mapping their goals), recalibrated your narrative with visuals or analogies, and secured alignment on next steps. Emphasize the outcome: quicker decisions, refined metrics, or follow-up tests, demonstrating your ability to bridge data science and storytelling—vital for Netflix’s “context, not control” ethos.

Why did you apply to our company, and what makes you a good fit?

Tie Netflix’s freedom-and-responsibility culture, global content footprint, and experiment-driven product development to your passion for large-scale causal inference or recommendation systems. Highlight specific initiatives—e.g., personalized artwork, adaptive streaming, or localization analytics—and how your experience in scalable machine-learning pipelines or user-behavior modeling maps to those challenges. Show that you’ve done your homework and can contribute to the next wave of consumer delight.

How do you prioritize multiple deadlines, and how do you stay organized when juggling competing tasks?

Describe a framework (RICE, impact × effort, or OKR alignment) you use to rank work, then detail your rituals—weekly Kanban grooming, time-boxing deep-work blocks, and automated Slack reminders. Explain how you renegotiate scope early when new, higher-ROI requests emerge, preserving trust while keeping high-quality standards. Netflix’s fast cadence means endless inbound asks; demonstrating disciplined prioritization reassures interviewers you won’t drown in context switches.

Tell me about a model you deployed that under-performed after launch. How did you detect the issue and course-correct?

Netflix ships to production quickly, so failures must be caught fast. Discuss alerting on drift, offline/online parity checks, and rapid rollback procedures. Outline the root-cause analysis (data skew, concept drift, or feature bugs) and the remediation you led. This showcases ownership, rigor, and a bias toward protecting the member experience.

Give an example of how you fostered a data-driven culture within a cross-functional team.

Perhaps you instituted a weekly “metrics moment,” built a self-serve experimentation dashboard, or mentored PMs on power analysis. Explain the tangible shift—fewer opinion-driven debates, faster iteration cycles, or more confident launches. Demonstrating that you elevate the data literacy of those around you signals the multiplier effect Netflix seeks in senior data scientists.

How to Prepare for a Data Scientist Role at Netflix

Preparing for a Netflix data scientist interview means honing both your technical toolkit and your ability to translate data into high-impact decisions under ambiguity. Netflix’s data science loops weigh experimentation design, code efficiency, and narrative clarity heavily. Below are targeted preparation strategies to help you stand out.

Master Experimentation & Causal Inference

Roughly 40 % of your technical rounds will focus on A/B testing and causal frameworks. Be ready to articulate hypothesis formulation, sample-size calculations, and approaches to mitigate carryover or novelty effects. Demonstrate familiarity with techniques like CUPED or Thompson sampling, and tie these back to real-world Netflix use cases such as measuring feature engagement.
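Since CUPED comes up repeatedly, here is a minimal sketch of the adjustment: subtract θ·(X − mean(X)) from the outcome Y, where X is a pre-experiment covariate for the same users (for example, the same metric in the weeks before the test) and θ = cov(X, Y) / var(X). The function name is illustrative, and in practice θ is typically estimated once on data pooled across both arms.

```python
from statistics import mean
from typing import List, Sequence


def cuped_adjust(y: Sequence[float], x: Sequence[float]) -> List[float]:
    """Return CUPED-adjusted outcomes: y - theta * (x - mean(x)).

    x is a pre-experiment covariate for the same users; theta = cov(x, y) / var(x)
    minimizes the variance of the adjusted metric while leaving its mean unchanged.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need paired x, y with at least two users")
    mx, my = mean(x), mean(y)
    cov_xy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)
    var_x = sum((a - mx) ** 2 for a in x) / (len(x) - 1)
    theta = cov_xy / var_x
    return [b - theta * (a - mx) for a, b in zip(x, y)]
```

Because the subtracted term has mean zero, treatment-effect estimates keep their meaning; only the noise explained by pre-period behavior is removed.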

Sharpen SQL & Python

About 30 % of your assessment centers on data manipulation and pipeline optimization. Practice advanced SQL window functions, CTEs, and vectorized operations in Python (NumPy, pandas). Optimize end-to-end pipelines for speed and memory efficiency—skills Netflix relies on when processing billions of viewing events.
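One low-friction way to rehearse window functions and CTEs without a warehouse is Python's built-in sqlite3 module (window functions need SQLite 3.25 or newer). This sketch answers the second-highest-salary question from the coding section on a made-up employees table; the table, column names, and rows are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (name TEXT, department TEXT, salary INTEGER);
    INSERT INTO employees VALUES
        ('Ana', 'engineering', 200), ('Ben', 'engineering', 200),
        ('Cy',  'engineering', 180), ('Dee', 'engineering', NULL),
        ('Eve', 'marketing',   150);
""")

# DENSE_RANK gives tied salaries the same rank, so rank 2 is the second-highest
# distinct salary; the WHERE clause drops NULL salaries before ranking.
query = """
    WITH ranked AS (
        SELECT department, salary,
               DENSE_RANK() OVER (PARTITION BY department ORDER BY salary DESC) AS rnk
        FROM employees
        WHERE salary IS NOT NULL
    )
    SELECT DISTINCT salary FROM ranked
    WHERE department = 'engineering' AND rnk = 2
"""
print(conn.execute(query).fetchall())  # [(180,)]
```

Note how the tie at 200 is the case that trips up ROW_NUMBER(): it would return 200 as "second highest", while DENSE_RANK() correctly returns 180.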

Think Out Loud

Netflix values transparent reasoning nearly as much as correct answers. Throughout coding and design exercises, narrate your trade-off analysis: why choose one metric over another, or why prioritize performance versus readability. Clear, structured explanations help interviewers follow your logic and assess your decision-making under pressure.

Brute Force → Optimize

Start with a straightforward, brute-force solution to demonstrate correctness swiftly. Then iteratively refine for performance, explaining each optimization step. This pattern reflects Netflix’s “freedom and responsibility” culture—show that you can both deliver a working prototype and enhance it for production.

Mock Interviews & Feedback

Simulate full loops with peers or mentors, ideally ex-Netflix data scientists. Use Interview Query’s mock interviews to replicate timing and question styles. Solicit feedback on your problem approach, storytelling, and cultural fit. Familiarity breeds confidence, turning the real interview into a comfortable conversation.

FAQs

What Is the Average Salary for a Netflix Data Scientist?


Netflix offers top-of-market compensation packages that blend base salary, equity grants, and performance bonuses, with regional variations. When negotiating, benchmark against reported Netflix data scientist salaries, compare equity refreshers, and understand the premium that senior data scientist salary bands carry over mid-level roles.

Are There Job Postings for Netflix Data Scientist Roles on Interview Query?

Yes—visit our jobs board to see the latest openings and get insider tips on preparation.

Conclusion

Nailing the Netflix data scientist interview means more than memorizing questions—it’s about demonstrating rigorous experimentation design, efficient data pipelines, and clear storytelling under Netflix’s Freedom & Responsibility culture. By walking through each stage—from your initial recruiter screen to the final hiring committee—and practicing the question archetypes outlined above, you’ll build the confidence and fluency needed to stand out.

For further role-specific prep, explore our Netflix Machine Learning Engineer Interview Guide and Netflix Data Engineer Interview Guide. Ready to put theory into practice? Schedule a mock interview or dive deep into our Data Science Learning Path. Finally, get inspired by success stories like Chris Keating’s journey—your offer could be next!