Netflix Research Scientist Interview Guide (2025) – Questions, Salary & Process

Introduction

The Netflix Research Scientist role challenges you to bridge advanced ML research with tangible product impact, assessing candidates on both theoretical depth and practical deployment skills. In this guide, we’ll demystify the Netflix research-scientist interview process, walking through each stage—from initial screens to deep dives—so you know exactly what to expect and how to prepare.

Role Overview & Culture

Research Scientists at Netflix conceive, prototype, and validate experiments that drive personalization, content valuation, and demand forecasting for over 260 million members. You’ll own end-to-end research workflows: formulating hypotheses, designing rigorous A/B tests, and collaborating with engineering teams to integrate your models into production. All this happens within Netflix’s renowned Freedom & Responsibility culture, where you choose your toolchains, publish findings openly, and move at startup speed while benefiting from a collaborative, cross-functional environment.

Why This Role at Netflix?

At Netflix, research work transforms directly into features that millions of viewers engage with daily—offering an unparalleled line of sight from innovation to impact. The role comes with top-of-market compensation, including generous Netflix research scientist salary packages, and the freedom to publish papers or file patents. If you’re driven by the chance to see your research shape real-world product experiences at massive scale, this interview process is your first step toward joining Netflix’s elite AIML labs.

What Is the Interview Process Like for a Research Scientist Role at Netflix?

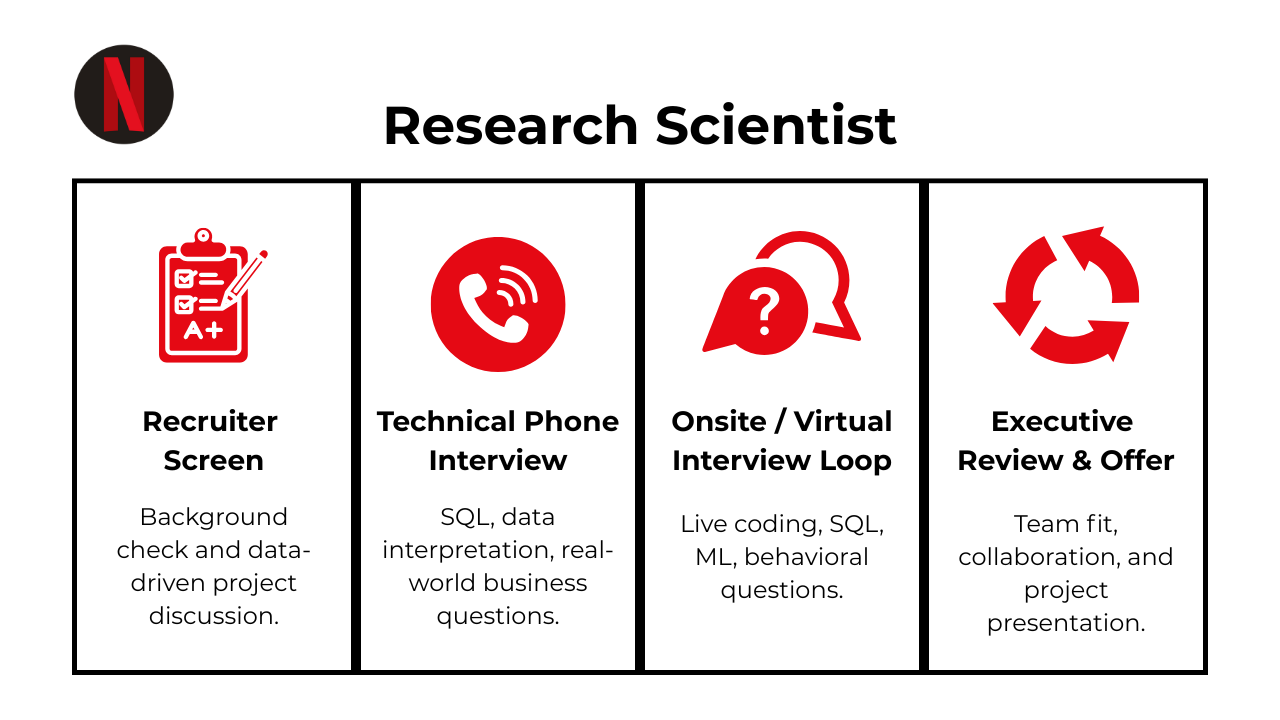

The Netflix research scientist interview journey is designed to evaluate both your theoretical expertise and your ability to translate research into production-ready features. Here’s a concise look at each stage of the process.

Recruiter Screen

The recruiter screen serves as your first introduction to the team, usually conducted over a 30- to 45-minute phone call. You’ll discuss your academic background, publication record, and motivation for joining Netflix’s AIML labs. The recruiter will probe for alignment with Netflix’s culture of “Freedom & Responsibility,” and may ask you to walk through a recent research project or paper. This stage also clarifies logistical details such as preferred locations, visa requirements, and compensation expectations. By the end of this conversation, you should have a clear understanding of the subsequent technical and on-site steps, as well as any materials you’ll need to prepare.

Technical Screen

In the technical screen, a data scientist or research engineer will assess your grasp of core statistical and machine-learning concepts. Expect a mix of whiteboard or virtual pen-and-paper questions on probability distributions, hypothesis testing, and optimization techniques. You may also tackle brief coding challenges in Python or SQL that demonstrate your ability to manipulate data and implement simple algorithms. Interviewers gauge not only your correctness but also how you communicate your reasoning and trade-offs. Successful candidates move forward with a strong balance of theoretical knowledge and practical coding skills.

Virtual On-Site Loop

The virtual on-site loop is a multi-hour, multi-round experience that typically spans a single day. You will begin with a live coding session that focuses on data structures and model implementation, followed by an ML system-design discussion where you’ll architect scalable inference pipelines under real-world constraints. Next comes a dedicated “research deep-dive” in which you present one of your own publications or projects, defend your methodology, and explore how your work could translate into Netflix products. The loop concludes with a behavioral interview, where you use STAR-structured stories to illustrate your collaboration, leadership, and impact. Throughout each round, interviewers look for clarity of thought, technical rigor, and an ability to innovate within Netflix’s fast-paced environment.

Offer Review & Compensation Calibration

After the on-site loop, your interviewers submit detailed feedback, which is consolidated by a cross-functional hiring committee. This committee weighs your technical expertise, research contributions, and cultural fit against Netflix’s high bar for scientific excellence and impact. Compensation is calibrated based on market data, level alignment, and your prior experience, with Netflix known for offering top-of-market packages and RSU refreshers. You will receive a formal offer call from a recruiter, followed by an opportunity to review and negotiate the details. This transparent process ensures that both parties are aligned on expectations before you accept.

Behind the Scenes

Behind the scenes, Netflix operates on a rapid feedback cycle: interviewers are expected to submit written evaluations within 24 hours, and the hiring committee meets weekly to make decisions. There is no formal bar-raiser role, but peers from other teams often provide input to ensure consistency and high standards across the organization. The emphasis is on candid, direct feedback and a bias for swift decision-making, reflecting Netflix’s culture of efficiency. Throughout, confidentiality is paramount to protect unreleased features and internal roadmaps.

Differences by Level

For mid-level research scientist candidates (IC4–IC5), the focus remains squarely on technical chops and system-design proficiency. Senior roles (IC6+) require additional depth: you’ll deliver a 30-minute research presentation on a new strategic direction or roadmap initiative and participate in a leadership discussion about mentoring, cross-team collaboration, and influencing product decisions. Principal-level applicants may also be asked to evaluate and critique a peer’s research proposal or lead a mock technical leadership workshop. These extra rounds ensure that senior hires can both advance Netflix’s scientific vision and elevate the capabilities of colleagues around them.

What Questions Are Asked in a Netflix Research Scientist Interview?

Netflix research scientist interview candidates can expect a rigorous evaluation of both their technical prowess and their ability to translate novel ideas into production-ready solutions. Interviewers look for depth in statistical methods, software engineering skills for handling large-scale systems, and a strong sense of collaboration and judgment in Netflix’s “Freedom & Responsibility” culture.

Coding / Technical Questions

This round probes your ability to implement advanced algorithms and data pipelines under realistic constraints. You might be asked to code estimators for causal inference, optimize deep-learning workflows for video or audio embeddings, or develop monitoring dashboards that detect metric drift in live experiments. Evaluators focus on your coding style, efficiency, and how you balance correctness with performance when working on high-volume streaming data.

Write

friendship_timelineto pair every friendship start and end event.You receive two chronologically unordered lists—

friends_addedandfriends_removed—that capture multiple on-again/off-again relationships. A clean solution first groups events by(user_a, user_b)after normalizing the id order so A-B equals B-A, then sorts each pair’s events to greedily match the earliest “add” with the next “remove.” Edge cases include adds with no corresponding remove (ignore) and overlapping friendship windows (track with a counter). Producing an ordered list of[start_ts, end_ts]tuples demonstrates time-series reasoning and careful state management, both important in user-behavior research.Rotate an

n × nmatrix 90° clockwise in-place.The classic two-step approach transposes the matrix (

A[i][j] ↔ A[j][i]) and then reverses each row, yielding O(n²) time and O(1) extra space. Highlight why element swaps must avoid over-writing and how the symmetry of a square matrix guarantees correctness. Discuss alternative cache-friendly strategies (layer-by-layer cyclic swaps) and note that verifying the transform on odd-dimensional centers prevents off-by-one bugs. Algorithmic clarity and space efficiency mirror the rigor expected in experimentation code.Select the 2-nd highest salary in the engineering department.

In SQL, use

DENSE_RANK()(orROW_NUMBER()with a< MAX(salary)sub-query) partitioned by department, ordered DESC, and filter for rank = 2. Explain howDENSE_RANKgracefully skips duplicates at the top yet still returns the “next” distinct value. Edge-case awareness—departments with < 2 distinct salaries—shows maturity. This problem spotlights ranking functions, a staple when comparing model metrics or experiment arms.Return the last element of a singly linked list, or

Noneif empty.Traverse with a single pointer until

node.next is None, which takes O(n) time and O(1) space. Mention handling an empty head, why a two-pointer technique is unnecessary, and how this walk mirrors streaming scenarios where only a terminal statistic is required. Clear, robust base-case handling reflects defensive coding practices important in production research pipelines.Implement

max_profitto maximize stock gains with up to two buy/sell cycles.The optimal solution tracks four states while scanning prices once: best first-buy cost, best first-sell profit, best second-buy effective cost (price – first profit), and best second-sell total profit—yielding O(n) time, O(1) space. Describe why dynamic programming beats brute-force enumeration and how state-compression keeps memory constant. Analytical DP reasoning is central to sequential decision problems in streaming or reinforcement-learning contexts.

Find the missing number in an array containing 0…n with one absent.

Two constant-time ideas: XOR all indices and values, or compute

n*(n+1)/2 – sum(nums). Discuss overflow safeguards with largenand why XOR avoids arithmetic limitations. A linear scan with O(1) space matches research scenarios that process massive logs without extra memory.Compute the probability it rains on the n-th day given Markov-like dependencies.

Model the system as a 3-state Markov chain representing the last two days of weather. Build a 3 × 3 transition matrix, raise it to the n-1 power, and multiply by the initial state vector for an exact answer; or iteratively update state probabilities in O(n). Explain trade-offs between numerical stability and speed, and note how eigen-decomposition offers a closed form—skills aligned with probabilistic modeling work.

Identify the user who tipped the most from parallel

user_idsandtipslists.Zip the two lists, aggregate totals in a dictionary keyed by

user_id, then return the arg-max. Point out how a single-pass reduction keeps the runtime O(m) and memory proportional to unique users. Handling ties deterministically (e.g., lowestuser_id) shows attention to reproducibility, a priority in large-scale A/B evaluations.

Research / System Design Questions

Here, you’ll architect end-to-end solutions that bridge offline model training with online inference. Expect prompts to design scalable A/B testing frameworks for personalization, build multi-armed bandit services that dynamically allocate traffic at catalog scale, or outline data-centric pipelines that ensure reproducibility and low latency. Success depends on your ability to justify architectural trade-offs around consistency, fault tolerance, and cost in a production environment.

-

Explicit constraints enforce referential integrity at the engine level, preventing orphaned rows, simplifying joins, and letting the optimizer build smarter execution plans. They also document relationships directly in the schema, easing maintenance and code reviews. A cascade delete makes sense when child data is meaningless without the parent (e.g., order-items after an order is removed) and the row counts are modest enough that the cascading work won’t lock large tables. Set NULL works when you want to preserve the child record for auditing or recovery—think log entries that can outlive a user account—while still signaling the break in the relationship. Both options should be paired with indexes on the FK columns to avoid full-table scans during enforcement.

Design an end-to-end global data architecture for an international e-commerce warehouse.

Start by clarifying SKU volume, regional latency targets, data-sovereignty rules, and forecasted vendor growth. A hub-and-spoke model works well: regional Postgres/MySQL shard(s) handle OLTP inventory updates, while CDC streams these changes into a central cloud lake (e.g., S3 + Iceberg) via Kafka. An hourly Spark/Flink job denormalizes the feed for vendor dashboards and loads a dimensional warehouse (Snowflake/BigQuery) partitioned by

ds, region. Tableau/Looker cubes cache daily, weekly, and quarterly slices; vendors query through row-level-security views keyed to theirvendor_id. Global duplicate-SKU resolution and return tracking live in a microservice with idempotent Upsert APIs to keep all stores in sync.Propose a relational schema for a ride-sharing app that records every trip.

Core tables:

users(user_id, profile fields),drivers(driver_id, vehicle info),rides(ride_id, rider_id FK, driver_id FK, requested_at, pickup_lat/lon, dropoff_lat/lon, status, fare). A separate **payments** table linksride_idto transaction details, while **driver_locations** is a high-write, time-series table keyed by(driver_id, ts)for dispatch analytics. Index rides on(rider_id, requested_at DESC)and(driver_id, status)` to speed history look-ups and active-ride polling. Partition large tables by month to keep vacuum and backups reasonable; foreign keys guarantee riders can’t reference nonexistent drivers.Sketch a distributed facial-recognition clock-in system for employees + contractors.

Capture images on edge kiosks that run a lightweight CNN, then send feature embeddings (not raw images) over TLS to a central matcher backed by a GPU-enabled vector store (e.g., FAISS). Store embeddings in two collections—employees and contractors—with metadata for access tiers. The clock-in API first authenticates the device, then calls a similarity-search endpoint; a match above threshold triggers a signed JWT for downstream systems. Periodic model retraining runs offline on labeled check-ins; new faces flow through a human-in-the-loop review queue before entering the gallery. Audit logs land in a secure lake to satisfy compliance audits.

Design a relational schema for a swipe-based dating app and name key optimizations.

Tables:

users(user_id, demographics, prefs),swipes(swiper_id, swiped_id, is_right, ts), **matches** (user_a,user_b, matched_tsunique on the unordered pair). Hot read paths—“who liked me?”—use a compound index(swiped_id, is_right, ts); hot write paths shardswipesbyswiper_id % Nto spread inserts. Store last-24-hour swipe counters in Redis for rate-limiting and feed-ranking. Pre-filter potential cards in an offline Spark job using locality and preference tags, pushing daily candidate lists to a fast document store to keep feed latency sub-100 ms.Draft a blogging-platform schema covering users, posts, comments, and tags.

Add

ON DELETE CASCADEfrompoststocomments, but keep comments when a user is deactivated viaON DELETE SET NULLonauthor_idto preserve discourse. Full-text indices onposts.bodyandcomments.bodypower search; a covering(post_id, published_at)index accelerates feeds.

Behavioral or Culture-Fit Questions

Netflix values researchers who can push boundaries while navigating stakeholder concerns. You’ll share stories about championing unconventional hypotheses, deciding when to publish research versus keeping it proprietary, and collaborating across product, engineering, and analytics teams. Interviewers assess your leadership, communication, and how you embody Netflix’s principles of candid feedback and collective ownership.

Describe a data project you worked on. What were some of the challenges you faced?

Expand on a research-grade initiative—perhaps building a causal-inference pipeline to evaluate personalization models or devising a new video-quality metric. Detail the technical hurdles (sparse labels, GPU bottlenecks, data-privacy limits) and the scientific ones (statistical validity, reproducibility). Explain how you iterated on methodology—e.g., switched from a classic baseline to a self-supervised approach—and validated results with offline metrics and live A/Bs. Conclude with measurable impact such as reduced rebuffering minutes or improved engagement prediction AUC.

What are some effective ways to make data more accessible to non-technical people?

Emphasize translating complex models into intuitive visuals (e.g., percentile “speedometer” plots for NPS predictions) and baking insight directly into self-serve dashboards. Discuss layered storytelling: start with a high-level narrative, then link to a reproducible Colab or Jupyter notebook for curious stakeholders. Mention maintaining standardized metric definitions in a knowledge base to prevent misinterpretation. Highlight the payoff—faster decision cycles and fewer ad hoc explainer meetings.

What would your current manager say are your greatest strengths and areas for growth?

Anchor strengths in research-scientist competencies: rigorous experimental design, creativity in model architecture search, and an ability to publish internal white papers that influence multiple teams. For growth, pick an honest, non-fatal trait—perhaps a tendency to over-index on methodological depth before aligning with product timelines—then describe concrete steps you’ve taken (setting decision checkpoints, pairing with a PM early). Framing feedback as an active development plan shows self-awareness and coachability.

Talk about a time when you had trouble communicating with stakeholders. How did you overcome it?

Choose a scenario where highly technical findings (say, a hierarchical Bayesian uplift model) needed buy-in from marketing or content teams. Describe the initial disconnect, how you identified the knowledge gap, and the translation tactics you employed—analogy, simplified visuals, or interactive demos. Note the final outcome: the stakeholders adopted your recommendations, and the experiment rolled out successfully, underscoring your adaptability as a scientific communicator.

Why do you want to work with us?

Tie Netflix’s culture of “Freedom & Responsibility” to your desire for end-to-end ownership of research that ships to millions of members. Reference specific open-source work or academy-industry collaborations Netflix Research has published (e.g., causal‐analysis pipelines or VMAF evolution) and explain how your skill set—deep learning for personalization, causal inference, or media optimization—can extend that frontier. Convey that you’re motivated by the chance to blend cutting-edge research with direct product impact.

How do you prioritize multiple deadlines, and how do you stay organized when juggling them?

Outline a framework that weighs business impact, scientific novelty, and dependency unblockers (e.g., data-collection windows). Describe using OKRs and weekly research sprints, maintaining a Kanban board that separates “exploration,” “experimentation,” and “productization.” Stress frequent syncs with PMs and engineering leads to realign when new show launches or strategic bets surface. Mention using reproducible pipelines and templated experiment notebooks to cut context-switch overhead.

Describe a time you transformed an exploratory insight into a production-level model.

Explain how a promising prototype—maybe a sequence-to-sequence model forecasting audience retention—was hardened through scalable feature pipelines, offline back-tests, and shadow deployments. Highlight cross-team collaboration (data, infra, product) and the metrics that drove final rollout. This shows you can bridge pure research and engineering execution.

How do you ensure statistical rigor when shipping models that influence creative decisions (e.g., thumbnail selection or trailer ranking)?

Discuss pre-registration of hypotheses, choosing appropriate priors or non-parametric tests for heavy-tailed engagement data, and using holdouts or switched-off cohorts to monitor for drift. Mention post-launch monitoring dashboards and formal post-mortems that feed back into the research cycle. This demonstrates a holistic view of scientific responsibility in a high-stakes content environment.

How to Prepare for a Research Scientist Role at Netflix

Preparing for the Netflix research scientist interview means demonstrating both theoretical rigor and practical engineering skills. You’ll need to show mastery of statistical methods, software design for large-scale experimentation, and the ability to articulate trade-offs in high-stakes product settings. Below are five focused strategies to structure your preparation and maximize your readiness for each stage of the loop.

Master Causal Inference & Experimentation

Roughly 40 percent of the technical rounds will probe your understanding of causal methods and experimental design. Deepen your knowledge of techniques like doubly-robust estimation, uplift modeling, and sequential testing. Practice by designing end-to-end A/B tests: from hypothesis formulation and power calculations to interpretation of confidence intervals. Be ready to discuss how you’d handle confounders, instrument choice, and guardrail metrics in complex, real-world scenarios. Clear, concise explanations of your approach will set you apart.

Review Netflix Open-Source

Netflix has open-sourced tools like Metaflow, Feathr, and Polynote to streamline ML workflows. Spend time exploring their GitHub repositories, running example pipelines, and reading the underlying architecture docs. Understanding these platforms shows you can hit the ground running on the very systems you’ll use. It also signals your ability to contribute to Netflix’s culture of sharing and innovation. In your interviews, reference specific features or design decisions to demonstrate genuine familiarity.

Think Out Loud

Interviewers at Netflix highly value transparency in your reasoning. As you work through problems, narrate your assumptions, trade-offs, and checkpoints. Articulate why you choose one statistical test over another, how you’d validate your data sources, or why a particular feature engineering step matters. This practice not only clarifies your thought process but also invites collaborative dialogue. It’s better to lay out an imperfect plan clearly than to silently work toward a perfect solution.

Brute Force, Then Optimize

Whether coding an estimator or architecting a data pipeline, begin with a straightforward, correct implementation. Walk the interviewer through a baseline version, then iteratively refine it for efficiency, scalability, or numerical stability. For example, start with a simple loop-based algorithm, then vectorize or parallelize using Spark or NumPy. Demonstrating this progression shows you can balance speed of delivery with attention to performance—an essential skill for production research systems.

Mock Interviews & Research Talks

Simulate the actual loop by practicing both live-coding problems and a 10-slide research presentation. Use Interview Query’s mock interview feature to get timed feedback on your code challenges and slide decks. For your talk, pick a past project and rehearse explaining your problem statement, methodology, results, and impact in 15 minutes, leaving time for questions. Repeated rehearsal under realistic conditions will boost your confidence and fluency during the real interview.

FAQs

What Is the Average Salary for a Netflix Research Scientist?

Average Base Salary

Average Total Compensation

Salaries for this role typically reflect top-of-market compensation. The Netflix research scientist salary and Netflix researcher salary bands trend near the high six-figure range, complemented by annual RSU refreshers. Senior and Applied-AI tracks often exceed Netflix AI salary benchmarks across FAANG peers. When negotiating, anchor to the highest band and emphasize your publication portfolio and production impact.

Does Netflix Differentiate Between Research Scientist and Applied Scientist Titles?

While both tracks share a common interview loop, the Research Scientist role focuses more on novel algorithm development and external publication, whereas the Applied Scientist role emphasizes engineering integration and product metrics. Expect identical coding and design rounds, but the Research Scientist interviews include additional deep-dives into your academic contributions and roadmap vision.

Conclusion

You now have a clear roadmap for the Netflix research scientist interview, from understanding each stage and typical question themes to navigating compensation negotiations. Build on this foundation by refining your research deep-dive, rehearsing causal-inference and ML system-design examples, and staying sharp on Netflix’s open-source tools. Take inspiration from Alma Chen’s success story, who leveraged mock rounds and targeted prep to land her offer in streaming AI research.

To keep the momentum, explore our comprehensive learning paths for deep dives into data science, experimentation, and system design. When you’re ready, schedule a mock interview to simulate the real loop and receive actionable feedback. Good luck—your next breakthrough in AI-driven entertainment awaits!