Netflix Data Engineer Interview Guide (2025) – Process, Salary & Questions

Introduction

The Netflix data engineer interview at Netflix evaluates your expertise in building and optimizing the ETL pipelines that power real-time personalization and subscriber insights. You’ll craft scalable data workflows ingesting and transforming petabytes of viewing data, ensuring reliable metrics for product teams. Within Netflix’s “Freedom & Responsibility” culture, you have the autonomy to select technologies, own end-to-end delivery, and drive high-impact outcomes across the entire data lifecycle.

Role Overview & Culture

As a Netflix data engineer, you’ll partner closely with data scientists, analysts, and product managers to design, implement, and maintain the data infrastructures underpinning critical features—from For You feed ranking to churn prediction. You’ll leverage tools like Apache Spark, Kafka, and AWS (or Netflix’s internal alternatives) to orchestrate batch and streaming workflows, all while ensuring data quality, observability, and low latency. The role demands a strong foundation in data modeling, distributed systems, and performance tuning, as well as a proactive approach to anticipating scale challenges. Netflix’s “Freedom & Responsibility” ethos empowers you to propose improvements, experiment with new technologies, and take full ownership of your services. This position sits at the heart of Netflix’s data ecosystem, shaping how the company understands and delights its 260 M+ members through robust Netflix data engineering solutions.

Why This Role at Netflix?

You’ll be drawn to the Netflix data engineer role by the chance to solve some of the largest ETL challenges in streaming, handling trillions of events monthly and delivering insights that directly influence content and product strategy. Netflix offers top-of-market compensation—competitive base salaries, significant RSUs, and generous bonuses—to reflect the strategic value of this work. Beyond pay, you’ll join a team that values continual learning, hosts frequent tech deep dives, and provides clear paths to senior or principal roles. If you’re passionate about crafting high-throughput data platforms and driving data-driven decisions at global scale, read on to learn about the interview process that lies ahead.

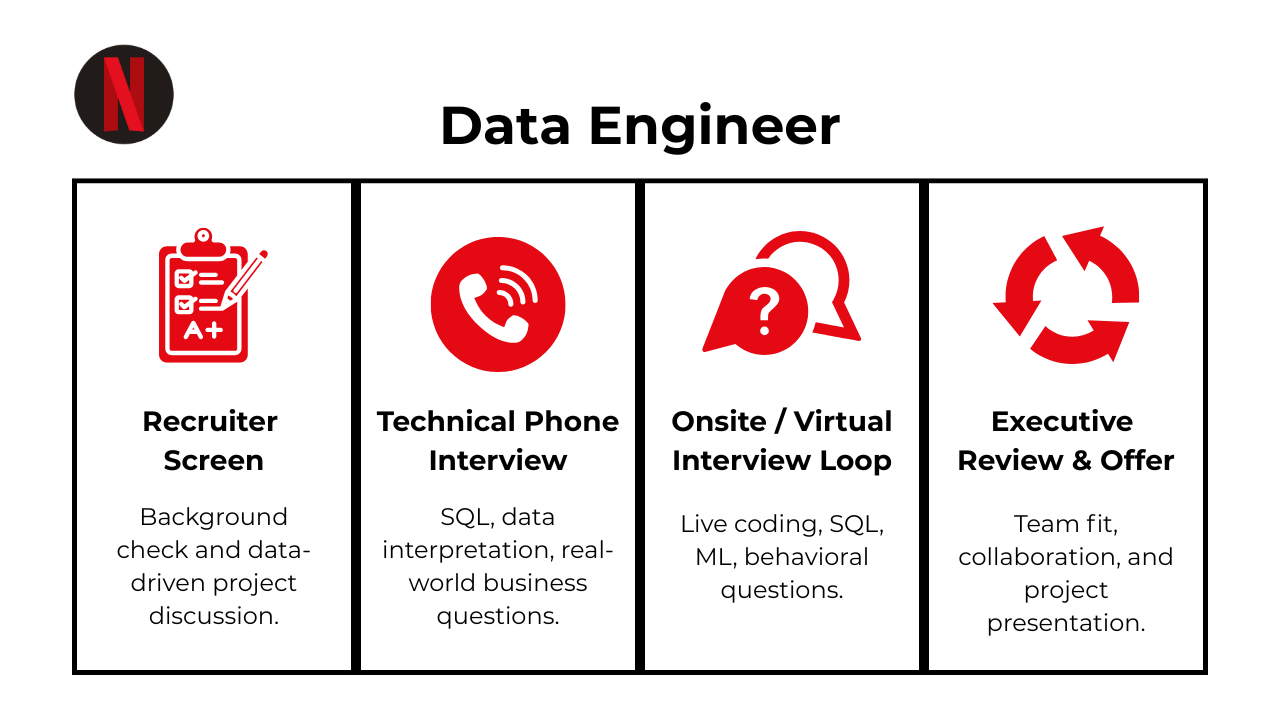

What Is the Interview Process Like for a Data Engineer Role at Netflix?

The Netflix data engineer interview process kicks off with an initial recruiter conversation and moves swiftly through technical screens to on-site loops, ensuring you’re assessed comprehensively and given prompt feedback. If you’re weighing Netflix against other best data engineering companies, you’ll appreciate our focus on real-world scale and end-to-end ownership for data engineers.

Application & Recruiter Screen

Your journey begins with a brief call where a recruiter gauges résumé alignment, role motivations, and culture fit. Expect questions about your past data projects and familiarity with streaming or large-scale ETL—key for any data engineer Netflix candidate aiming to join our global team.

Technical Screen

Next, you’ll tackle a timed assessment—often a HackerRank-style quiz combining SQL puzzles, Python scripting, and data modeling scenarios. The goal is to verify your ability to write efficient queries, handle complex joins and window functions, and prototype transformation logic under time constraints.

Virtual On-Site Loop

In the virtual loop, you’ll engage with multiple interviewers across coding challenges, data architecture exercises, and behavioral discussions. You might be asked to design a partitioning strategy for Netflix’s event logs or walk through optimizing a Spark job for real-time recommendations.

Hiring Committee & Offer

After interviews, your packet—comprising interview feedback, work samples, and recruiter notes—goes to a cross-functional hiring committee. They calibrate levels, discuss comp bands, and make the final decision, typically within a week of your on-site.

Behind the Scenes

Interviewers submit detailed feedback within 24 hours of each stage, allowing recruiters to share timely updates. Compensation is benchmarked against our top-of-market guidelines, ensuring offers reflect both your expertise and Netflix’s high-performance standards.

Differences by Level

Junior data engineers (L4–L5) focus more on coding and fundamental pipeline tasks, while senior tracks (L5+) include an architecture deep dive, leadership discussions, and questions about influencing cross-team data strategies—demonstrating the broader impact expected at higher levels.

What Questions Are Asked in a Netflix Data Engineer Interview?

When gearing up for Netflix data engineer interview questions, you’ll find the assessments span from low-level coding checks to high-level architectural discussions. Below is an overview of the major categories and the kinds of challenges you can expect, complete with common themes from data engineering questions at Netflix.

Coding / Technical Questions

In this section, interviewers probe your hands-on skills with real-world data tasks. You might be asked to optimize a distributed ETL pipeline to gracefully handle late-arriving events, or to craft a SQL window function that surfaces trending shows. When discussing schema evolution, expect to explain strategies from a Netflix data modeling interview perspective—how you’d debug Kafka stream schema changes without downtime. Many data engineering questions at Netflix also test your ability to write efficient Python or PySpark transformations under tight performance constraints.

Write a SQL query that returns the 2nd-highest salary in the engineering department.

Filter the

employeestable todepartment = 'Engineering', use a window function such asDENSE_RANK()or a correlated sub-query to rank distinct salary values in descending order, and pick the row where rank = 2. A robust answer covers salary ties, NULL handling, and why an index on(department, salary DESC)keeps the scan efficient.Determine whether two axis-aligned rectangles overlap given four corner points each.

Normalize every rectangle to

(min_x, max_x, min_y, max_y)and returnTrueunless one lies completely left/right or above/below the other. Edge and corner-touch cases count as overlap, so the Boolean check is the negation of those non-overlap conditions. Constant-time logic and clear boundary reasoning are what interviewers look for.missing_number(nums)— find the single missing value in the range 0…n in O(n) time and O(1) space.Leverage the arithmetic-series identity

n × (n+1)/2(or XOR accumulation) and subtract the actual sum of the array. Highlight why the approach is linear, memory-light, and immune to integer ordering.most_tips(user_ids, tips)— return the user who tipped the most.Zip the two lists, aggregate tips per user in a dictionary, then track the max as you iterate. Clarify how you handle ties (e.g., earliest or lexicographically first user) and confirm O(n) time with minimal extra space.

Compute first-touch attribution for every user who converted.

Join

attributionanduser_sessions, filterconversion = true, then withROW_NUMBER() OVER (PARTITION BY user_id ORDER BY session_ts)pickrow_number = 1to capture the discovery channel. Mention deduping equal timestamps and indexing(user_id, session_ts)for petabyte-scale logs.Select a random element from an unbounded stream in O(1) space.

Describe reservoir sampling of size 1: keep the first value, and for the k-th item replace the stored value with probability 1/k. Provide the inductive proof of uniformity and note how this pattern underpins log sampling and telemetry pipelines.

Detect overlap among a user’s completed subscription periods.

Self-join the

subscriptionstable onuser_idwith astart_a < end_b AND start_b < end_acondition anda.id <> b.id. Wrap inEXISTSor aggregate withBOOL_OR()to output a per-user TRUE/FALSE flag. Indexing on(user_id, start_date, end_date)keeps the join feasible on large histories.Find the five most-frequent paired products purchased together.

Explode each

transaction_idinto product pairs via a self-join, enforcep1 < p2to avoid duplicates, count occurrences, order by count DESC, and limit 5. Discuss deduplicating billion-row intermediates with temp tables or approximate sketches for production scale.Return the last transaction of each calendar day.

Use

ROW_NUMBER() OVER (PARTITION BY DATE(created_at) ORDER BY created_at DESC)and filter forrow_number = 1, returningid,created_at, andtransaction_value. Mention casting to DATE and why a composite index on(created_at)accelerates the partitioned sort.List the top-3 salaries per department with employee full name and department name.

Join

employeestodepartments, applyDENSE_RANK()partitioned by department ordered by salary DESC, filter for ranks ≤ 3, and sort results by department ASC then salary DESC. Stress how this window-function pattern simplifies leaderboard logic and scales with proper(department_id, salary DESC)indexing.

System / Data Architecture Design Questions

Here, you’ll demonstrate your grasp of large-scale infrastructure and resiliency patterns. You might outline a petabyte-scale S3 → Spark → Redshift pipeline, choosing optimal partition keys for high-cardinality event data. Discussions often touch on GDPR delete workflows in real time or strategies for replaying event streams without data loss. These scenarios highlight your ability to architect for Netflix big data analytics and guarantee pipeline idempotency under heavy load.

-

Foreign-key constraints preserve referential integrity at write time, preventing orphaned rows and enabling the optimizer to choose tighter joins.

CASCADEis appropriate when the child record is meaningless without the parent (e.g., order-line items tied to a deleted order);SET NULLworks when the child row can stand alone but should lose its association (e.g., “last_viewed_movie_id” on a user profile). Calling out index requirements on both sides and the slight write-time overhead shows pragmatic judgment. Design an end-to-end global e-commerce warehouse architecture: ETL + reporting.

Clarifying questions span cross-border tax rules, SLAs for vendor dashboards, multi-currency handling, and latency targets for inventory updates. A typical answer proposes an event-stream (Kafka / Kinesis) ingest layer feeding partitioned cloud object storage (S3 / GCS) and a columnar warehouse (Snowflake, BigQuery) with dbt-managed transforms. Fact tables like

inventory_snapshotandorders_fctpower Looker or Tableau semantic layers; regional read replicas keep GDPR and data-locality constraints. CDC from OLTP into the lake via Debezium plus incremental materializations keeps nightly jobs under control.Golden Gate Bridge car-timing data: schema + “fastest car today” queries.

Store an entry in

crossings(car_id, entry_ts, exit_ts, make, license_plate). A viewcross_time AS (exit_ts‒entry_ts)lets you pullORDER BY cross_time ASC LIMIT 1for today. To find the make with the best average, aggregateAVG(cross_time)grouped bymake, filter to today, and pick the MIN. Partitioning onentry_tskeeps hot-day queries in memory while historical partitions live colder.Single-store fast-food DB: schema + top-items + “drink attach rate.”

Core tables:

menu_items,orders,order_itemswith a lookup oncategory(“drink”, “entree”, etc.). Yesterday’s top-three revenue items come from summingprice*qtyinorder_itemsjoined toordersfiltered onorder_date = CURRENT_DATE–1. Drink attach rate isCOUNT(DISTINCT order_id WHERE category='drink') ÷ COUNT(DISTINCT order_id).Build an hourly/daily/weekly Active-User pipeline that refreshes every hour.

Land raw events into a lake, then run Spark-structured-streaming (or Flink) to produce an hourly incremental fact table keyed by

(date_hour, user_id). Rollups to day- and week-level materialized views are incremental—merge-upsert on change sets—so only the most-recent hour’s partitions re-aggregate. A task-orchestration layer (Airflow) governs dependency DAGs, and backfills are idempotent via partition deletes + re-writes.Evolving an address-history schema that tracks occupants over time.

Introduce an

addressesdimension with surrogate PK, and ahousehold_history(user_id, address_id, move_in_date, move_out_date)fact.move_out_dateNULL signals current residence. Composite(user_id, address_id, move_in_date)key prevents duplicates; a check constraint guaranteesmove_out_date > move_in_date. Temporal querying becomes straightforward with range-overlap predicates.If you were to architect a YouTube-scale video recommendations, how would you go about it?

Decompose into candidate-generation (ANN on embedding store), multi-tower ranking (deep model using watch history, freshness, and diversity), and post-ranking business rules (age limits, regional blocks). Feature store caches user and video embeddings in Redis-like KV with TTL. Offline Spark jobs retrain embeddings nightly; a real-time feedback loop logs watch events to Kafka and increments embeddings via Lambda-style micro-updates. Guardrails include fairness, cold-start fallbacks, and explainability hooks.

Plan a migration from a document DB to a relational store for a small social network.

Enumerate entities (users, friendships, likes) and design 3NF schemas with junction tables (

friendships(user_id_a, user_id_b, created_at)). A dual-write or CDC bridge keeps both systems live while backfills stream historical JSON into staging tables, validated via row-count and checksum parity. De-normalization (e.g., friend-count materialized against friendships) prevents n-plus-one read storms.Cost-aware click-stream solution for 600 M events/day with two-year retention.

Raw Avro/Parquet lands in S3 with partitioning

dt=YYYY-MM-DD/hr=HH. Glue/BigQuery external tables serve ad-hoc queries; frequent dashboards rely on aggregated rollups (session-level, page-view fact) stored in a columnar warehouse tier. Lifecycle policies tier cold partitions to Glacier/Archive after 90 days. Athena/Presto on-demand keeps OpEx low vs. always-on clusters.Design a Tinder-style swiping-app schema and highlight optimizations.

Core tables:

users,swipes(from_user, to_user, is_right, ts),matches(match_id, user_a, user_b, created_ts), plusmessages. Hot-path optimizations include Redis bloom filters to avoid re-surfacing prior dislikes and a composite(from_user, ts DESC)index to fetch recent swipes. Partitioningswipesbyfrom_user % 1024evens shard load.Design an ML system to minimize wrong or missing orders in a food delivery platform.

Capture structured order meta-data (merchant, items, prep time) and image/IoT sensor signals from restaurants/drivers. A feature-store writes normalized features to Cassandra with TTL; an online model scores risk pre-delivery and triggers confirmation flows. Periodic offline retraining consumes labeled incidents from a QA table, and an experiment-service gates rollout. KPIs: incident rate, false-positive driver pings, and complaint-handling cost.

End-to-end parking-spot-finder system design.

Assumptions: ≤100 K spots city-wide, updates every 30 s. Raw CSV/JSON availability feeds land in Kafka, processed by a stream job that upserts

(spot_id, lat, lon, is_available, updated_ts)into a low-latency store (DynamoDB / Spanner). A separate analytical pipeline snapshots state changes into time-partitioned Parquet for occupancy heat-maps. Geospatial queries leverage H3/quad-key indexing so the API can return open spots within radius in <100 ms.

Behavioral & Culture-Fit Questions

Within Netflix’s “Freedom & Responsibility” ethos, you’ll also discuss how you’ve owned critical data jobs end-to-end. Interviewers look for examples of navigating ambiguous requirements under tight deadlines, owning a failed pipeline and clearly communicating its business impact, and persuading stakeholders to adopt new tools or processes. These conversational Netflix data engineer interview prompts assess your judgment, collaboration style, and readiness to thrive in our fast-paced environment.

Describe a data project you worked on. What were some of the challenges you faced?

Choose a project that required moving or transforming large data sets—for example, migrating legacy ETL to a streaming architecture or backfilling petabytes into Iceberg tables. Detail the toughest hurdles (schema-drift, GDPR constraints, runaway costs) and the debugging or redesign steps you took. Emphasize how you balanced speed of delivery with Netflix-grade reliability, and call out measurable wins such as runtime cuts or cost reductions.

What are some effective ways to make data more accessible to non-technical people?

Discuss how you surface metrics through curated Looker dashboards, semantic layers, or self-serve notebooks with data contracts that guarantee column meaning. Explain how you partner with PMs and creatives to co-define “single-source-of-truth” tables, add plain-language metadata, and schedule data-literacy clinics. Highlight tooling (e.g., DataHub lineage, dbt docs) that prevents misinterpretation while keeping the pipeline agile enough for rapid experimentation.

What would your current manager say about you, and what constructive feedback might they offer?

Anchor strengths in core data-engineering competencies—e.g., “They’d say I’m the go-to person for optimizing Spark jobs and mentoring new hires on partitioning strategy.” For growth areas, reference something adjacent (perhaps over-indexing on perfection before shipping) and describe actions you’ve taken—such as setting explicit ‘good-enough’ SLAs or pairing with SREs on observability. This shows self-awareness and a bias toward continuous improvement.

Talk about a time you had trouble communicating with stakeholders. How did you overcome it?

Pick a scenario where data latency or metric definitions were misunderstood by marketing or content teams. Outline how you surfaced root causes with probing questions, translated technical jargon into business impact, and agreed on next-step instrumentation or dashboards. Underline the outcome—fewer ad-hoc requests, faster decision cycles, or less metric thrash.

Why did you apply to our company, and what makes you a good fit?

Tie your answer to Netflix’s data culture—real-time experimentation, micro-service ownership, and the freedom-and-responsibility ethos. Reference specific engineering blog posts (e.g., Iceberg, Keystone) that excite you and show how your background in streaming ETL, cost-optimization, or ML-feature stores maps to ongoing initiatives. Finish with how you’ll help scale insights that keep members “joyfully bingeing” worldwide.

How do you prioritize multiple deadlines, and how do you stay organized when juggling competing tasks?

Describe a framework—impact vs. effort scoring, RICE, or Netflix’s context-not-control style—to triage pipeline outages, schema-change reviews, and feature requests. Explain tooling: JIRA swim-lanes for sprint planning, On-call rotation runbooks, and alert dashboards tied to SLO breaches. Show that you revisit priorities whenever upstream teams shift roadmaps, keeping communication tight but overhead light.

Tell me about a time you reduced cloud spend without sacrificing performance. What trade-offs did you evaluate?

Netflix’s data bills are enormous; interviewers want to know you can profile expensive queries, implement partition pruning, leverage spot instances, or introduce auto-scaling—and that you measured the savings against any latency or reliability impact.

Describe a situation where a data-quality issue escaped into production dashboards. How did you handle the remediation and prevent recurrence?

Highlight incident-response steps: swift root-cause analysis, backfilling corrected partitions, stakeholder notifications, and adding unit tests / data contracts or Great Expectations checks to block future bad loads. Emphasize ownership and lessons learned rather than blame.

How to Prepare for a Data Engineer Role at Netflix

Before your interviews, you’ll want a targeted prep plan that sharpens both your technical chops and your storytelling.

Deep-Dive Netflix Open Source

Study core projects like Iceberg, Mantis, and Keystone to understand Netflix’s in-house solutions. Being able to reference these tools shows you’re ready to hit the ground running.

Master SQL + PySpark

Since about 40 % of your technical screen hinges on query and transformation skills, practice complex joins, window functions, and PySpark DataFrame optimizations. These exercises mirror the Netflix data engineer interview questions you’ll face.

Review Streaming Design Patterns

Brush up on event-time processing, watermarking, and stateful stream joins. Exactly once mention of Netflix data modeling interview techniques here will help you articulate best practices for real-time pipelines.

STAR Your Culture Stories

Frame your anecdotes around ownership, impact, and how you embraced responsibility. Netflix values engineers who can connect technical work back to business outcomes.

Mock Interviews

Simulate two coding sessions, one system-design exercise, and a behavioral round with peers or mentors. Doing full-loop dry-runs familiarizes you with the pace and depth of the real interviews. Utilize Interview Query’s mock interview services.

With focused practice on core data engineering topics, a solid grasp of Netflix’s architecture, and compelling STAR stories, you’ll be well on your way to mastering the Netflix data engineer interview process.

FAQs

What Is the Average Salary for a Data Engineer at Netflix?

Average Base Salary

Average Total Compensation

Netflix data engineer salary bands are structured to reflect top-of-market compensation philosophies. Mid-level data engineers receive competitive base pay plus equity refreshers, while senior roles align with Netflix senior data engineer salary benchmarks for L5/L6 levels. When comparing offers, data engineer salary Netflix figures often position Netflix above peers once stock and bonus components are included.

Is Netflix One of the Best Data Engineering Companies?

Netflix is consistently rated among the best data engineering companies thanks to its culture of innovation, autonomy, and scale. Data teams build exabyte-scale pipelines under a “Freedom & Responsibility” ethos, driving rapid iteration and real-time insights that power personalization for over 260 million members. This environment attracts engineers eager to solve complex challenges with cutting-edge tools.

Where Can I Practice Netflix-Style Data Engineering Questions?

For targeted practice, explore Interview Query’s extensive question bank featuring real-world scenarios modeled after Netflix’s interviews. The dedicated section for Netflix data engineer interview questions covers ETL optimization, streaming architectures, SQL transformations, and system-design cases—exactly the skills you’ll need to excel.

Does Netflix Require a Coding Test for Senior Data Engineers?

Yes—senior candidates typically complete a rigorous coding assessment focused on advanced pipeline scenarios alongside the standard technical screen. Discussion of senior data engineer Netflix salary often arises during compensation conversations, reflecting the elevated expectations and deeper domain expertise required at higher levels.

Conclusion

Mastering the Netflix data engineer interview flow and drilling these role-specific questions will dramatically boost your odds of securing an offer. For a comprehensive view of the hiring journey, visit our Netflix Interview Process overview. You can also deepen your prep with our Netflix Data Scientist Interview Guide or jump into a full IQ mock-interview coaching session.

As Hanna Lee’s success story shows, consistent practice and targeted preparation are key—begin your path today with the Data Engineering Learning Path.