Netflix Machine Learning Engineer Interview Guide (2025) – Salary, Questions, Process

Introduction

Netflix’s Machine Learning Engineers are at the heart of creating personalized, engaging experiences for over 260 million members worldwide. Navigating the interview process for this role requires not only algorithmic and coding proficiency but also the ability to design scalable ML systems, craft rigorous experiments, and maintain production-grade reliability under ambiguity. This guide demystifies each stage of the Netflix hiring journey—from the recruiter screen and technical assessments to onsite rounds and the final hiring committee—so you know exactly what to expect.

You’ll find detailed breakdowns of the key technical topics, system-design scenarios, and behavioral prompts that Netflix uses to evaluate candidates. Alongside sample question types, we offer targeted preparation tips, mock-interview strategies, and insights into Netflix’s unique Freedom & Responsibility culture. Whether you’re transitioning from data science, software engineering, or research, this guide will help you align your strengths with Netflix’s high bar for Machine Learning Engineers.

Role Overview & Culture

The Netflix machine learning engineer interview evaluates your ability to own real-time training pipelines that underpin content recommendations, dynamic thumbnail personalization, and experiment-driven A/B tests. In this role, you will collaborate with data scientists, site reliability engineers, and product teams to build high-throughput data architectures capable of serving hundreds of millions of users per second. Netflix’s Freedom & Responsibility culture empowers you to select your tools, prototype rapidly, and iterate based on live performance metrics. You’ll need to demonstrate not only coding and algorithmic skills but also a deep understanding of distributed systems, model deployment strategies, monitoring, and continuous integration. As a machine learning engineer Netflix, you’ll work hands-on with petabyte-scale datasets and own the end-to-end lifecycle of ML features.

Why This Role at Netflix?

Joining as a Netflix machine learning engineer offers the opportunity to innovate at unprecedented scale, shipping models directly to over 260 million subscribers. You’ll gain exposure to cutting-edge ML research in areas like causal inference, deep learning, and streaming analytics, while receiving generous stock-based compensation and rapid career growth. Netflix values engineers who can translate research breakthroughs into production systems, balancing exploration with reliability. To thrive in the Netflix machine learning interview, you’ll need to articulate not only your technical depth but also your business impact and decision-making under ambiguity. Let’s break down the interview stages and equip you with the insights to land this high-impact role.

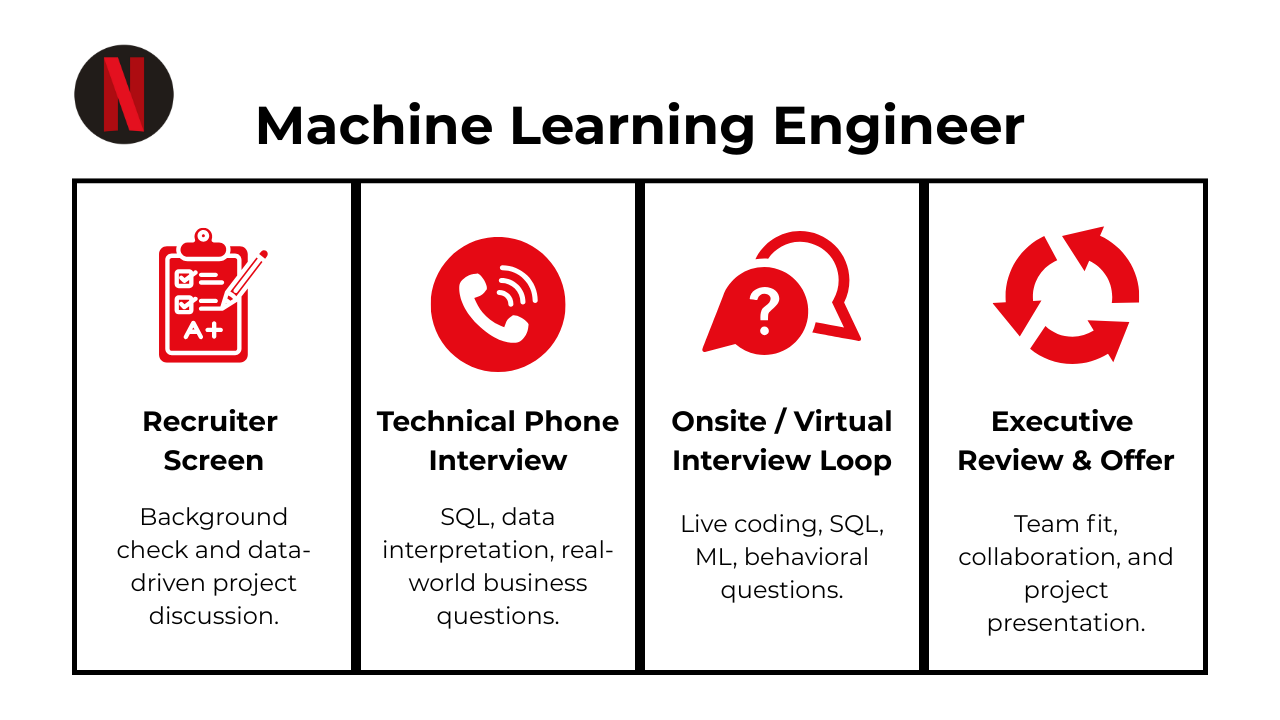

What Is the Interview Process Like for a Machine Learning Engineer Role at Netflix?

The Netflix machine learning engineer interview typically unfolds over four main stages, each designed to assess different facets of your technical expertise, system-thinking, and cultural fit. Below is a concise overview of what to expect at each step, followed by insights into how Netflix evaluates candidates behind the scenes and at different seniority levels.

Application & Recruiter Screen

Your journey begins with a short call to confirm résumé fit, motivation for joining Netflix, and basic eligibility. Recruiters will probe your background in machine learning, distributed systems, and previous impact on production services, ensuring alignment with Netflix’s Freedom & Responsibility culture.

Technical Screen

Successful candidates receive a take-home modeling quiz paired with a live coding exercise in Python. The quiz often covers topics like feature engineering, model evaluation metrics, and data preprocessing, while the coding portion tests your ability to implement algorithms cleanly under time constraints.

Virtual On-Site

This loop usually consists of one ML system design interview (e.g., architecting online training pipelines), one algorithmic coding session, and one behavioral discussion focusing on collaboration and problem-solving. Expect deep dives into trade-offs between model complexity, latency, and maintainability in production.

Hiring Committee & Comp Review

After passing the loop, your packet—comprising interviewer feedback and work samples—is reviewed by a cross-functional panel. Decisions balance technical performance, cultural alignment, and market benchmarks to determine your compensation and level.

Behind the Scenes

Interviewers submit scores within 24 hours of each loop. A centralized packet review aggregates evaluations across performance dimensions, ensuring consistency and rigor. Compensation is then calibrated against peer benchmarks and Netflix’s top-of-market pay philosophy.

Differences by Level

At more senior levels, candidates face an additional ML architecture deep-dive that explores operationalizing models at scale, including monitoring, rollback strategies, and feature store design—part of the broader Netflix MLE interview for leadership roles.

Next Steps

Understanding each stage of this process allows you to tailor your preparation effectively, focusing on the right mix of coding, design, and behavioral strategies. Up next: detailed question breakdowns and preparation tips to help you excel.

What Questions Are Asked in a Netflix Machine Learning Engineer Interview?

In the Netflix machine learning interview, candidates can expect a mix of coding challenges, system design discussions, and behavioral prompts that reflect real-world MLE responsibilities. Below is an overview of each category and why these questions matter.

Coding / Technical Questions

These problems assess your ability to implement algorithms and data pipelines under production constraints. Interviewers look for clean, efficient code in languages like Python or Scala, as well as your understanding of numerical stability, vectorization, and streaming data frameworks. Excelling here demonstrates your readiness to write high-quality, maintainable code for Netflix’s real-time personalization and recommendation systems.

Rotate a matrix 90-degrees clockwise in place.

Treat the 2-D array as concentric “rings.” For each ring, swap the four corresponding elements (top → right → bottom → left) one layer at a time, iterating from the outer border toward the center. This avoids extra memory and runs in O(n²) time for an n×n matrix. Correct indexing and walking only half of the ring length prevent double swaps. The problem exercises low-level array manipulation—handy when implementing custom kernels or memory-tight preprocessing inside ML pipelines.

Return the missing number in a 0…n sequence.

Sum of 0 … n equals n × (n + 1)/2; subtract the actual array sum to get the missing value in O(n) time and O(1) space. For huge ranges where overflow is a concern, XORing all indices with all elements yields the same answer without large integers. Edge cases include the missing value being 0 or n. This is a classic bit-manipulation warm-up that confirms comfort with arithmetic tricks useful in optimized data loaders.

Implement a priority queue with a singly-linked list.

Maintain the list in descending-priority order:

insert()scans until the correct slot and splices the new node;peek()returns the head;delete()pops the head, all in O(k) worst-case time where k is queue length. Stable tie-breaking is preserved because new elements are appended after same-priority nodes. Graceful handling of empty states returnsNone. Though a heap is faster, understanding linked-list trade-offs builds intuition for custom queueing inside streaming inference systems.Write

isMatch(s, p)supporting.and regex operators.Build a DP table where

dp[i][j]stores whethers[:i]matchesp[:j]. For*, propagate truth fromdp[i][j-2](zero repeats) or, if preceding char matches, fromdp[i-1][j](consume one). The dot matches any single character. Initialization handles patterns likea*. Time and space are both O(|s|·|p|). This demonstrates dynamic-programming fluency often reused in sequence-model loss functions or tokenizer code.Simulate drawing balls from a jar given color counts.

Convert counts to a cumulative range, pick a random integer in

[1, total], and binary-search to map it back to a color—yielding sampling proportional to weight in O(log m) where m = colors. Adjust counts and total after each draw to support sampling without replacement. The routine mirrors weighted reservoir sampling for negative-sampling or class-balanced mini-batch creation.Select a random element from an unbounded stream in O(1) memory.

Apply reservoir sampling of size 1: keep the first element, and for the k-th item replace the stored value with probability 1/k. Inductive proof shows every element has equal 1/N probability after N items. This algorithm underpins online logging and unbiased data collection for continual-learning pipelines.

-

Use two pointers that advance whichever current element is smaller, appending to an output list until both inputs are exhausted. The time complexity is O(n + m) and memory O(n + m) (or O(1) if splicing nodes in linked-list form). This pattern is foundational for external merge-sort in large-scale ETL jobs.

Compute business days between two dates.

Convert strings to

datetime, iterate or use arithmetic to count weekdays excluding weekends and optionally a holiday set. A math shortcut: total days minus 2 × full-week count minus weekend adjustments for the partial week. This type of date math surfaces in churn or renewal-lag features for subscription-ML models.-

A single nested loop (or NumPy’s

np.sum) aggregates in O(r × c). Show awareness of integer overflow and how vectorized BLAS instructions accelerate the scan. Although trivial, it checks understanding of memory layout and reduction operations essential for custom CUDA kernels. Monthly user, order, and GMV report for 2020 (SQL).

Generate a calendar spine of 2020 months, left-join transactions,

COUNT(DISTINCT user_id),COUNT(*)orders, andSUM(order_total)revenue, grouping bymonth_start. Filtering to 2020 ensures 12 rows. Windowing or CTEs keep the query readable. Efficient date-dim usage proves you can build reliable feature backfills.Find all number combinations that sum to N.

Sort the list, then recurse with backtracking: choose an element, subtract from target, and explore, skipping duplicates and halting when the remaining sum is negative. Store valid paths when target hits zero. Complexity is exponential in worst case, but pruning and early stopping keep average cases fast. Such combinatorial reasoning appears in hyper-parameter grid generation and feature-subset search.

Implement a random-forest classifier from scratch without scikit-learn.

Enumerate all feature permutations to build deterministic decision trees that split on the query point’s value; each tree votes, majority class wins. Use Pandas for data slicing and NumPy for vector ops. While exponential, the toy constraint showcases understanding of bagging, ensemble bias-variance, and tree traversal—knowledge useful when extending ML libraries or debugging prod models.

Identify intersecting lines within an x-range.

Two lines

y = m₁x + b₁andy = m₂x + b₂intersect ifm₁ ≠ m₂and the x-coordinate of intersectionx_int = (b₂ − b₁)/(m₁ − m₂)lies inside the given range. Brute-force all pairs and store intersecting ones; optimize with slope buckets to prune parallel candidates. Edge cases include vertical overlaps (equal slopes) and shared intercepts. This geometric reasoning is handy for computational-geometry preprocessing in AR/VR and graphics-heavy ML tasks.

System / Product Design Questions

You’ll be asked to architect end-to-end machine-learning solutions—such as an online–offline training loop with real-time feedback or a scalable feature store with sub-minute update latency. Discussion points include data ingestion, model versioning, monitoring, and canary rollout strategies. These exercises evaluate your ability to design robust, low-latency pipelines at Netflix’s massive scale and highlight key considerations in a Netflix machine learning engineer interview.

How would you architect a large-scale video-recommendation system?

Lay out an end-to-end pipeline: event ingestion → real-time feature store → two-tower candidate generator (user × video embeddings) → ranker → on-device caching. Emphasize incremental training on watch, impression, and abandonment signals; hard-negative mining to avoid popularity bias; and multi-objective ranking that balances watch-time, freshness, and content diversity. Discuss safeguards—age-appropriateness, creator fairness, topic fatigue—and offline/online evaluation (A/B holdouts, counter-factual logging). Mention latency budgets (<200 ms) and the need for hierarchical caches at CDN edges to keep tail-latency low.

Design an algorithm for type-ahead search suggestions (e.g., Netflix title autocomplete).

Propose a two-stage system: a trie or FST index for millisecond prefix lookup that surfaces a few hundred candidates, followed by a neural ranker that scores them with signals such as personalized watch history, global popularity, and lexical edit distance. Describe query-level features (language, device, time of day) and document features (recent trend velocity, genre). Address cold-start (fastText embeddings, popularity back-off), spelling mistakes (Damerau–Levenshtein), and multilingual tokenization. Evaluation uses query-CTR, mean reciprocal rank, and head-tail coverage to ensure niche titles still surface.

Build a classifier that predicts the best moment for an ad break inside a long-form video.

Frame it as a sequence-labeling task over shot or scene boundaries. Inputs include visual scene-change scores, audio energy, subtitle sentiment, and historical skip/back-seek positions. Model choices: Bi-LSTM + attention or temporal CNN feeding a sigmoid that outputs “ad-eligible” probability per second; post-process with a minimum-distance constraint to avoid back-to-back ads. Label data can be derived from editor-chosen breaks or user drop-off spikes. Guardrails: never interrupt dialogue mid-sentence, cap total ad load per minute, and provide a confidence threshold to editors for manual review.

Outline an ML pipeline for Spotify-style “Discover Weekly” playlist generation.

Combine collaborative filtering (user-track matrix factorization) to get seed candidates, content-based similarity on acoustic features/lyrics for cold-start tracks, and a diversification step (Maximal Marginal Relevance) to avoid artist clumping. Use a re-ranker trained on historical skip, save, and playlist-add signals to predict 30-track slate utility. Refresh embeddings nightly but re-rank on demand so the playlist adapts if a user binges a new genre mid-week. Success metrics include overall listen-through-rate and discovery-weighted hours (time spent on songs the user had never streamed before).

Detect prohibited firearm listings on an online marketplace.

Start with a multimodal classifier: text CNN/BERT on title + description, image CNN fine-tuned on firearm vs. non-firearm, and metadata (price bands, category). Fuse logits with a small gradient-boosted tree. Address adversarial text (e.g., “pew-pew tool”) via character n-grams and continual-learning on enforcement feedback. Add a high-recall heuristic layer (blocked keywords, dense-vector similarity to known gun images) feeding human reviewers, while a high-precision auto-remove threshold keeps the marketplace clean. Monitor precision/recall drift as sellers adapt.

Design an ML system for automated unsafe-content detection on a social platform.

Present a multi-tier filter: ✧ hash-matching (PhotoDNA, SHA-1) for known bad media ⇒ ✧ real-time lightweight models on-upload (NSFW CNN, toxic-text BERT) ⇒ ✧ heavier ensemble in the cloud for borderline cases. Incorporate user-report signals into a continual-learning loop. For false-negative mitigation, run periodic back-fills on historical content using the latest model versions. Privacy constraints require on-device inference for certain regions; federated learning with secure aggregation can update weights without pulling raw data.

Predict hourly NYC-subway ridership with streaming data.

Ingest GTFS turnstile feeds into Kafka → stream through a Flink job that aggregates per station/hour → write to a feature store (BigQuery/Redis). Train an online ARIMA-XGBoost hybrid (short-term anomalies + seasonality baselines) updated every hour with the latest features (weather, events). Return predictions via a REST/gRPC service with s SLA; batch-retrain nightly to refresh global parameters. Non-functional: 99.9 % uptime, auto-scaling consumer groups, model-version rollbacks, and back-pressure handling when MTA feed lags.

Identify pitfalls when running sentiment analysis on r/WallStreetBets posts.

Challenges include sarcastic language (“to the moon”), ticker-symbol ambiguity (“YOLO”), class imbalance toward hype, and concept drift with meme coins. Label leakage from emoji or upvote counts can inflate metrics. Toxicity and profanity filters may misclassify legitimate sentiment. After deployment, monitor for adversarial pumping—users gaming the model by stuffing bullish keywords. Continuous evaluation with fresh manual labels and threshold calibration protects against mis-priced trading decisions.

Behavioral or “Culture Fit” Questions

Netflix values its Freedom & Responsibility culture, so expect prompts about handling model failures, navigating cross-functional disagreements, or leading experiments. For example, you might describe how you owned a production issue during a high-visibility launch or collaborated with product teams on KPI definitions. Referencing the ethos of autonomous decision-making and including Netflix MLE interview scenarios shows your alignment with Netflix’s principles.

Describe a data project you worked on. What were some of the challenges you faced?

Recount a project that shows end-to-end MLOps ownership—e.g., deploying a real-time model that personalizes thumbnails. Walk through the full lifecycle: defining the success metric, wrangling imperfect data, iterating on feature pipelines, scaling training jobs, and hardening the inference path. Highlight the thorniest hurdles you hit—schema drift, infra bottlenecks, or stakeholder scope-creep—and the concrete actions you took (backfills, feature stores, blue-green rollouts) to keep momentum. Emphasize how those lessons now inform your approach to reliability and experimentation.

What are some effective ways to make data more accessible to non-technical people?

Explain how you translate terabytes of log data into intuitive self-serve tools: opinionated dashboards with clear KPIs, lightweight metric-dictionary layers, and narrative weekly digests that pair charts with plain-language takeaways. Mention guardrails such as templated SQL snippets or Looker Explores that prevent foot-guns while still giving product and design the freedom to slice. Point out that “accessible” also means trustworthy—so you invest in lineage, row-level ACLs, and automated data-quality tests to ensure what stakeholders see is always fresh and consistent.

What would your current manager say about you, and what constructive feedback might they offer?

Anchor strengths in Netflix-style impact—perhaps your bias-for-action on model latency or knack for demystifying complex results in a memo. Balance it with a real growth area (e.g., you sometimes dive so deep on optimization that you initially under-communicate timelines). Show self-awareness by outlining the concrete steps you’re taking—standing “open issues” docs or lightweight async check-ins—to turn that feedback into progress.

Talk about a time you had trouble communicating with stakeholders. How did you overcome it?

Pick an example where model behavior conflicted with product intuition—say, a recommender that lowered short-term clicks but improved long-term retention. Describe how initial metric jargon created friction, so you reframed the conversation around simplified life-of-member diagrams, ran a small shadow experiment, and shared customer anecdotes. The result: stakeholders green-lit the rollout and you instituted a “pre-read & office hours” ritual that now prevents similar gaps.

Why do you want to work with us?

Tie your answer to Netflix’s unique ML surface area—petabyte-scale viewing signals, streaming A/B platform, and a culture that prizes context over control. Highlight how your experience shipping low-latency models or federated-learning pipelines lines up with Netflix’s personalization and studio forecasting roadmaps. Close by noting you’re drawn to the freedom-and-responsibility culture and the opportunity to own business-critical algorithms seen by hundreds of millions of members.

How do you prioritize multiple deadlines, and how do you stay organized when juggling competing tasks?

Outline your triage playbook: score each request by member impact × confidence × effort, slot work into a rolling two-week roadmap, and surface trade-offs during daily async stand-ups. You block focus time for model R&D, reserve buffer for on-call incidents, and rely on tools like Jira dashboards, RFC trackers, and automated Slack reminders. When priorities shift—say leadership asks for a fast cold-start fix—you transparently re-estimate and update the roadmap so dependencies stay visible.

Describe a moment you had to sunset an under-performing model. How did you make the call and drive alignment?

Explain the metrics (e.g., lift decay, infra cost) that flagged the issue, the A/B or causal analysis you ran to confirm regression, and the risk narrative you presented to product and finance. Walk through decommission steps—traffic shadowing, canary rollbacks, dataset archival—and how you captured learnings in a post-mortem to refine future model-review cadences.

Tell me about a time you mentored an engineer to productionize their first model. What was your approach and impact?

Detail how you paired on code reviews, walked them through feature validation checklists, and set up gating experiments. Emphasize the outcome: the mentee now ships autonomously, model latency met SLOs, and the practice scaled via internal “ML bootcamp” sessions you kicked off.

How to Prepare for a Machine Learning Engineer Role at Netflix

A focused preparation strategy is crucial to nail each component of the Netflix machine learning engineer interview. The following tips will help you structure your study plan effectively.

Study Netflix Open-Source ML Tools

Familiarize yourself with Metaflow for workflow orchestration, Netflix’s internal feature-store concepts, and Vectorflow optimizations. Demonstrating knowledge of these frameworks shows you can hit the ground running in Netflix’s engineering environment.

Balance Coding vs. ML Design

Allocate roughly 40 % of your prep time to algorithmic coding challenges and another 40 % to system and ML-architecture design. Reserve 20 % for behavioral rehearsals. This mix mirrors the interview’s weight distribution and ensures rounded readiness.

Think Out Loud & Ask Clarifying Questions

During practice sessions, verbalize your assumptions, hypotheses, and trade-offs. Interviewers appreciate candidates who treat problems methodically, check edge-case requirements, and seek clarity before diving into solutions.

Brute Force, Then Optimize

Showcase your problem-solving process by first outlining a straightforward, working approach and then iterating to a more efficient, vectorized or parallel solution. This illustrates your ability to deliver quick wins and refine for scale.

Mock Interviews & Feedback

Partner with former Netflix engineers or use Interview Query’s mock interview service to simulate the full loop. Solicit candid feedback on your technical explanations, architectural decisions, and cultural fit to sharpen your performance under real interview conditions.

FAQs

What Is the Average Salary for a Machine Learning Engineer at Netflix?

Average Base Salary

Average Total Compensation

Compensation at Netflix often includes a competitive mix of base pay, equity, and bonuses. The typical Netflix ML engineer salary sits at the top of market bands for early-career roles, while the Netflix machine learning engineer salary for mid-level positions reflects substantial stock refreshers. For senior engineers, the Netflix machine learning engineer range expands further to reward leadership and deep technical impact.

Are There Current Netflix MLE Job Postings on Interview Query?

You can always check our job board for MLE openings and access insider prep resources. See the latest roles and stay ready to apply as new positions become available.

Conclusion

Mastering the Netflix machine learning engineer interview format—by combining rigorous coding practice, system-design study, and cultural preparation—is the most direct path to receiving an offer. For further role-specific insights, explore our Netflix Data Engineer Interview Guide and Netflix Data Scientist Interview Guide.

Ready to put your skills to the test? Schedule a mock interview today and learn from candidates like Jeffrey Li.