Netflix Product Manager Interview Guide (2025) – Process, Questions & Salary

Introduction

Breaking into Netflix as a Product Manager means joining a team that builds experiences for over 200 million members worldwide. This guide will demystify the key question formats you’ll face and walk you through each stage of the Netflix interview process. Whether you’re focused on enhancing the viewer journey or empowering internal studio workflows, you’ll find targeted advice to help you stand out.

Role Overview & Culture

The Netflix product manager interview assesses your ability to own end-to-end roadmaps for both consumer-facing features and internal studio tools. At Netflix, PMs balance rigorous, data-driven decision-making with the creative risk-taking necessary to pioneer new entertainment formats. You’ll set vision and priorities under the company’s Freedom & Responsibility ethos—operating with minimal hierarchy, thriving on ambiguity, and driving bottom-up planning. Collaboration spans engineering, design, analytics, and content teams, ensuring rapid iterations aligned with viewer insights. Success in this role means delivering measurable impact while continuously experimenting and learning.

Why This Role at Netflix?

Today’s Netflix PMs tackle global personalization algorithms, ad-supported tier launches, and live-event integrations. The Netflix PM interview is known for rigorous product-sense debates, and compensation packages sit at the top of the market. In this role, you’ll influence strategic investments across content, UX, and platform infrastructure, shaping how stories reach audiences everywhere. Netflix’s commitment to transparency and high-velocity feedback empowers you to move from prototype to global rollout in weeks, not months. Let’s break down the Netflix product manager interview process.

What Is the Interview Process Like for a Product Manager Role at Netflix?

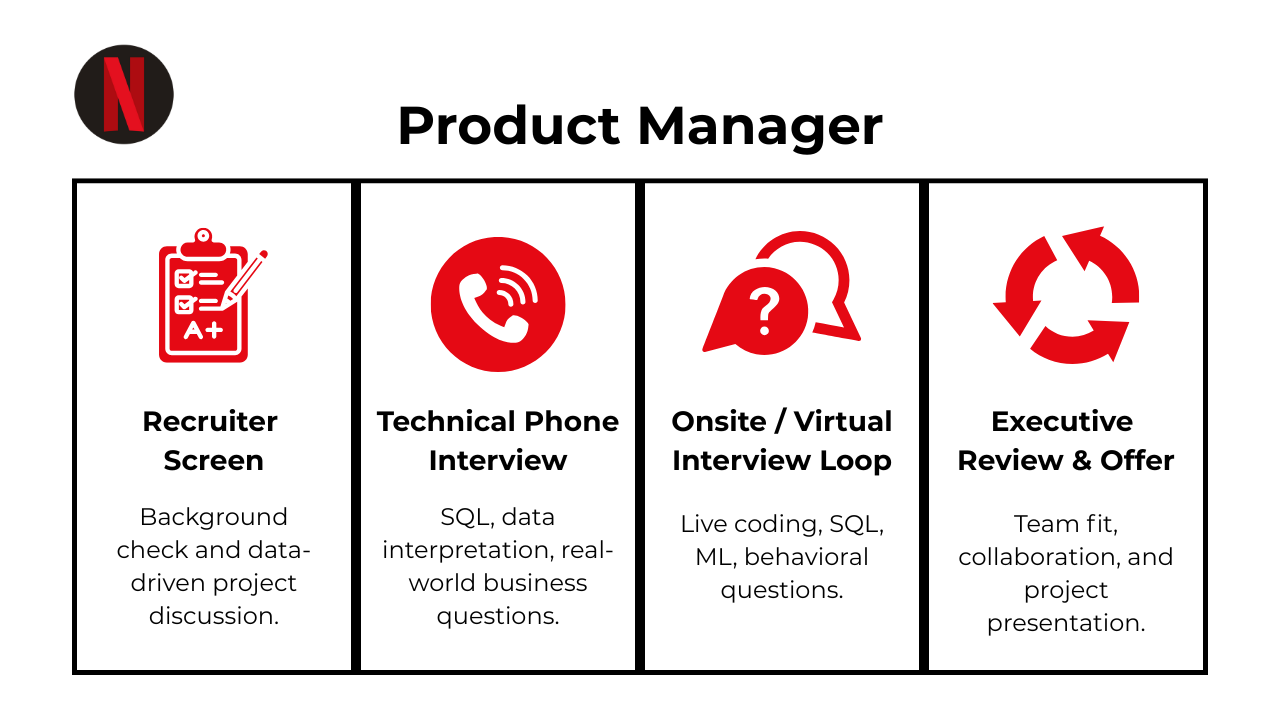

The Netflix product manager interview process typically unfolds over four main stages, each designed to assess a different facet of your product leadership. You’ll move from an initial screen through to an executive review, with rapid feedback loops and a high bar for “keepers.”

Recruiter Screen

Your journey begins with a 30-minute call focused on résumé fit, career motivations, and basic behavioral fit. Recruiters will probe your product background—what you’ve shipped, metrics you’ve moved, and why Netflix’s culture of freedom and responsibility appeals to you. This conversation sets expectations for timeline and next steps.

Product Sense + Strategy Screen

Next, you’ll tackle a 45–60 minute video interview centered on product vision and strategic trade-offs. Expect prompts to evaluate feature enhancements, prioritize investments (e.g., originals vs. international content), and articulate success metrics. Interviewers look for clear frameworks, user empathy, and the ability to balance creative risk with data-driven rigor.

Virtual On-Site

If you advance, you’ll complete four back-to-back remote sessions:

- Product Design (×2): Deep dives into end-to-end feature flows and UX considerations.

- Analytics & Experiments: Define A/B tests, choose North-Star metrics, and interpret hypothetical results.

- Culture & Leadership: STAR-style questions on candor, stakeholder influence, and Netflix values alignment.

Each round lasts about an hour, with minimal breaks to simulate the fast-paced environment.

Executive Panel & Offer

The final stage is a 60-minute discussion with senior leaders and hiring committee members. Here, you’ll defend your earlier recommendations, demonstrate cross-functional influence, and discuss long-term vision. Successful candidates receive an offer and enter final negotiation, typically within a week of this panel.

Behind the Scenes

All interviewers submit succinct feedback within 24 hours, feeding into Netflix’s “keeper test” mentality. A hiring committee then reviews calibrated scores and cultural fit evaluations to make the final decision.

Differences by Level

For Senior PM roles, expect an additional roadmap deep-dive and stakeholder-management scenario, emphasizing leadership at scale. Associate or entry-level candidates focus more on tactical execution and foundational product sense.

What Questions Are Asked in a Netflix Product Manager Interview?

Netflix product manager interview questions often revolve around defining user-focused features, setting strategic priorities, and driving measurable outcomes. In this section, you’ll learn how each question category maps to the skills Netflix expects from its PMs—creativity balanced with rigor, data fluency, and a culture-first mindset.

Product Design & Strategy Questions

These prompts evaluate your ability to envision new capabilities that delight audiences and differentiate Netflix in a competitive landscape. You’ll be asked to articulate clear problem statements, generate innovative solutions, and justify trade-offs—whether optimizing engagement on children’s profiles, choosing between content investments like live sports versus originals, or sizing new market opportunities for interactive programming.

-

Show two industry-standard approaches. (a) Churn-based: LTV ≈ ARPU ÷ churn = $100 ÷ 0.10 = $1,000, which assumes steady-state exponential decay. (b) Tenure-based: LTV = ARPU × average lifetime = $100 × 3.5 = $350, which reflects actual retention to date. Reconcile the gap by noting that a 3.5-month lifetime implies an effective churn of 1 ÷ 3.5 ≈ 29 %, not 10 %, so revisit the inputs or choose the definition leadership prefers. Mention adjusting for gross margin and discounting future cash flows if finance tracks LTV on a contribution or NPV basis.
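The arithmetic behind the two definitions, and the churn rate implied by the tenure figure, can be checked in a few lines. This is a minimal sketch using the illustrative inputs from the answer above; the function names are hypothetical and the margin and discounting adjustments are left out.

```python
def ltv_churn_based(arpu: float, churn_rate: float) -> float:
    """Steady-state LTV: ARPU divided by per-period churn."""
    if not 0 < churn_rate <= 1:
        raise ValueError("churn_rate must be in (0, 1]")
    return arpu / churn_rate


def ltv_tenure_based(arpu: float, avg_lifetime_periods: float) -> float:
    """Tenure-based LTV: ARPU times observed average lifetime."""
    return arpu * avg_lifetime_periods


def implied_churn(avg_lifetime_periods: float) -> float:
    """Per-period churn implied by an average lifetime (1 / lifetime)."""
    return 1.0 / avg_lifetime_periods


arpu = 100.0
print(ltv_churn_based(arpu, 0.10))    # 1000.0
print(ltv_tenure_based(arpu, 3.5))    # 350.0
print(round(implied_churn(3.5), 3))   # 0.286
```

Seeing the implied churn next to the stated 10 % makes the inconsistency in the inputs explicit before anyone argues about which LTV is "right."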

How can you measure acquisition success for Netflix’s 30-day free-trial funnel?

Start with trial-start → paid-conversion rate as the primary KPI, sliced by channel, device, and geography. Track day-N survival curves (D0, D7, D30) to reveal early churn, and compute payback period on acquisition spend versus lifetime value. Pair quantitative metrics with activation actions (e.g., first complete episode watched) to see which behaviors predict conversion. Guardrail metrics—trial abuse, refund rate, and CAC/LTV—ensure growth is sustainable. Dashboards should refresh daily so marketing can iterate quickly on offer wording and targeting.

Watch Next: Netflix Data Scientist Interview Question: Measure Free Trial Success with A/B Testing

When answering this question, it’s worth noting that not all users who convert from a free trial are equally valuable in the long term. In this video, Priya Singla, product data scientist at Meta and formerly at Twitter and Atlassian, gives a detailed breakdown of how to evaluate customer quality through retention and engagement metrics.

By watching this video, you will learn to differentiate between high-quality and low-quality subscribers by analyzing engagement patterns during and after the free trial period.

How would you analyze churn behavior for monthly ($15) versus annual ($100) subscription plans?

Plot Kaplan-Meier survival curves for each plan to visualise retention over equalized tenure. Compute cohort-normalized churn (monthly for both plans) and customer-lifetime revenues to expose economic trade-offs. Segment churn by engagement (hours watched, number of devices) and acquisition channel to spot vulnerable groups. Build a logistic-regression or gradient-boost model using tenure, price plan, content preferences, and engagement to predict cancel probability. Present a “best-next offer” table—e.g., discount coupons or plan downgrades—to guide retention campaigns.
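To make the survival-curve step concrete, here is a small Kaplan-Meier estimator written from scratch. It is a sketch under stated assumptions: the tenure data is made up, still-active subscribers are treated as censored, and `kaplan_meier` is a hypothetical helper rather than any particular library's API.

```python
from collections import Counter


def kaplan_meier(durations, churned):
    """Return [(t, S(t))] at each tenure t where at least one churn occurred.

    durations: tenure in months at churn or at end of observation.
    churned:   True if the subscriber cancelled, False if still active (censored).
    """
    events = Counter(t for t, c in zip(durations, churned) if c)
    exits = Counter(durations)      # everyone leaves the risk set at their duration
    at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(exits):
        d = events.get(t, 0)
        if d:
            survival *= 1 - d / at_risk
            curve.append((t, survival))
        at_risk -= exits[t]
    return curve


# Made-up monthly-plan cohort: tenure in months and whether the member churned.
monthly_curve = kaplan_meier(
    [1, 2, 2, 3, 5, 6, 6, 6],
    [True, True, True, True, False, True, False, False],
)
for month, s in monthly_curve:
    print(month, round(s, 3))
```

Running the same function on an annual-plan cohort and plotting both curves on a shared tenure axis gives the like-for-like retention comparison the answer describes.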

-

Use two subqueries that SELECT DISTINCT user_id from the mobile and web tables. UNION them into a temp table with a platform flag, then GROUP BY user_id to count how many distinct platforms each user touched. Aggregate again to calculate the share of users with platform_count = 1 (split into mobile-only and web-only using the platform flag) and platform_count = 2 (both). Divide by the total distinct-user count so the three percentages sum to 1. This cross-device breakdown highlights engagement gaps and prioritizes omnichannel fixes.

-

First, segment lapsed users by device, geography, content genre, and last-engagement date to locate clusters of similar behavior. Compare their pre-churn metrics (sessions, watch-time, NPS) with retained cohorts to isolate leading indicators. Survey or run in-app win-back prompts to capture qualitative reasons (price, content gaps, UI friction). Test targeted re-engagement experiments—e.g., personalized “What you’ve missed” emails, reactivation discounts, or push notifications about trending shows—and measure lift in return-to-service and downstream retention.

How would you determine if subscription price is truly the deciding factor for churn or conversion?

Combine price-sensitivity surveys (Van Westendorp) with observational data: correlate regional price tests or historical price changes with churn spikes, controlling for content releases and macro-factors. Run price A/B or geo-lift experiments where feasible and compare conversion, retention, and ARPU. Use a multinomial-logit or discrete-choice model incorporating catalog size, competitor prices, and household income to quantify the marginal effect of price versus other attributes. Validate by back-testing model predictions against real churn events.

-

Estimate incremental engagement uplift (hours watched, retention boost, new-subscriber acquisitions) attributable to the show using pre/post or synthetic-control methods. Convert that engagement to dollar value via LTV of newly acquired users and reduced churn savings among existing ones. Factor in substitution effects—does the show cannibalize viewing of your originals?—and opportunity cost if the budget could license fresh content. Build a discounted cash-flow model over the proposed term, subtracting projected royalty fees, to arrive at net present value. Sensitivity-test assumptions on view-decay curves and competitive availability.
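The discounted cash-flow step can be sketched as below. All figures are invented for illustration (values in millions), cash flows are assumed to land at year end, and `licensing_npv` and `decayed_values` are hypothetical helper names.

```python
def licensing_npv(annual_value, annual_fee, discount_rate):
    """NPV of (incremental value - licence fee), with year-end cash flows."""
    if len(annual_value) != len(annual_fee):
        raise ValueError("value and fee series must cover the same years")
    return sum(
        (value - fee) / (1 + discount_rate) ** year
        for year, (value, fee) in enumerate(zip(annual_value, annual_fee), start=1)
    )


def decayed_values(first_year_value, yearly_retention, years):
    """Incremental value per year if viewing decays geometrically."""
    return [first_year_value * yearly_retention ** y for y in range(years)]


fees = [90.0, 90.0, 90.0]
base_case = licensing_npv([120.0, 100.0, 80.0], fees, discount_rate=0.10)
print(round(base_case, 2))

# Sensitivity test: how quickly does the deal turn negative as viewing decays faster?
for retention in (0.9, 0.8, 0.7):
    npv = licensing_npv(decayed_values(120.0, retention, 3), fees, 0.10)
    print(retention, round(npv, 2))
```

The sensitivity loop is the part worth talking through in an interview: it shows which assumption the recommendation actually depends on.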

-

Validate the claim by recomputing trial-to-pay rates with consistent definitions and exclusion windows. Examine acquisition mix (paid ads vs. organic), payment-method success, and localization quality to see if funnel leaks differ. Compare engagement during trial—content preference, session depth—to identify behavioral drivers. Analyze macro factors: GDP per capita, local pricing, and competitor presence. Finally, test localized interventions (currency display, messaging tweaks, alternative payments) and measure whether they narrow the conversion gap.

Analytics & Experiments Questions

Netflix places heavy emphasis on data-driven decision-making. In this category, you’ll define North-Star metrics for product initiatives, outline robust A/B test plans, and demonstrate how you’d interpret results to inform iterative improvements. Interviewers look for clarity in metric selection, sound experimental designs, and an ability to connect analyses back to strategic business goals.

What are Z-tests and t-tests? When are they used, and how do you choose between them?

A Z-test compares two means when the population variance is known or the sample is large enough for the Central Limit Theorem to hold; a t-test does the same when variance is unknown and the sample is small. For a Netflix PM, Z-tests are typical in large-scale A/B experiments on millions of viewers, whereas t-tests fit small betas or segmented cohorts. The difference lies in the distribution of the test statistic (normal vs. Student-t) and the resulting confidence intervals. Selecting the right test ensures launch decisions rest on valid statistical evidence.
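As a rough illustration of the two cases, the sketch below runs a two-proportion Z-test for a large A/B experiment and computes Welch's t statistic for a small cohort. The numbers are made up. The standard library has no Student-t CDF, so the t-test stops at the statistic and degrees of freedom; a statistics package would supply the p-value.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided Z-test for a difference in conversion rates (large samples)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def welch_t_statistic(a, b):
    """Welch's t statistic and degrees of freedom (small samples, unknown variance)."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(b) - mean(a)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Large experiment: 100,000 users per arm.
z, p = two_proportion_z_test(10_000, 100_000, 10_300, 100_000)
print(round(z, 2), round(p, 3))

# Small beta cohort: hours watched for five users per arm.
t, df = welch_t_statistic([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])
print(round(t, 2), round(df, 1))
```

The statistic is built the same way in both functions; what changes is the reference distribution used to turn it into a p-value.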

-

Core demand indicators include ride requests per minute, average wait time, and surge-price multipliers; supply proxies are active driver count and acceptance rate. Create a demand-to-supply ratio and monitor leading thresholds where ETAs or cancellation rates spike. Plot these metrics across city geohashes and time of day to surface stress pockets. Knowing when imbalance starts lets product teams trigger driver incentives or pricing adjustments before rider experience degrades.

-

Begin by building a pre/post interrupted-time-series and a difference-in-differences against a hold-out cohort that kept the old flow. Control for seasonality, marketing spend, and traffic mix to isolate the treatment effect. Validate that measurement windows are identical and that no parallel experiments confound the signal. A clear statistical lift—supported by confidence intervals and power analysis—gives the PM confidence the redesign, not external drift, drove the uptick.
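The difference-in-differences comparison reduces to a small calculation once the four cohort averages exist. This is a point-estimate sketch on invented daily conversion rates; it omits the confidence interval and the seasonality controls mentioned above, and `diff_in_diff` is a hypothetical name.

```python
from statistics import mean


def diff_in_diff(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Treatment change minus hold-out change over the same windows."""
    treatment_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(ctrl_post) - mean(ctrl_pre)
    return treatment_change - control_change


# Made-up daily conversion rates before and after the redesign.
effect = diff_in_diff(
    treat_pre=[0.10, 0.10, 0.11],
    treat_post=[0.13, 0.14, 0.13],
    ctrl_pre=[0.10, 0.11, 0.10],
    ctrl_post=[0.11, 0.11, 0.12],
)
print(round(effect, 4))
```

Subtracting the hold-out's change is what removes drift that would have happened anyway, which is the core of the argument that the redesign caused the lift.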

Your landing-page A/B test shows p = 0.04; how do you judge whether the result is trustworthy?

Check pre-experiment power calculations to confirm the test had sufficient sample size. Validate randomisation balance on key covariates and verify no peeking or early stopping inflated false-positive risk. Inspect metric volatility and confidence-interval overlap, and correct for multiple testing if several KPIs were monitored. Only after these checks pass should a Netflix PM green-light the feature based on the observed p-value.

What extra care is needed when you run hundreds of t-tests simultaneously?

Multiple comparisons inflate the family-wise error rate; apply Bonferroni, Holm, or Benjamini–Hochberg corrections to keep false discoveries in check. Use hierarchical modelling or false-discovery-rate dashboards to prioritise truly promising variants. Pre-register hypotheses where possible to avoid “p-hacking.” These safeguards let a PM explore many ideas without shipping features that win by chance alone.
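To show how much the choice of correction matters, the sketch below applies Bonferroni and Benjamini-Hochberg to the same list of p-values. The p-values are invented and both functions are plain reimplementations of the textbook procedures, not a library API.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject H0 where p <= alpha / m (controls family-wise error rate)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, alpha=0.05):
    """Reject the k smallest p-values, where k is the largest rank with
    p_(k) <= k / m * alpha (controls false-discovery rate)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    max_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * alpha:
            max_rank = rank
    rejected = [False] * m
    for i in order[:max_rank]:
        rejected[i] = True
    return rejected


p_values = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
print(sum(bonferroni(p_values)))          # 1
print(sum(benjamini_hochberg(p_values)))  # 2
```

On this input, five variants look significant at an uncorrected 0.05, one survives Bonferroni, and two survive Benjamini-Hochberg.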

How can you verify that bucket assignment in an A/B test was truly random?

Compare baseline metrics (e.g., past-week engagement, device mix, geography) across control and treatment using chi-square or t-tests; none should show a significant difference. Plot assignment time-series to rule out batch biases and ensure even traffic ramps. Hashing user IDs with a stable seed and auditing the hash function guarantee deterministic, repeatable splits. These checks protect result integrity before any outcome analysis begins.
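A deterministic hash split and a basic check on bucket sizes might look like the following. This is a sketch, not a description of Netflix's assignment system: the experiment name used as the salt, the 50/50 split, and the function names are all assumptions, and the chi-square check here covers only the bucket counts (one degree of freedom), not covariate balance.

```python
import hashlib
from math import erfc, sqrt


def assign_bucket(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically map a user to a bucket using a salted SHA-256 hash."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    fraction = int(digest[:8], 16) / 0x100000000   # uniform in [0, 1)
    return "treatment" if fraction < treatment_share else "control"


def bucket_ratio_p_value(n_control: int, n_treatment: int, treatment_share: float = 0.5) -> float:
    """Chi-square (1 d.o.f.) p-value that the observed split matches the intended one."""
    total = n_control + n_treatment
    expected_t = total * treatment_share
    expected_c = total - expected_t
    chi2 = (n_treatment - expected_t) ** 2 / expected_t + (n_control - expected_c) ** 2 / expected_c
    return erfc(sqrt(chi2 / 2))   # survival function of chi-square with 1 d.o.f.


buckets = [assign_bucket(f"user-{i}", "homepage-test") for i in range(10_000)]
n_treatment = buckets.count("treatment")
n_control = len(buckets) - n_treatment
print(n_control, n_treatment, round(bucket_ratio_p_value(n_control, n_treatment), 3))
```

A very small p-value on the bucket counts signals a broken split before any outcome metric is examined.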

-

Use a 2 × 2 factorial design (Red-Top, Red-Bottom, Blue-Top, Blue-Bottom) so you can measure both main effects and their interaction with the same traffic. Randomly assign visitors into the four cells, then analyse click-through with a two-way ANOVA or logistic regression. Factorial tests reach conclusions faster than running two sequential A/B tests and reveal whether color and placement amplify or cancel each other.
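The main effects and the interaction fall straight out of the four cell means. The sketch below uses invented click-through rates and reports point estimates only; judging significance would still need the ANOVA or logistic regression mentioned above.

```python
def factorial_effects(ctr):
    """ctr maps (color, placement) -> click-through rate for the four cells."""
    color_effect = (
        (ctr[("blue", "top")] + ctr[("blue", "bottom")])
        - (ctr[("red", "top")] + ctr[("red", "bottom")])
    ) / 2
    placement_effect = (
        (ctr[("red", "top")] + ctr[("blue", "top")])
        - (ctr[("red", "bottom")] + ctr[("blue", "bottom")])
    ) / 2
    # Interaction: does moving to the top help blue more than it helps red?
    interaction = (
        (ctr[("blue", "top")] - ctr[("blue", "bottom")])
        - (ctr[("red", "top")] - ctr[("red", "bottom")])
    )
    return {"color": color_effect, "placement": placement_effect, "interaction": interaction}


cells = {
    ("red", "top"): 0.10,
    ("red", "bottom"): 0.08,
    ("blue", "top"): 0.14,
    ("blue", "bottom"): 0.09,
}
effects = factorial_effects(cells)
print({name: round(value, 3) for name, value in effects.items()})
```

A non-zero interaction is the finding two sequential A/B tests would miss, because each would hold the other factor fixed.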

-

Build a query that aggregates daily counts of log-in, no-log-in, and unsubscribe events by test bucket (control vs. variant). Produce a time-series graph with dual axes: cumulative unsubscribes and rolling 7-day log-in rate. This visual lets a PM weigh engagement gains against attrition costs and decide whether to tweak notification volume, targeting, or throttling rules.

Write a query to test if click-through rate (CTR) depends on search-result relevance rating.

Group search_events by rating (1-5) and compute CTR = SUM(has_clicked)/COUNT(*). Return rating, impressions, clicks, and CTR so the PM can plot the dose-response curve. If CTR climbs monotonically with rating, the hypothesis holds; if it plateaus, resources might shift to other ranking signals. Including confidence intervals helps judge whether observed differences are statistically meaningful.
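One way to try the query end to end is to run it against an in-memory SQLite table. The table and column names follow the answer above; the `query_id` column and the ten rows are made up for the demonstration.

```python
import sqlite3

QUERY = """
SELECT rating,
       COUNT(*)                          AS impressions,
       SUM(has_clicked)                  AS clicks,
       1.0 * SUM(has_clicked) / COUNT(*) AS ctr   -- 1.0 * avoids integer division
FROM search_events
GROUP BY rating
ORDER BY rating
"""

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE search_events (query_id INTEGER, rating INTEGER, has_clicked INTEGER)"
)
conn.executemany(
    "INSERT INTO search_events VALUES (?, ?, ?)",
    [(1, 1, 0), (2, 1, 0), (3, 2, 0), (4, 2, 1), (5, 3, 1),
     (6, 3, 0), (7, 4, 1), (8, 4, 1), (9, 5, 1), (10, 5, 1)],
)
results = conn.execute(QUERY).fetchall()
for row in results:
    print(row)
```

Multiplying by 1.0 matters in engines that truncate integer division; without it every CTR below 100 % would come back as 0.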

Your A/B test metric is non-normal and the sample size is low. How do you decide which variant won?

Use non-parametric tests such as Mann-Whitney U or permutation tests that make no normality assumption. Bootstrap confidence intervals on the median or percent-lift to quantify uncertainty. If the metric is count-based with many zeros, a Poisson or negative-binomial model may be more informative. These approaches let a PM make sound decisions even when classical t-tests are inappropriate.
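Both ideas can be sketched with the standard library alone. The example below runs a two-sided permutation test on the difference in means and a percentile bootstrap interval for the median; the watch-time samples are invented, the fixed seeds are only for repeatability, and the function names are hypothetical.

```python
import random
from statistics import median


def permutation_p_value(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation test on the absolute difference in means."""
    rng = random.Random(seed)
    observed = abs(sum(b) / len(b) - sum(a) / len(a))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        left, right = pooled[:len(a)], pooled[len(a):]
        if abs(sum(right) / len(right) - sum(left) / len(left)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)   # add-one keeps the estimate above zero


def bootstrap_median_ci(sample, n_boot=5_000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the median."""
    rng = random.Random(seed)
    medians = sorted(
        median(rng.choices(sample, k=len(sample))) for _ in range(n_boot)
    )
    low = medians[int((1 - level) / 2 * n_boot)]
    high = medians[int((1 + level) / 2 * n_boot) - 1]
    return low, high


control = [0, 0, 1, 2, 2, 3, 5, 40]     # skewed minutes watched
variant = [0, 1, 2, 3, 4, 6, 8, 55]
print(permutation_p_value(control, variant))
print(bootstrap_median_ci(variant))
```

Neither function assumes normality: the permutation test builds its null distribution from the data, and the bootstrap interval comes from resampling.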

Behavioral & Culture-Fit Questions

The Netflix product manager interview also probes your leadership style and cultural alignment. Expect storytelling prompts that explore how you’ve navigated high-stakes decisions, embraced candid feedback, and rallied cross-functional teams. Responses should be structured using the STAR method, highlighting how you embodied Netflix’s values of Freedom & Responsibility and drove meaningful impact under ambiguity.

What are effective ways to make data accessible to non-technical colleagues?

Start with low-friction, self-serve dashboards that expose only the most critical KPIs; pair them with data dictionaries written in plain language. Automate scheduled insights (e-mail digests or Slack bots) that highlight anomalies rather than forcing users to hunt for answers. Offer office hours and short “how-to” Loom videos to demystify SQL snippets or visualization filters. Providing context, not just numbers, empowers business partners to act without analyst bottlenecks.

Describe a data project you worked on. What hurdles did you face, and how did you overcome them?

Choose a project with multiple moving parts—ingesting messy source data, scaling pipelines, or coordinating cross-team dependencies. Explain the biggest blockers (e.g., schema drift, resource contention, ambiguous requirements) and the systematic steps you took to resolve them: prototyping, stakeholder alignment, incremental roll-outs, or refactors. Emphasize measurable outcomes: runtime reduced by 40 %, data freshness improved from daily to hourly, or dashboard adoption tripled. This shows grit, structured problem-solving, and end-to-end ownership.

What would your current manager say about your strengths and areas for growth?

Pick strengths tied to the role—e.g., translating vague questions into clear metrics or building fault-tolerant ETL—and back them with concrete results. For weaknesses, cite a genuine but non-fatal trait (perhaps over-indexing on detail) and describe the proactive steps you’re taking (time-boxed analyses, peer reviews). Framing feedback in this balanced, action-oriented way signals self-awareness and coachability—key predictors of long-term success.

Talk about a time you struggled to communicate with stakeholders. How did you bridge the gap?

Recount a situation where priorities were misaligned or technical jargon caused confusion. Describe how you iterated on your message—switching from dense SQL outputs to a story-driven slide deck, for instance—and scheduled quick feedback loops to ensure clarity. Highlight the final impact (faster decision, reduced rework) to show you can tailor communication style and medium to the audience’s needs.

Why do you want to work with us, and what makes you the right fit?

Link your motivations to the company’s mission, data scale, or product challenges, demonstrating you’ve done your homework. Show how your past achievements—optimizing experimentation pipelines or driving data-driven product iterations—map directly to the team’s roadmap. Close with what you hope to learn or contribute, conveying both confidence and curiosity.

How do you juggle multiple deadlines and stay organised?

Explain your framework for triaging tasks—impact-versus-effort matrices, OKR alignment, or simple MoSCoW scoring. Describe the tools you lean on (Kanban boards, calendar time-blocks, automated reminders) and how you carve out focus time for deep work. Mention proactive communication: surfacing trade-offs early keeps stakeholders aligned and prevents last-minute surprises.

Tell me about a time you had to make a recommendation with incomplete data. How did you proceed, and what was the outcome?

Outline how you quantified uncertainty, used proxy metrics, or ran a quick pilot to fill gaps. Show that you can balance speed with rigour, clearly flagging assumptions so decision-makers understand risk. Highlight the eventual result—perhaps the initial call was directionally right and subsequent data validated your approach.

Describe a situation where you mentored a teammate on a technical concept they initially found challenging. What teaching approach did you use?

Detail how you broke the topic into digestible pieces, paired on a real task, and provided follow-up resources or code templates. Point out improvements in the mentee’s output or confidence as tangible evidence of your leadership and knowledge-sharing mindset.

How to Prepare for a Product Manager Role at Netflix

Getting familiar with the Netflix product manager interview process is just the first step; structured preparation across culture, frameworks, and mock sessions will set you apart. Below are five targeted preparation strategies to help you confidently navigate each stage of the loop.

Study the Culture Memo

Netflix’s Culture Memo outlines its values of Freedom & Responsibility, context over control, and high performance. Develop STAR stories that explicitly reference these principles—showing how you’ve operated autonomously, embraced constructive candor, and held teams to high standards. This ensures your behavioral answers resonate deeply with Netflix’s leadership criteria.

Refine Product Frameworks

Rigorously practice frameworks like CIRCLES and Business Requirement Documents (BRD) to structure your product thinking. Since roughly 35 % of your evaluation hinges on clear problem definition and prioritization, time-box exercises to develop concise problem statements, user flows, and feature roadmaps. Demonstrating fluency in these models highlights your ability to tackle complex product challenges methodically.

Master Experimentation Design

Netflix is an experimentation powerhouse—about 30 % of product decisions are A/B tested. Brush up on hypothesis formulation, sample-size calculations, test duration, and guardrail metrics to show you can design robust experiments. Be prepared to articulate how you’d interpret nuanced results and iterate quickly on failing variants to drive continuous improvement.
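For the sample-size piece, a common normal-approximation formula for comparing two conversion rates is easy to keep at hand. The sketch below assumes a two-sided test with equal-sized arms; the baseline rate and minimum detectable effect are illustrative, and `sample_size_per_arm` is a hypothetical helper.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline, mde_abs, alpha=0.05, power=0.80):
    """Users needed per arm to detect an absolute lift of mde_abs
    over a baseline conversion rate (two-sided test, equal arms)."""
    p1, p2 = baseline, baseline + mde_abs
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance_sum / mde_abs ** 2)


# Detect a 1-point lift on a 10 % baseline, then a 0.5-point lift.
print(sample_size_per_arm(0.10, 0.01))
print(sample_size_per_arm(0.10, 0.005))
```

Halving the detectable effect roughly quadruples the required traffic, which is the trade-off to state when an interviewer asks how long a test should run.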

Think Out Loud & Iterate Rapidly

During interviews, narrate your thought process clearly and solicit real-time feedback from interviewers. Netflix values transparency and the ability to pivot based on new information. Practice “rubber-ducking” your reasoning out loud in mock interviews, refining your ideas on the fly to mirror the rapid iteration cycles you’ll encounter on the job.

Mock Panels & 30-Minute Product Pitches

Simulate the on-site intensity by running full mock panels with peers: two product-design rounds, an analytics deep-dive, and a culture-fit discussion. Time-box 30-minute product pitches (ideally on recent Netflix features like mobile previews or interactive storytelling) to practice concise storytelling and quick Q&A handling. These rehearsals build stamina and confidence, ensuring you can perform at your best during back-to-back sessions. Use Interview Query’s mock interview services to kick-start your interview preparation.

FAQs

What Is the Average Salary for a Netflix Product Manager?

When discussing your Netflix product manager salary, lead with concrete examples of your impact, such as feature launches or revenue growth, to position yourself for top-quartile offers. When it’s time to negotiate, reference comparable roles at peer companies and ask open-ended questions about total compensation, including RSU refreshers and bonus targets. For senior hires, framing your expectations around Netflix senior product manager salary bands (L5/L6) keeps your ask aligned with level-based norms. Express genuine enthusiasm for the role and clarify equity refresh cycles to protect your long-term upside.

Are There Job Postings for Netflix Product Manager Roles on Interview Query?

See the latest openings for Product Manager roles and unlock insider prep tactics on our jobs board. Stay up to date on new opportunities and tailor your application with role-specific insights.

Conclusion

Mastering the Netflix product manager interview loop—recruiter screen, product sense and strategy assessments, virtual on-site deep dives, and the executive panel—is your fastest path to an offer. Focus your practice on high-signal product design, analytics, and culture questions to demonstrate the freedom-and-responsibility mindset that Netflix demands.

To broaden your preparation, check out our Netflix Data Scientist Guide and Data Engineer Guide. When you’re ready to simulate the real experience, book a mock interview. And don’t forget to sharpen your quantitative toolkit with our Product Metrics Learning Path and SQL Learning Path. Good luck!