Intuit Data Engineer Interview Guide: Process, Questions, Salary & Prep

Introduction

Preparing for an Intuit data engineer interview means preparing for one of the most production-oriented data engineering loops in big tech. According to LinkedIn’s 2025 Jobs on the Rise data, data engineering roles continue to grow at over 30 percent year over year, driven by cloud migration, real-time analytics, and AI workloads that demand reliable data foundations. At Intuit, that scale is very real. Teams supporting TurboTax, QuickBooks, Credit Karma, and Mailchimp move millions of records daily, where small data reliability issues can cascade into product, financial, or regulatory risk.

As of 2026, the Intuit data engineer interview focuses far less on trivia and far more on whether you can design, reason about, and defend real-world data systems. Candidates are evaluated on how they build scalable pipelines, ensure data quality, and make architectural trade-offs across Spark, SQL, and cloud-native services. A signature part of the process is the “craft demonstration,” where you are asked to present a realistic data engineering solution and walk through decisions as if you were already on the team.

In this guide, we break down the Intuit data engineer interview process step by step, explain what each round tests, and share how to prepare in a way that matches how Intuit actually evaluates data engineers in practice.

Intuit Data Engineer Interview Process

The Intuit data engineer interview process is designed to evaluate whether you can design, build, and own scalable data systems in production. Rather than testing abstract theory, Intuit emphasizes real-world trade-offs around data modeling, scale, reliability, and cross-functional impact.

As of 2026, the process typically spans five stages and takes 4 to 6 weeks from recruiter screen to final decision.

| Interview stage | Typical format | Primary focus |
|---|---|---|
| Recruiter screen | 25–35 minute call | Background, motivation, and role alignment |
| Technical assessment | Proctored online assessment | SQL, programming logic, and data reasoning |
| Craft demonstration | Take-home project + panel | End-to-end data engineering judgment |
| Technical deep-dives | 3 live interviews | SQL, big data systems, and pipeline design |
| Managerial and behavioral round | 30–60 minute interview | Ownership, values, and collaboration |

Each stage builds on the previous one. Candidates who advance are expected to demonstrate increasing levels of system thinking, clarity of trade-offs, and ownership.

Recruiter Screen (25–35 minutes)

The recruiter screen focuses on your background, core technologies such as Spark or AWS, and alignment with Intuit’s values. You’ll also be asked why you’re interested in Intuit specifically and what type of data engineering work you want to do.

Recruiters use this call to assess role fit and explain how the craft demonstration works later in the process.

Tip: Frame your experience around production ownership and downstream impact, not just tools you have used.

Technical Assessment (Initial Technical Gate)

Most candidates then complete an initial technical assessment, which acts as a gating round. This is often a proctored online assessment that mixes SQL, programming logic, and data reasoning.

| Area tested | What interviewers evaluate |
|---|---|
| SQL and data logic | Correctness, edge cases, and assumptions |
| Programming fundamentals | Structured problem-solving in Python or Java |
| Data intuition | Awareness of performance and scalability |

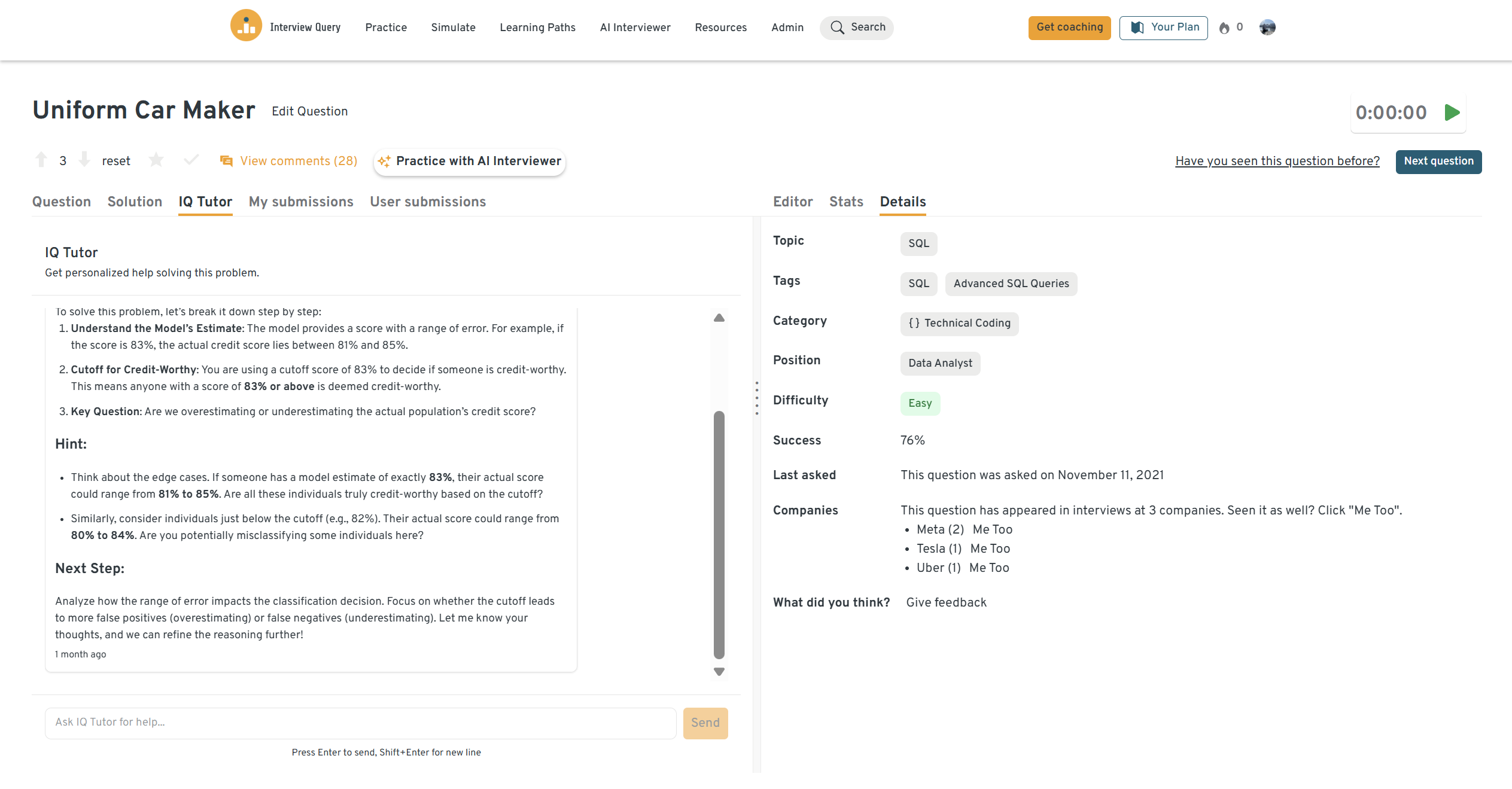

Strong candidates explain how they think about data, not just how they arrive at an answer. Practicing realistic SQL problems through Interview Query’s SQL interview learning path helps build fluency under time pressure, especially for joins, aggregations, and window-function reasoning.

Craft Demonstration (Take-Home + Panel)

The craft demonstration is the hallmark of the Intuit data engineer interview. You are given a realistic data engineering use case, such as building an ETL pipeline or modeling a high-volume transaction system, and have two to three days to prepare a solution.

You then present your work in a 60 to 90 minute panel session with four to five engineers. The discussion focuses on architectural decisions, data modeling choices, data quality handling, and how your solution scales.

This round evaluates whether you can reason like an Intuit data engineer, not whether you chose the “perfect” tool. Many candidates rehearse this format using mock interviews to refine clarity, pacing, and decision framing before the panel.

Technical Deep-Dive Interviews (3 rounds)

Candidates who pass the craft demo move into three technical deep-dive interviews. These are live, discussion-heavy sessions that test depth across core data engineering domains.

| Deep-dive area | What it tests |
|---|---|
| SQL and logic | Advanced joins, window functions, and reasoning |
| Big data systems | Spark architecture, partitioning, and cloud services |
| Data pipeline design | Scalability, data quality, and failure handling |

Interviewers expect you to explain trade-offs clearly and reason through scale and reliability. Preparing with Interview Query’s data engineering learning path helps simulate the kind of system-level thinking these rounds demand.

Managerial and Behavioral Round (30–60 minutes)

The final round focuses on ownership, leadership, and values alignment. You may speak with a hiring manager or cross-functional partner.

Common themes include owning projects end to end, handling ambiguity, and collaborating with data scientists, product managers, and engineers. Intuit places strong weight on values such as customer obsession and accountability, so behavioral judgment matters as much as technical depth.

Practicing behavioral delivery through Interview Query’s AI interview tool or mock interviews helps candidates tighten structure and confidence under pressure.

Intuit Data Engineer Interview Questions

Intuit data engineer interview questions are designed to test whether you can build reliable, scalable data systems that support analytics, AI models, and real-time reporting across products like TurboTax, QuickBooks, Credit Karma, and Mailchimp. Interviewers care about more than correct outputs. They evaluate how you define assumptions, handle edge cases, and reason about long-term maintainability in production. Expect the question mix to mirror the interview loop: SQL and logic, schema and data modeling, pipeline and systems design, debugging and operational judgment, then behavioral ownership. If you want a single place to drill the same problem types across SQL, pipelines, and system reasoning, the fastest workflow is to practice directly in the Interview Query question library.

SQL and Data Logic Questions

SQL is a core signal in Intuit data engineer interviews because it reflects how you reason about data correctness and metric definitions. These questions typically involve joins, window functions, cohort logic, and careful handling of missing or duplicated records. Intuit interviewers pay close attention to whether you state your definitions clearly before writing logic, especially for metrics that influence financial workflows. If you want to tighten fundamentals quickly, the SQL interview learning path is the most direct prep for this section.

How would you calculate monthly retention for a subscription or recurring user base?

This question tests whether you can translate a business concept into a precise, defensible metric. Intuit cares about retention because it links directly to customer lifetime value (LTV), payback period, and lifecycle health across consumer and SMB products. A strong answer starts by defining retention unambiguously, then explains cohorting logic, time windows, and what counts as an active user. Interviewers also want to hear how you handle edge cases like plan changes, pauses, or users with sparse activity that can distort cohort curves. The best responses tie the metric back to decisions, such as how you would use retention by cohort to evaluate channel quality or product onboarding changes.

Tip: Say your retention definition out loud first, then defend why that definition matches the decision being made.
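
The definitions in this answer can be made concrete with a small sketch. The Python below assumes a hypothetical list of `(user_id, event_date)` activity records and one possible set of definitions (active means at least one event in a calendar month; a user's cohort is their first active month). It illustrates cohort logic only and is not Intuit's metric definition; in the interview itself you would typically express the same steps in SQL.

```python
from collections import defaultdict
from datetime import date


def month_index(d: date) -> int:
    """Map a date to a sequential month number (consecutive months differ by 1)."""
    return d.year * 12 + (d.month - 1)


def monthly_retention(events: list[tuple[str, date]]) -> dict[tuple[int, int], float]:
    """Return {(cohort_month, months_since_cohort): share of the cohort active}.

    Assumed definitions, to be stated up front:
    - "active in a month" means at least one event in that calendar month;
    - a user's cohort is the month of their first event;
    - offsets run up to the latest month in the data, so months where nobody
      in a cohort returned appear as 0.0 instead of being omitted.
    """
    # Collapse to one (user, month) pair so repeated events do not inflate activity.
    active: dict[str, set[int]] = defaultdict(set)
    for user_id, event_date in events:
        active[user_id].add(month_index(event_date))
    if not active:
        return {}

    cohorts: dict[int, set[str]] = defaultdict(set)
    for user_id, months in active.items():
        cohorts[min(months)].add(user_id)

    last_month = max(max(months) for months in active.values())
    retention: dict[tuple[int, int], float] = {}
    for cohort, users in cohorts.items():
        for offset in range(last_month - cohort + 1):
            retained = sum(1 for u in users if cohort + offset in active[u])
            retention[(cohort, offset)] = retained / len(users)
    return retention
```

Offsets that fall in the current, still-incomplete month will read low; a production version would usually exclude partial months or label them explicitly.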

How would you identify users who are likely to churn based on recent activity?

This evaluates segmentation judgment and how you convert behavioral signals into an actionable output table. Intuit looks for candidates who can separate temporary inactivity from true churn risk, since reactive targeting can waste spend and harm customer trust. A strong answer defines churn risk signals, describes how you would set thresholds, and explains how you would validate the segment against outcomes over time. Interviewers also listen for how you guard against data artifacts like instrumentation gaps or delayed event ingestion that make users appear inactive when they are not. The most compelling answers finish with what action the segment enables, such as a reactivation experiment or a product nudge, and how success would be measured.

Tip: Call out how you would validate the churn definition using historical backtesting, not intuition.
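
One concrete guard against delayed ingestion is to measure inactivity only up to the point where event data is known to be complete. The sketch below assumes a hypothetical `last_seen` mapping of user to most recent activity date and a `complete_through` watermark date; the 30-day threshold is an arbitrary placeholder, not a recommended value.

```python
from datetime import date, timedelta


def churn_risk_users(
    last_seen: dict[str, date],
    as_of: date,
    complete_through: date,
    inactivity_days: int = 30,
) -> set[str]:
    """Users whose most recent activity is older than the inactivity threshold.

    `complete_through` is the last date for which event ingestion is known to be
    complete (a hypothetical watermark). Measuring inactivity only up to that date
    keeps late-arriving events from making active users look churned.
    """
    effective_as_of = min(as_of, complete_through)
    cutoff = effective_as_of - timedelta(days=inactivity_days)
    return {user for user, seen in last_seen.items() if seen < cutoff}
```

To backtest the threshold, one option is to call the function with `as_of` set to past dates and check what share of the flagged users actually stayed inactive afterward.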

How would you calculate the share of users who have a primary address on file?

This question tests user-level aggregation and careful denominator handling, which maps to real-world reliability and fraud signals in commerce workflows. Intuit uses similar thinking when analyzing customer consistency, account changes, or suspicious behavior patterns that affect risk scoring. A strong answer explains how you define primary address, how you treat users with multiple primaries, and how you handle users with no primary address at all. Interviewers want to hear how you avoid silent errors, such as counting duplicate address records or mixing historical and current attributes incorrectly. The strongest answers mention how you would sanity-check the output distribution to detect anomalies before stakeholders act on it.

Tip: Explicitly describe how you would handle users with missing or conflicting address metadata.
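
A minimal sketch of the denominator and deduplication logic, assuming a hypothetical address table of `(user_id, address_id, is_primary)` rows and a separate list of all user IDs. Users that appear only in the address table are ignored here, which is itself an assumption worth stating aloud.

```python
from collections import defaultdict


def primary_address_shares(
    user_ids: list[str],
    address_rows: list[tuple[str, str, bool]],
) -> dict[str, float]:
    """Share of users with exactly one, several, or no primary address.

    The denominator is every known user, so users without any address row are
    counted as "no_primary" instead of silently dropping out of the metric.
    """
    # Sets deduplicate repeated rows for the same (user, address) pair.
    primaries: dict[str, set[str]] = defaultdict(set)
    for user_id, address_id, is_primary in address_rows:
        if is_primary:
            primaries[user_id].add(address_id)

    users = set(user_ids)
    counts = {"one_primary": 0, "multiple_primaries": 0, "no_primary": 0}
    for user in users:
        n = len(primaries.get(user, ()))
        if n == 1:
            counts["one_primary"] += 1
        elif n > 1:
            counts["multiple_primaries"] += 1  # conflicting metadata, surfaced not hidden
        else:
            counts["no_primary"] += 1
    if not users:
        return {k: 0.0 for k in counts}
    return {k: v / len(users) for k, v in counts.items()}
```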

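How would you randomly select a car make so that every distinct make has an equal chance of being chosen?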

This tests whether you understand selection bias and sampling in SQL, not just syntax. Intuit asks questions like this to see if you recognize that naïve randomness can overweight frequent values and mislead downstream experiments. A strong answer explains the need to deduplicate values before sampling, then describes how you would ensure each distinct make has equal probability. Interviewers also look for whether you can explain why the alternative approach is biased in a way that a non-technical partner would understand. Good answers tie this back to experimentation integrity, since biased sampling can corrupt A/B test conclusions.

Tip: Explain the bias mechanism clearly, because Intuit values engineers who protect decision-making quality, not just query correctness.
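
The bias is easiest to explain side by side. This Python sketch assumes a hypothetical list with one entry per car row; in SQL the analogous fix is to sample from a `SELECT DISTINCT` subquery rather than from the raw rows (the random-ordering function name varies by dialect).

```python
import random
from collections import Counter


def sample_row_make(makes: list[str], rng: random.Random) -> str:
    """Biased: picks a random row, so frequent makes win proportionally more often."""
    return rng.choice(makes)


def sample_distinct_make(makes: list[str], rng: random.Random) -> str:
    """Unbiased over makes: deduplicate first, then pick uniformly."""
    distinct = sorted(set(makes))  # sorted so a seeded rng gives reproducible picks
    return rng.choice(distinct)


if __name__ == "__main__":
    makes = ["Toyota"] * 98 + ["Ford", "Tesla"]
    rng = random.Random(7)
    print(Counter(sample_row_make(makes, rng) for _ in range(3000)))
    print(Counter(sample_distinct_make(makes, rng) for _ in range(3000)))
```

With 98 Toyota rows and one row each for two other makes, the row-level sampler is expected to return Toyota about 98 percent of the time, while the distinct-first sampler returns each make about a third of the time.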

Try this question yourself on the Interview Query dashboard. You can run SQL queries, review real solutions, and see how your results compare with other candidates using AI-driven feedback.

Data Modeling and Schema Design Questions

Intuit data engineers are often responsible for building trustworthy datasets that power both reporting and machine learning features. Schema design questions evaluate whether you can model entities cleanly, preserve history, and support scalable analytics without breaking downstream consumers. These questions are where you show that you understand data as a product, not just a storage layer. If you want to practice more of this style, the data engineering learning path is the most aligned prep.

How would you design a schema to capture high-volume clickstream events?

This evaluates high-volume event modeling, which is common in product analytics and experimentation systems. A strong answer describes an append-only events table with stable identifiers, timestamps, event types, and contextual attributes, then explains how you would derive session or page-level tables for analytics performance. Intuit cares about how you balance flexibility with schema consistency, especially when product instrumentation evolves. Interviewers also want to hear how you enforce governance, such as validating event contracts, handling schema drift, and documenting definitions so metrics stay consistent across teams. The best answers mention versioning explicitly, because event definitions changing over time is one of the fastest ways analytics becomes untrusted.

Tip: Call out how you would version events and prevent breaking changes for downstream dashboards and models.
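
Event contracts and versioning can be enforced at ingestion. The sketch below assumes a hypothetical `EVENT_CONTRACTS` registry keyed by event type and schema version; real systems often keep contracts in a schema registry, but the names and structure here are illustrative only.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical contracts: required properties per (event_type, schema_version).
# Versions are additive: v2 adds a field, and v1 events remain valid.
EVENT_CONTRACTS: dict[tuple[str, int], set[str]] = {
    ("page_view", 1): {"page"},
    ("page_view", 2): {"page", "referrer"},
}


@dataclass
class Event:
    event_id: str          # stable identifier, used downstream for deduplication
    user_id: str
    event_type: str
    schema_version: int
    occurred_at: datetime  # event time, distinct from ingestion time
    properties: dict[str, str] = field(default_factory=dict)


def contract_violations(event: Event) -> list[str]:
    """Return contract violations; an empty list means the event can be accepted."""
    required = EVENT_CONTRACTS.get((event.event_type, event.schema_version))
    if required is None:
        return [f"unknown contract: {event.event_type} v{event.schema_version}"]
    missing = sorted(required - set(event.properties))
    errors = [f"missing property: {name}" for name in missing]
    if event.occurred_at.tzinfo is None:
        errors.append("occurred_at must be timezone-aware")
    return errors
```

Additive versions let producers ship new fields without breaking consumers that still read v1. Rejected events would typically be routed to a separate table for review rather than silently dropped.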

How would you design a schema that tracks customer address changes over time?

This tests temporal modeling and whether you understand why overwriting records breaks historical truth. Intuit cares about point-in-time accuracy for compliance, reporting, and customer support workflows where the historical record matters. A strong answer explains using effective start and end timestamps, separating current and historical records, and preventing retroactive changes to past analytics. Interviewers listen for how you handle real messiness, like overlapping effective periods, duplicate updates, or partial address data. The most credible answers connect the design to specific use cases such as audits, dispute resolution, or longitudinal customer behavior analysis.

Tip: Explain why slowly changing dimensions matter, because Intuit values long-term data integrity over short-term convenience.
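
A Type 2 slowly changing dimension can be sketched in a few lines. This assumes a hypothetical in-memory list of address versions with `valid_from` and `valid_to` timestamps; a warehouse implementation would usually perform the same close-and-append step with a `MERGE` or equivalent statement.

```python
from dataclasses import dataclass, replace
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class AddressVersion:
    customer_id: str
    address: str
    valid_from: datetime
    valid_to: Optional[datetime]  # None marks the current version


def apply_address_change(
    history: list[AddressVersion], customer_id: str, new_address: str, changed_at: datetime
) -> list[AddressVersion]:
    """Type 2 update: close the current version and append a new one; never overwrite."""
    current = next(
        (v for v in history if v.customer_id == customer_id and v.valid_to is None), None
    )
    if current is not None:
        if current.address == new_address:
            return history  # duplicate update: history stays unchanged
        if changed_at <= current.valid_from:
            # Out-of-order change would create overlapping periods; handle it separately.
            raise ValueError("change predates the current version")
        history = [replace(v, valid_to=changed_at) if v is current else v for v in history]
    return history + [AddressVersion(customer_id, new_address, changed_at, None)]


def address_as_of(history: list[AddressVersion], customer_id: str, ts: datetime) -> Optional[str]:
    """Point-in-time lookup over half-open [valid_from, valid_to) intervals."""
    for v in history:
        if v.customer_id == customer_id and v.valid_from <= ts and (
            v.valid_to is None or ts < v.valid_to
        ):
            return v.address
    return None
```

Half-open intervals mean a change timestamp belongs to exactly one version, which keeps effective periods from overlapping.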

Data Pipeline and Systems Design Questions

Pipeline and systems design questions are where Intuit evaluates your production judgment. These prompts test whether you can build scalable pipelines, handle late or bad data, and ensure reliability with clear SLAs and monitoring. Strong candidates reason through trade-offs across compute cost, data freshness, and correctness rather than proposing a one-size-fits-all architecture. For broader practice across these scenarios, Interview Query’s data engineer interview questions hub is a useful way to expand your reps.

How would you build a pipeline that reports active users at hourly, daily, and weekly grains?

This tests end-to-end thinking across ingestion, modeling, orchestration, and serving layers. Intuit expects you to explain how you define activity, how you aggregate at multiple grains, and how you avoid recomputing everything each run. Interviewers want to hear how you handle late-arriving data, backfills, and the risk of metrics shifting retroactively when raw events arrive out of order. A strong answer also explains how you guarantee consistent definitions across hourly, daily, and weekly metrics so stakeholders do not get conflicting numbers. The best answers emphasize idempotency and reproducibility because those prevent silent corruption in recurring jobs.

Tip: Emphasize idempotent transformations and clear source-of-truth tables so re-runs do not create inconsistent results.
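
The idempotency point can be illustrated with a partition-overwrite pattern. The sketch assumes hypothetical `(event_id, user_id, occurred_at)` raw events and a dictionary standing in for a date-partitioned table.

```python
from collections import defaultdict
from datetime import date, datetime


def active_users_for_day(raw_events: list[tuple[str, str, datetime]], day: date) -> dict:
    """Recompute hourly and daily active users for one date partition."""
    # Deduplicate on event_id so replayed or duplicated events cannot change results.
    deduped = {eid: (uid, ts) for eid, uid, ts in raw_events if ts.date() == day}
    hourly: dict[int, set[str]] = defaultdict(set)
    for uid, ts in deduped.values():
        hourly[ts.hour].add(uid)
    # Daily actives come from the same deduplicated base rather than from summing
    # hourly counts, which would double count users active in several hours.
    daily = {uid for uid, _ in deduped.values()}
    return {"hourly": {h: len(u) for h, u in sorted(hourly.items())}, "daily": len(daily)}


def write_partition(table: dict[date, dict], day: date, result: dict) -> None:
    """Overwrite the whole partition, so re-runs and backfills replace, never append."""
    table[day] = result
```

Weekly actives would follow the same rule: recompute distinct users over the seven-day window from the deduplicated base instead of adding up daily counts.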

How would you add and backfill a new column on a large table with many downstream consumers?

This evaluates safe change management at scale, which is critical when tables have many downstream consumers. Intuit cares about this because changes often touch reporting, experimentation, and risk pipelines simultaneously. A strong answer describes a phased approach: introduce the column safely, backfill incrementally, verify coverage, then migrate consumers after validation. Interviewers also listen for operational safeguards like monitoring, throttling, and rollback plans if performance degrades. The strongest answers mention communication and contract management because the technical rollout is only half the risk in shared data systems.

Tip: Describe how you would verify correctness during the backfill and how you would keep consumers stable during the transition.
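
The phased rollout might look like the following, using Python's built-in `sqlite3` module as a stand-in for a warehouse. The `orders` table, `amount_usd` column, and batch size are hypothetical, and production warehouses have their own DDL semantics and locking behavior, so treat this as the shape of the approach rather than a recipe.

```python
import sqlite3


def make_demo_table(n_rows: int) -> sqlite3.Connection:
    """In-memory stand-in for a large table (hypothetical `orders` schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount_cents INTEGER)")
    conn.executemany(
        "INSERT INTO orders (id, amount_cents) VALUES (?, ?)",
        [(i, i * 10) for i in range(1, n_rows + 1)],
    )
    conn.commit()
    return conn


def add_nullable_column(conn: sqlite3.Connection) -> None:
    # Step 1: a nullable column with no default leaves existing readers unaffected.
    conn.execute("ALTER TABLE orders ADD COLUMN amount_usd REAL")
    conn.commit()


def backfill_in_batches(conn: sqlite3.Connection, batch_size: int = 1000) -> None:
    # Step 2: fill by primary-key range, one short transaction per batch.
    max_id = conn.execute("SELECT COALESCE(MAX(id), 0) FROM orders").fetchone()[0]
    for start in range(1, max_id + 1, batch_size):
        with conn:  # commits on success, rolls back this batch on error
            conn.execute(
                "UPDATE orders SET amount_usd = amount_cents / 100.0 "
                "WHERE id BETWEEN ? AND ? AND amount_usd IS NULL",  # safe to re-run
                (start, start + batch_size - 1),
            )


def rows_missing_backfill(conn: sqlite3.Connection) -> int:
    # Step 3: coverage check before any consumer migrates to the new column.
    return conn.execute("SELECT COUNT(*) FROM orders WHERE amount_usd IS NULL").fetchone()[0]
```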

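How would you identify every query that runs when a specific request is made against a system another team owns?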

This tests debugging and investigative rigor in a real-world environment where systems are owned by different teams. Intuit values this skill because data incidents often require tracing issues across services, warehouses, and BI tools quickly. A strong answer explains using warehouse query history, audit logs, or connection signatures to trace what runs when the request happens. Interviewers also want to hear how you would reproduce the request with a controlled account and a tight time window to reduce guesswork. The best answers include how you would confirm you have the full set of queries, because partial traces lead to wrong root causes.

Tip: Mention how you would validate the trace end to end using a controlled test case, not assumptions.
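
A trace can be narrowed with a controlled test account and a tight time window, then checked by reproducing the request twice and comparing what each run captured. The `QueryLogEntry` shape below is hypothetical; warehouses expose query history through their own system views with different column names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class QueryLogEntry:
    # Hypothetical shape of a warehouse query-history row.
    user_name: str
    started_at: datetime
    query_text: str


def queries_in_window(
    log: list[QueryLogEntry],
    user_name: str,
    start: datetime,
    end: datetime,
    padding: timedelta = timedelta(seconds=5),
) -> list[QueryLogEntry]:
    """Queries from one controlled test account within a slightly padded window."""
    lo, hi = start - padding, end + padding
    return [q for q in log if q.user_name == user_name and lo <= q.started_at <= hi]


def normalize(sql: str) -> str:
    # Collapse whitespace and case so the same statement compares equal across runs.
    return " ".join(sql.lower().split())


def compare_runs(
    run_a: list[QueryLogEntry], run_b: list[QueryLogEntry]
) -> tuple[set[str], set[str]]:
    """Return (queries seen in both reproductions, queries seen in only one)."""
    a = {normalize(q.query_text) for q in run_a}
    b = {normalize(q.query_text) for q in run_b}
    return a & b, a ^ b
```

A non-empty second set is a signal that the trace may be incomplete, for example because some queries are cached or conditional.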

Behavioral and Collaboration Questions

Behavioral interviews at Intuit evaluate ownership, cross-functional communication, and whether you operate with customer impact in mind. You should use the STAR format, but the key is to lead with the decision you drove, then quantify impact. Below are common prompts that map well to Intuit’s style. If you want to rehearse these out loud under pressure, the mock interview format is the closest simulation to the real loop.

What makes you a good fit for the data engineer position?

This evaluates alignment with Intuit’s expectations around ownership, reliability, and collaboration. Strong answers show that you understand data engineering as a product discipline, not a ticket-taking support function. Interviewers want evidence that you can build systems others trust and that you can communicate trade-offs when stakeholders disagree. The best answers connect your strengths to how Intuit operates: production pipelines, shared datasets, and customer-impacting metrics.

Tip: Tie your fit to ownership and reliability, not just the tools on your resume.

Sample answer: I am strongest when I own data systems end to end, from ingestion to quality checks to stakeholder adoption. In my last role, I rebuilt a core pipeline that had frequent failures and unclear definitions, then introduced data contracts, freshness SLAs, and automated validation. That reduced incident tickets by 40 percent and improved dashboard trust enough that leadership started using the new metrics in weekly planning. I enjoy working closely with product and analytics partners to align on definitions before shipping, which is why Intuit’s craft-focused process appeals to me. I want to build data foundations that make customer-facing decisions more accurate and reliable.

Tell me about a time you had trouble communicating with stakeholders.

This assesses whether you can translate technical constraints into decisions stakeholders can act on. Intuit data engineers frequently work with partners who have different incentives, such as speed versus correctness. Strong answers show how you clarified the decision, reset expectations, and created shared definitions rather than defending technical detail. Interviewers want to see that you can regain trust and keep projects moving under ambiguity.

Tip: Focus on the behavior change you made in your communication, not just the final outcome.

Sample answer: I once delivered a metric update that stakeholders found confusing and inconsistent with prior reports. Instead of insisting the new definition was better, I scheduled a short working session to clarify what decision they needed the metric to support. We realized the disagreement was definition drift, not computation, so I proposed a versioned metric approach with a clear change log and side-by-side reporting for two weeks. Adoption improved immediately, and we avoided a rushed rollout that would have broken dashboards. After that, we standardized a lightweight metric spec template so future changes were reviewed before implementation. That reduced last-minute reversals and made stakeholder reviews faster.

Tell me about a time you owned a data project from end to end.

This tests ownership, prioritization, and whether you can drive outcomes, not just deliver artifacts. Intuit wants data engineers who can manage ambiguity across requirements, implementation, and rollout. A strong answer includes how you defined success, handled trade-offs, and ensured the output was actually used. Interviewers also look for how you handled reliability, such as monitoring, SLAs, and failure recovery.

Tip: Quantify impact in adoption terms, such as reduced refresh time, fewer incidents, or increased usage of the dataset.

Sample answer: I owned a pipeline that produced weekly revenue attribution data used by marketing and finance, but it frequently broke and required manual fixes. I first aligned stakeholders on a single source-of-truth definition, then rebuilt the pipeline with incremental loads, validation checks, and clear alerting tied to freshness SLAs. I also added a simple consumer-facing data dictionary so teams understood the columns and limitations. After launch, refresh time dropped from six hours to ninety minutes, and incident escalations fell by about 50 percent in the next quarter. The biggest win was that finance stopped maintaining a parallel spreadsheet model because the dataset became reliable enough for planning.

Describe a time you shipped a solution that improved data quality or reliability.

This evaluates whether you treat data quality as an engineering responsibility, not an analytics afterthought. Intuit cares about this because unreliable data erodes customer trust and can create compliance risk. Strong answers explain what broke, how you detected it, and how you prevented recurrence through automation. Interviewers also want to hear how you balanced strict validation with not blocking the business unnecessarily.

Tip: Explain both prevention and detection, because production quality requires both.

Sample answer: We had a recurring issue where upstream instrumentation changes caused sudden drops in event volume, but the pipeline still succeeded, which created silent metric errors. I added automated distribution checks and volume anomaly detection at ingestion, then configured alerts that escalated only when the change crossed a business-impact threshold. I also implemented a quarantine pattern where suspicious partitions were isolated while the last known good data continued serving dashboards. That reduced time-to-detection from days to under an hour and prevented incorrect metrics from being shared in exec updates. Over time, it shifted the team from reactive debugging to proactive reliability work.
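
The detection and quarantine pattern in this sample answer could be sketched as follows. The thresholds and the dictionaries standing in for serving and quarantine tables are hypothetical placeholders; the idea is that a partition must be both statistically unusual and large enough to matter before it is held back.

```python
from statistics import mean, stdev


def is_volume_anomaly(
    history: list[int],
    today: int,
    z_threshold: float = 3.0,
    min_relative_change: float = 0.2,
) -> bool:
    """Flag a row count that is both statistically unusual and business-relevant.

    `history` must contain at least one value. Both thresholds are illustrative.
    """
    baseline = mean(history)
    if baseline == 0:
        return today != 0
    relative_change = abs(today - baseline) / baseline
    spread = stdev(history) if len(history) > 1 else 0.0
    unusual = spread == 0 or abs(today - baseline) / spread >= z_threshold
    return unusual and relative_change >= min_relative_change


def publish_partition(
    day: str,
    rows: list,
    history: list[int],
    serving: dict[str, list],
    quarantine: dict[str, list],
) -> str:
    """Publish a partition, or quarantine it while dashboards keep the last good data."""
    if is_volume_anomaly(history, len(rows)):
        quarantine[day] = rows  # isolated for investigation, not served
        return "quarantined"
    serving[day] = rows
    history.append(len(rows))  # only accepted partitions update the baseline
    return "published"
```

On a very stable history, a 3 percent swing can clear the z-score test without crossing the 20 percent business threshold, so it publishes without escalating.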

For deeper preparation, explore a curated set of 100+ data engineer interview questions with detailed answers. In a companion video walkthrough, Jay Feng, founder of Interview Query, breaks down more than 10 core data engineering questions covering advanced SQL patterns, distributed systems reasoning, pipeline reliability, and data modeling trade-offs. The explanations focus on how to think through real-world scenarios, which makes the walkthrough useful preparation for an Intuit data engineer loop.

Role Overview and Culture at Intuit

An Intuit data engineer designs and maintains the systems that move, transform, and validate data at scale so that product, analytics, and machine learning teams can make reliable decisions. The role is centered on building a dependable paved path for data, most commonly in cloud-native environments, and ensuring that data products remain trustworthy as the business and instrumentation evolve.

Because Intuit’s products operate at high volume across consumer and SMB segments, data engineering work often sits close to customer experience and financial outcomes. Teams rely on data engineers to build pipelines that are resilient to upstream changes, predictable in freshness, and consistent in definitions over time. Strong data engineers at Intuit are not just builders. They operate like owners who anticipate failure modes, measure reliability, and improve systems iteratively.

What Intuit data engineers typically work on

- Building batch and near-real-time pipelines that ingest data from product events, operational systems, and third-party sources into data lakes and warehouses.

- Designing schemas and dimensional models that power business intelligence, experimentation analysis, and machine learning features.

- Implementing automated data quality checks, monitoring, and alerting so downstream teams can trust core metrics.

- Partnering with data scientists, analysts, product managers, and engineers to translate business requirements into durable datasets.

- Driving operational excellence through root cause analysis, documentation, and engineering practices such as CI/CD, testing, and incident response.

Technical skill areas Intuit expects

Intuit data engineer interviews tend to emphasize practical command of the stack rather than niche trivia. Most teams expect strong proficiency in SQL and at least one programming language such as Python or Java, plus comfort reasoning about distributed processing and cloud architecture. Depending on the team, you may encounter Spark-based batch systems, streaming tools, and AWS services used for storage, compute, orchestration, and monitoring.

Culture: how Intuit evaluates data engineering success

Intuit’s culture places heavy weight on customer impact, accountability, and craft. In interviews, that shows up as an emphasis on how you make trade-offs and how you communicate them. Candidates who do well tend to:

- Prioritize correctness and trust, even when timelines are tight.

- Make assumptions explicit and surface uncertainty early.

- Design systems for long-term maintainability, not just initial delivery.

- Communicate clearly with cross-functional partners who depend on shared datasets.

If you are aiming to stand out, the most reliable path is to prepare in a way that mirrors the job: practice high-signal SQL and pipeline questions, rehearse craft demo storytelling, and refine behavioral answers to show ownership and clarity. If you want a guided prep track, Interview Query’s learning paths help you structure practice across SQL, data engineering, and system design, then you can pressure-test with mock interviews before the final loop.

How to Prepare for the Intuit Data Engineer Interview

Preparing for the Intuit data engineer interview means practicing the same way the job works: define the business need, design a reliable data system, then defend your trade-offs under scrutiny. Intuit’s loop rewards candidates who can reason about correctness, scale, and long-term maintainability, not just those who can solve one-off problems. The best prep plan builds repeatable muscle across SQL, data modeling, pipeline design, and stakeholder communication.

Build SQL fluency around definitions and edge cases

Intuit’s SQL rounds often feel straightforward until you hit ambiguity. That is where most candidates lose points. Practice turning vague prompts into precise definitions, then pressure-test your logic against missing data, duplicates, late events, and changing user attributes. If you want structured reps that mirror interview difficulty, the SQL interview learning path is the most efficient way to build speed and correctness at the same time.

Tip: Before you describe any SQL approach, state your metric definition and grain, then explain how you handle nulls and deduplication.

Prepare for the craft demo like a design review, not a homework assignment

The craft demonstration is where Intuit differentiates between candidates who can build pipelines and candidates who can own them. Your goal is to present a solution that is understandable, defensible, and production-minded. Treat it like an internal design review. Your walkthrough should clearly explain inputs, transformations, data model, reliability strategy, and how you would monitor and iterate after launch. If you want to simulate the format, practicing out loud with mock interviews is the closest proxy to the panel discussion, especially for handling live follow-ups without getting rattled.

Tip: Anchor decisions to constraints such as data freshness, cost, failure recovery, and consumer trust, not just technical elegance.

Refresh distributed systems intuition for Spark and large-scale pipelines

Intuit data engineers are expected to reason through performance and scalability in distributed processing systems. You do not need to memorize niche implementation details, but you should be ready to explain why certain choices improve reliability or cost. Prepare to discuss how you think about partitioning, skew, shuffle cost, late data, and retry behavior in orchestration. The data engineering learning path is a solid way to reinforce the pipeline and system design patterns that show up in interviews.

Tip: When discussing performance, describe what you would observe first, then what you would change, then how you would validate the improvement.
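
Skew is one of the performance topics this section lists. A common mitigation is key salting, sketched here in plain Python rather than Spark so it runs anywhere; the CRC32 partitioner and round-robin salt are illustrative assumptions, not how any particular engine assigns partitions. Spark 3's adaptive query execution can also split skewed join partitions automatically, so salting is one option among several.

```python
import zlib
from collections import Counter


def partition_of(key: str, num_partitions: int) -> int:
    # Deterministic hash partitioning, standing in for a shuffle's partitioner.
    return zlib.crc32(key.encode()) % num_partitions


def max_partition_load(keys: list[str], num_partitions: int, num_salts: int = 1) -> int:
    """Rows landing on the busiest partition when each key gets a round-robin salt."""
    loads = Counter(
        partition_of(f"{key}#{i % num_salts}", num_partitions) for i, key in enumerate(keys)
    )
    return max(loads.values())


def salted_counts(keys: list[str], num_salts: int) -> dict[str, int]:
    """Two-stage aggregation: count per salted key, then merge the salts per key."""
    partial = Counter(f"{key}#{i % num_salts}" for i, key in enumerate(keys))
    totals: Counter = Counter()
    for salted_key, count in partial.items():
        totals[salted_key.rsplit("#", 1)[0]] += count
    return dict(totals)
```

This mirrors the order the tip recommends: observe first (one partition carries most rows), change second (salt the hot key and aggregate in two stages), then validate (the busiest partition shrinks while per-key totals are unchanged).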

Practice data modeling that preserves history and prevents metric drift

Schema and modeling questions are less about the “correct” star schema and more about whether your design stays correct over time. Intuit cares about point-in-time accuracy because many datasets support finance-adjacent decisions, customer experiences, and AI features. Practice explaining how you track changes, avoid overwrites that break history, and version datasets so downstream teams do not get surprised. Working through schema design prompts in the Interview Query question library is a fast way to build confidence describing these trade-offs without overcomplicating the design.

Tip: Explain how your model prevents double counting and preserves historical truth, then describe how you would communicate changes to consumers.

Prepare behavioral stories that show ownership and cross-functional clarity

Intuit’s final rounds weigh judgment and collaboration heavily. Your stories should demonstrate that you can own a system end to end, communicate trade-offs to non-technical partners, and prioritize customer impact. Prepare three to four core stories that you can adapt: a reliability improvement, a conflict or disagreement resolved through data, an end-to-end project you owned, and a time you made a trade-off under pressure. If you want to pressure-test delivery, the AI interview tool helps you practice staying crisp under follow-up questions.

Tip: Lead with the decision you drove and the measurable outcome, then explain the engineering work that enabled it.

Average Intuit Data Engineer Salary

As of 2026, Intuit does not publish a standalone compensation ladder specifically for data engineers. Instead, data engineers are typically leveled within Intuit’s broader technical framework and aligned with software engineer or data specialist pay bands, depending on scope, ownership, and system complexity. Total compensation generally includes base salary, annual performance bonus, and Restricted Stock Units (RSUs) that vest over four years.

Based on reported data from 2025 through early 2026, estimated total compensation for data engineers and closely aligned technical roles in the United States is outlined below. These figures reflect typical base, stock, and bonus splits observed across Intuit engineering teams.

| Level | Estimated total compensation | Typical components (base / stock / bonus) |
|---|---|---|
| Software Engineer 1 (entry) | ~$158,000 | ~$137K / ~$12K / ~$9K |
| Software Engineer 2 | ~$209,000 | ~$158K / ~$37K / ~$13K |
| Senior Software Engineer | ~$248,000 | ~$184K / ~$42K / ~$22K |
| Staff Software Engineer | ~$378,000 | ~$223K / ~$118K / ~$37K |
| Senior Staff Software Engineer | ~$479,000+ | Varies; highs of ~$660K reported |

These bands are commonly used to benchmark Intuit data engineer compensation, particularly for roles focused on large-scale pipelines, platform ownership, or data infrastructure supporting AI and financial workflows.

According to Levels.fyi, compensation progression at Intuit is strongly tied to system ownership and cross-team impact rather than title alone. Data engineers who own shared datasets, platform services, or reliability-critical pipelines tend to progress more quickly into staff and senior staff ranges.

FAQs

How long does the Intuit data engineer interview process take?

Most candidates complete the Intuit data engineer interview process in 4 to 6 weeks. Timelines vary by team and scheduling availability, especially because the craft demonstration includes a take-home component and a live panel discussion. Delays most commonly happen between the craft demo and the technical deep-dive rounds.

How technical is the Intuit data engineer interview?

The interview is highly technical, but in a practical way. You are evaluated on SQL reasoning, data modeling, pipeline and system design, and production judgment rather than academic trivia. Intuit interviewers care more about how you define metrics, handle edge cases, and design for reliability than whether you recall obscure syntax.

Does Intuit require Spark or cloud experience for data engineers?

Most Intuit data engineer roles expect hands-on experience with distributed data processing and cloud-native systems, commonly Spark and AWS. However, interviews focus on how you reason about scale, cost, and reliability rather than testing specific service APIs. Candidates without Spark experience but with strong pipeline and systems intuition can still perform well if they explain trade-offs clearly.

What makes the craft demonstration different from other companies?

The craft demonstration is designed to simulate how Intuit data engineers actually work. Instead of live coding, you are asked to solve a realistic data engineering problem over a few days, then defend your decisions in a panel discussion. Interviewers focus on architecture, data modeling, data quality, and operational thinking, not on producing a perfect solution.

How should I prepare for the SQL portion of the interview?

Focus on definitions, edge cases, and correctness under ambiguity, not just query syntax. Practice cohort logic, joins, aggregations, and window functions while explaining assumptions out loud. Interview Query’s SQL interview learning path is well aligned with the level and style of SQL questions Intuit asks.

How important are behavioral interviews at Intuit?

Behavioral interviews are heavily weighted, especially at senior levels. Intuit looks for data engineers who take ownership, communicate clearly with cross-functional partners, and prioritize customer impact. Strong candidates use concrete examples that show how their decisions improved reliability, trust, or outcomes, not just technical execution.

Final Thoughts: Preparing for the Intuit Data Engineer Interview

The Intuit data engineer interview is designed to identify engineers who can own data systems end to end, not just write queries or build pipelines in isolation. Strong candidates show that they can reason through ambiguity, design for reliability, and communicate trade-offs in a way that product and business partners trust.

The most effective preparation mirrors how the role operates in practice. Build fluency in SQL and data logic, practice explaining schema and pipeline decisions clearly, and rehearse craft demo storytelling as if you were presenting to your future teammates. Pair technical practice with behavioral preparation that highlights ownership and customer impact.

If you want a structured way to prepare, start with Interview Query’s data engineering learning path to reinforce system thinking, then sharpen SQL with the SQL interview learning path. Pressure-test your communication and decision-making through mock interviews, or refine delivery using the AI interview tool.