Intuit Research Scientist Interview Guide

Introduction

An Intuit research scientist interview is designed for candidates who can bridge advanced AI research with real product impact. Unlike academic-only roles, Intuit’s research positions emphasize applied work that moves from theory to production, shaping how millions of consumers and small businesses make financial decisions.

Intuit operates at massive scale across products like TurboTax, QuickBooks, and Credit Karma. According to Intuit’s FY2024 annual report, the company serves more than 100 million customers globally, which means even small improvements in decision models, forecasting accuracy, or language understanding can have measurable downstream impact. As a result, research scientists are embedded close to product teams, working on areas such as causal inference, decision-making under uncertainty, and large language models that ship into live systems.

In this guide, we break down the Intuit research scientist interview process, including how the technical rounds are structured, what the craft demonstration evaluates, and how Intuit assesses research depth, engineering judgment, and alignment with its applied AI culture.

Intuit Research Scientist Interview Process

The Intuit research scientist interview process evaluates whether candidates can operate as applied researchers in a product-driven environment. Rather than testing theory in isolation, Intuit focuses on how you reason through complex technical problems, translate research into deployable systems, and communicate trade-offs to engineering and product partners.

Most candidates complete the process in three to five weeks and move through several stages that test coding fundamentals, machine learning depth, system design judgment, and research craftsmanship.

| Interview stage | What happens |
|---|---|
| Recruiter screen | Background, role fit, and expectations |
| Online assessment | Coding and problem-solving fundamentals |
| Technical interviews | Coding, system design, and ML or statistics |
| Craft demonstration | Research presentation or design exercise |
| Behavioral and hiring manager | Judgment, collaboration, and values alignment |

Recruiter Screen

The recruiter screen focuses on your background, seniority alignment, and interest in applied AI research at Intuit. Recruiters typically ask about prior research work, publication experience, and how closely your work aligns with areas such as decision-making under uncertainty, causal inference, or large language models.

This stage also checks communication clarity and expectations around scope, leveling, and day-to-day collaboration with product and engineering partners.

Online Assessment

Many candidates complete an online assessment early in the process. This stage usually includes coding questions centered on data structures, algorithms, and general problem-solving ability. The goal is to confirm baseline computer science fundamentals before moving into deeper research discussions.

To prepare, candidates commonly drill fundamentals with Interview Query’s SQL interview questions to reinforce clean query logic and structured reasoning under time pressure.

Technical Interviews

Technical interviews are typically conducted over phone or video and span multiple focus areas.

Coding interviews emphasize data structures, algorithms, and core computer science concepts. Even for a research role, Intuit expects strong fundamentals, especially at senior and staff levels. The most useful preparation is practicing core patterns and explaining trade-offs out loud; many candidates warm up using Interview Query’s SQL interview questions and adjacent technical prompts.

System design interviews assess your ability to design scalable, production-ready systems that could support AI-driven features. These discussions focus on architecture, data flow, APIs, and trade-offs between performance, reliability, and interpretability. Interviewers care about how you reason through constraints, such as latency, observability, model updates, and safety requirements, not just whether you can name components.

Machine learning and statistics interviews typically go deep on modeling choices, evaluation, and failure modes. Topics often include deep learning, probabilistic modeling, causal inference, reinforcement learning, and how to validate models under distribution shift. Candidates often rehearse explanation depth using Interview Query’s machine learning interview questions.

Craft Demonstration

The craft demonstration is a defining part of the Intuit research scientist interview. Candidates are usually asked to present a prior research project, prototype, or design exercise that demonstrates both depth and real-world applicability. In some cases, you may get a prompt and work through a research or design problem collaboratively with a panel.

Interviewers evaluate how you frame the problem, justify methodological choices, and think about production constraints such as data availability, scalability, interpretability, monitoring, and downstream user impact.

Behavioral and Hiring Manager Interviews

The final stage focuses on collaboration, leadership, and values alignment. You may speak with a hiring manager or cross-functional partners about decision-making under ambiguity, mentorship, and how you balance long-term research goals with near-term product needs.

Candidates often pressure-test these conversations through mock interviews, or practice live delivery with the AI interview, to refine clarity without sounding scripted.

Intuit Research Scientist Interview Questions

Intuit research scientist interview rounds are designed to evaluate whether you can develop rigorous AI research and translate it into systems that ship into real financial products. Interviewers assess theoretical depth, algorithmic reasoning, applied modeling judgment, and how clearly you communicate complex ideas under uncertainty. Strong candidates demonstrate not just correctness, but discipline in assumptions, evaluation, and real-world constraints.

Because the role spans research, engineering, and product integration, interview questions often cut across coding, machine learning theory, statistics, and system-level design. Candidates typically prepare by reinforcing fundamentals through Interview Query’s modeling and machine learning resources and practicing structured explanation in live interview settings.

Coding & Technical Questions

Coding questions for Intuit research scientist roles focus on algorithmic reasoning and probabilistic thinking that mirror challenges in large-scale AI systems. Interviewers care less about language syntax and more about how you reason through constraints, correctness, and edge cases.

How would you select a random element from a data stream using only O(1) space?

This question evaluates probabilistic reasoning under memory constraints. Strong answers explain reservoir sampling, including why each element has equal probability of selection despite the stream length being unknown. Interviewers may probe how this behaves for very large or infinite streams.

Tip: Clearly explain why the probability remains uniform, not just how the algorithm works.
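The single-element case of reservoir sampling can be sketched in a few lines. This is a minimal illustration, not a reference implementation; the function name `sample_one` and the optional `rng` parameter are assumptions introduced here.

```python
import random
from typing import Iterable, Optional, TypeVar

T = TypeVar("T")


def sample_one(stream: Iterable[T], rng: Optional[random.Random] = None) -> Optional[T]:
    """Return one uniformly random element of a stream using O(1) extra space.

    Returns None for an empty stream.
    """
    rng = rng or random.Random()
    chosen: Optional[T] = None
    for count, item in enumerate(stream, start=1):
        # Keep the new item with probability 1/count; otherwise keep the old choice.
        if rng.randrange(count) == 0:
            chosen = item
    return chosen
```

The uniformity argument is short: the i-th item is selected with probability 1/i and then survives each later step j with probability (1 - 1/j). The product of those survival terms from j = i + 1 to n telescopes to i/n, so every item ends up chosen with probability 1/n regardless of when the stream stops.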

How would you detect anomalies in a large-scale financial transaction dataset?

This question evaluates applied modeling judgment in noisy, real-world data. Interviewers look for how you choose between statistical, probabilistic, and learning-based approaches, and how you handle false positives in high-stakes environments.

Tip: Explicitly discuss evaluation metrics and operational trade-offs.
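As one possible statistical baseline to anchor that discussion, the sketch below scores transaction amounts by their distance from the median in units of the median absolute deviation (MAD), which is less distorted by the outliers themselves than a mean and standard deviation. The function names and the 3.5 cutoff are illustrative assumptions; a real system would tune the threshold against review capacity and the cost of false positives.

```python
from statistics import median
from typing import List, Sequence


def robust_z_scores(amounts: Sequence[float]) -> List[float]:
    """Score each value by its absolute distance from the median, in scaled MAD units."""
    center = median(amounts)
    mad = median([abs(a - center) for a in amounts])
    if mad == 0:
        # Degenerate case: at least half the values equal the median.
        return [0.0 if a == center else float("inf") for a in amounts]
    # 1.4826 rescales MAD so it is comparable to a standard deviation for normal data.
    scale = 1.4826 * mad
    return [abs(a - center) / scale for a in amounts]


def flag_anomalies(amounts: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return the indices whose robust score exceeds the (assumed) threshold."""
    return [i for i, z in enumerate(robust_z_scores(amounts)) if z > threshold]
```

A baseline like this is cheap to explain and audit, which makes it a useful comparison point before arguing for a learned model.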

-

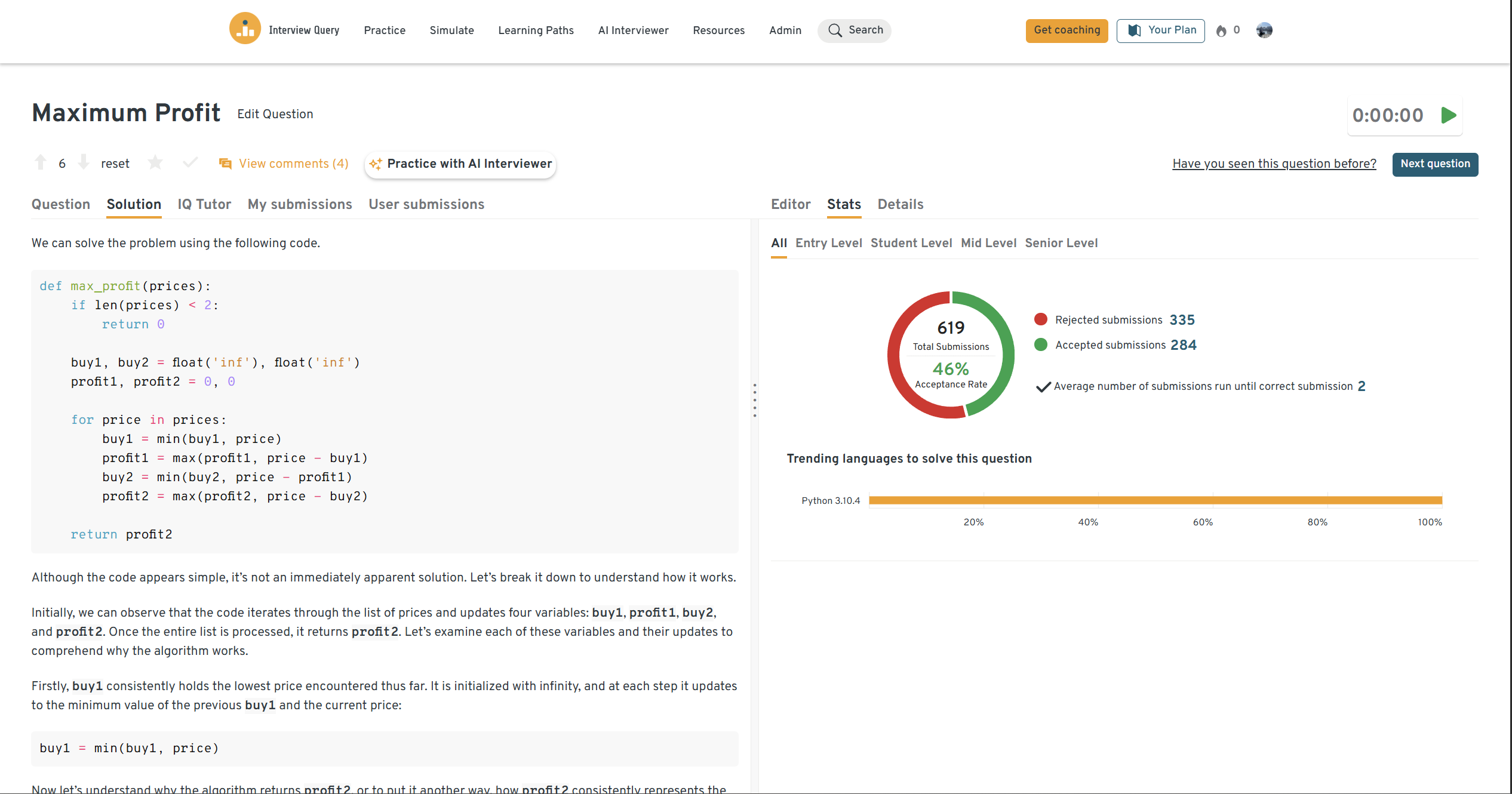

This tests understanding of stochastic processes and state transitions. Strong candidates describe constructing a transition matrix, representing the state vector, and using matrix exponentiation to compute probabilities efficiently for large n.

Tip: Focus on state representation and scalability, not just the final formula.
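The transition-matrix approach described above can be sketched as follows. The original prompt is not shown here, so the two-state chain in the usage note is purely hypothetical, as are the helper names `mat_mul`, `mat_pow`, and `distribution_after`.

```python
from typing import List

Matrix = List[List[float]]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices given as lists of rows."""
    inner, cols = len(b), len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def mat_pow(p: Matrix, n: int) -> Matrix:
    """Raise a square matrix to the n-th power with O(log n) multiplications."""
    size = len(p)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    base = [row[:] for row in p]
    while n > 0:
        if n & 1:  # this bit of n is set, so fold the current power into the result
            result = mat_mul(result, base)
        base = mat_mul(base, base)
        n >>= 1
    return result


def distribution_after(start: List[float], p: Matrix, n: int) -> List[float]:
    """Return the state distribution after n steps, given a row-vector start state."""
    return mat_mul([start], mat_pow(p, n))[0]
```

For example, with a hypothetical chain `p = [[0.9, 0.1], [0.5, 0.5]]` and a start state of `[1.0, 0.0]`, calling `distribution_after([1.0, 0.0], p, 2)` multiplies the start vector by the squared matrix. Repeated squaring is what keeps the cost logarithmic in n rather than linear.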

-

This problem tests dynamic programming intuition and optimization under constraints. Strong answers define clear state variables and explain how each transition maintains optimality over time.

Tip: Walk through the state updates verbally to show you understand why the solution works.

System & Machine Learning Design Questions

System and machine learning design questions evaluate whether you can translate research ideas into scalable, production-ready systems. Intuit interviewers focus on trade-offs across accuracy, latency, interpretability, regulatory constraints, and user trust rather than abstract architecture alone.

How would you design an end-to-end pipeline for training and deploying a large-scale machine learning model?

Interviewers use this to assess systems thinking across data ingestion, training, validation, deployment, and monitoring. Strong candidates describe versioned datasets, reproducible training, evaluation metrics, rollback strategies, and drift detection.

Tip: Frame the pipeline as iterative, not linear, and highlight where failures are most likely.

How would you design a fraud detection system for high-volume financial transactions?

This question tests applied ML judgment under uncertainty. Strong answers balance recall, precision, latency, and human review, while considering adversarial behavior and delayed ground truth.

Tip: Separate offline model evaluation from online decision thresholds.
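One way to make that separation concrete is to treat the model's scores as fixed and choose the operating threshold from business costs. The sketch below is illustrative only: the function names and the per-error costs `cost_fp` and `cost_fn` are assumptions, and real costs would come from review workload, chargebacks, and customer harm.

```python
from typing import Sequence, Tuple


def total_cost(
    scores: Sequence[float],
    labels: Sequence[int],
    threshold: float,
    cost_fp: float,
    cost_fn: float,
) -> float:
    """Sum the cost of false positives and false negatives at a given threshold."""
    cost = 0.0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if flagged and label == 0:
            cost += cost_fp  # legitimate transaction blocked or sent to review
        elif not flagged and label == 1:
            cost += cost_fn  # fraud that slipped through
    return cost


def pick_threshold(
    scores: Sequence[float],
    labels: Sequence[int],
    cost_fp: float,
    cost_fn: float,
) -> Tuple[float, float]:
    """Return the (threshold, cost) pair that minimizes total cost on labeled data."""
    # Every distinct score is a candidate; infinity means "flag nothing".
    candidates = sorted(set(scores)) + [float("inf")]
    best = min(candidates, key=lambda t: total_cost(scores, labels, t, cost_fp, cost_fn))
    return best, total_cost(scores, labels, best, cost_fp, cost_fn)
```

The same scored validation set yields different thresholds as the cost ratio changes, which is the point: model quality is evaluated offline once, while the decision threshold is an operational setting that can be revisited without retraining.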

How would you evaluate whether an LLM-based feature should be used in a customer-facing financial workflow?

Interviewers assess judgment around reliability, hallucination risk, explainability, and user impact. Strong candidates discuss guardrails, fallback mechanisms, and confidence calibration.

Tip: Anchor decisions in user risk and trust, not just model capability.
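Confidence calibration can be made measurable with a metric such as expected calibration error (ECE), which compares stated confidence with observed accuracy across confidence bins. The sketch below assumes you already have a per-response confidence in [0, 1] and a correctness label from human or automated grading; how those are obtained for an LLM feature is outside this example, and the function name and bin count are illustrative choices.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Weighted average gap between mean confidence and accuracy per equal-width bin."""
    total = len(confidences)
    if total == 0:
        return 0.0
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 falls into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(conf for conf, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece
```

A low ECE on a representative evaluation set is a precondition for using confidence to route uncertain cases to a fallback or human review; it does not by itself establish that the feature is safe to ship.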

How would you monitor and evaluate a deployed ML model with delayed or noisy labels?

This evaluates realism in production ML assessment. Strong candidates propose proxy metrics, human-in-the-loop review, drift detection, and early warning signals rather than relying on offline accuracy alone.

Tip: Emphasize robustness and monitoring over perfect measurement.
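When labels arrive late, input or score drift is one of the few signals available immediately. A minimal sketch of one common drift statistic, the population stability index (PSI), is shown below; the function name, equal-width binning, and the `eps` floor for empty bins are assumptions made for illustration, and alerting thresholds should be validated per feature rather than taken from a rule of thumb.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare a live sample against a reference sample using equal-width bins."""
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if the reference sample has no spread.
    width = (hi - lo) / n_bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            # Values outside the reference range are clipped into the edge bins.
            idx = max(0, min(int((v - lo) / width), n_bins - 1))
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref, live = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(ref, live))
```

Identical samples give a PSI of zero, and the value grows as the live distribution moves away from the reference. Tracking it per feature and per segment gives an early warning well before delayed labels confirm a performance drop.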

Behavioral & Research Judgment Questions

Behavioral interviews for an Intuit research scientist role focus on ownership, collaboration, and judgment in applied research environments. Interviewers evaluate how you operate when research is ambiguous, timelines are long, and outcomes must eventually translate into product impact. Strong candidates show rigor in how they make decisions, communicate uncertainty, and align stakeholders without overclaiming.

Tell me about a time when you exceeded expectations on a research or technical project.

This question evaluates initiative and ownership. Interviewers want to see how you identify high-leverage improvements beyond the original scope and how you validate that the extra work mattered.

Tip: Anchor the story on an outcome you unlocked, not the extra effort you spent.

Sample answer: I was asked to build a baseline forecasting model for a product metric, with the expectation that it would be used for a weekly planning dashboard. After validating the baseline, I noticed the largest errors clustered around specific cohorts and time windows, so I ran targeted error analysis and added a simple change-point and seasonality component. I also built a lightweight backtesting harness to compare models consistently, then shared results with product and engineering so we could align on deployment constraints early. The updated model reduced forecast error by a meaningful margin, and it changed how the team planned experiment ramp-ups during peak periods. The work exceeded the initial request, but it directly improved decision quality and prevented repeated rework later.

Describe a challenging research or data project and the obstacles you faced.

This evaluates resilience and problem-solving under uncertainty. Interviewers listen for how you diagnose root causes, handle messy data, and keep rigor when constraints are real.

Tip: Spend more time on diagnosis and trade-offs than on tooling details.

Sample answer: I led analysis on a model that appeared to perform well offline but degraded in online performance after deployment. The main obstacle was that labels were delayed and partially missing, which made it hard to attribute whether the issue was drift, logging gaps, or a true modeling failure. I started by auditing the data pipeline and found a subtle schema change that altered feature distributions for a high-traffic segment. I then segmented performance by cohort and traffic source to isolate where the degradation was concentrated, and I proposed a mitigation plan with short-term guardrails and a longer-term retraining strategy. We shipped a conservative threshold adjustment and monitoring alerts first, then retrained with corrected features. The result was a stable recovery in online performance, and the team adopted the monitoring checklist as a standard release gate.

Why do you want to work at Intuit, and why in a research-focused role?

This tests motivation and alignment. Strong answers connect Intuit’s mission to your research interests and explain why applied impact matters to you.

Tip: Reference a concrete research area you want to build for real users, not a generic “AI at scale” statement.

Sample answer: I want to work at Intuit because the company operates in domains where AI decisions materially affect real financial outcomes, so rigor and user trust are not optional. My research interests sit in decision-making under uncertainty and causal reasoning, and I’m most motivated when those methods translate into measurable improvements in customer outcomes. In a research-focused role, I can help shape approaches that go beyond predictive accuracy, such as building models that are robust to distribution shift and that support human decision-makers. I also like environments where research does not stop at a paper and the work is instead stress-tested in production with real constraints like latency, interpretability, and safety. Intuit’s product surface area makes it possible to iterate quickly while still doing deep work. That combination is what I’m optimizing for.

What would your current manager say are your strengths and areas for growth?

This evaluates self-awareness and maturity. Interviewers want a realistic view of strengths tied to outcomes and a growth area you actively manage.

Tip: Make the growth area a controlled trade-off, then explain what you do to mitigate it.

Sample answer: My manager would say my strength is disciplined problem framing, especially when requirements are ambiguous and multiple stakeholders have competing interpretations of success. I’m methodical about defining the objective, choosing evaluation metrics, and clarifying assumptions early, which helps prevent months of work from drifting. They would also point out that I communicate trade-offs clearly, which builds trust with engineering and product partners when constraints force compromises. An area for growth is that I can sometimes over-invest in validation before I ship a first version, especially when stakes are high. I’ve addressed that by time-boxing exploration, agreeing on minimum-viable evaluation standards up front, and using staged rollouts with guardrails. That allows me to maintain rigor while still moving at product speed.

-

This tests whether you can adapt communication without diluting rigor. Intuit research scientist work often requires aligning researchers, engineers, and non-technical partners around the same decision.

Tip: Focus on how you changed the structure of communication, not just the wording.

Sample answer: I once presented a causal inference approach to a cross-functional group, but the discussion got stuck because different stakeholders interpreted “impact” differently. Engineers wanted implementation detail, product wanted a directional recommendation, and leadership wanted confidence bounds and risk. I reset the conversation by reorganizing the narrative: first the decision we needed to make, then the assumptions, then the evidence, then what would change my mind. I also created a one-page visual that separated what we knew from what we inferred, and I explicitly labeled uncertainty and data gaps. After that, the group aligned on a pilot scope and success criteria, and the conversation moved from debating methods to agreeing on next steps. The key change was clarifying decision context and uncertainty, not simplifying the technical content.

How do you resolve conflict when collaborating with strong opinions or competing priorities?

This evaluates how you handle disagreement without damaging trust. Interviewers want to see evidence-based alignment rather than escalation or avoidance.

Tip: Show how you protect the relationship while still advocating for the right technical call.

Sample answer: In one project, two teams disagreed on whether to optimize for precision or recall in a high-stakes detection model, and the debate became polarized. I reframed the conflict around user impact by mapping each error type to downstream cost, operational workload, and customer harm. Then I proposed a staged approach: set conservative thresholds for initial rollout, add human review for high-uncertainty cases, and run an online evaluation to estimate the true cost curve before committing. This gave each stakeholder a clear place where their concern was addressed and converted the debate into measurable trade-offs. We reached agreement without forcing consensus, because the decision was tied to outcomes and monitoring. The relationship improved because everyone felt heard and the plan reduced risk.

How do you prioritize multiple research deadlines or parallel experiments?

This assesses prioritization discipline in exploratory work. Intuit research scientist roles reward candidates who can choose what not to do and justify it clearly.

Tip: Use a simple prioritization rule, then show how you applied it.

Sample answer: I prioritize work based on expected impact, uncertainty, and reversibility, then I factor in time sensitivity like launch windows or dependency chains. When multiple experiments compete, I start by defining the decision each experiment informs and whether we can make that decision with smaller, faster tests. I also look for shared infrastructure work that de-risks multiple paths, such as improved evaluation harnesses or better data labeling. If priorities conflict across stakeholders, I document the trade-offs explicitly and propose a sequencing plan that preserves optionality. In a recent project, this approach helped us ship a smaller but high-confidence improvement first, then run a deeper research track in parallel without blocking product timelines. The main principle is to maximize learning per unit time while keeping the highest-impact path unblocked.

How To Prepare For An Intuit Research Scientist Interview

Intuit research scientist interviews reward candidates who can combine research depth with production judgment. Preparation should mirror what the loop actually tests: disciplined problem framing, strong modeling fundamentals, clear reasoning in coding rounds, and the ability to communicate trade-offs to cross-functional partners. The tips below focus on building those exact skills.

Frame research problems as decisions under uncertainty

Intuit research scientist interviews reward candidates who can start with the decision that must be made, then define what evidence would change that decision. In practice, that means you should be explicit about the objective, constraints, and what “good” looks like before you discuss models. When you practice, state the decision first, then walk through hypotheses, data needs, and evaluation.

To rehearse structured explanation under follow-up pressure, candidates often use Interview Query’s AI interview.

Rebuild fundamentals in modeling, evaluation, and failure modes

Intuit expects depth in core ML ideas, but interviews often hinge on judgment: why this objective, why this baseline, how you know it is not leaking, and what breaks in production. Your prep should prioritize probabilistic reasoning, causal inference intuition, evaluation under delayed labels, distribution shift, and calibration, because these topics come up naturally when discussing fintech use cases.

A structured way to cover breadth without getting scattered is to work through Interview Query’s modeling and machine learning interview learning path, then drill targeted weak spots using the machine learning interview questions.

Practice coding for clarity and constraints, not trivia

Even research-heavy loops test coding fundamentals. The goal is not cleverness but correctness, readability, and sound reasoning through edge cases. Focus on explaining invariants, complexity, and failure cases out loud, because that is what interviewers use to gauge whether you can build systems that survive production constraints.

For warm-up, candidates often use Interview Query’s SQL interview questions as a structured way to practice clean logic and step-by-step explanation.

Prepare a craft demo story that is end-to-end and production-aware

The craft demonstration is often where Intuit distinguishes strong researchers from researchers who ship. Pick one or two projects you can present end to end: problem framing, baselines, data decisions, modeling choices, evaluation, error analysis, and what you would do differently with more time. You should also be ready to discuss deployment considerations such as monitoring, drift, guardrails, and rollback.

Practicing your narrative in a realistic setting helps you avoid overexplaining and keeps the story decision-led. Many candidates rehearse through mock interviews.

Build behavioral answers around influence and technical judgment

Behavioral rounds for an Intuit research scientist role often probe how you influence without authority, resolve disagreement, and balance rigor with speed. Your examples should emphasize how you made trade-offs explicit, brought stakeholders along, and protected quality when data or requirements were messy. Keep the story anchored on the decision that changed and the outcome that followed.

If you want a practice loop that simulates follow-ups, Interview Query’s AI interview helps you iterate on clarity and structure without memorizing scripts.

Role Overview And Culture At Intuit

An Intuit research scientist works at the intersection of advanced AI research and financial product development. The role focuses on applied research that informs real systems, rather than standalone theoretical exploration.

Research scientists partner closely with software engineers, product managers, and designers. They help define problems, prototype solutions, and guide technical direction while accounting for constraints such as latency, interpretability, regulatory risk, and user trust. Success depends as much on judgment and communication as on technical depth.

Culturally, Intuit emphasizes applied impact and customer-centric decision-making. Research teams are encouraged to publish and engage with the academic community, but work is ultimately evaluated based on whether it improves outcomes across products like TurboTax, QuickBooks, and Credit Karma.

The environment is often described as intrapreneurial. Researchers are expected to take ownership, mentor others, and help translate exploratory work into scalable, production-ready systems. Clear thinking, disciplined experimentation, and credibility with cross-functional partners are core expectations.

FAQs

How long does the Intuit research scientist interview process take?

Most candidates complete the Intuit research scientist interview process in approximately three to five weeks. Timelines vary based on scheduling availability, the number of technical rounds required, and the scope of the craft demonstration or research presentation.

How research-focused is the Intuit interview compared to engineering roles?

The interview places greater emphasis on research judgment, modeling depth, and evaluation rigor than a typical software engineering loop. That said, strong computer science fundamentals are still expected, particularly in coding and system design rounds.

Does Intuit expect publications for research scientist roles?

Publications are common, especially for senior, staff, and principal roles, but they are not a strict requirement. Interviewers also value applied impact, technical leadership, and the ability to translate research into production systems.

What AI domains are most relevant for Intuit research scientist interviews?

Common focus areas include probabilistic modeling, causal inference, decision-making under uncertainty, forecasting, reinforcement learning, and large language models. Candidates are often evaluated on how these techniques apply to real financial decision-making problems.

How important is the craft demonstration?

The craft demonstration is a core part of the interview process. It evaluates how well you frame problems, justify modeling decisions, and reason about production constraints such as monitoring, drift, and user risk. Strong performance here often differentiates candidates at senior levels.

What skills matter most for succeeding as an Intuit research scientist?

Success depends on a balance of technical depth, disciplined problem framing, and clear communication. Interviewers look for candidates who can reason under uncertainty, collaborate across teams, and make trade-offs explicit rather than optimizing for theory alone.

Prepare Like A Research Scientist Shipping Real Impact

The Intuit research scientist interview is designed to identify candidates who can turn advanced AI research into reliable, high-impact systems. Interviewers evaluate not only technical correctness, but also how you diagnose problems, prioritize research directions, and communicate uncertainty in environments where decisions affect real users.

The strongest preparation mirrors that reality. Reinforce modeling fundamentals through Interview Query’s machine learning interview questions, practice structured explanation using mock interviews, and refine delivery with the AI interview.

With disciplined preparation and a clear focus on applied impact, you can approach the Intuit research scientist interview with confidence and clarity.