Intuit Machine Learning Engineer Interview Guide

Introduction

Preparing for an Intuit machine learning engineer interview? This guide breaks down the full process, key technical areas, and tips to help you stand out in one of the most competitive AI roles in fintech. With applications spanning TurboTax, QuickBooks, and Credit Karma, machine learning engineers at Intuit build production-grade AI systems that serve millions of users daily.

In this guide, we cover the full interview loop, from the unique “craft demo” assignment to advanced ML system design and behavioral rounds. We’ll also share the kinds of coding, ML, and system architecture questions you can expect, plus preparation strategies aligned with how Intuit evaluates impact, scalability, and applied ML thinking.

Intuit Machine Learning Engineer Interview Process

The Intuit machine learning engineer interview process is designed to test whether candidates can deliver reliable, production-ready ML systems—not just models in notebooks. Across 4 to 6 stages, the process evaluates technical depth, product alignment, and system-level thinking.

Most candidates complete the process over 4 to 6 weeks. Expect a mix of coding assessments, architectural discussions, and a take-home project that simulates real-world ML engineering work.

| Interview Stage | What to Expect |
|---|---|
| Recruiter Phone Screen | A 25–35 minute call covering your resume, interest in Intuit, and role alignment. |
| Technical Screen / Coding Assessment | A 60–90 minute session focused on data structures, algorithms, or a take-home ML coding task. |
| Craft Demo (Take-Home Assignment) | A multi-day project where you build an ML model or pipeline and present trade-offs in a system design review. |
| On-site / Virtual Interview Loop | 4–6 rounds covering ML system design, algorithm implementation, coding, and behavioral questions. |

Recruiter Screen

The process starts with a recruiter screen, typically lasting 25 to 35 minutes. You’ll discuss your background, motivation for joining Intuit, and alignment with the role’s responsibilities—especially around deploying ML in production environments. Recruiters also outline the rest of the process and timelines.

Tip: Emphasize your experience in taking ML models from research to deployment, not just prototyping.

Technical Screen and Coding Assessment

This stage often takes the form of a 60–90 minute live coding interview or a take-home assignment. You might be asked to write Python code for data processing, implement algorithmic logic, or solve system-level problems in ML pipelines.

Intuit typically tests:

- Data structures and algorithms

- Basic ML concepts (e.g., feature engineering, model evaluation)

- ETL pipelines and batch processing

- Code readability and modularity

Tip: Review data handling patterns in Python and practice working with structured data using libraries like pandas or PySpark. You can find real practice problems in the Interview Query challenges section.

Craft Demo Presentation

The “craft demo” is a unique and critical part of the Intuit machine learning engineer interview. You’ll receive a take-home assignment that simulates a real-world problem—such as designing an ML pipeline, building a model from scratch, or solving a customer-impacting data problem.

You’ll then present your solution, including:

- High-level and low-level design

- Trade-offs between speed, accuracy, and scalability

- Monitoring and deployment considerations

Tip: Treat this like a live design review. Anchor your decisions to real business metrics and product reliability, not just model accuracy. You can rehearse your storytelling through a mock interview.

Final On-site or Virtual Loop

This round includes 4 to 6 interviews that dive deeper into your ML engineering capabilities. You’ll meet with cross-functional partners—engineers, data scientists, and hiring managers—across a mix of technical and behavioral formats.

Common areas assessed:

- ML System Design: Scalability, orchestration, and latency considerations.

- ML Concepts & Implementation: Model selection, evaluation, and implementing algorithms from scratch.

- Coding: Data manipulation and problem-solving in Python or Java.

- Behavioral Fit: Collaboration, ownership, and learning mindset.

Tip: Frame your system design choices around real production constraints, such as model refresh cycles, fault tolerance, and latency. You can explore how top engineers approach these problems using the Interview Query learning paths.

Intuit Machine Learning Engineer Interview Questions

The Intuit machine learning engineer interview evaluates your ability to deploy reliable, scalable ML systems that create tangible business impact. Questions cover modeling fundamentals, data pipeline design, coding, and cross-functional collaboration. Interviewers assess how you reason through trade-offs, communicate decisions, and solve real-world problems—not just how well you know algorithms.

Explore the machine learning learning path and data engineering interview path to sharpen your technical foundation before diving into real questions.

ML Fundamentals and Modeling Questions

Machine learning fundamentals questions at Intuit focus on how you make modeling decisions under real-world constraints. Interviewers are less interested in textbook definitions and more interested in how you evaluate models, handle changing data, and balance accuracy with stability, interpretability, and customer trust in production systems.

These questions often come up in the technical screen and system-focused onsite rounds, where Intuit evaluates whether you can justify model choices that will operate reliably across products like TurboTax, QuickBooks, and Credit Karma.

How would you handle concept drift in a model used for personalized tax advice?

This question tests whether you understand that user behavior and financial signals change over time, especially across tax seasons, income shifts, or regulatory updates. Intuit wants to see how you detect drift early and respond without destabilizing production systems.

Tip: Talk about monitoring feature distributions and model performance, then explain retraining or recalibration strategies that avoid reacting to short-term noise.
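One common way to monitor a feature distribution is the population stability index (PSI), which compares a recent sample against a training-time baseline. The sketch below is a minimal standard-library illustration, not a description of Intuit's tooling; the function name, the ten equal-width bins, and the `eps` floor are assumptions made for the example.

```python
import math


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; fall back to 1.0 for a constant feature.
    width = (hi - lo) / n_bins or 1.0

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp so values outside the baseline range land in the edge bins.
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # Floor each fraction at eps so the log term is always defined.
        return [max(c / len(values), eps) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(1000)]   # hypothetical training-time values
shifted = [v + 3 for v in baseline]         # hypothetical post-change values
psi_same = population_stability_index(baseline, baseline)
psi_shift = population_stability_index(baseline, shifted)
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a meaningful shift, but those cutoffs are conventions rather than guarantees. In an interview, it is worth saying that you would smooth the signal over a window before triggering retraining, so that short-term noise does not cause churn.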

What trade-offs would you consider when choosing between a tree-based model and a deep learning model?

Interviewers use this question to evaluate your model selection judgment. At Intuit, simpler models are often preferred when explainability, latency, or regulatory clarity matters more than marginal accuracy gains.

Tip: Anchor your answer in interpretability, operational cost, latency, and ease of debugging, not just performance metrics.

How would you evaluate a multi-class classification model where some classes are more business-critical than others?

This question checks whether you align model evaluation with business impact. In financial products, certain errors can be far more costly or risky than others.

Tip: Mention class-weighted metrics, custom loss functions, and threshold tuning tied to downstream decisions rather than overall accuracy.
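To make the point about class-weighted metrics concrete, here is a small sketch that computes per-class recall and then a weighted average where business-critical classes count more. The class names and weights are hypothetical, and weighted recall is only one of several reasonable choices (a cost matrix over specific error types is another).

```python
from collections import Counter


def per_class_recall(y_true, y_pred):
    """Recall for each class that appears in y_true."""
    total = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {c: correct[c] / total[c] for c in total}


def weighted_recall(y_true, y_pred, class_weights):
    """Average of per-class recall, weighted by business importance."""
    recalls = per_class_recall(y_true, y_pred)
    weight_sum = sum(class_weights[c] for c in recalls)
    return sum(class_weights[c] * r for c, r in recalls.items()) / weight_sum


# Hypothetical labels: missing "fraud" is assumed to be the costliest error.
y_true = ["fraud", "fraud", "ok", "ok", "ok", "ok", "review", "review"]
y_pred = ["fraud", "ok", "ok", "ok", "ok", "ok", "review", "review"]
weights = {"fraud": 5, "ok": 1, "review": 2}

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
score = weighted_recall(y_true, y_pred, weights)
```

On this toy data the overall accuracy looks healthy while the weighted score is noticeably lower, because half of the high-weight class is missed. That gap is the argument for not reporting accuracy alone.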

How would you validate a clustering model without labeled data?

This question evaluates how you assess unsupervised models used for segmentation or personalization. Interviewers want to see that you connect clustering quality to practical usefulness.

Tip: Go beyond internal metrics and explain how you would validate clusters through downstream experiments or product impact.
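Before moving on to downstream validation, it helps to be able to compute at least one internal metric by hand. The sketch below is a from-scratch silhouette score in NumPy, written for small datasets (it builds the full pairwise distance matrix) and assuming at least two clusters; the function name and the toy data are illustrative only.

```python
import numpy as np


def silhouette_score(X, labels):
    """Mean silhouette coefficient using Euclidean distance (needs >= 2 clusters)."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    # Full pairwise distance matrix: fine for a sketch, O(n^2) memory at scale.
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    clusters = np.unique(labels)
    scores = []
    for i in range(len(X)):
        same = labels == labels[i]
        same[i] = False  # exclude the point itself from its own cluster
        if not same.any():
            scores.append(0.0)  # singleton clusters score 0 by convention
            continue
        a = dists[i, same].mean()  # mean distance to own cluster
        b = min(dists[i, labels == c].mean() for c in clusters if c != labels[i])
        scores.append((b - a) / max(a, b))
    return float(np.mean(scores))


X = [[0, 0], [0, 1], [10, 10], [10, 11]]
good = silhouette_score(X, [0, 0, 1, 1])  # labels match the two visible groups
bad = silhouette_score(X, [0, 1, 0, 1])   # labels cut across the groups
```

A high silhouette only says the clusters are geometrically tight and separated. Whether the segments are useful still has to be shown through downstream experiments, as the tip above suggests.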

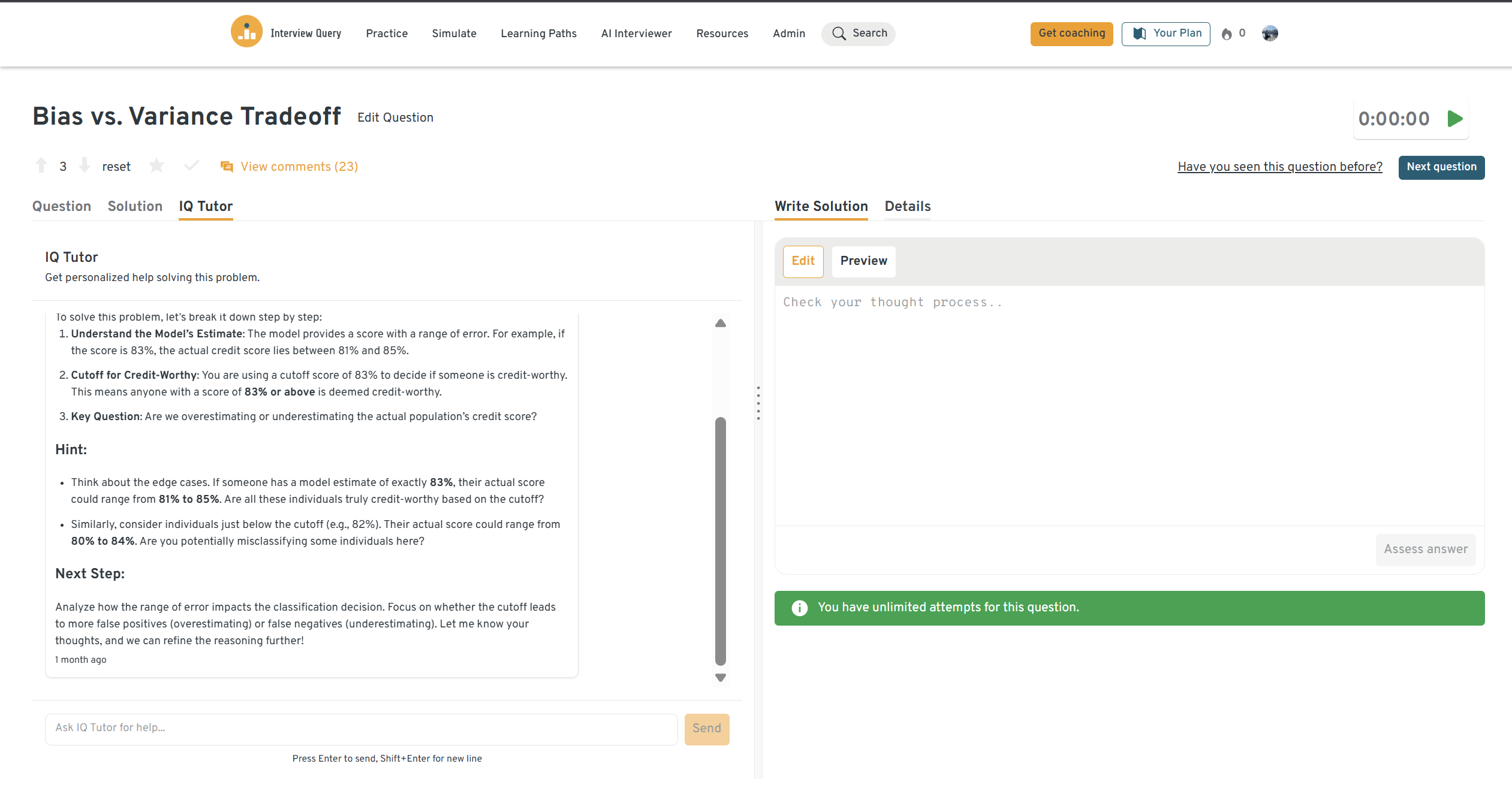

How would you explain the bias-variance tradeoff in the context of a production model?

Intuit asks this to assess whether you understand how model complexity affects reliability at scale. High-variance models can cause unpredictable behavior in live financial workflows.

Tip: Use a concrete example like fraud detection or income estimation, where stability and trust matter more than squeezing out small accuracy gains.

To strengthen your fundamentals before interviews, work through the modeling and machine learning learning path and practice applied scenarios in the Interview Query challenges.

ML System Design and Production Questions

System design questions at Intuit focus on your ability to build machine learning systems that scale safely, serve millions of users reliably, and adapt over time. These questions test whether you can reason through infrastructure trade-offs, architect ML pipelines, and deliver business impact through experimentation and iteration.

You’ll be asked to walk through systems that involve real-time inference, retraining strategies, and decision-making under constraints like latency, safety, and drift.

-

This question tests your ability to build a secure, distributed ML system with strict reliability and privacy requirements.

Tip: Emphasize latency, auditability, safe fallback mechanisms, and how you’d monitor both false accepts and rejects.

How would you build a scalable vector search system for retrieving similar user-generated items?

This question evaluates your understanding of large-scale retrieval. At Intuit, this could apply to matching user financial profiles or surfacing relevant help content.

Tip: Talk about embedding generation, approximate nearest neighbor (ANN) indexing, latency, and freshness.
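When discussing ANN indexing, it can help to start from the exact search that an ANN index approximates. The following sketch is a brute-force cosine-similarity top-k in NumPy; it is a baseline for reasoning about recall and latency, not a scalable design, and the function name and toy embeddings are assumptions for illustration.

```python
import numpy as np


def top_k_cosine(query, item_embeddings, k=3):
    """Exact top-k items by cosine similarity; returns (index, score) pairs."""
    items = np.asarray(item_embeddings, dtype=float)
    q = np.asarray(query, dtype=float)
    # Normalize once so a dot product equals cosine similarity.
    items_norm = items / np.linalg.norm(items, axis=1, keepdims=True)
    q_norm = q / np.linalg.norm(q)
    scores = items_norm @ q_norm
    top = np.argsort(-scores)[:k]  # highest similarity first
    return [(int(i), float(scores[i])) for i in top]


# Hypothetical 2-D embeddings; real embeddings would have hundreds of dimensions.
items = [[1, 0], [0, 1], [1, 1], [-1, 0]]
result = top_k_cosine([1, 0.1], items, k=2)
```

This scan costs O(n · d) per query, which is the motivation for approximate indexes at scale. An exact scan over a sampled subset is also a simple way to measure the recall an ANN index gives up in exchange for latency.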

Explain your approach to building an inference pipeline that handles millions of concurrent sessions.

This question tests your ability to design for peak traffic, horizontal scaling, and monitoring.

Tip: Discuss model serving architecture, caching, batching, and failure recovery across distributed systems.

How would you build the recommendation algorithm for a type-ahead search model?

This question evaluates how you balance relevance and latency in an interactive search interface.

Tip: Walk through prefix-based retrieval, lightweight ranking, trending signals, and how to filter unsafe or irrelevant results.
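The prefix-retrieval and lightweight-ranking steps can be sketched with a sorted list and binary search. This is a minimal illustration under stated assumptions: the `TypeAhead` class, its popularity-count ranking, and the sample queries are hypothetical, and a production system would add trending signals and safety filters on top.

```python
import bisect


class TypeAhead:
    """Prefix lookup over a sorted list of past queries, ranked by popularity."""

    def __init__(self, query_counts):
        # query_counts maps a past query string to how often it was issued.
        # Keys are lowercased; later duplicates overwrite earlier ones.
        self._counts = {q.lower(): c for q, c in query_counts.items()}
        self._sorted = sorted(self._counts)

    def suggest(self, prefix, k=3):
        prefix = prefix.lower()
        # All strings sharing a prefix are contiguous in sorted order.
        start = bisect.bisect_left(self._sorted, prefix)
        matches = []
        for q in self._sorted[start:]:
            if not q.startswith(prefix):
                break
            matches.append(q)
        # Lightweight ranking: most frequent first, ties broken alphabetically.
        matches.sort(key=lambda q: (-self._counts[q], q))
        return matches[:k]


index = TypeAhead({"tax refund": 50, "tax return": 80, "taxes due": 10, "tip income": 5})
suggestions = index.suggest("Tax", k=2)
```

The binary search keeps candidate retrieval at O(log n) plus the number of matches, which leaves most of the latency budget for ranking. A trie is a common alternative when per-keystroke lookups need to be incremental.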

-

This tests how you monitor production classifiers beyond offline metrics.

Tip: Cover precision, recall, false positive rate, threshold tuning, and real-world feedback loops.
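The metrics named in the tip all come from the same four confusion-matrix counts, and they move together as the decision threshold changes. Here is a small standard-library sketch; the function name and the sample scores are made up for illustration.

```python
def confusion_rates(y_true, scores, threshold):
    """Precision, recall, and false positive rate at a given score threshold."""
    tp = fp = tn = fn = 0
    for label, score in zip(y_true, scores):
        predicted = score >= threshold
        if predicted and label:
            tp += 1
        elif predicted and not label:
            fp += 1
        elif not predicted and label:
            fn += 1
        else:
            tn += 1
    return {
        # Guard each ratio against an empty denominator.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


# Hypothetical labels (1 = positive) and model scores.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1, 0.05]
strict = confusion_rates(y_true, scores, threshold=0.5)
lenient = confusion_rates(y_true, scores, threshold=0.35)
```

Lowering the threshold in this toy example recovers the missed positive without adding false positives, but on real data recall and false positive rate usually rise together. That is why threshold choices should be tied to the cost of each error type.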

For more practice, explore curated machine learning interview questions on Interview Query.

In this ML system design walkthrough, Sachin, a senior data scientist, tackles a common question: how would you architect YouTube’s homepage recommendation system? You’ll learn how to approach real-time vs. offline recommendation pipelines, handle user context, optimize for different devices, and incorporate metrics like NDCG and MRR. The walkthrough is aimed at machine learning engineers preparing for design rounds.

Coding and Data Processing Questions

Coding questions at Intuit assess whether you can build core ML logic from scratch and translate it into clean, production-safe implementations. You’ll often be asked to write algorithms for training, data processing, or clustering—without relying on high-level libraries.

These questions test fluency with Python and NumPy, as well as your ability to reason about optimization, convergence, and numerical safety.

How would you implement gradient descent from scratch?

This question checks whether you understand the fundamentals of training loops and convergence.

Tip: Walk through vectorized gradient updates, learning rate selection, and tracking loss across epochs.
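A minimal sketch of the training loop described in the tip, using batch gradient descent on mean squared error for a linear model. The function name, default learning rate, and toy data are assumptions for the example; an interview answer would also discuss feature scaling and stopping criteria.

```python
import numpy as np


def fit_linear_regression(X, y, lr=0.1, epochs=1000):
    """Batch gradient descent on MSE; returns weights, bias, and loss history."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    losses = []
    for _ in range(epochs):
        residual = X @ w + b - y                 # vectorized over all rows
        losses.append(float(np.mean(residual ** 2)))
        # Gradients of mean((Xw + b - y)^2) with respect to w and b.
        w -= lr * (2.0 / n) * (X.T @ residual)
        b -= lr * (2.0 / n) * residual.sum()
    return w, b, losses


# Toy data generated from y = 2x + 1.
X = [[0.0], [1.0], [2.0], [3.0]]
y = [1.0, 3.0, 5.0, 7.0]
w, b, losses = fit_linear_regression(X, y)
```

Tracking `losses` per epoch is what lets you spot a learning rate that is too high (loss grows or oscillates) or too low (loss barely moves).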

How would you implement k-nearest neighbors from scratch?

This tests your understanding of distance-based classification and scalability.

Tip: Use matrix operations for pairwise distance, majority voting, and explain runtime limits at scale.
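The matrix-based distance computation and majority vote can be sketched as follows. This is an illustrative brute-force version (names and data are hypothetical); it computes every query-to-train distance, which is exactly the runtime limit the tip asks you to explain.

```python
import numpy as np


def knn_predict(X_train, y_train, X_query, k=3):
    """Brute-force k-nearest-neighbors classification with majority voting."""
    X_train = np.asarray(X_train, dtype=float)
    X_query = np.asarray(X_query, dtype=float)
    y_train = np.asarray(y_train)
    # Squared Euclidean distances via ||q||^2 - 2 q.t + ||t||^2, with no Python loop.
    sq_dists = (
        (X_query ** 2).sum(axis=1)[:, None]
        - 2.0 * X_query @ X_train.T
        + (X_train ** 2).sum(axis=1)[None, :]
    )
    nearest = np.argsort(sq_dists, axis=1)[:, :k]  # indices of the k closest points
    preds = []
    for neighbor_labels in y_train[nearest]:
        labels, counts = np.unique(neighbor_labels, return_counts=True)
        preds.append(labels[np.argmax(counts)])    # ties go to the smallest label
    return np.array(preds)


X_train = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
y_train = [0, 0, 0, 1, 1, 1]
preds = knn_predict(X_train, y_train, [[0.2, 0.2], [5.5, 5.5]], k=3)
```

Each query costs O(n · d) for distances plus a sort over n training points, and the full distance matrix needs O(queries × n) memory. That is why large deployments move to approximate nearest neighbor indexes.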

How would you implement logistic regression from scratch?

This evaluates your grasp of probabilistic modeling and training.

Tip: Cover how to compute stable logits, avoid overflow in the sigmoid, and verify gradient correctness.
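One way to avoid overflow in the sigmoid is to branch on the sign of the logit so that `exp` is only ever called on non-positive values. The sketch below pairs that with a plain gradient-descent fit on log loss; the function names, hyperparameters, and toy data are assumptions for illustration, and the gradient check mentioned in the tip is left out for brevity.

```python
import numpy as np


def stable_sigmoid(z):
    """Sigmoid for array input that never exponentiates a large positive number."""
    z = np.asarray(z, dtype=float)
    out = np.empty_like(z)
    pos = z >= 0
    out[pos] = 1.0 / (1.0 + np.exp(-z[pos]))  # exp of a non-positive value
    exp_z = np.exp(z[~pos])                   # z < 0 here, so exp(z) < 1
    out[~pos] = exp_z / (1.0 + exp_z)
    return out


def fit_logistic_regression(X, y, lr=0.1, epochs=1000):
    """Batch gradient descent on mean log loss; returns weights and bias."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        p = stable_sigmoid(X @ w + b)
        error = p - y                         # gradient of log loss w.r.t. the logits
        w -= lr * (X.T @ error) / n
        b -= lr * error.mean()
    return w, b


extremes = stable_sigmoid(np.array([-1000.0, 0.0, 1000.0]))
X = [[-2.0], [-1.0], [1.0], [2.0]]
y = [0, 0, 1, 1]
w, b = fit_logistic_regression(X, y)
predictions = (stable_sigmoid(np.asarray(X) @ w + b) >= 0.5).astype(int)
```

To verify gradient correctness, a common approach is to compare the analytic gradient against a finite-difference estimate of the loss on a small random batch.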

How would you implement k-means from scratch?

This question tests convergence logic and unsupervised learning intuition.

Tip: Discuss centroid initialization, distance computation, assignment steps, and how you check for convergence.
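The four steps in the tip map directly onto a short loop. This is a minimal sketch of Lloyd's algorithm with random initialization from the data points; the function name, the `tol` convergence threshold, and the fixed seed are assumptions for the example, and k-means++ initialization would be a natural improvement to mention.

```python
import numpy as np


def kmeans(X, k, max_iters=100, tol=1e-6, seed=0):
    """Lloyd's algorithm; returns (centroids, labels)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialization: pick k distinct data points as starting centroids.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(max_iters):
        # Distance computation: every point to every centroid.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        # Assignment step: nearest centroid wins.
        labels = dists.argmin(axis=1)
        # Update step: mean of assigned points; keep the old centroid if a cluster is empty.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Convergence check: stop once centroids barely move.
        shift = np.linalg.norm(new_centroids - centroids)
        centroids = new_centroids
        if shift < tol:
            break
    return centroids, labels


X = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
centroids, labels = kmeans(X, k=2)
```

Because the result depends on initialization, it is common to run several seeds and keep the solution with the lowest within-cluster sum of squares.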

When would you choose a bagged ensemble over a linear model?

This question tests whether you understand how to match model types to constraints.

Tip: Compare bootstrapping and variance reduction to the simplicity, speed, and interpretability of linear models.

To build fluency, check out the Interview Query dashboard or drill more questions from the challenges section.

Behavioral and Collaboration Questions

Behavioral interviews at Intuit evaluate how you operate in a cross-functional team, communicate complex ideas, and make decisions under ambiguity. Interviewers are looking for machine learning engineers who take ownership, collaborate with product and engineering partners, and prioritize customer impact over model vanity metrics.

Use the STAR method to structure your answers, and focus on decisions that influenced product outcomes, model deployment, or user experience.

Tell me about a time you shipped a machine learning model into production.

This question tests your end-to-end execution, not just modeling. Intuit wants to know how you worked with engineering to deploy and monitor a model that served users.

Tip: Talk about deployment, post-launch monitoring, and iteration based on real-world feedback.

Sample answer:

I built a classification model to flag untagged expenses in QuickBooks. I worked with engineering to serve it via a REST API and partnered with product to define precision targets. After launch, I set up dashboards to monitor user overrides and saw a 15% false positive rate. Based on that, we added a rule-based layer to filter low-confidence predictions, which improved trust and cut overrides by half.

Describe a time you disagreed with a stakeholder or engineer.

This question evaluates your ability to resolve conflict by aligning around data and business goals.

Tip: Show how you de-escalated, introduced evidence, and focused on shared objectives.

Sample answer:

A PM wanted to increase the sensitivity of a fraud model based on a few recent cases, but I was concerned about spiking false positives. I ran an offline analysis showing that lowering the threshold would block 3x more legitimate users. Instead, I proposed launching a secondary shadow model to test the behavior safely. The data supported my concerns, and we used that to adjust thresholds more conservatively.

Tell me about a model that underperformed. What did you learn?

This question assesses your ability to reflect, learn, and improve processes—not just defend past work.

Tip: Focus on what went wrong, how you diagnosed it, and what you changed going forward.

Sample answer:

I deployed a churn model that performed well offline but failed to flag silent churners in production. I discovered the training data didn’t capture reactivation behavior, which skewed the signal. I added features from support tickets and usage streaks, then retrained and tested against newer cohorts. The new model improved recall by 18%, and I added stricter validation to catch this kind of training data gap in the future.

Describe a time you explained a technical trade-off to a non-technical stakeholder.

This tests whether you can translate technical complexity into clear decisions.

Tip: Lead with the impact, then explain the technical trade-off in language the stakeholder understands.

Sample answer:

I had to explain why a model rollout was delayed due to missing input coverage. The product team was frustrated, so I walked them through a visual of input completeness vs. prediction reliability. Once they saw how the gaps could lead to unsafe predictions, they agreed to delay the launch and help push a tracking fix that sped up resolution.

Why do you want to work as a machine learning engineer at Intuit?

This question evaluates whether you’re aligned with Intuit’s mission and product strategy.

Tip: Tie your answer to specific ML use cases at Intuit (e.g., TurboTax, GenOS, Credit Karma), not just the role.

Sample answer:

I’m excited about the opportunity to work on real production ML systems that impact people’s financial lives. I’ve followed how Intuit uses AI for personalization, fraud detection, and automating taxes. I’m especially interested in how GenOS supports responsible generative AI at scale, and I’d love to work on LLM infrastructure that improves transparency and reliability in customer experiences.

You can practice more behavioral interview prompts using the Interview Query AI Interview tool or get live feedback through mock interviews.

How to Prepare for the Intuit Machine Learning Engineer Interview

Preparing for the Intuit machine learning engineer interview means building strong fundamentals, sharpening applied reasoning, and practicing how you communicate trade-offs and product impact.

You’ll be expected to not only implement ML models, but also explain why those models are the right choice for the system, how they’ll behave in production, and what business metrics they support.

Here’s how to prepare effectively:

Practice modeling and ML theory in product settings.

Don’t just review algorithms—practice framing modeling decisions in ambiguous, evolving product environments. Use the modeling and machine learning learning path to review supervised vs unsupervised learning, evaluation metrics, and trade-offs like bias-variance, interpretability, and latency.

Strengthen your system design thinking.

Intuit expects you to design pipelines that are scalable, testable, and easy to monitor. Explore the data engineering learning path to review ML architecture, retraining strategies, feature pipelines, and serving infrastructure.

Write models and logic from scratch.

Brush up on core ML implementations in NumPy or base Python. Use questions like logistic regression from scratch, k-means from scratch, and gradient descent to test your understanding of optimization, numerical stability, and convergence.

Prepare for communication and cross-functional collaboration.

Review your past projects through the lens of decision-making, not just technical execution. Practice answers to behavioral questions using the STAR format. You can also simulate scenarios using the AI interview tool or get feedback via mock interviews.

Study recent Intuit use cases and AI products.

Learn about GenOS, TurboTax Assistant, Credit Karma’s personalization systems, and Intuit’s commitment to responsible AI. Bring these examples into your answers when explaining impact or alignment.

By practicing across modeling, coding, system design, and stakeholder communication, you’ll show Intuit that you can own ML problems from end to end—and make decisions that improve customer outcomes.

Role Overview: Intuit Machine Learning Engineer

Machine learning engineers at Intuit operate at the intersection of infrastructure, applied modeling, and product impact. The role goes beyond building ML models—you’re responsible for shipping production systems that are reliable, explainable, and aligned with real user needs.

Engineers work across products like TurboTax, QuickBooks, Credit Karma, and Mailchimp, applying ML to domains like tax automation, fraud detection, financial insights, and generative AI assistance. Many projects integrate with Intuit’s GenOS platform to deploy LLMs, embeddings, and retrieval-augmented generation (RAG) responsibly.

Core Responsibilities

- Build and deploy production-grade ML models using frameworks like TensorFlow, PyTorch, Scikit-learn, or Spark

- Design end-to-end ML pipelines for ingestion, transformation, feature engineering, training, and serving

- Monitor performance, detect drift, and iterate on models using online and offline metrics

- Collaborate with product managers, data scientists, and backend engineers to define goals and system constraints

- Implement personalization, recommendation, classification, or ranking models depending on the use case

- Contribute to infrastructure decisions, especially around scalable serving, retraining automation, and MLOps best practices

What Makes the Role Unique at Intuit

At Intuit, machine learning engineers are embedded directly in product teams and play a key role in experimentation and iteration. You’re expected to:

- Ship ML features that are testable, safe, and explainable

- Own the system—not just the model—across training, deployment, and monitoring

- Partner with GenAI and platform teams to integrate large language models and AI assistants into the product

- Work in an experimentation-driven environment with measurable impact on customer retention, revenue, and satisfaction

If you’re looking for a role where ML engineering means ownership, safety, and scale—not just modeling in a vacuum—Intuit offers one of the most product-integrated ML environments in fintech.

Average Intuit Machine Learning Engineer Salary

Intuit does not publish a dedicated compensation table specifically for the machine learning engineer role. As a result, compensation is best benchmarked against Intuit’s software engineer levels, where machine learning engineers are typically leveled and compensated alongside senior backend and platform engineers.

According to Levels.fyi, machine learning engineer compensation at Intuit varies significantly by seniority, scope, and ownership. Roles with heavier system design responsibility, production ML ownership, or GenAI infrastructure work tend to sit toward the higher end of each level’s range.

| Level | Approx. annual total compensation |
|---|---|
| Intuit machine learning engineer (entry-level) | ~$190K – $215K |
| Intuit machine learning engineer (mid-level) | ~$215K – $260K |
| Senior Intuit machine learning engineer | ~$260K – $325K |
| Staff Intuit machine learning engineer | ~$325K – $390K |
| Senior staff Intuit machine learning engineer | ~$390K – $460K |
| Principal machine learning engineer | ~$460K – $950K |

These estimates are inferred from Intuit’s reported software engineer compensation bands, where machine learning engineers are typically mapped by impact and scope rather than title alone. Total compensation generally includes base salary, annual bonus, and equity grants.

At Intuit, machine learning engineer compensation scales primarily with system ownership and decision-making responsibility. Engineers who own end-to-end ML platforms, production pipelines, or GenAI infrastructure tend to progress faster into staff and principal bands. Compared to data scientist roles, compensation skews more heavily toward base pay and equity due to engineering ownership and on-call responsibility.

FAQs

What does a machine learning engineer do at Intuit?

Machine learning engineers at Intuit build and deploy production-grade ML systems that power products like TurboTax, QuickBooks, and Credit Karma. They work on personalization, classification, ranking, fraud detection, and generative AI features—owning the end-to-end workflow from data ingestion to model serving and monitoring.

Final Thoughts: Preparing for the Intuit Machine Learning Engineer Interview

The Intuit machine learning engineer interview is designed to identify engineers who can design, deploy, and maintain high-impact ML systems—not just write models in notebooks. Strong candidates show a clear ability to reason through architecture, implement models from scratch, and communicate clearly with product and engineering stakeholders.

To prepare effectively, build up your product intuition and reinforce your technical execution:

- Practice end-to-end system reasoning in the data engineering learning path

- Review modeling trade-offs with the machine learning learning path

- Solve real-world ML interview questions

- Use the AI Interview tool or book a mock interview to pressure test your thinking

Ready to land your next ML role? Start practicing for your Intuit machine learning engineer interview today on Interview Query.