Apple Data Engineer Interview Guide: Process, Questions & Salary

Introduction

Preparing for an Apple data engineer interview means preparing for one of the largest data environments in consumer technology. Apple supports over 2 billion active devices worldwide, with services that generate billions of events per day across products like Apple Music, iCloud, Apple Pay, and the App Store. Data engineers at Apple build and maintain the pipelines and platforms that enable analytics, experimentation, and machine learning at that scale, while operating under strict reliability and privacy requirements.

The interview process reflects those expectations. Many strong engineers struggle because they prepare for isolated coding problems instead of practicing how to reason through real data systems. Apple interviewers care less about perfect syntax and more about whether you can design scalable pipelines, explain tradeoffs, and justify architectural decisions in a privacy-first environment. This guide breaks down the Apple data engineer interview process, the types of questions you should expect at each stage, and how to prepare for what Apple teams actually evaluate.

Apple Data Engineer Interview Process

The Apple data engineer interview process is designed to evaluate how well you can build, scale, and reason about data systems that operate at massive volume while meeting strict reliability and privacy standards. Across stages, interviewers assess SQL fluency, Python coding, data modeling, pipeline design, and system-level judgment, alongside communication and cross-functional thinking. Most candidates complete the process in four to six weeks, depending on team bandwidth and the number of technical rounds.

| Interview stage | What happens |

|---|---|

| Application and resume screen | Recruiters review your background, technical experience, and relevance to the team’s data needs |

| Recruiter screen | Introductory call covering role fit, motivation, and logistics |

| Technical screen | Live SQL and Python exercises, sometimes with a light coding or data reasoning problem |

| Onsite or virtual loop | Multiple interviews covering system design, data modeling, ETL, SQL, and behavioral scenarios |

| Hiring manager or team discussion | Deep dive into past projects and team fit |

| Offer and team matching | Final review and leveling discussion |

Below is a breakdown of each stage and what Apple interviewers are actually looking for throughout the process.

Application and resume screen

Apple recruiters and hiring teams use the resume screen to identify candidates with real production data engineering experience, not just analytics exposure. Strong resumes highlight ownership of batch or streaming pipelines, experience working with data warehouses or lakes, and familiarity with large-scale systems. Evidence of collaboration with data scientists, analysts, or product teams also matters, since data engineers at Apple rarely work in isolation.

Candidates who stand out clearly describe system scope, data scale, and outcomes rather than listing tools. This stage is also where Apple teams often align candidates to specific orgs or problem areas, which can influence the interview focus later in the process.

Tip: Rewrite key bullets using a clear pattern: system built → data scale → business or product impact. This mirrors how Apple evaluates data engineering experience.

Recruiter screen

The recruiter screen is typically a short conversation focused on background, role fit, and motivation. You can expect questions about the kinds of data systems you have worked on, the tools you are most comfortable with, and the environments you thrive in. Recruiters also confirm logistics such as location, level alignment, and interview timeline.

Unlike some companies, Apple places early emphasis on why you want to work there. Candidates are often expected to articulate interest in a specific Apple product, platform, or data problem, rather than giving a generic answer.

Tip: Prepare a concise explanation of why Apple’s products or data scale interest you, and how your data engineering experience maps to that context.

Technical phone screen

The technical phone screen usually focuses on SQL and Python, with light coverage of data engineering fundamentals. You may be asked to write SQL queries involving joins, aggregations, window functions, or edge-case handling, similar in difficulty to real-world analytics problems rather than puzzle-style questions. Python questions often test data manipulation, basic algorithms, or transformation logic relevant to ETL workflows.

Some teams also include short conceptual prompts around data pipelines, file formats, or performance tradeoffs to gauge practical experience. Interviewers care about how you think through problems and explain assumptions, not just whether you reach a perfect solution.

To prepare effectively, many candidates practice structured SQL and data engineering questions similar to those found in data engineer interview questions and SQL interview questions.

Tip: When writing SQL, talk through your logic step by step. Clear reasoning often matters more than speed at this stage.

Onsite or virtual interview loop

The onsite or virtual loop is the most intensive part of the Apple data engineer interview process and typically consists of four to five interviews. Each round focuses on a different aspect of data engineering at scale.

- Advanced SQL and analytics reasoning: These interviews test complex queries, multi-step logic, and data validation. Interviewers look for correctness, performance awareness, and the ability to reason about imperfect data. Practicing realistic SQL data engineer questions helps build confidence here.

- Data modeling and warehousing design: You may be asked to design schemas for event data, fact and dimension tables, or evolving entities. Interviewers evaluate how well your model supports analytics, reporting, and downstream machine learning use cases.

- ETL and pipeline design: This round often involves designing an end-to-end data pipeline, including ingestion, transformations, orchestration, data quality checks, and monitoring. Streaming concepts such as late-arriving data or fault tolerance may appear depending on the team.

- System design for data platforms: Apple data engineers are expected to reason about scalability, reliability, and cost. Strong candidates clearly explain tradeoffs and describe how systems evolve over time, rather than proposing an overly complex design upfront.

- Behavioral and cross-functional interview: This round focuses on collaboration, ownership, and decision-making. You may be asked about handling ambiguous requirements, pushing back on requests, or working with non-technical stakeholders. Behavioral preparation using structured formats similar to those in behavioral interview questions is especially helpful.

Privacy considerations sometimes appear explicitly in design discussions. Apple interviewers may probe how you minimize data collection, enforce access controls, or reduce exposure risk while still enabling analytics and experimentation.

Tip: In design interviews, state your assumptions and constraints early, especially around privacy and data access. This signals mature engineering judgment.

Hiring manager review, team match, and offer

After the interview loop, feedback is consolidated and reviewed by the hiring team. Some candidates go through additional conversations to finalize team placement, particularly when multiple teams are hiring data engineers with similar skill sets. Once alignment is reached, recruiters move forward with leveling discussions and the offer process.

Tip: Be prepared to clearly describe the type of data engineering work you prefer, such as batch versus streaming or platform versus product analytics. This often helps during team matching.

Apple Data Engineer Interview Questions

Candidates preparing for the Apple data engineer interview should expect questions that test both technical depth and engineering judgment at scale. Apple data engineer interview questions focus heavily on efficient SQL, scalable data architectures, and production-ready ETL workflows, all while operating under strict reliability and privacy constraints. Early rounds commonly include Apple SQL interview questions covering joins, window functions, aggregation logic, and correctness on large datasets.

Beyond querying, Apple interviews emphasize real-world data engineering decisions. Candidates are evaluated on how they reason about distributed pipelines, storage tradeoffs, failure modes, and data access boundaries. Many of these questions align closely with skills covered in the Data Engineering Interview Learning Path and the SQL Interview Learning Path.

Coding/Technical Interview Questions

Apple data engineer interview questions in this section test advanced SQL, data manipulation, and practical coding skills that mirror production systems. Interviewers care about correctness, performance awareness, and clarity of reasoning more than clever tricks. Practicing realistic problems from Interview Query Challenges and timed environments like AI Interviewer helps simulate this pressure.

-

This evaluates aggregation logic, filtering, and window functions on large tables. You need to define the correct grain, exclude small groups, and compute proportions accurately. This mirrors executive-level reporting queries built on data warehouses.

Tip: State the grain before writing SQL. Apple interviewers watch closely for aggregation mistakes.

How would you calculate the first-touch attribution channel for each user who converted?

This tests event sequencing, partitioning, and ordering logic over high-volume logs. A strong answer uses window functions and clearly explains assumptions around timestamps and null handling.

Tip: Call out how you would handle late or duplicated events in a production pipeline.

How would you sort a 100GB file when you only have 10GB of RAM?

This classic external sorting problem evaluates system-level thinking under resource constraints. Apple uses questions like this to test whether you can design batch workflows that scale beyond memory.

Tip: Emphasize streaming, disk I/O efficiency, and merge strategies over in-memory optimization.

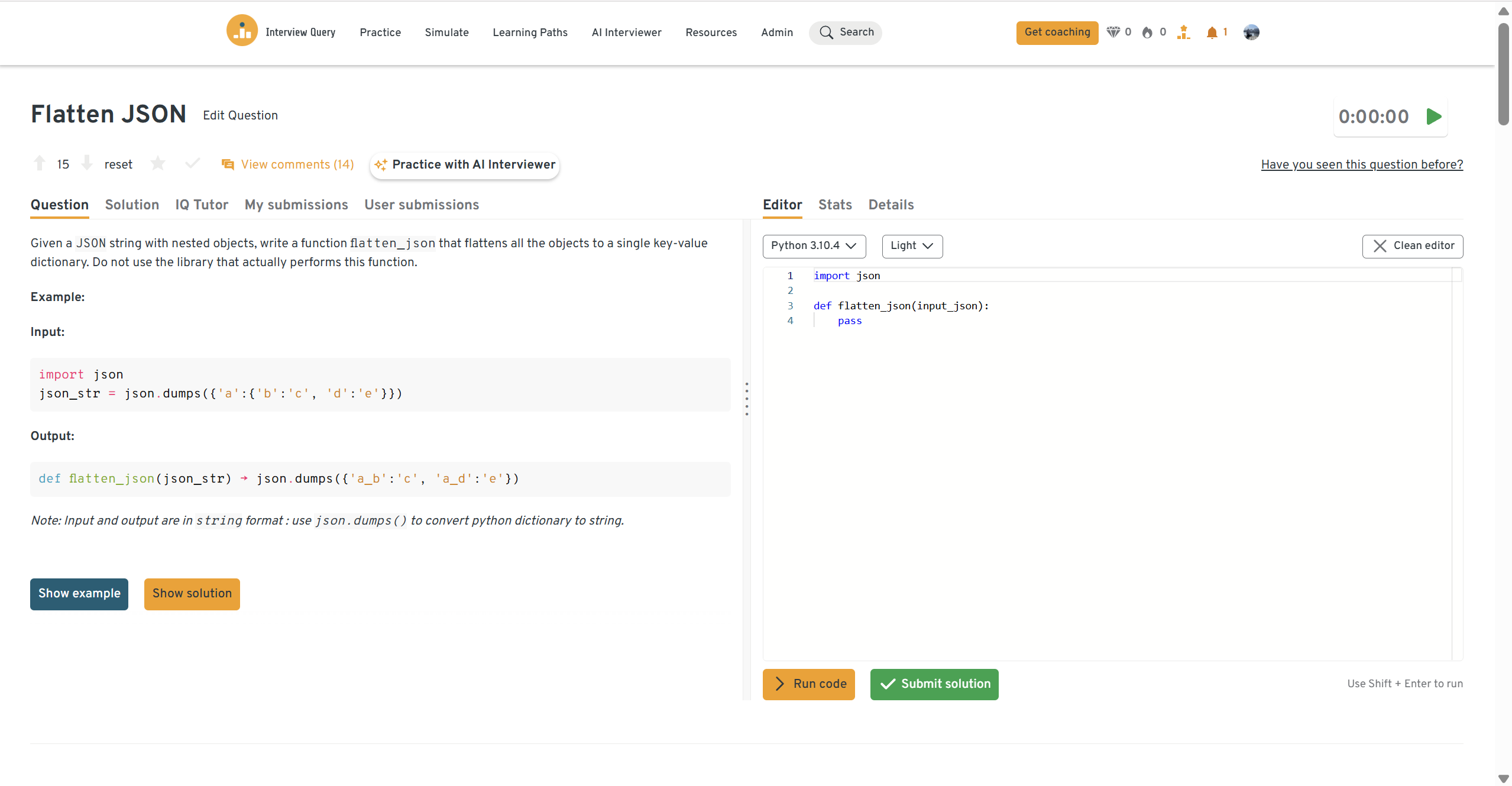

-

This reflects real ingestion challenges when normalizing semi-structured logs. Interviewers look for recursion, clear key construction, and handling of lists or missing fields.

Tip: Explain how you would version schemas to handle evolving JSON structures.

You can practice this exact problem on the Interview Query dashboard, shown below. The platform lets you write and test SQL queries, view accepted solutions, and compare your performance with thousands of other learners. Features like AI coaching, submission stats, and language breakdowns help you identify areas to improve and prepare more effectively for data interviews at scale.

System/Product Design Interview Questions

System and design questions in the Apple data engineer interview evaluate how you architect scalable, reliable, and privacy-aware data systems. Interviewers focus on tradeoffs across storage, compute, latency, cost, and governance rather than tool-specific trivia. These questions align closely with preparation paths like the data engineering interview learning path and realistic practice through take-home style problems.

How would you design a data mart or data warehouse for an online retail store using a star schema?

This question tests your understanding of dimensional modeling and how analytics systems are structured for performance and flexibility. You are expected to define the grain of the fact table, identify core business processes such as orders or returns, and explain how dimensions like product, customer, and time evolve over time. Apple interviewers pay close attention to whether your design supports both ad hoc analysis and long-term scalability without frequent rewrites.

Tip: Always define the grain first and explain how your model adapts to new attributes or events.

-

This question evaluates how you think about ingestion, transformation, and reporting across regions. A strong answer outlines source systems, landing and staging layers, transformation logic for currency and localization, and downstream reporting needs. Interviewers also look for awareness of data residency, latency expectations, and operational monitoring.

Tip: Explicitly call out regional partitioning and how you would handle schema consistency across markets.

How would you design an ETL pipeline to transfer Stripe payment events into an analytics warehouse?

This scenario mirrors real-world financial and subscription data pipelines. You are expected to reason through ingestion methods, raw data retention, normalization, deduplication, and joins with customer or account data. Apple interviewers care about idempotency, schema evolution, and observability more than the specific tools you choose.

Tip: Mention how you would detect duplicates and safely reprocess historical data.

How would you process and clean a 100 GB csv file without loading it entirely into memory?

This question tests your ability to design memory-efficient batch processing workflows. A strong answer describes chunked or streaming reads, parallelization strategies, and incremental writes to downstream storage. Interviewers also look for fault-tolerant design, such as checkpointing progress to avoid full reprocessing after failures.

Tip: Explain how you would resume processing after a crash without corrupting output data.

-

This question evaluates storage tiering, partitioning strategies, and query engine tradeoffs. You should discuss separating hot, warm, and cold data, using columnar formats, and minimizing scan costs through partition pruning. Apple interviewers often probe how you balance cost with performance and access control over long retention periods.

Tip: Clearly explain why different data ages belong in different storage tiers.

How would you plan a migration from a document-based user activity log to a relational database?

This scenario tests your approach to incremental migration and risk management. A strong answer covers schema design, backfill strategy, dual writes, and validation checks to ensure consistency between old and new systems. Interviewers want to see that you can migrate without disrupting downstream consumers.

Tip: Call out reconciliation checks before decommissioning the legacy system.

How would you optimize OLAP aggregations for generating monthly and quarterly performance reports?

This question focuses on performance optimization in analytical systems. You are expected to discuss pre-aggregations, materialized views, or summary tables, along with refresh strategies and storage tradeoffs. Apple interviewers care about whether your solution improves reliability for business users without overcomplicating the pipeline.

Tip: Explain how you would monitor freshness and correctness of aggregate tables.

Behavioral or culture fit questions

Behavioral interviews at Apple focus on ownership, collaboration, and judgment in high-stakes environments. Interviewers look for structured storytelling, clear impact, and evidence that you can operate responsibly with sensitive data. Practicing with mock interviews or guided sessions through coaching helps refine these responses.

-

This question evaluates your ownership of data integrity and your ability to act proactively. Apple values engineers who prevent issues from recurring, not just those who fix them once. Strong answers highlight detection methods, cross-team communication, and long-term safeguards.

-

This assesses how you make tradeoffs under pressure. Interviewers look for clarity in prioritization logic, stakeholder alignment, and transparent communication. Apple prefers candidates who surface risks early rather than silently missing deadlines.

-

This question tests your ability to work across product, analytics, and engineering teams. Strong answers show how you translated technical constraints into shared understanding and resolved conflicting requirements.

Describe a time you introduced a new process or automation that improved reliability or efficiency.

Apple looks for engineers who simplify systems and reduce operational risk. Interviewers want to see initiative, technical judgment, and measurable impact rather than incremental improvements with unclear outcomes.

Explain how you ensured data privacy or compliance while designing or maintaining a data system.

This question reflects Apple’s privacy-first culture. Strong answers demonstrate data minimization, access controls, auditability, and conscious tradeoffs between insight and exposure.

If you want deeper practice, you can explore the full set of 100+ data engineer interview questions with answers. This walkthrough by Interview Query founder Jay Feng covers 10+ essential data engineering interview questions—spanning SQL, distributed systems, pipeline design, and data modeling.

How to Prepare for an Apple Data Engineer Interview

Preparing for an Apple data engineer interview requires more than grinding coding problems. Apple evaluates whether you can reason through real data systems, explain architectural tradeoffs, and operate responsibly in a privacy-first environment at massive scale. Your preparation should mirror how Apple teams actually work, not how generic interview prep is usually structured.

Prioritize SQL and data modeling first.

SQL is foundational at Apple, especially for querying large telemetry, product usage, and operational datasets. Focus on joins, window functions, aggregation correctness, and performance considerations rather than memorizing syntax. Pair this with dimensional modeling fundamentals like fact–dimension separation, grain definition, and handling slowly changing dimensions. Structured practice from the SQL interview learning path helps build this fluency.

Practice end-to-end pipeline and system design thinking.

Apple interviews frequently test how you design ingestion, transformation, storage, and serving layers together. You should be able to explain tradeoffs between batch vs streaming, cost vs latency, and flexibility vs governance. Use realistic scenarios, such as event ingestion or large-scale reporting, and practice articulating failure modes and monitoring. The data engineering interview learning path is especially useful for this type of preparation.

Develop clear architectural communication.

Apple cares less about tool choice and more about how clearly you justify decisions. Practice explaining designs verbally using simple diagrams and structured logic. Mocking full interview flows through mock interviews or guided feedback via coaching helps surface gaps in clarity and structure.

Prepare privacy-aware reasoning.

You should be ready to explain how you minimize data access, enforce permissions, and design systems that respect user privacy by default. Even when questions are technical, Apple interviewers often probe how your solution limits exposure and risk. This is where practicing real-world scenarios through takehomes or applied exercises from challenges pays off.

Rehearse behavioral answers with ownership and judgment.

Apple behavioral interviews emphasize accountability, discretion, and collaboration. Prepare stories where you identified risks early, improved reliability, or navigated ambiguity responsibly. Tools like the AI interview can help you practice concise, structured delivery under time pressure.

Role Overview and Culture at Apple

An Apple data engineer designs, builds, and maintains the data infrastructure that supports some of the largest consumer technology products in the world, including services such as Apple Music, iCloud, Apple Pay, and the App Store. The role focuses on enabling analytics, experimentation, and machine learning by ensuring data is reliable, scalable, and accessible across product and business teams.

Key responsibilities typically include:

- Designing and maintaining scalable batch and streaming data pipelines for high-volume product and operational data.

- Building and managing data lakes, data warehouses, and processing layers that support analytics and reporting at scale.

- Implementing ETL and ELT workflows that ingest, transform, and validate data from multiple internal and external sources.

- Defining data models that balance query performance, storage efficiency, and long-term flexibility.

- Partnering with data scientists, analysts, product managers, and engineers to translate requirements into data solutions.

- Ensuring data quality, observability, and reliability across pipelines, including monitoring for late or missing data.

- Optimizing system performance and cost while maintaining fault tolerance and availability.

- Designing systems with privacy and security as first-class constraints, enforcing strict access controls and data minimization.

Day to day, Apple data engineers work in highly collaborative, fast-paced environments where ownership and precision matter. Teams expect engineers to think beyond immediate deliverables and consider how systems will scale, evolve, and be maintained over time. Privacy is deeply embedded in the culture, and engineers are expected to justify how data is collected, processed, and accessed at every stage.

Success in this role looks like delivering data platforms that are trusted by downstream teams, resilient under heavy load, and aligned with Apple’s high standards for privacy, security, and user experience.

Apple-specific tip: Unlike many data engineering roles, success at Apple is measured as much by judgment and data stewardship as by technical output.

Average Apple Data Engineer Salary

Recent data from Levels.fyi shows that the Apple data engineer salary in the United States spans a wide range depending on level and scope. Total compensation typically starts around $125,000–$135,000 per year for entry-level roles and can exceed $350,000 per year at senior levels. The median total compensation for Apple data engineers in the United States is approximately $240,000 per year, reflecting Apple’s strong base pay combined with meaningful stock grants and performance bonuses.

Average Base Salary

Average Total Compensation

Compensation at Apple is heavily influenced by level, with base salary forming the largest component, followed by stock and annual bonus. Compared to many tech companies, Apple’s compensation structure emphasizes stability and long-term equity growth, particularly at senior and staff levels.

National Compensation Overview (United States)

| Level | Total Compensation (Annual) | Base Salary (Annual) | Stock (Annual) | Bonus (Annual) |

|---|---|---|---|---|

| ICT2 (entry level) | $130,000 | $120,000 | $7,500 | $2,500 |

| ICT3 (mid level) | $230,000 | $160,000 | $54,000 | $13,000 |

| ICT4 (senior) | $280,000 | $180,000 | $84,000 | $16,000 |

| ICT5 (staff / principal) | $370,000 | $230,000 | $108,000 | $34,000 |

Across most U.S. locations, ICT2 and ICT3 data engineers cluster between $130K–$230K, while ICT4 and ICT5 roles show a significant increase driven primarily by larger equity grants and higher-impact responsibilities. Senior and staff-level data engineers at Apple are compensated for both technical leadership and long-term ownership of critical data systems.

FAQs

How long does the Apple data engineer interview process take?

Most candidates complete the Apple data engineer interview process within four to six weeks, depending on team availability and role seniority. Recruiter screens and technical interviews usually move quickly, while onsite or virtual loops can take longer due to interviewer coordination. Apple recruiters typically provide updates after each stage, though timelines may vary across teams.

How technical is the Apple data engineer interview?

The interview is highly technical, but not purely algorithm-focused. Candidates are evaluated on SQL proficiency, data modeling, ETL and pipeline design, and system-level reasoning. While basic coding is required, Apple interviewers place more emphasis on architectural judgment, scalability, and correctness than on complex data structures.

Does Apple require prior big tech or FAANG experience for data engineers?

No. Apple values relevant experience over company pedigree. Candidates from startups, mid-sized companies, or non-traditional backgrounds can perform well if they demonstrate strong data engineering fundamentals, clear communication, and sound judgment when designing scalable systems.

How important is privacy and data governance in the interview?

Privacy is a core evaluation theme at Apple. Interviewers frequently probe how you limit data access, enforce controls, and design pipelines that minimize exposure. Candidates who proactively discuss privacy tradeoffs and governance considerations tend to stand out.

Become An Apple Data Engineer With Interview Query

Landing an Apple data engineer role means showing more than technical ability. You need to demonstrate how you think about data at scale, explain tradeoffs clearly, and design systems that respect privacy while delivering real business impact. Apple interviews reward candidates who prepare deliberately, practice realistic scenarios, and communicate with confidence and judgment.

The most effective way to prepare is to mirror the interview itself. Work through SQL, data modeling, and pipeline design problems that reflect real production constraints, then practice explaining your reasoning out loud. Use targeted preparation resources like the data engineering interview learning path to focus on the skills Apple actually tests, and simulate real interview pressure with mock interviews or structured feedback through coaching.

With the right preparation strategy and consistent practice, you can walk into each stage of the Apple data engineer interview process clear, composed, and ready to perform at the level Apple expects.