Apple Machine Learning Engineer Interview Questions, Process and Salary

Introduction

Apple’s machine learning organization powers some of the most widely used AI systems in the world, from Siri and on-device intelligence to computer vision, personalization, and hardware-optimized neural engines. With over 2 billion active Apple devices generating signals, ML engineers at Apple operate at a scale where small model improvements translate into massive global impact. Teams across Apple Services, Siri, Health, Maps, Photos, and Core ML rely on ML engineers to design models that are accurate, low latency, private by design, and efficient on-device.

Because of this, Apple’s interview process places heavy emphasis on algorithmic thinking, deep learning fundamentals, system design for ML, and end-to-end applied reasoning. Many candidates prepare with the machine learning interview learning path and the data science interview learning path to build fluency in both theory and production-level ML engineering.

What does an Apple Machine Learning Engineer do

Apple machine learning engineers design, develop, and deploy ML systems that power critical product experiences. The role blends modeling expertise with production engineering, requiring the ability to reason about model performance, reliability, and system constraints.

Key responsibilities include:

- Model development: Build and train machine learning and deep learning models, including generative and multimodal architectures.

- Data analysis: Preprocess large datasets and implement scalable feature pipelines.

- Performance and evaluation: Run experiments, monitor metrics, and lead error triage and model iteration.

- Cross-functional collaboration: Work with research scientists, software engineers, product teams, designers, and hardware engineers to integrate models into Apple systems.

- Research and innovation: Explore new modeling approaches, training strategies, and frameworks to push product capabilities.

- ML system architecture: Design inference pipelines, distributed training setups, and model deployment workflows that meet Apple’s scale and privacy requirements.

Why this role at Apple

The Apple machine learning engineer role is ideal for those who want to shape ML experiences at global scale. Engineers who choose Apple often highlight:

- Impact at massive scale: Models support billions of daily interactions across Siri, Photos, App Store, Health, or on-device personalization.

- Unique constraints and challenges: Apple’s focus on privacy and on-device computation requires highly optimized and innovative modeling approaches.

- Cross-functional collaboration: MLEs partner closely with teams in hardware, software, and research, gaining visibility into end-to-end product development.

- Research-to-production opportunity: Apple encourages advancement of state-of-the-art models while maintaining a pragmatic focus on reliability and safety.

- Clear growth paths: Engineers can deepen expertise in areas like LLMs, multimodal ML, distributed systems, optimization, or applied ML research.

If you want to explore other roles for comparison, you can review the broader Apple interview guides and see how machine learning engineering differs from data science and analytics paths.

Apple Machine Learning Engineer Interview Process

Apple’s MLE interview loop is rigorous and evaluates ML intuition, coding ability, deep learning expertise, and system design skills. When candidates look up the Apple machine learning engineer interview process, what they’re usually trying to understand is how the stages fit together: recruiter screens, technical phone screens, a potential take-home or ML coding assessment, onsite interviews, and final team match. While specific interview content varies by team, most candidates go through a structured process similar to Apple’s DS loop but with significantly deeper emphasis on engineering and model optimization.

Candidates often prepare through the ML interview learning path for theory and modeling practice, and the data science interview learning path for coding, statistics, and case reasoning.

| Stage | What it evaluates |
|---|---|
| Application and resume review | ML expertise, deep learning experience, applied research or production deployments |
| Recruiter screen | Background, alignment with team needs, communication clarity |
| Technical phone screens | ML fundamentals, deep learning, coding in Python, practical reasoning |
| Take-home or ML coding assessment | Modeling, data processing, implementation, reproducibility |
| Onsite or virtual loop | 5 to 8 rounds covering coding, ML theory, DL architecture, ML system design, optimization, and behavioral skills |
| Hiring manager conversation | Project depth, system thinking, leadership in ambiguity |
| Final decision | Cross-interviewer calibration and team match |

Application and resume review

This stage identifies candidates with strong end-to-end ML engineering experience. Apple looks for the ability to translate high-level product goals into measurable modeling tasks and to design systems that work reliably in production.

What Apple looks for:

- Experience training and deploying deep learning models

- Systems thinking applied to data pipelines, model serving, or distributed training

- Evidence of measurable impact (accuracy improvements, latency reduction, model robustness)

- Publications or patents are a plus but not required

- Clear, outcome-oriented resume bullets

Candidates can explore real Apple ML interview patterns in the machine learning engineer question bank.

Tip: Frame each project in terms of problem → approach → outcome. Apple responds strongly to measurable results.

Recruiter screen

The recruiter call ensures your background aligns with the technical depth and domain focus of the hiring team, which may include Siri, Core ML, Vision, Personalization, Apple Services Intelligence, or Hardware Engineering.

You may discuss:

- Summary of your ML engineering experience

- Experience with PyTorch, TensorFlow, JAX, or distributed training frameworks

- Familiarity with on-device ML, transformers, or privacy-preserving ML

- Interest in specific Apple product areas

- Timeline, interview structure, and expectations

Tip: Prepare a two to three sentence summary of your ML expertise, emphasizing modeling impact and production experience.

Technical phone screens

Apple typically conducts one or two technical phone screens lasting 45 to 60 minutes. These sessions test both depth of understanding and clarity of communication, and they’re where most of the Apple machine learning engineer phone screen questions show up around ML fundamentals, deep learning, and Python coding.

| Focus area | What to expect |
|---|---|
| Deep learning fundamentals | Forward and backward passes, gradients, loss functions, CNNs, RNNs, transformers |
| ML algorithms | Bias variance, regularization, optimization, feature extraction |
| Coding in Python | Implementing functions, manipulating arrays, writing clean and testable code |
| Applied reasoning | Designing a model pipeline, evaluating tradeoffs, debugging behavior |

Many candidates reinforce these fundamentals using the ML learning path.

Tip: Interviewers assess how clearly you structure your explanation. Think aloud and justify tradeoffs.

Take-home or ML coding assessment

Some Apple teams include a short assignment that evaluates practical ML engineering skills. These assessments vary but generally reflect realistic tasks such as data preparation, model prototyping, and reproducible experimentation. The goal is not to produce a state-of-the-art model but to demonstrate clarity, engineering discipline, and thoughtful evaluation.

What the assessment may include:

- Implementing a small model using PyTorch or TensorFlow

- Cleaning and transforming a raw dataset

- Running baseline experiments and reporting metrics

- Comparing two training approaches

- Writing a concise explanation of results and next steps

Teams look for correctness, reproducibility, and clarity of reasoning. Candidates often prepare by practicing structured workflows in the machine learning interview learning path, reviewing coding exercises in the data science interview learning path, and studying past project prompts in the takehomes library.

Tip: Use clean abstractions, include comments explaining assumptions, and prioritize readability over cleverness. Apple values engineering craftsmanship.

Onsite or virtual loop

The onsite loop is the most in-depth stage of the Apple MLE interview. Candidates typically complete five to eight interviews, each targeting a different dimension of ML engineering. Sessions are often discussion-driven rather than purely whiteboard-based, and interviewers expect structured thinking, clarity, and strong intuition about model behavior.

| Interview Type | What It Evaluates |
|---|---|
| Coding (Python) | Implement functions, manipulate tensors, build utility scripts, and demonstrate clean engineering practices. Problems often mirror tasks in analytics pipelines or model preprocessing. Candidates frequently prepare using the coding modules in the data science learning path. |
| ML theory and deep learning fundamentals | Concepts such as gradient flow, regularization, optimization, activation behavior, CNNs, RNNs, transformers, attention mechanisms, and training stability. Interviewers test intuition and the ability to explain model behavior clearly. |
| DL architecture and model design | Design a model for a real Apple use case such as speech, personalization, or computer vision. Expect to discuss architecture choices, training strategies, and tradeoffs. Many candidates review concepts in the machine learning interview learning path. |
| ML system design | Design large-scale ML systems including feature pipelines, distributed training, model deployment, or on-device inference. Interviewers evaluate scalability, latency, privacy constraints, and long-term maintainability. |
| Model evaluation and optimization | Error analysis, metric selection, ablation studies, debugging unstable training runs, identifying failure cases, and thinking through model improvements. |
| Behavioral and collaboration | Communication clarity, ownership, conflict resolution, decision-making, and ability to work with cross-functional teams such as engineering, product, and research. Apple looks for structured, reflective storytelling rooted in real projects. |

Tip: Use a consistent flow when answering design questions: problem definition, constraints, architecture, training strategy, evaluation, tradeoffs, and potential failure modes.

Hiring manager conversation

The hiring manager interview focuses on depth, leadership, and how you handle ambiguity. It often centers around one or two of your most significant ML projects and evaluates your ability to move from research concepts to production systems.

Hiring managers evaluate:

- How you define ML problems when goals are ambiguous

- Your approach to designing model pipelines end to end

- Experience with data quality issues, model iteration, and debugging

- How you collaborate with cross-functional teams such as software, research, and product

- Whether you can scale your thinking to Apple-level constraints such as privacy, on-device inference, or large user bases

Tip: Choose one anchor project you can explain at three levels: high-level overview, modeling decisions, and system constraints. Apple expects depth, not just breadth.

Final decision

After the onsite loop, Apple holds a calibration meeting where interviewers discuss your performance across all dimensions. The evaluation is holistic and considers:

- Technical signals

- Communication clarity

- System design depth

- Collaboration and leadership potential

- Alignment with the team’s domain and product needs

If a team match is found, the recruiter contacts you with next steps, which may include compensation discussions or additional team-fit conversations for roles with multiple potential placements.

Tip: Follow up with your recruiter if timelines are not clear. Apple sometimes conducts secondary team matches for candidates with strong general signals.

Apple Machine Learning Engineer Interview Questions

Apple evaluates ML engineers across three core dimensions: depth of machine learning and deep learning intuition, ability to architect scalable ML systems, and engineering capability through Python coding questions. These categories map closely to the onsite loop and reflect what ML teams at Apple prioritize across Siri, Photos, Core ML, Vision, Health, and Apple Services Intelligence. If you’re trying to understand what the Apple machine learning engineer interview experience feels like, think of it as rotating through these three dimensions with different interviewers, all testing how you reason rather than how many buzzwords you can recall.

Machine learning and deep learning interview questions

ML interviews test your ability to explain model behavior, compare algorithms, and reason about training dynamics at scale. Apple expects engineers to understand both theoretical underpinnings and practical model debugging. If you’re searching for Apple MLE interview questions or how to crack the Apple machine learning engineer interview, this is the core you’re being tested on. Reviewing conceptual problems in the machine learning interview learning path helps reinforce the fundamentals Apple emphasizes.

How would you explain the bias-variance tradeoff when choosing a model?

Explain how simpler models tend to have high bias and low variance while flexible models usually have low bias but high variance. Apple evaluates whether you can articulate how this tradeoff affects generalization when operating at global scale across billions of device interactions.

Tip: Always map the tradeoff to a concrete Apple-style scenario such as personalization or speech modeling.
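For reference, the tradeoff is usually stated through the standard decomposition of expected squared error. The notation here is introduced for illustration and is not part of the question: assume data generated as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and let $\hat{f}$ be a model fit on a random training set.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2
  + \mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]
  + \sigma^2
```

The first term is squared bias, the second is variance, and the third is irreducible noise. Simpler models tend to shrink the second term at the cost of the first, and flexible models do the reverse.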

How does a random forest generate its trees, and when might you use it over logistic regression?

Describe bootstrap sampling, feature subsampling, and majority vote aggregation and contrast this with logistic regression’s linear decision boundary. Apple tests whether you can justify algorithm selection based on dataset complexity, interpretability needs, and computational constraints.

Tip: Clarify how you compare feature interactions or stability between the two algorithms.

Explain the difference between XGBoost and random forest. When would you prefer one over the other?

Contrast random forest’s bagging of independently trained trees with XGBoost’s sequential gradient boosting, in which each new tree is fit using gradients of the loss at the current ensemble’s predictions. Interviewers assess whether you can map algorithmic structure to use cases where boosting’s regularization and fine-tuned optimization outperform bagging methods.

Tip: Give a specific application such as ranking or anomaly detection to highlight your choice.

How would you design a facial recognition authentication system for secure employee access?

Outline how embeddings are generated, matched, thresholded, and validated and describe fallback mechanisms for edge cases. Apple evaluates your ability to design secure ML systems that integrate privacy, computation, and real world reliability.

Tip: Explain how you evaluate false positives and false negatives for different access tiers.
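The matching and thresholding step can be sketched as follows. This is a minimal illustration, assuming embeddings are plain lists of floats compared by cosine similarity; the function names `cosine_similarity` and `error_rates` and the sample scores are hypothetical, not taken from any Apple system.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def error_rates(scores, labels, threshold):
    """Return (false_accept_rate, false_reject_rate) at a given threshold.

    scores: similarity scores for probe/enrolled pairs
    labels: True if the pair is the same person, False otherwise
    """
    impostor = [s for s, same in zip(scores, labels) if not same]
    genuine = [s for s, same in zip(scores, labels) if same]
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr

# Hypothetical evaluation pairs: compare a lenient and a strict threshold.
scores = [0.95, 0.80, 0.62, 0.70, 0.40, 0.55]
labels = [True, True, True, False, False, False]
lenient = error_rates(scores, labels, threshold=0.60)
strict = error_rates(scores, labels, threshold=0.75)
```

Raising the threshold trades false accepts for false rejects, which is one way to frame different access tiers: a higher-security tier might accept more false rejects (and rely on a fallback mechanism) in exchange for fewer false accepts.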

How would you design and evaluate a model that detects unsafe objects at a checkpoint?

Describe how you choose model inputs, evaluation metrics, and sampling strategies in a safety critical domain. Apple tests your ability to handle imbalanced data, identify sources of error, and design experiments that stress test edge cases.

Tip: Discuss calibration strategies to reduce catastrophic false negatives.

How would you diagnose unstable gradients while fine tuning a transformer on device specific text?

Discuss potential causes such as learning rate spikes, activation saturation, or mismatched token statistics. Apple evaluates whether you understand transformer training dynamics and can debug instability in a principled way.

Tip: Mention gradient clipping or warmup schedules as mitigation strategies.
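Both mitigations are simple enough to write out by hand. The sketch below is framework-free and treats gradients as a flat list of floats; `clip_by_global_norm` and `warmup_lr` are illustrative names, and the linear warmup shape is an assumption (other schedules are common).

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their combined L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm

def warmup_lr(step, base_lr, warmup_steps):
    """Linear warmup to base_lr over warmup_steps, then constant."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    return base_lr
```

Logging the pre-clip norm returned by `clip_by_global_norm` at every step is also a cheap diagnostic: sudden spikes in that value often point to the batch or layer where instability starts. In PyTorch, `torch.nn.utils.clip_grad_norm_` performs the same kind of global-norm clipping on model parameters.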

A vision model performs well in internal validation but fails heavily on field data. How would you debug the gap?

Explain dataset drift analysis, segmentation of failure clusters, and hardware variation checks. Apple values engineers who can distinguish between model deficiencies and environmental mismatches that arise in global deployments.

Tip: Highlight the importance of monitoring offline to online divergence.

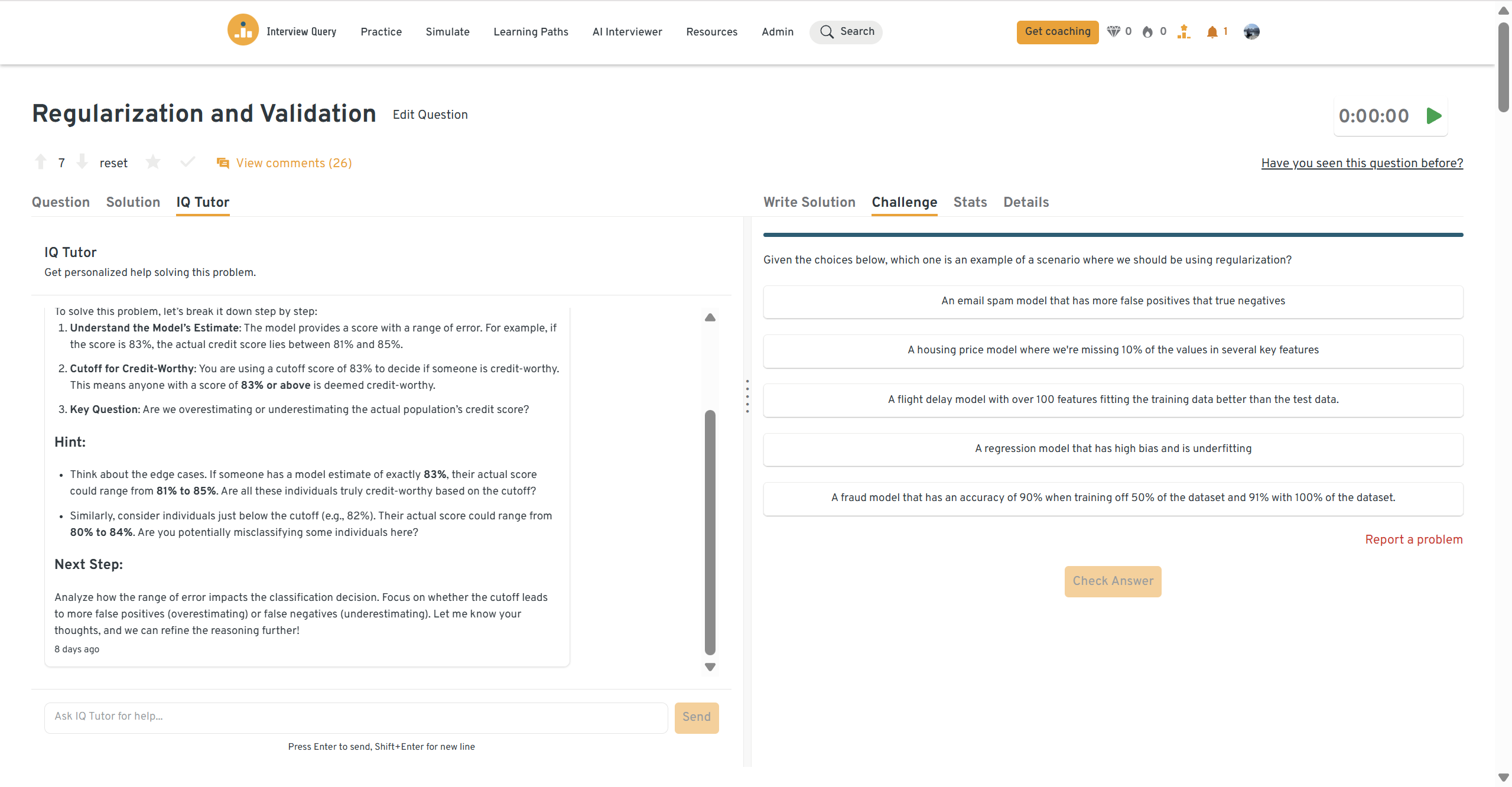

When should you use regularization and when should you use validation to improve model performance?

Explain how regularization constrains model complexity while validation measures generalization across unseen data. Apple evaluates whether you understand how both mechanisms work together during model development and hyperparameter tuning.

Tip: Call out how you prevent overfitting when iterating rapidly.
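One way to show the two mechanisms working together is to fit a regularized model at several penalty strengths and let held-out data pick among them. The sketch below is a toy, assuming a one-feature ridge regression with no intercept; `fit_ridge_1d`, `mse`, and `select_lambda` are hypothetical helper names.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge fit for y ~ w * x (single feature, no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def mse(w, xs, ys):
    """Mean squared error of the prediction w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def select_lambda(train, val, lambdas):
    """Return (lambda, validation_mse, weight) with the lowest validation error.

    train and val are (xs, ys) tuples; regularization shapes each fit,
    while the validation split decides which fit to keep.
    """
    best = None
    for lam in lambdas:
        w = fit_ridge_1d(train[0], train[1], lam)
        err = mse(w, val[0], val[1])
        if best is None or err < best[1]:
            best = (lam, err, w)
    return best
```

The penalty `lam` constrains the weight during fitting, and the validation error is only used for selection, never for fitting. Keeping a separate test split untouched until the end guards against overfitting to the validation set during rapid iteration.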

You can practice this exact problem on the Interview Query dashboard, shown in the accompanying screenshot. The platform lets you write and test SQL queries, view accepted solutions, and compare your performance with thousands of other learners. Features like AI coaching, submission stats, and language breakdowns help you identify areas to improve and prepare more effectively for data interviews at scale.

ML system design interview questions

System design interviews evaluate your ability to architect end to end ML solutions that scale across Apple’s vast ecosystem. This requires thinking about data ingestion, distributed training, inference, privacy, and device constraints. Reviewing case styles in the data science interview learning path helps reinforce structured reasoning.

Design a distributed authentication model for employee and contractor access.

Explain how you collect identity signals, generate embeddings, perform secure lookup, and orchestrate inference at scale. Apple evaluates whether your design balances accuracy, reliability, and privacy concerns.

Tip: Describe how you control load and latency using batching or caching.

How would you design an on device speech recognition system that works offline?

Discuss model compression, quantization, streaming inference, and noise handling strategies. Apple tests how well you reason about latency, memory limits, and end user privacy.

Tip: Mention how you validate model performance across different hardware generations.
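To make the quantization part of the answer concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is an illustration of the general idea only, not a description of how Core ML or the neural engine quantizes models; `quantize_int8` and `dequantize` are hypothetical names.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127].

    Returns the quantized values and the scale needed to recover floats.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map quantized integers back to approximate float weights."""
    return [v * scale for v in q]
```

Each weight is recovered to within half a quantization step (`scale / 2`), so a tensor with a few large outliers gets a coarse step for everything else. That is the usual motivation for per-channel scales or outlier handling, and a good tradeoff to raise when discussing memory limits.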

How would you architect a distributed training pipeline for a multimodal text and image model?

Explain data sharding, synchronization across modalities, mixed precision training, and monitoring strategies. Apple evaluates whether you can build resilient pipelines that support heavy workloads.

Tip: Highlight how you detect skew or imbalance in multimodal inputs.

How would you design a feature extraction pipeline used by hundreds of millions of users?

Describe how you manage freshness, caching, offline computation, and consistency guarantees. Apple tests whether you can create pipelines that remain stable under massive load.

Tip: Reference how you monitor drift or stale features over time.
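Drift monitoring needs some concrete statistic. One common choice, used here as an assumption rather than anything the question prescribes, is the population stability index (PSI) between a baseline sample and a recent sample of the same feature. The function name `psi` and the bin edges are illustrative.

```python
import math

def psi(expected, actual, bin_edges, eps=1e-6):
    """Population stability index between two samples of one feature.

    bin_edges must be sorted ascending; values below the first edge and
    at or above the last edge fall into the two outer bins.
    """
    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            idx = sum(v >= edge for edge in bin_edges)  # index of v's bin
            counts[idx] += 1
        # Floor at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

A PSI of zero means the binned distributions match, and larger values mean more shift. The alerting threshold is something to tune per feature against historical data rather than a fixed constant.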

How would you compare transformer architectures for low latency deployment on the neural engine?

Discuss compute budgeting, kernel fusion, quantization schemes, and ablation strategies. Apple values engineers who can optimize tradeoffs under strict hardware constraints.

Tip: Call out how you measure latency and energy efficiency jointly.

How would you build a continuous evaluation system that detects silent model degradation in production?

Explain shadow deployment, holdback traffic, monitoring metrics, and alert thresholds. Apple evaluates whether you understand long term model reliability at scale.

Tip: Mention how you avoid logging sensitive or identifiable data.

Coding and data manipulation interview questions

Coding interviews test whether you can write clean, efficient Python for modeling, experimentation, or preprocessing. These are the Apple machine learning engineer coding questions that often surprise candidates who focused only on theory. Reviewing algorithmic problems in the data science learning path helps reinforce the implementation quality Apple expects.

Group anagrams from a list of strings.

Explain how hashing normalized string keys allows efficient grouping and discuss complexity implications. Apple evaluates whether you can write structured, maintainable code for ML utility functions.

Tip: Mention how you avoid recomputing string transformations.
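A minimal sketch of the hashing approach, assuming the normalized key is the word's sorted characters (a character-count tuple would also work); `group_anagrams` is an illustrative name.

```python
from collections import defaultdict

def group_anagrams(words):
    """Group words that are anagrams of each other.

    Each word's sorted characters serve as a hashable key, computed
    once per word. Runs in O(n * k log k) for n words of length k.
    """
    groups = defaultdict(list)
    for word in words:
        key = "".join(sorted(word))
        groups[key].append(word)
    return list(groups.values())
```

Groups come back in order of first appearance because Python dictionaries preserve insertion order.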

Determine whether a string is a palindrome.

Explain how a two pointer approach or normalized slicing checks symmetry and discuss edge cases. Apple tests whether you can balance readability with correctness.

Tip: State how you handle punctuation or mixed case formatting.
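The two pointer version might look like the sketch below. It assumes that punctuation and whitespace are ignored and that comparison is case-insensitive, which is one reasonable reading of the prompt worth confirming with the interviewer.

```python
def is_palindrome(text):
    """Check symmetry with two pointers, skipping non-alphanumerics."""
    left, right = 0, len(text) - 1
    while left < right:
        if not text[left].isalnum():
            left += 1
        elif not text[right].isalnum():
            right -= 1
        elif text[left].lower() != text[right].lower():
            return False
        else:
            left += 1
            right -= 1
    return True
```

This runs in linear time with constant extra space, whereas the slicing approach (`s == s[::-1]` on a normalized copy) is shorter but allocates new strings.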

Count daily active users for a given year.

Describe how you parse timestamps, group events, and aggregate unique users in Python. Apple evaluates your ability to translate analytical SQL logic into Pythonic transformations.

Tip: Mention how timezone handling affects accuracy.

Identify upsell transactions based on purchase order.

Explain how sorting, grouping, and sequential comparisons allow you to identify purchases beyond the first event. Apple tests your ability to implement business logic cleanly in code.

Tip: Clarify how you treat same day purchases that should not count as upsells.
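The logic can be sketched in a few lines once "upsell" is pinned down. The definition used here is an assumption: an upsell is any purchase made on a later calendar day than the same user's first purchase, so same-day purchases are excluded. The tuple layout and `upsell_transactions` name are illustrative.

```python
from datetime import date

def upsell_transactions(transactions):
    """Return purchases made on a later day than the user's first purchase.

    transactions: list of (user_id, purchase_date, item) tuples where
    purchase_date is a datetime.date.
    """
    first_day = {}
    for user_id, day, _ in transactions:
        if user_id not in first_day or day < first_day[user_id]:
            first_day[user_id] = day
    return [t for t in transactions if t[1] > first_day[t[0]]]

# Hypothetical data: u1 buys twice on day one, then again four days later.
transactions = [
    ("u1", date(2024, 1, 1), "phone"),
    ("u1", date(2024, 1, 1), "case"),
    ("u1", date(2024, 1, 5), "watch"),
    ("u2", date(2024, 2, 1), "tablet"),
]
upsells = upsell_transactions(transactions)
```

Tracking the minimum date per user avoids a full sort and keeps the pass linear in the number of transactions.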

Implement layer normalization from scratch using NumPy.

Explain how to compute the mean and variance across the feature dimension of each sample, stabilize the division with eps, and scale and shift with the learnable gamma and beta. Apple evaluates understanding of deep learning internals and numerical stability.

Tip: Address why layer normalization, unlike batch normalization, does not depend on batch size.
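A minimal NumPy sketch, assuming the input has shape `(batch, features)` and that `gamma` and `beta` have shape `(features,)`. Statistics are taken over the last axis, so each sample is normalized independently of the others.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize each sample over its feature axis, then scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    # eps inside the square root keeps the division stable when var is ~0.
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta

x = np.array([[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]])
out = layer_norm(x, gamma=np.ones(3), beta=np.zeros(3))
```

Because the mean and variance are computed per row, running the function on a single row gives the same result as running it on the full batch, which is the property that separates it from batch normalization.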

Write a rolling median function for streaming sensor data.

Explain how to maintain two heaps or a balanced structure to support insertions and median queries efficiently. Apple tests reasoning about scalability under continuous data streams.

Tip: Highlight how you manage memory over long running streams.
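The two-heap idea can be sketched with the standard `heapq` module. This version tracks the median of every value seen so far; the class name `RunningMedian` is illustrative.

```python
import heapq

class RunningMedian:
    """Median of all values seen so far, using two heaps."""

    def __init__(self):
        self._low = []   # max-heap of the smaller half (stored negated)
        self._high = []  # min-heap of the larger half

    def add(self, value):
        # Push through the low heap so the largest of the smaller half
        # moves up, then rebalance so low never has fewer items than high.
        heapq.heappush(self._low, -value)
        heapq.heappush(self._high, -heapq.heappop(self._low))
        if len(self._high) > len(self._low):
            heapq.heappush(self._low, -heapq.heappop(self._high))

    def median(self):
        if not self._low:
            raise ValueError("no values seen yet")
        if len(self._low) > len(self._high):
            return -self._low[0]
        return (-self._low[0] + self._high[0]) / 2
```

Insertion is O(log n) and the median query is O(1), but both heaps grow with the stream. A fixed-size window would additionally need a way to evict expired values, for example lazy deletion from the heaps or a sorted container, which is the memory question the tip points at.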

Behavioral and cross-functional interview questions

Behavioral interviews at Apple evaluate how you communicate complex ideas, collaborate with engineering and research partners, and navigate ambiguity in fast-moving ML environments. Strong answers demonstrate ownership, clarity, and end-to-end thinking. Reviewing example prompts in the data science interview learning path helps reinforce the storytelling structure Apple expects.

Describe a data or ML project you worked on. What were the main challenges you faced?

Apple looks for candidates who can manage incomplete data, debug unstable training runs, and collaborate with cross-functional partners when experiments fail. Interviewers expect you to articulate both technical challenges such as model drift or preprocessing issues and organizational challenges such as conflicting requirements or evolving product goals.

Tip: Mention one technical challenge and one collaboration challenge to demonstrate full-spectrum ownership.

Sample Answer: I worked on a multilingual sentiment model where data quality varied significantly by language. Midway through training, I discovered unstable gradients caused by token frequency imbalance. I partnered with data engineering to rebalance sampling and worked closely with PMs to redefine success criteria for low-resource languages. After these adjustments, the model improved stability and increased macro-F1 by more than 11 percent across target markets.

What are effective ways to make complex ML outputs easier for non-technical teams to understand?

Apple values communication as much as technical ability since ML engineers frequently work with product, design, and operations teams. Interviewers want to see whether you can simplify deep learning concepts into decision-ready insights rather than algorithmic details.

Tip: Tie your communication approach to faster decision making or reduced risk.

Sample Answer: When explaining model performance to product teams, I start by tying metrics directly to user experience. Instead of diving into architecture, I translate outputs into clear user-centric terms like error rates for specific flows. I also build short annotated visualizations to show patterns instead of dense tables. This helps non-technical partners give actionable feedback early without getting lost in jargon.

What would your current manager say about your strengths and weaknesses?

Interviewers want balanced self-awareness, especially for ML roles where iteration speed, experimentation discipline, and communication matter. Choose strengths that align with ML engineering, such as debugging ability, reproducibility rigor, or cross-functional alignment.

Tip: Pair each weakness with a concrete mitigation technique you already use.

Sample Answer: My manager would highlight structured debugging and clarity of communication as my key strengths. I am able to break down training failures into interpretable sub-problems and communicate the fix path clearly to partners. A weakness is that I sometimes over-optimize early prototypes. To address this, I now time-box early iterations and validate assumptions before deep optimization.

Talk about a time when you had trouble communicating with stakeholders. How did you overcome it?

Apple assesses whether you can tailor communication styles to engineering, product, and leadership audiences. This matters because ML engineers often need to explain tradeoffs and constraints while still enabling partners to move forward.

Tip: Emphasize how the relationship or workflow improved as a result.

Sample Answer: I once collaborated with a product manager who preferred high-level insights while I initially shared detailed experiment logs. This caused misalignment around priority decisions. I shifted to a one-page summary format that framed the core question, the experiment outcome, and the recommendation. This improved alignment immediately and we reduced iteration cycles by nearly half.

Why do you want to work at Apple?

Apple looks for mission alignment, product understanding, and appreciation for privacy-driven ML. Interviewers want an answer grounded in the unique constraints and opportunities of building ML experiences for billions of users.

Tip: Mention a specific Apple product or ML domain that motivates you.

Sample Answer: I want to work at Apple because the company builds ML experiences that directly impact daily life at global scale. I’m particularly motivated by Apple’s privacy-preserving ML philosophy and the opportunity to design models that run efficiently on-device. The engineer-researcher collaboration at Apple also matches how I like to work: fast iteration grounded in strong fundamentals.

Tell me about a time you influenced a decision using data or model results.

ML engineers often need to persuade teams to adopt or sunset models based on evidence. Apple evaluates whether you can interpret results responsibly, acknowledge limitations, and frame decisions in business or product terms.

Tip: Show that your recommendation led to measurable changes.

Sample Answer: I worked on an intent classifier whose false negative rate was hurting user task completion. By segmenting errors, I showed that the model underperformed on short queries and proposed a hybrid fallback rule. After piloting the change, overall task completion improved by 8 percent and the team adopted the hybrid system permanently.

Describe a time you had to work through ambiguity in an ML project. How did you bring clarity?

Ambiguity is common in early stage research and in production ML where requirements shift. Apple looks for the ability to structure uncertainty rather than wait for instruction.

Tip: Mention how you aligned stakeholders through clear definitions or metrics.

Sample Answer: During an early recommendation prototype, the product goal lacked a clear metric. I defined three viable success metrics and walked stakeholders through tradeoffs. We aligned on precision at K as the primary signal and retention as a guardrail. That clarity enabled faster experimentation and led to a successful launch.

How To Prepare For An Apple Machine Learning Engineer Interview

Preparing for an Apple MLE interview requires strong ML fundamentals, engineering discipline, and the ability to reason clearly about model behavior at scale. Apple expects candidates to understand deep learning architectures, optimization, system design, and failure modes, while also demonstrating excellent communication and collaboration. Many candidates reinforce their foundation through the machine learning interview learning path, the data science interview learning path, hands-on practice in the ML question bank, and timed drills in the challenges library. To rehearse answers end to end, you can also use the AI interview tool, which simulates realistic follow-ups.

If you prefer to start with a quick visual overview, watch this guide on how to become a machine learning engineer by Jay Feng, co-founder of Interview Query. It’s a concise walkthrough of the skills, benefits, and differentiation strategies for aspiring ML engineers.

Strengthen your deep learning fundamentals and training intuition

Apple expects MLEs to understand core DL concepts thoroughly, including gradient behavior, regularization strategies, architecture tradeoffs, and training stability. You should be able to explain exactly why a model diverges, underfits, or fails specific edge cases. The machine learning learning path offers structured practice on these topics.

Tip: Practice articulating failure modes of architectures you have worked with, such as transformers or CNNs.

Refresh your Python coding, data structures, and modeling utilities

Coding interviews often involve building small components like normalization layers, custom losses, or preprocessing pipelines. Apple evaluates code readability, performance awareness, and debugging clarity. Reviewing implementation focused problems in the data science learning path helps reinforce structure and quality.

Tip: Focus on writing clean helper functions and clear docstrings to show engineering maturity.

Practice ML system design using a structured, repeatable framework

ML system design questions require thinking about data ingestion, training pipelines, monitoring, deployment, and privacy. You should be able to break down ambiguity and propose scalable, robust architecture flows. Reviewing end to end scenarios in the machine learning learning path helps build the structure Apple looks for.

Tip: Use the same flow for every system design answer: problem definition, constraints, architecture, evaluation, and risks.

Prepare one or two anchor ML projects to explain in depth

Apple expects deep dives into technical decisions, modeling tradeoffs, data challenges, and long term maintenance implications. You should be comfortable explaining experiments you ran, decisions you made, and what you would change with more time or resources.

Tip: Be ready to sketch diagrams or pipelines when describing your project flow.

Review statistics and experimentation basics for evaluation questions

While not as statistics heavy as DS interviews, MLE loops still include reasoning about metrics, error analysis, confidence intervals, and experiment interpretation. Reviewing evaluation style prompts in the data science learning path helps reinforce structured decision making.

Tip: Always connect evaluation choices to the product or system constraints rather than treating metrics as generic.

Build familiarity with Apple products and on device ML constraints

Many Apple MLE roles involve latency sensitive, privacy preserving, or hardware constrained environments. Interviewers expect candidates to understand why Apple favors on device inference and how this influences architecture selection.

Tip: Study quantization, pruning, and other model compression techniques that support Neural Engine deployment.
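As a starting point for the quantization topic, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is a generic textbook scheme, not a description of how Core ML or the Neural Engine quantizes models; the names `quantize_int8` and `dequantize` are assumptions for the example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127].

    Returns (quantized_values, scale) where weight ~= quantized_value * scale.
    Hypothetical helper for illustration only.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from quantized integers."""
    return [q * scale for q in quantized]
```

With this scheme the round-trip error for each weight is at most half of `scale`, so a single outlier weight inflates the error for the whole tensor. That tradeoff is one motivation for per-channel scales and similar refinements.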

Practice behavioral storytelling that highlights ownership and clarity

Behavioral interviews are structured and expect reflection, concise storytelling, and strong collaboration examples. Reviewing behavioral prompts in the mock interview library helps you practice explaining how you overcame obstacles, aligned stakeholders, and made decisions under ambiguity.

Tip: End each STAR story with a measurable outcome or what you learned for future work.

Apple Machine Learning Engineer Salary

Machine learning engineers at Apple earn some of the highest compensation packages in the industry, reflecting the technical depth and product impact of ML roles across Siri, Vision, on-device ML, Apple Services, and Hardware Technology. Based on 2025 data from Levels.fyi, total annual compensation for Apple MLEs typically ranges from about $189K for ICT2 roles to over $528K for ICT6 roles. The median total annual compensation reported for Apple MLEs in the United States is approximately $363K. Compensation varies significantly by team, location, and equity refresh cycles.

Average Annual Compensation by Level

| Level | Total (Annual) | Base (Annual) | Stock (Annual) | Bonus (Annual) |
|---|---|---|---|---|
| ICT2 (Junior Software Engineer) | $192K | $132K | $43K | $7.9K |
| ICT3 (Software Engineer) | $264K | $168K | $72K | $16.8K |
| ICT4 (Senior Software Engineer) | $384K | $216K | $144K | $21.6K |
| ICT5 | $540K | $252K | $252K | $39.6K |
| ICT6 | $528K | $252K | $276K | - |

FAQs

How competitive is the Apple MLE interview?

Apple’s MLE interviews (sometimes called the “Apple AIML interview” in job posts) are highly competitive because they evaluate both deep technical ability and strong engineering judgment. Candidates must demonstrate fluency in ML fundamentals, deep learning architectures, system design, and coding quality. Practicing realistic problems in the machine learning interview learning path can help you benchmark against Apple’s expectations.

What is the typical timeline for the Apple MLE interview process?

Most candidates complete the full process in four to six weeks. This includes a recruiter screen, one or two technical phone interviews, a take-home or coding assessment for some teams, an onsite loop with five to eight rounds, and a final hiring manager conversation. Timelines may vary depending on team availability and whether multiple teams are considering your profile.

Do I need deep learning experience for Apple MLE roles?

Yes. Many Apple MLE teams expect hands-on experience training and debugging deep learning models, including CNNs, transformers, or multimodal architectures. Reviewing model fundamentals in the machine learning interview learning path strengthens the foundations necessary for these discussions.

How much coding is included in the Apple MLE interview?

Coding interviews typically involve Python and focus on model utilities, data transformations, numerical stability, and ML-oriented coding patterns. Apple values clean, readable code with clear reasoning. To prepare, many candidates use the coding modules in the data science learning path.
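A standard example of the numerical stability theme is softmax. The sketch below uses the log-sum-exp trick, subtracting the maximum before exponentiating so that large logits do not overflow. The function names are assumptions for the example, and the code uses only the standard library.

```python
import math


def log_sum_exp(xs):
    """Compute log(sum(exp(x) for x in xs)) without overflow."""
    m = max(xs)
    # exp(x - m) is at most 1, so the sum cannot overflow.
    return m + math.log(sum(math.exp(x - m) for x in xs))


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    lse = log_sum_exp(xs)
    return [math.exp(x - lse) for x in xs]
```

A naive version that calls `math.exp(1000.0)` raises `OverflowError` in Python, while `softmax([1000.0, 1001.0, 1002.0])` here returns the same probabilities as `softmax([0.0, 1.0, 2.0])`, since softmax is invariant to adding a constant to every input.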

What system design concepts should I expect?

Expect questions about designing training pipelines, distributed architectures, on-device inference flows, feature stores, monitoring systems, or privacy-preserving ML. Apple evaluates whether you can structure ambiguous requirements into scalable, reliable systems.

Does Apple expect MLEs to understand statistics and experimentation?

Yes. While Apple MLE roles lean heavily toward modeling and engineering, you still need to reason about metrics, error analysis, confidence intervals, drift detection, and experiment interpretation. Reviewing fundamentals in the data science learning path helps reinforce these skills.
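For drift detection, one widely used heuristic is the population stability index (PSI), which compares the binned distribution of a feature at training time against its distribution in production. The sketch below is a minimal version under stated assumptions: equal-width bins derived from the reference data, out-of-range values clipped into the edge bins, and a small `eps` floor to avoid taking the log of zero. The function name and defaults are hypothetical.

```python
import math


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a reference sample (`expected`) and a new sample (`actual`).

    Returns 0 when the binned distributions match; larger values mean more drift.
    Hypothetical helper for illustration only.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins
    if width == 0:
        width = 1.0  # degenerate reference sample: everything lands in one bin

    def binned_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Clip values outside the reference range into the edge bins.
            idx = max(0, min(n_bins - 1, idx))
            counts[idx] += 1
        # Floor at eps so empty bins do not produce log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e = binned_fractions(expected)
    a = binned_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Each term `(ai - ei) * log(ai / ei)` is non-negative, so the index only grows as the two distributions diverge. Where to set an alerting threshold depends on the feature and the product, which ties back to connecting evaluation choices to system constraints.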

Do Apple MLEs work closely with product teams?

Most do. Apple’s ML engineers frequently collaborate with product managers, designers, and applied researchers to translate high-level goals into measurable, ML-driven requirements. Strong communication and alignment skills are essential.

Is prior Apple experience required?

No. Apple hires candidates from diverse backgrounds, but it expects clear ownership of end-to-end ML work, including debugging, deployment, and iteration. Candidates should be able to explain real projects deeply and confidently.

If you want to see how your current skill set compares to typical Apple MLE interview questions, start with the machine learning interview learning path and then schedule a session in mock interviews to pressure-test your stories.

Your Apple MLE Journey Starts With How You Train Your Mind

Apple’s MLE roles demand a rare blend of scientific rigor, engineering precision, and elegant problem framing. These interviews reward people who don’t just know ML, but who reason about ML the way Apple builds ML: thoughtfully, systematically, and with extreme clarity.

The most effective way to prepare is to train your thinking the same way. Use the learning paths to build a foundation of structured reasoning. Practice ML system design and modeling tradeoffs with curated ML questions. And when you’re ready to test your communication and decision-making under pressure, schedule a session through mock interviews.

Give yourself the preparation that rewires how you approach machine learning, because the moment you start thinking like an Apple engineer is the moment you become one.