Apple Data Scientist Interview Guide: Process, Questions & Salary

Introduction

Apple operates one of the world’s largest consumer technology ecosystems, with more than 2 billion active devices globally and hundreds of millions of users generating signals across hardware, software, and services every day. From iPhone telemetry to App Store behavior, Apple Music streams, Siri interactions, and iCloud usage patterns, the company processes petabytes of data daily to improve performance, reliability, personalization, and customer experience.

Data scientists sit at the center of this system. They build models that power real-time recommendations, optimize wireless connectivity for millions of devices, evaluate product changes through rigorous experimentation, and inform strategic decisions across Apple’s $390B+ annual business. The work blends deep technical skill, product intuition, and the ability to influence decisions across engineering, product, and design.

If you are preparing for the Apple data scientist interview, this guide breaks down the role, the Apple data scientist interview process, and the most common Apple data scientist interview questions you can expect. Many candidates strengthen their foundations using the data science interview learning path, since Apple places strong emphasis on statistics, machine learning, coding, and structured problem solving.

What does an Apple data scientist do?

Apple data scientists apply machine learning, statistics, and experimentation to improve products used by hundreds of millions of people daily. Because Apple integrates hardware, software, and services, data scientists work across systems that generate billions of events per day, from App Store search queries to iPhone sensor signals to Siri voice interactions.

Common responsibilities include:

Data analysis and modeling:

Analyzing massive datasets (often at petabyte scale) to uncover behavioral patterns, optimize performance, predict failures, or personalize user experiences.

Machine learning development:

Building ML models for search ranking, recommendation systems, anomaly detection, user segmentation, predictive maintenance, and natural language understanding.

Experimentation and causal inference:

Designing A/B tests and quasi-experiments to evaluate product changes across services like Apple Music, Apple TV+, the App Store, and iCloud, and helping teams interpret impact beyond click-through rates using metrics such as retention, latency, or system efficiency.

Data infrastructure & pipeline design:

Partnering with data and software engineers to develop high-quality datasets, ETL pipelines, dashboards, and monitoring tools with strict privacy safeguards.

Cross-functional partnership:

Working with engineering, product, design, marketing, and hardware teams to define metrics, improve user journeys, and model trade-offs for global-scale launches.

Communication & influence:

Presenting insights to senior leadership and translating statistical findings into product decisions. Apple expects data scientists to influence direction without relying on excessive jargon.

Typical backgrounds include:

- Advanced degrees (MS/PhD) in statistics, computer science, ML, operations research, or related fields

- Strong Python, SQL, and experimentation foundations

- Experience applying ML and analytics to complex, real-world systems

- Ability to communicate clearly and work collaboratively across disciplines

You can practice these fundamentals through Interview Query’s Apple data scientist interview questions, which mirror the statistical reasoning and modeling depth Apple expects.

Apple Data Scientist Interview Process

Apple’s interview process emphasizes technical depth, statistical rigor, and clarity of thought. While formats vary by team (Apple Services, Siri/ML, Maps, Health, Wireless Technology, App Store, etc.), most follow a multi-stage structure that evaluates your coding ability, machine learning intuition, experimentation skills, product sense, and communication.

Candidates often supplement their preparation with the data science interview learning path and the machine learning interview path, especially since Apple’s loops frequently mix theory with practical business and product reasoning.

Interview stages overview

| Stage | What it focuses on |
|---|---|
| Application & resume review | Background fit, research experience, relevant ML/analytics projects |
| Recruiter screen | Experience overview, team alignment, high-level technical depth, motivation |
| Technical phone screens | Coding (Python/SQL), ML fundamentals, statistical reasoning |
| Take-home or assessment (role-dependent) | Light modeling task, data analysis, or SQL challenge |
| Onsite or virtual loop | 5–8 interviews covering ML, coding, statistics, experimentation, product sense, and behavioral skills |
| Hiring manager + cross-functional conversations | Deep dive into past projects, impact, collaboration style, and culture fit |
| Final decision | Team sync, feedback calibration, and offer decision |

Application & resume review

Apple’s initial screen focuses on identifying candidates with strong quantitative backgrounds and clear evidence of real-world impact. Reviewers look for end-to-end project ownership, especially in experimentation, model deployment, and result interpretation. Clear communication around statistical decisions and uncertainty is viewed as a strong signal.

What Apple looks for:

- Strong foundation in statistics, experimentation, ML, and coding

- Experience owning end-to-end DS or ML projects, including experiment design and evaluation

- Measurable impact tied to decisions, not just metrics

- Comfort working with large datasets or distributed systems

- Ability to explain statistical reasoning and trade-offs clearly

This stage determines whether your experience matches the expectations of specific Apple teams. A well-scoped resume with measurable outcomes and clear experimental reasoning stands out.

You can use the Apple data scientist interview questions repository to benchmark the level of detail Apple expects.

Tip: When describing experiments, include not just outcomes but also why results were or were not trusted.

Recruiter screen

The recruiter screen confirms alignment between your background and the needs of the hiring team. This conversation sets expectations for timelines, interview format, and technical depth. Recruiters also assess how clearly you can explain past experimentation and analytical decisions.

What you’ll discuss:

- Summary of your DS background and key projects

- Your experience with experimentation, statistics, ML, and coding

- How you evaluated results and handled ambiguous or noisy outcomes

- Which Apple teams you may fit best

- Why you are interested in Apple and this role

- Timeline, location, and interview expectations

Recruiters relay your summary to hiring managers, so your explanation of past projects should be structured and concise, especially around experimental judgment.

If you want a sense of the skills Apple prioritizes in early screens, review the fundamentals in Interview Query’s SQL interview learning path and machine learning overview.

Tip: Prepare a short overview that highlights how you reasoned through experimental results, not just what you built.

Technical phone screens

Technical phone screens evaluate your mastery of data science fundamentals. Most roles include one or two 45–60 minute sessions. Recent candidates report that experimentation questions are largely discussion-based rather than calculation-heavy.

Focus areas and what to expect:

- Coding (Python/SQL): Data cleaning, joins, metric computation, and transformations. You can practice this style through Interview Query’s SQL interview questions and scenario-based SQL problems.

- Statistical reasoning: Experiment design, power considerations, and interpreting inconsistent or noisy results.

- ML fundamentals: Evaluation metrics, bias and variance, feature choices, and trade-offs.

- Applied DS thinking: Structuring experimentation or modeling problems end-to-end.

Interviewers emphasize how you reason through design choices, assess validity, and communicate uncertainty. Precise formulas matter less than sound judgment and clear explanation.

Tip: Walk through your thinking step by step and explain why an experiment should or should not be trusted.

Take-home or assessment (team-dependent)

Some Apple groups use a short assignment to evaluate analytical thinking and communication. These assessments often mirror real experimentation or analysis tasks.

Possible formats include:

- Data analysis such as exploratory analysis and trend interpretation

- Modeling tasks involving building and evaluating a simple model

- SQL challenges focused on querying and aggregating structured data

- Experiment interpretation, including assessing A/B test results or diagnosing anomalies

The focus is on clarity, reproducibility, and the logic behind conclusions rather than complex math or over-engineered solutions. You can practice this format in Interview Query’s take-homes library.

Tip: Clearly state assumptions, limitations, and confidence in your conclusions.

Onsite or virtual loop

The onsite loop consists of 5–8 interviews that test deep technical ability, structured problem solving, and collaboration. Experimentation interviews are typically conversational and probe judgment rather than computation.

Typical interview components include:

- Machine learning and modeling, covering feature choices, evaluation, and limitations

- Statistics and experimentation, including A/B test design, result validity, uncertainty, and error sources

- Coding and data manipulation using Python and SQL on realistic datasets

- Product or ML design focused on end-to-end systems for Apple products

- Behavioral and cross-functional interviews assessing communication, ownership, and collaboration

Apple’s interviews are discussion-driven. You are expected to justify choices, compare alternatives, and explain how you would act when results are unclear.

Tip: For experimentation questions, focus on reasoning, risks, and decision-making rather than equations.

Hiring manager conversation

This round focuses on depth, communication, and long-term alignment. It often centers on one or two major projects from your resume, with close attention to how you evaluated results and made decisions under uncertainty.

Hiring managers evaluate:

- How you frame ambiguous problems

- Your end-to-end thinking from experiment design to interpretation

- How you collaborate with PMs, engineers, and researchers

- Whether your judgment scales to Apple-level complexity

- Your ability to communicate trade-offs clearly

Tip: Be ready to discuss cases where experimental results were inconclusive and how you handled them.

Final decision

After interviews, Apple conducts a calibration across interviewers and teams. Feedback is synthesized holistically, with strong emphasis on experimental judgment, technical rigor, and communication maturity. Timelines vary from several days to a few weeks.

Tip: Follow up with your recruiter for updates and clarification on next steps.

Apple Data Scientist Interview Questions and Answers

Apple data scientist interviews emphasize depth of reasoning, the ability to explain trade-offs clearly, and comfort working with ambiguous real-world data. You can expect a mix of machine learning fundamentals, applied statistics and experimentation, coding with Python or SQL, and product/ML design questions that evaluate how you think about models at scale. If you’re specifically looking for Apple data science interview questions, start with the Apple data scientist question bank and then layer on practice from the machine learning interview learning path to build the structured thinking Apple expects.

Machine learning interview questions

ML interviews test whether you can explain model behavior, compare algorithms, and design systems that scale across billions of devices. Reviewing conceptual problems in the machine learning interview learning path can help reinforce the fundamentals Apple expects.

How would you explain the bias–variance tradeoff when choosing a model?

Explain how simpler models typically exhibit high bias and low variance, while more flexible models show low bias and high variance. Interviewers evaluate whether you can articulate how this tradeoff affects generalization, especially across Apple’s diverse user base. For example, personalization systems must balance stability against adapting to changing behavior.

Tip: Always connect the tradeoff to real product constraints like data sparsity, privacy limits, or heterogeneous populations.

How does a random forest generate its trees, and why might you use it over logistic regression?

Describe bootstrap sampling, feature subsampling, and aggregating predictions across trees. Then compare RF to logistic regression: the former captures nonlinear relationships and feature interactions without manual feature engineering, while the latter is easier to interpret but assumes a linear relationship between the features and the log-odds. Interviewers want to see disciplined comparison rather than algorithm memorization.

Tip: Clarify how you would evaluate feature interactions and model complexity when choosing between the two.
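The two sources of randomness in a random forest can be sketched in a few lines. This is only the sampling step, not a full forest: the function names and the square-root subset size are illustrative assumptions (a common default), and the feature subset is drawn once here for brevity, whereas standard random forests redraw it at every split.

```python
import math
import random

def bootstrap_indices(n_rows, rng):
    """Sample n_rows row indices with replacement (one tree's training set)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]

def feature_subset(n_features, rng):
    """Pick roughly sqrt(n_features) distinct candidate features."""
    k = max(1, int(math.sqrt(n_features)))
    return sorted(rng.sample(range(n_features), k))

rng = random.Random(0)
n_rows, n_features = 10_000, 16
rows = bootstrap_indices(n_rows, rng)
features = feature_subset(n_features, rng)

# Rows never drawn are "out-of-bag"; their share approaches 1/e (about 37%),
# which is why out-of-bag rows can serve as a built-in validation set.
oob_fraction = 1 - len(set(rows)) / n_rows
```

Because each tree sees a different resample and a different feature subset, the trees' errors are less correlated, and averaging them reduces variance.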

Explain the difference between XGBoost and random forest. When would you prefer one over the other?

Contrast random forest’s bagging approach with XGBoost’s boosting framework that builds trees sequentially to reduce residual errors. Mention that XGBoost allows tighter control over regularization and optimization. Interviewers assess whether you can match model capabilities to problem characteristics.

Tip: Explicitly state use cases—e.g., ranking for App Store search (XGBoost) vs. baseline anomaly modeling (RF).

How would you design a facial-recognition authentication system for secure employee access?

Outline a system that includes data collection, embedding generation, face matching heuristics, thresholding logic, and fallback options for contractors. Discuss privacy considerations, on-device processing, and drift monitoring. This tests structured ML system design.

Tip: Mention how you would evaluate false positives and false negatives differently depending on access security levels.

How would you design and evaluate a model that detects unsafe objects at a checkpoint?

Describe the input data (images, metadata), labeling, feature extraction or deep learning approaches, and evaluation metrics prioritizing recall. Interviewers watch for thoughtful discussion of asymmetric costs and robustness testing.

Tip: Explain how you would test edge cases and operational failures before deployment.

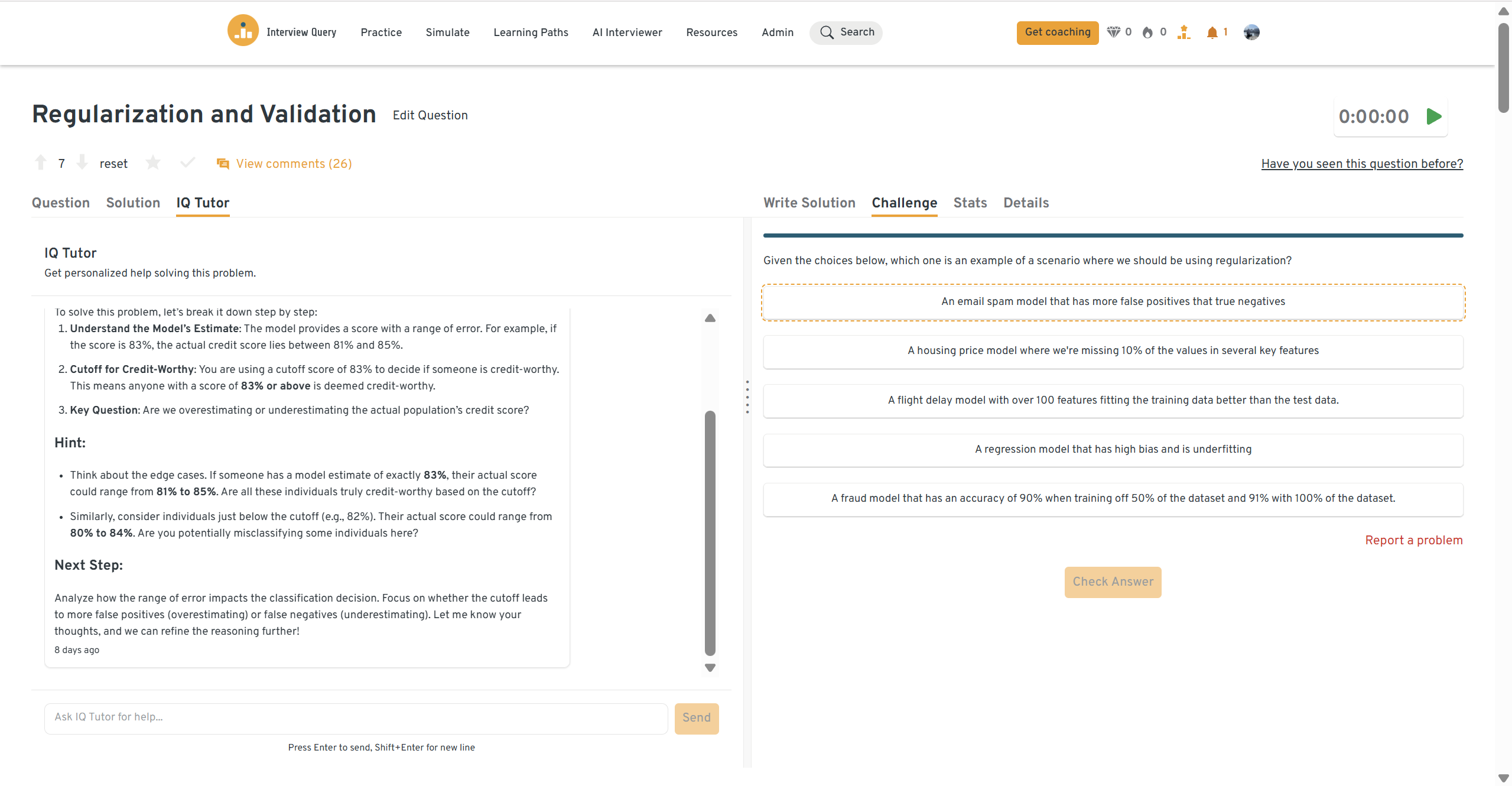

When should you use regularization vs. cross-validation?

Clarify that regularization reduces model complexity, while cross-validation evaluates generalization. Apple expects candidates to distinguish between improving fit and assessing fit.

Tip: Give a concrete example where using both is necessary to avoid misleading validation results.


Statistics and experimentation interview questions

Apple frequently uses A/B testing and quasi-experiments across services such as App Store, Apple Music, iCloud, and Siri. You can practice similar reasoning patterns through the experimentation interview questions library.

How many more samples are needed to reduce a margin of error from 3 to 0.3?

Because margin of error is inversely proportional to the square root of sample size, reducing it by a factor of 10 requires roughly 100× the sample size, assuming similar variance and confidence level. Interviewers want to see the underlying statistical reasoning.

Tip: Rewrite the formula before solving—this models disciplined statistical thinking.
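The inverse-square relationship can be checked numerically. The sketch below assumes the quantity being estimated is a proportion with worst-case p = 0.5 and that "3" and "0.3" are percentage points; both are assumptions for illustration, and the function name is hypothetical. The margin of error is E = z * sqrt(p(1 - p) / n), so n = z^2 * p(1 - p) / E^2.

```python
import math
from statistics import NormalDist

def sample_size_for_margin(margin, p=0.5, confidence=0.95):
    """Smallest n such that z * sqrt(p * (1 - p) / n) <= margin."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z**2 * p * (1 - p) / margin**2)

n_wide = sample_size_for_margin(0.03)     # margin of 3 percentage points
n_narrow = sample_size_for_margin(0.003)  # margin of 0.3 percentage points
ratio = n_narrow / n_wide                 # close to 100, up to rounding
```

The ratio depends only on the two margins, since z and p(1 - p) cancel, which is why the answer holds regardless of the confidence level chosen.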

What is a confidence interval, and why is it useful?

Define the CI, explain how it quantifies uncertainty around parameter estimates, and describe why Apple uses CIs for understanding engagement, performance, and latency variability.

Tip: Emphasize that a 95% confidence level describes the long-run coverage of the procedure, not the probability that the parameter lies in any one computed interval.
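One way to make the interpretation concrete is a small coverage simulation. The sketch below assumes normally distributed data with a known standard deviation (so a z-interval applies); the function name and parameter values are illustrative only.

```python
import random
from statistics import NormalDist, fmean

def ci_covers_truth(true_mean, sigma, n, rng, confidence=0.95):
    """Draw one sample and report whether its z-interval contains true_mean."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
    half_width = z * sigma / n ** 0.5  # sigma treated as known for simplicity
    center = fmean(sample)
    return center - half_width <= true_mean <= center + half_width

rng = random.Random(42)
trials = 2_000
# Share of repeated experiments whose interval captures the true mean.
coverage = sum(ci_covers_truth(10.0, 2.0, 50, rng) for _ in range(trials)) / trials
```

Across many repeated samples, about 95% of the intervals contain the true mean. Any single interval either contains it or does not.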

How would you determine the sample size needed for an A/B test?

Sample size depends on effect size, variance, significance level, and desired power. At a fixed power, detecting smaller effects requires dramatically larger samples, because the required size grows with the inverse square of the effect. Interviewers evaluate whether you know how these components interact.

Tip: Give an Apple-relevant example such as detecting small Siri latency improvements.
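How these inputs interact can be sketched with the standard normal-approximation formula for a two-sided, two-sample comparison of means, n per arm ≈ 2(z_{α/2} + z_β)² σ² / δ². The function name and the latency numbers below are hypothetical, chosen only to illustrate the scaling.

```python
import math
from statistics import NormalDist

def n_per_group(effect, sigma, alpha=0.05, power=0.8):
    """Approximate per-arm sample size for a two-sided, two-sample z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 * sigma**2 / effect**2)

# Hypothetical example: latency with a 100 ms standard deviation.
n_large_effect = n_per_group(effect=10.0, sigma=100.0)  # detect a 10 ms change
n_small_effect = n_per_group(effect=5.0, sigma=100.0)   # detect a 5 ms change
n_higher_power = n_per_group(effect=10.0, sigma=100.0, power=0.9)
```

Halving the detectable effect roughly quadruples the required sample, while raising power from 80% to 90% increases it by about a third.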

How would you diagnose an A/B test that shows a positive directional lift but is not statistically significant?

Investigate tracking issues, validate randomization, check variance sources, perform power calculations, and review segmented results. Apple looks for systematic diagnosis rather than defaulting to “run it longer.”

Tip: Mention at least one non-sample-size explanation (e.g., attribution window mismatch).
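A quick first step in that diagnosis is to look at the confidence interval for the lift rather than only the p-value. The sketch below uses a two-proportion z-test; the function name and the conversion counts are made up for illustration.

```python
from statistics import NormalDist

def two_proportion_summary(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Return (absolute lift, two-sided p-value, CI for the lift)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Pooled standard error for the hypothesis test (assumes no true difference).
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    p_value = 2 * (1 - NormalDist().cdf(abs(diff / se_pooled)))
    # Unpooled standard error for the interval around the observed lift.
    se_unpooled = (p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b) ** 0.5
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return diff, p_value, (diff - z_crit * se_unpooled, diff + z_crit * se_unpooled)

# Hypothetical counts: 5.0% vs 5.4% conversion with 10,000 users per arm.
diff, p_value, ci = two_proportion_summary(500, 10_000, 540, 10_000)
```

Here the lift is positive but the interval spans zero, so the data are compatible with both no effect and a meaningful gain. That points toward an underpowered test rather than evidence of no effect.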

If you run an A/B test 20 times and only one shows a positive result, would you trust it?

This question evaluates statistical reasoning and skepticism around false positives. Interviewers assess whether candidates understand multiple testing, randomness, and the difference between statistical significance and practical significance.
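The multiple-testing arithmetic behind this question is short enough to sketch. The snippet assumes 20 independent tests with no true effect, each run at a 5% significance level; the function names are illustrative.

```python
def familywise_error_rate(n_tests, alpha=0.05):
    """Chance of at least one false positive across independent null tests."""
    return 1 - (1 - alpha) ** n_tests

def bonferroni_alpha(n_tests, alpha=0.05):
    """Per-test threshold that keeps the family-wise rate at or below alpha."""
    return alpha / n_tests

fwer = familywise_error_rate(20)   # roughly 0.64
per_test = bonferroni_alpha(20)    # about 0.0025
```

With a roughly 64% chance of at least one spurious "win" under these assumptions, a single positive result out of 20 is what chance alone would often produce, so it deserves a replication or a corrected threshold before anyone acts on it.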

How do you determine the duration of an A/B test and whether it ran successfully?

This question tests knowledge of experimental design fundamentals. Candidates are expected to discuss power analysis, minimum detectable effect, traffic considerations, and criteria for determining whether results are reliable and actionable.

SQL and coding interview questions

Apple’s SQL and Python interviews evaluate whether you can transform large-scale data into reliable, structured outputs. If you want to practice similar patterns, explore the SQL interview learning path.

How would you compute cumulative sales for each product since its last restocking date?

Join restocking to sales, identify the last restock event per product, and use a window function to compute running totals from that point forward. Interviewers evaluate temporal joins and window frame definitions.

Tip: State the output grain first—“one row per product per day.”
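The approach can be sketched with an in-memory SQLite database. The table and column names (`restocking`, `sales`, `units`) are hypothetical, the sample rows are invented, and the choice to count sales made on the restock date itself is an assumption worth confirming with the interviewer. Window functions require SQLite 3.25 or newer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE restocking (product_id INTEGER, restock_date TEXT);
CREATE TABLE sales (product_id INTEGER, sale_date TEXT, units INTEGER);
INSERT INTO restocking VALUES (1, '2024-01-01'), (1, '2024-01-10'), (2, '2024-01-05');
INSERT INTO sales VALUES
  (1, '2024-01-08', 5), (1, '2024-01-10', 2), (1, '2024-01-12', 3),
  (2, '2024-01-04', 7), (2, '2024-01-06', 4);
""")

query = """
WITH last_restock AS (
    SELECT product_id, MAX(restock_date) AS restock_date
    FROM restocking
    GROUP BY product_id
)
SELECT s.product_id,
       s.sale_date,
       SUM(s.units) OVER (
           PARTITION BY s.product_id
           ORDER BY s.sale_date
           ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW
       ) AS units_since_restock
FROM sales AS s
JOIN last_restock AS r
  ON r.product_id = s.product_id
 AND s.sale_date >= r.restock_date   -- assumption: restock-day sales count
ORDER BY s.product_id, s.sale_date
"""
cumulative_sales = conn.execute(query).fetchall()
```

Sales before the most recent restock are dropped by the join condition, so the running total restarts at the last restock for each product.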

How would you create a table listing each student’s scores across four exams?

Use conditional aggregation or pivot logic to produce a single consolidated row per student. Interviewers check grouping logic, completeness, and treatment of missing exams.

Tip: Explain how you guarantee that each student appears exactly once.
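Conditional aggregation for this pivot might look like the sketch below. The `exam_scores` table and its columns are hypothetical, and the sample data deliberately includes a student who missed two exams.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE exam_scores (student_id INTEGER, exam_id INTEGER, score INTEGER);
INSERT INTO exam_scores VALUES
  (1, 1, 90), (1, 2, 85), (1, 3, 70), (1, 4, 95),
  (2, 1, 60), (2, 3, 75);
""")

query = """
SELECT student_id,
       MAX(CASE WHEN exam_id = 1 THEN score END) AS exam_1,
       MAX(CASE WHEN exam_id = 2 THEN score END) AS exam_2,
       MAX(CASE WHEN exam_id = 3 THEN score END) AS exam_3,
       MAX(CASE WHEN exam_id = 4 THEN score END) AS exam_4
FROM exam_scores
GROUP BY student_id      -- guarantees exactly one row per student
ORDER BY student_id
"""
score_table = conn.execute(query).fetchall()
```

Missing exams come back as NULL (`None` in Python) rather than zero, which keeps "did not take the exam" distinct from "scored zero".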

Which shipments were delivered during a customer’s membership period?

Perform an interval join between shipments and membership dates and classify rows as Y/N. Apple tests temporal reasoning and careful boundary handling.

Tip: Always clarify inclusivity of start and end dates.
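A minimal sketch of the interval check follows. The schema is hypothetical, each customer is assumed to have a single membership period, and both boundary dates are treated as inclusive, which is exactly the kind of assumption to state out loud.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (customer_id INTEGER, membership_start TEXT, membership_end TEXT);
CREATE TABLE shipments (shipment_id INTEGER, customer_id INTEGER, ship_date TEXT);
INSERT INTO customers VALUES
  (1, '2024-01-01', '2024-03-31'),
  (2, '2024-02-01', '2024-02-29');
INSERT INTO shipments VALUES
  (101, 1, '2024-01-01'),   -- on the start date: counted as a member
  (102, 1, '2024-04-01'),   -- one day after the end date
  (103, 1, '2024-02-15'),
  (104, 2, '2024-01-31');   -- one day before the start date
""")

query = """
SELECT s.shipment_id,
       CASE WHEN s.ship_date BETWEEN c.membership_start AND c.membership_end
            THEN 'Y' ELSE 'N' END AS is_member
FROM shipments AS s
JOIN customers AS c ON c.customer_id = s.customer_id
ORDER BY s.shipment_id
"""
membership_flags = conn.execute(query).fetchall()
```

`BETWEEN` is inclusive on both ends. If the end date should be exclusive, replace it with an explicit `>=` and `<` pair.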

How many customers were upsold after their first purchase?

Identify each customer’s first purchase date, then count the distinct customers who made at least one purchase on a later date. Interviewers examine your treatment of same-day purchases.

Tip: Reiterate that same-day multiple purchases do not count as upsells.
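The same-day rule can be made explicit by comparing calendar dates rather than timestamps. The `transactions` table and its columns are hypothetical, and the rows are invented to cover the three cases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE transactions (customer_id INTEGER, purchased_at TEXT);
INSERT INTO transactions VALUES
  (1, '2024-01-01 09:00:00'), (1, '2024-01-01 17:00:00'),  -- same day only
  (2, '2024-01-02 10:00:00'), (2, '2024-01-05 12:00:00'),  -- later date: upsold
  (3, '2024-01-03 08:00:00');                              -- single purchase
""")

query = """
WITH first_purchase AS (
    SELECT customer_id, MIN(DATE(purchased_at)) AS first_date
    FROM transactions
    GROUP BY customer_id
)
SELECT COUNT(DISTINCT t.customer_id)
FROM transactions AS t
JOIN first_purchase AS f ON f.customer_id = t.customer_id
WHERE DATE(t.purchased_at) > f.first_date   -- strictly later calendar date
"""
upsold_customers = conn.execute(query).fetchone()[0]
```

`COUNT(DISTINCT ...)` prevents a customer with several later purchases from being counted more than once.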

How would you count daily active users on each platform for 2020?

Filter logs to 2020, extract date and platform, and compute distinct users per day. This tests correctness in date handling and grouping.

Tip: Mention timezone normalization when grouping by day.
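A sketch of the daily-active-users query is shown below. The `events` table is hypothetical, and timestamps are assumed to be stored in UTC already, so any timezone normalization would need to happen before the `DATE()` call.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE events (user_id INTEGER, platform TEXT, created_at TEXT);
INSERT INTO events VALUES
  (1, 'ios', '2020-03-01 08:00:00'),
  (1, 'ios', '2020-03-01 21:00:00'),   -- same user, same day: counted once
  (2, 'ios', '2020-03-01 09:30:00'),
  (2, 'mac', '2020-03-01 10:00:00'),
  (3, 'ios', '2019-12-31 23:59:59'),   -- outside 2020
  (3, 'ios', '2021-01-01 00:00:00');   -- outside 2020
""")

query = """
SELECT DATE(created_at) AS day,
       platform,
       COUNT(DISTINCT user_id) AS daily_active_users
FROM events
WHERE created_at >= '2020-01-01' AND created_at < '2021-01-01'
GROUP BY DATE(created_at), platform
ORDER BY day, platform
"""
dau = conn.execute(query).fetchall()
```

The half-open date range (`>=` start, `<` next year) avoids edge cases at midnight on December 31 that a `BETWEEN` on timestamps can introduce.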

How would you retrieve the earliest date each user played their third unique song?

Deduplicate song plays, rank unique songs per user using window functions, and select the third-ranked entry. Handle users with fewer than three unique songs.

Tip: Explain how to avoid repeated plays inflating unique song counts.
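The deduplicate-then-rank pattern might be sketched as follows. The `song_plays` table is hypothetical, and ties on the same date are broken by `song_id`, which is an arbitrary assumption. Window functions require SQLite 3.25 or newer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE song_plays (user_id INTEGER, song_id TEXT, played_on TEXT);
INSERT INTO song_plays VALUES
  (1, 'A', '2024-01-01'), (1, 'A', '2024-01-02'), (1, 'B', '2024-01-02'),
  (1, 'C', '2024-01-05'), (1, 'D', '2024-01-06'),
  (2, 'A', '2024-01-01'), (2, 'B', '2024-01-03');
""")

query = """
WITH first_plays AS (          -- one row per user and song: first play only
    SELECT user_id, song_id, MIN(played_on) AS first_played
    FROM song_plays
    GROUP BY user_id, song_id
),
ranked AS (
    SELECT user_id, first_played,
           ROW_NUMBER() OVER (
               PARTITION BY user_id ORDER BY first_played, song_id
           ) AS rn
    FROM first_plays
),
all_users AS (SELECT DISTINCT user_id FROM song_plays)
SELECT u.user_id, r.first_played AS third_song_date
FROM all_users AS u
LEFT JOIN ranked AS r ON r.user_id = u.user_id AND r.rn = 3
ORDER BY u.user_id
"""
third_song = conn.execute(query).fetchall()
```

The `LEFT JOIN` keeps users with fewer than three unique songs in the output with a NULL date instead of silently dropping them.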

Write a Python function to group words into anagram buckets.

Normalize each word by sorting characters or building frequency maps, and store results in a hash map. Interviewers evaluate grouping logic and clarity.

Tip: Mention expected time complexity and possible optimizations.
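One possible implementation uses the sorted letters of each word as the hash-map key. Treating matches as case-insensitive is an assumption to confirm with the interviewer.

```python
from collections import defaultdict

def group_anagrams(words):
    """Group words whose letters are permutations of each other."""
    buckets = defaultdict(list)
    for word in words:
        key = "".join(sorted(word.lower()))  # assumption: case-insensitive match
        buckets[key].append(word)
    return list(buckets.values())

groups = group_anagrams(["listen", "silent", "enlist", "google", "gogole", "cat"])
```

Sorting each word costs O(k log k) for a word of length k, so the whole pass is O(n · k log k) for n words. A fixed-size letter-count tuple as the key would bring that down to O(n · k) for a known alphabet.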

Determine whether a given string is a palindrome.

Compare characters symmetrically or reverse the string. Apple tests clean problem decomposition and handling of corner cases.

Tip: State assumptions about casing, punctuation, and whitespace.
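A two-pointer sketch is shown below. It assumes case is ignored and non-alphanumeric characters are skipped; under a stricter definition the skipping branches would be removed.

```python
def is_palindrome(text):
    """Two-pointer check that ignores case and non-alphanumeric characters."""
    left, right = 0, len(text) - 1
    while left < right:
        if not text[left].isalnum():
            left += 1                      # skip punctuation or whitespace
        elif not text[right].isalnum():
            right -= 1
        elif text[left].lower() != text[right].lower():
            return False
        else:
            left += 1
            right -= 1
    return True
```

This runs in O(n) time with O(1) extra space, whereas reversing a cleaned copy of the string is shorter to write but allocates a second string.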

Behavioral interview questions

Behavioral interviews at Apple evaluate how you solve ambiguous problems, communicate with clarity, collaborate across engineering and product teams, and maintain high standards for data integrity and user experience. Apple expects candidates to demonstrate ownership, structured thinking, cross-functional alignment, and the ability to translate analytical insights into product or business impact. Interviewers look for concise STAR-style answers grounded in real outcomes.

Describe a data project you worked on. What were the main challenges you faced?

Interviewers want to see how you handle ambiguity, navigate incomplete or noisy datasets, and partner with engineering and product teams to resolve blockers. Apple looks for candidates who can articulate both technical challenges (data quality, modeling issues, pipeline instability) and stakeholder challenges (changing requirements, unclear goals). Strong answers demonstrate structured problem solving, communication under uncertainty, and measurable impact.

Tip: Mention one technical challenge and one stakeholder challenge to show full-spectrum ownership.

Sample Answer: I worked on improving recommendation quality for an internal search feature where user events were inconsistently logged across devices. Early in the project, I discovered timestamp drift and missing identifiers for certain regions. I partnered with data engineering to redesign logging schemas and build validation checks. At the same time, product needed quick insights, so I created an interim heuristic model to approximate ranking signals. Once logging stabilized, I retrained the model and ran offline and online evaluations. The final system improved click-through rates by 11% and reduced misclassified sessions by 30%.

What are effective ways to make data more accessible to non-technical teams?

Apple values candidates who can convert complex analyses into simple, actionable insights for design, product, operations, and leadership teams. Interviewers want to see how you choose the right abstraction level, build intuitive visualizations, define metrics clearly, and create artifacts that empower partners to self-serve. Strong answers highlight storytelling, clarity, and measurable improvements in alignment or decision-making.

Tip: Tie your communication strategy to improved decisions, faster execution, or reduced confusion.

Sample Answer: For an experimentation program, non-technical stakeholders struggled to interpret confidence intervals and treatment effects. I redesigned the experiment dashboard with visual metaphors, clear labeling, and in-context explanations for each metric. I also created a two-page “How to Interpret Results” guide and held short monthly office hours. After the redesign, experiment review time dropped by 40% and product teams were able to make decisions independently without waiting for analysts.

What would your current manager say about your strengths and weaknesses?

Interviewers look for self-awareness and honesty. Strong answers pair each strength with a real example and each weakness with an active mitigation plan. Apple especially values structured thinking, communication clarity, and bias toward action.

Tip: Choose a weakness that is real but not role-fatal, and describe the system you use to improve it.

Sample Answer: My manager would highlight structured problem solving and communication as strengths—I’m often asked to translate analytical findings for engineering, design, and leadership. One area I’ve worked on is limiting the amount of time I spend exploring edge cases before sharing work. To address this, I set internal deadlines and share prototypes earlier. This improved team velocity and ensured my work aligned with evolving priorities.

Talk about a time you had trouble communicating with stakeholders. How did you overcome it?

Apple values cross-functional alignment, especially in data and ML roles where partners have varying levels of technical familiarity. Interviewers want to see how you diagnose miscommunication, adjust your approach, and deliver clarity while maintaining trust.

Tip: Emphasize how your communication change improved workflow, alignment, or decision-making.

Sample Answer: I once partnered with an engineering lead who preferred highly technical detail, while product preferred concise narratives. My initial updates satisfied neither. I created a layered communication format: a one-page summary for product plus expandable sections with data tables, assumptions, and model diagnostics for engineering. This approach aligned both groups, shortened review cycles, and improved the team’s ability to make informed tradeoffs.

Why do you want to work with us?

Apple expects a thoughtful, specific answer rooted in product impact, data culture, and the scale of the problems the company solves. Interviewers look for alignment with Apple’s values around user experience, privacy, quality, and innovation.

Tip: Mention a product, technical challenge, or user problem that genuinely interests you.

Sample Answer: I’m excited about Apple because the company solves problems at global scale while maintaining extremely high standards for user experience and privacy. As a data scientist, I’m motivated by work that directly shapes products people rely on daily. I’m also drawn to Apple’s focus on on-device intelligence and thoughtful experimentation. The role aligns with how I like to work—translating messy user signals into insights and models that improve real customer experiences.

Tell me about a time you had to make a decision with incomplete data. How did you handle the uncertainty?

Apple values principled decision-making under ambiguity. Interviewers want to see how you scope risk, identify assumptions, validate quickly, and communicate trade-offs.

Tip: Highlight how you balanced speed vs. accuracy and how you validated your decision afterward.

Sample Answer: While estimating the impact of a redesign on user retention, key cohorts lacked historical coverage due to recent tracking changes. Instead of postponing the recommendation, I built bounding estimates, triangulated with proxy metrics, and presented three scenarios with explicit assumptions. I aligned with product on which risk profile was acceptable and launched a small-scale experiment to validate quickly. The eventual lift fell within my projected range, confirming the approach.

Describe a situation where you challenged an assumption or direction from leadership using data. What happened?

Apple looks for candidates who can respectfully challenge decisions with evidence while maintaining trust. Interviewers want to see the balance between conviction and collaboration.

Tip: Show how you framed the discussion constructively and what changed as a result.

Sample Answer: A leadership team believed that reducing the onboarding flow from three steps to two would automatically improve first-day activation. My early analysis suggested that the drop-off wasn’t in the number of steps but in a specific permission request. Instead of presenting a flat disagreement, I walked through session recordings, attribution data, and segmented funnels. This reframed the discussion from simplifying the flow to clarifying the permission rationale. After implementing targeted UI guidance, activation rates increased by 9%. Leadership later adopted this evidence-first decision style for similar initiatives.


How to Prepare for an Apple Data Scientist Interview

Preparing for an Apple data scientist interview requires depth in machine learning, practical statistical intuition, strong SQL fundamentals, and clear, structured communication. Apple evaluates how you reason from first principles, handle ambiguity, collaborate across engineering and product teams, and design models or experiments that meet the company’s high bar for quality and privacy. Reviewing core concepts through the data science interview learning path, practicing applied ML reasoning through the modeling and machine learning path, and running timed sessions in the AI interview practice tool can help anchor your preparation.

Strengthen your foundations in machine learning with first principles reasoning

Apple often tests how well you explain why a model works, how it behaves under different conditions, and what assumptions it depends on. Expect questions about regularization, bias-variance tradeoffs, on-device learning, drift challenges, and data constraints at massive scale. Interviewers want structured, intuitive explanations that show real understanding rather than memorized definitions.

Tip: Practice explaining ML concepts in a concise, intuitive way, focusing on tradeoffs and real product constraints.

Revisit statistics and experimentation, especially power, variance, and attribution

Many Apple teams run experiments across heterogeneous user groups, varying device capabilities, and small incremental effects. You should be comfortable designing hypothesis tests, interpreting confidence intervals, reasoning about power, and diagnosing experiments that produce inconclusive or contradictory results.

Tip: Be ready to walk through a real A/B test you owned and explain how your analysis led to a product or business decision.

Refresh your SQL and data manipulation speed, accuracy, and framing

Apple values precision in data handling and expects candidates to be fluent in joins, window functions, date logic, and large-scale data processing. Framing assumptions explicitly is part of the evaluation. You can sharpen these skills through the SQL interview questions library.

Tip: State your assumptions before writing a query so the interviewer sees your reasoning structure.

Build familiarity with Apple’s products, on-device philosophy, and data minimization principles

Apple prioritizes privacy-preserving analytics and often designs models that run directly on devices. Demonstrating awareness of what data is available and how you would design systems within strict privacy constraints signals strong alignment with Apple’s engineering culture.

Tip: Prepare an example of how you would design an ML system using only aggregated or on-device features.

Prepare one end-to-end project story that showcases modeling, engineering partnership, and cross-functional alignment

Apple’s final rounds often include a walkthrough of a past project where you defined the problem, worked through messy data, partnered with engineering, made tradeoffs, and delivered measurable impact. Interviewers look for clarity, structure, and ownership.

Tip: Choose a project where you had to adapt your approach as new information emerged, since this reflects how Apple teams operate.

Practice articulating tradeoffs and decision frameworks rather than memorizing answers

Interviewers frequently push candidates to justify their choices for models, metrics, sampling strategies, or experimental design. Being able to clearly explain alternatives and why you chose a specific path is often more important than the answer itself.

Tip: Use a simple structure such as Compare, Decide, Justify whenever explaining a choice.

Prepare for behavioral interviews that focus on clarity, ownership, and communication under ambiguity

Apple expects concise, well-structured stories that highlight decision-making, stakeholder alignment, and impact. Reviewing patterns in the behavioral interview questions library can help you practice delivering clean STAR stories that connect to tangible outcomes.

Tip: End every story with a clear business or product result so your impact is immediately visible.

Practice dry runs of ML system design questions

Some teams will ask you to design a complete machine learning system from data collection to evaluation to ongoing monitoring. Apple focuses on clarity, privacy considerations, and thoughtful handling of failure modes.

Tip: Use a consistent structure such as Problem, Inputs, Model, Evaluation, Risks, Extensions to keep your thinking organized.

Apple Data Scientist Salary

Recent 2025 data from Levels.fyi shows that Apple data scientists in the United States earn highly competitive compensation packages that scale significantly with level and region. Total compensation includes base salary, annualized stock grants, and bonuses. Across all levels, the median total annual compensation is approximately $312K, with senior roles reaching well above $450K.

| Level | Total (annual) | Base (annual) | Stock (annual) | Bonus (annual) |
|---|---|---|---|---|
| ICT2 (Junior Data Scientist) | $120K | $108K | $8K | $0.4K |
| ICT3 (Data Scientist) | $228K | $168K | $44K | $14K |
| ICT4 (Senior Data Scientist) | $312K | $204K | $94K | $20K |
| ICT5 | $492K | $276K | $192K | $26K |
| ICT6 | No data | No data | No data | No data |
| ICT7 | No data | No data | No data | No data |

These annualized figures reflect user-submitted data and should be interpreted as directional rather than as guaranteed offers.

Regional Apple Data Scientist Salary Comparison

Compensation varies by location due to team distribution, seniority mix, and cost of living. The table below consolidates annual total compensation across major U.S. markets based on Levels.fyi submissions.

All values below are annualized totals.

| Region | ICT2 Annual | ICT3 Annual | ICT4 Annual | ICT5 Annual | Median Annual Total | Source |
|---|---|---|---|---|---|---|
| United States (Overall) | $120K | $228K | $312K | $492K | $312K | Levels.fyi |
| San Francisco Bay Area | $180K | $228K | $324K | $444K | $276K | SF Bay Area |
| Greater Austin Area | $120K | $204K | $264K | No data | $240K | Austin |
| Greater Los Angeles Area | $80K | $264K | $276K | No data | $228K | Los Angeles |
| Greater Seattle Area | No data | $192K | $312K | $444K | $372K | Seattle |
| New York City Area | No data | $216K | $348K | No data | $276K | NYC |

The Bay Area and Seattle remain the most lucrative regions at the senior end, each reporting $444K at ICT5, and Seattle shows the highest median total ($372K). These hubs house machine learning, platform intelligence, and core engineering teams that tend to offer larger equity packages. Austin and Los Angeles provide competitive compensation but typically reflect lower cost of living and fewer senior-level submissions. New York City's median ($276K) matches the Bay Area's and sits below the overall U.S. median ($312K), although its ICT4 figure ($348K) is the highest in the table.

FAQs

How competitive is the Apple data scientist interview?

Apple’s process is highly competitive because data scientists support products that operate at global scale, such as Siri, Apple Music, App Store, iCloud, and on-device ML systems. Interviewers expect strong fundamentals in machine learning, statistics, experimentation, and data manipulation, as well as structured communication and cross-functional alignment. Practicing through the data science interview learning path can help build familiarity with the depth Apple expects.

What is the typical interview timeline for an Apple data scientist role?

Most candidates move from recruiter screen to final decision within four to six weeks. The timeline may extend if the team includes take-home assignments or if multiple technical loops need to be scheduled across engineering, product, and machine learning stakeholders.

Does Apple require strong machine learning knowledge for data scientist roles?

Yes. ML depth is a core component of many interviews. You should be comfortable explaining algorithms from first principles, discussing tradeoffs, evaluating models, and designing systems that comply with Apple’s privacy and on-device constraints. Some teams emphasize ML more heavily than others, but all expect strong analytical reasoning.

How important is SQL in the Apple data scientist interview?

SQL is tested in almost every process. You should be comfortable with joins, window functions, aggregation logic, temporal filtering, and validating assumptions. Correctness and clarity matter more than advanced tricks. You can practice similar patterns in the SQL interview questions library.

What types of case questions should I expect?

Expect structured questions about designing ML systems, diagnosing metrics, reasoning about experiments, exploring ambiguous product signals, or creating decision frameworks. Apple interviewers push candidates to articulate tradeoffs, constraints, and measurable outcomes.

Does Apple emphasize privacy in data science interviews?

Yes. Apple differentiates itself through user privacy and on-device intelligence. Interviewers expect you to incorporate privacy-aware reasoning into ML design, metric selection, experiment structure, and data collection strategies.

What background do most successful candidates have?

Candidates often come from machine learning, analytics, applied research, data engineering, or quantitative product roles. Strong performers demonstrate structured thinking, a bias toward clarity and simplicity, and the ability to collaborate across engineering, design, and product.

Is Apple a remote-friendly company for data science roles?

Some teams are hybrid while others require on-site presence, depending on the function. Many machine learning and platform intelligence teams prefer engineers to be on site due to secure environments, proprietary systems, or hardware integration. Your recruiter can confirm expectations early in the process.

How deep should my A/B testing knowledge be for this role?

Interviewers expect senior-level candidates to go beyond basic metrics and p-values. You should be comfortable discussing experimental validity, statistical power, and how to interpret noisy or conflicting results in real-world testing scenarios.

Prepare Like You Are Already on the Team

Apple’s interviews reward candidates who think the way Apple builds products: simplify the complex, obsess over quality, and ground every decision in clarity. When you practice breaking down ML systems, defending experimental choices, and communicating insights with precision, you are not just preparing for an interview. You are rehearsing the same thinking that drives Siri, Apple Music recommendations, and on-device intelligence.

If you want to train these muscles with real-world problems, explore guided learning paths, sharpen judgment in mock interviews, get targeted feedback through coaching, and strengthen fundamentals with hands-on challenges. You can also browse more company-specific interview guides to compare how Apple’s process differs from other top tech firms. Start preparing today and walk into your Apple interview already thinking like an Apple data scientist.