Databricks Data Scientist Interview Guide (2025) – Process, Salary & Questions

Introduction

If you’re preparing for a Databricks Data Scientist interview, you’re about to step into one of the most dynamic and technically rigorous environments in the data and AI industry. Databricks is renowned for its innovative approach, leveraging cutting-edge data science to drive real business impact. You’ll encounter questions that test your expertise in statistics, machine learning, and SQL, as well as your ability to solve complex, real-world problems. Recent interviews have included probability puzzles, hypothesis testing, and case studies that mirror the challenges Databricks faces daily. By focusing on both your technical depth and your ability to communicate insights, you’ll stand out and feel confident navigating the process.

Role Overview & Culture

As a Databricks data scientist, you’ll play a pivotal role in transforming massive datasets into actionable insights that fuel product innovation and strategic decisions. You’ll collaborate with cross-functional teams—engineering, product, and customer success—to design and deploy scalable machine learning solutions. The culture at Databricks is rooted in transparency, first-principles thinking, and a relentless drive for innovation. You’ll thrive in an environment that values diversity, continuous learning, and open collaboration, with regular access to leadership and a strong emphasis on mentorship and professional growth. This is a place where your contributions directly shape the company’s future.

Why This Role at Databricks?

Choosing the Databricks data scientist role means joining a company at the forefront of AI and big data, trusted by over 10,000 organizations, including more than 60% of the Fortune 500. Here, you’ll work with exabytes of data, build solutions that impact millions, and have the freedom to innovate with the latest tools and frameworks. Databricks invests heavily in your growth, offering a supportive, inclusive environment and opportunities to present at conferences or lead high-impact projects. Compensation is highly competitive: total packages for Databricks data scientists average $166,000 to $237,000 annually, including bonuses and equity. If you’re ready to take the next step, let’s dive into the interview process; we’ll break down the Databricks data scientist salary later.

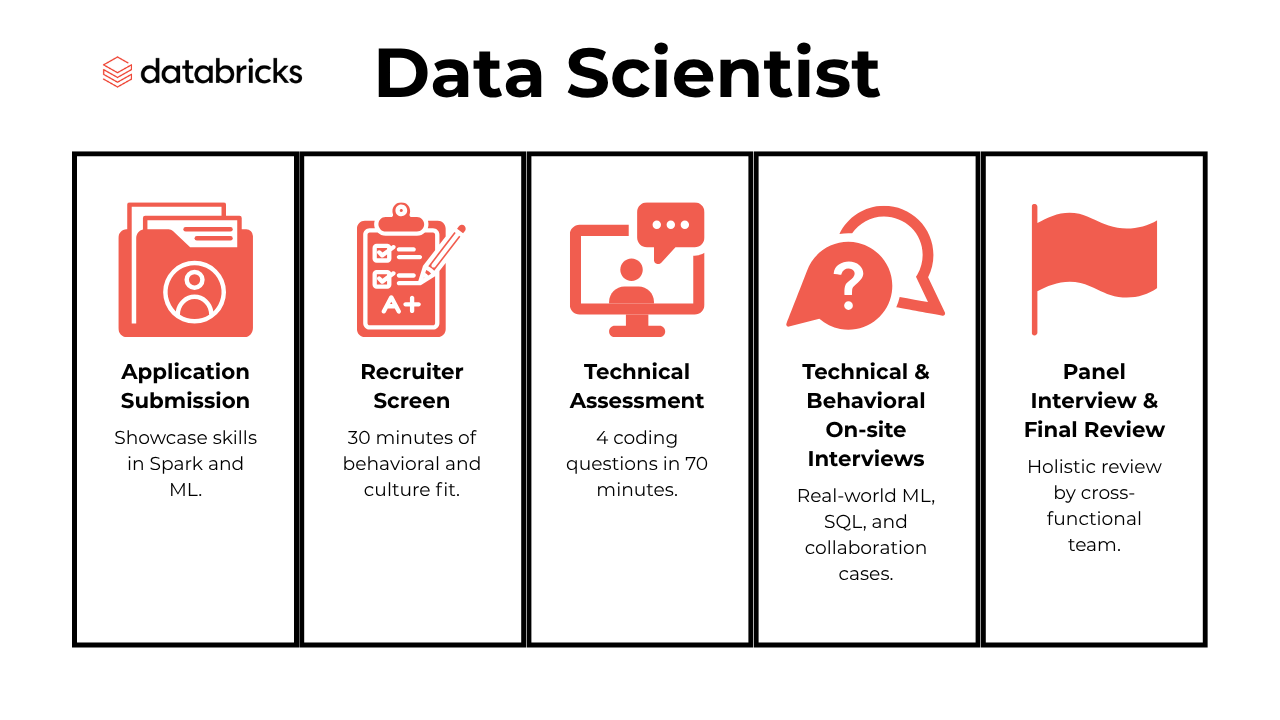

What Is the Interview Process Like for a Data Scientist Role at Databricks?

The Databricks data science interview process typically spans five rigorous stages, each designed to evaluate your technical depth, problem-solving ability, and cultural fit. The process is highly competitive, with Databricks seeking candidates who can thrive in a fast-paced, collaborative environment and demonstrate a passion for innovation and data-driven impact. Here is how it usually goes:

- Application Submission

- Recruiter Screen

- Technical Assessment

- Technical & Behavioral On-site Interviews

- Panel Interview & Final Review

Application Submission

Your journey begins with a detailed online application, where you’ll showcase your academic background, technical skills, and relevant project experience. Databricks uses both automated screening and manual review to identify candidates who align with their core requirements: strong programming skills in Python or Scala, advanced SQL proficiency, and hands-on experience with big data tools like Apache Spark. The application portal asks for links to your GitHub, Kaggle, or portfolio, and emphasizes accomplishments in data engineering, machine learning, or analytics. Behind the scenes, recruiters and technical leads assess your fit for the specific team and role, often looking for evidence of impact, leadership in past projects, and alignment with Databricks’ mission.

Recruiter Screen

If your application stands out, you’ll be invited to a 30-minute recruiter screen. This conversation focuses on your motivation for joining Databricks, your career trajectory, and your understanding of the company’s culture. The recruiter will probe your experience with collaborative data science projects, your familiarity with Databricks’ platform, and your ability to communicate complex ideas clearly. Expect behavioral questions that assess your adaptability, teamwork, and alignment with Databricks’ values of transparency and continuous learning. Behind the scenes, your responses are scored against a standardized rubric, and strong candidates are flagged for technical review. This is also your opportunity to ask about team dynamics and growth opportunities.

Technical Assessment

Next, you’ll complete a technical assessment, typically delivered via CodeSignal or CoderPad, lasting 60–70 minutes. You’ll tackle four coding problems ranging from medium to hard, covering algorithms, data structures, and SQL queries involving window functions and data manipulation. The assessment may also include a data case study, requiring you to analyze a dataset, apply statistical tests, and present actionable insights. Proficiency in Python, Pandas, and PySpark is essential, as is your ability to write efficient, readable code under time constraints. Your solutions are automatically scored for correctness and efficiency, and reviewed by engineers for code quality and problem-solving approach.

Technical & Behavioral On-site Interviews

Passing the assessment advances you to a series of on-site or virtual interviews, typically four to five rounds. You’ll face deep technical interviews on machine learning algorithms, statistical inference, and system design—often using collaborative tools for whiteboarding or document sharing. You’ll also work through real-world business cases, demonstrating your ability to translate messy data into impactful solutions. Behavioral interviews focus on your experience collaborating across teams, handling ambiguity, and driving projects to completion. The Databricks data scientist interview loop emphasizes both technical excellence and your fit with Databricks’ culture of innovation, transparency, and diversity.

Panel Interview & Final Review

The final stage is a panel interview with engineers, managers, and sometimes cross-functional partners. Each interviewer independently scores your performance across technical and behavioral dimensions. Afterward, a hiring committee conducts a holistic review, weighing feedback, references, and your overall trajectory. The process is designed to be transparent and efficient—most candidates receive a decision within days, and the full process rarely exceeds five weeks. Behind the scenes, Databricks prioritizes quick feedback loops and ensures only the most qualified, culturally aligned candidates receive offers, maintaining its high bar for talent and innovation.

What Questions Are Asked in a Databricks Data Scientist Interview?

The Databricks data scientist interview includes a blend of technical, machine learning, and behavioral questions that assess both your analytical ability and your communication skills.

Coding / Technical Questions

Most Databricks data science interview questions begin with exploratory data analysis, SQL challenges, or programming tasks that test your ability to work with real-world datasets:

To solve this, join the transactions table with the products table to calculate the total order amount. Use COUNT with DISTINCT for the number of customers and COUNT for the number of transactions. Group by the month extracted from the created_at field and filter for the year 2020.
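The steps above can be sketched with Python's built-in `sqlite3` module. The schema here is an assumption made for illustration (a `transactions` table with `user_id`, `product_id`, `quantity`, and `created_at`, and a `products` table with `price`); the real interview tables may differ.

```python
import sqlite3

# Hypothetical schema and sample rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE products (id INTEGER PRIMARY KEY, price REAL);
CREATE TABLE transactions (
    id INTEGER PRIMARY KEY, user_id INTEGER, product_id INTEGER,
    quantity INTEGER, created_at TEXT
);
INSERT INTO products VALUES (1, 10.0), (2, 25.0);
INSERT INTO transactions VALUES
    (1, 100, 1, 2, '2020-01-05'),
    (2, 100, 2, 1, '2020-01-20'),
    (3, 101, 1, 1, '2020-02-03'),
    (4, 102, 2, 3, '2019-12-31');  -- outside 2020, filtered out
""")

monthly_report = conn.execute("""
SELECT CAST(strftime('%m', t.created_at) AS INTEGER) AS month,
       COUNT(DISTINCT t.user_id)   AS num_customers,  -- unique buyers
       COUNT(t.id)                 AS num_orders,     -- all transactions
       SUM(t.quantity * p.price)   AS order_amt       -- total order amount
FROM transactions AS t
JOIN products AS p ON p.id = t.product_id
WHERE strftime('%Y', t.created_at) = '2020'
GROUP BY month
ORDER BY month
""").fetchall()

for row in monthly_report:
    print(row)
```

Filtering on the year before grouping keeps December 2019 out of the month buckets, and `COUNT(DISTINCT ...)` prevents a repeat buyer from being counted twice within a month.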

To solve this, calculate weekly revenue for each advertiser using a CTE, then identify advertisers with the highest weekly revenue using the RANK() function. Next, find the top three best-performing days for these advertisers using ROW_NUMBER(), and finally, join the results with the advertisers table to display the advertiser name, transaction date, and amount.
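One possible reading of these steps is sketched below with Python's `sqlite3` module. The table and column names (`advertisers`, `revenue`, `advertiser_id`, `transaction_date`, `amount`) are assumptions, as is the interpretation that a "top" advertiser is one ranked first in at least one week. Window functions require SQLite 3.25 or newer.

```python
import sqlite3

# Hypothetical schema and sample rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE advertisers (advertiser_id INTEGER PRIMARY KEY, advertiser_name TEXT);
CREATE TABLE revenue (advertiser_id INTEGER, transaction_date TEXT, amount REAL);
INSERT INTO advertisers VALUES (1, 'Acme'), (2, 'Globex'), (3, 'Initech');
INSERT INTO revenue VALUES
    (1, '2023-01-02', 40.0), (1, '2023-01-03', 30.0),
    (1, '2023-01-04', 20.0), (1, '2023-01-05', 10.0),
    (2, '2023-01-02', 60.0), (3, '2023-01-03', 5.0),
    (1, '2023-01-09', 50.0), (2, '2023-01-10', 90.0);
""")

top_days = conn.execute("""
WITH weekly AS (             -- step 1: weekly revenue per advertiser
    SELECT advertiser_id,
           strftime('%Y-%W', transaction_date) AS week,
           SUM(amount) AS weekly_revenue
    FROM revenue
    GROUP BY advertiser_id, week
),
ranked AS (                  -- step 2: rank advertisers within each week
    SELECT advertiser_id,
           RANK() OVER (PARTITION BY week ORDER BY weekly_revenue DESC) AS wk_rank
    FROM weekly
),
top_advertisers AS (
    SELECT DISTINCT advertiser_id FROM ranked WHERE wk_rank = 1
),
best_days AS (               -- step 3: number each top advertiser's days by amount
    SELECT r.advertiser_id, r.transaction_date, r.amount,
           ROW_NUMBER() OVER (PARTITION BY r.advertiser_id
                              ORDER BY r.amount DESC) AS day_rank
    FROM revenue AS r
    JOIN top_advertisers AS t ON t.advertiser_id = r.advertiser_id
)
SELECT a.advertiser_name, b.transaction_date, b.amount   -- step 4: attach names
FROM best_days AS b
JOIN advertisers AS a ON a.advertiser_id = b.advertiser_id
WHERE b.day_rank <= 3
ORDER BY a.advertiser_name, b.amount DESC
""").fetchall()

for row in top_days:
    print(row)
```

`RANK()` lets tied advertisers share first place in a week, while `ROW_NUMBER()` guarantees at most three days per advertiser even when amounts tie.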

To solve this, use the LAG window function to create a column that shows the previous role for each user. Filter the rows where the current role is “Data Scientist” and the previous role is “Data Analyst”. Finally, calculate the percentage by dividing the count of such users by the total number of users.
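A minimal sketch of the `LAG` approach, again using Python's `sqlite3` module. The `user_roles` table and its columns are hypothetical stand-ins for whatever schema the interviewer provides, and window functions assume SQLite 3.25 or newer.

```python
import sqlite3

# Hypothetical schema and sample rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE user_roles (user_id INTEGER, title TEXT, start_date TEXT);
INSERT INTO user_roles VALUES
    (1, 'Data Analyst',    '2018-01-01'),
    (1, 'Data Scientist',  '2020-01-01'),
    (2, 'Data Analyst',    '2019-01-01'),
    (2, 'Product Manager', '2021-01-01'),
    (3, 'Data Scientist',  '2017-01-01'),
    (4, 'Data Analyst',    '2016-01-01'),
    (4, 'Data Scientist',  '2019-06-01');
""")

(pct,) = conn.execute("""
WITH with_prev AS (
    -- previous title for each user, ordered by when each role started
    SELECT user_id, title,
           LAG(title) OVER (PARTITION BY user_id ORDER BY start_date) AS prev_title
    FROM user_roles
)
SELECT 100.0 * COUNT(DISTINCT CASE
                   WHEN title = 'Data Scientist' AND prev_title = 'Data Analyst'
                   THEN user_id END)
       / COUNT(DISTINCT user_id)
FROM with_prev
""").fetchone()

print(pct)  # users 1 and 4 made the switch, out of 4 users
```

Multiplying by `100.0` forces floating-point division, and `COUNT(DISTINCT ...)` over a `CASE` counts each qualifying user once while the denominator still covers every user.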

4. Find the five lowest-paid employees who have completed at least three projects

To solve this, join the employees and projects tables using an INNER JOIN. Filter for employees who have completed at least three projects using GROUP BY and HAVING COUNT(p.End_dt) >= 3. Finally, order the results by salary in ascending order and limit the output to the lowest five employees.
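The query described above might look like the following sketch, run through Python's `sqlite3` module. Column names other than `End_dt` are assumptions, and the sample data is invented to show both the `HAVING` filter and the `LIMIT`.

```python
import sqlite3

# Hypothetical schema; only End_dt comes from the description above.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER);
CREATE TABLE projects (employee_id INTEGER, title TEXT, End_dt TEXT);
""")

# Seven employees with salaries from 40,000 to 100,000.
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [(i, f"emp{i}", 30000 + 10000 * i) for i in range(1, 8)],
)

# Everyone has three projects, but employee 1's third project is unfinished.
project_rows = []
for emp in range(1, 8):
    for n in range(3):
        end_dt = None if (emp == 1 and n == 2) else f"2023-0{n + 1}-15"
        project_rows.append((emp, f"proj{emp}-{n}", end_dt))
conn.executemany("INSERT INTO projects VALUES (?, ?, ?)", project_rows)

lowest_paid = conn.execute("""
SELECT e.name, e.salary
FROM employees AS e
INNER JOIN projects AS p ON p.employee_id = e.id
GROUP BY e.id, e.name, e.salary
HAVING COUNT(p.End_dt) >= 3      -- COUNT skips NULL end dates
ORDER BY e.salary ASC
LIMIT 5
""").fetchall()

for row in lowest_paid:
    print(row)
```

Because `COUNT(column)` ignores `NULL`, an unfinished project does not count toward the threshold, which is why the lowest-paid employee in this sample is excluded.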

5. Calculate total and average expenses for each department

To solve this, use a LEFT JOIN to combine the departments and expenses tables, ensuring departments with no expenses are included. Use a CASE statement to sum expenses for 2022 and calculate the average expense across all departments using a Common Table Expression (CTE). Finally, sort the results by total expenditure in descending order.
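As a rough sketch of that query, assuming hypothetical `departments` and `expenses` tables with the columns shown, and run through Python's `sqlite3` module:

```python
import sqlite3

# Hypothetical schema and sample rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE expenses (department_id INTEGER, amount REAL, date TEXT);
INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales'), (3, 'Legal');
INSERT INTO expenses VALUES
    (1, 300.0, '2022-03-01'),
    (1, 100.0, '2022-07-15'),
    (1, 999.0, '2021-12-31'),   -- not in 2022, contributes 0
    (2, 200.0, '2022-05-20');
""")

report = conn.execute("""
WITH dept_totals AS (
    SELECT d.name AS department_name,
           SUM(CASE WHEN strftime('%Y', e.date) = '2022'
                    THEN e.amount ELSE 0.0 END) AS total_expense
    FROM departments AS d
    LEFT JOIN expenses AS e ON e.department_id = d.id  -- keeps Legal (no expenses)
    GROUP BY d.id, d.name
)
SELECT department_name,
       total_expense,
       (SELECT AVG(total_expense) FROM dept_totals) AS average_expense
FROM dept_totals
ORDER BY total_expense DESC
""").fetchall()

for row in report:
    print(row)
```

Putting the year check inside `CASE` rather than `WHERE` is the design choice that matters here: a `WHERE` filter on `e.date` would drop departments with no 2022 expenses and undo the `LEFT JOIN`.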

6. Compute the cumulative sales for each product

To compute cumulative sales, self-join the sales table on product_id, matching each row to every row for the same product whose date is less than or equal to its own. Sum the price column over those matched rows, grouping by product_id and date, to get the cumulative total for each product on each date.
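The self-join can be sketched as follows with Python's `sqlite3` module. The sample rows are invented, and the sketch assumes at most one sales row per product per date; duplicate rows would be double-counted by this join.

```python
import sqlite3

# Hypothetical sample data; column names follow the description above.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (product_id INTEGER, date TEXT, price REAL);
INSERT INTO sales VALUES
    (1, '2023-01-01', 10.0),
    (1, '2023-01-02', 5.0),
    (1, '2023-01-03', 7.0),
    (2, '2023-01-01', 20.0),
    (2, '2023-01-03', 1.0);
""")

cumulative = conn.execute("""
SELECT cur.product_id, cur.date, SUM(prev.price) AS cumulative_sum
FROM sales AS cur
JOIN sales AS prev
  ON prev.product_id = cur.product_id
 AND prev.date <= cur.date          -- every earlier-or-same-day sale
GROUP BY cur.product_id, cur.date
ORDER BY cur.product_id, cur.date
""").fetchall()

for row in cumulative:
    print(row)
```

On engines that support window functions, `SUM(price) OVER (PARTITION BY product_id ORDER BY date)` is a common alternative that avoids the quadratic join.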

Machine-Learning & Product-Sense Questions

In this stage, you’ll encounter Databricks data science interview questions that focus on model development, experimentation, and product intuition, all framed within practical business contexts:

The model would not be valid because the removal of the decimal point introduces significant errors in the independent variable, distorting the relationship between the variable and the target label. To fix the model, you can visually identify and correct errors using histograms or apply clustering techniques like expectation maximization to detect and resolve anomalies in cases with large data ranges.

8. What would you look into if the fill rate in ads has dipped by 10%?

To address a 10% drop in fill rate, investigate potential causes such as reduced ad demand, increased competition for ad slots, or technical issues affecting ad delivery. Analyze metrics like ad inventory, user engagement, and system performance to identify the root cause and implement corrective measures.

To diagnose the issue, investigate segmentation factors such as bugs, platform-specific anomalies, or demographic shifts impacting email open rates. Consider hypotheses like a surge in new users affecting WAU while lowering email open rates or increased email notifications causing email fatigue. Analyze email metrics and segment data for insights.

To measure the success of private stories on Instagram, consider tracking metrics like story views, unique viewers, and engagement actions such as reactions or replies. Additionally, analyze retention rates to understand how often users return to post or view private stories, and examine the frequency of content creation among users. These metrics collectively indicate the feature’s effectiveness in fostering close connections and maintaining user interest.

To solve this, start by defining what constitutes “major health issues” with input from healthcare professionals. Select an appropriate model, such as logistic regression for simple data or decision trees/random forests for complex datasets. Address missing values using imputation techniques and prioritize sensitivity to false negatives to minimize risks. Ethical considerations regarding demographic data should be handled carefully to avoid bias.
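To make "prioritize sensitivity to false negatives" concrete, the sketch below shows how lowering a classifier's decision threshold trades precision for recall. The labels and scores are invented toy values, not output from any real model, and `recall_and_precision` is a hypothetical helper.

```python
def recall_and_precision(y_true, scores, threshold):
    """Return (recall, precision) when predicting positive at scores >= threshold."""
    preds = [s >= threshold for s in scores]
    tp = sum(1 for p, t in zip(preds, y_true) if p and t == 1)
    fn = sum(1 for p, t in zip(preds, y_true) if not p and t == 1)
    fp = sum(1 for p, t in zip(preds, y_true) if p and t == 0)
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    return recall, precision


# Toy data: 1 = has a major health issue, 0 = does not.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.45, 0.35, 0.6, 0.4, 0.3, 0.2, 0.1, 0.05]

default = recall_and_precision(y_true, scores, threshold=0.5)
lowered = recall_and_precision(y_true, scores, threshold=0.3)

print("threshold 0.5:", default)  # misses two true cases
print("threshold 0.3:", lowered)  # catches all true cases, more false alarms
```

In a health setting, the lower threshold may be preferable because a missed case (false negative) is usually costlier than an unnecessary follow-up, though the right trade-off depends on the clinical context.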

Behavioral / Collaboration Questions

These questions evaluate how well you communicate insights, collaborate across teams, and align with Databricks’ values of transparency, innovation, and impact:

At Databricks, data scientists frequently engage with cross-functional teams, so communication challenges are inevitable. You might share an example where you struggled to explain Spark optimizations or ML pipeline results to a product team unfamiliar with the technology. Then explain how you adjusted your message using analogies or visual tools and emphasize the importance of tailoring insights to each audience.

13. How comfortable are you presenting your insights?

Databricks values data scientists who can present insights clearly to drive product and business decisions. You should talk about how you structure your presentations using dashboards or notebooks, how you adapt to your audience, and what tools you use to visualize large-scale data from the Lakehouse. Mention past experiences presenting to executives or technical leads in both remote and in-person settings.

14. Why Do You Want to Work With Us?

This question requires demonstrating alignment with Databricks’ mission, such as unifying data and AI or enabling open-source innovation. You should reference specific products like Delta Lake or MLflow that excite you, along with how your background in scalable data science fits into the company’s direction. Make it personal by tying your motivation to Databricks’ collaborative culture or the impact of their work across industries.

15. How would you convey insights and the methods you use to a non-technical audience?

At Databricks, your audience may include business leads or client partners who rely on your insights to guide strategy. Use a concrete example where you broke down a complex method—such as distributed model training or time series forecasting—into intuitive concepts. Explain how you framed the problem, simplified the method, and focused on outcomes, so the audience could make informed decisions.

How to Prepare for a Databricks Data Scientist Interview

To excel in your Databricks data scientist interview, start by refreshing your machine learning fundamentals. Deepen your understanding of supervised and unsupervised algorithms, model evaluation metrics, and the bias-variance tradeoff, as these concepts are frequently tested in both technical rounds and case studies. Make sure you can confidently explain your approach to A/B testing, including hypothesis formulation, statistical significance, and interpreting p-values. Databricks places a strong emphasis on candidates who can design and analyze experiments to drive business impact.
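As a refresher on what "statistical significance" means in an A/B test, here is a minimal two-proportion z-test using only the standard library. The conversion counts are made up, and the pooled z-test is one common choice among several, not a method the guide attributes to Databricks.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for H0: both variants share the same conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis of no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: control converts 200/2000, treatment 250/2000.
z, p_value = two_proportion_z_test(200, 2000, 250, 2000)
print(f"z = {z:.3f}, p = {p_value:.4f}")
```

A p-value below the pre-chosen significance level (often 0.05) means a difference this large would be unlikely if the variants truly converted at the same rate; it does not measure the size or business value of the effect.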

Practice communicating complex technical ideas clearly and concisely, as you’ll often need to present your findings to cross-functional teams. Use frameworks like STAR (Situation, Task, Action, Result) to structure your responses during behavioral interviews, and be ready to discuss how you’ve collaborated on past projects or handled ambiguity.

A highly effective strategy is to simulate the real interview environment by scheduling mock interviews with peers or using online platforms. This will help you identify gaps in your technical knowledge and refine your ability to articulate solutions under pressure. For domain-specific preparation, focus on practicing Databricks data science interview questions that mirror real business challenges, such as designing scalable ML pipelines or optimizing SQL queries for big data. As a Databricks data scientist, demonstrating both technical mastery and strong communication skills will set you apart and boost your confidence on interview day.

FAQs

What Is the Average Salary for a Data Scientist at Databricks?

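Total compensation packages for Databricks data scientists average $166,000 to $237,000 annually, including bonuses and equity.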

How Is the Databricks Data Science Interview Different from Other Companies?

The Databricks data science interview places more emphasis on scalable systems, distributed computing, and product impact. Candidates should be ready for deep technical questions and case-based discussions around real-world data applications.

Where Can I Practice Databricks Data Science Interview Questions?

You can find curated Databricks data science interview questions in the Interview Query questions collection. These reflect the latest topics in machine learning, Spark, and collaborative workflows.

Conclusion

Preparing for a Databricks data scientist interview means going beyond algorithms and SQL—it requires clear communication, real-world problem-solving, and a deep understanding of scalable systems. To see how others have succeeded, read Alex Dang’s interview success story. If you’re looking to build your skills step by step, follow our data science learning path. And when you’re ready to dive into hands-on prep, explore this full set of Databricks data science interview questions. With practice and the right strategy, you can approach your interview with confidence.