Databricks Data Analyst Interview Guide (2025): Process, Questions & Prep Tips

Introduction

A Databricks data analyst stands at the intersection of cutting-edge technology and business impact, transforming raw data into actionable insights. As you prepare for your 2025 interview, it’s essential to recognize that Databricks is not just a technology leader but a company where your analytical skills can drive strategic decisions across global industries. With over 10,000 organizations—including more than 60% of the Fortune 500—relying on Databricks’ Data Intelligence Platform, your expertise will help shape the future of data and AI. This guide will equip you with the technical depth, industry statistics, and executive insights you need to excel in your Databricks data analyst interview.

Role Overview & Culture

In the Databricks data analytics environment, your day-to-day will be dynamic and collaborative, involving the creation of advanced dashboards, AI/BI Genie Spaces, and the management of data assets for diverse stakeholders. You’ll work closely with cross-functional teams—ranging from Strategy and Operations to Marketing and Finance—to gather requirements, execute analytic projects, and deliver business-critical insights. Databricks’ culture is rooted in data democratization, empowering every employee to access and leverage data for smarter decision-making. The company’s commitment to a data-driven culture is evident, with studies showing organizations embracing this approach are nearly three times more likely to achieve double-digit growth. At Databricks, you’ll thrive in an environment that values open communication, rapid feedback, and continuous learning, ensuring your contributions have a measurable impact on business outcomes.

Why This Role at Databricks?

Databricks’ Lakehouse vision is revolutionizing the analytics landscape by unifying data lakes and warehouses, enabling real-time data processing, and supporting advanced AI workloads. The company’s rapid growth (it serves over 10,000 organizations and works with 1,200+ global cloud and consulting partners) means you’ll be part of a team at the forefront of data innovation. Databricks offers robust career paths, from analyst to data engineer or data scientist, supported by a culture of learning and advancement. Compensation is highly competitive, with U.S. data analysts earning between $80,000 and $155,000; we’ll break down Databricks data analyst salary later. You’ll join a company where your skills are valued and your career can accelerate.

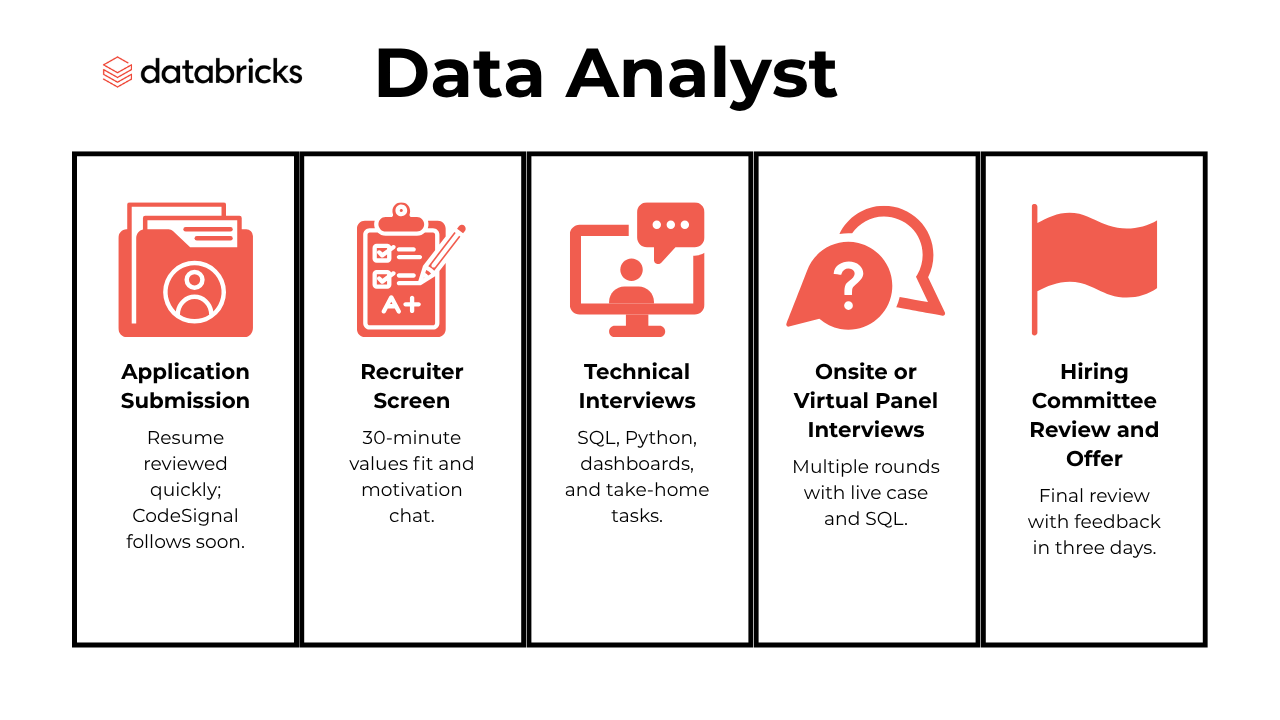

What Is the Interview Process Like for a Data Analyst Role at Databricks?

Applying for a Databricks data analytics position? The interview journey usually moves quickly, often wrapping up in just over three weeks. The process unfolds in five main stages:

- Application Submission

- Recruiter Screening

- Technical Interviews

- Onsite or Virtual Panel Interviews

- Hiring Committee Review and Offer

Application Submission

Your Databricks journey begins with your resume. To stand out, tailor it to emphasize your work with SQL, Spark, and data visualization. Make sure to show clear, measurable business impact.

Most successful candidates—about 87%—get noticed through online applications, not referrals. That means your written materials carry a lot of weight. Databricks’ systems scan for keywords like Lakehouse, Delta Lake, and Tableau, so including those is a smart move.

Once you apply, the team usually reviews your resume within 72 hours. And if you’re a strong match, you can expect to receive a CodeSignal test invite within a week. This is a 70-minute technical assessment, and it marks the start of your formal vetting.

Recruiter Screening

If your resume and assessment pass the initial review, your first live conversation will be with a recruiter. This call typically lasts around 30 minutes. Think of it more as a two-way conversation than an interrogation.

You’ll talk about your experience and communication style. The recruiter wants to see if you align with Databricks’ values, especially their focus on being low-ego and customer-obsessed. It helps to come prepared with STAR-style stories that highlight how you’ve contributed to successful analytics work across teams.

The recruiter will also ask why you’re interested in Databricks. Show genuine curiosity. Candidates who ask thoughtful questions about the Lakehouse roadmap tend to advance more often. You’ll also get clarity on location preferences, salary bands, and the next technical steps. Most people hear back about next steps within two business days.

Technical Interviews

This is where things get more in-depth. The 70-minute proctored CodeSignal challenge you were invited to after applying includes four tasks. These combine SQL and Python, and they usually fall into the medium-to-hard difficulty range. To stay competitive, you should aim for a score above 810 out of 850.

Once you pass the assessment, you’ll move into live technical rounds. These interviews will test your understanding of data modeling, dashboard design within Databricks’ AI and BI tools, and debugging SQL queries in realistic scenarios. You might also encounter tasks related to Delta Lake’s time-travel features, A/B testing, or optimizing ETL workflows.

Some rounds may include a take-home task involving data analysis with Databricks. This is your chance to showcase how you handle real-world data challenges in notebooks.

If you’re interviewing at a more senior level, the expectations will increase. Senior Databricks data analyst candidates should be ready to discuss system design, stakeholder management, and long-term strategy in addition to technical execution.

Onsite or Virtual Panel Interviews

When you reach the final stages, you’ll participate in four or five one-hour interviews. These are usually conducted virtually, especially for global applicants. You’ll speak with a range of people, including fellow analysts, data engineers, a hiring manager, and someone from a cross-functional team.

Each interviewer scores your performance individually. They focus on your technical depth, how clearly you communicate insights, and how well you collaborate. After the sessions, the interviewers debrief on the same day to help reduce bias in decision-making.

You may also be asked to present a short case study. Usually, this involves translating raw data into executive-level insights using five slides. Candidates who tell compelling stories through data tend to have nearly twice the success rate in receiving offers.

Expect real-time challenges too. These could include whiteboarding SQL queries, critiquing a visualization, or answering culture-fit questions like, “How have you made data more accessible for nontechnical teams?”

Hiring Committee Review and Offer

Once interviews are complete, your application goes to the central hiring committee. This group reviews every scorecard and your references. They evaluate your technical performance, cultural alignment, and growth potential.

In most cases, the decision is made within three business days. Even if you don’t receive an offer, feedback is usually constructive and clear. Around 43% of candidates on Glassdoor described the experience as positive.

The committee weighs your interview performance heavily—technical results count for 60%, culture fit for 25%, and long-term potential for 15%. For borderline cases, strong references can be the deciding factor.

Final documents, including your equity grant and start date info, usually arrive within a week. That gives you plenty of time to prepare for the transition ahead.

What Questions Are Asked in a Databricks Data Analyst Interview?

If you’re preparing for a Databricks interview, it’s important to know what types of questions you’ll face across technical, product, and behavioral rounds so you can tailor your preparation with confidence.

SQL / Technical Questions

Expect many prompts in the style of data analysis with Databricks, testing your SQL fluency, data modeling logic, and ability to translate messy datasets into actionable insights using scalable techniques:

1. Write a query to find the top 5 actions performed on Apple platforms during November 2020

To determine the top 5 actions performed on Apple platforms during November 2020, filter the events table for rows where the created_at column falls within that month and the platform column is either ‘iphone’ or ‘ipad’. Group the results by action, count occurrences, and use the dense_rank() window function to rank them in descending order of frequency. Limit the output to 5 rows to get the top-ranked actions.
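The steps above can be sketched with Python's built-in sqlite3 module so the query runs anywhere. The table layout and sample rows are assumptions made for illustration, and this sketch filters on the rank (rather than using LIMIT) so tied actions are not cut off arbitrarily.

```python
import sqlite3

# In-memory database with a hypothetical events table (schema is an assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (user_id INTEGER, action TEXT, platform TEXT, created_at TEXT)"
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?, ?)",
    [
        (1, "like", "iphone", "2020-11-02"),
        (2, "like", "ipad", "2020-11-03"),
        (3, "like", "iphone", "2020-11-15"),
        (1, "comment", "iphone", "2020-11-05"),
        (2, "comment", "ipad", "2020-11-20"),
        (3, "post", "ipad", "2020-11-09"),
        (4, "like", "android", "2020-11-10"),  # excluded: not an Apple platform
        (5, "share", "iphone", "2020-10-31"),  # excluded: before November
        (6, "share", "ipad", "2020-12-01"),    # excluded: after November
    ],
)

TOP_ACTIONS_SQL = """
WITH action_counts AS (
    SELECT action, COUNT(*) AS n_actions
    FROM events
    WHERE created_at >= '2020-11-01' AND created_at < '2020-12-01'
      AND platform IN ('iphone', 'ipad')
    GROUP BY action
),
ranked AS (
    SELECT action, n_actions,
           DENSE_RANK() OVER (ORDER BY n_actions DESC) AS action_rank
    FROM action_counts
)
SELECT action, n_actions, action_rank
FROM ranked
WHERE action_rank <= 5
ORDER BY action_rank, action
"""

top_actions = conn.execute(TOP_ACTIONS_SQL).fetchall()
for row in top_actions:
    print(row)
```

In an interview it is worth saying out loud how you want ties handled, since dense_rank() combined with a hard row limit can drop one of two equally frequent actions.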

2. Given a table of payments data, write a query to calculate the average revenue per client

To solve this, use SQL to calculate total revenue by summing amount_per_unit * quantity, then divide it by the number of distinct user_id values. Use ROUND (or a CAST to a decimal type with two places) so the output shows two decimal places.
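A minimal sketch of this calculation, run through Python's sqlite3 module. The payments table and its rows are made up for illustration; the sketch uses ROUND for the two-decimal output, which is one reasonable choice rather than the only one.

```python
import sqlite3

# Hypothetical payments table; column names follow the description above.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE payments (user_id INTEGER, amount_per_unit REAL, quantity INTEGER)"
)
conn.executemany(
    "INSERT INTO payments VALUES (?, ?, ?)",
    [
        (1, 10.0, 2),   # 20.00
        (1, 5.5, 1),    #  5.50
        (2, 3.0, 3),    #  9.00
        (3, 7.25, 2),   # 14.50
    ],
)

AVG_REVENUE_SQL = """
SELECT ROUND(
           SUM(amount_per_unit * quantity) * 1.0 / COUNT(DISTINCT user_id),
           2
       ) AS avg_revenue_per_client
FROM payments
"""

# Total revenue is 49.00 across 3 distinct clients.
avg_revenue_per_client = conn.execute(AVG_REVENUE_SQL).fetchone()[0]
print(avg_revenue_per_client)
```

Counting distinct user_id values matters here: dividing by the number of payment rows would give average revenue per transaction, not per client.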

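3. Write a query to find the third unique song each user played

To solve this, use a window function to rank each user’s plays of a given song by date so you can isolate the first play of every unique song. Then rank those first plays again, per user and by date, to find the third unique song for each user. Finally, use a LEFT JOIN with the users table to include user names and handle cases where the third unique song doesn’t exist.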
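The two-step ranking can be sketched as follows, again using sqlite3 so it is runnable locally. The users and song_plays schemas, and the assumption that "third" means third by first play date, are illustrative choices not confirmed by the original prompt.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schemas for illustration.
conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
conn.execute(
    "CREATE TABLE song_plays (user_id INTEGER, song_name TEXT, date_played TEXT)"
)
conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Ana"), (2, "Ben"), (3, "Cy")])
conn.executemany(
    "INSERT INTO song_plays VALUES (?, ?, ?)",
    [
        (1, "A", "2024-01-01"),
        (1, "A", "2024-01-02"),  # repeat play, must not count as a new song
        (1, "B", "2024-01-03"),
        (1, "C", "2024-01-04"),
        (1, "D", "2024-01-05"),
        (2, "X", "2024-02-01"),
        (2, "Y", "2024-02-02"),
        (2, "X", "2024-02-03"),  # Ben only has two unique songs
    ],
)

THIRD_SONG_SQL = """
WITH play_ranks AS (
    -- Rank repeated plays of the same song so play_rank = 1 is the first play.
    SELECT user_id, song_name, date_played,
           ROW_NUMBER() OVER (
               PARTITION BY user_id, song_name ORDER BY date_played
           ) AS play_rank
    FROM song_plays
),
song_ranks AS (
    -- Rank each user's unique songs by the date they were first played.
    SELECT user_id, song_name, date_played,
           ROW_NUMBER() OVER (
               PARTITION BY user_id ORDER BY date_played
           ) AS song_rank
    FROM play_ranks
    WHERE play_rank = 1
)
SELECT u.name, s.song_name, s.date_played
FROM users AS u
LEFT JOIN song_ranks AS s
       ON s.user_id = u.id AND s.song_rank = 3
ORDER BY u.id
"""

third_songs = conn.execute(THIRD_SONG_SQL).fetchall()
for row in third_songs:
    print(row)
```

Putting the song_rank = 3 condition in the ON clause rather than in WHERE is what keeps users without a third song in the result, with NULL song columns.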

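4. Write a query to calculate daily active users for each platform in 2020

To solve this, select the platform and created_at columns along with the count of distinct user IDs as daily_users. Filter the rows for the year 2020 using a WHERE clause and group the results by platform and created_at to summarize daily active users for each platform. If created_at is stored as a timestamp, truncate it to a date first so each day forms a single group.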
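Here is a small sketch of the daily active users query, assuming a hypothetical events table where created_at is stored as a timestamp string. Because of that assumption, the query groups on DATE(created_at) rather than on the raw column.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical events table; created_at holds timestamps as ISO strings.
conn.execute("CREATE TABLE events (user_id INTEGER, platform TEXT, created_at TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        (1, "ios", "2020-03-01 09:15:00"),
        (1, "ios", "2020-03-01 18:40:00"),      # same user, same day: counted once
        (2, "ios", "2020-03-01 12:00:00"),
        (3, "android", "2020-03-01 08:00:00"),
        (1, "ios", "2020-03-02 10:00:00"),
        (4, "android", "2019-12-31 23:59:00"),  # excluded: 2019
        (5, "ios", "2021-01-01 00:00:00"),      # excluded: 2021
    ],
)

DAU_SQL = """
SELECT platform,
       DATE(created_at) AS activity_date,
       COUNT(DISTINCT user_id) AS daily_users
FROM events
WHERE created_at >= '2020-01-01' AND created_at < '2021-01-01'
GROUP BY platform, DATE(created_at)
ORDER BY platform, activity_date
"""

daily_active_users = conn.execute(DAU_SQL).fetchall()
for row in daily_active_users:
    print(row)
```

The half-open date range is used instead of extracting the year from every row, which tends to be friendlier to partition or file pruning on large tables.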

5. How would you interpret fraud detection trend graphs to detect emerging fraud patterns?

To interpret fraud detection trend graphs, focus on identifying anomalies, spikes, or patterns in fraudulent activities over time. Look for trends in transaction amounts, geographic locations, or user behavior that deviate from the norm. Use these insights to refine fraud detection algorithms, adjust thresholds, and implement preventive measures proactively.

6. How would you analyze the dataset to understand exactly where the revenue loss is occurring?

To analyze the dataset, start by segmenting the data by key factors such as item category, subcategory, marketing attribution source, and discount applied. Examine trends in revenue, quantity sold, and profit margin over the past 12 months to identify areas of decline. Consider correlating discounts with changes in revenue and analyzing specific marketing sources for inefficiencies.
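One way to start the segmentation is to compare revenue per category across two periods and sort by the size of the drop. The sketch below assumes a hypothetical orders table and splits the 12 months into two halves; both the schema and the split are illustrative choices.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical orders table: month, category, marketing source, discount flag, revenue.
conn.execute(
    "CREATE TABLE orders (order_month TEXT, category TEXT, "
    "attribution_source TEXT, discounted INTEGER, revenue INTEGER)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?, ?)",
    [
        ("2024-03", "shoes", "email", 0, 1000),
        ("2024-09", "shoes", "email", 1, 600),
        ("2024-04", "hats", "search", 0, 500),
        ("2024-10", "hats", "search", 0, 520),
        ("2024-02", "bags", "social", 0, 800),
        ("2024-08", "bags", "social", 1, 750),
    ],
)

REVENUE_CHANGE_SQL = """
WITH by_category AS (
    SELECT category,
           SUM(CASE WHEN order_month <  '2024-07' THEN revenue ELSE 0 END) AS first_half,
           SUM(CASE WHEN order_month >= '2024-07' THEN revenue ELSE 0 END) AS second_half
    FROM orders
    GROUP BY category
)
SELECT category, first_half, second_half,
       second_half - first_half AS revenue_change
FROM by_category
ORDER BY revenue_change ASC  -- largest losses first
"""

revenue_changes = conn.execute(REVENUE_CHANGE_SQL).fetchall()
for row in revenue_changes:
    print(row)
```

The same pattern can be repeated with attribution_source or the discount flag in place of category to see which dimension explains most of the decline.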

Product & Metrics Questions

Databricks data analytics interviews often go beyond the numbers to assess how well you understand product strategy, metric design, and business decision-making in data-rich environments:

7. How would you go about increasing the number of comments on each Group post?

To increase the number of comments on Facebook Group posts, you can implement strategies such as improving post visibility through notifications, creating engaging content prompts, and fostering community interaction with features like polls or tagging members. Analyzing user engagement metrics can help refine these approaches.

8. How would you investigate a 5% increase in rider cancellations?

To investigate the 5% increase in rider cancellations, analyze factors such as driver availability, wait times, pricing changes, app issues, or external events. Additionally, segment the data by location, time, and ride type to identify patterns or anomalies contributing to the cancellations.

9. Which is a better metric to look at, the average or the median?

When analyzing late orders, the median is often a better metric than the average, especially if the data is right-skewed. The median provides a more robust measure of central tendency that isn’t disproportionately influenced by extreme outliers, making it a better representation of typical lateness.
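A quick numeric illustration of the point, using made-up lateness values where one order is an extreme outlier:

```python
from statistics import mean, median

# Hypothetical minutes late for six orders; the last one is an outlier.
late_minutes = [2, 3, 4, 5, 6, 100]

avg_late = mean(late_minutes)    # pulled upward by the single 100-minute order
med_late = median(late_minutes)  # midpoint of the two middle values, 4 and 5

print(f"mean:   {avg_late}")
print(f"median: {med_late}")
```

Five of the six orders are at most 6 minutes late, so the median describes a typical order far better than the mean does in this sample.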

10. Given 100 Twitter users, what metrics would you use to rank their influence?

To quantify the influence of a Twitter user, you can use metrics such as follower count, engagement rate (likes, retweets, and comments per post), tweet reach, and sentiment analysis of their content. Additionally, network metrics like centrality in Twitter’s graph and the frequency of mentions by other influential accounts can provide deeper insights.

11. How would you determine customer service quality through a chat box?

To determine customer service quality through a chat box, analyze metrics such as response time, resolution time, and customer satisfaction scores from feedback surveys. Additionally, assess conversation sentiment using NLP techniques and track the frequency of unresolved issues to measure service effectiveness.

Behavioral / Collaboration Questions

To succeed as a Databricks data analyst, you’ll need to show how you communicate, collaborate, and drive impact across teams—so expect behavioral questions that reveal how you work with others under real-world conditions:

12. Tell me about a time your message didn’t land with an audience and how you adapted

As a Databricks data analyst, you’ll often be the bridge between raw data and strategic business decisions. This question is your chance to show how you adapt your communication style to suit different audiences. If you’ve ever had a moment where your message didn’t land, such as when you used too much technical jargon, explain how you realized the disconnect and changed your approach moving forward.

13. How comfortable are you presenting your insights?

Databricks values clear, confident communication, especially when explaining technical findings to executives or cross-functional partners. When answering this, walk through how you prepare your insights, including how you use tools like dashboards or notebooks to make data more accessible. Be sure to mention experience presenting both in-person and virtually, since most interview loops now include remote components.

14. Why Do You Want to Work With Us?

This is your opportunity to show that you’ve done your homework on Databricks. Focus on what excites you about their mission, products like the Lakehouse platform, or recent innovations in data and AI. When you tie your goals to Databricks’ culture of being customer-obsessed and low-ego, you’ll stand out from generic answers.

15. What do you tell an interviewer when they ask you what your strengths and weaknesses are?

To answer this well, pick a strength that’s directly relevant to data analysis—like digging into ambiguous datasets or communicating findings with clarity. Use a real example to show how that strength helped a team or project succeed. For weaknesses, keep it honest but constructive, showing how you’re working to improve without undermining your qualifications.

16. How would you convey insights and the methods you use to a non-technical audience?

At Databricks, analysts are often responsible for making complex concepts click for business stakeholders. When answering this, show how you simplify technical methods using visuals, analogies, or step-by-step breakdowns. The key is focusing on what the audience cares about and adjusting your language to keep them engaged and informed.

How to Prepare for a Databricks Data Analyst Interview

Preparing for a Databricks data analyst interview means blending sharp technical skills with thoughtful communication. Start by refining your SQL through advanced drills that go beyond the basics: complex joins, window functions, and performance tuning techniques like Z-ordering and file pruning. Databricks interviews frequently include Delta Lake time-travel syntax, so practice those patterns until they feel natural. For hands-on practice, try data analysis with Databricks in the free Community Edition workspace; it gives you real experience to draw on and demonstrates initiative when you discuss real-world scenarios.
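As a study aid, the time-travel and Z-ordering statements mentioned above look roughly like the strings built below. The helper functions and the `sales.orders` table name are hypothetical; the SQL keywords (VERSION AS OF, TIMESTAMP AS OF, DESCRIBE HISTORY, OPTIMIZE ... ZORDER BY) are Delta Lake syntax on Databricks. The sketch only builds the statements; in a notebook you would typically pass them to `spark.sql(...)`.

```python
def version_as_of(table: str, version: int) -> str:
    """Query a Delta table as it existed at a given commit version."""
    return f"SELECT * FROM {table} VERSION AS OF {version}"


def timestamp_as_of(table: str, timestamp: str) -> str:
    """Query a Delta table as it existed at a given point in time."""
    return f"SELECT * FROM {table} TIMESTAMP AS OF '{timestamp}'"


def describe_history(table: str) -> str:
    """List the table's commit history (versions, timestamps, operations)."""
    return f"DESCRIBE HISTORY {table}"


def optimize_zorder(table: str, columns: list[str]) -> str:
    """Compact files and co-locate rows by the given columns."""
    return f"OPTIMIZE {table} ZORDER BY ({', '.join(columns)})"


# Hypothetical table name used purely for illustration.
statements = [
    describe_history("sales.orders"),
    version_as_of("sales.orders", 12),
    timestamp_as_of("sales.orders", "2024-01-01"),
    optimize_zorder("sales.orders", ["customer_id", "order_date"]),
]
for stmt in statements:
    print(stmt)
```

A common practice flow is to run DESCRIBE HISTORY first to find a version number, then compare that version against the current table to explain what changed.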

At the same time, make sure you understand how the Lakehouse architecture works. Get familiar with Delta Lake’s ACID properties, schema enforcement, and medallion architecture. You should be able to explain how these concepts support scalable analytics and AI workloads across Databricks. Tools like Unity Catalog and Genie Spaces are also worth exploring, since you may be asked how Databricks supports governance and data democratization.

To get a sense of the real pressure and pacing, try a mock Databricks data analyst interview with a peer or mentor. Rehearsing in a timed setting builds confidence and helps refine how you tell the story of your work under interview conditions.

But don’t stop at technical prep. Databricks highly values communication. Practice presenting insights like a product analyst: start with the business question, focus on impact, and finish with clear recommendations. Prepare examples using the STAR method that show collaboration, customer focus, and innovation. During the process, remember that strong storytellers are nearly twice as likely to receive offers.

To polish your delivery further, test your skills with an AI-powered Databricks interviewer simulator. It’s a quick way to get feedback, identify gaps, and fine-tune your responses before the real thing. Finally, align your preparation with the broader Databricks data analytics mission. Show that you’re not just fluent in code, but also ready to drive business impact in a fast-moving, AI-first organization.

Conclusion

Landing a Databricks data analyst role means joining one of the most innovative teams shaping the future of data and AI. Your preparation should reflect the high standards of the company—both technically and culturally. Whether you’re diving deep into SQL, refining your Delta Lake fluency, or practicing storytelling under time pressure, every hour you invest can translate into a stronger interview performance. To keep growing, follow our Databricks Data Analyst Learning Path for guided prep. You can also find inspiration in Simran Singh’s success story, where she walks through how she landed her offer. And when you’re ready to drill into the specifics, explore our full Databricks Data Analyst Interview Questions Collection to stay sharp and focused.