Databricks Software Engineer Interview Guide (2025)

Introduction

Preparing for a Databricks software engineer interview means positioning yourself within one of the fastest-growing tech companies. Databricks reported 60% year-over-year growth in 2024 and now powers over 10,000 organizations worldwide, including 60% of the Fortune 500. Its platform is central to modern data infrastructure, making it essential to enterprises across industries. The UK alone has seen Databricks-related roles grow 139%, a sign of global momentum. As demand for data and AI expertise rises, talent remains scarce, which gives prepared candidates a clear edge. McKinsey projects that hundreds of millions of workers may need to shift into data roles, yet few possess specialized Databricks skills. Joining Databricks now places you at the forefront of that shift, at a company valued at $62 billion.

Role Overview & Culture

As a Databricks software engineer, you will join a team that is shaping the future of data intelligence through large-scale distributed systems, data infrastructure, and AI platforms. Engineers work across technologies like Apache Spark, Delta Lake, MLflow, and Kubernetes, building tools that power real-time analytics and machine learning at enterprise scale. The engineering culture values first-principles thinking, speed of execution, and high ownership. You will collaborate with some of the brightest minds in the industry and be supported through mentorship, technical training, and leadership development programs. Databricks fosters transparency and open communication, with weekly Q&As and regular team feedback. It is a place where your ideas matter and your code makes a meaningful impact.

Why This Role at Databricks?

Joining Databricks as a software engineer puts you at the heart of a company that is shaping the future of data and AI infrastructure. You are not just filling a role; you are entering a high-impact position at a company now valued at $62 billion, outpacing Snowflake, and primed for an IPO in 2025 or 2026. With Databricks surpassing $3 billion in annualized revenue and growing at 60% year-over-year, your work directly contributes to a platform used by over 10,000 global enterprises. You’ll benefit from flexible remote work, rapid career growth, and strong equity upside through RSUs that vest over four years. And yes, we’ll cover Databricks SWE salary ranges later, including how compensation here rivals the highest in the industry.

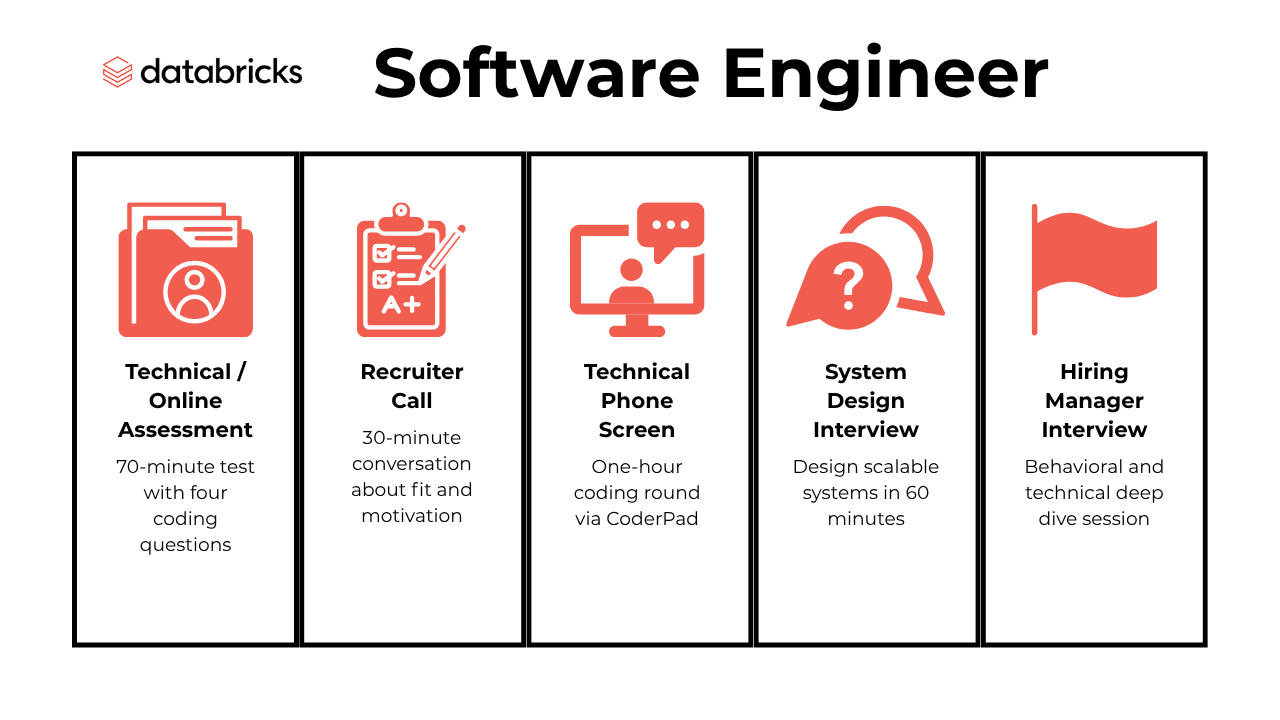

What Is the Interview Process Like for a Software Engineer Role at Databricks?

The Databricks software engineer interview process is designed to rigorously assess your technical depth, problem-solving ability, and culture fit, ensuring you’re ready to contribute to a $62 billion data and AI powerhouse. You’ll move through a multi-stage journey that typically takes four to eight weeks, with each round mapped to real-world challenges Databricks engineers solve every day. The process is highly competitive and moves through the following rounds:

- Technical / Online Assessment

- Recruiter Call

- Technical Phone Screen

- System Design Interview

- Hiring Manager Interview

Technical / Online Assessment

Most SWE candidates begin with the Databricks online assessment, a 70-minute proctored coding test delivered through platforms like CodeSignal or HackerRank. You’ll face four questions—typically two easy and two medium/hard—covering data structures, algorithms, and real-world data manipulation scenarios. This assessment is designed to simulate the daily challenges Databricks engineers tackle, such as optimizing Spark jobs or transforming large datasets. Only about 30% of applicants pass this round, making preparation critical. Focus on LeetCode-style problems, especially those involving graphs, concurrency, and distributed computing. Excelling here signals your readiness for the technical rigor ahead and places you among the top contenders for the role.

Recruiter Call

The Databricks recruiter call is your first live interaction with the company and typically lasts 30 minutes. Here, you’ll discuss your background, technical interests, and motivation for joining Databricks. Recruiters are looking for alignment with Databricks’ mission and values, as well as clarity on your role preferences and location flexibility. Avoid discussing compensation at this stage; instead, focus on your passion for data and AI. This step is also where your profile is shared with engineering leads for potential team matching. With Databricks planning over 3,000 hires in 2025 and a 60% year-over-year growth rate, recruiters are keen to identify candidates who can thrive in a high-velocity, innovation-driven environment.

Technical Phone Screen

The Databricks technical phone screen is a one-hour session with a Databricks engineer, conducted via CoderPad or Google Meet. You’ll tackle medium to hard LeetCode-style questions, often involving graph algorithms, optimization, or concurrency. Expect follow-up questions on time and space complexity, and be ready to explain your thought process clearly. This round tests your coding fluency, problem-solving under pressure, and ability to communicate technical solutions. Only about 20% of candidates advance past this stage, so practicing recent Databricks-tagged LeetCode problems and reviewing core computer science concepts is essential. Your performance here is a strong indicator of your technical fit for the engineering team.

System Design Interview

The Databricks system design interview is a cornerstone of the onsite loop, lasting about an hour and often conducted via Google Docs. You’ll be asked to architect scalable systems—think designing a high-throughput data pipeline or a fault-tolerant distributed service. Interviewers look for your ability to break down complex problems, justify design trade-offs, and optimize for performance, scalability, and reliability. For senior candidates, this may include two system design rounds: one broad architecture session and one focused on a specific component. Success here demonstrates your readiness to build and scale platforms that process exabytes of data for Fortune 500 clients, a daily reality at Databricks.

Hiring Manager Interview

The final stage is the Databricks hiring manager interview, a 60-minute behavioral and technical deep dive with your prospective manager or a senior leader. You’ll discuss your previous projects, technical decision-making, and how you handle ambiguity and team dynamics. Expect scenario-based questions about conflict resolution, leadership, and adapting to changing requirements. The hiring manager assesses your alignment with Databricks’ culture of ownership, innovation, and transparency. With 89% employee satisfaction and a high bar for technical excellence, this round ensures you’re a technical and cultural fit. Strong performance here often leads to an offer, bringing you one step closer to joining a team shaping the future of data and AI.

What Questions Are Asked in a Databricks Software Engineer Interview?

Knowing what types of questions to expect in a Databricks software engineer interview can give you a major advantage as you prepare to demonstrate both your technical skills and cultural fit.

Coding / Technical Questions

Most candidates report that around 50% of the loop involves Databricks coding interview questions, with a strong emphasis on data structures, algorithms, and real-world data manipulation problems:

1. Calculate the first touch attribution for each user_id that converted

To determine the first touch attribution, first subset the users who converted using a CTE. Then, identify the first session visit for each user by grouping by user_id and applying the MIN() function to the created_at field. Finally, join this information back to the attribution table to retrieve the channel associated with the first session.
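The steps above can be sketched with Python's built-in `sqlite3` module. The schema here is an assumption made for illustration: a hypothetical `user_sessions` table maps each session to a `user_id` and `created_at`, and `attribution` holds the `channel` and a `conversion` flag per session. The real interview tables may differ, and ties on `created_at` are not handled.

```python
import sqlite3

# Hypothetical schema and sample data; table and column names are assumptions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE user_sessions (session_id INTEGER PRIMARY KEY, user_id INTEGER, created_at TEXT);
CREATE TABLE attribution (session_id INTEGER PRIMARY KEY, channel TEXT, conversion INTEGER);
INSERT INTO user_sessions VALUES
  (1, 100, '2024-01-01 09:00:00'),
  (2, 100, '2024-01-03 10:00:00'),
  (3, 200, '2024-01-02 08:00:00'),
  (4, 300, '2024-01-04 12:00:00'),
  (5, 300, '2024-01-05 12:00:00');
INSERT INTO attribution VALUES
  (1, 'facebook', 0),
  (2, 'email', 1),
  (3, 'organic', 0),
  (4, 'google', 1),
  (5, 'email', 0);
""")

FIRST_TOUCH_SQL = """
WITH converted AS (
    -- Step 1: keep only users with at least one converting session.
    SELECT DISTINCT us.user_id
    FROM user_sessions AS us
    JOIN attribution AS a ON a.session_id = us.session_id
    WHERE a.conversion = 1
),
first_visit AS (
    -- Step 2: earliest session timestamp per converted user.
    SELECT us.user_id, MIN(us.created_at) AS first_seen
    FROM user_sessions AS us
    JOIN converted AS c ON c.user_id = us.user_id
    GROUP BY us.user_id
)
-- Step 3: join back to find the channel of that first session.
SELECT fv.user_id, a.channel
FROM first_visit AS fv
JOIN user_sessions AS us
  ON us.user_id = fv.user_id AND us.created_at = fv.first_seen
JOIN attribution AS a ON a.session_id = us.session_id
ORDER BY fv.user_id
"""

first_touch = conn.execute(FIRST_TOUCH_SQL).fetchall()
print(first_touch)
```

User 200 never converts, so it drops out in the first step. User 100 converts through email but is attributed to the channel of their earliest session.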

2. Find the total salary of slacking employees

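To solve this, use an INNER JOIN between employees and projects to find employees assigned to at least one project. Then, group by employee ID and filter using HAVING COUNT(p.End_dt) = 0 to identify employees who haven’t completed any projects; COUNT ignores NULLs, so a count of zero means every assigned project is still open. Finally, wrap that result in an outer query and sum the salaries with SELECT SUM(salary), so each employee is counted once no matter how many projects they have.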
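Here is a minimal sketch of that query, run through Python's `sqlite3` module. The column layout (`projects.employee_id`, a nullable `End_dt`) and the sample rows are assumptions for illustration only.

```python
import sqlite3

# Hypothetical tables: a NULL End_dt means the project is not finished.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (id INTEGER PRIMARY KEY, salary INTEGER);
CREATE TABLE projects (project_id INTEGER, employee_id INTEGER, End_dt TEXT);
INSERT INTO employees VALUES (1, 100), (2, 200), (3, 300), (4, 400);
INSERT INTO projects VALUES
  (10, 1, '2024-02-01'),
  (11, 1, NULL),
  (12, 2, NULL),
  (13, 2, NULL),
  (14, 3, NULL);
""")

SLACKER_SALARY_SQL = """
SELECT SUM(salary)
FROM (
    -- One row per employee who has projects but no completed ones.
    SELECT e.id, e.salary
    FROM employees AS e
    INNER JOIN projects AS p ON p.employee_id = e.id
    GROUP BY e.id, e.salary
    HAVING COUNT(p.End_dt) = 0   -- COUNT(column) skips NULLs
) AS slackers
"""

total_slacker_salary = conn.execute(SLACKER_SALARY_SQL).fetchone()[0]
print(total_slacker_salary)
```

Employee 1 has a finished project and employee 4 has no projects at all, so only employees 2 and 3 are summed. Summing inside the subquery's outer wrapper avoids counting employee 2's salary twice.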

3. Calculate the 3-day rolling average of steps for each user

To calculate the 3-day rolling average of steps for each user, use self-joins on the daily_steps table to fetch step counts for the current day, the previous day, and two days prior. Then, compute the average by summing these values and dividing by 3, rounding to the nearest whole number. Because the joins are inner joins, a date appears in the output only when the user has data for all three days.
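The self-join approach might look like the sketch below, again using `sqlite3`. The column names (`user_id`, `day`, `steps`) are assumptions, and the date arithmetic uses SQLite's `date()` function, which other SQL dialects spell differently.

```python
import sqlite3

# Hypothetical daily_steps table; user 2 is missing 2024-01-02 on purpose.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE daily_steps (user_id INTEGER, day TEXT, steps INTEGER);
INSERT INTO daily_steps VALUES
  (1, '2024-01-01', 1000),
  (1, '2024-01-02', 2000),
  (1, '2024-01-03', 3000),
  (1, '2024-01-04', 4001),
  (2, '2024-01-01', 500),
  (2, '2024-01-03', 700),
  (2, '2024-01-04', 900);
""")

ROLLING_AVG_SQL = """
SELECT d0.user_id,
       d0.day,
       CAST(ROUND((d0.steps + d1.steps + d2.steps) / 3.0) AS INTEGER) AS avg_steps
FROM daily_steps AS d0
JOIN daily_steps AS d1              -- previous day
  ON d1.user_id = d0.user_id AND d1.day = date(d0.day, '-1 day')
JOIN daily_steps AS d2              -- two days prior
  ON d2.user_id = d0.user_id AND d2.day = date(d0.day, '-2 days')
ORDER BY d0.user_id, d0.day
"""

rolling = conn.execute(ROLLING_AVG_SQL).fetchall()
print(rolling)
```

User 2 produces no rows because no date has both of its preceding days present. In an interview it is worth mentioning that a window function could replace the self-joins if the data has no gaps.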

4. Find the index where the left and right sums of a list are equal

To solve this, calculate the total sum of the list and iterate through the list while maintaining a running sum of the left side. For each index, compute the right side sum using the formula total - leftsum - current_element. If the left and right sums match, return that index immediately; if the iteration completes without a match, return -1.
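As a quick sketch of that single-pass idea (the function name `equal_sum_index` is ours, not from the original question):

```python
def equal_sum_index(nums):
    """Return the first index whose left-side sum equals its right-side sum, else -1."""
    total = sum(nums)
    left_sum = 0
    for i, value in enumerate(nums):
        # Everything that is neither on the left nor the current element is on the right.
        right_sum = total - left_sum - value
        if left_sum == right_sum:
            return i
        left_sum += value
    return -1


print(equal_sum_index([1, 7, 3, 6, 5, 6]))
```

This runs in O(n) time with O(1) extra space. For `[1, 7, 3, 6, 5, 6]` the answer is index 3, where both sides sum to 11.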

To solve this, iterate through the friends_removed list to find corresponding entries in friends_added with matching user IDs. Ensure the user IDs are sorted to standardize comparisons. Remove matched entries from friends_added to avoid duplicate pairings and append the friendship details to the output list.
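One way to sketch this matching logic is shown below. The input shape is an assumption: each entry is taken to be a dict with a `user_ids` pair and a `created_at` date, both lists are assumed to be in chronological order, and the output field names `start_date` and `end_date` are hypothetical.

```python
def friendship_timeline(friends_added, friends_removed):
    """Pair each removal with the earliest unmatched addition for the same two users."""
    # Copy the additions with sorted IDs so [2, 1] and [1, 2] compare equal
    # and the caller's list is left untouched.
    pending = [dict(entry, user_ids=sorted(entry["user_ids"])) for entry in friends_added]
    timeline = []
    for removal in friends_removed:
        pair = sorted(removal["user_ids"])
        for i, added in enumerate(pending):
            if added["user_ids"] == pair:
                timeline.append({
                    "user_ids": pair,
                    "start_date": added["created_at"],
                    "end_date": removal["created_at"],
                })
                del pending[i]  # each addition can be matched only once
                break
    return timeline


added = [
    {"user_ids": [1, 2], "created_at": "2020-01-01"},
    {"user_ids": [3, 2], "created_at": "2020-01-02"},
    {"user_ids": [2, 1], "created_at": "2020-02-02"},
]
removed = [
    {"user_ids": [2, 1], "created_at": "2020-01-03"},
    {"user_ids": [1, 2], "created_at": "2020-03-03"},
    {"user_ids": [4, 5], "created_at": "2020-03-04"},
]
print(friendship_timeline(added, removed))
```

The nested loop is O(n·m). If the interviewer pushes on scale, a dictionary keyed by the sorted ID pair would bring each lookup down to constant time.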

6. Write a function to compute the average salary using recency weighted average

To compute the recency weighted average salary, assign weights to each year’s salary based on its recency, with the most recent year having the highest weight. Multiply each salary by its respective weight, sum the results, and divide by the total weight. Round the result to two decimal places.
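A minimal sketch, assuming the salaries arrive ordered from oldest to most recent and that the weights grow linearly (1 for the oldest year, n for the newest). The original question may define the weights differently, so confirm the scheme with your interviewer.

```python
def recency_weighted_average(salaries):
    """Weighted mean where the i-th oldest salary gets weight i (1-based)."""
    if not salaries:
        raise ValueError("salaries must not be empty")
    weights = range(1, len(salaries) + 1)
    weighted_total = sum(w * s for w, s in zip(weights, salaries))
    return round(weighted_total / sum(weights), 2)


print(recency_weighted_average([50000, 55000, 60000]))
```

For `[50000, 55000, 60000]` the weighted total is 50000·1 + 55000·2 + 60000·3 = 340000 and the total weight is 6, giving 56666.67.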

System / Product Design Questions

Expect Databricks system design interview questions that focus on designing scalable, fault-tolerant systems capable of handling massive data volumes, often under real-world engineering constraints:

7. Design a data warehouse for sales analytics and reporting

To design the data warehouse, start by identifying the business process, which involves storing sales data for analytics and reporting. Define the granularity of events, identify dimensions (e.g., buyer, item, date, payment method), and facts (e.g., quantity sold, total paid, net revenue). Finally, sketch a star schema to organize the data efficiently for querying.
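To make the star schema concrete, here is a small sketch in `sqlite3`. The table names (`fact_sales`, `dim_buyer`, and so on), the grain of one row per item sold, and the sample rows are all assumptions; a production warehouse would carry many more attributes on each dimension.

```python
import sqlite3

# Hypothetical star schema: one fact table surrounded by four dimensions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_buyer (buyer_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE dim_item (item_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, calendar_date TEXT);
CREATE TABLE dim_payment_method (payment_method_id INTEGER PRIMARY KEY, method TEXT);
CREATE TABLE fact_sales (
    sale_id INTEGER PRIMARY KEY,
    buyer_id INTEGER REFERENCES dim_buyer(buyer_id),
    item_id INTEGER REFERENCES dim_item(item_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    payment_method_id INTEGER REFERENCES dim_payment_method(payment_method_id),
    quantity_sold INTEGER,
    total_paid REAL,
    net_revenue REAL
);
INSERT INTO dim_buyer VALUES (1, 'Ada'), (2, 'Lin');
INSERT INTO dim_item VALUES (1, 'Keyboard'), (2, 'Cable');
INSERT INTO dim_date VALUES (1, '2024-01-01'), (2, '2024-01-02');
INSERT INTO dim_payment_method VALUES (1, 'card'), (2, 'paypal');
INSERT INTO fact_sales VALUES
  (1, 1, 1, 1, 1, 2, 20.0, 18.0),
  (2, 2, 2, 1, 2, 1, 5.0, 4.5),
  (3, 1, 2, 2, 1, 2, 10.0, 9.0);
""")

# A typical reporting query: aggregate a fact, grouped by a dimension attribute.
revenue_by_method = conn.execute("""
SELECT pm.method, SUM(f.net_revenue)
FROM fact_sales AS f
JOIN dim_payment_method AS pm ON pm.payment_method_id = f.payment_method_id
GROUP BY pm.method
ORDER BY pm.method
""").fetchall()
print(revenue_by_method)
```

Most analytical questions then reduce to the same pattern: join the fact table to one or two dimensions, filter, and aggregate.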

8. Design a secure and scalable instant messaging platform

To architect this system, use a publish-subscribe model for real-time messaging, distributed databases for message storage, and end-to-end encryption for security. Implement audit logging and tamper-proof storage for compliance, and use container orchestration platforms for scalability and reliability. Rigorous testing ensures compliance, security, and performance.
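If the publish-subscribe model is unfamiliar, the toy in-process broker below shows the core idea. It is only an illustration of the pattern: the `Broker` class and topic names are hypothetical, and a real messaging platform would rely on a distributed broker plus the encryption, persistence, and audit logging described above, none of which is modeled here.

```python
from collections import defaultdict


class Broker:
    """Toy in-memory publish-subscribe broker (single process, no persistence)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        # Register a callable that receives every message published to `topic`.
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver to a snapshot of the subscriber list; return how many received it.
        callbacks = list(self._subscribers.get(topic, []))
        for callback in callbacks:
            callback(message)
        return len(callbacks)


broker = Broker()
alice_inbox, bob_inbox = [], []
broker.subscribe("room:42", alice_inbox.append)
broker.subscribe("room:42", bob_inbox.append)

delivered = broker.publish("room:42", "hello")
undelivered = broker.publish("room:99", "anyone here?")
print(delivered, undelivered, alice_inbox, bob_inbox)
```

The sender never needs to know who is listening, which is what lets the real system add or remove recipients and scale consumers independently.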

9. Create a schema to keep track of customer address changes

To track customer address changes, design a schema with three tables: Customers, Addresses, and CustomerAddressHistory. The CustomerAddressHistory table includes move_in_date and move_out_date fields to record occupancy periods, allowing retrieval of both historical and current address data. This schema ensures normalization and preserves address change history.
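The three-table design can be sketched in `sqlite3` as follows. The column names beyond `move_in_date` and `move_out_date`, the convention that a NULL `move_out_date` marks the current address, and the helper function names are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Customers (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE Addresses (address_id INTEGER PRIMARY KEY, street TEXT NOT NULL, city TEXT NOT NULL);
CREATE TABLE CustomerAddressHistory (
    customer_id INTEGER NOT NULL REFERENCES Customers(customer_id),
    address_id INTEGER NOT NULL REFERENCES Addresses(address_id),
    move_in_date TEXT NOT NULL,
    move_out_date TEXT,               -- NULL means the customer still lives here
    PRIMARY KEY (customer_id, address_id, move_in_date)
);
INSERT INTO Customers VALUES (1, 'Ada');
INSERT INTO Addresses VALUES (1, '1 Main St', 'Leeds'), (2, '9 Oak Ave', 'York');
INSERT INTO CustomerAddressHistory VALUES
  (1, 1, '2020-01-01', '2022-06-30'),
  (1, 2, '2022-07-01', NULL);
""")


def address_on(customer_id, day):
    """Street where the customer lived on `day` (ISO date string), or None."""
    row = conn.execute(
        """
        SELECT a.street
        FROM CustomerAddressHistory AS h
        JOIN Addresses AS a ON a.address_id = h.address_id
        WHERE h.customer_id = ?
          AND h.move_in_date <= ?
          AND (h.move_out_date IS NULL OR h.move_out_date >= ?)
        """,
        (customer_id, day, day),
    ).fetchone()
    return row[0] if row else None


def current_address(customer_id):
    """Street of the open-ended history row, or None if there is none."""
    row = conn.execute(
        """
        SELECT a.street
        FROM CustomerAddressHistory AS h
        JOIN Addresses AS a ON a.address_id = h.address_id
        WHERE h.customer_id = ? AND h.move_out_date IS NULL
        """,
        (customer_id,),
    ).fetchone()
    return row[0] if row else None


print(address_on(1, "2021-05-01"), current_address(1))
```

Keeping the dates on the link table rather than on Addresses lets the same address be reused by a later occupant without losing anyone's history.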

10. Design a database schema for a ride-sharing app

To design a database for a ride-sharing app, consider two use cases: app backend and analytics. For the backend, prioritize query speed and immutability, using RiderID and DriverID as primary keys in a NoSQL database for flexibility. For analytics, use a star schema with dimension tables (e.g., users, riders, vehicles) and a fact table recording events such as payments and trips, and partition the data by region to optimize costs and query speeds.

11. Design a unified commenting system with AI censorship

To design a unified commenting system, you would need a scalable architecture capable of handling real-time data updates across multiple platforms. This could involve using distributed databases for persistence, WebSocket or similar technologies for real-time updates, and caching mechanisms to optimize performance. For AI censorship, dynamic NLP-based filtering offers the most flexibility, but its latency should be addressed by pre-processing comments asynchronously or by using a hybrid approach that combines static and dynamic methods.

Behavioral / Culture Fit Questions

Databricks behavioral interview questions help assess how well you align with the company’s core values, like ownership, customer obsession, and transparency in high-impact team environments:

12. Describe a time you had difficulty communicating with stakeholders

In the context of a Databricks engineering role, strong communication is essential because engineers often collaborate with cross-functional teams such as data scientists, product managers, and enterprise clients. If you are asked this question, consider sharing an example where you had difficulty aligning on expectations or translating complex technical ideas to a broader audience. For instance, maybe you had to explain the implications of a Spark pipeline’s scalability to a non-technical product owner. Describe how you identified the gap, translated the technical jargon into business-friendly language, and confirmed mutual understanding. At Databricks, engineers are expected to be customer-obsessed and solutions-oriented, so it is critical to show that you learned to adapt your communication style to better serve diverse stakeholder needs.

13. Why Do You Want to Work With Us

This question is particularly important when applying to Databricks because the company is known for its innovation in distributed computing and its strong open-source culture. To respond effectively, go beyond general admiration. Mention specific elements such as the company’s leadership in Apache Spark, its move toward lakehouse architecture, or its collaboration with the MLflow and Delta Lake communities. You could also talk about how Databricks’ emphasis on learning, transparency, and engineering excellence aligns with your values. If you have experience contributing to open-source projects or working on large-scale data platforms, explain how those experiences motivate you to join a company at the forefront of data infrastructure.

14. What do you tell an interviewer when they ask you what your strengths and weaknesses are?

At Databricks, technical rigor and personal growth are equally valued. When discussing strengths, choose ones that are relevant to the role, such as experience building distributed systems, optimizing Spark jobs, or leading cross-functional initiatives. Support these with examples that demonstrate measurable impact. For weaknesses, pick something authentic but manageable, such as initially struggling to say no to competing priorities. Emphasize how you developed strategies to improve, like using time-blocking or actively communicating trade-offs. The key is to demonstrate self-awareness, a desire to improve, and the maturity to turn challenges into growth opportunities.

15. How would you convey insights and the methods you use to a non-technical audience?

This question reflects a real-world expectation for software engineers at Databricks. While much of the work may be deeply technical, it often needs to be shared with users, partners, or business leaders who lack deep engineering backgrounds. To answer well, describe how you first assess the audience’s familiarity with technical terms, then adjust your language accordingly. For example, when explaining model drift or a Spark job’s execution plan, you might use analogies, visualizations, or simplified diagrams to make the insights clear. Highlight how you ensure understanding by validating feedback, encouraging questions, and iterating based on the audience’s needs. Demonstrating this skill shows you can bridge the gap between complex systems and business outcomes, which is highly valued at Databricks.

How to Prepare for a Databricks Software Engineer Interview

Preparing for a Databricks software engineer interview means building confidence across multiple technical domains while understanding how Databricks engineers think and solve problems at scale.

Expect questions rooted in distributed computing, especially around the Databricks Lakehouse Platform, Delta Lake, and open-source tools like Apache Spark. Practicing systems-level thinking is key, as Databricks engineers often design for exabyte-scale performance.

To prepare, go beyond generic LeetCode and explore Databricks practice exercises available through community repositories, Advanced Interview Query Questions, and Databricks Academy labs. These will help you get comfortable with Spark internals, SQL performance tuning, and pipeline orchestration. You may be asked to complete a Databricks take-home assignment, often involving a mini ETL or data engineering project. Focus on clear documentation, test coverage, and scalability—Databricks is deeply metrics-driven, and interviewers care about real-world tradeoffs.

If you’re navigating the Databricks new grad interview process, expect additional rounds on CS fundamentals and collaborative problem-solving. Databricks values curiosity and depth over rote memorization, so don’t be afraid to explore edge cases and ask clarifying questions. Mock interviews and our AI Interviewer are good places to practice both.

Throughout the Databricks SWE interview, stay grounded in fundamentals but ready to scale your solutions. Your ability to reason from first principles, communicate clearly, and iterate quickly will be as important as the code you write.

FAQs

What Is the Average Salary for a Software Engineer at Databricks?

How Hard Is the Databricks System Design Interview?

The Databricks system design interview is rigorous and tailored to real-world challenges at massive scale. You’ll be asked to design distributed architectures for data ingestion, processing, and analytics on exabyte-scale workloads. Interviewers expect deep knowledge of data modeling, fault tolerance, and cloud-native design patterns. Familiarity with Spark, Delta Lake, and caching strategies is essential. The key to success is demonstrating tradeoff thinking, scalability, and alignment with Databricks’ high-performance engineering culture.

What Coding Platforms Should I Practice On?

Interview Query is the go-to resource for sharpening your data structures and algorithm skills, especially for those targeting technical screens. Practicing with our Databricks-tagged problems will help you focus on relevant topics like graph traversal, concurrency, and distributed systems. For system design and SQL-heavy rounds, supplement your prep with real Databricks notebooks, open-source Spark challenges, and internal Databricks practice exercises when available.

Conclusion

The Databricks software engineer interview is more than a hiring process; it is your entry point into a company driving the next era of AI and data innovation. As you prepare, remember that mastering the interview is not just about technical accuracy. It’s about showing you’re ready to solve problems that matter on a global scale. If you’re looking to build real fluency, start with our Databricks SQL Learning Path tailored for engineers. Need practice? Our curated Databricks Python questions collection mirrors the challenges you’ll face. And for extra motivation, check out the success story of Simran Singh, who turned prep into a six-figure offer. You’re closer than you think.