Databricks Product Manager Interview Guide – Process, Questions, and Tips

Introduction

The Databricks product manager interview is one of the most sought-after opportunities in the data and AI industry. As Databricks continues to redefine how organizations work with big data and machine learning, the company seeks product managers who thrive at the intersection of technical depth and strategic vision. If you are passionate about building transformative data platforms, the Databricks PM interview gives you a chance to showcase your technical fluency, customer obsession, and ability to drive product impact. Databricks’ culture rewards first-principles thinking, bold innovation, and cross-functional collaboration. Whether you’re coming from a startup, a tech giant, or a data science background, this guide will help you understand the expectations, prepare with purpose, and present your best self throughout the process.

Role Overview & Culture

When preparing for your Databricks PM interview, it’s important to understand what defines the day-to-day experience of a product manager within the company. At Databricks, PMs lead with ownership across the full product lifecycle. You will define product strategy, translate customer needs into features, and partner deeply with engineering, design, marketing, and go-to-market teams. The work is fast-paced and technical, often requiring fluency in Python and familiarity with tools like Apache Spark. You are expected to be data-driven and deeply empathetic toward users. Databricks PMs spend considerable time listening to customers and then distilling those insights into product decisions. The company culture prioritizes customer obsession and truth-seeking. This means every feature must be justified with both user feedback and rigorous data. You are not only responsible for roadmap execution but also for defining success using OKRs, experimenting quickly, and learning continuously.

Why This PM Role at Databricks?

If you’re evaluating PM roles in the data and AI space, Databricks stands apart for its market momentum and scope of impact. As the pioneer of the Lakehouse architecture, Databricks has built a platform that combines the flexibility of data lakes with the performance of data warehouses. According to Forrester’s 2024 Wave report, Databricks leads the Data Lakehouse category in both strategy and current offering. Databricks also says its platform delivers up to 9 times better price-performance than traditional cloud data warehouses. The company’s growth reinforces this advantage: Databricks reported reaching $3 billion in ARR in 2024, growing 60% year over year. With a customer base that includes over 60% of the Fortune 500, your work directly influences thousands of enterprises. Compensation is top-quartile, with reported PM packages ranging from $323K to $898K annually. More importantly, you will contribute to cutting-edge work in generative AI, data governance, and automation at massive scale. This is a role where both your technical abilities and strategic leadership are magnified.

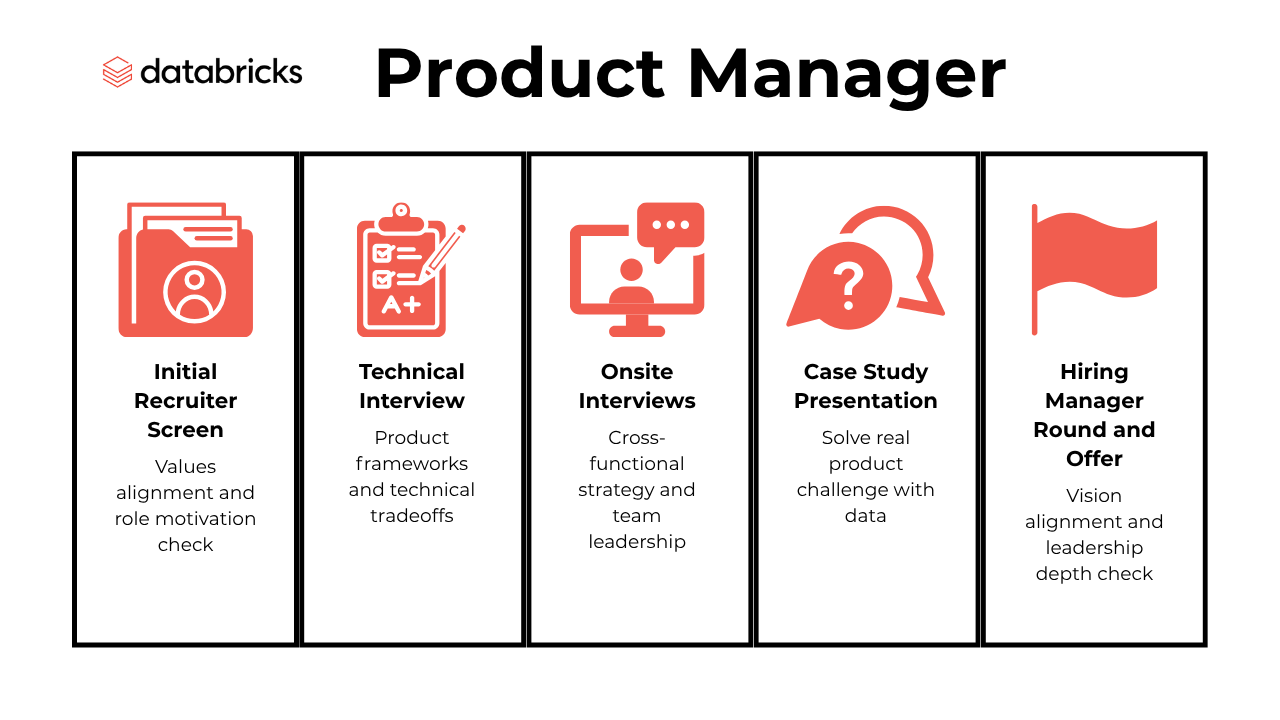

What Is the Interview Process Like for a Product Manager Role at Databricks?

The Databricks product manager interview is a comprehensive and well-structured process designed to evaluate candidates on both technical skills and cultural fit. It typically includes several stages to ensure alignment with Databricks’ mission and values while assessing product management expertise:

- Initial Recruiter Screen

- Technical Interview

- Onsite (Virtual or In-Person) Interviews

- Case Study Presentation

- Hiring Manager Round and Offer

Initial Recruiter Screen

The Databricks PM interview typically begins with a 30-minute recruiter phone call focused on understanding who you are beyond your resume. You can expect questions about your product background, how you’ve solved customer pain points, and your motivations for joining Databricks. Recruiters look for clear alignment with core values like customer obsession and data-first decision-making. They will walk you through the interview process, answer your initial questions, and clarify expectations for the role. This stage is your opportunity to demonstrate curiosity about Databricks’ mission and communicate how your experience aligns with the technical and strategic demands of the PM role. Think of it as setting the foundation for your candidacy, not just a formality.

Technical Interview

In the next phase, you will complete a video-based technical interview that assesses how well you bridge the technical and product worlds. Interviewers will dive into product management frameworks like opportunity sizing, customer segmentation, or feature prioritization. You’ll be expected to explain data workflows and make tradeoffs using concepts like system latency, throughput, or API design. Expect technical questions involving SQL, Python, or Apache Spark. You may be asked to articulate how the Lakehouse architecture differs from legacy architectures and what this means for customer value. This interview is less about coding and more about how well you can reason through technical problems, communicate with engineers, and make sound product decisions under real-world constraints.

Onsite (Virtual or In-Person) Interviews

The onsite phase consists of four to five structured interviews with cross-functional stakeholders. These include engineering managers, PM peers, data scientists, and possibly a design or go-to-market leader. Each conversation probes your ability to define product strategy, translate customer feedback into product specs, and lead prioritization discussions with clarity. You’ll be asked how you’ve driven impact using OKRs or metrics frameworks, how you resolve conflict across teams, and how you’ve turned ambiguous product opportunities into successful launches. At this stage, cultural fit becomes even more important. Your ability to reflect Databricks’ bias for action, truth-seeking, and ownership mindset will be closely evaluated, often through scenario-based behavioral questions.

Case Study Presentation

This critical step involves a take-home case study that simulates a real Databricks product challenge. You’ll be given a few days to craft a strategy addressing customer pain points, product-market fit, and key metrics. Your presentation should not only walk through your solution but also demonstrate how you prioritize, frame trade-offs, and align teams around product goals. You will present to a panel of senior product leaders who assess clarity, structure, and the depth of your thinking. Use this opportunity to show how you use data to drive decisions, how you communicate across technical and non-technical stakeholders, and how your thinking reflects real-world constraints and opportunities.

Hiring Manager Round and Offer

The final round is a strategic conversation with the hiring manager or executive leadership. You will discuss how your product thinking aligns with Databricks’ platform vision, particularly around AI and data democratization. This is your moment to convey long-term interest and articulate how you see yourself growing with the company. Leaders may probe your thoughts on where Databricks should invest next or ask you to critique a recent product launch. This is not just about assessing your readiness to lead but understanding how you think about systems, innovation, and scale. If all goes well, and your skills and values align, this conversation can lead directly to an offer.

What Questions Are Asked in a Databricks Product Manager Interview?

If you’re preparing for a Databricks product manager interview, understanding the types of questions asked can help you focus your prep and practice with purpose.

Product Sense & Strategy Questions

Nearly every Databricks product manager interview includes a product-sense prompt to evaluate how you frame problems, prioritize features, and balance user needs with business goals:

1. How would you measure the impact on customer lifetime value of entering the podcast space?

To measure the impact on customer lifetime value (CLV) of entering the podcast space, consider analyzing metrics such as customer acquisition costs (CAC), retention rates, and average revenue per user (ARPU) before and after launching the podcast feature. Additionally, evaluate the effect of podcasts on reducing churn, increasing engagement, and driving upsells or cross-sells to determine their contribution to CLV.
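Here is a minimal sketch of the CLV comparison. It assumes a simple steady-state model: CLV = ARPU × gross margin × expected lifetime (1 / monthly churn), minus CAC. The `clv` helper and every input value are hypothetical. They show how a podcast launch could move several levers at once: higher ARPU and lower churn, partly offset by content costs that trim margin and raise CAC.

```python
def clv(arpu: float, gross_margin: float, monthly_churn: float, cac: float) -> float:
    """Steady-state CLV: monthly margin per user times expected lifetime (1 / churn), minus CAC."""
    expected_lifetime_months = 1.0 / monthly_churn
    return arpu * gross_margin * expected_lifetime_months - cac


# Hypothetical inputs, not real Databricks or podcast figures.
baseline = clv(arpu=10.0, gross_margin=0.50, monthly_churn=0.05, cac=40.0)
# Podcasts assumed to lift ARPU and cut churn, while content costs lower margin and raise CAC.
with_podcasts = clv(arpu=11.0, gross_margin=0.45, monthly_churn=0.04, cac=45.0)

print(f"baseline CLV:      {baseline:.2f}")
print(f"with podcasts CLV: {with_podcasts:.2f}")
print(f"estimated impact:  {with_podcasts - baseline:+.2f}")
```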

To evaluate this situation, analyze the trade-off between revenue growth and user retention. While increased revenue is positive, a decline in user searches could indicate a negative user experience, which may harm long-term sustainability. Metrics such as lifetime value (LTV) of users, churn rate, and overall user satisfaction should be considered to assess whether the strategy is beneficial in the long run.

To test this, analyze data by grouping users into buckets based on their friend count six months ago and tracking their activity over time. Alternatively, use a binary classification model with features like account age, demographics, and past activity to determine the relationship between friend count and user activity probability.
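The bucketing approach can be sketched in a few lines of Python. The user records, the `bucket` edges, and the meaning of "active" are hypothetical. With real data, you would also control for account age and other features, as the classification alternative does.

```python
from collections import defaultdict

# Hypothetical records: (friend_count_six_months_ago, active_now)
users = [
    (2, False), (4, False), (8, True), (12, True), (15, False),
    (25, True), (40, True), (55, True), (70, True), (120, True),
]


def bucket(friend_count: int) -> str:
    """Map a friend count to a bucket label (edges are hypothetical)."""
    if friend_count < 10:
        return "0-9"
    if friend_count < 50:
        return "10-49"
    return "50+"


def activity_rate_by_bucket(rows):
    """Share of users in each friend-count bucket who are active today."""
    totals = defaultdict(int)
    actives = defaultdict(int)
    for friends, active in rows:
        label = bucket(friends)
        totals[label] += 1
        actives[label] += int(active)
    return {label: actives[label] / totals[label] for label in totals}


rates = activity_rate_by_bucket(users)
for label in ("0-9", "10-49", "50+"):
    print(label, round(rates[label], 2))
```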

4. How would you use the ride data to project the lifetime of a new driver on the system?

To project the lifetime of a new driver, analyze the 90 days of ride data to identify patterns in driver retention and activity, such as the average tenure of drivers and factors influencing drop-off rates. For lifetime value (LTV), calculate the revenue generated per driver and discount it over their expected lifetime, factoring in retention rates, ride frequency, and average revenue per ride.
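Here is a rough sketch of the projection. It assumes that 90 days of data give you the share of a new-driver cohort still active in each of its first three months, and that retention stays constant afterward. The cohort shares, rides per month, revenue per ride, and 1% monthly discount rate are all hypothetical.

```python
# Hypothetical: share of a new-driver cohort still active in months 1, 2, and 3,
# which is roughly what 90 days of ride data can observe.
active_share = [1.00, 0.70, 0.56]


def avg_monthly_retention(shares):
    """Geometric-mean month-over-month retention; the ratios telescope to last / first."""
    return (shares[-1] / shares[0]) ** (1 / (len(shares) - 1))


def expected_lifetime_months(retention):
    """Assumes constant retention after the window: 1 + r + r^2 + ... = 1 / (1 - r)."""
    return 1 / (1 - retention)


def discounted_ltv(retention, rides_per_month, revenue_per_ride, monthly_discount, horizon=120):
    """Expected monthly revenue, weighted by survival and discounted to today."""
    monthly_revenue = rides_per_month * revenue_per_ride
    return sum(
        monthly_revenue * retention**t / (1 + monthly_discount) ** t
        for t in range(horizon)
    )


r = avg_monthly_retention(active_share)
lifetime = expected_lifetime_months(r)
ltv = discounted_ltv(r, rides_per_month=60, revenue_per_ride=5.0, monthly_discount=0.01)
print(f"monthly retention ~ {r:.3f}, expected lifetime ~ {lifetime:.1f} months, LTV ~ ${ltv:,.0f}")
```

The constant-retention assumption is the weakest link. If later months in the data show retention flattening out, a model with a separate long-run retention rate would give a longer lifetime.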

5. What’s the expected churn rate in March for all customers that bought the product since January 1st?

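To calculate the expected churn rate, note that churn decreases by 20% month over month. Because January customers churn at 8% in February, their first-month churn must be 10%, so they churn at 6.4% in March, while February customers churn at 8% in March. Assuming equal-size January and February cohorts, 82.8% of the January cohort (0.9 × 0.92) and 90% of the February cohort are still active entering March. Their average, 0.864 (86.4%), is the share of customers retained, not the churn rate. Weighting each cohort’s March churn by its surviving customers gives (0.828 × 6.4% + 0.9 × 8%) / (0.828 + 0.9), or approximately 7.2%.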
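The cohort arithmetic can be checked with a short script. It makes three assumptions, none of which is given data: 10% first-month churn (implied by January customers churning at 8% in February), churn that falls 20% with each additional month of tenure, and equal-size January and February cohorts. The script separates the share of customers still active entering March from the March churn rate itself.

```python
FIRST_MONTH_CHURN = 0.10  # assumption: implied by the 8% February figure (10% * 0.8)
DECAY = 0.80              # churn falls 20% with each additional month of tenure


def churn_at_tenure(month_index: int) -> float:
    """Churn rate in a customer's (month_index + 1)-th month."""
    return FIRST_MONTH_CHURN * DECAY**month_index


def retained_entering(month_index: int) -> float:
    """Share of a cohort still active at the start of its (month_index + 1)-th month."""
    share = 1.0
    for m in range(month_index):
        share *= 1 - churn_at_tenure(m)
    return share


# March is tenure index 2 for the January cohort and index 1 for the February cohort.
cohorts = {"January": 2, "February": 1}  # equal-size cohorts assumed
active = {name: retained_entering(idx) for name, idx in cohorts.items()}
churned = {name: active[name] * churn_at_tenure(idx) for name, idx in cohorts.items()}

share_active_entering_march = sum(active.values()) / len(cohorts)
march_churn = sum(churned.values()) / sum(active.values())
print(f"share still active entering March: {share_active_entering_march:.3f}")
print(f"expected March churn rate: {march_churn:.1%}")
```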

Technical & Data Questions

In the Databricks PM interview, you’ll also be asked to demonstrate fluency in data concepts, from experiment design to SQL-based analytics, reflecting Databricks’ engineering-driven culture:

6. How would you assess the validity of the result?

To assess the validity of the results, start by examining the setup of the A/B test. Ensure user groups were randomly assigned and distributions across groups are similar. Check whether external factors or unequal variants influenced the results. Finally, evaluate the p-value measurement, considering sample size, duration, and potential biases introduced by continuous monitoring.
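To see why continuous monitoring undermines the p-value, consider a small simulation under stated assumptions. It runs A/A tests with no true difference: 10% conversion in both arms and 1,000 users per arm. Each test is analyzed with a pooled two-proportion z-test, either once at the end or at ten evenly spaced interim looks that stop at the first p < 0.05. All parameters are hypothetical. The peeking false-positive rate should come out noticeably above the single-test rate, which stays near the nominal 5%.

```python
import math
import random


def z_test_p_value(successes_a: int, n_a: int, successes_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_pool = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (successes_a / n_a - successes_b / n_b) / se
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(n_sims=400, n_per_arm=1000, looks=10, rate=0.10, alpha=0.05, seed=7):
    """Return (fixed-horizon, peeking) false-positive rates for A/A tests with no true effect."""
    rng = random.Random(seed)
    checkpoints = [n_per_arm * (k + 1) // looks for k in range(looks)]
    fixed_hits = peeking_hits = 0
    for _ in range(n_sims):
        a = [rng.random() < rate for _ in range(n_per_arm)]
        b = [rng.random() < rate for _ in range(n_per_arm)]
        # Peeking: stop and "declare a winner" at the first interim look with p < alpha.
        if any(z_test_p_value(sum(a[:n]), n, sum(b[:n]), n) < alpha for n in checkpoints):
            peeking_hits += 1
        # Fixed horizon: a single test once all users are in.
        if z_test_p_value(sum(a), n_per_arm, sum(b), n_per_arm) < alpha:
            fixed_hits += 1
    return fixed_hits / n_sims, peeking_hits / n_sims


fixed_rate, peeking_rate = simulate_aa_tests()
print(f"false positives, single final test: {fixed_rate:.1%}")
print(f"false positives, peeking at every look: {peeking_rate:.1%}")
```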

7. In an A/B test, how can you check if assignment to the various buckets was truly random?

To determine if bucket assignment is random, you can analyze the distribution of traffic sources, ensuring they are balanced across variants. Additionally, assess user group attributes (like demographics or device type) and compare distributions of unrelated metrics to confirm no systematic bias in the randomization process.
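One way to compare a pre-assignment attribute such as traffic source across variants is a chi-square test of independence. The sketch below uses only the standard library and hypothetical counts. Instead of computing a p-value, it compares the statistic against hard-coded 5% critical values for small degrees of freedom. A statistic below the critical value is consistent with random assignment, while a large one flags a possible randomization problem.

```python
# Hypothetical counts of users by traffic source in each variant.
counts = {
    "control":   {"organic": 5020, "paid": 2990, "referral": 1990},
    "treatment": {"organic": 4980, "paid": 3010, "referral": 2010},
}

# 5% critical values of the chi-square distribution for small degrees of freedom.
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}


def chi_square_independence(table):
    """Return (statistic, degrees of freedom) for a variants-by-categories count table."""
    variants = list(table)
    categories = list(table[variants[0]])
    row_totals = {v: sum(table[v].values()) for v in variants}
    col_totals = {c: sum(table[v][c] for v in variants) for c in categories}
    grand_total = sum(row_totals.values())
    stat = 0.0
    for v in variants:
        for c in categories:
            expected = row_totals[v] * col_totals[c] / grand_total
            stat += (table[v][c] - expected) ** 2 / expected
    dof = (len(variants) - 1) * (len(categories) - 1)
    return stat, dof


stat, dof = chi_square_independence(counts)
balanced = stat < CRITICAL_05[dof]
print(f"chi-square = {stat:.3f}, dof = {dof}, balanced at 5%: {balanced}")
```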

8. Can unbalanced sample sizes in an A/B test result in bias?

Whether bias arises depends on how the data was collected and whether the groups were properly randomized. A smaller group can still support a powerful test if it is large enough in absolute terms (e.g., 50K users). However, a pooled-variance test weights the variance estimate heavily toward the larger group, so when the groups’ variances differ, the test’s error rates can be distorted. Downsampling the larger group to match the smaller one, or using Welch’s t-test, which does not pool variances, can help mitigate this.
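The pooled-variance issue is easiest to see by computing both standard errors. In this sketch, the large control group has low variance and the small treatment group has high variance; the group sizes and variances are hypothetical. The pooled estimate leans toward the control’s variance and understates the standard error relative to Welch’s unpooled formula, which would make the test look more significant than it is. With equal group sizes, the two formulas coincide.

```python
import math


def pooled_se(var_a: float, n_a: int, var_b: float, n_b: int) -> float:
    """SE of the difference in means assuming equal variances (Student's t-test)."""
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
    return math.sqrt(pooled_var * (1 / n_a + 1 / n_b))


def welch_se(var_a: float, n_a: int, var_b: float, n_b: int) -> float:
    """SE of the difference in means without pooling variances (Welch's t-test)."""
    return math.sqrt(var_a / n_a + var_b / n_b)


# Hypothetical: large, low-variance control vs. small, high-variance treatment.
n_control, var_control = 45_000, 1.0
n_treatment, var_treatment = 5_000, 4.0

pooled = pooled_se(var_control, n_control, var_treatment, n_treatment)
welch = welch_se(var_control, n_control, var_treatment, n_treatment)
print(f"pooled SE: {pooled:.5f}")
print(f"Welch SE:  {welch:.5f}")
```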

9. Categorize sales based on the amount of sales and the region

To solve this, use SQL CASE statements within aggregate functions to categorize sales into “Standard,” “Premium,” and “Promotional” based on the given conditions. Group by region and sum the sale_amount for each category, ensuring proper conditions for July sales and the East region.
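The exact category rules aren’t stated above, so the sketch below uses hypothetical ones, labeled in the comments. It runs the query against an in-memory SQLite table through Python’s `sqlite3` module. The `sales` schema and sample rows are also hypothetical. The pattern to note is a `CASE` expression that labels each sale, followed by `SUM(CASE ...)` per category grouped by region.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_id INTEGER, region TEXT, sale_date TEXT, sale_amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?)",
    [
        (1, "East", "2023-07-04", 500.0),
        (2, "East", "2023-03-10", 1800.0),
        (3, "East", "2023-05-02", 900.0),
        (4, "West", "2023-02-14", 2500.0),
        (5, "West", "2023-07-20", 3000.0),
        (6, "West", "2023-09-01", 1800.0),
    ],
)

# Hypothetical rules, checked in order:
#   Promotional: any sale made in July
#   Premium: sale_amount above 2000, or above 1500 in the East region
#   Standard: everything else
QUERY = """
WITH labeled AS (
    SELECT
        region,
        sale_amount,
        CASE
            WHEN strftime('%m', sale_date) = '07' THEN 'Promotional'
            WHEN sale_amount > 2000 OR (region = 'East' AND sale_amount > 1500) THEN 'Premium'
            ELSE 'Standard'
        END AS category
    FROM sales
)
SELECT
    region,
    SUM(CASE WHEN category = 'Standard' THEN sale_amount ELSE 0 END) AS standard,
    SUM(CASE WHEN category = 'Premium' THEN sale_amount ELSE 0 END) AS premium,
    SUM(CASE WHEN category = 'Promotional' THEN sale_amount ELSE 0 END) AS promotional
FROM labeled
GROUP BY region
ORDER BY region
"""
rows = conn.execute(QUERY).fetchall()
for row in rows:
    print(row)
```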

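To count daily active users on each platform for 2020, select the platform and the date of created_at, and use COUNT(DISTINCT user_id) to count unique users. Filter the rows to 2020 using WHERE YEAR(created_at) = 2020, and group the results by platform and date using GROUP BY 1, 2. If created_at is a timestamp, truncate it to a date first (for example, DATE(created_at)); grouping by the raw timestamp would return one row per timestamp rather than one per day.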
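Below is a runnable version of the daily-active-users query, using an in-memory SQLite table through Python’s `sqlite3` module. The `events` table and its rows are hypothetical. SQLite has no `YEAR()` function, so the sketch filters with `strftime('%Y', created_at)`. It also groups by `DATE(created_at)`, so each output row represents one platform on one calendar day.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, platform TEXT, created_at TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        (1, "ios", "2020-01-01 08:00:00"),
        (1, "ios", "2020-01-01 21:30:00"),  # same user, same day: counted once
        (2, "ios", "2020-01-01 09:15:00"),
        (1, "ios", "2020-01-02 07:00:00"),
        (2, "android", "2020-01-02 10:00:00"),
        (3, "android", "2020-01-02 11:00:00"),
        (4, "web", "2019-12-31 23:59:00"),  # outside 2020: filtered out
    ],
)

QUERY = """
SELECT platform, DATE(created_at) AS day, COUNT(DISTINCT user_id) AS dau
FROM events
WHERE strftime('%Y', created_at) = '2020'
GROUP BY 1, 2
ORDER BY 1, 2
"""
rows = conn.execute(QUERY).fetchall()
for row in rows:
    print(row)
```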

Leadership / Behavioral Questions

For senior candidates, expect questions akin to senior product manager interview questions around stakeholder alignment, influence without authority, and embodying customer-obsessed leadership under pressure:

At Databricks, you’ll often work with a spectrum of stakeholders—from data scientists to C-suite leaders—each with different technical fluency. Use this question to show how you translate complex data topics like Delta Lake architecture or cost-performance tradeoffs into language each audience understands. For example, if you once struggled to explain the performance impact of compute clusters to a non-technical partner, describe how you re-framed your narrative using real-world analogies, visual data summaries, or interactive dashboards. Emphasize how that led to faster decision-making and better alignment, and how you evolved your communication toolkit from that point on.

12. How comfortable are you presenting your insights?

Databricks PMs are expected to regularly present roadmaps, OKR outcomes, or launch impact to technical and non-technical audiences. In this answer, share how you’ve delivered insights with clarity using Databricks notebooks and dashboards or BI tools such as Tableau and Looker. Detail how you prepare for different formats, whether a live all-hands, an executive briefing, or a customer webinar, and how you adjust your data story to the audience’s needs. A strong answer shows you’re adept at using SQL and Python to extract insights and equally skilled at telling a narrative that inspires action.

Databricks PMs operate in a fast-moving, enterprise-grade environment where downstream impacts of delays can ripple across multi-million-dollar deals and partner integrations. Use this question to show your maturity under pressure. For instance, walk through a time you faced a last-minute delay in a product roll-out, how you collaborated with marketing and sales to reframe external messaging, and how you prioritized partial feature delivery or early access for key customers. Highlight your cross-functional orchestration and how you relied on data to make real-time trade-offs. Post-incident, you might mention introducing better sprint forecasting or updating launch readiness frameworks.

14. Why do you want to work with us?

This is your opportunity to align with Databricks’ mission to democratize data and AI. Reference specific elements like their leadership in the Lakehouse paradigm, their open-source contributions like Apache Spark, or recent innovations in generative AI with the Data Intelligence Platform. Tie this to your personal background—for instance, your passion for building platforms that enable analysts and engineers to collaborate, or your excitement to work with customers who process petabytes of data daily. Refer to values like first-principles thinking and truth-seeking, and express how these resonate with how you approach product decisions and long-term strategy.

How to Prepare for a Databricks Product Manager Interview

Treat the Databricks product manager interview like a product launch. Build a preparation roadmap anchored in two frameworks, STAR for behavioral narratives and Jobs-to-Be-Done for product thinking, because reviewers expect structured storytelling and customer-centric insight. Rehearse these frameworks aloud, iterating until every example highlights its measurable impact and aligns with Databricks’ values of customer obsession and truth-seeking.

Next, dive deep into the Lakehouse: read the Delta Lake documentation, skim the CIDR Lakehouse paper, and prototype a notebook so you can articulate how a unified architecture reduces latency, cost, and governance friction.

During interviews, weave those insights into market narratives that show you can translate technical differentiation into business outcomes, a frequent on-site discussion theme. Build metric trees for every feature you mention—start with business objectives, cascade to leading indicators, and finish with actionable telemetry—to demonstrate Databricks’ “let the data decide” ethos. Practice defending these trees under friendly fire; ask peers to push on metric sensitivity, experiment design, and trade-offs until your reasoning feels bulletproof.
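A metric tree is a hierarchy, so it can be sketched as a small recursive data structure. The `Metric` class and the example objective, indicators, and telemetry events below are hypothetical. The structure mirrors the cascade described above, from business objective to leading indicators to telemetry.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Metric:
    name: str
    level: str  # "objective", "leading indicator", or "telemetry"
    children: list[Metric] = field(default_factory=list)


def walk(metric: Metric, depth: int = 0):
    """Yield (depth, metric) pairs from the objective down to the telemetry leaves."""
    yield depth, metric
    for child in metric.children:
        yield from walk(child, depth + 1)


# Hypothetical tree for a hypothetical SQL onboarding feature.
tree = Metric("Weekly active SQL users", "objective", [
    Metric("First-query success rate", "leading indicator", [
        Metric("query_submitted / query_failed events", "telemetry"),
    ]),
    Metric("Median time to first dashboard", "leading indicator", [
        Metric("workspace_created and dashboard_created timestamps", "telemetry"),
    ]),
])

for depth, metric in walk(tree):
    print("  " * depth + f"{metric.name} [{metric.level}]")
```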

Simulate mock cases weekly. Time-box each product sense prompt to 30 minutes, set aside 15 minutes to outline, then present a crisp narrative that balances problem framing, prioritization, and launch sequencing. Record sessions to refine pacing and slide craft, mirroring the take-home presentation panel.

Experienced candidates should drill into senior product manager interview questions focusing on roadmap bets, multi-cloud strategy, and organization-level influence to show readiness for Staff-level scope and above. Finally, debrief after every rehearsal, logging gaps and next steps; iterative refinement is how great PMs ship and how you’ll earn the offer.

FAQs

Does the Databricks PM Interview Differ for Senior vs. Mid-Level Roles?

Yes, and quite significantly. While the core structure of the interview remains consistent, senior product manager interview questions at Databricks place much more emphasis on product vision, team leadership, and long-range strategy. Senior candidates are expected to drive cross-org initiatives, influence executive stakeholders, and define multi-quarter OKRs tied to company-level goals. Mid-level PMs, on the other hand, focus more on execution, iteration, and end-to-end ownership within a defined product scope. Both roles require strong technical fluency and customer empathy, but senior roles push harder on systems-level thinking, managing ambiguity, and mentorship. Tailor your preparation to the expectations for the role you are targeting.

What Skills Does Databricks Test Most in a PM Interview?

Throughout the Databricks PM interview, you are tested on your ability to think from first principles, break down complex systems, and build products that solve real customer pain. Databricks looks for candidates who can seamlessly combine technical depth with strategic clarity. This means you should be ready to dive into data architecture concepts like Delta Lake or Spark, explain trade-offs using performance metrics, and show evidence of making product decisions that scale. In addition, the company highly values collaboration and truth-seeking, so expect rigorous questioning around cross-functional teamwork, using data to resolve conflicts, and continuously raising the product quality bar.

Where Can I Practice Databricks Product Manager Interview Questions?

To get the most out of your prep, consider reviewing real-world Databricks product manager interview questions on Interview Query’s PM question bank. You’ll find behavioral prompts, case studies, and technical questions modeled after actual Databricks interviews. Practicing these questions helps you get comfortable articulating your thought process, structuring complex answers, and demonstrating how your experience fits the unique demands of the role. It’s especially helpful for preparing for the case study and cross-functional interviews, where clarity and structure matter as much as the content itself.

Conclusion

The Databricks product manager interview is more than just a series of questions—it’s a high-stakes opportunity to demonstrate how you think, lead, and build in one of the fastest-moving data companies in the world. If you’re just beginning your prep, start with our Databricks PM learning path to build a foundation in technical and strategic fluency. To dive deeper into actual prompts, visit the Databricks A/B Testing interview question bank for product sense, data, and behavioral examples. And if you need real-world inspiration, check out Dania’s success story to see how focused, data-driven preparation leads to an offer.