Waymo Data Engineer Interview Guide (2025) – Process, Questions, Prep Tips

Introduction

Waymo is pioneering the future of transportation by deploying fully autonomous vehicles at scale. Originally launched in 2009 as Google’s Self-Driving Car Project, Waymo has matured into a $45 billion powerhouse within Alphabet’s portfolio. This growth is not just financial. The company has expanded rapidly from 800 to over 1,500 employees in just a year. With this scale-up, the data engineering function has become a backbone of operations. Every hour, each vehicle contributes up to 18TB of sensor data—from lidar, radar, and high-definition cameras—which must be cleaned, transformed, and analyzed. As Waymo aims to operate in 10 cities by the end of 2025 while managing 250,000 weekly rides, data engineers are essential to enabling both operational growth and technological innovation.

Role Overview & Culture

The Waymo data engineer role centers on designing and operating real-time telemetry pipelines that ingest, transform, and serve vast volumes of sensor data—up to 40Gbit/s per vehicle. You will manage big-data ETL workflows that support real-time analytics and historical reporting, using distributed tools like Apache Spark and BigQuery. These pipelines power everything from internal dashboards for fleet health to regulatory compliance metrics. You will also build safety-critical dashboards that must be both precise and resilient, as they directly inform decisions that affect passenger safety and service uptime.
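To put the 40Gbit/s figure in storage terms, here is a small conversion helper. It assumes the rate is sustained for a full hour and uses decimal units (1 TB = 10^12 bytes). Under those assumptions it lands on the same "up to 18TB per hour" figure quoted in the introduction.

```python
def gbit_per_s_to_tb_per_hour(gbit_per_s: float) -> float:
    """Convert a sustained link rate in Gbit/s into decimal terabytes per hour."""
    bytes_per_second = gbit_per_s * 1e9 / 8   # 8 bits per byte
    bytes_per_hour = bytes_per_second * 3600  # seconds in one hour
    return bytes_per_hour / 1e12              # 1 TB = 10^12 bytes


print(gbit_per_s_to_tb_per_hour(40))  # 18.0
```

Multiplying the result by fleet size gives a rough upper bound on raw ingest volume before any filtering or compression.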

You will collaborate with teams spanning ML, mapping, and core infrastructure. ML teams rely on your data systems to train perception and prediction models. Mapping teams depend on accurate sensor-to-map alignment, and the infrastructure teams need your help scaling systems as new cities come online. All of this is embedded within Waymo’s “Safety is Urgent” culture. Your systems must deliver fault-tolerant, verified data. At Waymo, iteration is fast but never careless. The organization combines speed and discipline, placing you in an environment where your work directly supports one of the safest autonomous fleets in the world.

Why This Role at Waymo?

You will be working at the heart of autonomous driving, where every line of pipeline code has a real-world consequence. Unlike typical backend roles, your work will influence mission-critical systems that keep passengers safe. Waymo’s fleet generates petabytes of data monthly, and your pipelines must not only scale but also meet the demands of real-time safety and operational oversight. This is your chance to own architecture that supports 250,000 weekly rides across multiple cities, all while improving the safety of a fleet that has already shown 88% fewer serious injuries than human driving.

Beyond the mission, Waymo provides industry-leading compensation and benefits. H-1B filings in 2025 show data engineers earning base salaries from $170,000 to over $400,000, with generous equity grants and Alphabet-level perks. These include comprehensive health insurance, 24 weeks of paid parental leave, education reimbursements, and access to Google facilities. You will also receive free autonomous rides and the chance to work on some of the most advanced real-world data systems on the planet.

Waymo’s 2025 roadmap means your career will scale alongside the business. With expansion into 10 cities and a goal of 25 to 50 million rides, the engineering challenges are multiplying. Whether you want to move into ML infrastructure, lead distributed systems efforts, or contribute to open-source and academic research, this role opens doors. If you thrive on solving hard, safety-critical problems at massive scale, then Waymo is one of the few places where you can make an immediate and long-term impact in a field that is actively redefining transportation.

What Is the Interview Process Like for a Data Engineer Role at Waymo?

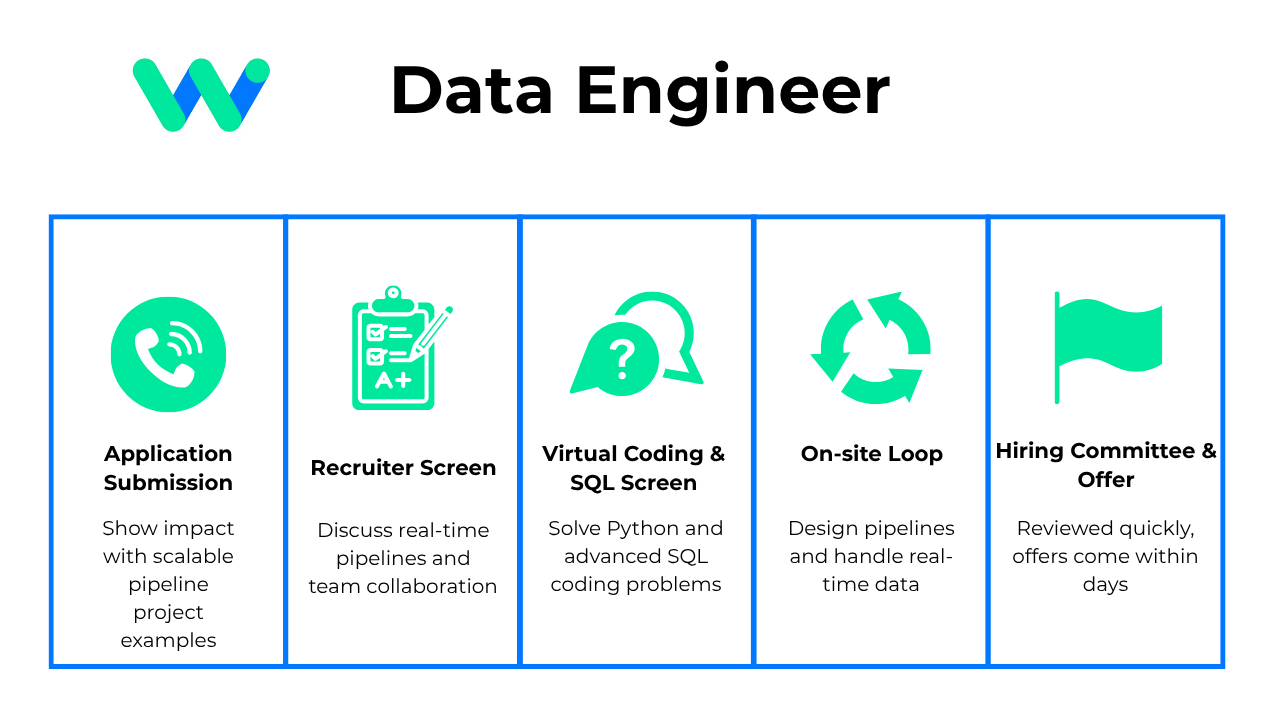

The Waymo data engineer interview typically unfolds in five phases, designed to rigorously evaluate your technical depth, problem-solving abilities, and alignment with the company’s safety-first mission. From your first screen to the final offer, the process can take 8 to 10 weeks, and high-performing candidates are often fast-tracked through Waymo’s hiring committee within 48 hours. Here is how it goes:

- Application Submission

- Recruiter Screen

- Virtual Coding & SQL Screen

- On‑site Loop (pipeline design, Python coding, behavioral)

- Hiring Committee & Offer

Application Submission

Your Waymo journey begins with an application submission through the official careers page or via a recruiter referral. Recruiters look for specific skills like Python, SQL, Apache Spark, and Google Cloud Platform, but they also look for evidence that your work had measurable impact at scale.

For instance, describing how you optimized an ETL pipeline to cut processing time by 60% on a multi-terabyte dataset demonstrates both relevance and scope.

Waymo receives thousands of applications weekly, so precise articulation of outcomes is key. Avoid vague phrases like “worked on data” and instead show how your work delivered measurable results. If you have built data warehousing solutions that supported cross-team reporting or optimized distributed jobs across clusters, be explicit. Your attention to detail in the resume mirrors the engineering precision Waymo values.

Recruiter Screen

This 30- to 45-minute conversation is your first direct interaction with Waymo. Your recruiter will explore your experience and enthusiasm for working with petabyte-scale autonomous vehicle data. You should be ready to explain how you have designed or scaled data pipelines, especially those processing real-time streams or safety-critical outputs. The recruiter will gauge your understanding of Waymo’s data scope, where one car can produce up to 1.8TB per hour, and your motivation to contribute to safety-first engineering.

Expect questions about your collaboration style, timeline expectations, and basic compensation discussions. This is also your chance to express curiosity about Waymo’s long-term vision, their ML infrastructure, and how you might fit into a team building the future of transportation.

Virtual Coding & SQL Screen

You will then face one or two technical interviews focused on core coding and SQL proficiency. These 45- to 60-minute sessions usually include medium-level questions involving arrays, strings, dynamic programming, and graph traversal algorithms. The SQL portion goes beyond basic SELECTs.

You will need to write multi-join queries, optimize aggregations, and reason through partitioning strategies in datasets exceeding billions of rows. Some sessions are conducted via CoderPad, where you solve problems live while articulating your thought process. You might also be asked to sketch out scalable schema designs or handle large data transformations. Interviewers are keen to see how you approach performance bottlenecks and whether you can maintain data quality under tight operational constraints common in real-time autonomous fleet systems.

On‑site Loop (pipeline design, Python coding, behavioral)

The virtual on-site loop spans four to five interviews, each lasting about 45 minutes. One critical interview tests your ability to architect scalable data pipelines for handling telemetry from thousands of vehicles in near real time.

You may be asked to design systems that ingest, validate, and store 40Gbit/s of incoming sensor data per vehicle. You will also face two Python rounds that include problems in BFS/DFS, matrix transformations, and performance-sensitive string manipulation.

These questions often emulate real-world fleet data processing tasks. Behavioral interviews test how you collaborate across teams like ML, Mapping, and Infrastructure. Expect questions about technical conflict resolution, iterating under pressure, and contributing to safety-critical systems. Some candidates also encounter a system design round centered on ML data infrastructure or simulation data APIs.

Hiring Committee & Offer

Once interviews are complete, your candidate packet is reviewed by Waymo’s hiring committee. Each member independently evaluates technical feedback, scoring sheets, and recruiter notes. Final decisions often occur within one to two weeks, though strong candidates can receive offers in 48 to 72 hours. Offers are comprehensive and competitive, reflecting Waymo’s Alphabet-backed compensation strategy. Relocation assistance and flexible start dates are typically included.

Even if you are not selected, Waymo often provides constructive feedback and encourages re-application. If you succeed, expect thorough onboarding, team introductions, and immediate exposure to some of the most advanced data systems in autonomous vehicle engineering.

What Questions Are Asked in a Waymo Data Engineer Interview?

Waymo’s interview process is designed to rigorously assess your technical and collaborative skills, with questions that reflect the real-world systems and safety-first infrastructure behind autonomous driving at scale.

Python / SQL Coding Questions

Expect a mix of Python and SQL questions that test your ability to manipulate data, optimize queries, and reason through real-world edge cases in code-heavy environments:

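1. Given a dataframe of students, return the rows where the grade is above 90 and the favorite color is red or green

To solve this, filter the dataframe by grade using (students_df['grade'] > 90) and by favorite color using students_df['favorite_color'].isin(['red', 'green']). Combine the two conditions with the & operator, wrapping each comparison in parentheses, and use the resulting boolean mask to return the matching rows.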
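A minimal pandas sketch of that filter. The function name filter_students and the sample rows are hypothetical; only the column names grade and favorite_color come from the question. The parentheses matter: & binds more tightly than >, so without them Python evaluates 90 & ... first, which silently returns wrong rows or raises an ambiguous-truth-value error.

```python
import pandas as pd


def filter_students(students_df: pd.DataFrame) -> pd.DataFrame:
    """Return students with grade > 90 whose favorite color is red or green."""
    # Each condition is parenthesized because & has higher precedence than >.
    mask = (students_df["grade"] > 90) & (
        students_df["favorite_color"].isin(["red", "green"])
    )
    return students_df[mask]


# Hypothetical sample data for illustration.
students_df = pd.DataFrame(
    {
        "name": ["Ana", "Ben", "Cy", "Dee"],
        "grade": [95, 88, 92, 99],
        "favorite_color": ["red", "green", "blue", "green"],
    }
)
print(filter_students(students_df)["name"].tolist())  # ['Ana', 'Dee']
```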

2. Given a list of integers, write a function to generate a histogram with x bins

To solve this, calculate the range of each bin by dividing the range of the dataset by x. Iterate through the dataset, determine the bin for each value, and count occurrences. Finally, construct a dictionary with bin ranges as keys and counts as values, excluding bins with zero counts.
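A dependency-free sketch of that approach. It assumes equal-width, half-open bins [start, end), with the maximum value folded into the last bin so it is not dropped; the function name and the (start, end) tuple keys are illustrative choices rather than a required output format.

```python
def histogram(values: list[int], x: int) -> dict[tuple[float, float], int]:
    """Count values into x equal-width bins, omitting bins with zero counts."""
    if not values or x <= 0:
        return {}
    low, high = min(values), max(values)
    width = (high - low) / x
    counts: dict[int, int] = {}
    for v in values:
        # Bin index for v; width == 0 means every value is identical.
        idx = int((v - low) / width) if width else 0
        idx = min(idx, x - 1)  # the maximum value belongs to the last bin
        counts[idx] = counts.get(idx, 0) + 1
    # Keys are (bin_start, bin_end); empty bins never appear in counts.
    return {
        (low + i * width, low + (i + 1) * width): c
        for i, c in sorted(counts.items())
    }


print(histogram([1, 2, 2, 3, 7, 9], 4))
# {(1.0, 3.0): 3, (3.0, 5.0): 1, (7.0, 9.0): 2}
```

The bin for (5.0, 7.0) is absent because no value falls in it, matching the "exclude zero-count bins" requirement.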

3. Find Bigrams

To find bigrams in a sentence, convert the input string to lowercase for consistency, then split it into words using .split(). Iterate through the list of words, pairing each word with the next one as a tuple, and return the list of bigrams.
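A short sketch of the same idea. Lowercasing happens before splitting, and zip pairs each word with its successor, which is equivalent to the explicit index loop described above; the function name is illustrative.

```python
def find_bigrams(sentence: str) -> list[tuple[str, str]]:
    """Return consecutive word pairs, lowercased for consistency."""
    words = sentence.lower().split()
    # zip stops at the shorter input, so the final word starts no pair of its own.
    return list(zip(words, words[1:]))


print(find_bigrams("Have free hours and love children"))
# [('have', 'free'), ('free', 'hours'), ('hours', 'and'), ('and', 'love'), ('love', 'children')]
```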

4. Write a query to find the top five paired products and their names

To find paired products often purchased together, join the transactions and products tables to associate transactions with product names. Use a self-join on the resulting table to identify pairs of products purchased by the same user at the same time. Filter out duplicate and same-product pairs by ensuring the first product name is alphabetically less than the second. Finally, group by product pairs, count occurrences, and order by count to get the top five pairs.
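The query below sketches that approach and runs it with Python's built-in sqlite3 module against a tiny in-memory dataset. The schema (transactions with user_id, created_at, product_id; products with id, name) is an assumption about the interview's tables, not a given, and the extra name-based ORDER BY keys are only there to make ties deterministic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE transactions (id INTEGER PRIMARY KEY, user_id INTEGER,
                           created_at TEXT, product_id INTEGER);
INSERT INTO products VALUES (1, 'apple'), (2, 'bread'), (3, 'cheese'), (4, 'dates');
INSERT INTO transactions (user_id, created_at, product_id) VALUES
  (1, '2025-01-01 09:00', 1), (1, '2025-01-01 09:00', 2),
  (2, '2025-01-02 10:00', 1), (2, '2025-01-02 10:00', 2), (2, '2025-01-02 10:00', 3),
  (3, '2025-01-03 11:00', 2), (3, '2025-01-03 11:00', 3),
  (1, '2025-01-04 12:00', 4);
""")

TOP_PAIRS = """
WITH purchases AS (              -- attach product names to each transaction
    SELECT t.user_id, t.created_at, p.name
    FROM transactions t
    JOIN products p ON t.product_id = p.id
)
SELECT a.name AS p1, b.name AS p2, COUNT(*) AS qty
FROM purchases a
JOIN purchases b                 -- self-join: same user, same purchase time
  ON a.user_id = b.user_id
 AND a.created_at = b.created_at
 AND a.name < b.name             -- drops same-product and mirrored pairs
GROUP BY a.name, b.name
ORDER BY qty DESC, p1, p2
LIMIT 5;
"""

rows = conn.execute(TOP_PAIRS).fetchall()
print(rows)  # [('apple', 'bread', 2), ('bread', 'cheese', 2), ('apple', 'cheese', 1)]
```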

5. Given a table of bank transactions, write a query to get the last transaction for each day.

To solve this, use the ROW_NUMBER() window function with PARTITION BY DATE(created_at) and ORDER BY created_at DESC to rank transactions within each day. Because window-function results cannot be filtered in the same query's WHERE clause, wrap this in a subquery or CTE and keep the rows where the row number equals 1; those are the last transactions of each day. Alternatively, use a subquery with MAX(created_at) grouped by date to identify the latest transaction for each day.
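A runnable sketch of the ROW_NUMBER() approach using Python's sqlite3 module (window functions need SQLite 3.25 or newer). The table name bank_transactions and its columns are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE bank_transactions (id INTEGER PRIMARY KEY, created_at TEXT,
                                transaction_value INTEGER);
INSERT INTO bank_transactions VALUES
  (1, '2025-01-01 09:00:00', 100),
  (2, '2025-01-01 17:30:00', -40),
  (3, '2025-01-02 08:15:00', 250),
  (4, '2025-01-02 12:00:00', 75);
""")

LAST_PER_DAY = """
SELECT id, created_at, transaction_value
FROM (
    SELECT *,
           ROW_NUMBER() OVER (
               PARTITION BY DATE(created_at)   -- restart numbering each day
               ORDER BY created_at DESC        -- latest transaction gets 1
           ) AS rn
    FROM bank_transactions
) AS ranked
WHERE rn = 1                                   -- filter in the outer query
ORDER BY created_at;
"""

rows = conn.execute(LAST_PER_DAY).fetchall()
print(rows)  # [(2, '2025-01-01 17:30:00', -40), (4, '2025-01-02 12:00:00', 75)]
```

ROW_NUMBER() returns exactly one row per day even when two transactions share a timestamp; the MAX(created_at) variant would return both in that case.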

6. Get the top 3 highest employee salaries by department

To solve this, use the RANK() function with a PARTITION BY clause to rank employees’ salaries within each department. Then, join the employees and departments tables to include department names, and use the CONCAT() function to combine first and last names into a single column. Finally, filter for ranks less than 4 and sort the results by department name (ascending) and salary (descending).
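A sketch of that query run through Python's sqlite3 module, with a hypothetical employees/departments schema. SQLite's || operator stands in for CONCAT(), which dialects such as MySQL and BigQuery provide. Because RANK() gives tied salaries the same rank, a department can return more than three rows when there are ties.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE employees (id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT,
                        salary INTEGER, department_id INTEGER);
INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
INSERT INTO employees (first_name, last_name, salary, department_id) VALUES
  ('Ann', 'Lee', 200, 1), ('Bo', 'Kim', 180, 1), ('Cal', 'Roy', 150, 1),
  ('Dee', 'Fox', 120, 1), ('Eve', 'Ng', 90, 2), ('Flo', 'Ito', 85, 2);
""")

TOP_THREE = """
WITH ranked AS (
    SELECT e.first_name || ' ' || e.last_name AS employee_name,  -- CONCAT equivalent
           d.name AS department_name,
           e.salary,
           RANK() OVER (PARTITION BY e.department_id
                        ORDER BY e.salary DESC) AS salary_rank
    FROM employees e
    JOIN departments d ON e.department_id = d.id
)
SELECT employee_name, department_name, salary
FROM ranked
WHERE salary_rank < 4
ORDER BY department_name ASC, salary DESC;
"""

rows = conn.execute(TOP_THREE).fetchall()
for row in rows:
    print(row)
```

Departments with fewer than three employees, like Sales here, simply return all of their rows.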

Data Pipeline & System Design Questions

Waymo’s system design interviews challenge you to build data pipelines that handle event-driven ingestion, streaming reliability, and latency-sensitive infrastructure under massive scale:

7. How would you design the YouTube video recommendation system?

To design a YouTube recommendation system, consider user engagement metrics (e.g., watch time, likes, shares), content similarity, and collaborative filtering techniques. Incorporate personalization by analyzing user history and preferences, and ensure diversity in recommendations to avoid echo chambers. Testing and validating the model with A/B testing and user feedback is crucial for continuous improvement.
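To make the collaborative-filtering piece concrete, here is a toy item-to-item sketch in plain Python. It scores unseen videos by cosine similarity between the sets of users who watched each video. The data, function names, and the choice of cosine over co-viewing sets are illustrative assumptions, and it leaves out the engagement weighting, diversity, and A/B testing layers a real system would need.

```python
from collections import defaultdict
from math import sqrt


def item_similarities(history: dict[str, set[str]]) -> dict[tuple[str, str], float]:
    """Cosine similarity between videos, based on which users watched them."""
    viewers: dict[str, set[str]] = defaultdict(set)
    for user, videos in history.items():
        for video in videos:
            viewers[video].add(user)
    sims: dict[tuple[str, str], float] = {}
    items = sorted(viewers)
    for i, a in enumerate(items):
        for b in items[i + 1:]:
            overlap = len(viewers[a] & viewers[b])
            if overlap:
                score = overlap / sqrt(len(viewers[a]) * len(viewers[b]))
                sims[(a, b)] = sims[(b, a)] = score  # store both directions
    return sims


def recommend(user: str, history: dict[str, set[str]], k: int = 3) -> list[str]:
    """Rank unseen videos by summed similarity to videos the user has watched."""
    seen = history[user]
    scores: dict[str, float] = defaultdict(float)
    for (a, b), score in item_similarities(history).items():
        if a in seen and b not in seen:
            scores[b] += score
    return sorted(scores, key=lambda v: (-scores[v], v))[:k]


history = {"u1": {"a", "b"}, "u2": {"a", "b", "c"}, "u3": {"b", "c"}, "u4": {"a", "d"}}
print(recommend("u1", history))  # ['c', 'd']
```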

8. How would you build a data pipeline for hourly, daily, and weekly active user analytics?

To build this pipeline, you can either run queries directly on the data lake for each dashboard refresh (a “local” solution) or pre-aggregate the data and store it in a database for faster querying (a “unified” solution). The unified approach involves creating a reporting table with pre-aggregated metrics using SQL queries and updating it hourly with an orchestrator like Airflow. This ensures better scalability and performance while reducing query load.
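A minimal sketch of the unified approach, using sqlite3 as a stand-in for the warehouse. The table names, column names, and the choice to recompute every period on each run are assumptions; in production an orchestrator such as Airflow would trigger the refresh hourly, and you would typically recompute only recent periods.

```python
import sqlite3

# strftime pattern per reporting grain (assumed names; %W weeks start on Monday).
GRAINS = {"hour": "%Y-%m-%d %H:00", "day": "%Y-%m-%d", "week": "%Y-W%W"}


def create_tables(conn: sqlite3.Connection) -> None:
    conn.executescript("""
    CREATE TABLE events (user_id INTEGER, event_ts TEXT);
    CREATE TABLE active_users (
        grain TEXT, period TEXT, users INTEGER,
        PRIMARY KEY (grain, period)
    );
    """)


def refresh_active_users(conn: sqlite3.Connection) -> None:
    """Rebuild the pre-aggregated reporting table; safe to rerun every hour."""
    for grain, fmt in GRAINS.items():
        conn.execute(
            """
            INSERT OR REPLACE INTO active_users (grain, period, users)
            SELECT ?, period, COUNT(DISTINCT user_id)
            FROM (SELECT user_id, strftime(?, event_ts) AS period FROM events)
            GROUP BY period
            """,
            (grain, fmt),
        )
    conn.commit()


conn = sqlite3.connect(":memory:")
create_tables(conn)
conn.executemany("INSERT INTO events VALUES (?, ?)", [
    (1, "2025-01-06 09:15:00"), (2, "2025-01-06 09:40:00"),
    (1, "2025-01-06 10:05:00"), (3, "2025-01-07 08:00:00"),
    (1, "2025-01-07 08:30:00"),
])
refresh_active_users(conn)
refresh_active_users(conn)  # rerun: INSERT OR REPLACE keeps one row per (grain, period)
print(conn.execute(
    "SELECT grain, period, users FROM active_users WHERE grain = 'day' ORDER BY period"
).fetchall())
# [('day', '2025-01-06', 2), ('day', '2025-01-07', 2)]
```

Dashboards then read a handful of pre-computed rows instead of scanning raw events on every refresh.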

9. How would you design a data pipeline that prepares rental, weather, and event data for a demand-prediction model?

To design this pipeline, start with data ingestion, using an orchestrator like Airflow to pull daily updates from sources into a raw data lake. Use Spark or dbt for cleaning and normalizing data, ensuring schema consistency and handling missing values. Perform feature engineering to aggregate rental counts, weather data, and event schedules into a feature-rich dataset. Store the final output in an analytics warehouse for batch ML training and online inference, ensuring scalability, data validation, and monitoring for continuous improvement.

10. How would you architect an end-to-end CSV upload and ingestion system?

To design a robust CSV ingestion pipeline, use a presigned URL workflow for file uploads to object storage (e.g., S3). Implement a distributed queue to trigger parsing and validation jobs, which process files in a memory-efficient streaming manner. Store validated data in a columnar data warehouse for fast querying, and expose it via APIs with tenant isolation and caching for analytics. Ensure observability, scalability, and failure handling with metrics, retries, and idempotent processing.
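The parsing, validation, and idempotency steps can be sketched with the standard csv module. Everything here is hypothetical: the column schema, the Ingestor class, and the in-memory lists standing in for the queue consumer, the warehouse, and an error table. Idempotency is keyed on the object-storage key, so a redelivered queue message becomes a no-op.

```python
import csv
import io
from typing import Iterator, Optional, TextIO, Tuple

# Hypothetical schema for illustration; a real system might load it per tenant.
REQUIRED_COLUMNS = ("vehicle_id", "ts", "speed_mps")


def validate_rows(stream: TextIO) -> Iterator[Tuple[Optional[dict], Optional[str]]]:
    """Parse CSV lazily, yielding (row, None) for valid rows, (None, error) otherwise."""
    reader = csv.DictReader(stream)
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        raise ValueError(f"missing columns: {missing}")  # reject the whole file
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        try:
            if not row["vehicle_id"]:
                raise ValueError("empty vehicle_id")
            parsed = {"vehicle_id": row["vehicle_id"], "ts": row["ts"],
                      "speed_mps": float(row["speed_mps"])}
        except (TypeError, ValueError) as exc:  # TypeError covers short rows (None)
            yield None, f"line {line_no}: {exc}"
            continue
        yield parsed, None


class Ingestor:
    """Consumes 'file uploaded' messages; processing is idempotent per object key."""

    def __init__(self) -> None:
        self.processed_keys: set = set()
        self.warehouse: list = []  # stand-in for a columnar warehouse table
        self.errors: list = []     # stand-in for an error/quarantine table

    def handle(self, object_key: str, stream: TextIO) -> bool:
        if object_key in self.processed_keys:
            return False  # redelivered message: skip instead of double-loading
        batch = []
        for row, error in validate_rows(stream):
            if error is None:
                batch.append(row)
            else:
                self.errors.append(f"{object_key} {error}")
        # Load only after the whole file parses; a real loader would stream
        # chunks into a staging table and publish it atomically.
        self.warehouse.extend(batch)
        self.processed_keys.add(object_key)
        return True


data = "vehicle_id,ts,speed_mps\nv1,2025-01-01T00:00:00Z,12.5\nv2,2025-01-01T00:00:01Z,abc\n"
ingestor = Ingestor()
print(ingestor.handle("tenant-a/upload-1.csv", io.StringIO(data)))  # True
print(ingestor.handle("tenant-a/upload-1.csv", io.StringIO(data)))  # False
print(len(ingestor.warehouse), len(ingestor.errors))  # 1 1
```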

11. How would you collect and aggregate unstructured video data?

To collect and aggregate unstructured video data, start with primary metadata collection and indexing, which involves automating the extraction of basic metadata like author, location, and format. Next, use user-generated content tagging, either manually or scaled with machine learning, to enrich the dataset. Finally, employ binary-level collection for detailed analysis, such as color and audio properties, while balancing resource costs. Automated content analysis using machine learning techniques like image recognition and NLP can further enhance the process.

Behavioral & Collaboration Questions

These questions explore how you align with Waymo’s safety-first culture and evaluate your ability to collaborate across ML, infrastructure, and operations in high-stakes engineering environments:

12. Why Do You Want to Work With Us?

As a data engineer at Waymo, your motivation should reflect the scale and mission of transforming mobility through autonomous technology. You might express enthusiasm for working on real-time telemetry pipelines that process 1.8TB of data per vehicle per hour, or your alignment with Waymo’s safety-first principles. Highlighting your interest in building infrastructure that directly supports ML model reliability and public safety reinforces your fit for a company solving some of the most complex transportation challenges in the world.

13. How comfortable are you presenting your insights?

Waymo values data engineers who can clearly communicate with ML researchers, mapping experts, and operations leaders across disciplines. You should describe your ability to distill complex infrastructure performance metrics or pipeline design trade-offs using tools like Looker or custom dashboards. Sharing a recent example—perhaps explaining latency improvements in a real-time data stream to a non-technical audience—demonstrates your confidence and adaptability in high-stakes, cross-functional settings.

14. What do you tell an interviewer when they ask you what your strengths and weaknesses are?

A strong answer might involve highlighting a strength like building scalable ETL systems under tight latency budgets, supported by a STAR example involving terabyte-scale processing. For a weakness, you could mention a tendency to over-index on pipeline perfection, followed by your strategy of incorporating early stakeholder feedback to prioritize business value. This response shows not only technical depth but also the self-awareness and iterative mindset that Waymo encourages in its engineers.

15. How would you convey insights and the methods you use to a non-technical audience?

At Waymo, you’ll frequently present insights to stakeholders like safety teams or city operations leaders who may not have deep technical backgrounds. Start by framing your explanation around how the data impacts fleet safety or efficiency, then use analogies or visualizations to clarify complex topics like stream processing latency or sensor fusion quality checks. For example, explaining how a data lag in telemetry could delay hazard detection makes your technical point tangible and mission relevant.

How to Prepare for a Data Engineer Role at Waymo

Waymo is a leader in autonomous vehicle technology, so preparing for a data engineer interview here means gearing up for cutting-edge challenges. You’ll be expected to build and maintain robust data pipelines for self-driving systems and machine learning, requiring strong Python and SQL skills alongside big data tools and Google Cloud experience. The interview process is competitive – with multiple rounds of coding and design – but with the right preparation strategy, you can showcase your expertise and passion. The tips below will guide you through focused practice in key areas so you walk into your Waymo interview confident, technically sharp, and ready to impress.

Drill Python + SQL Challenges

You want to demonstrate rock-solid coding fundamentals in Python and SQL, the bread and butter of data engineering. Python is mentioned in about 70% of data engineer job postings, with SQL close behind at 69%, confirming how essential these skills are in 2025, and Waymo’s own technical screens focus heavily on coding in these languages. To excel, work through Python and SQL interview questions for data engineer roles and solve them under timed conditions to sharpen your problem-solving speed and accuracy. Leverage resources like the Interview Query question bank for realistic exercises; you’ll build confidence as you see your proficiency grow. Remember, every challenge you crack in practice is one fewer surprise in the actual interview.

Build Mini Streaming Pipeline Project

Nothing shows hands-on ability like a project, so consider building a mini streaming data pipeline to showcase your skills. Real-time data processing is becoming the norm, with Apache Kafka (a popular streaming platform) appearing in about 24% of data engineer job postings. In industry, Kafka is ubiquitous: over 80% of Fortune 100 companies use it for streaming, so streaming expertise will set you apart. Design a pipeline that ingests data through Kafka and lands it in a warehouse like BigQuery for analytics. Waymo values experience with Google Cloud tools, so a BigQuery + Kafka project aligns well with their tech stack. By discussing this project, you demonstrate end-to-end data engineering competence, from handling high-throughput sensor data to managing storage and analytics, and show that you can build scalable systems like those powering Waymo’s self-driving technology.

Practice Pipeline‑Design Whiteboards

Be ready to whiteboard the architecture of a data pipeline: data engineering interviews often go beyond code and ask you to sketch out solutions for data movement and processing. Pipeline design topics, from scalability to schema evolution, are frequently cited among top interview questions. A notable example is “How do you handle back-pressure in a data pipeline?”, a question probing whether you can manage data flow when a consumer lags behind a fast producer. In your answer, you’d explain techniques like flow control and buffering that keep the producer from overwhelming the consumer. Practicing these scenarios will hone your ability to communicate a clear design under pressure. As you sketch on the whiteboard, talk through your assumptions, how you’d ensure reliability (error handling, recovery), and how you’d handle scale (back-pressure, fault tolerance). This shows the interviewer that you can thoughtfully design the kind of resilient data architectures Waymo relies on.
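A minimal single-process sketch of back-pressure through a bounded buffer, using Python's standard queue and threading modules. The fast producer blocks on put() whenever the buffer already holds capacity items, so it is throttled to the slow consumer's pace instead of growing memory without limit. Distributed systems apply the same idea with bounded buffers between stages or with consumer-driven pull, as Kafka consumers use; the names and sizes here are illustrative.

```python
import queue
import threading
import time

SENTINEL = None  # marks the end of the stream


def producer(buffer: queue.Queue, n_events: int, peak: list) -> None:
    for event_id in range(n_events):
        buffer.put(event_id)  # blocks while the buffer is full: the back-pressure
        peak[0] = max(peak[0], buffer.qsize())
    buffer.put(SENTINEL)


def consumer(buffer: queue.Queue, sink: list) -> None:
    while True:
        event = buffer.get()
        if event is SENTINEL:
            break
        time.sleep(0.001)  # simulate a slow downstream write
        sink.append(event)


def run_pipeline(n_events: int = 50, capacity: int = 5):
    buffer: queue.Queue = queue.Queue(maxsize=capacity)  # bounded buffer
    sink: list = []
    peak = [0]  # highest buffer depth the producer observed
    threads = [
        threading.Thread(target=producer, args=(buffer, n_events, peak)),
        threading.Thread(target=consumer, args=(buffer, sink)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sink, peak[0]


sink, peak = run_pipeline()
print(len(sink), peak <= 5)  # 50 True
```

The buffer depth never exceeds its capacity no matter how fast the producer runs, and every event still arrives in order.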

Mock Interviews & Peer Feedback

Technical know-how isn’t enough; you also need poise and clarity under interview conditions. One great way to build that confidence is through mock interviews with peers or mentors. Try to simulate the real Waymo interview experience: for example, the onsite technical rounds last about 45 minutes each, so practice answering questions and solving problems in 45-minute sessions to get used to the pace. Use platforms like Interview Query to find realistic data engineering questions and AI tools for practice. Don’t skip the feedback step, because an outside perspective can reveal habits or gaps you might miss. This practice pays off: candidates who undergo structured mock interviews are 36% more likely to excel in the real thing. So treat each mock as a learning opportunity. You’ll refine your communication, fix any weak spots, and reduce anxiety. By the time you face the actual Waymo panel, you’ll feel as if you’ve done it before, and you’ll be ready to deliver your answers with calm self-assurance.

FAQs

What Is the Average Salary for a Waymo Data Engineer?

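Compensation varies by level and location. 2025 H-1B filings show Waymo data engineers earning base salaries from $170,000 to over $400,000, with generous equity grants on top of base pay.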

How Long Does the Waymo Data Engineer Interview Take?

The Waymo data engineer interview process typically takes four to six weeks from application to offer. Factors that influence timing include how quickly you can schedule each interview round and the availability of the interview panel and hiring committee. To avoid delays, keep your calendar flexible for virtual onsite rounds and respond to recruiter messages promptly. Some candidates report that once the final onsite loop is complete, they receive an offer decision within 48 to 72 hours. On the other hand, internal approvals for offer letters can extend the timeline slightly, especially for roles requiring relocation or special clearance. Staying engaged and communicative with your recruiter can often help speed things up.

Where Can I Read Discussion Posts on Waymo DE Roles?

To read first-hand experiences, tips, and technical breakdowns from actual candidates, visit the Interview Query community forums. You can explore threads tagged under Waymo – Data Engineer, which include user-submitted insights on interview questions, interview difficulty ratings, and even compensation data. These discussion posts are a valuable way to benchmark your own prep strategy and get realistic expectations about the process. Engaging with this content can help you anticipate technical areas of focus and behavioral trends specific to Waymo’s interview format.

Are There Job Postings for Waymo Data Engineer Roles on Interview Query?

Yes, Interview Query regularly features open roles at Waymo on its curated job board. You can browse current listings, set alerts, and apply directly by visiting the Waymo Data Engineer Jobs on Interview Query page. It’s a great resource for staying updated on new openings, especially for specialized roles in telemetry, real-time analytics, and ML infrastructure.

Conclusion

Landing a Waymo data engineer role means preparing for challenges at a scale few companies can match. To succeed, start with a structured foundation using the Interview Query data engineer learning path, which covers everything from Python to real-time systems. For inspiration, read how Jeffrey Li succeeded in landing his offer through persistence and targeted prep. Once you’re ready to drill questions, check out the complete data engineer questions collection tailored for top tech companies. With rigorous preparation and focused practice, you’ll be ready to build the next generation of data systems powering safe and scalable autonomous mobility.