Walmart Data Engineer Interview Guide: Questions, Process, and Preparation

Introduction

If you’re preparing for a Walmart data engineer interview in 2025, you’re stepping into a role that sits at the heart of one of the world’s most dynamic retail transformations. Walmart is hiring data engineers to power its aggressive growth strategy, which includes a $108.7 billion Q1 sales report and a 4.5% rise in comparable store sales. This momentum is fueled by e-commerce expansion, AI integration, and omnichannel innovation. As a data engineer, you’ll contribute to scalable pipelines, real-time analytics, and cutting-edge AI tools like the Wallaby LLM and content personalization platforms. Your expertise enables smarter decisions, faster innovation, and better customer experiences—all essential to helping Walmart stay ahead in a competitive, data-driven retail landscape.

Role Overview & Culture

As a data engineer at Walmart, you play a critical role in designing and maintaining large-scale data systems that drive everything from inventory accuracy to personalized customer experiences. During your Walmart data engineer interview, you’ll be evaluated on your ability to build scalable pipelines, manage complex data workflows, and ensure high data quality using tools like Spark, Scala, and Google Cloud Platform. You’ll work closely with data scientists, analysts, and product teams to turn raw data into actionable insights that support real-time decision-making across a global operation. Walmart’s engineering culture is collaborative, fast-moving, and focused on innovation. You’ll be encouraged to share ideas, mentor others, and grow your skills while making a direct impact on millions of lives every day.

Why This Role at Walmart?

If you’re looking to level up your career, the Walmart data engineer role offers unmatched scale, pay, and technical exposure. You’ll work on systems that process millions of transactions daily, giving you the chance to build pipelines and platforms that few companies can match in complexity or impact. This means your code doesn’t just sit in a repo—it drives real-time decisions, fuels personalization engines, and touches millions of users. You’ll sharpen your skills with Spark, Scala, and GCP while gaining visibility across teams and initiatives. Plus, Walmart offers excellent compensation, career mobility, and learning opportunities. If you want a role where your work is challenging, visible, and future-proof, this is where you want to be.

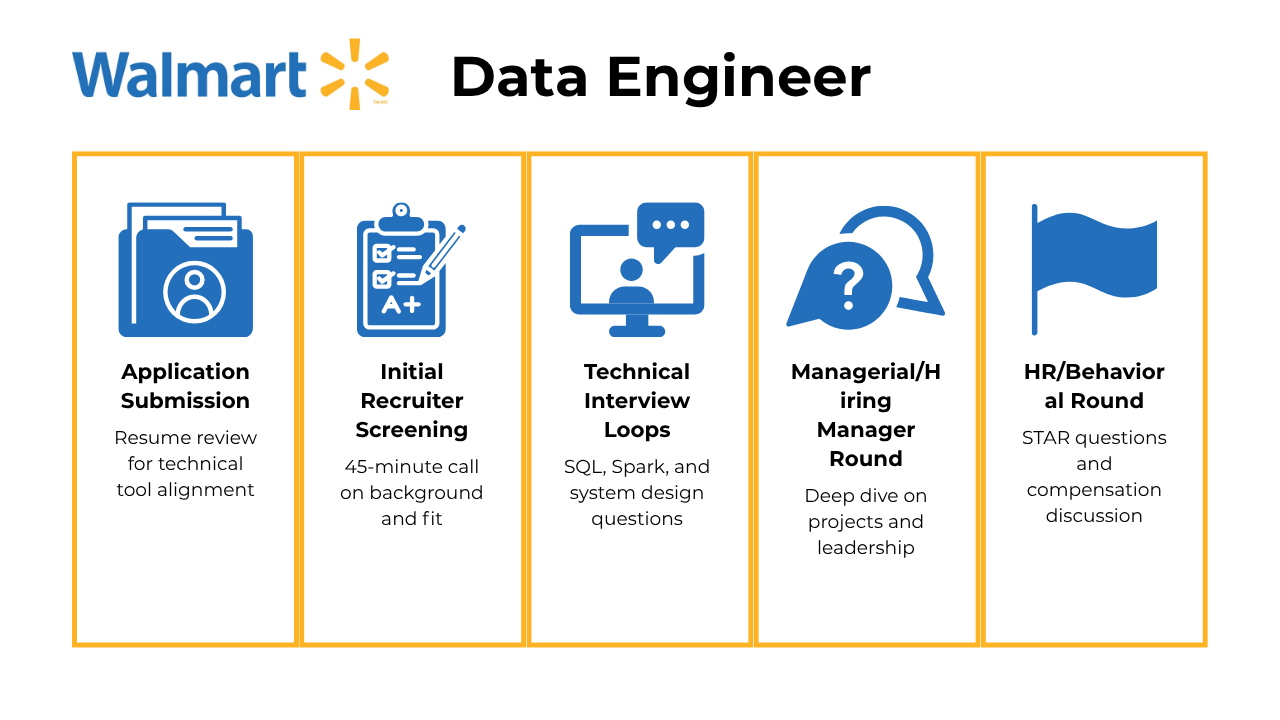

What Is the Interview Process Like for a Data Engineer Role at Walmart?

Walmart's data engineer interview process is structured and comprehensive, designed to evaluate both technical expertise and cultural fit. Based on recent candidate feedback, the process typically spans 2-3 weeks and consists of multiple rigorous rounds:

- Application Submission

- Initial Recruiter Screening

- Technical Interview Loops

- Managerial/Hiring Manager Round

- HR/Behavioral Round

Application Submission

Candidates can apply directly through Walmart’s careers portal at careers.walmart.com, where they submit their resume and complete basic application details. The application process is streamlined to take approximately 15-20 minutes, focusing on relevant experience and technical skills. Walmart’s personnel associates review applications systematically, matching candidates to specific positions and shifts based on requirements. The initial application triggers an automated review process where candidates are screened for basic qualifications and experience levels. Strong applications highlighting relevant data engineering experience, particularly with big data technologies like Spark, Scala, and cloud platforms, significantly improve selection chances.

Once your application passes the initial review, the next phase involves a conversation with a recruiter.

Initial Recruiter Screening

The first interactive stage involves a 45-minute phone call with a recruiter or HR representative. This screening focuses on resume review, project discussions, and basic motivation assessment. Recruiters ask fundamental questions about the candidate’s background, interest in Walmart, and expectations from the role. Simple technical questions may be included to gauge basic competency levels. The conversation also covers logistical aspects, role details, and provides candidates an opportunity to ask questions about the position and company culture. Successful completion of this round indicates alignment between candidate expectations and role requirements.

Passing the recruiter screening leads you into the most critical part of the process: the technical interviews.

Technical Interview Loops

Technical interviews form the core of Walmart’s assessment process, typically consisting of 2-3 rounds conducted on the same day. The first technical round emphasizes data structures and algorithms (DSA) with medium to hard difficulty questions, alongside complex SQL queries involving window functions, joins, and optimization. The second technical round focuses on big data technologies including Spark optimization, PySpark, Kafka concepts, and system design scenarios. Recent interview experiences highlight questions on memory management, performance tuning, and real-time data processing architectures. Advanced rounds may include Scala programming, Lambda/Kappa architectures, and cloud platform expertise, particularly Google Cloud Platform.

Once the technical depth is evaluated, Walmart shifts focus to assessing your leadership and communication through a managerial interview.

Managerial/Hiring Manager Round

The hiring manager interview combines behavioral assessment with technical project discussions lasting approximately 60-90 minutes. This round involves deep-dive conversations about previous projects, architectural decisions, and scalability considerations. Managers evaluate problem-solving approaches, team collaboration skills, and ownership mentality through scenario-based questions. Candidates face detailed cross-questioning about their technical choices, production challenges, and stakeholder communication abilities. The discussion also covers leadership potential, adaptability, and alignment with Walmart’s core values and mission. This round determines cultural fit and assesses the candidate’s potential for growth within the organization.

Finally, once you’re through with the hiring manager round, the last step centers on HR conversations and aligning on the offer.

HR/Behavioral Round

The final HR round focuses on compensation negotiation, work culture discussion, and long-term career alignment. HR representatives explore the candidate’s growth expectations, work preferences, and cultural fit within Walmart’s environment. Behavioral questions assess teamwork, initiative, communication skills, and adaptability using the STAR format. This round also covers benefits, work-life balance, and organizational growth opportunities. Compensation discussions reveal that Walmart has limited negotiation flexibility, with focus primarily on base salary and joining bonuses rather than stocks or performance bonuses. The HR round typically concludes within a week, with offer processes taking 1-2 weeks after successful completion.

What Questions Are Asked in a Walmart Data Engineer Interview?

To prepare effectively, you need to understand the range of Walmart data engineer interview questions, from SQL-heavy technical screens to system design and behavioral evaluations. SQL is particularly emphasized, and Walmart SQL interview questions often test your ability to write optimized, production-ready queries.

Coding / Technical Questions

In this section, you’ll encounter challenging problems that test your SQL proficiency, Python scripting for ETL, and data modeling logic. Walmart SQL interview questions commonly focus on joins, window functions, and real-time data handling:

1. Write a query to find the top five paired products and their names

To solve this, first join the transactions and products tables to associate transactions with product names. Then, use a self-join on the resulting table to find pairs of products purchased together by the same user at the same time. Filter out duplicate and same-product pairs by ensuring the first product name is alphabetically less than the second. Finally, group by the product pairs, count their occurrences, and order by the count in descending order to get the top five pairs.
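The steps above can be sketched as a single query. This is a minimal illustration, assuming hypothetical `transactions` and `products` tables and using Python's built-in `sqlite3` with an in-memory database as a stand-in for a real warehouse. The column names and sample rows are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE transactions (
    id INTEGER PRIMARY KEY, user_id INTEGER, created_at TEXT, product_id INTEGER
);
INSERT INTO products VALUES (1, 'apple'), (2, 'bread'), (3, 'milk');
INSERT INTO transactions VALUES
    (1, 10, '2025-01-01 09:00', 1),
    (2, 10, '2025-01-01 09:00', 2),
    (3, 10, '2025-01-01 09:00', 3),
    (4, 11, '2025-01-02 10:00', 1),
    (5, 11, '2025-01-02 10:00', 2),
    (6, 12, '2025-01-03 11:00', 3);
""")

PAIR_QUERY = """
WITH purchases AS (              -- attach product names to transactions
    SELECT t.user_id, t.created_at, p.name
    FROM transactions t
    JOIN products p ON p.id = t.product_id
)
SELECT a.name AS p1, b.name AS p2, COUNT(*) AS qty
FROM purchases a
JOIN purchases b                 -- self-join: same user, same timestamp
  ON a.user_id = b.user_id
 AND a.created_at = b.created_at
 AND a.name < b.name             -- drops same-product and mirrored pairs
GROUP BY a.name, b.name
ORDER BY qty DESC, p1, p2
LIMIT 5
"""

top_pairs = conn.execute(PAIR_QUERY).fetchall()
print(top_pairs)
```

With this sample data, apple and bread are bought together twice, so that pair ranks first. The extra `p1, p2` sort keys are an added tie-breaker that keeps the output deterministic.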

To compute the metric, calculate the average rating for each query using the AVG function and round it to two decimal places. To incorporate position into the ranking, use the inverse of the position (1/position) as a weighted factor in the calculation. This ensures higher relevance for top positions.
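One way to read the position-weighted metric described above is to average `rating / position` per query alongside the plain average. The sketch below assumes a hypothetical `search_results` table with `query`, `position`, and `rating` columns. The weighting scheme is one possible interpretation, not a confirmed expected answer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE search_results (query TEXT, result_id INTEGER, position INTEGER, rating INTEGER);
INSERT INTO search_results VALUES
    ('dog', 1, 1, 5),
    ('dog', 2, 2, 3),
    ('cat', 3, 1, 1),
    ('cat', 4, 2, 5);
""")

METRIC_QUERY = """
SELECT query,
       ROUND(AVG(rating), 2)                  AS avg_rating,
       ROUND(AVG(rating * 1.0 / position), 2) AS position_weighted_rating
FROM search_results
GROUP BY query
ORDER BY query
"""

rows = conn.execute(METRIC_QUERY).fetchall()
print(rows)
```

In this made-up data, 'cat' has its best-rated result in position 2, so its weighted score (1.75) falls well below its plain average (3.0), while 'dog' keeps a higher weighted score (3.25) because its best result is ranked first.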

3. Given an integer N, write a function that returns a list of all prime numbers up to N

To solve this, iterate through numbers from 2 to N and check if each number is prime. A number is prime if it is greater than 1 and has no divisors other than 1 and itself. Optimizations include checking divisors only up to the square root of the number and, after ruling out multiples of 2 and 3, testing only candidate divisors of the form 6n ± 1.
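The trial-division approach described above might look like the following. This is a minimal sketch, and the function names `is_prime` and `primes_up_to` are chosen for illustration.

```python
def is_prime(n: int) -> bool:
    """Trial division, testing only divisors of the form 6k - 1 and 6k + 1."""
    if n < 2:
        return False
    if n < 4:                      # 2 and 3 are prime
        return True
    if n % 2 == 0 or n % 3 == 0:   # rule out multiples of 2 and 3 up front
        return False
    i = 5                          # 5 = 6*1 - 1; i + 2 = 6*1 + 1
    while i * i <= n:              # only check up to sqrt(n)
        if n % i == 0 or n % (i + 2) == 0:
            return False
        i += 6
    return True


def primes_up_to(n: int) -> list[int]:
    """Return every prime p with 2 <= p <= n."""
    return [k for k in range(2, n + 1) if is_prime(k)]


print(primes_up_to(30))
```

For large N, a Sieve of Eratosthenes is usually faster than testing each number independently. It is a different technique from the one sketched here, but worth mentioning as a follow-up in an interview.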

4. Write a function to sample from a truncated normal distribution

To solve this, calculate the truncation limit using the percentile threshold and the percentage point function (PPF) of the normal distribution. Then, generate random samples from the normal distribution and filter them to ensure they fall below the truncation limit. Repeat until the desired number of samples is obtained.
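The rejection-sampling idea above can be sketched with the standard library alone. Here `statistics.NormalDist.inv_cdf` plays the role of the PPF. The function name, its parameters, and the optional `seed` argument are assumptions made for this example.

```python
import random
from statistics import NormalDist


def truncated_normal_samples(mu, sigma, size, percentile_threshold, seed=None):
    """Draw `size` normal samples that fall at or below the given percentile.

    `percentile_threshold` must lie strictly between 0 and 1.
    """
    rng = random.Random(seed)
    # Truncation limit: the value below which `percentile_threshold` of the mass lies.
    limit = NormalDist(mu, sigma).inv_cdf(percentile_threshold)
    samples = []
    while len(samples) < size:
        x = rng.gauss(mu, sigma)
        if x <= limit:             # keep only draws under the limit
            samples.append(x)
    return samples


samples = truncated_normal_samples(mu=50, sigma=4, size=10, percentile_threshold=0.75, seed=1)
print(len(samples), max(samples))
```

Rejection sampling is simple but wastes draws when the threshold is low. With a threshold of 0.75, roughly one in four draws is discarded on average.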

5. Priority Queue Using Linked List

To implement a priority queue using a linked list, create a Node class to represent each element with its value and priority. Use a PriorityQueue class to manage the linked list, supporting insert, delete, and peek operations. Insert elements in the correct position based on priority, with smaller priority values indicating higher priority. For delete and peek, return the element with the highest priority, considering the order of insertion for ties.
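A minimal sketch of the structure described above follows. The linked list is kept sorted on insert, so `peek` and `delete` only touch the head. Returning `None` from an empty queue is a choice made for this example.

```python
class Node:
    def __init__(self, value, priority):
        self.value = value
        self.priority = priority
        self.next = None


class PriorityQueue:
    def __init__(self):
        self.head = None

    def insert(self, value, priority):
        node = Node(value, priority)
        # New head only if strictly higher priority (smaller number) than the current head.
        if self.head is None or priority < self.head.priority:
            node.next = self.head
            self.head = node
            return
        # Walk past every node with priority <= the new one, so ties stay first-in, first-out.
        cur = self.head
        while cur.next is not None and cur.next.priority <= priority:
            cur = cur.next
        node.next = cur.next
        cur.next = node

    def peek(self):
        return None if self.head is None else self.head.value

    def delete(self):
        if self.head is None:
            return None
        node = self.head
        self.head = node.next
        return node.value


pq = PriorityQueue()
for value, priority in [("a", 2), ("b", 1), ("c", 2), ("d", 1)]:
    pq.insert(value, priority)
order = [pq.delete() for _ in range(4)]
print(order)  # ['b', 'd', 'a', 'c']
```

Insert is O(n) and delete/peek are O(1). A binary heap would make both O(log n), which is a useful trade-off to mention if the interviewer asks about scaling.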

System / Architecture Design Questions

These questions assess your ability to design scalable data systems and explain architectural trade-offs. You’ll be expected to tackle realistic business cases that reflect the scope and depth of Walmart data engineer interview questions.

6. Address Schema: Create a schema to keep track of customer address changes

To track customer address changes, design a schema with three tables: Customers, Addresses, and CustomerAddressHistory. The CustomerAddressHistory table links customers to addresses with move_in_date and move_out_date fields, allowing you to record occupancy periods. Use move_out_date IS NULL to identify current occupants and sort by move_in_date for historical data.
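The three-table design above could be sketched as follows, again using an in-memory SQLite database from Python. The snake_case table names, the column types, and the sample rows are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE addresses (id INTEGER PRIMARY KEY, street TEXT, city TEXT);
CREATE TABLE customer_address_history (
    customer_id   INTEGER NOT NULL REFERENCES customers(id),
    address_id    INTEGER NOT NULL REFERENCES addresses(id),
    move_in_date  TEXT NOT NULL,
    move_out_date TEXT            -- NULL means the customer still lives there
);
INSERT INTO customers VALUES (1, 'Ada');
INSERT INTO addresses VALUES (1, '1 Main St', 'Bentonville'), (2, '9 Oak Ave', 'Rogers');
INSERT INTO customer_address_history VALUES
    (1, 1, '2022-03-01', '2024-06-30'),
    (1, 2, '2024-07-01', NULL);
""")

# Current occupants: rows with no move-out date.
current = conn.execute("""
    SELECT c.name, a.street
    FROM customer_address_history h
    JOIN customers c ON c.id = h.customer_id
    JOIN addresses a ON a.id = h.address_id
    WHERE h.move_out_date IS NULL
""").fetchall()

# Full history for one customer, oldest first.
history = conn.execute("""
    SELECT a.street, h.move_in_date, h.move_out_date
    FROM customer_address_history h
    JOIN addresses a ON a.id = h.address_id
    WHERE h.customer_id = 1
    ORDER BY h.move_in_date
""").fetchall()

print(current)
print(history)
```

Keeping addresses in their own table means two customers who live at the same place share one address row, and the history table records who occupied it and when.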

7. Clickstream Data: Design a solution to store and query raw data from Kafka on a daily basis

To design a cost-effective solution for storing and querying 600 million daily events with a two-year retention period, use Amazon Redshift for scalable storage and analytics. Transfer data from Kafka to Redshift using Spark Streaming or Amazon Kinesis Data Firehose, ensuring scalability and cost efficiency. Optimize query performance by configuring Redshift’s SORTKEY and DISTKEY, and use an orchestrator like Airflow for automation and monitoring.
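Before choosing storage, it helps to size the problem. The back-of-envelope calculation below uses the 600 million events per day and two-year retention stated above. The 500-byte average event size is a purely hypothetical assumption, so substitute a measured figure if you have one.

```python
EVENTS_PER_DAY = 600_000_000
RETENTION_DAYS = 2 * 365
AVG_EVENT_BYTES = 500          # hypothetical average size of one raw event

total_events = EVENTS_PER_DAY * RETENTION_DAYS
daily_gb = EVENTS_PER_DAY * AVG_EVENT_BYTES / 1e9      # decimal gigabytes per day
total_tb = total_events * AVG_EVENT_BYTES / 1e12       # decimal terabytes retained
avg_events_per_sec = EVENTS_PER_DAY / 86_400           # average, not peak

print(f"{total_events:,} events retained")
print(f"{daily_gb:.0f} GB/day, {total_tb:.0f} TB over two years (uncompressed)")
print(f"{avg_events_per_sec:,.0f} events/sec on average")
```

Under that assumption the raw, uncompressed footprint is about 219 TB. A figure of that size is the sort of number that drives the cost discussion, for example whether older partitions should move to cheaper storage.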

8. Swipe Payment API: Determine the requirements for designing a database system to store payment APIs

To design a database system for Swipe Inc., functional requirements include handling API requests, verifying API keys, encrypting sensitive user data, and supporting machine learning pipelines for fraud detection. Non-functional requirements emphasize upgradability, flexibility for diverse queries, and speed for efficient API call processing.

To model the schema, create a crossings table with columns for id, license_plate, enter_time, exit_time, and car_model_id. Additionally, create a car_models table with columns for id and model_name. Use a foreign key relationship between car_model_id in crossings and id in car_models to save space and represent car models efficiently.
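The two-table schema above can be sketched as follows. The average-crossing-time query is an illustrative use of the schema rather than a known part of the original question, and the sample rows are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE car_models (id INTEGER PRIMARY KEY, model_name TEXT NOT NULL);
CREATE TABLE crossings (
    id            INTEGER PRIMARY KEY,
    license_plate TEXT NOT NULL,
    enter_time    TEXT NOT NULL,
    exit_time     TEXT NOT NULL,
    car_model_id  INTEGER NOT NULL REFERENCES car_models(id)
);
INSERT INTO car_models VALUES (1, 'Civic'), (2, 'Model 3');
INSERT INTO crossings VALUES
    (1, 'ABC123', '2025-01-01 08:00:00', '2025-01-01 08:05:00', 1),
    (2, 'XYZ789', '2025-01-01 08:10:00', '2025-01-01 08:14:00', 2),
    (3, 'DEF456', '2025-01-01 09:00:00', '2025-01-01 09:07:00', 1);
""")

# Average crossing time in seconds per model; strftime('%s', ...) yields Unix seconds.
avg_by_model = conn.execute("""
    SELECT m.model_name,
           AVG(strftime('%s', c.exit_time) - strftime('%s', c.enter_time)) AS avg_seconds
    FROM crossings c
    JOIN car_models m ON m.id = c.car_model_id
    GROUP BY m.model_name
    ORDER BY avg_seconds
""").fetchall()

print(avg_by_model)
```

Storing `car_model_id` instead of repeating the model name on every crossing keeps the large `crossings` table narrow, which is the space saving the schema description refers to.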

To approach this migration, start by identifying the entities and relationships in the current document database. Normalize the data by creating separate tables for users, friends, posts, and interactions, ensuring proper foreign key relationships. Design the schema to optimize for analytics and consistency, and implement ETL processes to transfer and transform the data into the relational database.
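The transform step of such a migration might flatten each nested document into rows for separate tables. The sketch below assumes a hypothetical document shape with `id`, `name`, `friends`, and `posts` fields, and omits the interactions table for brevity.

```python
def normalize(documents):
    """Split nested user documents into rows for users, friendships, and posts."""
    users, posts = [], []
    friendships = set()
    for doc in documents:
        users.append((doc["id"], doc["name"]))
        for friend_id in doc.get("friends", []):
            # Store each friendship once as (smaller_id, larger_id) so mutual
            # references in two documents collapse into a single row.
            pair = (min(doc["id"], friend_id), max(doc["id"], friend_id))
            friendships.add(pair)
        for post in doc.get("posts", []):
            # posts.user_id acts as the foreign key back to users.id
            posts.append((post["id"], doc["id"], post["text"]))
    return users, sorted(friendships), posts


docs = [
    {"id": 1, "name": "Ana", "friends": [2], "posts": [{"id": 100, "text": "hello"}]},
    {"id": 2, "name": "Ben", "friends": [1], "posts": []},
]
users, friendships, posts = normalize(docs)
print(users, friendships, posts)
```

The resulting row lists map directly onto `INSERT` statements for the relational tables. In a real migration the same logic would typically run in batches inside the ETL job, with validation counts compared between source and target.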

Behavioral & Culture Fit Questions

Walmart evaluates more than just your coding skills, so expect questions that explore collaboration, ownership, and adaptability. Draw on your own project experience and use STAR-format stories that demonstrate leadership, communication, and problem-solving in complex team environments.

11. Given a question about your strengths and weaknesses, how should you respond?

Walmart places high value on self-awareness and continuous improvement. When asked about your strengths and weaknesses, it is important to show how your strengths can contribute to large-scale data solutions and how you actively work to address areas for growth, particularly in fast-paced environments like retail technology. Using the STAR method ensures your examples are structured and impactful.

12. How comfortable are you presenting your insights?

As a data engineer at Walmart, you will often need to communicate complex insights to cross-functional teams. When discussing your comfort with presenting data, highlight your preparation process, how you simplify insights for non-technical stakeholders, and the tools you use for both live and virtual presentations. Show that you can adapt your communication style to match the audience’s understanding and business needs.

13. What are some effective ways to make data more accessible to non-technical people?

Walmart’s business thrives on data-driven decision making that reaches associates and executives alike. Therefore, you need to demonstrate your ability to make data accessible by using intuitive visuals, concise storytelling, and tools that support interactive exploration. Showing how you’ve done this before in real scenarios will underscore your ability to bridge the technical and business gap.

Strong communication is essential for Walmart data engineers who work closely with business stakeholders to align technical solutions with operational goals. If asked about a time you struggled to communicate with stakeholders, focus on how you identified the gap—such as using too much technical jargon—and how you adapted your approach. Showing that you learned to tailor your message and improve clarity will demonstrate growth and your ability to collaborate effectively.

15. Why Do You Want to Work With Us

Walmart looks for candidates who are not only technically skilled but also aligned with the company’s mission of helping people save money and live better. When asked why you want to work at Walmart, connect your goals and values to the company’s focus on scale, innovation, and impact. Refer to specific initiatives, the company culture, or your excitement about solving large-scale data challenges to show you’ve done your research and are genuinely interested.

Senior & Level-Specific Questions

At higher levels, such as senior data engineer or Data Engineer 3 roles, you’ll need to show depth in architectural strategy, mentoring, and cross-functional leadership. These questions go beyond execution and focus on impact, scale, and technical influence:

16. How have you influenced data strategy or architecture decisions at your previous company?

At the senior level, Walmart expects engineers to contribute to long-term planning. Use this question to highlight your role in shaping data pipelines, choosing technologies, or establishing best practices. Explain how your decisions impacted scalability, cost-efficiency, or team productivity.

17. Describe a time when you led a cross-functional project involving multiple teams.

Walmart’s data systems span supply chain, merchandising, e-commerce, and more. Share how you coordinated with engineers, analysts, and stakeholders, managed timelines, and aligned on goals. Emphasize how you handled conflicting priorities or unclear requirements.

18. Tell us about a time you mentored junior engineers or onboarded new team members.

Senior engineers are often expected to uplift their team. Describe how you guided someone through complex systems or helped them develop skills in tools like Spark, Airflow, or data modeling. Show your investment in team growth and technical leadership.

How to Prepare for a Data Engineer Role at Walmart

Successfully navigating the Walmart data engineer interview takes focused preparation across technical, behavioral, and communication areas. You’ll need to show strong SQL skills, data modeling expertise, and the ability to design scalable data systems. Just as important, you must clearly explain your thought process and collaborate well with others. Start early—ideally over a few weeks—and prioritize practicing real-world scenarios aligned with Walmart’s scale and tech stack.

Sharpen your SQL by solving complex query problems involving window functions, joins, and optimizations. Be ready to model schemas and explain SCD-2 patterns and normalization. For system design, practice sketching data pipelines for high-throughput systems, clarify requirements, and communicate trade-offs during whiteboard sessions.
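To make the SCD-2 (slowly changing dimension, type 2) pattern concrete: when a tracked attribute changes, the current row is closed out and a new row is inserted, so history is preserved. The sketch below uses a hypothetical `dim_product` table and an `apply_price_change` helper, both invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
CREATE TABLE dim_product (
    sk         INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key, one per version
    product_id INTEGER NOT NULL,                   -- business key
    price      REAL NOT NULL,
    valid_from TEXT NOT NULL,
    valid_to   TEXT,                               -- NULL while the row is current
    is_current INTEGER NOT NULL
)""")


def apply_price_change(conn, product_id, price, change_date):
    """Close the current version (if the price changed) and insert a new one."""
    row = conn.execute(
        "SELECT sk, price FROM dim_product WHERE product_id = ? AND is_current = 1",
        (product_id,),
    ).fetchone()
    if row is not None:
        if row[1] == price:
            return                                 # nothing changed, keep current row
        conn.execute(
            "UPDATE dim_product SET valid_to = ?, is_current = 0 WHERE sk = ?",
            (change_date, row[0]),
        )
    conn.execute(
        "INSERT INTO dim_product (product_id, price, valid_from, valid_to, is_current) "
        "VALUES (?, ?, ?, NULL, 1)",
        (product_id, price, change_date),
    )


apply_price_change(conn, 42, 9.99, "2025-01-01")
apply_price_change(conn, 42, 9.99, "2025-02-01")   # same price: no new version
apply_price_change(conn, 42, 11.49, "2025-03-01")

versions = conn.execute(
    "SELECT price, valid_from, valid_to, is_current FROM dim_product ORDER BY sk"
).fetchall()
print(versions)
```

After the three calls the table holds two versions: the closed 9.99 row and the current 11.49 row. That makes point-in-time questions such as "what was the price on 2025-02-15?" answerable with a range filter on `valid_from` and `valid_to`.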

Use AI Interviewer to simulate Walmart’s multi-stage process. This helps refine your technical storytelling and surface communication gaps. Record yourself to improve pacing and clarity.

Since Walmart heavily uses Spark, Kafka, and Google Cloud Platform, learn key concepts like Spark partitioning, Kafka stream handling, and GCP services like BigQuery and Pub/Sub. If you lack GCP experience, focus on cloud fundamentals that translate across platforms.

Finally, prepare 5 to 7 STAR-based stories that showcase your technical achievements, leadership, and problem-solving. Choose examples where you drove outcomes, mentored teammates, or handled ambiguity under pressure. Make your results measurable and emphasize communication with both technical and business stakeholders. Consistent practice across these areas will set you up for success.

FAQs

How many rounds are there in a Walmart Data Engineer interview?

The Walmart data engineer interview process typically includes 4 to 6 rounds. These start with an initial recruiter screening and continue through technical interviews, a hiring manager round, and an HR or behavioral round. Some rounds may be grouped into back-to-back sessions, especially during technical assessments. The structure may vary slightly depending on role level, with staff positions involving more system design components.

Where can I find real interview experiences?

You can find real Walmart data engineer interview experience threads on platforms like Blind, Glassdoor, and LeetCode Discuss. These forums feature detailed accounts from candidates covering questions asked, difficulty level, and tips for preparation.

Do SQL questions dominate the technical screen?

Yes, SQL is a core part of the evaluation. Walmart SQL interview questions are consistently included in early technical rounds, often focusing on complex joins, window functions, and query optimization. While big data and architecture skills are important, SQL mastery is essential to clear the first technical hurdle.

Conclusion

Preparing for the Walmart data engineer interview can feel intense, but with the right structure, tools, and mindset, you can absolutely succeed. Whether you’re brushing up on Spark optimization or reviewing common Walmart data engineer interview questions, take the time to practice with realistic scenarios and timed mock interviews. For extra guidance, check out our full Walmart Data Engineer Learning Path. If you’re looking for inspiration, read Alex Dang’s success story. And when you’re ready to drill deeper, explore this complete collection of curated data engineer interview questions. You’ve got this—and we’re here to help every step of the way.