TikTok Product Manager Interview Questions – Process & Analytics Guide 2025

Introduction

The TikTok product manager interview is your gateway to a role where you’ll own the end-to-end lifecycle of features used by over a billion monthly active users. As a TikTok Product Manager, you’ll translate user insights into clear roadmaps, set and track key performance indicators, and coordinate closely with engineering and design teams to ship quickly. You’ll thrive in TikTok’s “Always Day 1” culture, where rapid experimentation, data-driven decision-making, and autonomy are core to delivering viral, delightful experiences. Collaboration across cross-functional pods ensures you maintain a single source of truth while iterating at breakneck speed. Success here hinges on balancing strategic vision with hands-on execution, all within a global, high-impact environment.

Role Overview & Culture

In this role, you’ll define product vision for features ranging from discovery algorithms to in-app social tools, crafting PR-FAQs and refining requirements through A/B tests and user research. Daily responsibilities include prioritizing backlogs, monitoring real-time metrics, and removing roadblocks for engineering and design partners. TikTok’s culture emphasizes “Always Day 1” — you’ll be expected to iterate continuously, learn rapidly from both successes and failures, and pivot with minimal bureaucracy. Autonomy comes with accountability: you own the outcomes, own the data, and own the narrative you present to stakeholders. Above all, TikTok values a bias for action and a passion for understanding and surprising its vast user base.

Why This Role at TikTok?

Joining TikTok means working on features that influence global culture, from short-form video creation to emerging social commerce experiences. With more than one billion users worldwide, your decisions will drive meaningful engagement metrics and shape the next generation of mobile interaction. TikTok invests heavily in experimentation velocity — you’ll have access to robust analytics platforms, generous RSU packages, and a clear path for rapid career acceleration. If you’re excited to build products that can trend overnight and impact communities on every continent, you’ll need to master the TikTok product manager interview process outlined below.

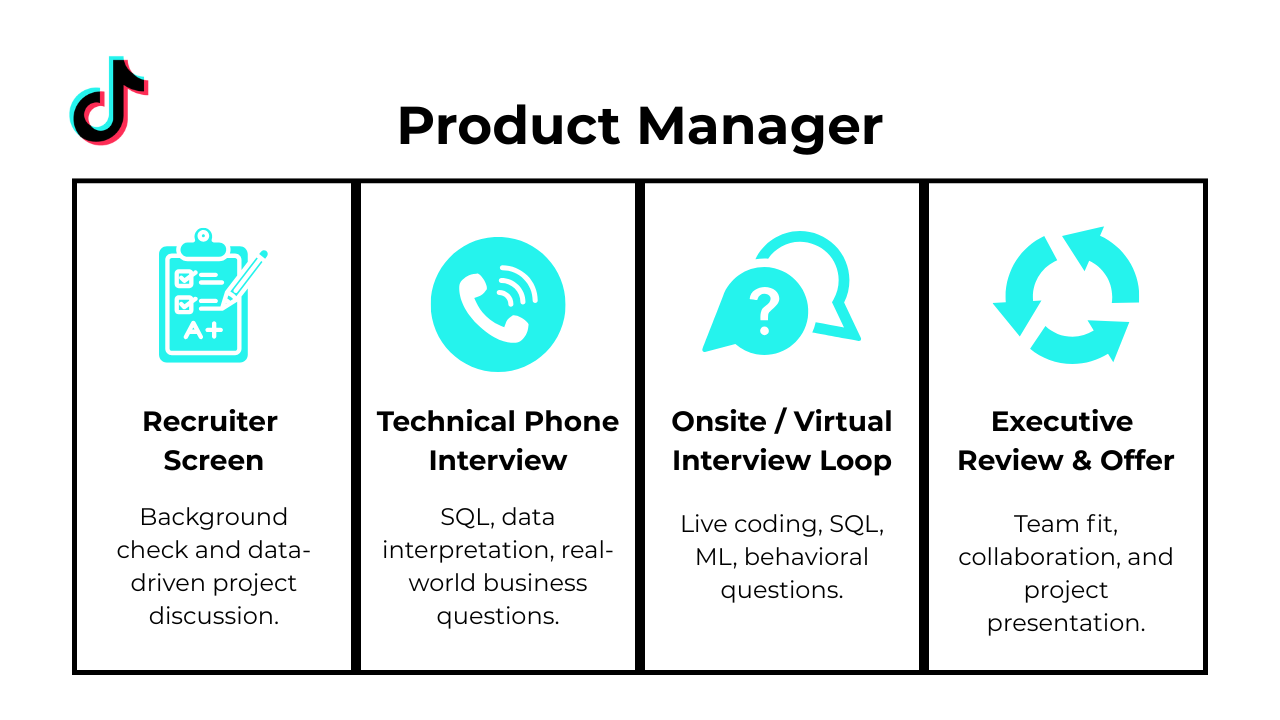

What Is the Interview Process Like for a Product Manager Role at TikTok?

Navigating the TikTok product manager interview involves a structured yet fast-paced sequence designed to assess both strategic thinking and execution ability. TikTok’s hiring teams prioritize candidates who can move from high-level product vision to data-driven case analysis with ease. Below is a breakdown of each stage you can expect.

Application & Recruiter Screen

Your journey begins with an online application, followed by a recruiter phone screen focused on résumé fit, motivation for the role, and basic cultural alignment. Be prepared to discuss your product management experience, highlighting relevant metrics and examples of rapid experimentation.

Product Sense / Business Case Online Assessment

Successful applicants receive an invitation to complete an online assessment that tests product sense and business acumen. You may be asked to draft a brief product critique, propose improvements for existing features, or solve a quantitative case related to user growth or engagement.

Onsite / Virtual Loop

Candidates who pass the assessment move into a virtual loop of four interviews:

- Product Sense: Deep dives into user problems, feature ideation, and prioritization frameworks.

- Execution: Questions on roadmaps, stakeholder management, and delivery trade-offs.

- Analytical: Data interpretation, metrics definition, and A/B test design scenarios.

- Behavioral: STAR-based questions probing collaboration, leadership, and “Always Day 1” mindset.

Hiring Committee & Offer

Feedback from all interviews is consolidated and presented to a hiring committee that calibrates across teams and levels. Upon approval, you’ll receive an offer outlining compensation, RSUs, and next steps, completing the TikTok product manager interview process.

What Questions Are Asked in a TikTok Product Manager Interview?

When preparing for TikTok product manager interview questions, you’ll encounter a blend of product vision, execution rigor, data fluency, and culture-fit prompts designed to mirror real-world challenges at TikTok. Your ability to ideate breakthrough features, drive them through rapid experimentation, and collaborate across global teams will be assessed. Below is an overview of the core question types you’ll face.

Product Sense / Strategy Questions

These questions probe your ability to empathize with creators and consumers, uncover pain points, and define innovative solutions. You might be asked to design a new creator monetization feature or reimagine the For You feed algorithm. Exemplary answers incorporate user personas, prioritization frameworks (e.g., RICE), and clear success metrics.
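
As a rough illustration of RICE prioritization, the Python sketch below scores ideas as Reach × Impact × Confidence ÷ Effort and sorts them. The feature names, the units (users per quarter, person-months), and every number are hypothetical, chosen only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    reach: float       # users affected per quarter (hypothetical unit)
    impact: float      # e.g. 0.25 = minimal ... 3 = massive
    confidence: float  # 0.0-1.0, how sure you are of the estimates
    effort: float      # person-months

    def rice(self) -> float:
        """RICE score = Reach x Impact x Confidence / Effort."""
        return self.reach * self.impact * self.confidence / self.effort


def rank(ideas: list[Idea]) -> list[tuple[str, float]]:
    """Return (name, score) pairs, highest RICE score first."""
    return sorted(((i.name, i.rice()) for i in ideas), key=lambda t: t[1], reverse=True)


# Hypothetical backlog items
ideas = [
    Idea("Creator tipping", reach=50_000, impact=2, confidence=0.8, effort=4),
    Idea("Caption search", reach=200_000, impact=0.5, confidence=0.5, effort=2),
]
print(rank(ideas))  # [('Caption search', 25000.0), ('Creator tipping', 20000.0)]
```

Note how the lower-confidence idea still wins here because of its reach; making that trade-off explicit is the main value of the score in an interview answer.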

Execution / Metrics Questions

TikTok’s “Always Day 1” culture demands near-immediate feedback loops. Here, you’ll define the most meaningful KPIs, explain how you’d instrument and monitor them, and outline steps to iterate on feature rollouts. Questions could include detecting content fatigue in the short-form video feed or diagnosing a sudden drop in session length.

Start by slicing total watch-time, impressions, and click-through rate by creator-tier segments (e.g., <10 K subs = “amateur,” >1 M = “superstar”) over time; then track share-of-voice trends, distribution shifts in recommendation slots, and Gini or Herfindahl indexes of view concentration. Complement these with funnel ratios—impressions → views → watch-time—to see whether exposure or engagement changed. A/B-like “era” comparisons (pre vs. post model update) and regression-adjusted time-series help isolate causality from seasonality. Finally, highlight north-star creator metrics such as new-channel activation or median revenue per creator to judge ecosystem health.
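
The concentration indexes mentioned above are simple to compute from per-creator view counts. This Python sketch (standard library only) implements the usual sorted-rank formula for the Gini coefficient and the Herfindahl-Hirschman index as the sum of squared view shares; the view counts in the example are made up.

```python
def gini(views: list[float]) -> float:
    """Gini coefficient of view counts: 0 = perfectly even, (n-1)/n = one creator gets everything."""
    xs = sorted(views)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n, with x sorted ascending and i = 1..n
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


def hhi(views: list[float]) -> float:
    """Herfindahl-Hirschman index: sum of squared view shares, from 1/n (even) to 1 (monopoly)."""
    total = sum(views)
    return sum((v / total) ** 2 for v in views) if total else 0.0


# Hypothetical per-creator views before and after a model update
before = [120, 110, 100, 95, 90]
after = [400, 60, 50, 40, 30]
print(round(gini(before), 3), round(gini(after), 3))
print(round(hhi(before), 3), round(hhi(after), 3))
```

A rise in both indexes after the update would suggest views are concentrating on fewer creators; comparing them per creator tier keeps the result interpretable.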

How would you statistically validate that a 1 % week-over-week DAU decline is real and actionable?

Fit a time-series model (ARIMA or Prophet) to six-plus months of DAU and inspect confidence intervals to see if current values fall below the expected band; alternatively, apply a rolling two-sample t-test or CUSUM control-chart on log-transformed DAU. Segment by platform, geo, and acquisition source to pinpoint outliers and prevent Simpson’s paradox. If seasonality or holidays explain the drift, you’ll observe offsetting rebounds in comparable periods. Conclude with a decision rule—e.g., “investigate if the z-score < -2 for two consecutive weeks”—to align teams on when to escalate.
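
A full ARIMA or Prophet fit is beyond a quick sketch, but the decision rule itself can be illustrated with a simpler stand-in: score each week's log DAU against the mean and standard deviation of a trailing baseline window, then escalate only when the z-score stays below -2 for two consecutive weeks. The 8-week window is an assumption, and unlike a seasonal model this version does not adjust for holidays or weekly cycles.

```python
import math
import statistics


def weekly_z_scores(dau: list[float], baseline_weeks: int = 8) -> list[float]:
    """z-score of each week's log DAU versus the preceding `baseline_weeks` weeks."""
    logs = [math.log(x) for x in dau]
    scores = []
    for t in range(baseline_weeks, len(logs)):
        window = logs[t - baseline_weeks:t]
        mu, sd = statistics.mean(window), statistics.stdev(window)
        scores.append((logs[t] - mu) / sd if sd > 0 else 0.0)
    return scores


def should_escalate(dau: list[float], baseline_weeks: int = 8,
                    threshold: float = -2.0, consecutive: int = 2) -> bool:
    """Escalate if the last `consecutive` weekly z-scores are all below `threshold`."""
    z = weekly_z_scores(dau, baseline_weeks)
    return len(z) >= consecutive and all(s < threshold for s in z[-consecutive:])


# Hypothetical weekly DAU in millions: a stable baseline, then two down weeks
dau = [100.0, 100.1, 99.9, 100.0, 100.1, 99.9, 100.0, 100.1, 99.0, 98.0]
print(should_escalate(dau))  # True
```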

Propose an inactivity threshold (commonly 30 min) between consecutive events from the same user/device to mark a new session; justify it by looking for a natural gap in the histogram of inter-event times or by running KS-tests on them. Once defined, use LAG() to flag new sessions, accumulate a running session_id, and divide COUNT(DISTINCT session_id) per day by the number of distinct users active that day. Stress-test the threshold via sensitivity analysis (15, 45 min) to show metric stability. Document mobile-web gaps and multi-device stitching so PMs trust trend movements.
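
The LAG()-based sessionization described above translates directly into code. This Python sketch assumes events arrive as (user_id, timestamp) pairs and uses the 30-minute inactivity threshold; passing a different gap reproduces the sensitivity check. Sessions are credited to the day they start, which is one of several reasonable conventions for sessions that cross midnight.

```python
from collections import defaultdict
from datetime import date, datetime, timedelta


def sessions_per_active_user(events, gap=timedelta(minutes=30)) -> dict[date, float]:
    """events: iterable of (user_id, timestamp). Returns {day: sessions / distinct active users}."""
    by_user = defaultdict(list)
    for user, ts in events:
        by_user[user].append(ts)

    session_starts = defaultdict(int)  # day -> sessions started that day
    active_users = defaultdict(set)    # day -> users with any event that day
    for user, stamps in by_user.items():
        stamps.sort()
        prev = None
        for ts in stamps:
            # Same logic as LAG(ts) in SQL: a new session begins on the first event
            # or when the gap since the previous event exceeds the threshold.
            if prev is None or ts - prev > gap:
                session_starts[ts.date()] += 1
            active_users[ts.date()].add(user)
            prev = ts
    return {day: session_starts[day] / len(users) for day, users in active_users.items()}


# Hypothetical events: user "a" returns after a 50-minute gap, user "b" has one event
t0 = datetime(2025, 1, 1, 9, 0)
events = [("a", t0), ("a", t0 + timedelta(minutes=10)), ("a", t0 + timedelta(minutes=60)), ("b", t0)]
print(sessions_per_active_user(events))                             # {datetime.date(2025, 1, 1): 1.5}
print(sessions_per_active_user(events, gap=timedelta(minutes=60)))  # {datetime.date(2025, 1, 1): 1.0}
```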

Begin with a Sankey or Markov model of navigation steps (landing → search → thread → reply) to reveal high-drop edges; compute median time-to-first-reply and depth of scroll as friction proxies. Cohort retention curves by acquisition source expose where novice users churn. Correlate UI elements (e.g., inline reply vs. modal) with engagement deltas via causal-impact or diff-in-diff after small layout tests. Final recommendations prioritize fixes that shorten the path to posting and boost thread reciprocity, backed by potential lift estimates.
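
To make the "high-drop edges" idea concrete, the sketch below estimates first-order Markov transition probabilities from navigation paths, adding an explicit exit state so abandonment shows up as a transition. The step names mirror the landing → search → thread → reply funnel above; treating "reply" as the goal state (excluded from the drop ranking) is an assumption, and the paths are invented.

```python
from collections import Counter, defaultdict


def transition_probs(paths: list[list[str]]) -> dict[str, dict[str, float]]:
    """First-order transition probabilities, with an 'exit' state appended to every path."""
    counts = defaultdict(Counter)
    for path in paths:
        steps = list(path) + ["exit"]
        for src, dst in zip(steps, steps[1:]):
            counts[src][dst] += 1
    return {src: {dst: n / sum(c.values()) for dst, n in c.items()} for src, c in counts.items()}


def worst_drop_steps(paths, goals=frozenset({"reply"}), top=3):
    """Non-goal steps ranked by the probability that the user exits right after them."""
    probs = transition_probs(paths)
    drops = [(step, p.get("exit", 0.0)) for step, p in probs.items() if step not in goals]
    return sorted(drops, key=lambda t: t[1], reverse=True)[:top]


# Hypothetical navigation paths
paths = [
    ["landing", "search", "thread", "reply"],
    ["landing", "search", "thread"],
    ["landing", "search"],
    ["landing"],
]
print(worst_drop_steps(paths))  # [('thread', 0.5), ('search', 0.3333333333333333), ('landing', 0.25)]
```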

Define primary metrics such as incremental watch-time per session, average concurrent viewers, and party completion rate; pair them with social lift indicators like comments per minute and friend-invite conversions. Set guardrails on streaming latency and moderation queue backlog to avoid degraded experience. Pre-launch, run geo-bucket experiments to measure cannibalization of solo viewing and downstream retention. Post-launch, monitor creator RPM changes to ensure revenue neutrality and run cohort stickiness checks to validate long-term value.

How would you triage and diagnose a sudden 10 % drop in Google Docs usage?

First, verify analytics integrity—check event ingestion lag and client SDK error rates—then slice the drop by platform, region, doc-type, and user segment to isolate scope. Examine leading indicators (session starts, file opens) versus lagging ones (edits, comments) to locate funnel breakpoints. Correlate the timestamp with releases, auth outages, or third-party-API errors; leverage anomaly-detection alerts on latency and crash-free sessions for supporting evidence. Finish with a severity matrix and mitigation plan: roll back culprit release, communicate to affected users, and schedule a post-mortem to harden monitoring.
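
Slicing the drop to isolate scope usually starts by asking which segment accounts for most of the total change. This Python sketch assumes usage counts are already aggregated by one dimension (platform here) for a before and an after window; the numbers are invented to produce a 10% overall drop.

```python
def drop_contribution(before: dict[str, float], after: dict[str, float]):
    """Per segment: (segment, absolute change, share of the total change), most negative first."""
    total_delta = sum(after.values()) - sum(before.values())
    rows = []
    for seg in before.keys() | after.keys():
        delta = after.get(seg, 0.0) - before.get(seg, 0.0)
        share = delta / total_delta if total_delta else 0.0
        rows.append((seg, delta, share))
    return sorted(rows, key=lambda r: r[1])


# Hypothetical daily active editors (thousands) by platform
before = {"web": 700.0, "android": 200.0, "ios": 100.0}
after = {"web": 700.0, "android": 110.0, "ios": 90.0}
print(drop_contribution(before, after)[0])  # ('android', -90.0, 0.9)
```

Repeating the same breakdown by region, doc type, and user segment quickly shows whether the drop is concentrated (pointing to a release or outage) or broad-based (pointing to seasonality or tracking).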

Analytical / Case Questions

These exercises test your quantitative rigor and business acumen. Expect to estimate annual ad revenue for TikTok Shop, size the opportunity for a new live-stream shopping feature, or build a simple financial model for a subscription product. Strong solutions clarify assumptions, show back‐of‐the‐envelope calculations, and consider edge cases.

Begin with a waterfall that decomposes total-revenue delta into price × volume for every category, sub-category, and discount bucket; this quickly shows whether mix shifts or unit economics drive the drop. Next, run cohort analyses on marketing-source and discount-level to see if margins eroded in specific channels. A time-series on average selling price, quantity per order, and promotion depth reveals if the decline is episodic (e.g., one failed sale) or structural. Finally, regress revenue on attribution source, margin, and discount to quantify each factor’s contribution and surface the biggest levers for recovery.
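
One common way to build the price × volume waterfall is the decomposition below, where the price effect is (P1 - P0) × Q1 and the volume effect is (Q1 - Q0) × P0, so the two sum exactly to the revenue change R1 - R0. Other conventions split the effects differently (for example, with an explicit interaction term); this Python sketch assumes every category appears in both periods, and the category data is hypothetical.

```python
def price_volume_waterfall(before, after):
    """before/after: {category: (avg_price, units)} for the same set of categories.

    Returns {category: (price_effect, volume_effect)} where
    price_effect = (P1 - P0) * Q1 and volume_effect = (Q1 - Q0) * P0,
    so price_effect + volume_effect == P1*Q1 - P0*Q0 for each category.
    """
    effects = {}
    for cat, (p0, q0) in before.items():
        p1, q1 = after[cat]
        effects[cat] = ((p1 - p0) * q1, (q1 - q0) * p0)
    return effects


# Hypothetical: shoes got discounted, bags lost volume
before = {"shoes": (50.0, 100), "bags": (80.0, 40)}
after = {"shoes": (45.0, 100), "bags": (80.0, 30)}
print(price_volume_waterfall(before, after))
# {'shoes': (-500.0, 0.0), 'bags': (0.0, -800.0)}
```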

Start by segmenting DAU into returning-user retention, new-user activation, and creator-driven re-engagement to see which component has the largest headroom. For each hypothesis, design an A/B or geo-holdout: feed-algorithm tweaks should lift session depth and retention; acquisition spend should move installs and day-1 activation; creator-tool launches should raise uploads and downstream viewer DAU. Compare the incremental DAU per engineering week or marketing dollar to rank ROI. Triangulate with funnel metrics (first-video view, follow events) to validate causality and avoid cannibalizing existing engagement.
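
Ranking levers by ROI requires putting engineering time and marketing spend on one scale. The sketch below converts engineering weeks to dollars at a hypothetical loaded cost and ranks each lever by incremental DAU per dollar; the incremental-DAU figures would come from the A/B or geo-holdout readouts, and the ones shown are invented.

```python
ENG_WEEK_COST = 5_000.0  # hypothetical loaded cost of one engineering week, in dollars


def rank_levers(levers: dict[str, tuple[float, float, float]]) -> list[str]:
    """levers: {name: (incremental_dau, eng_weeks, marketing_dollars)}.

    Returns lever names sorted by incremental DAU per dollar of combined cost.
    """
    def dau_per_dollar(item):
        _, (dau, weeks, dollars) = item
        return dau / (weeks * ENG_WEEK_COST + dollars)

    return [name for name, _ in sorted(levers.items(), key=dau_per_dollar, reverse=True)]


# Hypothetical experiment readouts
levers = {
    "feed tweak": (12_000, 6, 0),
    "paid installs": (20_000, 1, 200_000),
    "creator tools": (9_000, 10, 0),
}
print(rank_levers(levers))  # ['feed tweak', 'creator tools', 'paid installs']
```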

Is sending a blanket revenue-boosting email blast to every B2B SaaS customer a good idea—and why?

A mass blast risks fatigue, opt-outs, and deliverability penalties that may outweigh short-term revenue. Instead, analyze customer propensity scores or recent engagement to target high-likelihood upsell accounts and throttle sends to stay within ISP spam thresholds. Simulate expected lift versus churn by comparing historical conversion and unsubscribe rates under similar campaigns. Propose an A/B split that measures incremental revenue per 1 000 emails against negative signals like complaint rate, providing a data-backed go/no-go decision.
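
The proposed A/B readout can be reduced to a simple go/no-go rule: incremental revenue per 1 000 emails versus a no-email holdout, gated by a complaint-rate guardrail. The 0.1% complaint cap and all figures below are assumptions, and a real decision would also check that the revenue lift is statistically distinguishable from zero.

```python
def email_decision(sent: int, treat_revenue: float, complaints: int,
                   holdout_size: int, holdout_revenue: float,
                   max_complaint_rate: float = 0.001) -> tuple[float, float, bool]:
    """Return (incremental revenue per 1,000 emails, complaint rate, go?).

    Treatment accounts receive the email; holdout accounts receive nothing.
    """
    per_k_treat = treat_revenue / sent * 1000
    per_k_holdout = holdout_revenue / holdout_size * 1000
    incremental_per_k = per_k_treat - per_k_holdout
    complaint_rate = complaints / sent
    go = incremental_per_k > 0 and complaint_rate <= max_complaint_rate
    return incremental_per_k, complaint_rate, go


# Hypothetical test: 10,000 accounts per arm
print(email_decision(10_000, 25_000.0, 5, 10_000, 20_000.0))   # (500.0, 0.0005, True)
print(email_decision(10_000, 25_000.0, 30, 10_000, 20_000.0))  # (500.0, 0.003, False)
```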

Treat each scan as a binary information-gain problem and apply a divide-and-conquer strategy akin to binary search: first split the 16 cells into two 8-cell halves, then recursively bisect the positive half until a single cell remains, guaranteeing location in ⌈log₂ 16⌉ = 4 scans. If scans can target any subset size, choose partitions that halve the remaining candidates to minimize worst-case steps. Explain why entropy reduction drives this design and how, when some cells are more likely than others, splitting by probability mass rather than cell count can shorten the expected (not worst-case) number of scans. The exercise tests structured reasoning about experiment design under query-cost constraints.
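
The halving strategy is ordinary binary search over cell indices. This Python sketch simulates each scan as a yes/no check of whether the target lies in the lower half of the remaining cells, and confirms that every one of the 16 cells is found in exactly ⌈log₂ 16⌉ = 4 scans; the simulated scan is an assumption standing in for a real sensor.

```python
def locate(target: int, n_cells: int = 16) -> tuple[int, int]:
    """Binary search: each scan asks "is the target in the lower half of the remaining cells?".

    Returns (cell found, number of scans used).
    """
    lo, hi = 0, n_cells  # remaining candidates are cells lo .. hi-1
    scans = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        scans += 1
        if target < mid:  # simulated scan of cells lo .. mid-1 returns "yes"
            hi = mid
        else:
            lo = mid
    return lo, scans


print({locate(t)[1] for t in range(16)})  # {4}: every cell takes exactly 4 scans
```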

Begin with an interrupted-time-series or diff-in-diff using pre-redesign cohorts as controls, adjusting for seasonality and marketing spend. If historical drift shows natural reversion from 45 → 40 %, incorporate a trend term to isolate the campaign’s incremental effect. Validate assumptions by comparing identical signup segments that did not receive the new flow (hold-back or country-holdout). Supplement with propensity-score matching to control for mix changes in acquisition channels during the test window.
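
The core difference-in-differences arithmetic is small enough to show directly: the change in the treated cohort minus the change in the control cohort over the same window. In this Python sketch the control's drift stands in for the "natural reversion" trend; the conversion rates are hypothetical, and a production analysis would fit a regression with seasonality and spend covariates rather than compare four means.

```python
from statistics import mean


def diff_in_diff(treat_pre: float, treat_post: float, ctrl_pre: float, ctrl_post: float) -> float:
    """Change in the treated group minus change in the control group."""
    return (treat_post - treat_pre) - (ctrl_post - ctrl_pre)


def did_from_records(rows: list[tuple[str, str, int]]) -> float:
    """rows: (group, period, converted) with group in {"treat", "ctrl"},
    period in {"pre", "post"}, and converted as 0/1."""
    def rate(group: str, period: str) -> float:
        return mean(c for g, p, c in rows if g == group and p == period)

    return diff_in_diff(rate("treat", "pre"), rate("treat", "post"),
                        rate("ctrl", "pre"), rate("ctrl", "post"))


# Hypothetical: treated conversion fell 45% -> 40%, control drifted 44% -> 41%
print(round(diff_in_diff(0.45, 0.40, 0.44, 0.41), 4))  # -0.02: about a 2-point drop beyond the control's drift
```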

In a steady-state churn model, LTV ≈ ARPU × (1 / monthly churn) = $100 × (1 / 0.10) = $1 000; alternatively, using the observed 3.5-month average lifetime gives $100 × 3.5 = $350. Reconcile the gap by noting that a 3.5-month lifetime implies an effective monthly churn of about 28.6% (1 / 3.5), nearly three times the stated 10%, so sanity-check the inputs. Apply a gross-margin adjustment if the CRO wants contribution margin, and discount future cash flows if finance follows NPV conventions. Present both the churn-rate and observed-tenure methods to align stakeholders on the definition.
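
Both LTV methods fit in a few lines. This Python sketch reproduces the $1 000 and $350 figures, computes the churn rate implied by a 3.5-month tenure, and adds optional gross-margin and monthly-discount parameters; the discounted variant assumes the first payment arrives at month 0 and churn is constant, which is a simplifying assumption rather than a finance standard.

```python
def ltv_from_churn(arpu: float, monthly_churn: float,
                   gross_margin: float = 1.0, monthly_discount: float = 0.0) -> float:
    """Steady-state LTV with constant churn c and discount rate d, first payment at month 0.

    Sum over t >= 0 of ARPU * margin * ((1 - c) / (1 + d))**t
    = ARPU * margin * (1 + d) / (c + d), which reduces to ARPU * margin / c when d = 0.
    """
    return arpu * gross_margin * (1 + monthly_discount) / (monthly_churn + monthly_discount)


def ltv_from_tenure(arpu: float, avg_tenure_months: float, gross_margin: float = 1.0) -> float:
    """LTV from the observed average customer lifetime."""
    return arpu * gross_margin * avg_tenure_months


def implied_churn(avg_tenure_months: float) -> float:
    """Monthly churn consistent with an average tenure under the constant-churn model."""
    return 1 / avg_tenure_months


print(round(ltv_from_churn(100, 0.10)))  # 1000
print(ltv_from_tenure(100, 3.5))         # 350.0
print(round(implied_churn(3.5), 3))      # 0.286
```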

Which real-time metrics signal ride demand and how would you flag high-demand/low-supply conditions?

Track requests-per-minute, average wait time, and surge multiplier by geohash to gauge demand intensity; compare these against active-driver count and acceptance rate to infer supply. Compute a demand–supply ratio (requests ÷ available drivers) and fit thresholds where pickup ETAs or cancellation rates spike—this is the tipping point for “too much demand.” Visualize heat-maps and alert ops when the ratio or ETA exceeds historical 95th percentile for consecutive intervals. Segment by time-of-day and event calendars to differentiate predictable peaks from anomalies.
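
The ratio-plus-percentile alert described above can be sketched as follows. The Python code assumes ratios are already computed per geohash and interval, derives the threshold as the 95th percentile of a historical window with statistics.quantiles, and fires only after three consecutive intervals above it; the run length and the sample data are hypothetical.

```python
import statistics


def demand_supply_ratio(requests: int, available_drivers: int) -> float:
    """Requests per available driver; guard against zero supply."""
    return requests / max(available_drivers, 1)


def high_demand_alert(history: list[float], recent: list[float], consecutive: int = 3) -> bool:
    """True if `recent` contains `consecutive` intervals in a row above the historical p95."""
    p95 = statistics.quantiles(history, n=100)[94]  # 99 cut points; index 94 is the 95th percentile
    run = 0
    for ratio in recent:
        run = run + 1 if ratio > p95 else 0
        if run >= consecutive:
            return True
    return False


# Hypothetical history for one geohash: ratios between 1.00 and 1.99
history = [1.0 + 0.01 * i for i in range(100)]
print(demand_supply_ratio(50, 20))                       # 2.5
print(high_demand_alert(history, [2.5, 2.6, 2.7]))       # True
print(high_demand_alert(history, [2.5, 1.0, 2.6, 2.7]))  # False: the run is broken
```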

How would you measure the frequency of incorrect pickup locations reported by Uber riders?

Join rider feedback tags with trip start-location deltas between user GPS pings and driver-recorded pickups to quantify discrepancy rates. Build a funnel: total trips → trips with “incorrect map pin” complaint → trips where GPS delta > X meters to filter noise. Slice by city, app version, and map-tile provider to surface systematic issues. Cross-validate with driver messages about “can’t find rider” to catch silent failures.
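
The GPS-delta filter in that funnel needs a distance between two latitude/longitude points. This Python sketch uses the haversine formula with a mean Earth radius of 6 371 km and a hypothetical 50-meter threshold in place of the unspecified X; the trip records and field names are illustrative, not an actual Uber schema.

```python
import math


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    r = 6_371_000.0  # mean Earth radius in meters
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def pickup_funnel(trips: list[dict], threshold_m: float = 50.0) -> dict:
    """Funnel: all trips -> trips with a wrong-pin complaint -> complaints with GPS delta > threshold."""
    complained = [t for t in trips if t["complaint"]]
    confirmed = [t for t in complained if haversine_m(*t["rider_gps"], *t["pickup"]) > threshold_m]
    return {
        "trips": len(trips),
        "complaints": len(complained),
        "confirmed": len(confirmed),
        "confirmed_rate": len(confirmed) / len(trips) if trips else 0.0,
    }


# Hypothetical trips: ~111 m and ~11 m deltas, plus one trip with no complaint
trips = [
    {"complaint": True, "rider_gps": (37.0, -122.0), "pickup": (37.001, -122.0)},
    {"complaint": True, "rider_gps": (37.0, -122.0), "pickup": (37.0001, -122.0)},
    {"complaint": False, "rider_gps": (37.0, -122.0), "pickup": (37.0, -122.0)},
]
print(pickup_funnel(trips))
```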

How would you choose the optimal Lyft cancellation fee when testing $1, $3, and $5 variants?

Design an A/B/C experiment randomizing riders (or regions) to each fee and track primary outcomes: cancellation rate, ride completion, and net revenue (fee + ride profit minus lost demand). Use elasticity curves to see if higher fees deter cancellations enough to offset potential ride loss. Apply CUPED or hierarchical Bayesian models to tighten variance since cancellations are sparse events. Pick the fee that maximizes long-run contribution margin while meeting rider-satisfaction guardrails such as CSAT or complaint rate.
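
Once the A/B/C readout is in, the selection rule itself is straightforward. This Python sketch picks the fee with the highest net contribution per randomized rider among variants that pass a complaint-rate guardrail; because riders are randomized, lost demand shows up as lower ride profit in the affected arm. The arm results and the guardrail level are hypothetical, and the sketch uses point estimates only, without the CUPED or Bayesian variance reduction mentioned above.

```python
def pick_fee(results: dict[int, dict], max_complaint_rate: float):
    """results: {fee: {"riders", "fee_revenue", "ride_profit", "complaints"}}.

    Returns the fee with the highest (fee revenue + ride profit) per rider among arms
    whose complaint rate is within the guardrail, or None if no arm passes.
    """
    best_fee, best_value = None, float("-inf")
    for fee, r in results.items():
        if r["complaints"] / r["riders"] > max_complaint_rate:
            continue  # guardrail breached: exclude this arm
        value = (r["fee_revenue"] + r["ride_profit"]) / r["riders"]
        if value > best_value:
            best_fee, best_value = fee, value
    return best_fee


# Hypothetical readout, 10,000 riders per arm
results = {
    1: {"riders": 10_000, "fee_revenue": 3_000, "ride_profit": 50_000, "complaints": 20},
    3: {"riders": 10_000, "fee_revenue": 7_000, "ride_profit": 49_000, "complaints": 30},
    5: {"riders": 10_000, "fee_revenue": 9_000, "ride_profit": 46_000, "complaints": 80},
}
print(pick_fee(results, max_complaint_rate=0.005))   # 3
print(pick_fee(results, max_complaint_rate=0.0025))  # 1
```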

Behavioral / Culture-Fit Questions

TikTok values bias for action, cross-cultural collaboration, and resilience. Using the STAR method, you’ll share stories of launching features under tight deadlines, influencing stakeholders in different regions, and adapting to fast-changing priorities. Interviewers look for evidence of “Always Day 1” mindset—learning rapidly, iterating on feedback, and owning outcomes end-to-end.

What would your current manager say about you? What constructive criticisms might they give?

At TikTok, PMs operate in a hyper-agile environment where speed and impact matter most. Frame strengths that highlight your ability to rapidly identify user pain points—perhaps you pioneered a new engagement metric or optimized a feature funnel under time pressure. For constructive feedback, be honest yet forward-looking, such as learning to incorporate cross-regional nuances earlier in your roadmap. This demonstrates self-awareness and a commitment to continuous improvement—qualities TikTok prizes in its product leaders.

Why did you apply to our company?

TikTok PMs don’t just manage features; they spark global trends and build community. Share how TikTok’s “Always Day 1” ethos and its recommendation algorithm inspire you to innovate at viral scale. Emphasize your passion for creating experiences that delight over one billion users and how your background in rapid experimentation and creative problem-solving aligns with TikTok’s mission to entertain and empower.

Success at TikTok is measured by impact and velocity. Describe a scenario where you delivered beyond scope under tight deadlines; perhaps you launched an A/B test series that boosted session time by a double-digit percentage or rolled out a creator-focused feature ahead of schedule. Highlight your bias for action, data-driven iterations, and how you mobilized cross-functional pods to achieve those outsized results.

Describe a time when you had to lead a team through a significant change or challenge. How did you manage stakeholder concerns and keep the team focused?

In TikTok’s fast-evolving landscape, priorities can pivot overnight. Share how you rallied engineering, design, and analytics partners during a major roadmap shift—such as deprioritizing a low-impact feature to accelerate a high-visibility initiative. Focus on transparent communication, collaborative decision-making, and maintaining morale, illustrating how you drove alignment without losing momentum.

Tell me about a data-driven insight you uncovered that directly influenced a product decision.

TikTok PMs must turn metrics into action at warp speed. Discuss how you spotted an unexpected user segment trend—maybe a spike in a new hashtag behavior—and proposed a feature tweak that capitalized on that insight. Outline the analysis methods (cohort breakdowns, funnel conversions), the recommendation you made, and the resulting uplift you tracked post-launch.

How do you ensure diversity and inclusion are considered in your product decisions and stakeholder collaborations?

Serving a global community means building features that resonate across cultures and contexts. Describe how you solicited feedback from diverse user groups—perhaps via localized user research or community forums—and adapted your feature design. Demonstrate how you champion accessibility and cultural sensitivity, reinforcing TikTok’s commitment to an inclusive platform.

Share a time when you had to influence a decision without formal authority. How did you build consensus?

TikTok’s agile squads thrive on persuasion over hierarchy. Explain how you used data narratives, prototype demos, or micro-experiments to win support for your proposal—maybe convincing a senior engineer to adopt a new performance metric or aligning marketing and product teams on a launch strategy. Highlight your empathy, storytelling skills, and how you secured buy-in to drive execution forward.

How to Prepare for a Product Manager Role at TikTok

A successful TikTok product manager interview hinges on demonstrating deep product intuition, data fluency, and clear storytelling. Preparation should mirror the role’s fast-paced, metrics-driven environment, blending framework mastery with real-world simulation. Below are five targeted strategies to set you up for success.

Deep-Dive on Short-Form Video KPIs

Become fluent in the key performance indicators that drive TikTok’s core experience—metrics like watch time distribution, completion rates, response rates to in-app prompts, and creator engagement growth. Practice interpreting these metrics in context: how would a dip in the 3-second view rate influence your roadmap, and what levers would you pull to optimize it? This depth of understanding shows you can not only define the right metrics but also triangulate root causes and propose data-backed improvements.

Practice Product-Sense Frameworks

Hone product ideation skills by applying structured frameworks—such as market-user-metrics-MVP—to common TikTok scenarios. For example, if tasked with improving video caption discoverability, walk through user segmentation, competitive analysis, desired outcomes, and a minimum-viable feature set. Articulate trade-offs between speed and scope, and always tie back to measurable impact. Consistent practice with these lenses will help you move from vague ideas to concrete plans under time constraints.

Run Mock Strategy Cases

Simulate the 45-minute strategy rounds by pairing with a peer or mentor. Choose TikTok-relevant topics—like expanding live-stream commerce to new markets—and time yourself through problem definition, hypothesis generation, and recommendation. Record or observe each other’s pacing, logical flow, and communication style to refine clarity and confidence. Iterative feedback loops mirror TikTok’s “Always Day 1” ethos and prepare you for rapid-fire questioning.

Build a STAR Story Bank

Curate a collection of Situation-Task-Action-Result narratives highlighting your impact, especially around global collaboration. Include examples of launching features across diverse regions, adapting to local content policies, or coordinating with engineering teams under tight deadlines. Ensure each story quantifies outcomes (e.g., “increased DAU by 12% in three weeks”) and emphasizes your bias for action and ownership—all traits TikTok prizes in its PMs.

Stay Current on TikTok Launches

Keep abreast of the latest TikTok feature rollouts—Shop expansions, Effect House updates, ad product launches—and think through their product implications. For each release, consider: Who are the target users? What metrics define success? How might you iterate on the launch based on early signals? Demonstrating this level of preparation signals genuine passion for the platform and an ability to hit the ground running.

Conclusion

Mastering the TikTok product manager interview process and drilling high-impact questions will give you the confidence and skills to thrive in TikTok’s “Always Day 1” culture. To deepen your expertise, explore our Product Metrics Learning Path for targeted analytics practice, draw inspiration from Asef Wafa’s success story, and hone your approach with a mock interview under realistic conditions.

If you’re branching into other TikTok roles, check out our Software Engineer guide or sharpen your analysis skills with the Data Analyst guide. Good luck—your next viral feature starts with exceptional preparation!