TikTok Machine Learning Engineer Interview Guide: Coding, System Design & ML Qs

Introduction

The TikTok machine learning engineer interview is your gateway to a position where you’ll design, implement, and scale the models that power TikTok’s addictive “For You” feed, real-time video filters, and ad targeting algorithms. In this guide, you’ll find an overview of what to expect, from coding and system design rounds to ML theory and product-driven case studies, so you can tailor your preparation to the role’s unique demands. TikTok’s recruitment emphasizes not only technical depth but also your ability to iterate quickly on data, collaborate across disciplines, and drive measurable user engagement. Whether you’re optimizing an edge-deployed neural network or tuning Bayesian CTR estimators, this interview loop will assess both your engineering rigor and your product intuition.

Role Overview & Culture

As a Machine Learning Engineer at TikTok, you’ll own end-to-end ML pipelines: collecting and labeling massive video and behavioral datasets, engineering robust real-time feature stores, and deploying low-latency models on cloud and edge. You’ll collaborate daily with data scientists to prototype new algorithms, software engineers to integrate models into production, and product managers to define success metrics and A/B test designs. TikTok’s “Always Day 1” culture drives rapid experimentation, meaning you’ll launch dozens of model variants each quarter, learn from live traffic signals, and pivot based on results. Autonomy comes with accountability: you’ll troubleshoot inference failures, ensure data quality, and continuously monitor model performance. In this fast-paced environment, clear communication and cross-team empathy are just as critical as your coding and mathematical skills, making the TikTok MLE interview a true test of both technical and collaborative excellence.

Why This Role at TikTok?

Joining TikTok as an MLE means influencing the experiences of over one billion global users by delivering cutting-edge machine learning solutions at unprecedented scale. You’ll tap into rich internal research budgets, leverage specialized hardware accelerators, and collaborate with world-class AI talent to push the boundaries of on-device inference and recommendation systems. Competitive compensation packages and accelerated career paths recognize both your technical contributions and leadership growth. If you’re eager to tackle production challenges, like optimizing memory-constrained models for mobile or architecting fault-tolerant streaming pipelines, you’ll need to master the TikTok machine learning engineer interview process described in the sections that follow.

Engineer

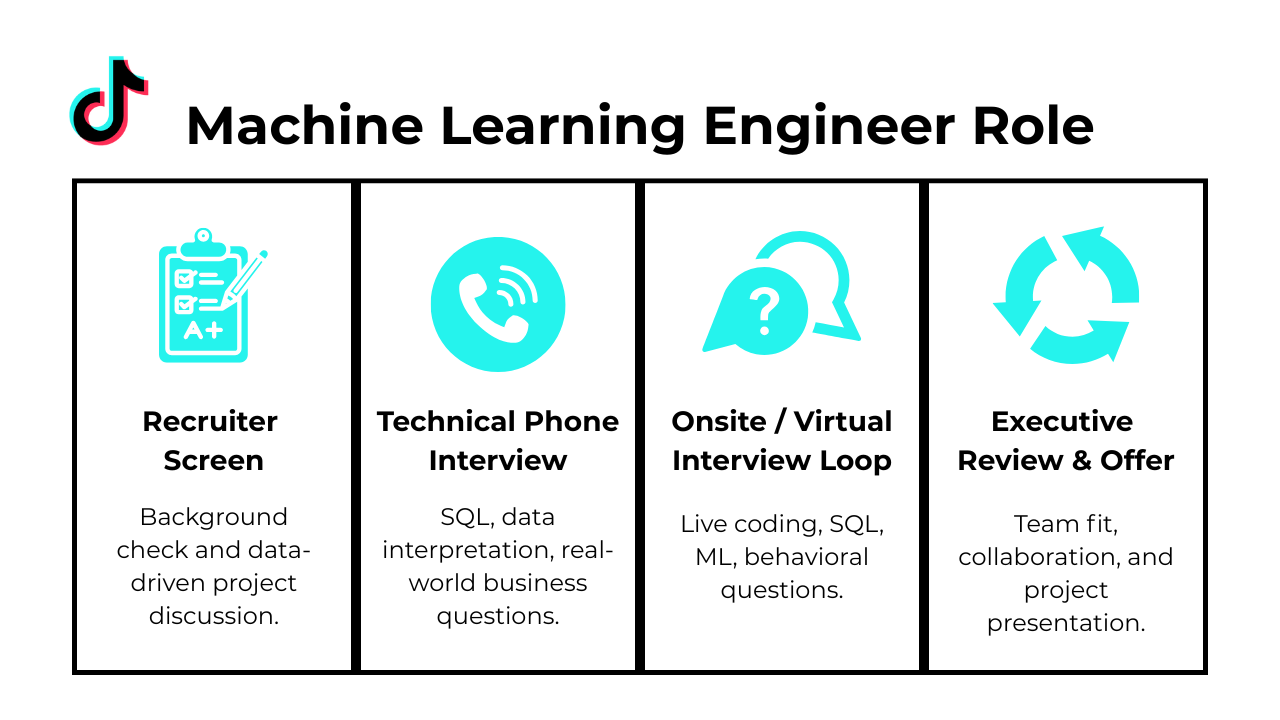

The TikTok machine learning engineer interview follows a structured, fast-moving sequence designed to evaluate both your coding prowess and ML expertise. Here’s how it typically unfolds:

Application & Recruiter Screen

You’ll submit your résumé and have an initial call with a recruiter to confirm fit, discuss your background, and gauge your interest in TikTok’s “Always Day 1” engineering culture.

Technical Online Assessment

This stage combines LeetCode-style coding problems with an ML quiz covering fundamentals like model evaluation metrics, feature engineering, and basic system design, essentially previewing the key rounds of the TikTok MLE interview.

Onsite/Loop Interviews

In a series of deep-dive sessions, you’ll tackle:

- Coding: Data structures and algorithms under timed conditions.

- ML System Design: Architecting scalable pipelines, real-time inference systems, and trade-offs between accuracy, latency, and resource constraints.

- Behavioral: STAR-based questions focusing on collaboration, ownership, and rapid iteration in ambiguous settings.

Hiring Committee & Offer

Feedback from all interviewers is consolidated and presented to a cross-functional committee. Upon approval, you receive an offer detailing compensation, RSUs, and next steps—completing the process.

What Questions Are Asked in a TikTok Machine Learning Engineer Interview?

When preparing for TikTok machine learning engineer interview questions, you’ll face a mix of coding, system design, and behavioral prompts that reflect the role’s multidisciplinary demands. TikTok seeks engineers who can write efficient algorithms, architect scalable ML systems, and collaborate effectively under its “Always Day 1” ethos. Below is an overview of the core question categories.

Coding / Technical Questions

Expect to solve algorithmic challenges—such as implementing gradient descent from scratch or optimizing joins in a feature store—under time constraints. These problems test your mastery of data structures, numerical methods, and system-level performance considerations. Interviewers look for clean, well-documented code and the ability to reason about time and space complexity, as well as edge cases relevant to video and user-behavior datasets.

-

The solution joins `employees` to `departments`, groups by department, filters groups that meet the ten-employee threshold, and uses conditional aggregation to compute `SUM(CASE WHEN salary > 100000 THEN 1 END) / COUNT(*)`. Applying `RANK()` or `DENSE_RANK()` over that percentage and limiting to three returns the final list. Good answers mention how ties are handled and why a composite index on `(department_id, salary)` speeds the scan. Edge-case talk (e.g., contractors without departments) shows mature data awareness.

-

You isolate the most-recent row per employee with `ROW_NUMBER() OVER (PARTITION BY employee_id ORDER BY salary_effective_date DESC)` (or by the highest auto-increment `id`), then filter on `row_number = 1`. Returning the surviving rows restores a one-to-one view of employees to salaries without touching historical data. Mentioning an audit of the ETL and adding a unique constraint for future loads elevates the answer. A covering index on `(employee_id, salary_effective_date DESC)` keeps the query fast.

-

The canonical approach uses recursive backtracking that decides, for each element in turn, whether to include it, accumulates a running total, and prunes a branch as soon as the partial sum exceeds N. Sorting the input first lets you skip repeated values efficiently, which keeps duplicate combinations out of the list of lists that holds the results. For very large lists, a dynamic-programming table keyed by `(index, remaining)` lowers repeated work at the cost of memory. Clear complexity analysis (O(2^n) worst-case) shows you understand trade-offs that arise in exhaustive search problems.

How would you select a uniformly random element from an infinite data stream using only O(1) memory?

Implement reservoir sampling: keep the first item as the provisional choice, then for the k-th incoming element replace the stored value with probability `1/k`. This guarantees every item seen so far is equally likely to be selected without storing the stream. Explaining the inductive proof of uniformity and how to integrate a cryptographically secure RNG demonstrates rigor. The idea is widely used in telemetry pipelines where full retention is infeasible.

How could you simulate drawing balls from a jar when you know each color’s count?

Convert the count list into cumulative weights, draw a random float in `[0, total)`, and binary-search the cumulative array to pick a color with correct probability. Updating counts after each draw (and recomputing the total) models sampling without replacement; leaving counts intact models sampling with replacement. The exercise mirrors weighted randomization tasks in recommender systems and A/B bucketing. Highlighting the impact of large counts on numerical precision adds depth.

-

You must iterate over every feature permutation to create deterministic decision trees that split on the query vector’s value for that feature, then aggregate their majority vote. Using only NumPy and pandas forces manual implementation of tree traversal, bootstrapping, and ensemble voting logic. The question evaluates algorithmic fluency, coding discipline, and understanding of bias-variance trade-offs without relying on scikit-learn shortcuts. Discussing run-time (exponential in features) and ways to limit permutations shows practical realism.
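Going back to the streaming question earlier in this list, the reservoir sampling answer can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function name `reservoir_sample` and the optional `rng` parameter are assumptions made for the example, and it uses Python's standard `random` module rather than a cryptographically secure generator.

```python
import random
from typing import Iterable, Optional, TypeVar

T = TypeVar("T")


def reservoir_sample(stream: Iterable[T],
                     rng: Optional[random.Random] = None) -> Optional[T]:
    """Return one uniformly random item from `stream` in O(1) extra memory.

    Returns None for an empty stream. `rng` is a hypothetical hook that lets
    callers pass a seeded generator for reproducible tests.
    """
    rng = rng or random.Random()
    chosen: Optional[T] = None
    for k, item in enumerate(stream, start=1):
        # randrange(k) is 0 with probability 1/k, so the k-th item replaces
        # the current choice with exactly that probability. For k == 1 the
        # first item is always kept.
        if rng.randrange(k) == 0:
            chosen = item
    return chosen
```

Because only `chosen` and the counter `k` are kept, memory use stays constant no matter how long the stream runs. For a truly unbounded stream you would read `chosen` at whatever point a sample is needed rather than waiting for the loop to end.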

-

You’re given a 2D array where each cell contains one of ‘N’, ‘E’, ‘S’, or ‘W’, indicating which door in that room is unlocked. Your goal is to traverse from the top-left cell to the bottom-right cell in the fewest moves, or return –1 if no valid path exists. A breadth-first search (BFS) that enqueues neighboring cells according to each cell’s unlocked door and tracks visited positions naturally finds the shortest route. Interviewers will look for correct boundary checks, cycle detection using a visited set, and clear handling of the no-path case. Discussing the O(m × n) time and space complexity on an m×n grid shows you appreciate scalability concerns.
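The BFS described above can be sketched as follows. This is one possible reading of the problem, under the assumption that 'N' means moving up one row, 'S' down one row, 'E' right one column, and 'W' left one column; the function name `min_moves` is made up for the example.

```python
from collections import deque
from typing import List

# Assumed mapping from door letter to (row, column) offset.
MOVES = {"N": (-1, 0), "S": (1, 0), "E": (0, 1), "W": (0, -1)}


def min_moves(grid: List[List[str]]) -> int:
    """Fewest moves from top-left to bottom-right, or -1 if unreachable."""
    if not grid or not grid[0]:
        return -1
    rows, cols = len(grid), len(grid[0])
    target = (rows - 1, cols - 1)
    queue = deque([(0, 0, 0)])  # (row, col, moves taken so far)
    visited = {(0, 0)}
    while queue:
        r, c, steps = queue.popleft()
        if (r, c) == target:
            return steps
        # Each room has exactly one unlocked door, so one candidate neighbor.
        dr, dc = MOVES[grid[r][c]]
        nr, nc = r + dr, c + dc
        # Boundary check plus visited check (the latter breaks cycles).
        if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in visited:
            visited.add((nr, nc))
            queue.append((nr, nc, steps + 1))
    return -1  # queue drained without reaching the target
```

With a single unlocked door per room the search follows one path, but keeping the queue-based BFS shape means the same code still works if the problem is extended to rooms with several unlocked doors.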

-

Given a list of integers, determine its minimum and maximum, split that range into equal-width bins, and count values per bin, omitting any bin with zero count. You can compute the bin index for each value in O(1) time and accumulate counts in a dictionary keyed by bin boundaries or indices. Edge cases like a zero-range dataset (all values equal) should map to a single bin. Interviewers expect you to handle non-integer boundaries and explain why your approach runs in O(n) time with O(b) space, where b is the number of bins. Highlighting how this method supports quick exploratory data analysis without heavy dependencies demonstrates practical coding judgment.
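A minimal sketch of that binning logic, assuming the number of bins is passed in by the caller and that the maximum value belongs to the last bin; the name `bin_counts` and the choice to key the result by `(lower, upper)` boundaries are illustrative, not part of the original prompt.

```python
from typing import Dict, List, Tuple


def bin_counts(values: List[int],
               num_bins: int) -> Dict[Tuple[float, float], int]:
    """Count values per equal-width bin, omitting bins with zero count."""
    if not values or num_bins < 1:
        return {}
    lo, hi = min(values), max(values)
    if lo == hi:
        # Zero-range dataset: everything maps to a single bin.
        return {(float(lo), float(hi)): len(values)}
    width = (hi - lo) / num_bins
    counts: Dict[int, int] = {}
    for v in values:
        # O(1) bin index; clamp so the maximum lands in the last bin.
        idx = min(int((v - lo) / width), num_bins - 1)
        counts[idx] = counts.get(idx, 0) + 1
    # Only bins that received a value appear in `counts`, so empty bins
    # are omitted automatically.
    return {
        (lo + i * width, lo + (i + 1) * width): n
        for i, n in sorted(counts.items())
    }
```

The single pass gives O(n) time, and the dictionary holds at most one entry per non-empty bin. Values that sit exactly on a non-integer boundary are subject to floating-point rounding, which is worth mentioning if the interviewer probes edge cases.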

-

The algorithm iteratively assigns each of the n data points to the nearest of k centroids—measuring distance in m-dimensional space—then recomputes each centroid as the mean of its assigned points until convergence. You’ll need to vectorize distance calculations with NumPy for performance and handle empty clusters by reinitializing or skipping them. Returning a cluster label list of length n requires careful indexing and ensuring deterministic behavior across runs. Interviewers evaluate both correctness (convergence criteria, max iterations) and efficiency (minimizing Python loops, using array operations). Discussing the overall complexity O(i × k × n × m) and memory trade-offs shows you can scale clustering to real-world datasets.
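The loop described above might look like the following NumPy sketch. Several choices here are assumptions rather than requirements of the question: centroids are initialized from k distinct data points, a fixed `seed` provides determinism, an empty cluster simply keeps its previous centroid, and convergence means the labels stopped changing.

```python
import numpy as np


def kmeans(points: np.ndarray, k: int,
           max_iters: int = 100, seed: int = 0) -> list:
    """Lloyd's algorithm; returns a cluster label for each of the n points."""
    rng = np.random.default_rng(seed)
    # Assumed initialization: pick k distinct data points as centroids.
    start = rng.choice(len(points), size=k, replace=False)
    centroids = points[start].astype(float)
    labels = np.full(len(points), -1)
    for _ in range(max_iters):
        # (n, k) matrix of squared distances, computed by broadcasting
        # (n, 1, m) against (1, k, m) and summing over the m dimensions.
        diffs = points[:, None, :] - centroids[None, :, :]
        dists = (diffs ** 2).sum(axis=2)
        new_labels = dists.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break  # assignments stopped changing: converged
        labels = new_labels
        for j in range(k):
            members = points[labels == j]
            if len(members):  # empty cluster: keep the old centroid
                centroids[j] = members.mean(axis=0)
    return labels.tolist()
```

The only Python-level loops are over iterations and over the k centroids; the per-point distance work is done in array operations. The broadcasted `diffs` array takes O(n × k × m) memory, which is the trade-off to raise when n is large.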

System / Product Design Questions

You’ll be asked to architect end-to-end ML pipelines, for example designing a real-time content-ranking system that balances freshness, relevance, and latency. When framing your solution, tie it back to how machine learning is used at TikTok to personalize feeds at scale, safeguard against inappropriate content, and support live features. Strong answers discuss data ingestion, feature extraction, model serving, monitoring, and failure-handling strategies.

How would you design the video recommendation algorithm?

You’d start by defining your objective—maximizing watch time, engagement, or retention—and selecting appropriate offline and online metrics. A hybrid approach combining collaborative filtering (user–video interaction graphs), content-based features (video metadata, embeddings), and real-time signals (recent views, search queries) often works best. Scalability is critical: you might pre-compute candidate sets via batch jobs, then serve personalized reranks via a lightweight real-time model. Don’t forget diversity and freshness to avoid filter bubbles, and guardrails for safe content. Finally, A/B test new ranking features with clear metrics and monitor for feedback loops that could bias the system.

How would you build the recommendation algorithm for type-ahead search?

Type-ahead needs sub-100ms responses, so you’d likely maintain a prefix index (e.g., trie or n-gram inverted index) of titles and search terms in memory or via a low-latency store like Redis or Elasticsearch. Initial ranking by global popularity or weighted with user-history signals (recently watched genres) gives a coarse candidate list. A second-stage ML model can rerank these suggestions using contextual features (device type, locale, time of day). Caching common prefixes and pre-warming popular queries reduces load. Finally, monitor suggestions for relevance drift and run continual offline evaluation to adapt to new titles.
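To make the first stage concrete, here is a small in-memory sketch of a prefix index ranked by global popularity. It covers only the trie lookup and the popularity ordering; the class names `TrieNode` and `TypeAhead`, the `count` field, and the tie-break by alphabetical order are assumptions for illustration, and the ML reranker, caching, and personalization layers are left out.

```python
from typing import Dict, List, Tuple


class TrieNode:
    def __init__(self) -> None:
        self.children: Dict[str, "TrieNode"] = {}
        self.count = 0  # popularity of the term ending here (0 = no term)


class TypeAhead:
    """Prefix index returning the most popular completions for a prefix."""

    def __init__(self) -> None:
        self.root = TrieNode()

    def add(self, term: str, count: int = 1) -> None:
        node = self.root
        for ch in term.lower():
            node = node.children.setdefault(ch, TrieNode())
        node.count += count

    def suggest(self, prefix: str, limit: int = 5) -> List[str]:
        # Walk down to the node that represents the prefix.
        node = self.root
        for ch in prefix.lower():
            if ch not in node.children:
                return []
            node = node.children[ch]
        # Depth-first collection of every term stored under that node.
        found: List[Tuple[int, str]] = []
        stack = [(node, prefix.lower())]
        while stack:
            cur, text = stack.pop()
            if cur.count:
                found.append((cur.count, text))
            for ch, child in cur.children.items():
                stack.append((child, text + ch))
        # Highest popularity first, alphabetical order as the tie-break.
        found.sort(key=lambda pair: (-pair[0], pair[1]))
        return [text for _, text in found[:limit]]
```

Scanning the whole subtree on every keystroke would be too slow for very short prefixes in a large index, so a production design would typically store a precomputed top-k list at each node or cache results for popular prefixes, as the answer above suggests.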

-

First, define “optimal” via historical engagement data—moments with scene changes, natural pauses, or when drop-off risk is low. Extract features from the video stream: audio energy, scene boundary timestamps, speech vs. silence, on-screen context, and viewer behavior (e.g., rewind or skip events). Train a supervised model (e.g., gradient boosted trees or a small neural net) to predict break-suitability scores at regular intervals. Inference must run in real time or pre-compute scores per video segment offline. Finally, validate with A/B experiments measuring ad completion rates and post-break engagement uplift.

-

Architect a modular ETL pipeline: separate connectors for Reddit (JSON posts/comments) and Bloomberg (CSV or JSON price feeds) with retry and rate-limit handling. Use a scheduler (e.g., Airflow) to orchestrate daily or hourly data pulls. Transform raw data into standardized schemas—tokenize text, compute sentiment scores, align timestamps, calculate daily returns—and store in a feature store or data warehouse. Apply validation checks and anomaly detection (e.g., missing fields, API schema changes) to trigger alerts. Provide downstream teams with easy access via well-documented tables or APIs and maintain lineage for auditability.

How would you create a recommendation engine for a rental listings website?

Begin with a content-based layer: encode each listing’s metadata (price, location, amenities, tags) and user profiles (demographics, saved searches) into embeddings. Overlay collaborative filtering from user interaction graphs (views, favorites, inquiries) to capture latent preferences. Train a ranking model that fuses these signals, optimizing for click-through or booking rates. Address cold-start with popularity priors or item similarities. Deploy real-time inference behind a low-latency service, then iterate via online experiments and incorporate feedback loops when users engage or convert.

-

Model a `cards` table (card_id PK, suit, rank) and a `player_hands` table (hand_id PK, player_id, card_id FK) with one row per dealt card. To evaluate hand strength, maintain a `hand_rankings` lookup table mapping sorted 5-card combinations to a numeric score. To find each player’s best five-card hand, join their nine cards to `hand_rankings`, select the max score per player, then compare across players. A final `ORDER BY score DESC LIMIT 1` yields the winner. Indexes on `(player_id, card_id)` and efficient joins ensure this runs quickly even with many concurrent games.

Behavioral / Culture-Fit Questions

TikTok values bias for action, ownership, and rapid iteration. Through STAR-format prompts, you’ll illustrate experiences where you shipped ML models under tight deadlines, coordinated across data science and engineering teams, or responded to unexpected production issues. Emphasize how you learn from failures, adapt based on user feedback, and maintain alignment with TikTok’s dynamic product priorities.

Tell me about a time when you exceeded expectations during a project. What did you do, and how did you accomplish it?

At TikTok, MLEs are judged on both model impact and deployment velocity. Illustrate how you went beyond a baseline metric boost—perhaps you tuned a recommendation model that not only improved click-through rate by 15% but also halved inference latency for live video filters. Walk through how you identified the opportunity (data analysis or user feedback), rapidly prototyped improvements, and rallied cross-functional partners to integrate the change in production. Emphasize your bias for action, ownership of end-to-end implementation, and how your work accelerated business or engagement outcomes.

-

TikTok’s fast-paced environment often surfaces competing demands between research depth and business deadlines. Describe how you assessed trade-offs—quantifying potential gains from a complex model tune versus the immediate value of a lightweight feature fix. Explain how you communicated transparently with product and analytics teams to set realistic timelines, perhaps delivering an interim solution first while completing the deeper model work. Highlight your framework for prioritization (impact, effort, risk) and how you maintained stakeholder trust and momentum.

Describe a time when a production ML model’s performance unexpectedly degraded. How did you detect the issue, diagnose the cause, and restore service quality?

In real-time systems like TikTok’s feed ranking, performance drift can directly impact user engagement. Detail how you set up monitoring alerts (e.g., sudden CTR drops), performed drift analysis on input features, and isolated a data pipeline change or schema shift as the root cause. Walk through your mitigation steps—rolling back the offending commit, retraining the model with corrected data, and implementing automated checks to prevent recurrence—demonstrating your commitment to reliability and rapid incident response.

Tell me about a time you championed privacy-preserving or ethical ML practices under tight product deadlines.

TikTok places a premium on user privacy and responsible AI. Share how you introduced techniques like differential privacy, on-device processing, or anonymized feature representations when stakeholders pushed for more granular targeting. Explain how you built a business case—showing negligible performance trade-offs alongside compliance benefits—and guided the team through integrating these measures, ensuring that product velocity never compromised user trust or platform integrity.

Share an example of collaborating with product managers and designers to define measurable success metrics for an ML feature launch.

Effective ML deployments at TikTok hinge on clear metrics. Describe how you worked with PMs to align on primary KPIs (e.g., watch time uplift) and guardrail metrics (e.g., algorithmic fairness, session start latency). Highlight your role in translating model outputs into dashboards, running initial A/B tests, and iterating on metric definitions based on early user feedback, showcasing your ability to bridge technical and business perspectives.

Describe how you mentored or onboarded a junior engineer on a complex ML system.

Investing in team growth amplifies impact at TikTok. Explain how you structured knowledge transfer—through one-on-one code walkthroughs, pairing sessions on feature store integration, and documentation of model training pipelines. Share how this mentorship accelerated their ramp-up, improved overall code quality, and fostered a collaborative culture of continuous learning and shared ownership.

How to Prepare for a Machine Learning Engineer Role at TikTok

Landing a TikTok MLE role requires more than just strong coding skills—it demands in-depth knowledge of large‐scale ML systems, rapid experimentation, and seamless collaboration across global teams. Your preparation should mirror TikTok’s emphasis on end-to-end ownership, low-latency inference, and data-driven iteration. Below are five focused strategies to help you excel in every stage of the interview loop.

Master TikTok’s ML Stack

Familiarize yourself with the core technologies powering TikTok’s pipelines: TensorFlow or PyTorch for model development, Apache Flink or Apache Beam for streaming feature extraction, and Kubernetes for scalable serving. Build small end-to-end projects that ingest streaming data, train a model, and deploy it via a RESTful API. Demonstrating hands-on proficiency with these tools reflects your readiness to work with TikTok’s production environments.

Simulate the Online Assessment

Time yourself on LeetCode Medium-level algorithm problems and combine that with targeted ML quizzes on model evaluation, feature engineering, and A/B test design. This practice mirrors the TikTok MLE interview online assessment and builds confidence under timed conditions. Review both correct solutions and common pitfalls to sharpen your ability to explain trade-offs clearly during live sessions.

Practice System Design for ML

Design mock architectures that cover the full ML lifecycle: data ingestion, offline training pipelines, feature store management, real-time inference, and monitoring. Consider challenges like model versioning, drift detection, and schema evolution. Articulate how you’d ensure consistency between batch and online features, and how you’d optimize for both latency and throughput at TikTok scale.

Build a STAR Story Bank

Curate concise Situation–Task–Action–Result stories highlighting your ML impact—such as reducing model latency by 50%, doubling prediction accuracy, or automating retraining triggers. Emphasize collaboration with cross-functional partners (data scientists, SREs, product) and your ability to iterate quickly based on live user metrics. Having these narratives at your fingertips ensures you can confidently address behavioral rounds.

Peer Mock Interviews

Check out Interview Query’s mock interview service to simulate full TikTok MLE loops—including coding, system design, and behavioral rounds. You’ll receive actionable feedback on your problem-solving approach, communication clarity, and cultural fit. This rehearsal not only refines your answers but also acclimates you to TikTok’s fast-paced interview style, making the actual loop feel like a familiar simulation.

FAQs

Are There Job Postings for TikTok Machine Learning Engineer Roles on Interview Query?

You can always browse our job board for the latest MLE openings and get insider insights into each role.

Conclusion

Conquering the TikTok machine learning engineer interview process and practicing high-impact TikTok machine learning engineer interview questions will give you the confidence and skills to excel in TikTok’s data-driven, “Always Day 1” environment. For targeted learning, dive into our Modeling & Machine Learning Learning Path and simulate full loops with IQ’s mock interview service.

If you’re exploring other TikTok technical roles, check out our Data Analyst guide or Data Engineer guide for equally comprehensive prep. And don’t just take our word for it—see how candidates like Jeffrey Li transformed their careers by mastering these processes in our success stories. Good luck!