Shopify Data Engineer Interview Questions + Guide in 2025

Introduction

Shopify powers millions of merchants and processes billions in annual GMV, which means every query, job, and event you ship can influence real revenue at a global scale. If you are aiming for a Shopify data engineer role, this guide gives you a precise roadmap to prepare with confidence. You will see how the interview process works end to end, what skills the team screens for, and the exact types of coding, architecture, and behavioral questions that come up.

We break down pair-programming expectations, the system design depth by level, and the life-story round so you know how to frame your impact. You will also get targeted practice areas, sample answers, and checklist-style tips for each stage. By the end, you will know how to present clean SQL and Python, narrate trade-offs in modern data platforms, and connect every technical decision to merchant outcomes.

Use this as your prep plan: what to study, how to practice under time pressure, and how to tell stories that show ownership, reliability, and cost awareness in a data platform that ingests real-time events at petabyte scale.

Role overview and culture

A Shopify data engineer works at the intersection of data infrastructure and merchant experience by building scalable pipelines, maintaining data lakes, and ensuring business teams have fast and reliable access to insights. You will own ETL development, design event-driven systems, and optimize data flow across services in a digital-by-default, fully remote environment.

Engineers operate in autonomous squads and are empowered to ship quickly while solving real-world problems. Teams thrive on impact and consistently align data efforts with Shopify’s core value of helping merchants succeed.

Why this role at Shopify?

Data engineers at Shopify sit on the critical path between raw signals and merchant value. You will design and run pipelines that feed product decisions, risk systems, payments, and growth analytics. The stack spans streaming transports like Kafka, warehouse and lakehouse layers such as Snowflake and object storage, transformation frameworks like dbt, and service integrations that touch Ruby and Python services. The impact is immediate and measurable because the platform powers storefronts, checkout, and post-purchase flows used by merchants every day.

Growth potential is strong. Shopify supports parallel tracks for individual contributors and managers, with staff and principal IC roles that emphasize architecture leadership, reliability, and developer experience. Engineers commonly rotate across product areas such as Checkout, Shop Pay, Markets, and Capital to broaden domain depth. If you favor people leadership, you can move into tech lead and engineering management roles that own program health, on-call quality, and cross-team platform strategy. If you prefer the deep IC path, you can lead design reviews, own cost and performance roadmaps, and mentor teams on data quality and contract governance.

Under this dual-track model, senior ICs drive architecture and reliability at scale while product-aligned managers orchestrate cross-functional outcomes. You can build a career that alternates between platform stewardship and product-embedded impact without losing seniority. That flexibility, together with equity, global pay parity, and the chance to influence merchant-facing experiences, makes this a compelling place to grow as a data engineer.

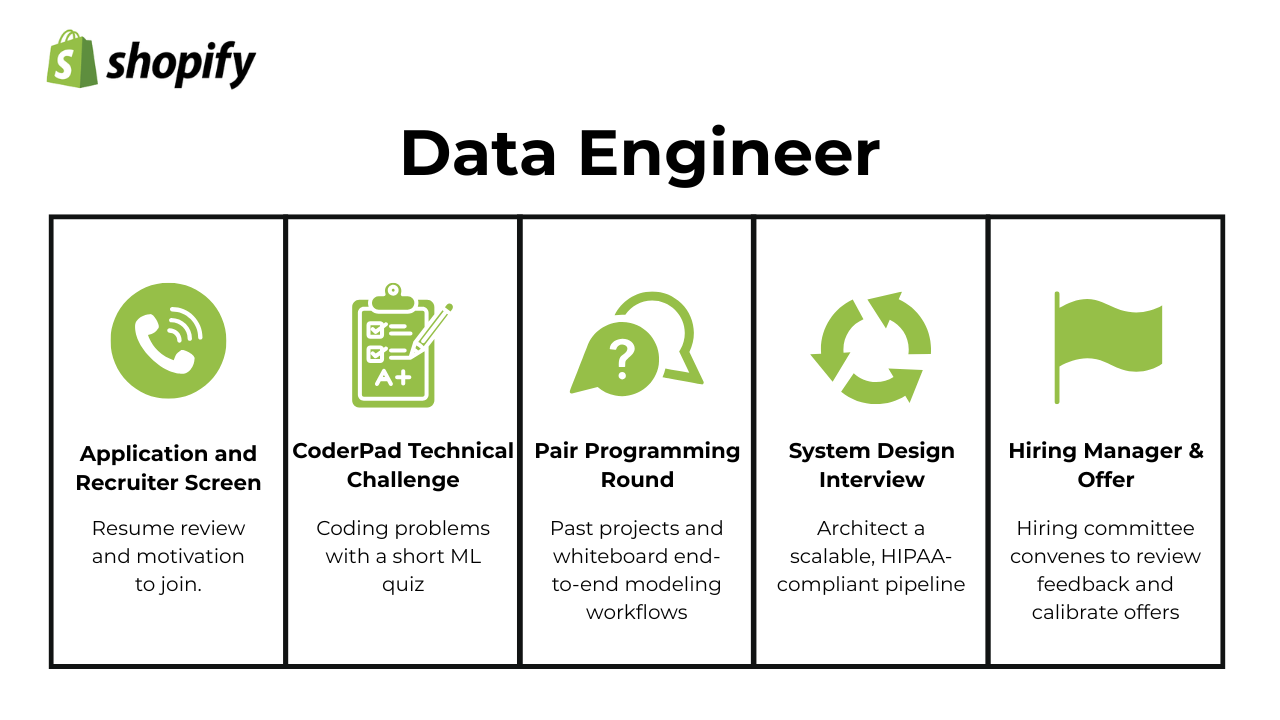

What is the interview process like for a data engineer role at Shopify?

Shopify’s data engineer interview process measures three things above all: technical mastery, structured thinking, and a sense of ownership. The company looks for engineers who can design scalable data systems, communicate clearly, and tie every technical decision back to merchant impact. The entire process usually takes three to five weeks from initial contact to final offer, depending on scheduling and role level.

Application and recruiter screen

The process begins with a short, 30-minute recruiter call that introduces you to the role and team. Recruiters use this stage to gauge your motivation for joining Shopify and your experience building data solutions that drive business outcomes. They look for signs of strong communication, curiosity, and familiarity with modern tools like Kafka, Snowflake, and dbt.

An ideal candidate connects their experience to merchant value, explaining how they solved real data problems such as improving data freshness or streamlining ETL efficiency. Clear articulation of how your work influenced decisions or metrics leaves a strong first impression.

Tip: Use one or two well-chosen examples that show both technical contribution and collaboration. Ask one thoughtful question about team priorities to show genuine engagement.

Technical challenge (SQL or Python)

The technical challenge assesses how you write, test, and reason about data transformations. Tasks usually include cleaning datasets, aggregating metrics, or writing logic for event tracking. Shopify wants to see logical structure, maintainable code, and sound problem-solving under time constraints.

Ideal candidates show they can translate messy business problems into clean, efficient code. They write readable queries, validate results, and explain their reasoning clearly in short notes or comments.

Tip: Write production-ready code even for small tasks. Handle nulls, edge cases, and time zone differences, then summarize your reasoning at the top of your script. Clarity matters as much as correctness.

Pair-programming or debugging session

Next comes a live session with an engineer where you solve a small but realistic data problem together. This could involve debugging a slow job, optimizing a SQL query, or improving pipeline reliability. Interviewers evaluate how you approach problems, communicate your logic, and respond to feedback in real time.

Shopify values calm, collaborative problem solvers who can explain what they are doing as they go. The ideal candidate walks through their thought process, tests small pieces of logic, and adjusts based on new information.

Tip: Talk through each step before typing. Verbalizing your approach helps the interviewer follow your reasoning and mirrors how Shopify teams collaborate remotely.

System and data architecture interview

This round focuses on your ability to design scalable, reliable systems. You will receive a design prompt such as “Build an ingestion pipeline for real-time orders” or “Design a data lake for analytics and experimentation.” Interviewers look for clear structure, justified trade-offs, and awareness of cost, latency, and data quality.

An ideal candidate organizes their response into layers: ingestion, storage, transformation, and monitoring. They explain why each component exists and how it interacts with the rest of the stack. The best responses show both technical depth and judgment — not overengineering, but choosing what fits Shopify’s scale and merchant needs.

Tip: Start by defining the business goal before jumping into architecture. Walk through each layer logically and explain how you would test and monitor it once deployed.

Life story behavioral interview

Shopify’s behavioral interview explores how you think, adapt, and grow. It is called a “life story” because it focuses on decision points rather than a chronological resume. Interviewers look for ownership, self-awareness, and evidence of growth through real experiences.

An ideal candidate describes a few moments that shaped their approach to engineering — for example, leading a complex migration, managing an incident, or mentoring teammates. They discuss challenges honestly and connect lessons learned to current practices.

Tip: Prepare three concise stories: one that shows impact, one that shows resilience, and one that shows learning. Use the STAR format and focus on results that improved reliability, performance, or team effectiveness.

Offer and compensation review

Candidates who pass all rounds move to the offer stage, typically within two to three business days of the final interview. You will receive a verbal offer followed by a written package outlining level, base pay, equity, and benefits. The recruiter may also walk you through Shopify’s leveling framework and total rewards philosophy, which includes equity, flexibility, and global pay parity.

This stage is your chance to clarify expectations and negotiate professionally. Ask about growth paths, RSU vesting, and performance cycles before finalizing. Shopify values transparency and will explain how salary bands correspond to your level.

Negotiation tips:

- Express enthusiasm before discussing numbers.

- Research market benchmarks through sites like Levels.fyi or Glassdoor to anchor your ask.

- Highlight the scope of your prior responsibilities rather than comparing company names.

- Ask about non-cash components such as equity, signing bonuses, or relocation support.

- Always confirm final details in writing before resigning from your current employer.

Tip: End on a collaborative note by asking what success looks like in your first 90 days. This reinforces your commitment to contributing immediately.

What questions are asked in a Shopify data engineer interview?

Shopify’s interview process is designed to assess real-world problem-solving—not just textbook answers. Below are the types of questions you can expect at each stage.

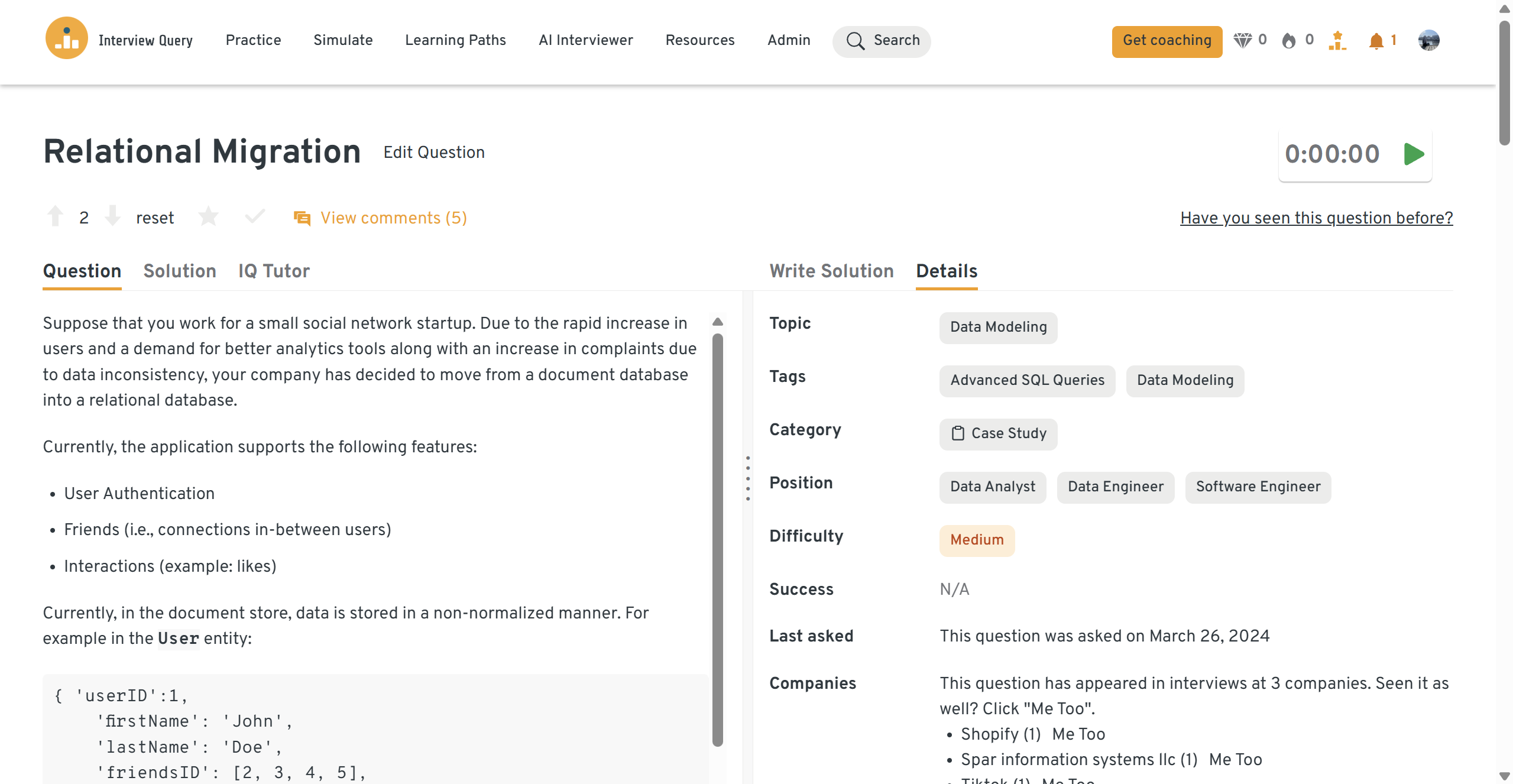

Coding/technical questions

Expect SQL and Python questions involving joins, date logic, and edge-case cleanup. Some problems simulate Shopify-specific contexts like calculating retention metrics or processing event logs in batch. One Shopify data engineer interview example might ask you to refactor a pipeline that computes daily GMV with messy timestamp data.

Another scenario may involve diagnosing why an A/B experiment’s conversion metric appears inflated, blending data skills with analytical reasoning.
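For the daily GMV example above, a minimal Python sketch of the timestamp cleanup might look like the following. The field names `created_at` and `total` are hypothetical, and two assumptions are baked in: naive timestamps are treated as UTC, and the reporting day is the UTC calendar day. In an interview, state both out loud and confirm them.

```python
from collections import defaultdict
from datetime import datetime, timezone
from decimal import Decimal


def daily_gmv(orders):
    """Sum order totals per UTC calendar day.

    `orders` is an iterable of dicts with hypothetical keys
    `created_at` (ISO 8601 string) and `total` (string or number).
    """
    totals = defaultdict(Decimal)
    for order in orders:
        raw_ts, raw_total = order.get("created_at"), order.get("total")
        if not raw_ts or raw_total is None:
            continue  # skip rows missing a timestamp or an amount
        ts = datetime.fromisoformat(raw_ts)
        if ts.tzinfo is None:
            # Assumption: naive timestamps were recorded in UTC.
            ts = ts.replace(tzinfo=timezone.utc)
        day = ts.astimezone(timezone.utc).date()
        # Decimal avoids float rounding drift when summing money.
        totals[day] += Decimal(str(raw_total))
    return dict(totals)
```

In a real pipeline you would likely count or quarantine the skipped rows rather than drop them silently, so the data loss stays visible.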

-

This question probes large-scale market-basket reasoning: generate product pairs per user/order, dedupe pairs within the same order, aggregate to pair frequencies, then rank. A practical approach is a self-join on order items scoped by order (and implicitly by user via the orders table): normalize pair naming so p2 is alphabetically first, deduplicate within each order to avoid double-counts, aggregate, and return the top five. For scale, call out pre-aggregating order→product lists (then exploding to pairs), approximate counts for >1B rows, and sort/distribution keys on the fact table.
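The same pair-counting logic can be sketched in plain Python, which is handy for checking a SQL answer on a small sample. The input shape `(order_id, product_name)` is an assumption, and this sketch places the alphabetically first name in the first slot of each pair; which column holds it is a convention to confirm against the prompt.

```python
from collections import Counter
from itertools import combinations


def top_pairs(order_items, k=5):
    """Return the k most frequent product pairs.

    `order_items` is an iterable of (order_id, product_name) rows.
    """
    products_by_order = {}
    for order_id, product in order_items:
        # A set dedupes repeated products within the same order.
        products_by_order.setdefault(order_id, set()).add(product)

    counts = Counter()
    for products in products_by_order.values():
        # Sorting fixes one ordering per pair, so (a, b) and (b, a) collapse.
        counts.update(combinations(sorted(products), 2))

    # Rank by frequency, breaking ties by pair name for determinism.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:k]
```

Pair generation is quadratic in basket size, which is why the pre-aggregation and approximate-count ideas matter once orders get large.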

-

Emphasize deriving MSE gradients w.r.t. slope and intercept, choosing a learning rate, convergence criteria, and feature scaling if x spans large ranges. For a data engineer, explain why knowing basic optimization helps when validating feature pipelines or reproducing offline training steps in ETL.
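As a rough illustration, here is batch gradient descent for a one-feature linear model. With MSE defined as the mean of (slope·x + intercept − y)², the gradients are (2/n)·Σ error·x for the slope and (2/n)·Σ error for the intercept. The learning rate, epoch cap, and tolerance below are illustrative defaults, not tuned values.

```python
def fit_line(xs, ys, lr=0.01, epochs=5000, tol=1e-12):
    """Fit y ≈ slope * x + intercept by batch gradient descent on MSE."""
    n = len(xs)
    slope, intercept = 0.0, 0.0
    for _ in range(epochs):
        errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
        grad_slope = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = (2 / n) * sum(errors)
        slope -= lr * grad_slope
        intercept -= lr * grad_intercept
        # Convergence check: stop once the squared gradient norm is tiny.
        if grad_slope ** 2 + grad_intercept ** 2 < tol:
            break
    return slope, intercept
```

If x spans a large range, the same learning rate can diverge, which is the practical reason to scale features first.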

Given an integer N, return all prime numbers up to N.

Discuss algorithmic choices (Sieve of Eratosthenes vs. trial division), big-O tradeoffs, and memory considerations for large N. Tie back to DE work: efficient primitives matter in UDFs, backfills, or validation jobs where naive loops blow up runtime.
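A compact Sieve of Eratosthenes, shown as one reasonable answer rather than the only acceptable one. It runs in O(N log log N) time and uses one byte per candidate via a `bytearray`.

```python
def primes_up_to(n):
    """Return all primes <= n using the Sieve of Eratosthenes."""
    if n < 2:
        return []
    is_prime = bytearray([1]) * (n + 1)
    is_prime[0] = is_prime[1] = 0
    p = 2
    while p * p <= n:
        if is_prime[p]:
            # Start at p*p: smaller multiples were crossed off by smaller primes.
            for multiple in range(p * p, n + 1, p):
                is_prime[multiple] = 0
        p += 1
    return [i for i, flag in enumerate(is_prime) if flag]
```

For very large N, a segmented sieve keeps memory bounded, which is worth mentioning even if you do not implement it.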

-

Explain maintaining a sorted list vs. head/tail strategies and the resulting complexities (insert O(n), delete/peek O(1)). Note when you’d prefer a heap in production, but why bespoke structures appear in streaming schedulers, compaction queues, or backpressure control.
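The sorted-list strategy can be sketched as below. The class and method names are illustrative, and the sketch assumes that a larger number means higher priority and that ties are served in insertion order.

```python
class _Node:
    def __init__(self, priority, value, next_node=None):
        self.priority = priority
        self.value = value
        self.next = next_node


class LinkedPriorityQueue:
    """Sorted singly linked list; the head always holds the highest priority."""

    def __init__(self):
        self._head = None

    def insert(self, value, priority):
        # O(n): walk to the last node whose priority is >= the new one.
        if self._head is None or priority > self._head.priority:
            self._head = _Node(priority, value, self._head)
            return
        node = self._head
        while node.next is not None and node.next.priority >= priority:
            node = node.next
        node.next = _Node(priority, value, node.next)

    def peek(self):
        # O(1): the head is the highest-priority item.
        return None if self._head is None else self._head.value

    def delete(self):
        # O(1): unlink and return the head.
        if self._head is None:
            return None
        node, self._head = self._head, self._head.next
        return node.value
```

In production, Python's `heapq` gives O(log n) inserts and is usually the better default; the linked list mainly earns its place when cheap peeks and stable ordering matter more than insert cost.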

-

Describe partitioning by product, ordering by date, and using window functions with “gaps and islands” logic (e.g., a running SUM over segments defined by cumulative restock counts). Mention late-arriving data, idempotent backfills, and validating totals against inventory snapshots.
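One reading of the approach above, sketched in Python: reset a running sales total each time a product is restocked. The event shape `(product_id, date, kind, quantity)` is hypothetical, and the sketch assumes a restock on a given day is processed before that day's sales.

```python
from collections import defaultdict


def sales_since_restock(events):
    """Running sales per product, reset at each restock.

    `events` is an iterable of (product_id, iso_date, kind, quantity)
    where kind is "sale" or "restock". Returns one
    (product_id, iso_date, running_sales) tuple per sale row.
    """
    by_product = defaultdict(list)
    for product_id, day, kind, qty in events:
        by_product[product_id].append((day, kind, qty))

    out = []
    for product_id in sorted(by_product):  # "PARTITION BY product"
        running = 0
        # "ORDER BY date"; ISO dates sort correctly as strings.
        # Assumption: same-day restocks sort ahead of sales.
        rows = sorted(by_product[product_id],
                      key=lambda r: (r[0], r[1] != "restock"))
        for day, kind, qty in rows:
            if kind == "restock":
                running = 0  # a restock starts a new segment (island)
            else:
                running += qty
                out.append((product_id, day, running))
    return out
```

Because the function re-sorts its input, late-arriving rows produce the same output on a rerun, which is the property an idempotent backfill needs.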

Given a list, move all zeros to the end while preserving the relative order of non-zero elements.

Frame this as a stable in-place partition using a write pointer in one pass, O(n) time / O(1) space. Connect to data engineering use cases like compacting sparse feature arrays or optimizing serialization by shoving sentinel values to the tail.
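The write-pointer idea fits in a few lines. This sketch mutates the list in place and also returns it for convenience.

```python
def move_zeros(values):
    """Move zeros to the end in place, keeping non-zero order stable."""
    write = 0  # next slot for a non-zero element
    for read in range(len(values)):
        if values[read] != 0:
            # Swap the non-zero element into the write slot.
            values[write], values[read] = values[read], values[write]
            write += 1
    return values
```

Everything left of `write` is non-zero and in original order, which is the invariant to state aloud while coding it.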

-

Call out XOR accumulation (or sum diff with overflow caveats) as the robust approach. Relate this to streaming integrity checks, dedupe reconciliation, or quickly pinpointing dropped IDs during batch transfers without materializing large hash structures.
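A sketch of XOR accumulation, assuming the input holds n distinct integers drawn from 0..n with exactly one value missing (confirm the exact range with your interviewer).

```python
def missing_number(nums):
    """Return the one value in 0..len(nums) that is absent from nums."""
    acc = len(nums)  # seed with n, the index that enumerate never yields
    for i, value in enumerate(nums):
        # Every present value cancels against its matching index.
        acc ^= i ^ value
    return acc
```

It runs in O(n) time and O(1) space, and XOR cannot overflow the way a running sum can in fixed-width integer types.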

Tip: Treat every prompt like production code: state assumptions first (time zones, nulls, duplicates), then write readable SQL/Python with small, testable steps (CTEs, helper functions). Validate with quick sanity checks (row counts, distinct keys, min/max dates) and call out edge handling (late events, idempotency, daylight saving time transitions). If pressed on performance, discuss practical levers like predicate pushdown, partition pruning, clustering/Z-ordering, and file compaction. Then tie the result back to a business decision (e.g., “this query surfaces which cohorts to re-engage”). Clarity beats cleverness.

System/product design questions

These questions explore how you think about scale, latency, and failure. You might be asked to design a system to capture real-time product page views or build a warehouse schema for merchant order data.

For instance, you could be tasked with building a fault-tolerant real-time pipeline that processes flash-sale transactions with strict SLA guarantees. You’ll need to weigh streaming vs. batch, partitioning, retries, and schema evolution.

-

Explain a two-stage approach: (a) candidate generation (standardize text, SKU/GTIN matching, brand catalogs, fuzzy tokens, embeddings for near-duplicates) and (b) resolution (pairwise scoring model + thresholds, or clustering). Cover human-in-the-loop queues for low-confidence matches, canonical record selection rules, and idempotent merge operations. Call out data model needs (canonical_product, product_alias, merge_events), rollback strategy, offline/online latency, and monitoring for precision/recall drift.
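The two stages can be sketched with the standard library alone. Here brand blocking stands in for candidate generation and `difflib.SequenceMatcher` stands in for the pairwise scoring model; the dict keys and both thresholds are illustrative assumptions, not tuned values.

```python
import re
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations


def normalize(title):
    """Lowercase, strip punctuation, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", title.lower()).split())


def resolve(products, merge_at=0.92, review_at=0.75):
    """Return (id_a, id_b, decision) for likely duplicate products.

    `products` is a list of dicts with hypothetical keys
    id, brand, title, and gtin.
    """
    # Stage (a): candidate generation by blocking on brand.
    blocks = defaultdict(list)
    for product in products:
        blocks[product["brand"].lower()].append(product)

    # Stage (b): pairwise scoring plus thresholds.
    decisions = []
    for block in blocks.values():
        for a, b in combinations(block, 2):
            if a.get("gtin") and a.get("gtin") == b.get("gtin"):
                score = 1.0  # identical GTINs are treated as a certain match
            else:
                score = SequenceMatcher(
                    None, normalize(a["title"]), normalize(b["title"])
                ).ratio()
            if score >= merge_at:
                decisions.append((a["id"], b["id"], "merge"))
            elif score >= review_at:
                decisions.append((a["id"], b["id"], "review"))  # human queue
    return decisions
```

Blocking keeps the comparison count manageable, at the cost of missing duplicates whose brand field itself is inconsistent.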

-

Outline an event envelope (event_id, user/anon ids, session_id, timestamp, page/resource, referrer, device, geo, experiment bucketing) plus a flexible properties blob. Describe edge collection (SDKs/Beacons), streaming transport (Kafka/Kinesis), validation/enrichment (schemas, PII handling, user stitching), and storage tiers (raw, bronze, silver, gold). Discuss partitioning (event_date, app), deduping, late-arrival handling, GDPR deletion, and query serving (columnar warehouse, materialized views for DAU/retention/attribution).
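A pared-down version of that envelope, with a derived partition key and `event_id`-based deduplication, might look like this. The field subset and names are illustrative, and timestamps are assumed to be timezone-aware.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Event:
    event_id: str
    session_id: str
    timestamp: datetime  # assumed timezone-aware
    page: str
    user_id: Optional[str] = None
    properties: dict = field(default_factory=dict)  # flexible blob

    @property
    def event_date(self):
        """Partition key: the UTC calendar date of the event."""
        return self.timestamp.astimezone(timezone.utc).date().isoformat()


def dedupe(events):
    """Keep the first occurrence of each event_id, preserving order."""
    seen, unique = set(), []
    for event in events:
        if event.event_id not in seen:
            seen.add(event.event_id)
            unique.append(event)
    return unique
```

An in-memory `seen` set only works per batch; at streaming scale the same idea usually becomes a keyed state store or a merge on `event_id` in the warehouse.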

-

Propose normalized entities (artist, album, track, genre, track_genre, track_artist for many-to-many), track attributes (title, duration, release_year, track_number), and audit fields. Address secondary indexes (artist_name, album_id+track_number), surrogate vs. natural keys (ISRC), and slowly changing attributes (aliases, remasters). Extend to Shopify-like use cases: think of “track” ≈ “variant” and “album” ≈ “collection,” then call out content moderation, dedupe, and catalog versioning.

-

Describe core tables (menu_item, order_header, order_line, payment, discount, tender_type) and dimensions (daypart, promo). Explain constraints (FKs, price at time of sale), and capturing modifiers/combos. For analytics, note incremental loads from POS, late receipts, tax/tip treatments, and building a semantic layer (facts: sales_line, dims: item, date, channel). Mention performance tactics (partition by business_date, Z-order/order clustering on item_id) and data quality (balancing totals to payments).

-

Lay out phases: requirements + target schema design (users, friendships, posts, reactions), choose keys and constraints, and define SLOs. Plan migration via dual-write/CDC: backfill historical data, enable dual writes with idempotency, reconcile diffs, then flip reads. Cover normalization vs. denormalization trade-offs, indexing strategies for feed queries, sharding/partitioning (by user_id), transaction boundaries, and rollback.

Include governance (schema registry, migrations via Flyway), and post-cutover validation (consistency checks, query performance baselines).
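The "reconcile diffs" step can be illustrated with a small comparison between the legacy store and the new one. Representing each side as a dict keyed by primary key is a simplification for the sketch; at scale you would compare per-partition checksums rather than load everything into memory.

```python
def reconcile(source_rows, target_rows):
    """Compare two {primary_key: row} mappings and report differences."""
    source_keys, target_keys = source_rows.keys(), target_rows.keys()
    return {
        # Present in the legacy store but never written to the new one.
        "missing": sorted(source_keys - target_keys),
        # Present only in the new store (for example, a failed delete).
        "extra": sorted(target_keys - source_keys),
        # Same key on both sides, different contents.
        "mismatched": sorted(
            key for key in source_keys & target_keys
            if source_rows[key] != target_rows[key]
        ),
    }
```

A clean report across a full business cycle is a reasonable gate before flipping reads to the new store.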

Tip: Start from the merchant outcome and SLOs (freshness, accuracy, p95 latency, cost), then walk layer-by-layer: ingestion → storage → transform → serve → observability. Justify trade-offs (batch vs. streaming, schema-on-write vs. read, CDC vs. snapshots), and design for reliability (exactly-once where needed, idempotent upserts, dead-letter queues, backfills) and evolution (schema registry, data contracts, versioned models). Close with how you’d measure success (alerting, lineage, data tests, unit/integration checks) and a rollback plan. Prefer a minimal viable architecture that you can iterate on over an over-engineered one.
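To make "idempotent upserts" and "dead-letter queues" concrete, here is a minimal sketch that uses a dict as the target table. The `order_id` and `version` fields are hypothetical; the key property is that replaying a batch, or receiving it out of order, leaves the table unchanged.

```python
def apply_batch(table, records, dead_letters):
    """Upsert records into `table` (a dict keyed by order_id).

    The highest `version` wins, so replays and stale updates are no-ops.
    Malformed records go to `dead_letters` instead of failing the batch.
    """
    for record in records:
        key, version = record.get("order_id"), record.get("version")
        if key is None or version is None:
            dead_letters.append(record)  # park for inspection and replay
            continue
        current = table.get(key)
        if current is None or version > current["version"]:
            table[key] = record
    return table
```

The version comparison is what makes at-least-once delivery safe here: duplicates are absorbed at the sink rather than prevented upstream.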

Behavioral questions

Good answers tie into Shopify’s values:

- Be Merchant Obsessed: Did your work directly help merchants make better decisions?

- Thrive on Change: How did you adapt to shifting priorities or requirements?

- Act Like an Owner: Did you go beyond your scope to prevent future issues?

These questions focus on ownership, ambiguity, and resilience. Expect prompts like “Tell me about a time your data pipeline broke right before a launch” or “How have you prioritized between data quality and delivery speed?”

-

Use a tight STAR flow. For Shopify, highlight hurdles like schema drift from upstream services, late-arriving events, backfill pains, or exploding warehouse costs. Explain how you clarified ambiguous requirements, added data contracts and validation, built idempotent, checkpointed pipelines, and introduced monitoring (freshness, volume, schema) to prevent regressions. Close with measurable impact (e.g., % reduction in failures or query latency).

Sample answer: When I joined my previous team, we were re-architecting a product analytics pipeline that processed clickstream events from over 200 million daily sessions. The schema changed frequently because product managers added new event properties without versioning, which caused schema drift and nightly job failures. I worked with the upstream product teams to implement data contracts in JSON schema, added validation at ingestion, and made pipelines idempotent and checkpointed in Airflow. I also built a freshness and schema drift monitor that alerted us in Slack. As a result, nightly job failures dropped by 92%, and query latency for analysts improved by 35%. The project taught me to prioritize clear contracts and observability early in the design process.

What are effective ways to make data more accessible to non-technical partners?

Frame accessibility as product work: define canonical metrics, build semantic models and governed data marts, and layer role-based access with PII tagging. Mention documentation (data catalog, column lineage), self-serve dashboards, and templated queries. Call out enablement (office hours, playbooks), SLAs on freshness/quality, and “data contracts” with source teams to keep downstream models stable.

Sample answer: In my last company, business teams relied heavily on the data engineering team for manual pulls, which created a backlog. I treated accessibility as a data product problem. I worked with stakeholders to define key metrics, then built a semantic layer in Looker that standardized metric definitions and applied role-based access controls for sensitive fields. I also led weekly training sessions for self-serve dashboards and created documentation in Confluence with lineage diagrams. Within two months, self-serve queries increased by 60%, and ad-hoc ticket volume dropped by 40%. The main takeaway was that empowering non-technical teams with context and guardrails leads to better decision-making and reduces bottlenecks.

What would your manager say are your top strengths and areas to improve?

Choose Shopify-relevant strengths (e.g., building reliable streaming + batch pipelines, communicating trade-offs, mentoring). Pair each with a short impact example. For a growth area, pick a real, low-risk one (e.g., over-optimizing too early, taking on too much), then show your mitigation (guardrails, time-boxing, RFC reviews) and results. Keep the ratio 2–3 strengths to 1 growth area.

Sample answer: My manager would describe my top strengths as system reliability, proactive communication, and mentoring. I’m known for building dependable data pipelines and documenting designs clearly, which reduced escalations by 30% last quarter. For improvement, I used to over-optimize early, spending time tuning before verifying business value. I’ve since added a “proof-before-polish” step to my design process where I validate the utility of a job before optimizing. That shift helped me deliver faster while keeping the right focus on impact.

Tell me about a time you struggled to communicate with stakeholders. How did you resolve it?

Describe a misalignment (e.g., a team wants sub-second “real-time” when hourly is sufficient). Show how you reframed goals into SLOs, provided side-by-side cost/latency scenarios, and converged on an MVP (e.g., near-real-time for key metrics; daily for the long tail). Emphasize artifacts—requirements doc, SLA, runbook—and the outcome (adoption, fewer incidents).

Sample answer: At my previous company, a marketing team requested “real-time” campaign dashboards. Initially, I built near-instant streaming pipelines using Kinesis, but the team later clarified that daily updates were sufficient. The miscommunication had caused unnecessary cost and complexity. I took ownership by organizing a joint session to define SLAs, where we compared cost and latency trade-offs. Together, we decided on hourly updates for top campaigns and daily reports for long-tail metrics. I documented this in a data SLA playbook for future projects, which reduced similar confusion later. The experience taught me to translate technical options into clear business outcomes before implementation.

Why do you want to work at Shopify, and why this data engineering role?

Tie your motivation to Shopify’s mission (arming entrepreneurs), multi-tenant commerce data at scale, and the chance to productize trustworthy metrics (orders, GMV, fulfillment). Map your experience to the stack (event streams, lakehouse/warehouse, orchestration, quality). Mention how you’ll raise the bar on reliability, cost efficiency, and developer experience.

Sample answer: Shopify’s mission to empower entrepreneurs globally aligns strongly with what I value about data work — building systems that directly help users make better decisions. I’m drawn to the scale and complexity of Shopify’s multi-tenant commerce data, where accuracy and latency directly affect merchants’ revenue and trust. I’ve worked on event-driven architectures using Kafka and Snowflake, built cost-efficient ETL jobs, and improved data quality frameworks for downstream analytics. I see this role as an opportunity to contribute to the backbone that enables merchant insights and product growth. I’m particularly interested in improving data observability and reducing warehouse costs, which aligns with Shopify’s focus on long-term sustainability.

Describe a time you prevented a major data incident before it hit production. What early signals did you catch and what controls did you implement afterward?

Walk through the detection (anomaly in freshness/volume, schema diff, contract violation), the immediate containment (circuit breaker, quarantine table, fallback), and long-term fixes (publisher tests, shadow pipelines, contract enforcement, lineage-based blast-radius alerts). Quantify avoided impact.

Sample answer: While monitoring our ETL pipelines, I noticed a subtle drop in daily row counts for transaction data. It wasn’t enough to trigger alerts but enough to hint at missing events. I manually compared ingestion logs and discovered that a partner API had changed timestamp formats. I immediately quarantined the affected partition, prevented downstream jobs from consuming incomplete data, and coordinated a backfill once the fix was deployed. Afterward, I added schema validation checks, contract tests, and a volume anomaly detector based on historical baselines. Catching the issue early prevented a potential multi-day reporting outage and saved over $100K in reconciliation effort. It reinforced my belief in proactive monitoring and lightweight data contracts.
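A volume anomaly detector based on historical baselines can start as a simple z-score check. This is a sketch under stated assumptions: the threshold of 3.0 is illustrative, and daily counts with weekly seasonality would need per-weekday baselines instead of one pooled history.

```python
from statistics import mean, stdev


def is_volume_anomaly(history, today, z_threshold=3.0):
    """Flag today's row count if it deviates strongly from history."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu  # flat history: any change is suspicious
    return abs(today - mu) / sigma > z_threshold
```

A lower threshold catches subtle drops like the one in the story above, at the price of more false alarms, so it is worth tuning against past incidents.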

Tell me about a project where you materially reduced platform cost or improved query latency at scale. How did you decide what to optimize?

Explain how you profiled workloads (warehouse telemetry, EXPLAIN plans), found the highest-leverage levers (partitioning, file compaction, clustering/Z-ordering, predicate pushdown, caching), and set success targets (e.g., 40% cost reduction, p95 latency −60%). Call out guardrails and ongoing monitoring to keep regressions from creeping back.

Sample answer: At my last company, our warehouse costs had doubled in six months due to unoptimized queries and over-partitioned data. I led a cost audit using query telemetry and job logs, then ranked optimization opportunities by cost-to-effort ratio. We discovered that 80% of costs came from only five queries. I introduced Z-order clustering, predicate pushdown, and file compaction for the most accessed tables. I also added query caching for repetitive Looker dashboards. These steps reduced warehouse spend by 45% and average query latency by 65%. The project also inspired a monthly Data Cost Review ritual, where engineers surface inefficiencies. This experience strengthened my bias toward measurable, high-leverage optimizations rather than blanket cost-cutting.

Tip: Use tight STAR stories that highlight ownership, reliability, and cost awareness. Keep situation/task to a line, go deep on actions (contracts, monitoring, backpressure controls, RFCs), quantify results (e.g., “failures −92%, spend −45%, p95 −60%”), and finish with one learning you institutionalized (runbooks, templates, SLAs). Map your choices to Shopify values: Be Merchant Obsessed, Thrive on Change, Act Like an Owner. Reference durable artifacts (dashboards, data contracts, postmortems) to show impact that lasts.

How to prepare for a data engineer role at Shopify

Preparation for a data engineer role at Shopify requires both technical mastery and strong communication. Shopify’s engineers work in autonomous squads, which means interviewers look for candidates who can design scalable systems, explain trade-offs clearly, and think about merchant impact in every solution.

Brush up on the modern data stack

Familiarize yourself with tools commonly used at Shopify, such as Kafka for real-time event streaming, Snowflake or Redshift for warehousing, dbt for transformation and modeling, and Airflow for orchestration. Interviewers often ask how you’ve used these tools to address real scalability challenges or reduce latency.

Tip: Focus on practical implementation stories instead of generic tool knowledge. Be ready to explain a specific case where you improved data freshness, optimized cost, or reduced failure rates using these tools.

Practice SQL challenges under time pressure

Expect SQL rounds that test your ability to join datasets, compute rolling metrics, or handle messy timestamp logic. Shopify values correctness and clarity more than obscure optimizations. Try solving SQL problems within 30–45 minutes to simulate real test conditions and time management.

Tip: Use tools like Mode Analytics, LeetCode, or Interview Query’s SQL dashboard to practice in a realistic environment. Always sanity-check your results. Shopify interviewers often care more about how you validate than how fast you code.

Draft architecture narratives

Shopify interviews test not only whether you can build data pipelines but also whether you can explain your architectural decisions clearly. Prepare by writing short “architecture narratives” for common prompts like building a streaming ingestion system, migrating from batch to near-real-time, or designing merchant metrics pipelines. Break your explanations into ingestion, storage, transformation, serving, and monitoring.

Tip: Use Shopify’s real-world scale as context. Think about how your design would handle billions of events from millions of merchants, each requiring consistent, auditable data. Clarity in assumptions is often more impressive than excessive technical depth.

Strengthen data quality and observability fundamentals

Shopify puts a heavy focus on data reliability and lineage because inaccurate metrics can directly affect merchant trust. Review how to design data contracts, schema validation, freshness checks, and monitoring dashboards using tools like Monte Carlo or Great Expectations.

Tip: Be prepared to describe one incident where you improved data quality. Explain what went wrong, what signal alerted you, and how you built a long-term safeguard. Shopify values proactive ownership over reactive firefighting.
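The schema validation and freshness checks mentioned above can be prototyped without any framework. The sketch below is plain Python and does not reproduce the API of Monte Carlo or Great Expectations; the `loaded_at` field name and the shape of `expected_schema` are assumptions.

```python
from datetime import datetime, timedelta, timezone


def check_batch(rows, expected_schema, max_lag, now=None, ts_field="loaded_at"):
    """Return a list of human-readable problems found in a batch.

    `expected_schema` maps column name to the expected Python type.
    `max_lag` is the freshness SLA as a timedelta.
    """
    now = now or datetime.now(timezone.utc)
    problems = []
    for i, row in enumerate(rows):
        for column, expected_type in expected_schema.items():
            if column not in row:
                problems.append(f"row {i}: missing column {column}")
            elif not isinstance(row[column], expected_type):
                problems.append(f"row {i}: {column} is not {expected_type.__name__}")
    timestamps = [r[ts_field] for r in rows if isinstance(r.get(ts_field), datetime)]
    if not timestamps or now - max(timestamps) > max_lag:
        problems.append("stale: newest row is older than the freshness SLA")
    return problems
```

Returning problems as data, rather than raising on the first one, makes it easy to route them to an alert or to a quarantine step.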

Refine life story examples

The life story interview evaluates reflection and ownership, not just achievements. Prepare two or three stories where your decisions shaped the outcome of a project—such as leading a critical migration, unblocking dependencies, or scaling a failing system. Each story should show the problem, your reasoning, and the measurable result.

Tip: Practice summarizing your stories in under three minutes each. Shopify interviewers often appreciate structured but conversational storytelling that ties every example to a tangible business or merchant outcome.

Practice pair-programming

During pair-programming sessions, communication is just as important as logic. Practice working through problems aloud with a peer or using mock-interview tools. Focus on explaining what you’re checking, why you’re changing something, and how you’re testing the fix.

Tip: Narrate your thought process even when you’re unsure. Shopify values curiosity and teamwork more than perfection. Demonstrating composure and collaboration under feedback is a strong positive signal.

Review data modeling and warehouse optimization

Shopify’s scale means candidates should understand star schemas, partitioning, clustering, and incremental loads. Study query plans, performance tuning, and warehouse cost management. You may be asked to diagnose slow queries or suggest ways to reduce compute spend while maintaining accuracy.

Tip: Learn to interpret EXPLAIN plans and warehouse telemetry. Interviewers often ask how you would find the most expensive queries and optimize them, so practice quantifying improvements with concrete metrics like “cut query time by 60%.”
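Finding the most expensive queries is often a Pareto exercise: rank by cost and keep the smallest set that explains most of the spend. A minimal sketch, assuming you have already exported per-query cost from warehouse telemetry into a dict (the query IDs and the 80% cutoff are illustrative):

```python
def top_cost_drivers(query_costs, share=0.8):
    """Return the highest-cost query IDs that together reach `share` of spend."""
    total = sum(query_costs.values())
    ranked = sorted(query_costs.items(), key=lambda kv: kv[1], reverse=True)
    drivers, running = [], 0.0
    for query_id, cost in ranked:
        # Stop once the queries collected so far cover the target share.
        if total == 0 or running / total >= share:
            break
        drivers.append(query_id)
        running += cost
    return drivers
```

The resulting short list is where EXPLAIN plans and tuning effort pay off first.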

Study Shopify’s ecosystem and data products

Knowing how Shopify operates helps you tailor your examples and vocabulary. Review the company’s merchant dashboards, Shop Pay, and analytics products, and understand how data supports merchant decisions. Interviewers appreciate candidates who connect their technical skills to Shopify’s mission of empowering entrepreneurs.

Tip: Spend 15 minutes exploring Shopify’s documentation and developer blog. If you can explain how data engineering enables better merchant insights, you’ll stand out immediately.

Mock your final presentation and walkthrough

Many candidates underestimate how important storytelling is during system design or project walkthroughs. Record yourself explaining a past project or a mock prompt, then review whether your explanation sounds structured and confident.

Tip: Keep a consistent structure: context → problem → approach → trade-offs → outcome. Practice ending with measurable results, even if approximate (“reduced pipeline latency by 40%” or “increased data availability to 99.9%”).

FAQs

What is the average salary for a data engineer at Shopify?

As of 2025, compensation for Shopify Data Engineers varies significantly depending on geography and seniority. Total pay typically includes a base salary, stock options, and occasional performance bonuses, though Shopify’s structure leans heavily toward equity at higher levels.

In the United States (New York City area), compensation reflects higher market rates and cost of living. Senior Data Engineers (L6) earn about US$287,000 per year in total compensation, composed of approximately US$209,000 in base salary and US$78,000 in stock.

In Canada, annual pay ranges from US$118,000 (L5) to US$270,000 (L7), with a median package around US$172,000 per year. Most of the compensation comes from base salary and stock, with minimal reliance on bonuses. (Levels.fyi)

- L5: ~US$118,000 per year (US$91,000 base, US$26,000 stock)

- L6: ~US$184,000 per year (US$163,000 base, US$20,000 stock)

- L7: ~US$270,000 per year (US$254,000 base, US$16,000 stock)

Tip: Shopify’s pay philosophy emphasizes equity ownership and sustained technical leadership. Engineers at L6 and above are rewarded for long-term product impact, system design influence, and mentorship rather than short-term output. The stock share varies widely by region in the figures above, so compare base salary and equity separately when evaluating an offer.

How long does the Shopify data engineer interview process take?

The process typically takes three to five weeks from application to offer. Candidates usually complete a recruiter screen, technical challenge, pair-programming or debugging session, architecture discussion, and a behavioral interview. Timelines may vary by level or location, but Shopify’s recruiting team communicates updates clearly at every stage.

Tip: Stay proactive by sending polite check-ins after each round. It shows genuine interest and professionalism.

What skills should I highlight in my resume for this role?

Focus on practical experience with data pipelines, ETL frameworks, and warehouse optimization. List hands-on skills in SQL, Python, dbt, Kafka, Airflow, and Snowflake. Include measurable outcomes such as “reduced query latency by 40%” or “cut data pipeline failures by 80%.” Highlighting results over responsibilities makes your impact clearer.

What does Shopify look for in a data engineer candidate?

Shopify values engineers who combine strong technical judgment with business empathy. The best candidates show they can design scalable, maintainable systems and connect their work to merchant success. Ownership, curiosity, and clarity of communication are strong signals of fit.

How should I prepare for the system design round?

Practice building and explaining end-to-end pipelines. Use prompts like “streaming ingestion for checkout data” or “event-driven architecture for merchant analytics.” Shopify interviewers look for clarity of trade-offs, modularity, and data reliability. Use simple diagrams or narratives to explain your design flow.

Tip: Focus on explaining why you chose a certain design, not just how. Shopify prioritizes engineers who think in terms of scalability and long-term maintenance.
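For a prompt like "streaming ingestion for checkout data," it can help to have one small, concrete piece of the design ready to discuss. The sketch below illustrates two reliability choices: idempotent writes keyed on an event ID, and a dead-letter path for malformed events. Everything here is hypothetical (the CheckoutSink class, the field names, the in-memory storage); a real design would put a durable store and a message broker behind the same interface.

```python
from dataclasses import dataclass, field

# Assumed minimal contract for a checkout event in this sketch.
REQUIRED_FIELDS = ("event_id", "shop_id", "amount")


@dataclass
class CheckoutSink:
    """In-memory stand-in for the storage layer at the end of a stream."""

    rows: dict = field(default_factory=dict)          # event_id -> event
    dead_letters: list = field(default_factory=list)  # events that failed validation

    def ingest(self, event: dict) -> str:
        # Malformed events are set aside instead of blocking the stream.
        if any(event.get(key) is None for key in REQUIRED_FIELDS):
            self.dead_letters.append(event)
            return "dead_letter"
        # At-least-once delivery means replays happen; keying on event_id
        # makes the write idempotent.
        if event["event_id"] in self.rows:
            return "duplicate"
        self.rows[event["event_id"]] = event
        return "stored"


sink = CheckoutSink()
event = {"event_id": "evt-1", "shop_id": 42, "amount": 19.99}
results = [
    sink.ingest(event),                # first delivery
    sink.ingest(event),                # replayed delivery
    sink.ingest({"event_id": "evt-2"}),  # missing shop_id and amount
]
print(results)
```

The "why" to narrate: deduplicating at the sink trades extra lookups for correctness under retries, and the dead-letter list keeps bad records inspectable without stalling healthy traffic.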

Are there Shopify data engineer jobs posted on Interview Query?

Yes. Interview Query regularly posts openings for Shopify data engineers as well as related roles in data science and analytics. You can browse current listings or set alerts to be notified of new opportunities.

What is the best way to practice for the technical challenge?

Simulate time-boxed exercises by solving SQL and Python problems in under 45 minutes. Review your code for readability and edge-case handling. Platforms like Interview Query’s coding workspace can help you replicate Shopify-style problems focused on real data situations.

Tip: Always explain your reasoning through inline comments or summary notes. Shopify appreciates engineers who can make their thought process easy to follow.
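As an illustration of what readable code with inline reasoning can look like, here is a short sketch for a made-up practice prompt: keep the most recent record per order. The function latest_per_order and the record fields are hypothetical, and the comments show the kind of edge-case notes worth leaving for a reviewer.

```python
def latest_per_order(records):
    """Return one record per order_id, keeping the most recent updated_at."""
    latest = {}
    for rec in records:
        order_id = rec.get("order_id")
        # Edge case: skip records without a key rather than failing the batch.
        if order_id is None:
            continue
        current = latest.get(order_id)
        # Strict '>' means that on a timestamp tie the first record seen wins,
        # which keeps the result deterministic for a given input order.
        if current is None or rec["updated_at"] > current["updated_at"]:
            latest[order_id] = rec
    # Sort the output so results are stable and easy to compare in tests.
    return sorted(latest.values(), key=lambda r: r["order_id"])


sample = [
    {"order_id": 2, "updated_at": "2025-01-02", "status": "paid"},
    {"order_id": 1, "updated_at": "2025-01-01", "status": "open"},
    {"order_id": 1, "updated_at": "2025-01-03", "status": "shipped"},
    {"order_id": None, "updated_at": "2025-01-04", "status": "unknown"},
]
deduped = latest_per_order(sample)
print([(r["order_id"], r["status"]) for r in deduped])
```

An empty input returns an empty list, which is worth stating out loud during the exercise even if the prompt never mentions it.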

Take the Next Step Toward Your Shopify Interview

Succeeding in the Shopify data engineer interview requires more than writing clean code. It is about showing how your technical decisions improve merchant outcomes. Whether building resilient pipelines or debugging live ETL failures, frame your stories in terms of impact on merchants and long-term system health.

For structured preparation, explore these resources:

- Mock interviews to practice real data engineering questions with experienced interviewers

- AI interview simulator to rehearse technical prompts

- Learning path: data engineering for a step-by-step curriculum

For inspiration, read Hanna Lee’s success story about landing her dream data engineering role with Interview Query.