OpenAI Machine Learning Engineer Interview Guide (2025 Prep)

Introduction

If you’re preparing for an OpenAI machine learning engineer interview in 2025, you’re targeting one of the most dynamic and impactful roles in artificial intelligence today. OpenAI’s machine learning department is at the heart of AGI and superintelligence development, driving innovation across reasoning, alignment, and agentic systems. With the release of o3, which reduces major real-world task errors by 20% compared to o1, and o4-mini scoring 99.5% pass@1 on AIME 2025 with code execution, your expertise will directly support systems that exceed prior benchmarks. This guide will help you navigate the interview process by aligning your technical depth, research rigor, and problem-solving mindset with OpenAI’s mission to shape the future of safe and capable AI.

Role Overview & Culture

As an OpenAI machine learning engineer, you’ll be part of a fast-moving team that transforms cutting-edge research into production-level AI systems. Your daily work will blend model development, large-scale optimization, and deployment using tools like PyTorch, Azure, and H100 GPUs. You’ll tackle adversarial threats, model drift, and evolving platform misuse while collaborating in small, cross-functional DERP teams that value engineering and research equally. The pace is intense—described as “manic” by insiders—but you’ll be surrounded by teammates who share your passion for AI safety, alignment, and real-world impact. With access to trillions of training tokens and responsibility for models used at a global scale, your contributions won’t just matter—they’ll define the frontier of artificial intelligence in 2025.

Why This Role at OpenAI?

In 2025, choosing an OpenAI machine learning engineer role means placing yourself at the epicenter of innovation, career acceleration, and financial upside. You’ll work on transformative projects with the sharpest minds in AI, gaining hands-on access to world-class tools, trillion-token datasets, and bleeding-edge research. Your compensation reflects this impact—top engineers earn up to $900,000 annually through performance-linked PPUs, which outperform standard equity. This role fast-tracks your expertise and prestige, giving your resume elite status and opening doors across the industry. With flexible work options, exceptional benefits, and constant exposure to complex technical challenges, your time at OpenAI will sharpen your skills, expand your network, and amplify your long-term career potential.

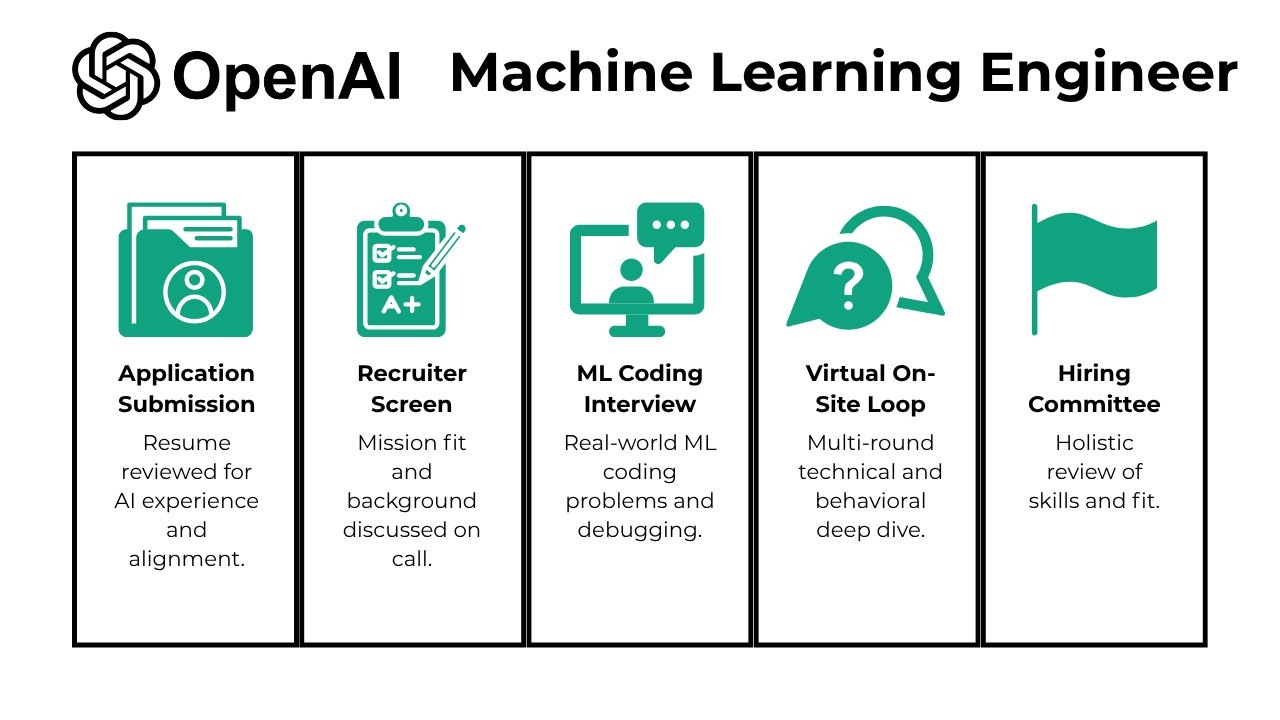

What Is the Interview Process Like for a Machine Learning Engineer Role at OpenAI?

Interviewing for a machine learning engineer position at OpenAI involves a structured, multi-stage process designed to assess your technical depth, research fluency, and alignment with OpenAI’s mission. From the moment you apply, every step is geared toward evaluating your ability to work on high-stakes, real-world AI systems.

- Application Submission

- Recruiter Screen

- ML Coding Interview

- Virtual On-Site Loop

- Hiring Committee

Application Submission

You begin your OpenAI journey by submitting your application through their careers portal or via referral. The recruitment team conducts a comprehensive review of your resume within approximately one week, evaluating your technical expertise, AI-specific experience, academic achievements, research background, and publications. Your application undergoes scrutiny from both recruiters and technical reviewers who assess your alignment with OpenAI’s mission and values.

Recruiter Screen

Your first human interaction occurs during a 30 to 45-minute video or phone conversation with OpenAI’s recruiting team. You will walk through your resume systematically, explaining your career trajectory, technical experiences, and motivations for joining OpenAI’s mission. The recruiter will probe your understanding of OpenAI’s current developments, including GPT models, AI agents, and industry-specific applications, so you should demonstrate familiarity with their research blog and recent announcements.

You will discuss your preferred team dynamics, project interests, and receive an overview of subsequent interview stages. This conversation emphasizes mission alignment, collaboration skills, and communication clarity rather than technical depth. The recruiter may also address logistical preferences, such as your interest in onsite versus virtual interviews, and answer your questions about OpenAI’s culture and expectations.

ML Coding Interview

You face a 45 to 60-minute technical assessment conducted via screen-sharing platforms like CoderPad, focusing on real-world engineering challenges rather than abstract algorithmic puzzles. The interviewer presents practical problems such as implementing LRU caches, designing time-based data structures, or solving scalable system components while you explain your reasoning process.

For machine learning roles specifically, you encounter questions on model architectures, training methodologies, gradient descent optimization, and recent research papers in your domain of expertise. The session evaluates your code quality, scalability considerations, debugging skills, and ability to incorporate feedback during the problem-solving process. You should expect problems that mirror actual work scenarios at OpenAI, such as data preprocessing pipelines, feature engineering challenges, or model deployment considerations, all while maintaining clear communication about your approach and trade-offs.

Virtual On-Site Loop

You participate in an intensive 4 to 6-hour interview marathon spanning one to two days, engaging with 4 to 6 different OpenAI team members across multiple technical and behavioral sessions.

The technical portions challenge you with complex coding problems grounded in real engineering work, system architecture design for ML infrastructure, and deep dives into your area of specialization. You may present a significant past project, defend your technical decisions under scrutiny, and engage in discussions about AI ethics and safety considerations.

For senior ML engineering positions, you will face system design challenges involving scalable model training pipelines, distributed computing architectures, or real-time inference systems. The behavioral components assess your collaboration skills, handling of ambiguous situations, and alignment with OpenAI’s values through scenario-based questions. Throughout this process, interviewers evaluate your ability to work effectively across functions while maintaining technical excellence under pressure.

Hiring Committee

Following your virtual on-site interviews, a comprehensive evaluation committee reviews your performance across all interview stages within approximately one week. This committee conducts a holistic assessment considering your technical capabilities, problem-solving approach, cultural fit, growth potential, and mission alignment with OpenAI’s values. The review process may include reference checks and additional verification of your background during this final stage. The entire interview timeline from application submission to final decision typically spans 6 to 8 weeks, though OpenAI can expedite the process when candidates have competing offers or exceptional circumstances.

The committee’s decision-making process emphasizes not only technical excellence but also your potential to thrive within OpenAI’s collaborative, fast-paced environment and contribute meaningfully to their mission of developing beneficial artificial general intelligence.

What Questions Are Asked in an OpenAI Machine Learning Engineer Interview?

Interviewing for a machine learning engineer role at OpenAI means demonstrating deep technical skill, production readiness, and strong cross-functional communication. Here’s how the questions break down across different focus areas.

Coding / Technical Questions

These questions test your ability to write performant, debuggable code under real-world constraints. In an OpenAI ML coding interview, you may need to optimize vector operations or build models from scratch. You’ll also face debugging scenarios that reflect challenges from OpenAI’s deployment environments, making clarity and iteration essential:

1. Interpolating Missing Temperatures

To solve this, use Pandas’ groupby method to group the data by city and apply the interpolate function with the linear method to estimate missing temperature values. This ensures interpolation is performed independently for each city, filling in missing values based on adjacent valid data points.

2. Decreasing Subsequent Values

To solve this, iterate through the array and maintain a dictionary to track indices of values. Sort the dictionary by values in descending order, and filter out values based on their indices to ensure subsequent integers are less than later ones. This approach ensures continuous decreasing values are retained.

3. Given a list of integers, write a function to compute variance.

To calculate variance, first compute the mean of the list, then calculate the sum of squared differences from the mean for each element. Divide this sum by the number of elements in the list and round the result to two decimal places.

4. Level Of Rain Water In 2D Terrain

To calculate the trapped rainwater, create two arrays (leftMax and rightMax) to store the maximum height to the left and right of each index. Iterate through the terrain array to calculate the minimum height between leftMax and rightMax at each index, subtract the terrain height at that index, and sum up the values. This algorithm efficiently computes the total trapped rainwater with a time complexity of O(n) and space complexity of O(n).

The model would not be valid because the removal of the decimal point causes some independent variables to be multiplied by 100, distorting the relationship between the variable and the target label. To fix the model, errors can be visually identified using histograms, or clustering techniques like expectation maximization can be applied to detect anomalies in large ranges of data.

When running logistic regression on perfectly linearly separable data, the model fails to converge. This is because the loss function has no peak, resulting in an infinite slope that prevents the gradient ascent algorithm from finding a maximum. To address this, regularization techniques are introduced to penalize large coefficients and create a tradeoff, allowing the model to converge.

System / Product Design Questions

System design interviews evaluate how you architect scalable, secure, and production-ready machine learning systems. Whether you’re asked to build a real-time feature store or propose a red-teaming pipeline, these problems often resemble OpenAI ML debugging interview contexts—especially around deployment, reliability, and safety:

7. How would you design a machine learning system for the detection of unsafe content?

To design an ML system for detecting unsafe content, begin by defining the content types to be identified (e.g., hate speech or violent imagery). Collect diverse and labeled training data, preprocess it, and extract relevant features such as word embeddings for text or color histograms for images. Select suitable models like transformer-based models for text or CNNs for images, and address imbalanced data issues using techniques like resampling or adjusting class weights. Ensure the system complies with legal requirements, monitors bias, and incorporates user feedback for continuous improvement.

To scale the training of a recommender system for Netflix, techniques such as distributed computing, parallelization, and optimization strategies can be employed. Using frameworks like Apache Spark or TensorFlow enables handling large-scale data efficiently, while techniques like matrix factorization or deep learning models allow processing millions of users and movies effectively.

9. Designing a secure and user-friendly facial recognition system for employee management

To design the system, start by defining both functional and non-functional requirements, such as registration, clock-in/out, scalability, and distributed access. Use a pre-trained facial recognition model enhanced with company-specific data, and employ triplet loss networks for dynamic user enrollment. Integrate the system with HR and security databases via middleware, store images securely, and utilize tools like TensorFlow Extended (TFX) for model serving. Ensure scalability with stateless backend software and automate updates using orchestration tools like Kafka.

To address this, start by preprocessing the data to clean and tokenize text, followed by feature extraction using methods like TF-IDF or word embeddings. Use scalable machine learning models, such as transformer-based architectures (e.g., BERT), and train them on distributed systems to handle the large dataset effectively. Implement efficient storage and retrieval systems to manage the high volume of posts and ensure regular updates to the model to adapt to new topics.

11. Using APIs for Downstream Tasks

To design an ML system leveraging Reddit and Bloomberg APIs, you start by extracting data through API calls and storing it in a staging area. Next, preprocess and transform the data into structured formats suitable for downstream models, ensuring data quality and consistency. Finally, store the transformed data in a centralized database or data warehouse, accessible to modeling teams, while maintaining scalability and reliability.

Behavioral or Culture-Fit Questions

Behavioral questions focus on how you think, collaborate, and uphold OpenAI’s values. You’ll be asked how you navigate ambiguity, communicate complex ideas, and contribute to responsible AI. These questions are designed to assess your alignment with OpenAI’s mission and your ability to thrive in its fast-paced, impact-driven culture:

12. How would you convey insights and the methods you use to a non-technical audience?

At OpenAI, machine learning engineers often collaborate with policy teams, product leads, and external stakeholders who may not have deep technical backgrounds. When answering this question, focus on your ability to translate complex ML techniques into intuitive explanations that tie directly to stakeholder goals. For instance, you could describe how you simplified a model’s behavior when briefing a product team on safety trade-offs in a large language model. Emphasize clarity, structured communication, and using analogies or visualizations to bridge knowledge gaps.

13. Why Do You Want to Work With Us?

This question is an opportunity to show that you understand OpenAI’s mission and how your own values align with it. Rather than giving a generic response, point to specific initiatives such as alignment research, reinforcement learning from human feedback (RLHF), or OpenAI’s approach to responsible deployment. You can also mention your interest in contributing to cutting-edge research with real-world impact. Make sure to explain why the organization’s unique challenges or principles resonate with you as an engineer.

14. Describe an analytics experiment that you designed. How were you able to measure success?

As a machine learning engineer at OpenAI, experiments often involve model performance evaluations, safety interventions, or fine-tuning tasks. In your answer, walk through a project where you designed an experiment to test a hypothesis. For example, you could describe an ablation study to compare different training objectives or a fine-tuning experiment to improve factuality. Highlight how you selected metrics like BLEU scores, perplexity, human feedback ratings, or bias measurements. Be sure to explain the statistical rigor behind your conclusions and any unexpected outcomes you learned from.

15. How comfortable are you presenting your insights?

OpenAI emphasizes cross-functional collaboration and transparency, so your ability to communicate clearly is critical. When answering, describe situations where you have shared insights from ML experiments with diverse teams. For example, talk about preparing a presentation on model interpretability for policy researchers or walking through evaluation metrics with a product team. Explain how you tailored the content to the audience’s background and used tools like notebooks, dashboards, or data visualizations to support understanding. Also, mention your adaptability in both remote and in-person communication settings.

How to Prepare for a Machine Learning Engineer Role at OpenAI

Preparing for a machine learning engineer interview at OpenAI in 2025 requires a sharp mix of research depth, engineering precision, and real-world readiness. Start by mastering model debugging. Practice identifying issues like exploding gradients, vanishing signals, or batch norm instability. It’s not enough to know how models work when they’re healthy—you need to know how to trace and repair them under pressure.

Next, review production machine learning patterns. OpenAI’s systems operate at a massive scale, so understanding tools like feature stores, Canary releases, and robust data pipelines is essential. This goes beyond academic ML. You should be able to reason about latency, resource efficiency, and system reliability while keeping performance and safety intact. Practice with our AI Interviewer to refine your responses.

Then, solve past ML coding challenges. Use platforms or repositories that mimic high-pressure environments. Be sure to search for OpenAI ML problems and case studies to align your practice with the types of scenarios you may face. This reinforces both fundamentals and domain-specific knowledge.

Finally, run full-loop mock interviews. Simulate OpenAI’s typical four-round process, covering everything from model design to systems thinking and behavioral alignment. Ask for feedback from experienced ML peers or mentors. This process helps you refine not just your answers, but your confidence, clarity, and adaptability—traits that matter as much as raw technical skill in a high-stakes, fast-moving environment like OpenAI.

Conclusion

Preparing for a machine learning OpenAI interview in 2025 means combining technical mastery with real-world problem solving and a deep understanding of safety and alignment. As you refine your prep strategy, remember to build confidence through full-loop mock interviews and stay current with production ML best practices. For more guidance, check out our full ML system design interview questions collection, explore a tailored OpenAI ML and Modeling Learning Path to sharpen your skills, and get inspired by Hanna Lee’s success story. You can also browse other OpenAI role guides and return to our main interview questions hub for broader prep support.