OpenAI Data Scientist Interview Guide (2025) – Process, Questions, and Prep Tips

Introduction

OpenAI data scientist roles in 2025 place you at the forefront of some of the most advanced AI systems in the world. You help shape tools like “deep research,” which can synthesize and report insights from hundreds of sources in minutes using GPT-4.5 and o3-powered agents. Your work powers core products and protects users by detecting abuse, forecasting growth, and guiding enterprise strategy. With over 500 million weekly users and $12.7 billion in projected revenue, OpenAI’s momentum depends on your ability to translate data into decisions. Whether you are designing A/B tests, building predictive models, or making AI more accessible, your technical expertise directly fuels innovation and impact.

This guide walks you through each stage of the OpenAI data scientist interview process and shows you how to prepare for it.

Role Overview & Culture

OpenAI data scientist interview preparation should help you get ready for a role that blends deep technical work with real-world impact. You will analyze massive datasets, design predictive models, and run large-scale A/B tests that shape products used by over 500 million people weekly. Using Python, SQL, and tools like PyTorch, Looker, and JupyterLab, you will create dashboards, build scalable inference models, and deliver insights that guide strategy and growth. You will also collaborate across research, engineering, product, and finance, working in small, integrated teams with a flat structure and strong mission focus. The pace is fast, and the problems are novel, but you will be supported by a culture that values humility, diversity, and ethical responsibility.

Why This Role at OpenAI?

If you’re preparing for an OpenAI data scientist interview, you’re targeting a role with industry-leading personal upside. The median compensation is $810,000 annually, with top performers earning over $850,000, including generous equity through PPUs tied to OpenAI’s $300 billion valuation. You also gain elite benefits, remote flexibility, and access to some of the most prestigious projects and professionals in tech. The resume signal alone can unlock future roles at nearly any company. On top of that, you’ll work with massive datasets, cutting-edge models, and high-impact systems that make you vastly more marketable. This isn’t just a job—it’s a fast track to wealth, influence, and long-term career leverage that few data science positions can match.

What Is the Interview Process Like for a Data Scientist Role at OpenAI?

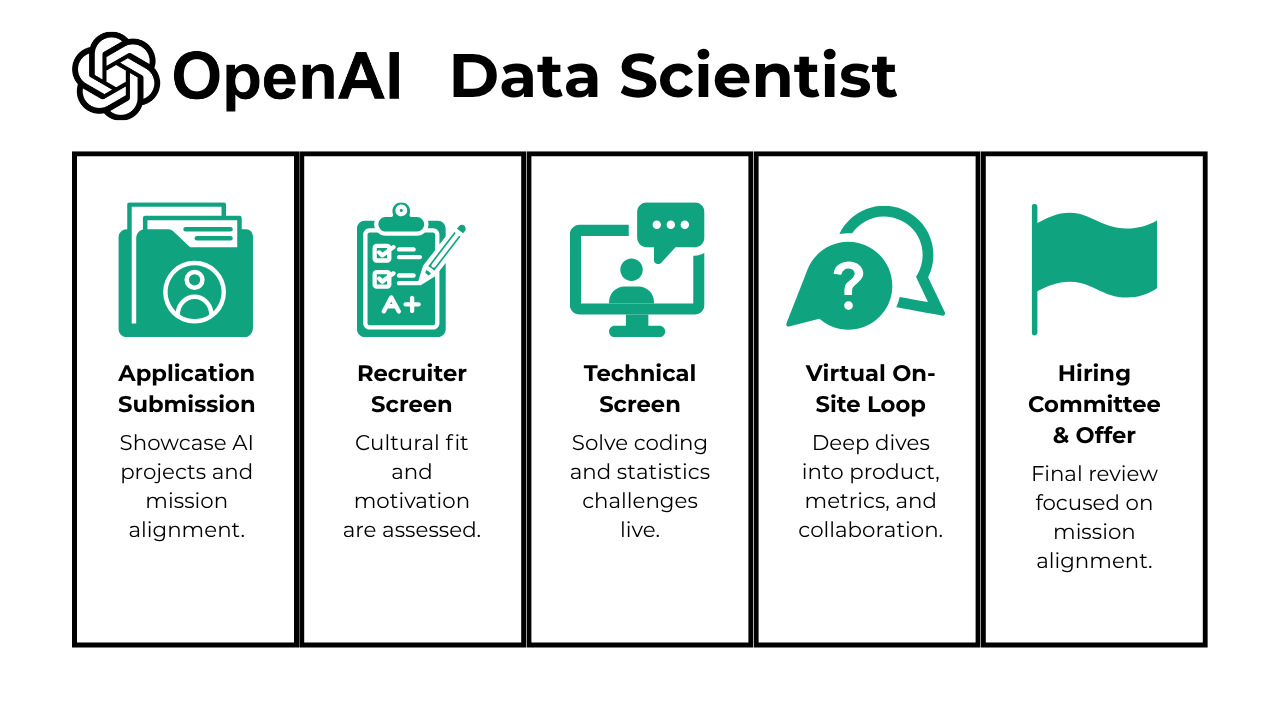

The OpenAI data scientist interview process is designed to assess your technical depth, product thinking, and mission alignment across five core stages. These include:

- Application Submission

- Recruiter Screen

- Technical Screen

- Virtual On-Site Loop

- Hiring Committee & Offer

Application Submission

Your OpenAI journey begins with a carefully crafted application that demonstrates both technical excellence and mission alignment. You’ll submit through their careers portal, highlighting projects involving machine learning, AI ethics, or large-scale data systems. The recruiting team conducts thorough resume reviews, prioritizing candidates with relevant AI experience, academic achievements, and research background over traditional credentials. They specifically look for keywords like “AI-driven solutions,” “ethical AI,” and evidence of cross-functional collaboration. Strong applications showcase your ability to work with massive datasets, implement ML models, and contribute to AI safety initiatives. Getting an internal referral significantly increases your chances, as these applications receive priority review from the talent acquisition team.

Recruiter Screen

The 30-minute recruiter screen is your first impression opportunity, focusing on background verification, motivation assessment, and cultural fit evaluation. Expect questions like “Why OpenAI?” and “How do you handle competing priorities?” The recruiter evaluates your passion for AI safety, alignment with OpenAI’s mission to benefit humanity, and ability to thrive in a fast-paced research environment. They’ll also assess your communication skills and collaborative mindset, essential for working in OpenAI’s interdisciplinary DERP teams. Sometimes hiring managers join this call to provide role-specific context and answer technical questions about team dynamics. This conversation sets the foundation for your entire interview experience, so demonstrating genuine enthusiasm for advancing AI responsibly is crucial for progression.

Technical Screen

The one-hour technical screen evaluates your coding proficiency, statistical knowledge, and problem-solving approach through practical challenges. You’ll tackle real-world problems like optimizing Pandas operations for millions of rows, implementing machine learning algorithms from scratch, or designing SQL queries for complex data analysis. Statistics questions cover probability distributions, hypothesis testing, and maximum likelihood estimation. Expect deep learning concepts like transfer learning, vanishing gradients, and model evaluation techniques. The interviewer assesses code quality, algorithmic thinking, and your ability to explain complex concepts clearly. Preparation should include practicing with large datasets, reviewing ML fundamentals, and being ready to discuss trade-offs between different approaches. Success here demonstrates your technical readiness for OpenAI’s data challenges.

Virtual On-Site Loop

The 4-6 hour virtual onsite represents the most intensive evaluation phase, featuring multiple interviews with cross-functional team members. Product sense questions assess your ability to design metrics for AI features, such as measuring user engagement with ChatGPT or evaluating the success of API improvements. A/B testing discussions focus on experimental design, statistical power, and bias mitigation for large-scale product rollouts. Behavioral interviews explore your experience explaining complex data insights to non-technical stakeholders, handling model failures, and collaborating across diverse teams. You’ll also face scenario-based challenges like designing recommendation systems or building real-time anomaly detection for production environments. This stage tests your strategic thinking, communication skills, and ability to translate technical work into business impact while maintaining OpenAI’s ethical standards.

Hiring Committee & Offer

The final decision process reflects OpenAI’s commitment to thorough evaluation and safety alignment. Within 24 hours of your onsite, the hiring committee reviews feedback from all interviewers, weighing technical excellence equally with mission alignment and collaborative potential. They conduct comprehensive assessments considering problem-solving capacity, growth potential, and cultural fit within OpenAI’s unique environment. The committee specifically evaluates your safety-conscious approach to AI development and ability to contribute to responsible AI advancement. Successful candidates receive competitive offers with median total compensation around $810,000, including equity vesting over four years. The process concludes with reference checks and detailed discussions about role expectations, team placement, and OpenAI’s hybrid work model requiring three days per week in San Francisco.

What Questions Are Asked in an OpenAI Data Scientist Interview?

Understanding the types of questions in an OpenAI data scientist interview is key to building the right preparation strategy—expect a balance of technical, product, and behavioral challenges that test both your analytical skills and your ability to influence outcomes.

Coding / Technical Questions

In the OpenAI data science interview, you’ll be expected to write clean, efficient SQL and Python code to analyze large datasets, solve algorithmic problems, and demonstrate a deep understanding of data manipulation and computational logic:

To calculate the probability of rain on the nth day, set up a transition matrix representing the probabilities of moving between states (RR, RT, TR, TT). Use matrix exponentiation to compute the nth power of the transition matrix, and sum the probabilities of being in states RR or TR after n transitions. This approach leverages Markov chains for efficient computation.
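The Markov-chain approach above can be sketched in plain Python. This is a minimal sketch under stated assumptions: each state is read as a (yesterday, today) pair with R for rain and T for no rain, and the three transition probabilities are hypothetical parameters, since the question's actual numbers are not given here. The function names are illustrative.

```python
# States are (yesterday, today) pairs: R = rain, T = no rain (assumed reading).
STATES = ["RR", "RT", "TR", "TT"]


def mat_mul(a, b):
    """Multiply two square matrices given as lists of lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(m, power):
    """Raise a square matrix to a non-negative integer power by repeated squaring."""
    n = len(m)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    while power > 0:
        if power & 1:
            result = mat_mul(result, m)
        m = mat_mul(m, m)
        power >>= 1
    return result


def rain_probability(n, p_after_rr, p_after_mixed, p_after_tt, start="RR"):
    """Probability that it rains n days from now, given the last two days.

    p_after_rr, p_after_mixed, p_after_tt are hypothetical inputs: the chance
    of rain tomorrow after two, one, or zero rainy days respectively.
    """
    transition = [
        [p_after_rr, 1 - p_after_rr, 0.0, 0.0],        # from RR -> RR or RT
        [0.0, 0.0, p_after_mixed, 1 - p_after_mixed],  # from RT -> TR or TT
        [p_after_mixed, 1 - p_after_mixed, 0.0, 0.0],  # from TR -> RR or RT
        [0.0, 0.0, p_after_tt, 1 - p_after_tt],        # from TT -> TR or TT
    ]
    row = mat_pow(transition, n)[STATES.index(start)]
    # It rains on day n exactly when the chain sits in a state ending in R.
    return row[STATES.index("RR")] + row[STATES.index("TR")]
```

Repeated squaring keeps the number of matrix multiplications logarithmic in n, which is the efficiency the answer alludes to.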

To solve this, use the LEAST and GREATEST functions to ensure consistent ordering of source and destination locations. Then, group by these ordered pairs to eliminate duplicates and create the desired table with unique pairs.
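As a rough illustration of the ordering trick, the sketch below runs the query through Python's built-in sqlite3 module. SQLite has no LEAST or GREATEST, so its two-argument scalar MIN and MAX stand in for them here; in PostgreSQL or MySQL you would write LEAST and GREATEST directly. The table name `flights`, its columns, and the sample rows are assumptions made for the example.

```python
import sqlite3

flights_db = sqlite3.connect(":memory:")
flights_db.execute(
    "CREATE TABLE flights (source_location TEXT, destination_location TEXT)"
)
flights_db.executemany(
    "INSERT INTO flights VALUES (?, ?)",
    [("NYC", "LAX"), ("LAX", "NYC"), ("SFO", "NYC"), ("NYC", "SFO"), ("LAX", "SFO")],
)

# Two-argument MIN/MAX are scalar functions in SQLite and play the role of
# LEAST/GREATEST: each pair is stored in one canonical order before grouping.
UNIQUE_PAIRS_QUERY = """
WITH ordered AS (
    SELECT MIN(source_location, destination_location) AS location_a,
           MAX(source_location, destination_location) AS location_b
    FROM flights
)
SELECT location_a, location_b
FROM ordered
GROUP BY location_a, location_b
ORDER BY location_a, location_b
"""

unique_pairs = flights_db.execute(UNIQUE_PAIRS_QUERY).fetchall()
```

With the sample rows, `unique_pairs` holds one row per unordered pair regardless of the direction in which the route was recorded.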

To solve this, iterate through the list of truck locations and group them by model. For each model, count the frequency of each location and determine the most frequent one using a statistical mode function. This will give the top location for each truck model.
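One possible shape for this, assuming the input is a list of hypothetical `(model, location)` tuples, uses `collections.Counter` as the mode function. Ties are broken by whichever location was seen first, which is a choice made for this sketch rather than something the question specifies.

```python
from collections import Counter, defaultdict


def top_location_per_model(trucks):
    """Return {model: most frequent location} from (model, location) pairs."""
    counts = defaultdict(Counter)
    for model, location in trucks:
        counts[model][location] += 1
    # most_common(1) returns the (location, count) pair with the highest count.
    return {model: counter.most_common(1)[0][0] for model, counter in counts.items()}


sample_trucks = [
    ("A", "Austin"), ("A", "Boston"), ("A", "Austin"),
    ("B", "Denver"), ("B", "Denver"), ("B", "Austin"),
]
top_locations = top_location_per_model(sample_trucks)
```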

To solve this, use a self-join on the scores table to compare each student’s score with every other student’s score. Filter out duplicate comparisons and calculate the absolute score difference. Sort the results by score difference and student names alphabetically, then limit the output to the top result.
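The self-join can be sketched with sqlite3 as follows. The `scores` schema (`id`, `student`, `score`) and the sample rows are assumptions for illustration; the join condition `a.id < b.id` is one way to drop self-pairs and mirrored duplicates.

```python
import sqlite3

scores_db = sqlite3.connect(":memory:")
scores_db.execute("CREATE TABLE scores (id INTEGER, student TEXT, score INTEGER)")
scores_db.executemany(
    "INSERT INTO scores VALUES (?, ?, ?)",
    [(1, "Jack", 1700), (2, "Alice", 2010), (3, "Miles", 2200), (4, "Scott", 2100)],
)

# Each unordered pair appears once because of a.id < b.id; the ORDER BY puts
# the smallest gap first and falls back to names alphabetically on ties.
CLOSEST_PAIR_QUERY = """
SELECT a.student AS one_student,
       b.student AS other_student,
       ABS(a.score - b.score) AS score_diff
FROM scores AS a
JOIN scores AS b ON a.id < b.id
ORDER BY score_diff ASC, one_student ASC, other_student ASC
LIMIT 1
"""

closest_pair = scores_db.execute(CLOSEST_PAIR_QUERY).fetchone()
```

The self-join compares every pair, so it is quadratic in the number of students; sorting by score and comparing neighbors with a window function is a common alternative on large tables.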

To solve this, Dijkstra’s algorithm can be used. Initialize distances for all nodes as infinity except the start node, and use a priority queue to explore nodes. Update distances and paths for neighbors if a shorter path is found. Finally, reconstruct the path from the end node back to the start node.
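The steps above might look like this in Python, using `heapq` as the priority queue. The graph representation (a dict mapping every node to a dict of neighbor weights) and the function name are assumptions for this sketch, and edge weights are assumed to be non-negative, as Dijkstra's algorithm requires.

```python
import heapq


def shortest_path(graph, start, end):
    """Return (distance, path) from start to end; (inf, []) if unreachable.

    graph maps each node to {neighbor: weight}; every node must be a key.
    """
    distances = {node: float("inf") for node in graph}
    distances[start] = 0
    previous = {}
    queue = [(0, start)]
    while queue:
        dist, node = heapq.heappop(queue)
        if dist > distances[node]:
            continue  # stale queue entry; a shorter route was already found
        if node == end:
            break
        for neighbor, weight in graph[node].items():
            candidate = dist + weight
            if candidate < distances[neighbor]:
                distances[neighbor] = candidate
                previous[neighbor] = node
                heapq.heappush(queue, (candidate, neighbor))
    if distances[end] == float("inf"):
        return float("inf"), []
    # Walk the predecessor links backwards from end to start.
    path = [end]
    while path[-1] != start:
        path.append(previous[path[-1]])
    path.reverse()
    return distances[end], path


example_graph = {
    "A": {"B": 1, "C": 4},
    "B": {"C": 2, "D": 5},
    "C": {"D": 1},
    "D": {},
    "E": {},
}
```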

6. Write a function to find which lines intersect within a given x_range

To solve this, iterate through all pairs of lines. For two lines \(y = m_1 x + c_1\) and \(y = m_2 x + c_2\), calculate the x-coordinate of their intersection using the formula \(x = \frac{c_2 - c_1}{m_1 - m_2}\), skipping parallel pairs where \(m_1 = m_2\), and check whether it lies within the given x_range. If it does, add both lines to the output list.
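A minimal sketch of the pairwise check, assuming each line is a hypothetical `(slope, intercept)` tuple and `x_range` is an inclusive `(low, high)` pair. Parallel lines are skipped to avoid dividing by zero, and identical lines are treated as parallel in this sketch.

```python
from itertools import combinations


def intersecting_lines(lines, x_range):
    """Return the lines that cross at least one other line inside x_range."""
    low, high = x_range
    hits = []
    for (m1, c1), (m2, c2) in combinations(lines, 2):
        if m1 == m2:
            continue  # parallel or identical lines: no single crossing point
        x = (c2 - c1) / (m1 - m2)
        if low <= x <= high:
            for line in ((m1, c1), (m2, c2)):
                if line not in hits:  # keep each line once, in first-seen order
                    hits.append(line)
    return hits


example_lines = [(2, 3), (-3, 5), (4, 6), (5, 7)]
crossing = intersecting_lines(example_lines, (0, 1))
```

Here only the first two lines cross inside the range (at x = 0.4), so they are the only ones returned.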

Experiment & Product-Metrics Questions

These questions test your ability to design experiments, measure impact at scale, and think critically about product metrics, trade-offs, and causal inference in dynamic, real-world scenarios:

7. How would you measure the success of the Instagram TV product?

To measure the success of Instagram TV, start by clarifying its goals, such as increasing user engagement and retention. Define metrics like retention rates (30-day, 60-day, 90-day), daily active users, and average time spent per user. Analyze cohorts of users based on their Instagram TV usage and explore secondary metrics like feature drop-off rates and creator behavior to assess the impact comprehensively.

To address this concern, you would first define “amateur” and “superstar” channels based on metrics like subscriber count, views, and posting rate. Then, analyze growth rates, subscriber-to-view ratios, and YouTube recommendation percentages over time to compare performance trends. Additionally, segment data by time periods to understand historical versus current growth patterns and assess algorithmic impacts on visibility.

9. How would you assess the validity of the result in an AB test with a .04 p-value?

To assess the validity of the result, examine the setup of the A/B test by ensuring user groups were properly randomized and variants were equal in all other aspects. Additionally, evaluate the measurement process, including sample size, test duration, and whether the p-value was monitored continuously: stopping the test the first time the p-value dips below the threshold inflates the false-positive rate. Designing the experiment with a predetermined sample size and minimum detectable effect is crucial for reliable results.
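To see why continuous monitoring matters, the simulation below runs A/A tests (both arms share the same true conversion rate) and compares how often a pooled two-proportion z-test is declared significant when you stop at the first significant look versus when you test once at the planned sample size. It is an illustrative sketch: the conversion rate, number of looks, and simulation sizes are arbitrary assumptions, and the function names are hypothetical.

```python
import math
import random


def z_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_a / n_a - conv_b / n_b) / se
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rates(n_sims=300, n_per_arm=1000, looks=10,
                         rate=0.1, alpha=0.05, seed=0):
    """Return (peeking_rate, fixed_rate) over simulated A/A tests."""
    rng = random.Random(seed)
    # Evenly spaced interim looks; the last one is the planned sample size.
    checkpoints = [n_per_arm * (i + 1) // looks for i in range(looks)]
    peeking_hits = fixed_hits = 0
    for _ in range(n_sims):
        arm_a = [rng.random() < rate for _ in range(n_per_arm)]
        arm_b = [rng.random() < rate for _ in range(n_per_arm)]
        p_values = [z_p_value(sum(arm_a[:n]), n, sum(arm_b[:n]), n)
                    for n in checkpoints]
        peeking_hits += any(p < alpha for p in p_values)  # stop at first "win"
        fixed_hits += p_values[-1] < alpha                # single planned test
    return peeking_hits / n_sims, fixed_hits / n_sims
```

Because the final look is one of the interim looks, the peeking rate can never be lower than the fixed-sample rate; with many looks it typically ends up well above the nominal alpha.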

To determine whether the carousel should replace store-brand items with national-brand products, start by defining a success metric, such as Margin per ATC (Add-to-Cart). Analyze customer demand for product substitution using online and offline data, and conduct an A/B test comparing store-brand and national-brand carousel versions. Finally, monitor performance post-implementation and iterate based on user behavior and feedback.

11. How many unique users visited pages on mobile only?

To count unique users who visited pages on mobile only, perform a left join between the mobile_tbl and web_tbl tables on user_id. Filter rows where web_tbl.user_id is NULL, indicating users who are not present in the web table. Then, count distinct user_id values from the filtered result.
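The anti-join described above can be sketched with sqlite3. The table names `mobile_tbl` and `web_tbl` and the `user_id` column come from the answer; the single-column schema and sample rows are assumptions for illustration.

```python
import sqlite3

visits_db = sqlite3.connect(":memory:")
visits_db.execute("CREATE TABLE mobile_tbl (user_id INTEGER)")
visits_db.execute("CREATE TABLE web_tbl (user_id INTEGER)")
visits_db.executemany("INSERT INTO mobile_tbl VALUES (?)", [(1,), (1,), (2,), (3,)])
visits_db.executemany("INSERT INTO web_tbl VALUES (?)", [(2,), (4,)])

# Mobile rows with no matching web row come back with w.user_id set to NULL;
# COUNT(DISTINCT ...) collapses repeat visits by the same user.
MOBILE_ONLY_QUERY = """
SELECT COUNT(DISTINCT m.user_id)
FROM mobile_tbl AS m
LEFT JOIN web_tbl AS w ON m.user_id = w.user_id
WHERE w.user_id IS NULL
"""

mobile_only_users = visits_db.execute(MOBILE_ONLY_QUERY).fetchone()[0]
```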

Behavioral & Mission Alignment Questions

Expect questions that assess how you collaborate, communicate, and make decisions in ambiguous or high-stakes situations—while showing that your values align with OpenAI’s broader mission:

12. What are your strengths and weaknesses?

When answering this question at OpenAI, it is important to highlight strengths that reflect analytical rigor, curiosity, and ethical awareness in data work. Use real examples that show your strengths in action, especially those that align with OpenAI’s focus on responsible and impactful AI. For weaknesses, choose areas you are actively improving in and explain how you address them in ways that reflect self-awareness and a growth mindset.

13. How comfortable are you presenting your insights?

At OpenAI, presenting insights often means translating complex models or analyses into actionable findings for both technical and non-technical audiences. You should describe how you prepare, the tools you use for visualization, and how you tailor communication to different stakeholders. Include examples that show your ability to engage others, respond to feedback, and communicate data in a mission-driven, high-impact environment.

14. Describe an analytics experiment that you designed. How were you able to measure success?

In this context, discuss an experiment that involved AI systems, model performance, or user behavior, especially where ethical or interpretability concerns were relevant. Define success clearly in terms of measurable outcomes, such as improved prediction accuracy, user engagement, or reduced bias. Make sure to explain how you selected metrics and why those metrics were meaningful within the broader goals of the project.

15. What are some effective ways to make data more accessible to non-technical people?

Data scientists at OpenAI often need to make complex findings interpretable for policy teams, researchers, or external collaborators. Talk about methods like using intuitive dashboards, visual storytelling, or structured briefings that reduce technical barriers. Highlight any experience you have designing tools, documentation, or workflows that made advanced data more usable across teams.

Choose a situation where you had to explain a technical decision or result that was misunderstood or resisted. Describe how you identified the disconnect and adjusted your language, format, or timing to better meet the audience’s needs. This shows your ability to bridge the gap between data science and stakeholder impact, which is essential in a cross-functional setting like OpenAI.

How to Prepare for a Data Scientist Role at OpenAI

To succeed in an OpenAI data science interview, start by sharpening your skills in experiment design. You should be confident explaining A/B test structures, calculating p-values, and discussing the implications of sequential testing. OpenAI cares deeply about rigor, so expect to justify your choices under realistic product constraints. Your ability to frame trade-offs clearly will set you apart.
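One way to practice the planning side of experiment design is to compute a sample size before looking at any data. The sketch below uses the standard normal-approximation formula for comparing two proportions; the baseline and treatment rates in the example are made-up values, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control, p_treatment, alpha=0.05, power=0.8):
    """Approximate users needed per arm for a two-sided two-proportion test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_treatment - p_control
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Example: detecting a lift from a 10% to a 12% conversion rate.
users_needed = sample_size_per_arm(0.10, 0.12)
```

Halving the detectable lift roughly quadruples the required sample, which is the kind of trade-off worth being able to explain under product constraints.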

Next, focus on Python and SQL. Most OpenAI data scientists use SQL to extract insights from large datasets and Python for modeling, inference, and automation. Practice writing queries against realistic databases and explore top-rated Kaggle notebooks to build fluency and structure your code for readability and efficiency.

It’s also important to study OpenAI’s recent product releases, including GPT-4.5, the deep research tool, and the Operator assistant. Weave product context into your answers—this shows strategic thinking and real interest in OpenAI’s mission. Highlight how you would design metrics or evaluate success for a specific feature or use case.

Finally, mock the entire interview loop. Record yourself walking through experiments, interpreting metrics, or debating feature rollout strategies. Listen back to identify gaps in clarity or logic. The goal is to sound precise and confident when discussing real-world data problems, just like you would as part of a high-stakes OpenAI team.

Conclusion

The OpenAI data scientist role is one of the most rewarding and competitive positions in the industry, offering unparalleled compensation, world-class projects, and a powerful career signal. Preparing well for the OpenAI data scientist interview means mastering experimentation, strengthening your SQL and Python fluency, and aligning your answers with OpenAI’s mission and tools. Focus on high-leverage study, consistent practice, and clear communication. To get started, follow our full OpenAI Data Scientist Learning Path, browse our hand-picked Data Scientist Interview Questions Collection, or read Muhammad’s inspiring Success Story. With the right preparation, you can turn this opportunity into a major career breakthrough.