eBay Data Scientist Interview Guide: Process, Questions & Salary

Introduction

Whether you’re working on recommender systems or running A/B tests at scale, cracking the eBay data scientist interview means preparing for both technical rigor and real-world product impact. eBay’s marketplace isn’t just big—it’s complex, and the data science team is central to how the company optimizes search, trust, and seller tools across a global platform.

Role Overview & Culture

As an eBay data scientist, you’ll spend your days building experiments, analyzing performance, and collaborating with PMs and engineers to make the buyer and seller experience smarter and safer. Common projects span marketplace health metrics, A/B test design, anomaly detection, and algorithm performance monitoring. Every analysis ties back to business impact—whether that’s GMV growth, improved NPS, or operational efficiency.

eBay’s culture emphasizes customer-first thinking, evidence-based decision-making, and fast, iterative experimentation. Data science teams are embedded throughout the org and often shape product direction, particularly when it comes to personalization, pricing, and buyer-seller trust signals.

Why This Role at eBay?

The eBay data science team works on some of the most data-rich and complex problems in ecommerce—from ranking optimization and demand forecasting to fraud detection and listing quality scoring. The impact is immediate and measurable, touching hundreds of millions of users. With a clear growth path from Data Scientist to Senior to Staff, the role offers both challenge and upward mobility.

To secure the role, you’ll navigate a multi-stage eBay data scientist interview that blends analytics, machine-learning depth, and culture fit.

What Is the Interview Process Like for a Data Scientist Role at eBay?

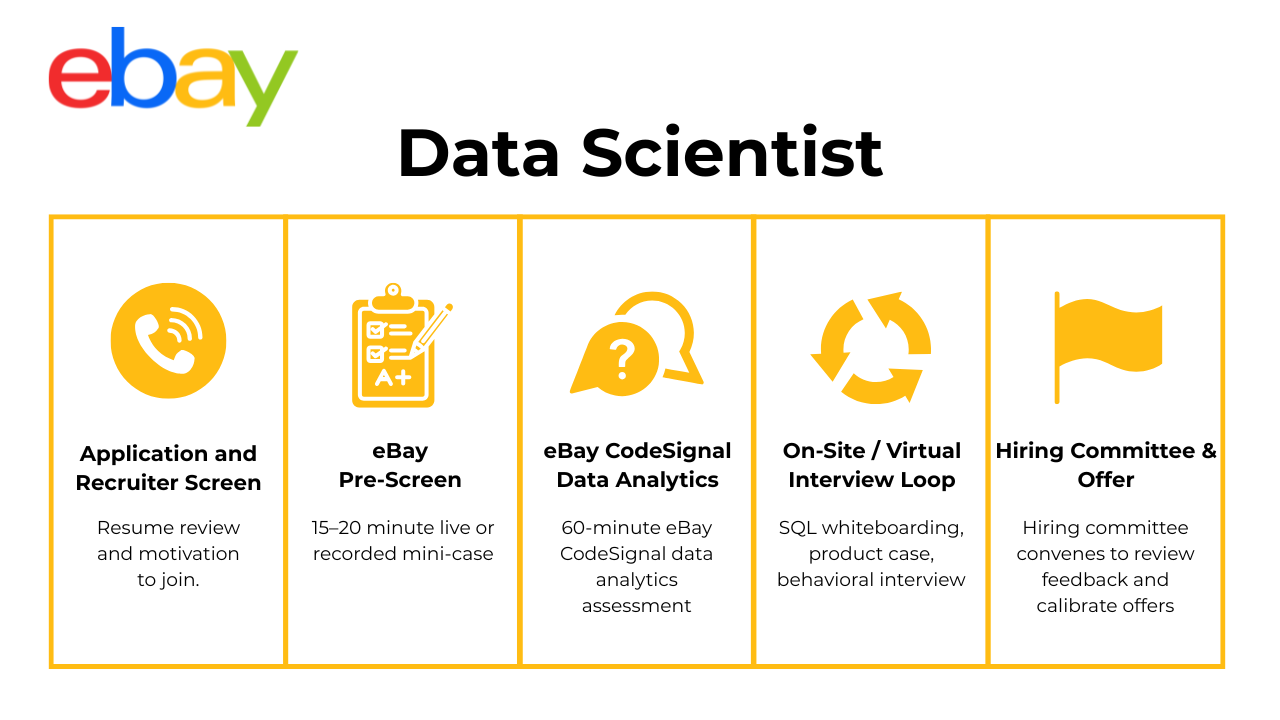

The eBay data scientist interview is built to test your technical fluency, statistical thinking, product sense, and team alignment. The process typically involves five stages.

Application & Recruiter Screen

This initial stage focuses on resume alignment and basic eligibility checks, such as work authorization and location. The recruiter may briefly touch on your technical background, tools you’ve worked with (SQL, Python, etc.), and your motivation for joining eBay.

Technical Phone/Virtual Screen

Next, you’ll have a 30-minute virtual screen focused on SQL and basic statistics. Expect practical SQL queries involving joins, aggregations, or time-window analysis. Statistical questions may cover distributions, hypothesis testing, confidence intervals, and metrics design. Clear explanations and code readability matter here.

Take-Home / Case Study

If you pass the screen, you’ll be given a take-home case. This might involve pulling data (or working with a provided dataset), generating insights, and delivering either a Jupyter notebook or a slide deck. Typical tasks include identifying anomalies, running cohort analyses, or analyzing experiment results. This stage simulates a real work assignment—showcase storytelling, clarity, and business impact.

On-Site / Virtual Interview Loop

The final loop consists of four rounds:

- A live SQL or Python coding round

- A product sense round centered on experiment design

- A machine learning deep dive on model building or deployment

- A behavioral interview that assesses collaboration, ownership, and alignment with eBay’s values

You’ll be evaluated on structured thinking, model evaluation, communication, and decision-making. For senior candidates, this loop may also include an ML system design interview.

Hiring Committee & Offer

Once interviews conclude, a hiring committee reviews feedback and calibrates level. Offers are customized to role level and experience, and include a breakdown of base, bonus, and equity. Feedback is typically gathered within 24 hours to maintain momentum.

Behind the Scenes: eBay uses a “bar-raiser” model, where at least one interviewer ensures consistent hiring standards across teams.

Differences by Level: Senior candidates usually complete an additional ML system design or stakeholder strategy panel. eBay data science intern applicants typically skip the full loop and hiring committee review.

What Questions Are Asked in an eBay Data Scientist Interview?

eBay’s questions cover four core domains: technical execution, machine learning, experimentation, and team alignment. Here’s how they break down.

Coding / Technical Questions

These test your ability to extract, manipulate, and clean data—often from messy or nested marketplace tables. You may be asked to write a SQL query to identify duplicate listings or detect changes in GMV trends by category. Your code will be assessed for clarity, efficiency, and edge-case handling. Big-data optimization and practical logic are key here.

This portion of the interview is also where eBay data science teams evaluate your familiarity with ETL, partitioning strategies, and performance trade-offs.

-

The solution normalizes direction by sorting the two city columns with a LEAST()/GREATEST() pair (or a CASE expression) before grouping. Insert results into flight_routes with SELECT DISTINCT LEAST(origin, destination) AS city_a, GREATEST(origin, destination) AS city_b FROM flights; and then add a composite primary key (city_a, city_b) so future duplicates raise conflicts. Clarify assumptions: airport codes vs. city names and exclusion of self-loops. This pattern shows mastery of de-duplicating undirected relationships, which is handy for shipping-route or buyer–seller matching at eBay scale.

Write a query that returns each user’s total distance traveled, ordered from highest to lowest.

Join users to rides on user_id, then compute SUM(distance) in miles or kilometers, aliasing it as total_distance, and order by total_distance descending. If requirements call for including users with zero rides, use a LEFT JOIN and wrap the sum in COALESCE so they appear with a total of 0. Index rides.user_id to keep the aggregation fast on multi-billion-row logs. From a DS perspective, this metric feeds lifetime-value calculations or personalized coupon targeting for eBay’s motors or shipping verticals.

-

Create a CTE that filters ad_interactions to the last 48 hours, groups by user_id, and keeps those with COUNT(DISTINCT ad_type_id) ≥ 3. Join this qualified set back to interactions, compute SUM(click)/SUM(impression)*100 per ad_type, and order by engagement descending. Discuss the impact of impression spam on denominators and why sessionization granularity matters. Highlight how such filtered KPIs prevent noisy power-users from skewing eBay Ads optimization.

Count “liker’s likers”: users who liked someone who in turn has been liked, but never double-count.

Self-join the likes table: first alias l1 (user → liker), then join to l2 where l2.user_id = l1.liker_id. Aggregate on l1.user_id to count distinct second-degree admirers. Explain edge cases (users liking themselves or circular like-chains) and how DISTINCT prevents over-counting. Such two-hop self-joins translate directly to measuring influence propagation or referral depth in eBay’s social-shopping experiments.

-

Partition by DATE(created_at), apply ROW_NUMBER() OVER (PARTITION BY DATE(created_at) ORDER BY created_at DESC), and filter where row_number = 1. Index on (created_at) to keep the window scan efficient. Optionally store the result in a daily rollup table to accelerate finance dashboards. This pattern underpins end-of-day GMV snapshots or fraud checks for high-value payments.

-

Join page sponsorships to user recommendation events, count the rows where user.postal_code = sponsorship.postal_code, then divide by total recommendations per page. Guard against division by zero with NULLIF(denominator, 0). The metric informs geo-targeting ROI; a low in-zip percentage may trigger broader radius bidding for eBay Local ads. Consider materializing intermediate daily facts to avoid repeated heavy joins.

Calculate annual retention for a B2B SaaS product from the annual_payments table.

Label each customer’s first payment year as the cohort, then LEFT JOIN the following calendar year to flag renewals. Retention = COUNT(renewed)/COUNT(cohort) per year. Discuss nuances: partial refunds, early renewals, or multi-year contracts. Such cohort views guide upsell timing for eBay Pro seller tools or Store subscriptions.

Sample a truly random row from a 100 M-row table without full scans or locking.

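If IDs are dense, run SELECT * FROM table WHERE id >= FLOOR(RANDOM()*max_id) ORDER BY id LIMIT 1; and make sure the random threshold is computed once rather than re-evaluated per row. If IDs are not dense, gaps bias the pick toward the rows that follow them, so pre-compute a random hash column or use reservoir sampling in a background job to refresh a staging table. Explain trade-offs: PostgreSQL’s TABLESAMPLE SYSTEM (block-level and fast, but clustered) vs. TABLESAMPLE BERNOULLI (row-level, but it reads the whole table) vs. ORDER BY RANDOM(), which sorts every row. MySQL has no TABLESAMPLE clause, so the ID-seek approach is the usual fallback there. Random sampling is vital for offline modeling or manual bug repro without overloading eBay’s production clusters.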
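As a rough illustration of the dense-ID approach, the sketch below uses Python's built-in sqlite3 module against a hypothetical items table. The table name, its columns, and the helper random_row_by_id are assumptions made for this example, not anything taken from eBay's stack. The random threshold is drawn once in application code, and the primary-key index turns the lookup into a seek rather than a scan.

```python
import random
import sqlite3


def random_row_by_id(conn, table="items", rng=random):
    """Pick one row by seeking to a random id threshold (assumes dense ids)."""
    # `table` is a trusted constant here; never interpolate user input into SQL.
    lo, hi = conn.execute(f"SELECT MIN(id), MAX(id) FROM {table}").fetchone()
    if lo is None:
        return None  # empty table
    threshold = rng.randint(lo, hi)  # drawn once, outside the query
    return conn.execute(
        f"SELECT id, label FROM {table} WHERE id >= ? ORDER BY id LIMIT 1",
        (threshold,),
    ).fetchone()


# Hypothetical in-memory table with ids 1..1000 and no gaps.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, label TEXT)")
conn.executemany(
    "INSERT INTO items VALUES (?, ?)",
    [(i, f"row-{i}") for i in range(1, 1001)],
)

row = random_row_by_id(conn, rng=random.Random(7))
print(row)
```

If the id sequence has gaps, the row right after a large gap is returned more often than its neighbors, which is why a hash column or reservoir sample is the safer choice for sparse keys.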

Machine-Learning / Modeling Questions

Expect questions on how to build or evaluate models in production contexts. For example: “How would you build a click-through-rate prediction model for new listings?” You’ll be expected to walk through feature engineering, model selection, cold-start problems, and online vs. offline evaluation metrics (AUC, precision/recall, business KPIs). Bonus points for discussing experimentation and model decay over time.

What’s the difference between Lasso and Ridge Regression?

Lasso (L1) adds the sum of absolute coefficient values to the loss function, driving less-informative features to exactly zero and yielding sparse models that aid feature selection. Ridge (L2) penalizes the squared magnitude of coefficients, shrinking them toward zero without usually reaching it, which stabilizes estimates when predictors are highly collinear. In practice Lasso improves interpretability but can be unstable when predictors are correlated, since it tends to keep one member of a correlated group and drop the rest, while Ridge reduces variance but keeps all predictors. eBay data scientists often trial ElasticNet to blend both, striking a balance between sparsity and shrinkage. Hyper-parameter tuning via cross-validation decides which penalty best minimizes out-of-sample error on marketplace data.
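The contrast between the two penalties is easiest to see in the textbook special case of an orthonormal design, where each coefficient can be shrunk on its own. Under that simplifying assumption, with the loss written as ½‖y − Xβ‖² plus either λ‖β‖₁ (Lasso) or (λ/2)‖β‖² (Ridge), the solutions are soft-thresholding and proportional shrinkage of the OLS coefficient. The coefficient values and λ below are made up for illustration.

```python
import math


def ridge_shrink(b_ols, lam):
    """Ridge under an orthonormal design: scale every coefficient by 1/(1+lam)."""
    return b_ols / (1.0 + lam)


def lasso_shrink(b_ols, lam):
    """Lasso under an orthonormal design: soft-threshold at lam."""
    magnitude = max(abs(b_ols) - lam, 0.0)
    return math.copysign(magnitude, b_ols) if magnitude > 0 else 0.0


ols = [3.0, -0.4, 0.1]   # hypothetical OLS coefficients
lam = 0.5                # hypothetical penalty strength

ridge = [ridge_shrink(b, lam) for b in ols]
lasso = [lasso_shrink(b, lam) for b in ols]

print("ridge:", ridge)   # every coefficient shrinks but stays non-zero
print("lasso:", lasso)   # coefficients with |b| <= lam become exactly zero
```

With correlated predictors there is no per-coefficient closed form, so this is only an intuition aid; real fits rely on a solver plus cross-validation as described above.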

How would you interpret logistic-regression coefficients for categorical and boolean variables?

Each coefficient represents the log-odds change of the outcome per unit increase in the predictor, holding others constant. For a boolean variable (0/1), exp(β) is the odds ratio when the flag switches from false to true. For a k-level categorical feature encoded with one-hot vectors, exp(β) for each dummy is the odds ratio relative to the omitted reference level. Confidence intervals around exp(β) reveal statistical significance (an interval that excludes 1); wide intervals warn of sparse categories. At eBay this interpretation guides listing-quality audits by quantifying how specific badge flags shift sell-through probability.

Given keyword and bid-price data, how would you build a model to bid on an unseen keyword?

Start by converting raw keywords into numerical vectors—TF-IDF, word2vec, or transformer embeddings—so that semantic similarity informs price. Frame bidding as a regression problem predicting expected cost-per-click (CPC) or as a contextual-bandit that maximizes expected profit under budget constraints. Incorporate seasonality, historical click-through rate, and advertiser vertical as features. Train with regularized models (e.g., Gradient Boosting) and calibrate with isotonic regression to keep predicted bids in realistic ranges. Finally deploy an online-learning loop that updates weights daily as eBay’s ad marketplace dynamics shift.

Without feature weights, how can you give each rejected loan applicant a reason for rejection?

Use post-hoc explainability tools such as SHAP or LIME to approximate local feature importance per prediction. These methods perturb inputs around the rejected instance, fit surrogate models, and surface the top contributing factors—credit score drop, high debt-to-income ratio, etc. For fairness, cap the explanation list to actionable factors and audit for disparate impact across demographics. Cache the per-applicant explanations so customer-support staff can reference them quickly. This approach satisfies regulatory transparency without exposing proprietary weight vectors.

What are the logistic and softmax functions, and how do they differ?

The logistic (sigmoid) function maps any real input to the (0,1) interval, modeling binary-class probabilities. Softmax generalizes this to multi-class settings, converting a vector of logits into a probability distribution that sums to one; with two classes it reduces to the sigmoid of the difference between the two logits. Whereas sigmoid has one output neuron, softmax emits k probabilities whose cross-entropy loss penalizes the full distribution. In logistic regression you need only one decision boundary; softmax regression learns k weight vectors jointly, of which only k–1 are identifiable because adding a constant to every logit leaves the probabilities unchanged. eBay relies on softmax for multi-category recommendation tasks and sigmoid for binary fraud detection.
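A few lines of standard-library Python make the relationship concrete. This is a minimal sketch with arbitrary logit values; the only claim it checks is that a two-class softmax over the logits [z, 0] gives the same probability as sigmoid(z).

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softmax(logits):
    """Convert logits to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])   # three-class example, arbitrary logits
two_class = softmax([1.5, 0.0])    # two classes: logit z = 1.5 vs. baseline 0

print(probs, sum(probs))
print(two_class[0], sigmoid(1.5))  # these two values agree
```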

How would you automatically detect prohibited firearm listings on a marketplace?

Build a two-stage pipeline: (1) an NLP classifier that scans titles, descriptions, and category metadata for gun-related keywords and semantic matches using a fine-tuned transformer; (2) a vision model (CNN) that flags firearm images. Fuse the outputs with a rules engine—e.g., high NLP probability AND image match triggers immediate takedown, otherwise send to human moderation. Continuously update the model with adversarial examples (code words, partial images) discovered by reviewers. Precision is critical to avoid false positives on toy guns; threshold tuning uses the business cost of missed firearms versus seller friction.

We have one million ride records—how do we know if that’s enough to train an accurate ETA model?

Estimate learning curves by subsampling data (10 k, 50 k, 100 k, …) and plotting validation MAE; flattening indicates sufficiency. Compute feature coverage: ensure rare routes and rush-hour periods have hundreds of samples to avoid high variance. Use k-fold cross-validation stratified by geographic grid to simulate unseen pickup–drop-off pairs. If error bars remain wide or bias persists for specific neighborhoods, collect more targeted data. This principled approach balances training cost against marginal accuracy gains—directly relevant for eBay’s latency predictions in shipping estimates.

How would you tune the ratio of public versus private content in a news-feed ranking model?

Frame the mix as a continuous control variable and run multi-armed bandit experiments that vary the public-content weight per user. Features include relationship strength, content freshness, and past engagement with public pages versus friends. Offline, train a gradient-boosted ranking model with pairwise loss; online, evaluate click-through, dwell time, and negative feedback. Monitor trade-offs: public-content boosts session length but may hurt social interactions. Incorporate diversity constraints so high-GMV listings (e.g., eBay deals) don’t overwhelm personal updates.

-

The model’s coefficients were estimated on corrupted scale, so predictions for affected rows can be wildly off, especially if the variable dominates. Re-training is necessary after correcting or removing bad observations; simple rescaling post-hoc fails because the model learned nonlinear interactions. Identify faulty rows via range checks (e.g., values > 10 × historical max) and impute or exclude them, then retrain and re-validate. Add data-quality checks to the ETL to prevent recurrence—critical for eBay pricing models where unit scale matters.

Design an ML system that minimizes wrong or missing orders for a food-delivery app.

Collect labeled incidents of wrong/missing items and engineer features across restaurant (menu complexity), courier (drop-off distance), and order (customizations). Train a gradient-boosted classifier to predict risk in real time; high-risk orders trigger redundancy actions—photo confirmation at pickup, double-bag tagging, or courier re-routing. Evaluate with precision-recall because incidents are rare; simulate cost savings from prevented refunds. Deploy as a streaming service integrated into order-dispatch APIs, with feedback loops from customer-support tickets to keep the model fresh—an architecture blueprint transferable to eBay’s fulfillment-defect mitigation.

Product & Experimentation Questions

Data scientists at eBay are often embedded in product teams, so product sense matters. You might be asked to design an A/B test measuring the impact of a shipping badge on conversion. Interviewers look for strong hypothesis formation, metric prioritization (e.g., conversion vs. bounce rate), and understanding of trade-offs in power analysis and test duration. Knowing when to run a holdout or how to mitigate selection bias will set you apart.

-

Propose a client-side A/B test that randomizes users 50 / 50 at first launch, stratified by platform and baseline posting frequency to balance heavy and casual posters. Primary metrics are post-creation rate and downstream engagement (comments, shares) per user; guardrail metrics include time-to-post and negative feedback on UI friction. Because the change may affect social propagation, monitor cross-network spill-over with “ghost holdouts” who never see treatment but are friends of treated users. Run for at least one posting cycle (e.g., two weeks) and use CUPED to reduce variance. Declare success only if lift is statistically significant and no guardrail regresses by more than 1 %.
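CUPED comes up repeatedly in experiment-design answers, so it helps to know the mechanics. The sketch below is a simplified illustration with made-up per-user posting counts: it subtracts θ·(x − mean(x)) from each outcome, where x is the pre-experiment value of the same metric and θ = cov(y, x)/var(x). In a real test θ would typically be estimated on data pooled across both arms; the function name and numbers here are assumptions for the example.

```python
from statistics import mean, pvariance


def cuped_adjust(y, x):
    """Return CUPED-adjusted outcomes: y - theta * (x - mean(x))."""
    x_bar, y_bar = mean(x), mean(y)
    cov_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / len(x)
    theta = cov_xy / pvariance(x)  # raises if x is constant (zero variance)
    return [yi - theta * (xi - x_bar) for xi, yi in zip(x, y)]


# Hypothetical posts per user before and during the experiment.
pre = [2.0, 5.0, 1.0, 8.0, 4.0, 6.0]
post = [3.0, 6.0, 1.0, 9.0, 5.0, 6.0]

adjusted = cuped_adjust(post, pre)

print("mean before/after adjustment:", mean(post), mean(adjusted))
print("variance before/after adjustment:", pvariance(post), pvariance(adjusted))
```

The adjustment leaves the mean unchanged and removes the share of variance explained by pre-period behavior, which is what tightens the confidence interval on the treatment effect.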

-

Use stratified random sampling across key dimensions—watch-time decile, genre affinity, device type, and churn risk—to mirror the full population; oversample high-feedback users to guarantee survey responses. Apply an exclusion window so no household gets multiple concurrent pilots that might interact. During the two-week preview, track completion rate, episode drop-off curves, and incremental viewing minutes versus a matched control of 10 000 users held out. Estimate full-launch lift with inverse-propensity weighting to correct any residual sampling bias. Finally, run sentiment surveys and compare Net-Promoter Score to the genre median before green-lighting a global release.

-

Unequal sample sizes do not by themselves bias the estimator as long as randomization is preserved; they only reduce precision, because the standard error of the difference is dominated by the smaller arm. Show mathematically that the difference-in-means remains unbiased and that the test statistic’s variance formula already accounts for n₁ ≠ n₂. The risk is lost power: the smaller arm limits how small an effect the test can detect, so pre-compute the minimal detectable effect (MDE) for the planned split. Emphasize running CUPED or regression adjustment to tighten intervals instead of re-balancing mid-test, which would introduce bias.
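The precision cost of an uneven split can be checked with a short calculation. The sketch assumes a common outcome standard deviation σ in both arms, so the standard error of the difference in means is σ·√(1/n₁ + 1/n₂), and it uses the common rule-of-thumb multiplier 2.8 (about 1.96 + 0.84) for a two-sided 5 % test with 80 % power. Sample sizes are hypothetical.

```python
import math


def diff_in_means_se(sigma, n_a, n_b):
    """Standard error of the difference in means with a common sigma."""
    return sigma * math.sqrt(1.0 / n_a + 1.0 / n_b)


def approx_mde(sigma, n_a, n_b, multiplier=2.8):
    """Rough minimal detectable effect at alpha = 0.05 (two-sided), 80% power."""
    return multiplier * diff_in_means_se(sigma, n_a, n_b)


balanced = diff_in_means_se(1.0, 5000, 5000)   # 50/50 split of 10,000 users
skewed = diff_in_means_se(1.0, 9000, 1000)     # 90/10 split of the same users

print("SE balanced:", balanced)
print("SE skewed:  ", skewed)
print("MDE ratio (skewed / balanced):", approx_mde(1.0, 9000, 1000) / approx_mde(1.0, 5000, 5000))
```

The estimate stays unbiased in both cases; the 90/10 split simply needs a larger true effect before the same test can detect it.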

Your manager ran an experiment with 20 variants and found one “significant” winner. Anything fishy?

Yes. With 20 variants each tested at a 5 % false-positive rate, the expected number of false discoveries under the null is about 1.0 (20 × 0.05), and the chance of at least one is roughly 64 % if the tests are independent. Recommend a Holm–Bonferroni correction to control the family-wise error rate, or Benjamini–Hochberg to control the false discovery rate. Ask for the raw p-value; if unadjusted, the win could be noise. Suggest re-running with hierarchical Bayes or folding variants into a two-stage experiment (screen first, then confirm the winner) to mitigate “p-hacking” risk.
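Both corrections are short enough to write from scratch in an interview. The sketch below implements the Holm step-down and Benjamini–Hochberg step-up procedures in plain Python and applies them to a hypothetical set of 20 p-values in which one variant has p = 0.03; all numbers are invented for illustration.

```python
def holm_reject(pvals, alpha=0.05):
    """Holm step-down procedure: controls the family-wise error rate."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if pvals[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # every larger p-value is retained as well
    return reject


def bh_reject(pvals, alpha=0.05):
    """Benjamini-Hochberg step-up procedure: controls the false discovery rate."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    cutoff = 0  # largest rank whose p-value clears its threshold
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= alpha * rank / m:
            cutoff = rank
    reject = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= cutoff:
            reject[i] = True
    return reject


# Hypothetical experiment: 20 variants, one "winner" at p = 0.03.
pvals = [0.03] + [0.2 + 0.04 * k for k in range(19)]

print("P(at least one false positive):", 1 - 0.95 ** 20)
print("Holm keeps the winner:", holm_reject(pvals)[0])
print("BH keeps the winner:  ", bh_reject(pvals)[0])
```

In this made-up case neither procedure retains the winner, because 0.03 is far above the first threshold of 0.05/20 = 0.0025.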

Compare user-tied versus instance-tied A/B tests—pros and cons.

User-tied (between-subjects) randomizes at user-id, eliminating cross-variant contamination and simplifying retention analyses, but requires larger samples when outcomes are rare per user. Instance-tied (within-subjects) randomizes at page-view or query, boosting power via repeated measures but risks carry-over bias, learning, and network interference. In marketplaces like eBay, listing-impression tests (instance-tied) can measure CTR faster, yet recommender changes often warrant user-level splits to avoid feed-pollution. State that the choice hinges on interference risk, metric volatility, and engineering feasibility.

How would you estimate the effect on teen engagement when their parents join Facebook?

Direct randomization is unethical, so use difference-in-differences: identify teens whose parents join during a window and matched teens whose parents were already onboard. Verify parallel pre-trends in sessions and messages; then compute post-parent-join engagement delta. Add household fixed effects to absorb unobserved family traits. Sensitivity checks include placebo joins a month before the actual event. Report causal estimates with robust standard errors clustered by household.
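The core difference-in-differences estimate is just two subtractions, as the sketch below shows with invented weekly-session numbers. The group labels and values are assumptions for illustration; a real analysis would fit a regression with fixed effects and clustered standard errors, as described above, rather than compare four raw means.

```python
from statistics import mean


def diff_in_diff(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """(change in treated group) minus (change in comparison group)."""
    treated_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(ctrl_post) - mean(ctrl_pre)
    return treated_change - control_change


# Hypothetical weekly sessions per teen.
treat_pre = [10, 12, 11]    # before a parent joins
treat_post = [8, 9, 10]     # after a parent joins
ctrl_pre = [9, 11, 10]      # comparison teens, same calendar window
ctrl_post = [9, 10, 11]

effect = diff_in_diff(treat_pre, treat_post, ctrl_pre, ctrl_post)
print("estimated effect on weekly sessions:", effect)
```

The estimate is only credible if the two groups were trending in parallel before the event, which is what the pre-trend check is for.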

Spotify launched curated playlists without an A/B test—how do you measure impact?

Apply synthetic-control or propensity-weighted interrupted-time-series: construct a counter-factual engagement series from users with low probability of noticing the feature. Model engagement as a function of seasonality, release calendar, and user tenure; the post-launch residual gap estimates treatment effect. Validate by back-testing on historical launches. If logs record first-playlist-exposure timestamps, create staggered adoption panels and run generalized difference-in-differences.

-

Use a CTE joining ab_tests to subscriptions, then define converted as CASE WHEN variant = 'control' AND subscription_id IS NOT NULL THEN 1 WHEN variant = 'trial' AND subscription_id IS NOT NULL AND (cancel_date IS NULL OR cancel_date >= start_date + INTERVAL '7 day') THEN 1 ELSE 0 END. Aggregate AVG(converted) per variant for conversion rate. This blended logic respects variant-specific definitions while keeping one query plan, important for large fact tables at eBay.

How would you design an Instagram “close friends” experiment that accounts for network effects?

Use cluster randomization on ego-nets: treat a seed set of users and all their direct followers to minimize diffusion across boundaries. Calculate “graph cut” to keep contamination < 5 %. Measure outcome both on treated egos and indirectly affected alters to estimate total-network effect. Alternatively, deploy a self-selection phase then instrumental-variable analysis using assignment as an instrument. Explain power trade-offs: cluster designs need larger sample but preserve causal validity under interference.

Uber Fleet runs an A/B with non-normal metrics—how do you pick a winner?

For skewed, heavy-tailed KPIs (e.g., revenue per fleet) use non-parametric tests like Mann–Whitney U, a permutation test, or a bootstrap of the mean/median difference. Keep in mind that Mann–Whitney compares rank distributions rather than means, so pick the test that matches the business question. Alternatively, apply a log-transformation plus Welch’s t-test if zeros are rare. Estimate effect size with robust Huber M-estimators, report bootstrap confidence intervals, and validate with the Bayesian posterior probability that variant > control. Power analysis should reflect the variance of the chosen test statistic.
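A permutation test needs no distributional assumption, which makes it a convenient thing to sketch on a whiteboard. The version below enumerates every possible split exactly, so it is only practical for tiny samples; with real experiment data one would draw random permutations instead. The revenue figures are hypothetical.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_pvalue(a, b):
    """Two-sided exact permutation test on the difference in means."""
    pooled = list(a) + list(b)
    n_a, n = len(a), len(pooled)
    total = sum(pooled)
    observed = abs(mean(a) - mean(b))
    extreme = 0
    count = 0
    for idx in combinations(range(n), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / n_a - (total - sum_a) / (n - n_a))
        if diff >= observed - 1e-12:  # tolerance for float round-off
            extreme += 1
        count += 1
    return extreme / count


# Hypothetical revenue per fleet, with one heavy-tailed outlier in control.
control = [120, 95, 110, 4000]
variant = [130, 140, 150, 160]

p_value = exact_permutation_pvalue(control, variant)
print("permutation p-value:", p_value)
```

Swapping the mean for the median inside the loop gives a test that is less sensitive to a single outlier like the 4000 above.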

-

Define primary outcome as trade volume within 30 minutes of open; secondary metrics are session starts and opt-out rate. Because only active users were eligible, verify randomization balance on past-7-day trades to avoid survivorship bias. Compute incremental lift using CUPED to adjust for pre-experiment behavior and present both relative (%) and absolute ($) effect sizes. Check heterogeneous treatment effects across risk tiers to ensure push doesn’t spur excessive speculative trading. Conduct a sequential-test boundary to stop early if the notification underperforms or harms retention.

How would you validate that an eBay recommender-model launch did not degrade long-tail seller exposure?

Before rollout, define a seller-equity metric: share of impressions going to listings outside the top‐10 % sellers. Run a stratified A/B test with traffic splitting at user-id and compute change in this metric alongside GMV. If GMV rises but equity falls beyond a pre-set threshold, trigger a rollback or re-weight long-tail prior in the model. Include counterfactual logging to attribute impression shifts to model scores, enabling what-if simulation without more experiments.
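One possible way to compute the seller-equity metric is sketched below. It assumes "top 10 % sellers" means the top decile by prior-period GMV, which the text does not specify, and the seller ids, GMV values, and impression counts are all invented for the example.

```python
def long_tail_share(impressions, gmv, top_fraction=0.10):
    """Share of impressions going to sellers outside the top GMV fraction."""
    ranked = sorted(gmv, key=gmv.get, reverse=True)
    n_top = max(1, round(len(ranked) * top_fraction))
    top_sellers = set(ranked[:n_top])
    total = sum(impressions.values())
    if total == 0:
        return 0.0
    tail = sum(v for seller, v in impressions.items() if seller not in top_sellers)
    return tail / total


# Hypothetical marketplace with ten sellers; s0 has the highest prior GMV.
gmv = {f"s{i}": 1000 - 100 * i for i in range(10)}

control_impressions = {seller: 100 for seller in gmv}
treatment_impressions = dict(control_impressions, s0=400)  # new model favors s0

control_share = long_tail_share(control_impressions, gmv)
treatment_share = long_tail_share(treatment_impressions, gmv)

print("long-tail share, control:  ", control_share)
print("long-tail share, treatment:", treatment_share)
```

Comparing the two shares against the pre-set threshold is what would drive the rollback or re-weighting decision described above.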

Describe a time you had to persuade stakeholders to delay a feature because the experiment results were inconclusive. What data did you show, and how did you reach alignment?

Interviewers want a STAR narrative that blends statistical rigor with soft skills: discuss explaining confidence intervals, running additional power analysis, and offering alternative quick wins while the team collected more data. Highlight how trust built through transparent dashboards led to consensus without eroding delivery momentum.

Behavioral & Culture-Fit Questions

The behavioral interview digs into how you collaborate and problem-solve in ambiguous or high-pressure situations. You may be asked about a time you owned a high-priority bug fix, resolved a conflict with a PM or engineer, or dealt with incomplete data. Focus on impact, initiative, and how you use data to drive clarity. eBay values bias to action, resilience, and customer empathy in its DS org.

Describe a data project you worked on. What were some of the challenges you faced?

Choose a project where insights changed product direction, e.g., building a churn-risk model for eBay Stores. Highlight one technical roadblock (schema drift, massive joins) and one organizational hurdle (misaligned KPIs across teams). Explain how you unblocked pipelines, validated assumptions with stakeholders, and quantified the lift (“reduced voluntary churn 3 pp”). Conclude with the lesson you’d carry into eBay’s fast shipping or ads domains.

What are effective ways to make data more accessible to non-technical people?

Discuss tiered dashboards that translate SQL fields into business language, with hover-tooltips and natural-language summaries. Mention scheduled insight emails, Looker Explores locked to curated views, and self-serve notebooks backed by governed feature stores. Stress role-based access that protects PII while empowering category managers to pull seller metrics themselves—cutting ad-hoc analyst tickets and accelerating decision-making.

What would your current manager say about you—strengths and constructive critiques?

Pick two strengths that map to eBay’s culture—say, “obsession with data quality” and “ability to convert experiments into revenue actions.” Offer one candid growth area (e.g., delegating ETL tasks) and outline concrete steps you’ve taken (pair-programming, documenting pipelines). Support each point with evidence—Sprint burndown charts, peer-review feedback—to demonstrate self-awareness and high ownership.

Talk about a time you had trouble communicating with stakeholders. How did you overcome it?

Use a STAR story where engineers wanted a metric based on log-level events while finance insisted on booking-level numbers. Describe running a workshop to align on definitions, producing a one-pager with SQL snippets, and rolling out an alert that caught discrepancies. Emphasize empathy, iterative feedback, and how the shared metric unlocked a unified ads-revenue dashboard.

Why do you want to work with us?

Tie your passion for circular commerce and big-data experimentation to eBay’s mission of creating economic opportunity for all. Reference recent initiatives like Promoted Listings Advanced or the Guaranteed Fit program to show homework. Explain how your experience in causal-inference frameworks and scalable feature engineering can accelerate seller trust and buyer conversion.

How do you prioritize multiple deadlines and stay organized?

Outline an impact-versus-effort matrix synced to OKRs, daily stand-ups for risk surfacing, and a Kanban board that visualizes ETL, modeling, and dashboard tickets. Mention using automated data-quality tests and Airflow SLA alerts to avoid fire drills. Give an example where this system rescued a Black-Friday pricing model from last-minute scope creep without sacrificing scientific rigor.

Describe a situation where an experiment’s results contradicted senior leadership’s expectations. How did you handle the conversation and next steps?

Interviewers look for backbone and diplomacy: walk through presenting p-values, confidence intervals, and scenario analysis; proposing follow-up tests rather than conceding to HiPPO pressure; and turning skepticism into an action plan—illustrating data-driven integrity.

Tell me about a time you discovered a bias in a model post-launch. How did you detect it, communicate the risk, and remediate?

Highlight monitoring dashboards that flagged performance drops for a specific segment, the root-cause deep-dive (feature leakage, sample imbalance), and the mitigation (retraining, fairness constraint, or feature removal). Stress transparency with product and legal teams—critical in marketplaces handling diverse sellers and buyers.

How to Prepare for a Data Scientist Role at eBay

To succeed in the eBay data scientist interview, candidates need to combine technical precision with product intuition. Because the role sits at the intersection of marketplace experimentation and scalable ML systems, preparation should reflect both analytical depth and business context.

Study Marketplace Metrics

Start by getting fluent in eBay-specific KPIs like GMV, conversion rate, and listing quality score. These metrics form the basis of many case studies and experiments you’ll encounter during interviews. Knowing how to segment users, normalize for seasonality, or attribute metric shifts to product changes will give your answers real-world weight.

Balance Prep

Your study plan should mirror the interview’s structure: dedicate 40% of your time to SQL and Python coding practice, 30% to statistics and experimentation, 20% to machine learning design, and 10% to behavioral questions. Each round is different, but all require structured thinking and the ability to justify your approach.

Think Out Loud

During technical and product rounds, it’s not just about the right answer—it’s about the clarity of your process. Voice your assumptions, articulate trade-offs, and flag unknowns as you go. This helps interviewers follow your logic and signals maturity in handling ambiguous datasets or business scenarios.

Mock Interviews

Practice makes a huge difference—especially when you’re under time pressure. Set up a Mock Interview with a former eBay data scientist or try the AI Interviewer to simulate real interview scenarios. Recording your answers helps you refine structure, tone, and timing.

FAQs

What Is the Average Salary for an eBay Data Scientist?

The eBay data scientist salary typically includes a base salary, annual performance bonus, and equity through RSUs. Total compensation varies by level and location, with Senior and Staff-level roles receiving significantly higher equity grants and scope-related bonuses.

Does eBay Hire Data Science Interns?

Yes. The eBay data science intern program runs primarily during the summer, with applications opening in early fall. Interns are matched with product or platform teams and work on real-world projects like experiment analysis or ML prototyping. Conversion rates to full-time roles are strong, especially for Master’s and PhD candidates with strong SQL and storytelling skills.

Conclusion

The best way to prepare for the data science interview at eBay is to build a toolkit that blends analytics, experimentation design, and marketplace product sense. From crafting SQL queries to justifying uplift metrics in A/B tests, you’ll need both speed and strategic depth.

For more help, visit our broader eBay interview questions & process hub, or check out role-specific prep for data science and machine learning. Ready to test yourself? Try a timed drill or book a mock interview today.

For inspiration, read how Dhiraj Hinduja transitioned from analyst to Data Science Manager using a structured prep approach. You’re one interview away—go get it.