eBay Machine Learning Engineer Interview Guide: Process, Questions & Salary

Introduction

Going through the eBay machine learning engineer interview process means preparing to join one of the most data-rich environments in tech. As an eBay ML engineer, you’ll build and deploy real-time models that power search, ads, personalization, and fraud detection, touching billions of marketplace interactions each week. Your day-to-day might involve fine-tuning ranking models, optimizing GPU inference with Triton, or collaborating with PMs and infra engineers to scale models from notebook to production.

eBay’s engineering culture centers on “technology-enabled economic opportunity.” That means machine learning isn’t theoretical—it drives measurable value for buyers and sellers around the world. Engineers work in fast feedback loops, deploying experiments quickly and using rich A/B testing infrastructure to iterate on everything from relevance to trust signals.

The machine learning engineer interview questions you’ll face at eBay reflect this mindset: they test your ability to balance theory, system design, and practical constraints. Let’s walk through the full process and what to expect.

Why This Role at eBay?

Machine Learning Engineers at eBay shape the engine behind hundreds of millions of listings and billions of daily searches. You’ll work on a modern tech stack—GPU-based Triton inference, Airflow pipelines, PySpark, and scalable feature stores. Growth is built into the role, with clear steps from MLE to Senior to Staff Engineer and beyond.

Landing the role means acing a multi-stage eBay machine learning engineer interview covering applied ML, coding, and product sense.

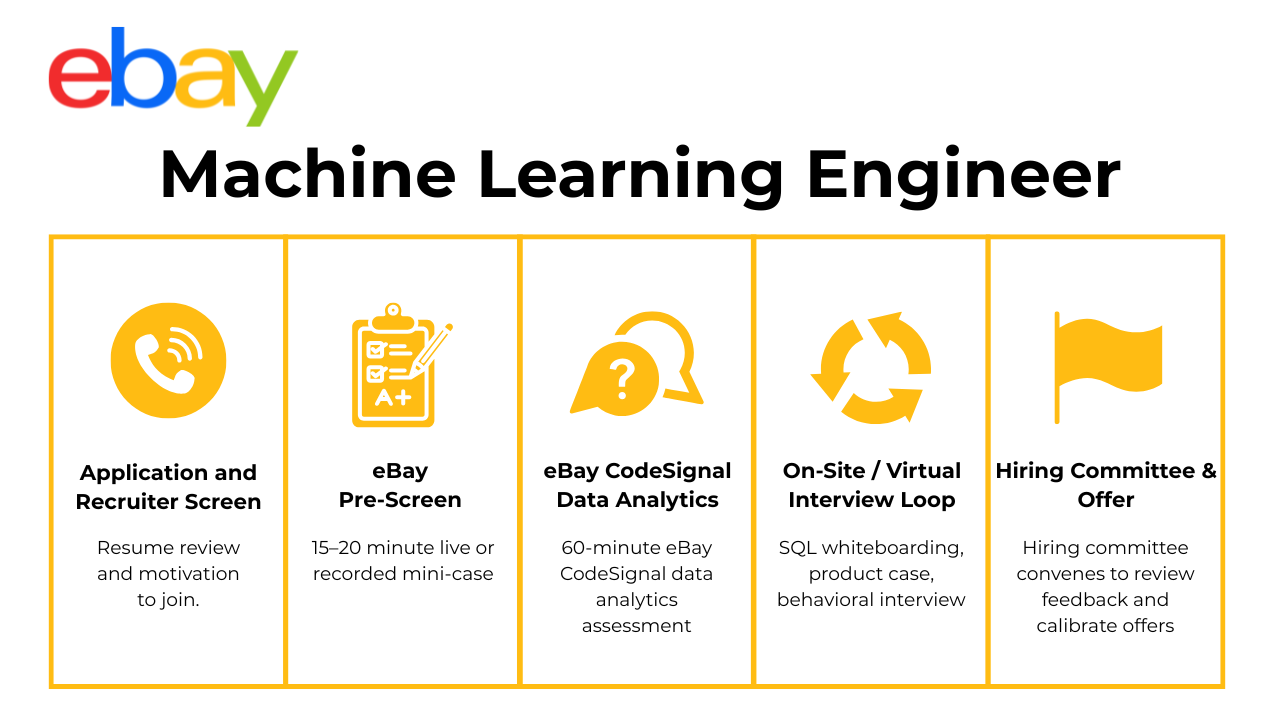

What Is the Interview Process Like for a Machine Learning Engineer Role at eBay?

The interview process evaluates your end-to-end ML skillset—from coding and algorithms to system design and deployment awareness. Expect five key stages.

Application & Recruiter Screen

Your journey begins with a recruiter call focused on your resume, technical fit, and compensation expectations. They’ll also explain the team’s structure and where you might align—Search, Advertising, Fraud, or Infrastructure.

ML Assessment (CodeSignal or In-House)

This stage, sometimes referred to as the eBay ML assessment, involves a 60-minute technical screen. You’ll complete a mix of LeetCode-style coding and a machine learning task, such as tuning a model’s AUC on tabular data or implementing a mini feature selection pipeline. It tests both programming fluency and applied ML understanding.

Technical Phone Screens

You’ll then complete two virtual interviews—one focused on data structures and algorithms, and the other on ML theory and problem-solving. Expect to whiteboard implementations, walk through model evaluation metrics, and discuss trade-offs in model choice or feature design. These interviews dig into how well you generalize across model types and how deeply you understand your past ML projects.

On-Site / Virtual Interview Loop

The final loop includes 3–4 interviews:

- A coding round

- An ML system design interview

- A behavioral interview focused on collaboration

- A session with your potential manager

In some cases, the system design round will center on a prompt similar to the eBay ML challenge, where you’re asked to design a live-serving pipeline (e.g., near-real-time re-ranking for search using online learning). You’ll be expected to explain feature storage, model retraining frequency, latency considerations, and trade-offs between batch and streaming inference.

Hiring Committee & Offer

Once the loop is complete, interviewers submit written feedback within 24 hours. A hiring committee evaluates your overall performance and calibrates your level. If successful, you’ll receive a tailored offer including base, bonus, equity, and leveling.

Behind the Scenes: eBay uses a “bar-raiser” system to ensure consistent hiring standards across roles and teams.

Differences by Level: Senior candidates will face a deeper ML architecture design round and are expected to discuss long-term scalability and stakeholder influence. Junior candidates typically face lighter system questions and more hands-on coding.

What Questions Are Asked in an eBay Machine Learning Engineer Interview?

eBay’s machine learning engineer interview questions are designed to test your depth across four pillars: algorithms, modeling, applied ML systems, and collaboration.

Coding / Algorithm Questions

Expect implementation-based prompts such as “Implement feature-hashing for sparse text input” or “Create a deduplication function for user search queries.” These questions test your coding speed, data structure choices, and understanding of time and space complexity. You may also be asked to optimize for memory in a real-time prediction context—typical for production-grade ML.
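For the two implementation prompts above, a minimal Python sketch might look like the following. It assumes whitespace tokenization and uses an MD5 digest purely as a stable, process-independent hash (Python's built-in hash() is salted per process, which would break training/serving consistency). The function names are illustrative, not an eBay API.

```python
import hashlib
from collections import defaultdict


def _bucket_and_sign(token: str, n_buckets: int) -> tuple[int, int]:
    """Map a token to a bucket index and a +1/-1 sign using a stable digest."""
    digest = hashlib.md5(token.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "little") % n_buckets
    sign = 1 if (digest[8] & 1) == 0 else -1  # independent byte decides the sign
    return bucket, sign


def hash_features(text: str, n_buckets: int = 2**20) -> dict[int, float]:
    """Feature-hash lowercase whitespace tokens into a sparse {bucket: value} dict."""
    vec: dict[int, float] = defaultdict(float)
    for token in text.lower().split():
        bucket, sign = _bucket_and_sign(token, n_buckets)
        vec[bucket] += sign  # signed hashing: collisions partially cancel instead of piling up
    # Drop buckets whose colliding contributions cancelled out exactly (keeps the dict sparse).
    return {b: v for b, v in vec.items() if v != 0.0}


def dedupe_queries(queries: list[str]) -> list[str]:
    """Return queries in first-seen order, treating case and extra whitespace as duplicates."""
    seen: set[str] = set()
    unique: list[str] = []
    for q in queries:
        key = " ".join(q.lower().split())  # normalized form used only for comparison
        if key not in seen:
            seen.add(key)
            unique.append(q)
    return unique


if __name__ == "__main__":
    print(hash_features("vintage camera vintage", n_buckets=8))
    print(dedupe_queries(["iPhone  case", "iphone case", "Lego"]))  # ['iPhone  case', 'Lego']
```

Memory stays bounded by n_buckets no matter how large the vocabulary grows, which is the property that matters in a real-time prediction context.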

Write a SQL query that returns only the duplicate rows from a users table.

Group by every column that defines row equality (or by a composite natural key) and use HAVING COUNT(*) > 1, then join this set back to the base table to surface the full duplicate rows, including their primary keys, for downstream cleanup. Emphasize why counting alone is insufficient if you later need to delete or archive the extras. Mention adding a surrogate hash column to detect multi-column duplicates efficiently in nightly dedupe jobs. This pattern is foundational for ML engineers who must guarantee unique training examples before feature generation.

Fill missing daily temperature readings per city via linear interpolation in Pandas.

Ensure date is parsed to a DatetimeIndex, re-index each city to a complete daily range, and run groupby("city").apply(lambda g: g.interpolate("linear")); follow with ffill()/bfill() for edge gaps. The interpolation itself is O(N) and fully vectorized, so it is safe for millions of rows. Flag the leakage risk: linear interpolation borrows the next observed reading, which is fine for cleaning historical data but leaks future information if the filled values feed a time-series model at prediction time, where a forward-fill is the safe choice. Conclude with .reset_index() so the cleaned DataFrame flows into subsequent Spark or Airflow tasks.
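A minimal pandas sketch of that approach, assuming a long-format DataFrame with hypothetical columns city, date, and temperature and at most one reading per city per day (reindex fails on duplicate dates). It loops over the groups explicitly instead of using groupby().apply() so the per-city reindex stays easy to follow.

```python
import pandas as pd


def fill_daily_temperatures(df: pd.DataFrame) -> pd.DataFrame:
    """Re-index each city to a complete daily range and fill gaps by linear interpolation.

    Expects columns city, date, temperature (names are assumptions for this sketch).
    """
    df = df.assign(date=pd.to_datetime(df["date"]))
    filled = []
    for city, g in df.groupby("city"):
        g = g.set_index("date").sort_index()
        # Complete calendar for this city so missing days become explicit NaN rows.
        full_range = pd.date_range(g.index.min(), g.index.max(), freq="D")
        g = g.reindex(full_range)
        g["city"] = city
        # Interior gaps: straight line between neighbouring readings.
        # Edge gaps (NaN readings at either end): nearest available value.
        g["temperature"] = g["temperature"].interpolate(method="linear").ffill().bfill()
        g.index.name = "date"
        filled.append(g)
    return pd.concat(filled).reset_index()
```

Because every city is re-indexed to evenly spaced days first, method="linear" (which treats rows as equally spaced) gives the same result as time-weighted interpolation here.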

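Return the latest record for each employee from a salary table that contains multiple rows per person.

Partition by (first_name, last_name), or by employee_id if that exists, and keep the row with the latest effective_date: compute ROW_NUMBER() OVER (PARTITION BY first_name, last_name ORDER BY effective_date DESC) in a CTE or subquery and filter on that row number equal to 1 in the outer query, because window functions cannot appear in a WHERE clause. Index on (first_name, last_name, effective_date) to prevent full scans. Highlight why relying on an auto-increment id alone can mislead if backfills insert older records later. ML platforms that calculate compensation forecasts need this logic to avoid label drift.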
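The same logic can be tried end to end with Python's built-in sqlite3 module (window functions need SQLite 3.25 or newer). The employees table and its columns are hypothetical; the backfilled row shows why ordering by id would return the wrong salary.

```python
import sqlite3

# Hypothetical schema: one row per salary change; backfills may append old rows later.
SCHEMA = """
CREATE TABLE employees (
    id             INTEGER PRIMARY KEY,   -- auto-increment, NOT a reliable recency signal
    first_name     TEXT,
    last_name      TEXT,
    salary         INTEGER,
    effective_date TEXT                   -- ISO-8601, so string order equals date order
);
CREATE INDEX idx_emp_name_date ON employees (first_name, last_name, effective_date);
"""

LATEST_SALARY_SQL = """
WITH ranked AS (
    SELECT first_name, last_name, salary, effective_date,
           ROW_NUMBER() OVER (
               PARTITION BY first_name, last_name
               ORDER BY effective_date DESC
           ) AS rn
    FROM employees
)
SELECT first_name, last_name, salary, effective_date
FROM ranked
WHERE rn = 1              -- window results can only be filtered in an outer query
ORDER BY last_name, first_name;
"""


def latest_salaries(conn: sqlite3.Connection) -> list[tuple]:
    return conn.execute(LATEST_SALARY_SQL).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO employees (first_name, last_name, salary, effective_date) VALUES (?, ?, ?, ?)",
    [
        ("Ada", "Lovelace", 100, "2023-01-01"),
        ("Ada", "Lovelace", 120, "2024-01-01"),
        ("Ada", "Lovelace", 90, "2022-01-01"),  # backfilled: highest id, oldest date
        ("Alan", "Turing", 110, "2023-06-01"),
    ],
)
rows = latest_salaries(conn)
# rows == [('Ada', 'Lovelace', 120, '2024-01-01'), ('Alan', 'Turing', 110, '2023-06-01')]
```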

Find the top three departments, among those with at least ten employees, by the percentage of employees earning over $100K.

Compute two aggregates per department, total_emp and high_paid, then keep departments with total_emp ≥ 10 in a HAVING clause and calculate high_paid / total_emp as pct_gt_100k (cast to a decimal to avoid integer division). Order by that percentage, apply LIMIT 3, and round for readability. Explain how this type of cohort filter informs ML feature engineering for org-level attrition models, and why filtering after aggregation matters for getting the denominators right.

Produce a 2020 monthly customer report: users, orders, and GMV per month.

Left-join users to orders, bucket created_at with DATE_TRUNC('month', …), and compute COUNT(DISTINCT user_id), COUNT(order_id), and SUM(order_amount) grouped by month. Add a calendar table to ensure zero-filled months render in the BI layer. Mention why ML feature stores often materialize such month-level snapshots to accelerate model training without rescanning raw fact tables.

What percentage of search queries have all result ratings below 3?

Aggregate by query, compute MAX(rating) per query, flag queries where max_rating < 3, then divide their count by COUNT(DISTINCT query) overall and round to two decimals. State that the poor-quality-query percentage is a key offline metric for search-ranking models; a rise after a ranking change signals a relevance regression.
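A runnable sketch using sqlite3 and a hypothetical search_results(query, result_id, rating) table. It reports the share as a percentage rounded to two decimals and assumes query is never NULL, so counting the per-query rows equals COUNT(DISTINCT query).

```python
import sqlite3

POOR_QUERY_PCT_SQL = """
WITH per_query AS (
    SELECT query, MAX(rating) AS max_rating   -- best result each query ever returned
    FROM search_results
    GROUP BY query
)
SELECT ROUND(
    100.0 * SUM(CASE WHEN max_rating < 3 THEN 1 ELSE 0 END) / COUNT(*),
    2
) AS pct_poor_queries
FROM per_query;
"""


def poor_query_pct(conn: sqlite3.Connection) -> float:
    return conn.execute(POOR_QUERY_PCT_SQL).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE search_results (query TEXT, result_id INTEGER, rating INTEGER)")
conn.executemany(
    "INSERT INTO search_results VALUES (?, ?, ?)",
    [
        ("vintage camera", 1, 1), ("vintage camera", 2, 2),  # all below 3: poor
        ("lego set", 1, 4), ("lego set", 2, 1),              # one good result: fine
        ("usb hub", 1, 2),                                   # all below 3: poor
    ],
)
pct = poor_query_pct(conn)  # 2 of 3 queries are poor
```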

Compute a weighted moving average of each product's sales over the current and two preceding dates.

Use a window partitioned by product_id and ordered by sale_date with ROWS BETWEEN 2 PRECEDING AND CURRENT ROW, then apply the weighted coefficients, either by multiplying sales, LAG(sales, 1), and LAG(sales, 2) by their weights or by joining to a weights CTE and taking SUM(sales * weight). In an outer query, keep only rows where ROW_NUMBER() OVER (PARTITION BY product_id ORDER BY sale_date) > 2 so every average spans two preceding dates. Stress that weighted windows are common in eBay demand-forecast pipelines to smooth volatility while biasing toward recent signals.

Explain how you’d productionize a PyTorch model that predicts seller-level shipping-time variance, ensuring both low latency for inference and easy roll-backs.

Interviewers want you to walk through ONNX conversion, model-bundle versioning, feature-vector caching, canary deployment via Kubernetes, and shadow-mode monitoring with Prometheus/Grafana dashboards.

Walk me through designing a real-time anomaly-detection system that flags sudden drops in search-result click-through rate across thousands of query clusters.

Cover streaming aggregation with Flink or Kafka Streams, seasonality-adjusted EWMA baselines, hierarchical alerting thresholds, and feedback loops where analysts label true/false positives to retrain the detector—all while keeping p95 detection latency under five minutes.

ML Theory & Modeling Questions

This section focuses on your foundational understanding of supervised learning, evaluation metrics, and model selection. A sample question might be, “Compare XGBoost vs. Wide-&-Deep for a click-through prediction use case.” Interviewers will assess how well you explain bias-variance trade-offs, how you measure success, and how you’d handle data imbalance or feature importance.

How would you interpret logistic-regression coefficients for categorical and boolean variables?

Explain that each coefficient is the change in the log-odds of the target for a one-unit change in that feature, holding the others fixed, with exp(β) giving the odds ratio. For a boolean flag, the odds ratio compares the outcome when the flag flips from 0 to 1; for a one-hot categorical level, it’s relative to the omitted reference category. Stress why wide confidence intervals or sparse groups can make large coefficients meaningless and how you’d address this with regularization or hierarchical encoding. Close by noting that a correct interpretation lets eBay’s product teams translate model outputs into actionable UI tweaks (e.g., “Free-returns badge raises purchase odds 1.3×”).

What’s the difference between Lasso and Ridge regression?

Contrast the L1 penalty’s ability to drive coefficients exactly to zero, yielding sparse, interpretable models, with Ridge’s L2 shrinkage that keeps all predictors but reduces variance, especially under multicollinearity. Discuss bias-variance implications, cross-validation to tune α, and when ElasticNet, which blends both penalties, helps by keeping groups of correlated predictors together instead of arbitrarily picking one. Mention that for eBay’s high-dimensional text features, Lasso often improves latency by trimming weights, while Ridge can stabilize click-through estimates when similar n-grams compete.
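One way to make the L1-versus-L2 contrast concrete is the textbook special case of an orthonormal design (XᵀX = I), where both estimators have closed forms in terms of the ordinary least-squares coefficients b = Xᵀy. Assuming the objectives ½‖y − Xβ‖² + λ‖β‖₁ for Lasso and ½‖y − Xβ‖² + (λ/2)‖β‖² for Ridge, Lasso soft-thresholds each coefficient while Ridge rescales all of them by the same factor:

```python
import math


def lasso_orthonormal(b: list[float], lam: float) -> list[float]:
    """Lasso solution when X^T X = I: soft-threshold each OLS coefficient by lam."""
    # Coefficients with |b_j| <= lam become exactly zero; the rest move toward zero by lam.
    return [0.0 if abs(x) <= lam else math.copysign(abs(x) - lam, x) for x in b]


def ridge_orthonormal(b: list[float], lam: float) -> list[float]:
    """Ridge solution when X^T X = I: shrink every OLS coefficient by 1 / (1 + lam)."""
    return [x / (1.0 + lam) for x in b]


if __name__ == "__main__":
    ols = [3.0, -0.5, 1.0]
    print(lasso_orthonormal(ols, 1.0))  # [2.0, 0.0, 0.0]
    print(ridge_orthonormal(ols, 1.0))  # [1.5, -0.25, 0.5]
```

With real, correlated features there is no closed form and solvers such as coordinate descent are used, but the qualitative behavior (exact zeros versus uniform shrinkage) carries over.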

What are the logistic and softmax functions, and how do they differ?

The logistic (sigmoid) maps a real number to (0, 1) for binary-class probability, whereas softmax converts a vector of logits into a full k-class distribution that sums to 1. Highlight that logistic regression learns a single decision boundary, while softmax optimizes (k-1) simultaneous boundaries through cross-entropy loss. Explain numerical-stability tricks (log-sum-exp) and why softmax is preferred for multi-category item-type prediction on eBay, while sigmoid suits binary fraud flags.
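A small standard-library sketch of both functions, including the log-sum-exp trick: subtracting the maximum logit before exponentiating keeps exp() from overflowing without changing the result.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function mapping a real number to (0, 1) without overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # z < 0, so exp(z) <= 1 and cannot overflow
    return e / (1.0 + e)


def log_softmax(logits: list[float]) -> list[float]:
    """Log-probabilities via log-sum-exp: shift by the max logit before exponentiating."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def softmax(logits: list[float]) -> list[float]:
    """k-class probability distribution that sums to 1."""
    return [math.exp(v) for v in log_softmax(logits)]
```

With two classes, softmax([z, 0.0])[0] equals sigmoid(z), which is why binary logistic regression is the k = 2 special case. In training, log_softmax feeds the cross-entropy loss directly, which avoids taking the log of a probability that underflowed to zero.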

Given keyword-bid and price data, how would you build a model to bid on an unseen keyword?

Outline transforming raw words into embeddings (TF-IDF, FastText, transformers) so semantic similarity transfers price signals. Frame the task as regression on expected cost-per-click or as a contextual bandit maximizing ROI under budget. Discuss handling long-tail sparsity with sub-word models, regularizing to prevent over-bidding on outliers, and validating with offline replay plus small on-policy tests. Tie success metrics to incremental ad GMV and return on ad spend.

How would you give each customer the reasons behind a model’s decision about them?

Propose post-hoc explainability tools—SHAP or LIME—that perturb inputs locally and approximate feature contributions per prediction. Detail generating a ranked reason list, filtering out sensitive attributes, and caching results for customer-service queries. Address regulatory fairness by auditing explanation consistency across demographic slices. Emphasize that eBay could use the same workflow to explain listing-suspension models to sellers.

How would you know whether you have enough data to train a travel-time prediction model?

Recommend plotting learning curves: train on logarithmic subsamples (10k, 50k, 100k …) and observe validation MAE flattening. Check feature-coverage tables—rare origin-destination pairs or rush-hour slices need hundreds of samples each to limit variance. Use bootstrapped confidence intervals to see if error bars meet product SLAs. If curves haven’t plateaued, prioritize targeted data collection, mirroring eBay’s approach to ensuring sufficient examples for new shipping lanes.
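A pure-Python sketch of the bootstrapped-confidence-interval check. The percentile bootstrap, the slice names, the SLA value, and the min_samples cut-off are illustrative assumptions rather than eBay settings.

```python
import random


def mae(errors: list[float]) -> float:
    return sum(abs(e) for e in errors) / len(errors)


def bootstrap_mae_ci(errors, n_boot=2000, alpha=0.05, seed=0):
    """Percentile-bootstrap confidence interval for the MAE of one validation slice."""
    rng = random.Random(seed)
    n = len(errors)
    # Resample the slice with replacement and recompute MAE each time.
    stats = sorted(
        mae([errors[rng.randrange(n)] for _ in range(n)]) for _ in range(n_boot)
    )
    lower = stats[int(alpha / 2 * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


def sla_report(errors_by_slice, sla_mae, min_samples=200):
    """Label each slice: too small to judge, or whether its upper CI bound meets the SLA."""
    report = {}
    for name, errs in errors_by_slice.items():
        if len(errs) < min_samples:
            report[name] = "collect more data"
            continue
        _, upper = bootstrap_mae_ci(errs)
        report[name] = "meets SLA" if upper <= sla_mae else "misses SLA"
    return report
```

Judging each slice by its upper bound, rather than its point estimate, is what turns "the average looks fine" into "this origin-destination pair needs more examples".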

Why is bias monitoring crucial when predicting restaurant prep times for a food-delivery app?

Point out that prep-time errors propagate to courier dispatch and customer ETAs; systematic under-prediction for certain cuisines or peak hours erodes trust and driver utilization. Define bias as residuals correlated with protected or operational attributes (e.g., neighborhood income, restaurant size) and illustrate detection via stratified error heat-maps. Propose remedies: group-aware loss weighting, on-device calibration, or model ensembles. Draw a parallel to eBay’s need to avoid systematic ETA underestimates for international sellers.

Compare gradient-boosted trees with a transformer encoder for listing-title click-through prediction.

Start with bias-variance trade-offs: GBDTs handle tabular sparse features well and offer fast inference; transformers capture word order and context at the cost of higher latency and larger memory footprints. Explain why transformers may yield higher recall on rare, nuanced titles but require distillation or caching for production. Describe evaluation via AUC and offline replay metrics, and propose a hybrid model—tree features + transformer embeddings—if budget allows.

How would you handle a fraud-detection dataset with only 0.1 % positives?

Discuss class-imbalance remedies: focal loss, label-aware sampling, and up-weighting positives in the loss function; caution against naive over-sampling that inflates duplicate rows. Highlight precision-recall vs. ROC for evaluation and propose threshold-moving based on expected cost of false negatives. Mention anomaly-detection pre-filters to cut search space and active-learning loops to enrich the minority class—key for catching emerging scams on eBay Marketplace.
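Threshold-moving can be made concrete. If scores are calibrated probabilities, flagging is worth it when p · C_FN > (1 − p) · C_FP, i.e. when p exceeds C_FP / (C_FP + C_FN); with uncalibrated scores you can instead sweep thresholds on a validation set. The sketch below does both; the costs are placeholders, and the sweep is quadratic for clarity (a sort-based single pass scales better).

```python
def bayes_threshold(cost_fp: float, cost_fn: float) -> float:
    """Cost-minimizing cut-off for calibrated probabilities:
    flag when p * cost_fn > (1 - p) * cost_fp, i.e. p > cost_fp / (cost_fp + cost_fn)."""
    return cost_fp / (cost_fp + cost_fn)


def best_empirical_threshold(scores, labels, cost_fp, cost_fn):
    """Sweep candidate cut-offs on validation data; an item is flagged when score >= threshold."""
    candidates = sorted(set(scores)) + [float("inf")]  # inf means "flag nothing"
    best_t, best_cost = None, float("inf")
    for t in candidates:
        cost = 0.0
        for s, y in zip(scores, labels):
            flagged = s >= t
            if flagged and y == 0:
                cost += cost_fp  # false positive, e.g. blocking a legitimate buyer
            elif not flagged and y == 1:
                cost += cost_fn  # false negative, i.e. a missed scam
        if cost < best_cost:
            best_t, best_cost = t, cost
    return best_t, best_cost
```

With a missed scam costing 99 times as much as a false alarm, bayes_threshold(1.0, 99.0) gives 0.01: the model should flag anything it believes is at least 1% likely to be fraud.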

Applied ML / System-Design Questions

This round evaluates your ability to deploy ML at scale. You might be asked to “Design an online learning pipeline to re-rank search results in near-real time.” If the eBay ML challenge is used, this is where you’ll walk through the architecture—from data ingestion and preprocessing to model retraining triggers, caching layers, and inference latency constraints. Bonus points if you suggest real-world monitoring strategies (e.g., data drift detection or shadow deployments).

How would you build an automated system to detect firearm listings on an online marketplace?

Lay out a two-stage architecture: an NLP classifier that scans titles and descriptions for gun-related language and a vision model that flags firearm imagery. Fuse scores in a rules engine that triggers immediate takedown above a precision-tuned threshold, else routes to human moderation. Describe the data pipeline for continual re-training on reviewer-labeled edge cases, a near–real-time inference service (<200 ms p95), and feedback dashboards measuring precision, recall, and false-positive seller appeals. Explain how you’d protect against adversarial attempts (e.g., euphemisms, partial images) and comply with evolving legal requirements.

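Design a system that forecasts ridership from live feeds and serves predictions to downstream applications.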

Propose a streaming ETL (Kafka → Flink) that validates and aggregates ridership feeds, writes features to an online store, and pushes hourly batches to an incremental XGBoost or Prophet model. Serve predictions through a low-latency REST endpoint backed by Redis caching and expose a batch API for historical backfills. Include data-drift detectors that trigger retraining when Kolmogorov–Smirnov p-values fall below 0.05. Outline service-level targets (≤50 ms inference, 99.9 % uptime) and security and compliance requirements (TLS, RBAC, GDPR) before detailing a blue-green deployment strategy.
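The drift trigger can be sketched without external libraries: compute the two-sample Kolmogorov–Smirnov statistic between a reference window and a live window of one feature, then approximate its p-value with the asymptotic Kolmogorov series. This is an illustrative implementation; in practice scipy.stats.ks_2samp does the same job.

```python
import bisect
import math


def ks_statistic(reference: list[float], live: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the empirical CDFs."""
    a, b = sorted(reference), sorted(live)
    n, m = len(a), len(b)
    d = 0.0
    for x in set(a) | set(b):  # the ECDF gap can only change at observed values
        gap = abs(bisect.bisect_right(a, x) / n - bisect.bisect_right(b, x) / m)
        d = max(d, gap)
    return d


def ks_pvalue(d: float, n: int, m: int, terms: int = 100) -> float:
    """Asymptotic p-value Q(lam) = 2 * sum_{j>=1} (-1)^(j-1) * exp(-2 * j^2 * lam^2)."""
    en = math.sqrt(n * m / (n + m))
    lam = (en + 0.12 + 0.11 / en) * d  # commonly used small-sample correction
    if lam < 0.3:
        return 1.0  # Q(lam) > 0.9999 here, and the series converges slowly as lam -> 0
    total = sum(
        2 * (-1) ** (j - 1) * math.exp(-2 * j * j * lam * lam) for j in range(1, terms + 1)
    )
    return min(max(total, 0.0), 1.0)


def drift_detected(reference: list[float], live: list[float], alpha: float = 0.05) -> bool:
    """True when the live window differs significantly from the reference window."""
    d = ks_statistic(reference, live)
    return ks_pvalue(d, len(reference), len(live)) < alpha
```

With very large windows, even negligible shifts produce tiny p-values, so a retraining trigger often also requires a minimum value of the statistic itself.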

Build a real-time feature store that delivers a 99th-percentile lookup latency under 5 ms for 300 million sellers while keeping feature freshness below 15 minutes.

Partition hot features in a key-value store like Redis Cluster for sub-millisecond reads, back it with columnar storage (BigQuery / Iceberg) for cold queries, and use CDC streams to update both layers. Employ deterministic feature-generation code in a Spark Structured Streaming job to guarantee training/serving parity. Add TTL-based invalidation, versioned feature schemas, and lineage metadata so downstream models can reproduce experiments exactly. Finish with Prometheus metrics and SLO dashboards alerting on latency and staleness.

Sketch an automated model-rollback framework that activates whenever live GMV-prediction error doubles the baseline.

Log real-time inference outputs and ground-truth GMV, stream them into a monitoring service that computes rolling MAE and triggers a canary alarm when error > 2 × historical. Upon alarm, Kubernetes flips traffic from “current” to “previous” model Deployment, emits Slack / PagerDuty alerts, and snapshots offending inputs for root-cause analysis. Store shadow data to replay against patched models, and require two back-to-back green windows before re-promoting. Close with governance touches: audit logs, approval gates, and automated post-mortems.
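The trigger-and-re-promote logic fits in a small state machine. This sketch assumes the candidate ("current") model keeps scoring in shadow after a rollback so its error can still be measured, and uses per-window MAE as a stand-in for the rolling metric. The class name is hypothetical, and the Kubernetes traffic flip and alerting are left to whatever consumes the returned label.

```python
class RollbackController:
    """Chooses which model version should serve, based on the candidate's per-window MAE."""

    def __init__(self, baseline_mae: float, ratio: float = 2.0, green_windows_to_repromote: int = 2):
        self.baseline_mae = baseline_mae
        self.ratio = ratio
        self.green_needed = green_windows_to_repromote
        self.serving = "current"
        self.green_streak = 0

    def end_window(self, predictions: list[float], actuals: list[float]) -> str:
        """Score one monitoring window of the candidate model and return the model to serve."""
        window_mae = sum(abs(p - a) for p, a in zip(predictions, actuals)) / len(actuals)
        is_red = window_mae > self.ratio * self.baseline_mae
        if self.serving == "current":
            if is_red:
                self.serving = "previous"  # roll back: traffic flips to the last good model
                self.green_streak = 0
        else:
            # Candidate keeps running in shadow; count consecutive healthy windows.
            self.green_streak = 0 if is_red else self.green_streak + 1
            if self.green_streak >= self.green_needed:
                self.serving = "current"  # re-promote after back-to-back green windows
                self.green_streak = 0
        return self.serving
```

Keeping the decision logic free of infrastructure calls makes it easy to back-test against replayed shadow data before wiring it to real deployments.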

Design a real-time anomaly-detection pipeline that flags sudden drops in search click-through rate across thousands of query clusters.

Consume click-impression streams via Kafka, aggregate CTR per cluster every minute in Flink, and compare to a seasonality-aware EWMA baseline. If the z-score < –3 for three consecutive windows, raise an alert enriched with top-impacted listings. Persist features and predictions for offline root-cause notebooks. Discuss handling multiple testing (FDR), suppressing alert floods with hierarchical clustering, and back-testing the detector on six months of replay data to tune sensitivity.
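A compact sketch of the per-cluster detector, assuming one CTR value per cluster per window. Seasonality is handled by keying the EWMA baseline on (cluster, season_key), where season_key might be the hour of week; the smoothing factor, warm-up length, and variance floor are illustrative defaults. Low windows are not folded into the baseline, so a sustained drop cannot quietly become the new normal.

```python
import math
from dataclasses import dataclass


@dataclass
class _Baseline:
    mean: float
    var: float = 0.0
    n: int = 1


class CtrDropDetector:
    """Alerts after `consecutive` windows whose EWMA z-score is below `z_threshold`."""

    def __init__(self, alpha=0.1, z_threshold=-3.0, consecutive=3, warmup=10, min_std=1e-4):
        self.alpha = alpha
        self.z_threshold = z_threshold
        self.consecutive = consecutive
        self.warmup = warmup
        self.min_std = min_std  # floor so a perfectly flat history cannot divide by zero
        self.baselines: dict[tuple, _Baseline] = {}
        self.streaks: dict[str, int] = {}

    def update(self, cluster: str, season_key, ctr: float) -> bool:
        """Score one window's CTR for a cluster; return True when an alert should fire."""
        key = (cluster, season_key)
        base = self.baselines.get(key)
        if base is None:
            self.baselines[key] = _Baseline(mean=ctr)
            return False
        std = max(math.sqrt(base.var), self.min_std)
        z = (ctr - base.mean) / std
        is_low = base.n >= self.warmup and z < self.z_threshold
        self.streaks[cluster] = self.streaks.get(cluster, 0) + 1 if is_low else 0
        if not is_low:
            # Incremental EWMA mean/variance update, applied only to normal windows.
            diff = ctr - base.mean
            incr = self.alpha * diff
            base.mean += incr
            base.var = (1 - self.alpha) * (base.var + diff * incr)
            base.n += 1
        return self.streaks[cluster] >= self.consecutive
```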

Create an online-learning loop to re-rank search results in near real time.

Start with ZeroMQ or Kafka to log user clicks, feed them into a feature processor that bins dwell time and reformats sparse text features, then refresh a lightweight gradient-boosting model every 15 minutes by continuing training from the previous booster (e.g., LightGBM’s lightgbm.train with init_model), which approximates online learning with small incremental batches. Deploy the updated model behind a feature flag; hold out 10 % of traffic for shadow evaluation to prevent regressions. Cache top-N recommendations per hot query in Memcached to keep p95 latency < 50 ms. Include safeguards: weight decay on fresh data, concept-drift alarms, and a kill-switch routed through the rollback framework above.

Behavioral & Collaboration Questions

Finally, you’ll discuss how you work with others and lead ML projects cross-functionally. Expect prompts like “Tell me about a time your model underperformed in production” or “How did you partner with infra/PM to improve a delivery timeline?” Strong answers highlight ownership, iteration post-launch, and a clear grasp of trade-offs between performance and stability.

Describe a data project you worked on. What were some of the challenges you faced?

Pick an ML pipeline that moved to production—say, fraud-detection or image-moderation. Walk through the end-to-end lifecycle, highlighting a technical hurdle (Kafka consumer lag, schema drift) and an organizational hurdle (conflicting SLAs with Site Ops). Detail how you diagnosed root causes—profiling Spark stages, drafting an RFC for new data contracts—and the measurable impact after fixes (e.g., 30 % latency drop, 25 % false-positive reduction). Conclude with the key lesson that will help you ship reliable models on eBay’s petabyte-scale logs.

What are some effective ways to make data more accessible to non-technical people?

Explain how you encapsulate complex features behind clear APIs and build Looker dashboards that surface “insight cards” in plain language. Mention automated data-quality badges so business users trust the numbers, and in-app tooltips that map column names to real-world concepts (e.g., “seller_id → Storefront”). Describe running “office hours” and async Loom walkthroughs to up-skill partners, which cut ad-hoc JIRA requests by half. Tie this to eBay’s need for seller-tool PMs to self-serve A/B results without pinging engineering.

What would your current manager say about you—strengths and constructive criticisms?

Choose two strengths that align with eBay’s “Courage and Customer Focus” values—perhaps “obsession with automated testing” and “ability to translate research into deployable micro-services.” Offer one genuine growth area, such as over-engineering early prototypes, then share the concrete fixes: design-doc templates and 30-minute architecture reviews. Support claims with metrics (e.g., “reduced rollback incidents from 3 → 0 last quarter”). Authentic reflection shows you can level-up quickly inside a large marketplace org.

Talk about a time you had trouble communicating with stakeholders. How were you able to overcome it?

Use a STAR story where product asked for 5 ms p99 latency but the initial design hit 12 ms. Describe how jargon blurred expectations, so you created latency-budget diagrams and a red-yellow-green alert matrix. Weekly syncs plus a shared Confluence page aligned trade-offs—product accepted 8 ms if precision improved 2 pp. End with the outcome: launch unblocked, on-call pages down 40 %. Emphasize empathy, visual aids, and iterative alignment.

Why do you want to work with us?

Tie your passion for recommender systems and circular commerce to eBay’s mission of enabling economic opportunity. Reference recent tech posts, such as eBay’s engineering write-ups on Krylov, its in-house AI platform, to show you’ve done your homework. Explain how your experience scaling real-time feature stores and drift-detection services can accelerate Promoted Listings and Guaranteed Fit initiatives. Finish by noting culture fit: customer-first experimentation and measurable impact.

How do you prioritize multiple deadlines, and how do you stay organized?

Outline an impact-versus-effort matrix synced to quarterly OKRs, with capacity buffers for unplanned P0 incidents. Tool stack: Jira swim-lanes for sprint work, Airflow SLA alerts for pipeline health, and a Notion weekly “decision log” that records trade-offs. Describe a real example where this system surfaced a data-skew bug early, allowing you to re-slice traffic and still meet the model-refresh cutoff. Highlight that disciplined backlog hygiene reduces context-switches and keeps experimentation velocity high.

Tell me about a time you introduced an automated test or monitoring check that caught a critical data issue before it reached production.

Interviewers want tangible evidence of “defense in depth.” Describe spotting a silent surge of NULLs in a feature column, writing a Great Expectations rule plus a Grafana alert, and preventing an estimated 3 % drop in search conversion. Emphasize the cost saved and the culture shift toward test-driven data engineering.

Describe a situation where you mentored a junior engineer or data scientist—what was the challenge, and what was the outcome?

Pick a mentoring story that shows leadership without authority: maybe guiding an intern through optimizing a PyTorch data-loader to cut GPU idle time by 60 %. Detail your coaching style (pair programming, code-review checklists) and how the mentee’s contribution shipped to production. Highlight feedback loops and how mentorship scales team throughput—valued in eBay’s collaborative environment.

How to Prepare for a Machine Learning Engineer Role at eBay

Acing the eBay machine learning engineer interview means more than brushing up on models—it’s about simulating real production trade-offs. Because the interview mirrors the responsibilities of deploying ML at global scale, your prep should cover system design, latency constraints, and iterative experimentation.

Study eBay’s Marketplace Metrics

Familiarize yourself with platform KPIs like Gross Merchandise Volume (GMV), click-through rate (CTR), and latency SLAs. These metrics drive prioritization at eBay, and many interview scenarios will ask you to optimize one without compromising the others. Understanding how GMV maps to ranking quality or how latency affects search performance will sharpen your product thinking.

Balance Prep

Split your preparation into key focus areas: 40% coding (data structures, language fluency), 30% ML theory (modeling techniques, eval metrics), 20% system design (pipeline architecture, feature stores), and 10% behavioral. Structure your study schedule to mimic interview pacing—short problems in early rounds, open-ended architecture prompts later.

Mock the ML Assessment

The eBay ML assessment is often the first hurdle, so simulate the format under timed conditions. Practice a 60-minute mix of SQL, Python coding, and a lightweight ML problem (e.g., cleaning tabular data or optimizing AUC). Focus on speed, accuracy, and clean implementation—you’ll need to pass this round to move forward.

Think Out Loud

Throughout all technical rounds, voice your trade-offs. Whether discussing accuracy vs. latency, batch vs. stream processing, or offline vs. online metrics, interviewers want to see how you think under ambiguity. Clear articulation of assumptions and fallback strategies will help you stand out.

Conclusion

Succeeding in the Machine Learning Engineer process at eBay requires more than technical skill—it demands business alignment, systems thinking, and product context. From optimizing search latency to deploying scalable retraining workflows, strong candidates balance machine learning depth with engineering pragmatism.

To dive deeper, check out our parent eBay Interview Questions & Process guide, or explore role-specific guides for Data Scientists and Data Analysts. Ready to simulate the process? Book a mock interview or try our AI Interviewer to practice on your own schedule. And for inspiration, read Jerry Khong’s success story—from ML prep to a breakthrough role in tech leadership, all starting with structured, role-specific interview practice. Now it’s your turn—go build the model that gets you in.