Docusign Data Engineer Interview Guide: Process, Questions & Salary

Introduction

Preparing for a Docusign data engineer interview in 2026 means getting ready for a role that sits at the center of large-scale data reliability, analytics, and AI-driven workflows. Docusign now serves over 1.5 million customers and more than a billion users across 180+ countries, which means even small data issues can ripple across compliance, revenue reporting, and product intelligence at scale.

Data engineers at Docusign play a critical role in powering the company’s Intelligent Agreement Management platform. The work goes far beyond writing SQL. Teams are responsible for building robust ELT pipelines, maintaining trusted semantic layers, enforcing data governance, and increasingly supporting AI-enabled workloads such as agentic pipelines and copilots.

In this guide, we’ll break down the Docusign data engineer interview process, explain what each stage is really testing, and show how the interview maps directly to the skills Docusign expects from modern data engineers working in cloud-native, security-sensitive environments.

Docusign Data Engineer Interview Process

The Docusign data engineer interview process is designed to evaluate deep technical fundamentals, system-level thinking, and collaboration in a regulated, enterprise-scale environment. Most candidates go through five to seven rounds over three to five weeks, depending on role seniority and location.

Unlike general software engineering loops, Docusign places heavy emphasis on SQL depth, data modeling, pipeline reliability, and cloud architecture, alongside behavioral signals tied to technical excellence and ownership.

Interview Process Overview

| Interview stage | What happens | What Docusign is evaluating |
|---|---|---|
| Recruiter screen | Introductory conversation and role alignment | Communication clarity, motivation, and expectations |
| Online technical assessment | Timed coding or SQL challenge (often HackerRank) | Core SQL, Python, and problem-solving fundamentals |
| Hiring manager interview | Deep dive into past data engineering projects | Ownership, impact, and technical judgment |
| Virtual onsite loop | 3–5 panel interviews (technical + behavioral) | End-to-end data engineering capability and culture fit |

If you want to simulate the pacing and pressure of these rounds, practicing with Interview Query’s mock interviews can help surface gaps early.

Recruiter Screen (30 minutes)

The recruiter screen is a short but important calibration step. This call focuses on your background, interest in Docusign, and alignment with the data engineer role you’re being considered for.

You can expect questions around:

- Your experience with SQL, data pipelines, and cloud platforms

- The types of data products or analytics systems you’ve supported

- Preferences around in-office, hybrid, or remote work

Recruiters also confirm seniority alignment, since Docusign hires across Data Engineer I/II and Senior Data Engineer bands.

Tip: Be explicit about the scale and reliability requirements of the data systems you’ve worked on. Docusign values engineers who think in terms of SLAs, downstream consumers, and failure impact.

Initial Technical Assessment (Online Coding Challenge)

Most candidates complete an online technical assessment, commonly delivered via HackerRank or a similar platform. This stage typically includes two to three questions with a 70–90 minute time limit.

| Assessment focus | What it looks like | What is being tested |
|---|---|---|
| SQL querying | Complex joins, aggregations, window functions | Ability to extract reliable insights from messy data |
| Data logic / algorithms | Light data-structure or transformation problems | Problem-solving and edge-case handling |
| Performance awareness | Large-table scenarios | Query efficiency and scalability thinking |

SQL questions often resemble real Docusign scenarios, such as analyzing agreement activity logs or identifying high-value enterprise users. Reviewing applied SQL patterns through the SQL learning path is especially useful here.

Tip: Prioritize correctness and readability over clever shortcuts. Interviewers care about whether your logic would hold up in production datasets.

Hiring Manager Interview

This round is more conversational and focuses on how you operate as a data engineer day to day. Hiring managers typically ask you to walk through one or two past projects in depth, covering architecture decisions, trade-offs, and lessons learned.

Common themes include:

- Designing and maintaining ELT pipelines using tools like dbt and Airflow

- Building dimensional models or semantic layers for analytics

- Ensuring data quality, freshness, and governance

Tip: Anchor your answers around impact. Explain who depended on the data, what broke when things went wrong, and how you improved reliability over time.

Virtual Onsite or Panel Rounds (3–5 interviews)

The final stage is a virtual onsite or panel loop consisting of multiple back-to-back interviews. Each round evaluates a different slice of data engineering competency.

| Panel round | What happens | What is evaluated |
|---|---|---|
| Coding and algorithms | Live coding (often via CoderPad) | Core Python/SQL logic and reasoning |
| Data modeling and SQL | Live schema design and query optimization | Analytical modeling and performance tuning |
| System design | Designing scalable ETL/ELT pipelines | Architecture, cloud services, and reliability |
| Behavioral | STAR-based discussion | Ownership, collaboration, and technical excellence |

System design rounds frequently touch on cloud-native patterns using AWS or Azure services, data quality checks, and how pipelines scale with growing usage. Practicing structured explanations with Interview Query’s data engineering interview questions helps build confidence for these discussions.

Tip: In design rounds, explain how you detect failures before you explain how you fix them. Docusign values proactive data reliability.

Docusign Data Engineer Interview Questions

Docusign data engineer interview questions are designed to test whether you can build trusted, scalable data systems that support analytics, compliance, and AI-driven workflows across the Intelligent Agreement Management platform. Interviewers care deeply about how you define metrics, reason about data correctness, and design pipelines that hold up under enterprise-scale usage and regulatory scrutiny.

The question mix closely mirrors the interview loop: SQL and data logic, data modeling and schema design, pipeline and systems architecture, and behavioral ownership. If you want a single place to practice the same patterns across SQL, modeling, and system reasoning, the fastest workflow is to drill directly in the Interview Query question library.

SQL and Data Logic Questions

SQL is the strongest signal in a Docusign data engineer interview. Interviewers evaluate whether you can write correct, maintainable logic for metrics that power reporting, compliance checks, and downstream AI features. They listen carefully to how you state your assumptions before writing queries, especially when data is incomplete or delayed.

If you want to tighten fundamentals efficiently, the SQL interview learning path is the most direct prep for this section.

How would you calculate monthly retention for a subscription or recurring user base?

This question tests whether you can translate a business concept into a precise and defensible metric. For Docusign, retention directly affects contract renewals, expansion, and customer lifetime value. A strong answer clearly defines what “active” means, explains cohort construction, and handles edge cases such as plan changes or low-activity users. Interviewers also look for how you would validate the metric over time rather than trusting a single snapshot.

Tip: State your retention definition out loud first, then justify why it matches the decision being made.
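To make the definition concrete, here is a minimal cohort-retention sketch. It assumes a hypothetical activity log of `(user_id, activity_date)` pairs, defines "active" as at least one event in a calendar month, and assigns each user to the cohort of their first active month. Plan changes and low-activity thresholds are deliberately left out; in a real answer you would state how you treat them.

```python
from collections import defaultdict
from datetime import date


def month_index(d: date) -> int:
    """Map a date to a sequential month number so offsets are simple subtraction."""
    return d.year * 12 + (d.month - 1)


def monthly_retention(events: list[tuple[str, date]]) -> dict[int, dict[int, float]]:
    """Return {cohort_month: {month_offset: share_of_cohort_active}}.

    Assumption: "active" means at least one event in the calendar month, and a
    user's cohort is the month of their first observed activity.
    """
    # Collapse to distinct (user, month) pairs so heavy users are not over-counted.
    active_months: dict[str, set[int]] = defaultdict(set)
    for user_id, day in events:
        active_months[user_id].add(month_index(day))
    if not active_months:
        return {}

    cohorts: dict[int, list[str]] = defaultdict(list)
    for user_id, months in active_months.items():
        cohorts[min(months)].append(user_id)

    # Only report offsets that the observed data actually covers.
    data_end = max(max(months) for months in active_months.values())
    result: dict[int, dict[int, float]] = {}
    for cohort_month, users in sorted(cohorts.items()):
        result[cohort_month] = {
            offset: sum(1 for u in users if cohort_month + offset in active_months[u]) / len(users)
            for offset in range(data_end - cohort_month + 1)
        }
    return result
```

One way to validate the metric over time is to rerun it on successive snapshots: once a month is closed, a cohort's earlier offsets should stop changing, and drift there usually points to late or reprocessed data.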

How would you identify users who are likely to churn based on recent activity?

This evaluates segmentation judgment and how you convert behavioral signals into an actionable dataset. Docusign interviewers care about distinguishing temporary inactivity from true churn risk, especially in enterprise accounts with irregular usage. Strong answers explain threshold selection, validation against historical outcomes, and how to protect against false churn signals caused by delayed event ingestion.

Tip: Explain how you would backtest your churn definition using historical data.
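A minimal sketch of an explicit, testable churn-risk rule follows. The inactivity threshold and the ingestion-lag allowance are hypothetical parameters: the reference date is pulled back by the assumed lag so that users whose latest events have not landed yet are not flagged, and a simple precision check shows the shape of a backtest against later outcomes.

```python
from datetime import date, timedelta


def flag_churn_risk(
    last_seen: dict[str, date],
    as_of: date,
    inactivity_days: int = 30,
    ingestion_lag_days: int = 2,
) -> set[str]:
    """Return users whose last activity is older than the inactivity threshold.

    The reference date is shifted back by the assumed ingestion lag so that
    delayed events do not create false churn signals.
    """
    effective_as_of = as_of - timedelta(days=ingestion_lag_days)
    cutoff = effective_as_of - timedelta(days=inactivity_days)
    return {user for user, seen in last_seen.items() if seen < cutoff}


def backtest_precision(flagged: set[str], churned_later: set[str]) -> float:
    """Share of flagged users who actually churned in the following period."""
    if not flagged:
        return 0.0
    return len(flagged & churned_later) / len(flagged)
```

Running the same rule on several historical `as_of` dates and comparing precision (and recall) is one way to justify the threshold instead of picking it by intuition, especially for enterprise accounts with irregular usage.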

-

This question tests careful denominator handling and user-level aggregation, which maps closely to agreement ownership, signer consistency, and fraud or compliance signals. Interviewers want to hear how you define “primary,” how you handle missing metadata, and how you avoid double-counting. The strongest answers also include how you would sanity-check the output distribution.

Tip: Call out how you handle users with no primary address explicitly.
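A minimal sketch of the denominator handling described above, assuming hypothetical `users` and `addresses` records where each address row carries an `is_primary` flag. The denominator is the full user list, so users without any primary address are counted explicitly rather than disappearing as they would in an inner join, and users with several primary rows are counted once.

```python
from dataclasses import dataclass


@dataclass
class Coverage:
    total_users: int
    with_primary: int
    without_primary: int

    @property
    def share_with_primary(self) -> float:
        return self.with_primary / self.total_users if self.total_users else 0.0


def primary_address_coverage(user_ids: list[str], addresses: list[dict]) -> Coverage:
    """Share of users with at least one primary address.

    Denominator: every distinct user, including users with no address rows.
    Missing or null is_primary flags are treated as "not primary".
    """
    users = set(user_ids)
    # A set of user ids avoids double-counting users with duplicate primary rows;
    # rows pointing at unknown users are ignored rather than inflating the numerator.
    with_primary = {
        row["user_id"]
        for row in addresses
        if row.get("is_primary") and row["user_id"] in users
    }
    return Coverage(
        total_users=len(users),
        with_primary=len(with_primary),
        without_primary=len(users - with_primary),
    )
```

Checking that `with_primary + without_primary == total_users`, and looking at how the "without" group breaks down, is a quick sanity check on the output distribution.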

How would you detect and handle duplicate events in a high-volume logging table?

This evaluates data hygiene and reliability thinking. Docusign cares about this because agreement events often arrive from multiple services. A strong answer discusses natural keys, timestamps, idempotency, and reconciliation checks rather than ad hoc deletes.

Tip: Tie your deduplication approach to downstream trust and auditability.
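As an illustration, here is a minimal key-based deduplication sketch. It assumes each event carries a hypothetical `event_id` natural key assigned by the producing service and an `ingested_at` timestamp (any comparable value, such as `datetime` objects or ISO-8601 strings in UTC). Instead of ad hoc deletes, it keeps the earliest-ingested copy per key and returns a duplicate count for reconciliation. Because the output depends only on the input rows, rerunning it is idempotent.

```python
from typing import Iterable


def deduplicate_events(events: Iterable[dict]) -> tuple[list[dict], int]:
    """Keep one row per event_id, preferring the earliest ingested_at.

    Returns the surviving rows plus the number of duplicates dropped, so the
    count can be logged and reconciled against source-system totals.
    """
    kept: dict[str, dict] = {}
    seen = 0
    for event in events:
        seen += 1
        current = kept.get(event["event_id"])
        # With distinct timestamps, the winner does not depend on arrival order.
        if current is None or event["ingested_at"] < current["ingested_at"]:
            kept[event["event_id"]] = event
    rows = sorted(kept.values(), key=lambda e: (e["ingested_at"], e["event_id"]))
    return rows, seen - len(rows)
```

In a warehouse the same idea is usually expressed with `ROW_NUMBER()` partitioned by the natural key; the important part for auditability is that the rule for which copy survives is explicit and the dropped count is recorded.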

Given a table of items, write a query that outputs a random category with equal probability.

This tests whether you understand sampling bias in SQL. Docusign interviewers use questions like this to see if you recognize that naïve randomness can overweight frequent values and corrupt downstream experiments. Strong answers explain why deduplication is required before sampling and how biased selection impacts decision-making.

Tip: Explain the bias mechanism clearly, not just the corrected query.
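To see the bias mechanism directly, the sketch below loads a hypothetical, skewed `items` table into an in-memory SQLite database. Sampling a random row picks each category in proportion to its row count, while collapsing to distinct categories first gives every category the same chance. `ORDER BY RANDOM()` is SQLite syntax; other engines differ (MySQL, for example, uses `RAND()`).

```python
import sqlite3

# Naive: every row is a candidate, so frequent categories win more often.
NAIVE_SQL = "SELECT category FROM items ORDER BY RANDOM() LIMIT 1"

# Corrected: collapse to distinct categories first, then pick one uniformly.
UNIFORM_SQL = """
SELECT category
FROM (SELECT DISTINCT category FROM items) AS c
ORDER BY RANDOM()
LIMIT 1
"""


def selection_probabilities(conn: sqlite3.Connection, dedupe: bool) -> dict[str, float]:
    """Exact pick probability per category for the naive or deduplicated candidate pool."""
    pool_sql = "SELECT DISTINCT category FROM items" if dedupe else "SELECT category FROM items"
    pool = [row[0] for row in conn.execute(pool_sql)]
    return {cat: pool.count(cat) / len(pool) for cat in set(pool)}


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (item_id INTEGER PRIMARY KEY, category TEXT)")
conn.executemany(
    "INSERT INTO items (category) VALUES (?)",
    [("contracts",)] * 8 + [("invoices",)] + [("forms",)],
)

print(selection_probabilities(conn, dedupe=False))  # contracts 0.8, invoices 0.1, forms 0.1
print(selection_probabilities(conn, dedupe=True))   # each category 1/3
print(conn.execute(UNIFORM_SQL).fetchone()[0])      # one of the three categories
```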

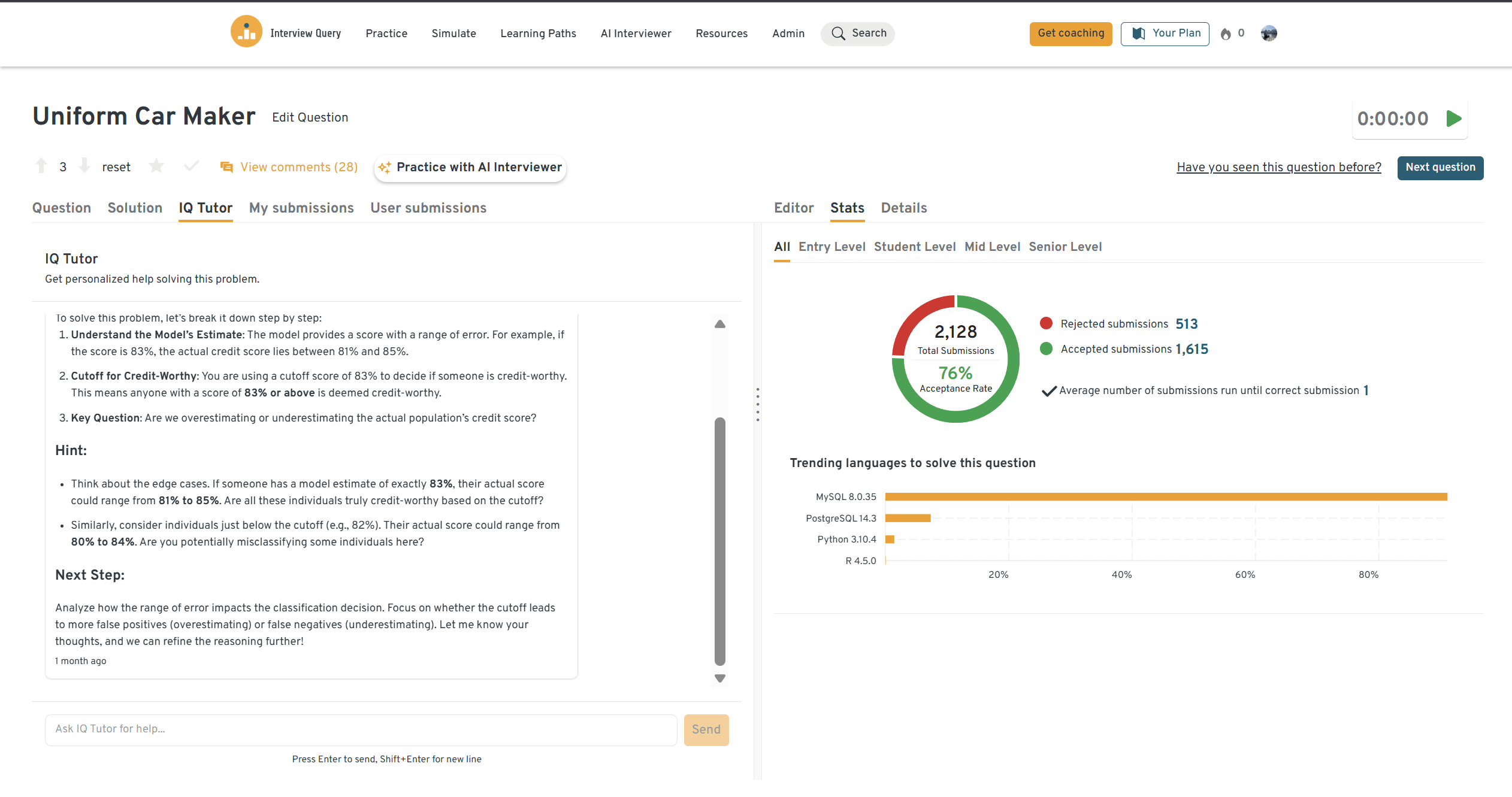

Try this question yourself on the Interview Query dashboard. You can run SQL queries, review real solutions, and see how your results compare with other candidates using AI-driven feedback.

Data Modeling and Schema Design Questions

Schema design questions test whether you can model data as a long-lived product rather than a one-off table. Docusign interviewers expect you to preserve history, support multiple consumers, and prevent silent metric drift.

If you want more reps in this style, the data engineering learning path is the most aligned prep.

How would you design a database schema to capture and analyze client clickstream data?

This evaluates high-volume event modeling. A strong answer describes an append-only events table, explicit grain definition, and derived session or aggregate tables. Interviewers listen for how you manage schema evolution and enforce contracts so analytics remains stable as instrumentation changes.

Tip: Explicitly mention event versioning and backward compatibility.
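A rough sketch of the "append-only events plus derived sessions" pattern is shown below. The record fields, including the explicit `schema_version`, are hypothetical, and the 30-minute inactivity gap is a common sessionization convention, not a Docusign rule. Raw events are never mutated; sessions are recomputed from them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ClickEvent:
    """One row of an append-only events table. Grain: one user action."""
    event_id: str
    user_id: str
    event_type: str
    occurred_at: datetime
    schema_version: int = 1  # bump when instrumentation changes the payload shape


@dataclass(frozen=True)
class Session:
    """Derived table row, rebuilt from events rather than edited in place."""
    user_id: str
    started_at: datetime
    ended_at: datetime
    event_count: int


def sessionize(events: list[ClickEvent], gap: timedelta = timedelta(minutes=30)) -> list[Session]:
    """Start a new session when the user changes or after `gap` of inactivity."""
    sessions: list[Session] = []
    current: list[ClickEvent] = []
    for event in sorted(events, key=lambda e: (e.user_id, e.occurred_at)):
        if current and (
            event.user_id != current[-1].user_id
            or event.occurred_at - current[-1].occurred_at > gap
        ):
            sessions.append(_to_session(current))
            current = []
        current.append(event)
    if current:
        sessions.append(_to_session(current))
    return sessions


def _to_session(batch: list[ClickEvent]) -> Session:
    return Session(batch[0].user_id, batch[0].occurred_at, batch[-1].occurred_at, len(batch))
```

Keeping `schema_version` on every event lets downstream models branch on the version explicitly when instrumentation changes, instead of guessing from which fields happen to be present.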

How would you design a database schema to track customer address history over time?

This tests temporal modeling and slowly changing dimensions. Docusign values point-in-time accuracy for compliance and audit workflows. Strong answers explain effective start and end dates, overlap handling, and why overwriting records breaks historical truth.

Tip: Tie the design to audit or dispute-resolution use cases.
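The effective-dating idea (a Type 2 slowly changing dimension) can be sketched in a few lines. This minimal version assumes a hypothetical `AddressVersion` row with half-open `[valid_from, valid_to)` intervals: a change closes the open row and appends a new one instead of overwriting, which keeps point-in-time lookups correct. Backdated (late-arriving) changes that would split an existing interval are out of scope here.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AddressVersion:
    customer_id: str
    address: str
    valid_from: date
    valid_to: Optional[date] = None  # None means "current"; interval is [valid_from, valid_to)


def apply_address_change(
    history: list[AddressVersion], customer_id: str, address: str, effective: date
) -> None:
    """Close the customer's open row and append a new version instead of overwriting."""
    for row in history:
        if row.customer_id == customer_id and row.valid_to is None:
            if row.address == address:
                return  # same address re-sent: no new version, no zero-length interval
            if effective <= row.valid_from:
                raise ValueError("change must start after the current version starts")
            row.valid_to = effective  # closing here prevents overlapping intervals
    history.append(AddressVersion(customer_id, address, effective))


def address_as_of(history: list[AddressVersion], customer_id: str, on: date) -> Optional[str]:
    """Point-in-time lookup: which address was valid for the customer on a given date?"""
    for row in history:
        if (
            row.customer_id == customer_id
            and row.valid_from <= on
            and (row.valid_to is None or on < row.valid_to)
        ):
            return row.address
    return None
```

For a dispute, `address_as_of` answers "what did we have on file when the agreement was signed?", which an overwritten single-row table cannot answer.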

How would you design a schema for tracking agreement lifecycle states?

This evaluates whether you can model state transitions cleanly without losing history. Interviewers care about how you handle retries, reversals, and partial completion.

Tip: Make the grain explicit and separate events from derived states.
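Here is one way to keep events and derived state separate, sketched under assumptions: the status names and the `ALLOWED` transition map below are hypothetical, not Docusign's actual state machine. Lifecycle events are the stored grain; the current state is derived by replaying them in order, skipping retried deliveries (a repeated `event_id`) and setting aside invalid transitions for investigation.

```python
from typing import Optional

# Hypothetical transition rules for illustration only.
ALLOWED = {
    None: {"created"},
    "created": {"sent", "voided"},
    "sent": {"delivered", "voided"},
    "delivered": {"completed", "declined", "voided"},
    "completed": set(),
    "declined": set(),
    "voided": set(),
}


def derive_state(events: list[dict]) -> tuple[Optional[str], list[dict]]:
    """Replay lifecycle events (grain: one state-change event) into a current state.

    Retries with a repeated event_id are skipped; transitions the rules do not
    allow are returned as rejects instead of being silently applied.
    """
    state: Optional[str] = None
    seen_ids: set[str] = set()
    rejected: list[dict] = []
    for event in sorted(events, key=lambda e: (e["occurred_at"], e["event_id"])):
        if event["event_id"] in seen_ids:
            continue  # retried delivery of an event that was already applied
        seen_ids.add(event["event_id"])
        if event["status"] in ALLOWED[state]:
            state = event["status"]
        else:
            rejected.append(event)
    return state, rejected
```

Because the derived state is a pure function of the event log, it can be rebuilt at any time, and the rejects give you a concrete queue for partial-completion or out-of-order cases.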

What are common mistakes teams make when designing dimensional models?

This tests experience and judgment. Strong answers mention grain mismatch, duplicated metrics, and poorly managed slowly changing dimensions.

Tip: Explain how these mistakes surface months later as trust issues.
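Grain mismatch is easiest to explain with a small example. The sketch below uses hypothetical agreement-level and signer-level tables: joining the fee onto signer rows and then summing repeats each agreement's fee once per signer. A simple row-count expectation on the join catches this before it shows up months later as an inflated metric.

```python
# Hypothetical tables at two different grains.
agreements = [  # grain: one row per agreement
    {"agreement_id": "A1", "fee": 100.0},
    {"agreement_id": "A2", "fee": 50.0},
]
signers = [  # grain: one row per signer per agreement
    {"agreement_id": "A1", "signer": "s1"},
    {"agreement_id": "A1", "signer": "s2"},
    {"agreement_id": "A1", "signer": "s3"},
    {"agreement_id": "A2", "signer": "s4"},
]


def naive_total_fee() -> float:
    """Join to signers, then sum: A1's fee is repeated once per signer (fan-out)."""
    fee_by_id = {a["agreement_id"]: a["fee"] for a in agreements}
    return sum(fee_by_id[s["agreement_id"]] for s in signers)


def grain_safe_total_fee() -> float:
    """Aggregate at the agreement grain, where the fee actually lives."""
    return sum(a["fee"] for a in agreements)


def row_count_preserved(rows_before: int, rows_after: int) -> bool:
    """Row-count expectation for a join that is supposed to be many-to-one."""
    return rows_before == rows_after


print(naive_total_fee())       # 350.0 (inflated)
print(grain_safe_total_fee())  # 150.0
```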

How would you design a unified metrics layer that multiple teams can rely on?

This assesses semantic-layer thinking. Interviewers want to hear about metric definitions, versioning, governance, and documentation.

Tip: Focus on preventing metric drift, not just enabling reuse.
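One way to illustrate drift prevention is an in-memory registry, offered as a hypothetical sketch rather than any particular semantic-layer product's API. Each metric has a single owner, published versions are immutable, any logic change ships as the next version with a change note, and consumers pin the version they use, so upgrades are deliberate instead of silent.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    version: int
    sql: str          # canonical definition; consumers never copy-paste their own
    owner: str
    change_note: str  # why this version exists


class MetricRegistry:
    """In-memory sketch of a versioned metrics layer."""

    def __init__(self) -> None:
        self._defs: dict[tuple[str, int], MetricDefinition] = {}

    def latest_version(self, name: str) -> Optional[int]:
        versions = [v for (n, v) in self._defs if n == name]
        return max(versions) if versions else None

    def publish(self, metric: MetricDefinition) -> None:
        if (metric.name, metric.version) in self._defs:
            # Published versions are immutable; edits must ship as a new version.
            raise ValueError(f"{metric.name} v{metric.version} already published")
        latest = self.latest_version(metric.name)
        expected = 1 if latest is None else latest + 1
        if metric.version != expected:
            raise ValueError(f"expected version {expected}, got {metric.version}")
        self._defs[(metric.name, metric.version)] = metric

    def get(self, name: str, version: int) -> MetricDefinition:
        """Consumers pin an explicit version so changes never reach them silently."""
        return self._defs[(name, version)]
```

Running two pinned versions side by side during a migration is what makes a definition change visible and reviewable instead of a surprise in a dashboard.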

Data Pipeline and Systems Design Questions

Pipeline questions evaluate whether you can build observable, resilient systems that meet freshness and correctness SLAs. Docusign interviewers expect you to reason through late data, backfills, and cost trade-offs.

For broader practice, Interview Query’s data engineer interview questions hub is the most relevant resource.

How would you design a data pipeline that computes hourly, daily, and weekly active users?

This tests end-to-end pipeline reasoning. Strong answers explain aggregation strategy, idempotency, and how you keep definitions consistent across grains.

Tip: Emphasize reproducibility and incremental computation.
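The core of a consistent multi-grain design can be sketched as follows, assuming a hypothetical stream of `(user_id, timestamp)` activity events and ISO weeks for the weekly grain. Every grain is computed from the same base with the same activity definition, each bucket counts distinct users (so weekly actives are not the sum of daily actives), and a rerun overwrites a single bucket instead of incrementing it, which keeps reruns and backfills idempotent.

```python
from collections import defaultdict
from datetime import datetime


def bucket(ts: datetime, grain: str) -> str:
    """Truncate a timestamp to its hour, day, or ISO week bucket."""
    if grain == "hour":
        return ts.strftime("%Y-%m-%dT%H")
    if grain == "day":
        return ts.strftime("%Y-%m-%d")
    if grain == "week":
        year, week, _ = ts.isocalendar()
        return f"{year}-W{week:02d}"
    raise ValueError(f"unknown grain: {grain}")


def active_users(events: list[tuple[str, datetime]], grain: str) -> dict[str, int]:
    """Count DISTINCT users per bucket, using one shared activity definition."""
    users_by_bucket: dict[str, set[str]] = defaultdict(set)
    for user_id, ts in events:
        users_by_bucket[bucket(ts, grain)].add(user_id)
    return {b: len(u) for b, u in sorted(users_by_bucket.items())}


def recompute_partition(
    store: dict[str, int], events: list[tuple[str, datetime]], grain: str, target: str
) -> None:
    """Idempotent rerun: rebuild one bucket from source events and overwrite it."""
    store[target] = len({u for u, ts in events if bucket(ts, grain) == target})
```

In an incremental setup you would typically recompute only the most recent buckets on each run, plus a lookback window wide enough to absorb late-arriving events.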

How would you add and backfill a new column in a billion-row table without downtime?

This evaluates safe change management. Interviewers listen for phased rollout, verification, and rollback strategies.

Tip: Mention how you protect downstream consumers during the transition.
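The phased pattern can be sketched against SQLite so it runs anywhere; the table, the `region` column, and the derivation rule are hypothetical, and production warehouses differ in DDL cost and batching mechanics. Phase one adds a nullable column so existing readers and writers keep working. Phase two backfills in primary-key ranges with small transactions. The `IS NULL` guard makes the backfill resumable and safe to rerun, and a final null count acts as the verification gate before consumers switch to the new column.

```python
import sqlite3


def add_and_backfill(conn: sqlite3.Connection, batch_size: int = 1000) -> int:
    """Add a nullable column, backfill it in key-range batches, return rows still NULL."""
    # Phase 1: nullable column with no default, so existing queries are unaffected.
    conn.execute("ALTER TABLE agreements ADD COLUMN region TEXT")

    # Phase 2: backfill in bounded batches to keep each transaction short.
    max_id = conn.execute("SELECT COALESCE(MAX(id), 0) FROM agreements").fetchone()[0]
    for start in range(0, max_id + 1, batch_size):
        with conn:  # commits each batch; "region IS NULL" makes reruns skip finished rows
            conn.execute(
                """
                UPDATE agreements
                SET region = CASE WHEN country IN ('US', 'CA') THEN 'NA' ELSE 'INTL' END
                WHERE id >= ? AND id < ? AND region IS NULL
                """,
                (start, start + batch_size),
            )

    # Phase 3: verification gate; 0 means the backfill is complete.
    return conn.execute("SELECT COUNT(*) FROM agreements WHERE region IS NULL").fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE agreements (id INTEGER PRIMARY KEY, country TEXT)")
conn.executemany(
    "INSERT INTO agreements (country) VALUES (?)", [("US",), ("DE",), ("CA",)] * 1000
)
remaining = add_and_backfill(conn, batch_size=500)
print(remaining)  # 0
```

Rollback in this design is cheap: until consumers are pointed at the new column, it can simply be ignored or dropped.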

-

This tests investigative rigor during incidents. Strong answers include query logs, time-window isolation, and controlled reproduction.

Tip: Explain how you validate that your trace is complete.

How would you design a pipeline with strict freshness SLAs?

This evaluates operational judgment. Interviewers want to hear about monitoring, alerting, and escalation thresholds.

Tip: Separate detection from remediation.
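A minimal sketch of the detection half is shown below, assuming a hypothetical two-tier policy (warn, then page). It only classifies staleness; deciding who gets notified and what remediation runs is deliberately a separate step. In practice the timestamps should be timezone-aware and come from the warehouse load metadata rather than the orchestrator's clock.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum


class Severity(Enum):
    OK = "ok"
    WARN = "warn"  # notify the owning team's channel
    PAGE = "page"  # escalate to on-call


@dataclass(frozen=True)
class FreshnessPolicy:
    warn_after: timedelta
    page_after: timedelta


def check_freshness(last_loaded_at: datetime, now: datetime, policy: FreshnessPolicy) -> Severity:
    """Detection only: classify how stale a table is against its SLA thresholds."""
    lag = now - last_loaded_at
    if lag >= policy.page_after:
        return Severity.PAGE
    if lag >= policy.warn_after:
        return Severity.WARN
    return Severity.OK
```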

How do you handle schema changes from upstream application teams?

This tests governance and collaboration. Strong answers mention contracts, versioning, and automated validation.

Tip: Tie schema evolution to long-term reliability.
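Automated contract validation can start as simply as this sketch, which assumes a hypothetical contract (field name to Python type) agreed with an upstream team. Missing fields and type changes are treated as breaking and should block the load, while new fields are additive and backward compatible, so they are surfaced as warnings for the owners to review.

```python
from typing import Any

# Hypothetical contract agreed with an upstream team: field name -> Python type.
CONTRACT_V2: dict[str, type] = {
    "envelope_id": str,
    "status": str,
    "sent_at": str,
    "page_count": int,
}


def validate_record(
    record: dict[str, Any], contract: dict[str, type]
) -> tuple[list[str], list[str]]:
    """Return (errors, warnings) for one incoming record.

    Errors (missing fields, type changes) are breaking; warnings (extra
    fields) are additive changes that do not break existing consumers.
    """
    errors: list[str] = []
    for field, expected in contract.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            errors.append(
                f"type mismatch on {field}: expected {expected.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    warnings = [f"new field not in contract: {f}" for f in sorted(record.keys() - contract.keys())]
    return errors, warnings
```

Running a check like this in CI for the producing service, and again at ingestion, catches breaking changes before they silently null out downstream columns.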

Behavioral and Collaboration Questions

Behavioral interviews for the Docusign data engineer role focus on ownership, technical judgment, and cross-functional communication. Interviewers want to see whether you treat data systems as long-lived products, how you prevent failures before they happen, and how you work with partners in security- and compliance-sensitive environments. You should use the STAR method, but always lead with the decision you made and the impact it had.

If you want to rehearse these under pressure, the mock interview format is the closest simulation to the real loop.

What makes you a good fit for the data engineer role?

This question evaluates alignment with Docusign’s expectations around ownership, reliability, and technical excellence. Strong answers show that you view data engineering as a product discipline rather than a support function. Interviewers listen for evidence that you build systems others trust and that you can communicate trade-offs clearly when requirements conflict.

Tip: Tie your fit to ownership and reliability, not just the tools you’ve used.

Sample answer:

I’m strongest when I own data systems end to end, from ingestion to modeling to quality checks and stakeholder adoption. In my previous role, I rebuilt a core pipeline that frequently broke and had unclear metric definitions. I introduced data contracts, freshness SLAs, and automated validation, which reduced incident tickets by about 40 percent. What excites me about Docusign is the emphasis on trusted data in a compliance-sensitive environment, where correctness and clarity matter as much as speed.

Talk about a time you had trouble communicating with stakeholders. How were you able to overcome it?

This tests whether you can translate technical constraints into decisions stakeholders can act on. Docusign data engineers often work with product, security, and risk teams with different priorities. Strong answers show how you clarified the decision being made rather than defending technical details.

Tip: Focus on how you changed your communication approach, not just the final outcome.

Sample answer:

I once delivered a metric update that stakeholders felt conflicted with prior reports. Instead of insisting the new logic was correct, I asked what decision the metric was meant to support. We realized the issue was definition drift, not computation. I proposed a versioned metric approach with side-by-side reporting for two weeks and a clear change log. Adoption improved quickly, and we avoided breaking dashboards that leadership relied on.

Tell me about a time you owned a data pipeline end to end.

This question tests ownership, prioritization, and whether you drive outcomes rather than just shipping code. Interviewers want to hear how you defined success, handled ambiguity, and ensured the pipeline was actually used and trusted.

Tip: Quantify impact in terms of reliability, adoption, or reduced manual effort.

Sample answer:

I owned a revenue attribution pipeline used by both marketing and finance. It frequently failed and required manual fixes. I aligned stakeholders on a single source-of-truth definition, rebuilt the pipeline with incremental loads and validation checks, and added alerting tied to freshness SLAs. Refresh time dropped from six hours to under two hours, and incident escalations fell by roughly 50 percent the following quarter.

Describe a time you shipped a solution that significantly improved data quality or reliability.

This evaluates whether you treat data quality as an engineering responsibility rather than an analytics afterthought. Docusign cares deeply about this because unreliable data can create compliance and trust risks.

Tip: Explain both how you detected issues and how you prevented recurrence.

Sample answer:

We had upstream instrumentation changes that caused silent drops in event volume while pipelines still succeeded. I added automated volume and distribution checks at ingestion, with alerts only when changes crossed a business-impact threshold. I also implemented a quarantine pattern so suspicious data never reached dashboards. This reduced time-to-detection from days to under an hour and prevented incorrect metrics from appearing in executive reviews.

Tell me about a time you prevented a data issue before it impacted users or stakeholders.

This tests proactive thinking and risk awareness. Interviewers want to see that you anticipate failure modes rather than reacting after incidents occur.

Tip: Emphasize early signals and preventative controls, not hero debugging.

Sample answer:

Before launching a new dbt model, I noticed a mismatch between expected and actual row growth during testing. I paused the rollout, traced it to a join that would multiply rows under certain conditions, and fixed it before production deployment. I also added row-count expectations as a guardrail for future runs. That prevented a reporting incident that would have affected multiple downstream teams.

For deeper preparation, explore a curated set of 100+ data engineer interview questions with detailed answers. In this walkthrough, Jay Feng, founder of Interview Query, breaks down more than 10 core data engineering questions that closely mirror what Docusign looks for, including advanced SQL patterns, distributed systems reasoning, pipeline reliability, and data modeling trade-offs. The explanations focus on how to think through real-world scenarios, making it especially useful for preparing for a data engineer interview loop.

How to Prepare for the Docusign Data Engineer Interview

Preparing for the Docusign data engineer interview means showing that you can build reliable, scalable, and well-governed data systems that support analytics, AI, and compliance-heavy workflows. Interviewers are less interested in obscure tricks and more focused on whether your decisions would hold up in production at Docusign’s scale.

Here’s how to prepare in a way that mirrors how the role actually operates.

Strengthen SQL as a decision-making tool, not just a query language.

SQL is a core signal in Docusign interviews because it reflects how you define metrics, handle edge cases, and protect data correctness. Practice writing queries that involve joins, window functions, deduplication, and time-based logic, then explain your assumptions out loud. Use the SQL interview learning path to reinforce fundamentals that map directly to pipeline reliability and analytics trust.

Practice data modeling with long-term maintainability in mind.

Docusign data engineers are expected to build dimensional models and semantic layers that multiple teams rely on. Focus on designing schemas that preserve history, support point-in-time accuracy, and remain stable as products evolve. If you want structured reps, the data engineering learning path covers the exact modeling trade-offs interviewers look for.

Prepare to design production-grade pipelines end to end.

System design interviews emphasize ETL and ELT pipelines built with tools like dbt and Airflow, backed by cloud warehouses such as Snowflake. Practice explaining how you handle late-arriving data, backfills, SLAs, monitoring, and idempotent reruns. Interviewers want to hear how you prevent silent failures, not just how you move data from source to destination.

Be ready to talk about data quality, security, and governance.

Given Docusign’s compliance-sensitive environment, expect questions around validation, access control, encryption, and lineage. Prepare examples where you implemented checks, contracts, or monitoring that improved trust without blocking the business. Tie these decisions back to customer impact and risk reduction.

Rehearse behavioral answers around ownership and collaboration.

Strong candidates show that they own data systems beyond initial delivery. Practice STAR-based stories where you drove alignment across product, security, or risk teams and made trade-offs explicit. You can pressure-test clarity and pacing through mock interviews or simulate behavioral rounds using the AI interview tool.

Role Overview: Docusign Data Engineer

A data engineer at Docusign builds the analytical foundation that powers the company’s Intelligent Agreement Management platform. The role sits at the intersection of data infrastructure, analytics, and AI enablement, with a strong emphasis on trust, scalability, and operational rigor.

Data engineers are responsible for designing and maintaining pipelines that ingest product, customer, and operational data into cloud warehouses, where it supports reporting, experimentation, and machine learning workloads. Much of the work centers on building unified data models and semantic layers that ensure consistent definitions across product, finance, risk, and security teams.

Day to day, the role typically includes:

- Designing and maintaining ETL and ELT pipelines using tools such as dbt, Airflow, and Matillion.

- Building dimensional models and curated datasets that support analytics and downstream AI use cases.

- Implementing data quality checks, freshness monitoring, and SLAs to ensure reliability at scale.

- Partnering with product, AppSec, and risk teams to translate business and compliance requirements into technical solutions.

- Supporting AI and agentic workflows by enabling clean, well-modeled data inputs for advanced analytics and machine learning systems.

Culturally, Docusign values technical excellence, ownership, and collaboration. Data engineers are expected to think beyond individual tasks and consider how their systems affect trust, compliance, and customer experience over time. The environment rewards engineers who anticipate failure modes, communicate trade-offs clearly, and build data products that other teams rely on with confidence.

Average Docusign Data Engineer Salary

Docusign uses a standardized P1–P6 leveling framework across technical roles. While data engineer titles on Levels.fyi are sometimes grouped under software engineering due to sample size, the ranges below reflect current market-aligned compensation for data engineering scope at Docusign, adjusted for responsibility, ownership, and system complexity.

Total compensation typically includes base salary, annual bonus, and RSUs with a standard 4-year vesting schedule (25 percent per year).

| Level | Typical scope | Estimated total compensation (USD) |
|---|---|---|
| P1 | Entry-level data engineer | $138,000 – $147,000 |
| P2 | Intermediate data engineer | $181,000 – $186,000 |
| P3 | Mid-level data engineer | $244,000 – $247,000 |
| P4 | Senior data engineer | $306,000 – $343,000 |
| P5 | Lead or staff data engineer | $450,000 – $520,000 |
| P6 | Principal data engineer | $635,250+ |

At junior levels, compensation is largely base-salary driven, with smaller equity grants. As engineers move into senior and staff bands, equity becomes a much larger share of total compensation, reflecting ownership of core pipelines, data models, and reliability standards across teams. Principal-level roles are rare and reserved for engineers with company-wide impact on data architecture, governance, and AI enablement.

Compared with many SaaS peers, Docusign’s upper-level compensation skews higher for data engineers who own mission-critical data systems, particularly those supporting compliance, security, and large-scale analytics.

Source: Levels.fyi, aggregated and adjusted for data engineering scope.

FAQs

How many rounds are in the Docusign data engineer interview process?

Most candidates go through 5 to 7 rounds. This typically includes a recruiter screen, an online technical assessment, a hiring manager interview, and a virtual onsite loop with multiple rounds covering SQL, data modeling, system design, and behavioral questions. The exact structure can vary by team and region.

How technical is the Docusign data engineer interview?

The interview is highly technical, with strong emphasis on SQL, data modeling, and pipeline design. You should be comfortable writing complex queries live, reasoning about schema design, and explaining how you would build reliable ETL or ELT pipelines in production. Behavioral rounds also test technical judgment and ownership, not just soft skills.

What tools and technologies should I expect to be tested on?

You can expect questions grounded in modern data stack tools, including SQL, Python, cloud data warehouses like Snowflake, and orchestration tools such as Airflow and dbt. System design discussions often involve cloud-native architectures on AWS or Azure, as well as data quality, monitoring, and governance patterns.

Does Docusign test LeetCode-style algorithms?

Yes, but usually at an easy-to-medium level. Coding rounds may include algorithmic problems via platforms like HackerRank or CoderPad, but they are generally designed to test clean logic, correctness, and edge-case handling rather than advanced computer science theory.

What does Docusign look for in behavioral interviews?

Docusign evaluates ownership, collaboration, and technical excellence. Interviewers want to see that you can own data systems end to end, communicate clearly with product and security partners, and proactively prevent data quality or reliability issues. Strong candidates frame their answers around decisions and measurable impact.

Is prior experience with e-signature or legal tech required?

No. While understanding Docusign’s product and compliance environment is helpful, interviewers care more about your ability to build trustworthy data systems at scale. Experience working in regulated or security-sensitive environments can be a plus, but it is not mandatory.

Final Thoughts: Preparing for the Docusign Data Engineer Interview

The Docusign data engineer interview is designed to identify engineers who can build reliable, scalable, and trusted data systems in a compliance-heavy, AI-enabled environment. Strong candidates demonstrate more than technical correctness. They show disciplined thinking around data modeling, pipeline design, quality guarantees, and stakeholder communication.

To prepare effectively, focus on the same skills Docusign values on the job:

- Practice production-grade SQL and metric definition using the SQL interview learning path.

- Sharpen your pipeline and system design thinking with the data engineering learning path.

- Drill realistic scenarios from the data engineer interview question library.

- Pressure-test your communication and ownership stories through mock interviews or the AI interview tool.

If you’re aiming to stand out as a data engineer who can be trusted with core analytics and AI foundations at Docusign, preparation that mirrors real production decision-making is your strongest advantage.

Ready to start preparing? Practice Docusign-style data engineering interview questions today on Interview Query and walk into your interview with confidence.