Atlassian Data Engineer Interview Guide: Process, Questions, System Design & Salary Insights

Introduction

Atlassian products generate more than 200 billion events each month, spanning everything from Jira issue updates to Confluence page edits and Bitbucket deployments. Data engineers sit at the center of this ecosystem and build the pipelines, models, and platforms that keep Atlassian’s analytics and AI features accurate, resilient, and fast. Their work directly shapes how millions of users plan work, ship code, and collaborate.

If you are preparing for Atlassian data engineer interview questions, this guide explains what the role involves, how the interview process works, and the skills assessed across SQL, coding, system design, and behavioral rounds. Use it as your starting point for a focused and realistic preparation plan.

What does an Atlassian data engineer do?

Atlassian data engineers design and maintain the data systems that power product analytics, AI assistants, and internal tools. They work across batch and streaming pipelines, manage large scale warehouse environments, and collaborate with product, analytics, and engineering teams to deliver trustworthy and high quality data.

Key responsibilities include:

- Building and optimizing ETL and ELT pipelines using Spark, Kafka, Airflow, and cloud platforms

- Modeling large event datasets so teams can measure activation, engagement, retention, and collaboration patterns

- Partnering with analysts and data scientists to translate product questions into technical designs

- Implementing data quality checks, monitoring, lineage, and alerting to reduce downstream issues

- Managing cost and performance for data storage and compute across cloud environments

- Contributing to shared data foundations such as Atlassian’s teamwork graph

Atlassian values open communication and clear documentation, so engineers work transparently and collaborate closely with distributed teams.

Why this role at Atlassian

A data engineering role at Atlassian gives you the chance to build systems that directly influence how millions of people plan work and collaborate. You work with modern, cloud native tools, handle large scale event data, and contribute to the pipelines and platforms behind Atlassian’s AI features and product analytics. It is a role where strong engineering translates into visible product impact, meaningful ownership, and long term growth in both technical depth and architectural influence.

Atlassian Data Engineer Interview Process

Atlassian’s data engineer interview process evaluates how you design scalable pipelines, write reliable SQL and production code, reason about data quality, and collaborate with distributed teams. It combines technical rigor with values driven communication, and each stage builds on the last to understand how you solve problems end to end. Most candidates move through the following sequence:

| Stage | Primary Focus |
|---|---|
| Recruiter Screen | Background, communication, motivation |
| Technical Screen | Coding and SQL fundamentals |
| System Design Interview | Architecture, scalability, data modeling |
| Behavioral Interviews | Collaboration, communication, values alignment |
| Final Review | Cross interviewer evaluation and hiring bar |

Recruiter screen

The recruiter screen introduces the process and explores whether your background aligns with the scope of Atlassian’s data engineering work. You will be asked to walk through recent projects, describe the data volumes and pipelines you handled, and explain how you collaborated with analysts or product teams. Recruiters listen for structured explanations, clarity when discussing your responsibilities, and familiarity with tools such as Spark, Kafka, Airflow, and Snowflake. This is also where they assess your motivation for joining Atlassian and your alignment with its values, which emphasize openness, teamwork, and customer focus.

Tip: Prepare one concise project story that clearly communicates the business problem, your technical solution, and the measurable impact.

Technical screen

The technical screen evaluates your ability to write SQL, code, and reason about data in real time. Expect multi step SQL questions that involve joins, window functions, event cleaning, and aggregation logic. You will also complete a short Python exercise focused on data manipulation or algorithmic reasoning. Interviewers look for correctness, readability, and your ability to articulate assumptions, handle edge cases, and think through performance considerations. They pay close attention to how you validate your logic, since data quality is central to the role.

| Focus Area | What Interviewers Look For |
|---|---|
| SQL | Clean joins, window functions, filtering, grain consistency |
| Coding | Python fluency, clear logic, error handling, readability |
| Data intuition | Ability to catch anomalies, duplicates, or inconsistent timestamps |
| Communication | How clearly you explain tradeoffs and assumptions |

Tip: Narrate your thinking step by step so interviewers can follow your reasoning even if you refine your answer along the way.

System design interview

The system design interview assesses how you approach building data platforms that scale reliably with Atlassian’s global usage. You might design an ingestion pipeline for high volume event data, outline a warehouse model for product analytics, or propose a monitoring strategy for distributed ETL flows. Interviewers look for structured thinking, a clear definition of the problem, an early lock on the data grain, and an architecture that balances latency, cost, maintainability, and resilience. They also evaluate whether you can simplify complexity, choose appropriate technologies, and explain why your design fits the use case. This conversation mirrors real work, where clarity and tradeoff reasoning matter as much as technical detail.

Tip: Anchor your design by stating the use case, the data grain, and the expected scale before discussing components or technologies.

Behavioral interviews

Behavioral interviews explore how you operate in Atlassian’s collaborative, distributed environment. Expect prompts about resolving data quality issues, navigating misaligned priorities, supporting cross functional partners, and learning from technical setbacks. Interviewers look for evidence of clear communication, thoughtful decision making, and openness in how you share context and handle conflict. They want to understand how you work with analysts, PMs, and engineers when definitions evolve, incidents occur, or tradeoffs need alignment. The strongest answers highlight both your technical decision making and your ability to communicate transparently across teams.

| Theme | What Interviewers Listen For |
|---|---|
| Collaboration | How you partner with product, analytics, and engineering |
| Ownership | How you diagnose and resolve pipeline or data issues |
| Communication | Clarity, documentation, and proactive updates |
| Values alignment | Openness, teamwork, customer impact, continuous improvement |

Tip: Choose stories where you influenced an outcome through communication, not just through technical execution.

Final review

In the final review, Atlassian brings together feedback from all interviewers to evaluate whether you meet the company’s hiring bar. The review focuses on your overall performance across technical depth, communication, collaboration, and cultural alignment. The team checks for consistency in how you approached problems and whether any conflicting signals require clarification. Occasionally, candidates are invited for a short follow up conversation if a specific competency needs more evidence. Once the review is complete, the hiring team aligns on leveling, role fit, and next steps.

What this stage considers:

- Whether you met or exceeded expectations across interviews

- How consistently you demonstrated system thinking and data quality instincts

- Your ability to collaborate and communicate in a distributed environment

- Feedback patterns across technical and behavioral rounds

- Your potential for long term growth within Atlassian

Tip: Keep communication with your recruiter clear and timely, since they guide you through offer discussions and next steps.

Atlassian Data Engineer Interview Questions

Atlassian structures its data engineer interview questions around the real work engineers handle across Jira, Confluence, Trello, and Bitbucket. Expect a blend of SQL, coding, data modeling, pipeline design, and values based questions that mirror the challenges of operating large scale, event driven systems. The goal is not to test obscure syntax or trick questions, but to understand how you reason about data quality, system reliability, and cross functional problem solving.

The questions below are organized into the core categories you will encounter during the interview loop. Each category highlights what interviewers look for, how to approach the prompts, and the skills you need to demonstrate. Use these sections to benchmark your preparation and identify areas where more focused practice will help you stand out.

SQL and coding interview questions

SQL and coding are central to the Atlassian data engineer interview because much of the work involves transforming high volume event data, validating pipelines, and supporting product analytics with clean, reliable datasets. You can expect questions that combine window functions, joins, filtering, and performance considerations, along with coding prompts that test your ability to manipulate data and reason about edge cases. Interviewers look for correctness, clarity, and a disciplined approach to data quality.

-

Approach this by grouping employees by department, calculating the percentage of high earners, and filtering to departments with at least ten employees. Use a window function such as `DENSE_RANK()` to ensure ties are handled consistently. This mirrors real scenarios where analysts need clean departmental metrics for reporting.

Tip: State your data grain early so your grouping and filtering stay consistent.
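The approach above can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not the reference solution: the `employees` table, its columns, and the 100,000 salary threshold for a "high earner" are all assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (id INTEGER PRIMARY KEY, department TEXT, salary INTEGER)"
)

# Hypothetical data: eng has 10 employees (4 high earners), ops has 10 (2),
# and hr has only 3, so hr should be filtered out by the headcount rule.
rows = (
    [("eng", 150000)] * 4 + [("eng", 90000)] * 6
    + [("ops", 120000)] * 2 + [("ops", 80000)] * 8
    + [("hr", 200000)] * 3
)
conn.executemany("INSERT INTO employees (department, salary) VALUES (?, ?)", rows)

QUERY = """
WITH dept_stats AS (
    SELECT department,
           COUNT(*) AS headcount,
           -- Assumed definition: a high earner makes more than 100,000.
           AVG(CASE WHEN salary > 100000 THEN 1.0 ELSE 0.0 END) AS pct_high_earners
    FROM employees
    GROUP BY department
    HAVING COUNT(*) >= 10
)
SELECT department,
       headcount,
       pct_high_earners,
       DENSE_RANK() OVER (ORDER BY pct_high_earners DESC) AS dept_rank
FROM dept_stats
ORDER BY dept_rank, department
"""

result = conn.execute(QUERY).fetchall()
for row in result:
    print(row)
```

The grain of `dept_stats` is one row per department, which is why the headcount filter sits in `HAVING` and the ranking runs only after aggregation.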

Calculate first touch attribution for each user who converted.

Identify all converting users, then join sessions and conversion tables to find the earliest session timestamp using `MIN()` or `ROW_NUMBER()`. Assign the matching channel to determine the first touch source. Atlassian uses similar logic for understanding onboarding funnels and user journeys.

Tip: Always mention how you handle incomplete or missing session data.
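One way to express this first touch logic is sketched below using `sqlite3`. The `sessions` and `conversions` tables, their columns, and the `'unknown'` fallback for converters with no recorded session are hypothetical choices for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sessions (user_id INTEGER, session_ts TEXT, channel TEXT);
CREATE TABLE conversions (user_id INTEGER, converted_ts TEXT);

INSERT INTO sessions VALUES
    (1, '2024-01-01 09:00:00', 'organic'),
    (1, '2024-01-03 10:00:00', 'email'),
    (2, '2024-01-02 08:00:00', 'paid'),
    (3, '2024-01-02 12:00:00', 'email');

-- User 2 never converted; user 4 converted but has no session rows.
INSERT INTO conversions VALUES
    (1, '2024-01-03 10:05:00'),
    (3, '2024-01-02 12:30:00'),
    (4, '2024-01-05 00:00:00');
""")

QUERY = """
WITH ranked AS (
    SELECT user_id,
           channel,
           ROW_NUMBER() OVER (PARTITION BY user_id ORDER BY session_ts) AS rn
    FROM sessions
)
SELECT c.user_id,
       COALESCE(r.channel, 'unknown') AS first_touch_channel
FROM (SELECT DISTINCT user_id FROM conversions) AS c
LEFT JOIN ranked AS r
       ON r.user_id = c.user_id AND r.rn = 1
ORDER BY c.user_id
"""

first_touch = conn.execute(QUERY).fetchall()
print(first_touch)
```

The `LEFT JOIN` keeps converters whose session data is missing, so the gap shows up explicitly instead of silently dropping those users from the result.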

Write a query to get the number of customers that were upsold.

Define what “upsold” means in the scenario, such as moving to a higher tier or increasing order value. Compare behavior over time by grouping at the user level and applying clear business logic in your filters. Interviewers watch for how well you convert an ambiguous definition into explicit SQL conditions.

Tip: Clarify assumptions up front because Atlassian values precision in metric logic.
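As a sketch of turning an ambiguous definition into explicit conditions, the example below assumes one possible meaning of "upsold": a customer placed a later order with a higher amount than their first order. The `orders` table and that definition are assumptions; a real prompt may define upsell differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (user_id INTEGER, order_ts TEXT, amount REAL);
INSERT INTO orders VALUES
    (1, '2024-01-01', 10.0), (1, '2024-02-01', 25.0),   -- later order is larger
    (2, '2024-01-05', 30.0), (2, '2024-02-05', 20.0),   -- later order is smaller
    (3, '2024-01-07', 50.0),                            -- single order only
    (4, '2024-01-09', 10.0), (4, '2024-01-20', 10.0), (4, '2024-03-01', 15.0);
""")

QUERY = """
WITH first_orders AS (
    SELECT user_id, order_ts AS first_ts, amount AS first_amount
    FROM (
        SELECT user_id, order_ts, amount,
               ROW_NUMBER() OVER (PARTITION BY user_id ORDER BY order_ts) AS rn
        FROM orders
    ) AS ranked
    WHERE rn = 1
)
SELECT COUNT(DISTINCT o.user_id) AS upsold_customers
FROM orders AS o
JOIN first_orders AS f ON f.user_id = o.user_id
-- Assumed definition of upsold: a strictly later order with a higher amount.
WHERE o.order_ts > f.first_ts
  AND o.amount > f.first_amount
"""

upsold_customers = conn.execute(QUERY).fetchone()[0]
print(upsold_customers)
```

`COUNT(DISTINCT ...)` keeps the result at the customer grain even when one customer has several qualifying orders.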

Find the average number of right swipes for different ranking algorithm variants.

Join swipe events with experiment assignment tables, group by algorithm variant, and compute averages at the correct grain. Similar questions appear in product analytics when comparing algorithm performance or ranking changes.

Tip: Explain why you chose your aggregation grain and what alternative grains would change.
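A small sketch of the join and aggregation described above, using `sqlite3`. The `variants` and `swipes` tables and the `is_right_swipe` flag are hypothetical names. The example aggregates to one row per user first, then averages per variant, and it assumes that users with no swipes should count as zero.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE variants (user_id INTEGER PRIMARY KEY, variant TEXT);
CREATE TABLE swipes (user_id INTEGER, is_right_swipe INTEGER);

INSERT INTO variants VALUES (1, 'A'), (2, 'A'), (3, 'B'), (4, 'B');

-- User 2 was assigned to variant A but never swiped.
INSERT INTO swipes VALUES
    (1, 1), (1, 0), (1, 1),
    (3, 1),
    (4, 1), (4, 1), (4, 1);
""")

QUERY = """
WITH per_user AS (
    -- Grain: one row per assigned user, including users with zero swipes.
    SELECT v.user_id,
           v.variant,
           COALESCE(SUM(s.is_right_swipe), 0) AS right_swipes
    FROM variants AS v
    LEFT JOIN swipes AS s ON s.user_id = v.user_id
    GROUP BY v.user_id, v.variant
)
SELECT variant, AVG(right_swipes) AS avg_right_swipes
FROM per_user
GROUP BY variant
ORDER BY variant
"""

avg_by_variant = conn.execute(QUERY).fetchall()
print(avg_by_variant)
```

Averaging directly over swipe rows would instead give a right swipe rate per swipe, which is a different metric at a different grain.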

Get the current salary for each employee after an ETL job duplicated records.

Use `ROW_NUMBER()` partitioned by employee, ordered by the latest timestamp, to isolate the correct record. This shows your ability to debug data quality issues and recover from pipeline inconsistencies, which is a core expectation at Atlassian.

Tip: Tie your answer to how you would prevent similar ETL errors upstream.
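The deduplication pattern can be sketched as follows with `sqlite3`. The `employee_salaries` table and its `updated_at` column are assumed names for the example; the interview prompt may expose a different ordering column.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employee_salaries (employee_id INTEGER, salary INTEGER, updated_at TEXT);

-- The faulty ETL job inserted new rows instead of updating existing ones.
INSERT INTO employee_salaries VALUES
    (1, 90000,  '2023-01-01 00:00:00'),
    (1, 95000,  '2024-01-01 00:00:00'),
    (2, 120000, '2023-06-01 00:00:00'),
    (3, 70000,  '2022-03-01 00:00:00'),
    (3, 72000,  '2023-03-01 00:00:00'),
    (3, 75000,  '2024-03-01 00:00:00');
""")

QUERY = """
SELECT employee_id, salary
FROM (
    SELECT employee_id,
           salary,
           ROW_NUMBER() OVER (
               PARTITION BY employee_id
               ORDER BY updated_at DESC
           ) AS rn
    FROM employee_salaries
) AS latest
WHERE rn = 1
ORDER BY employee_id
"""

current_salaries = conn.execute(QUERY).fetchall()
print(current_salaries)
```

If two rows for the same employee can share a timestamp, add a tiebreaker column to the `ORDER BY` so the chosen row is deterministic.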

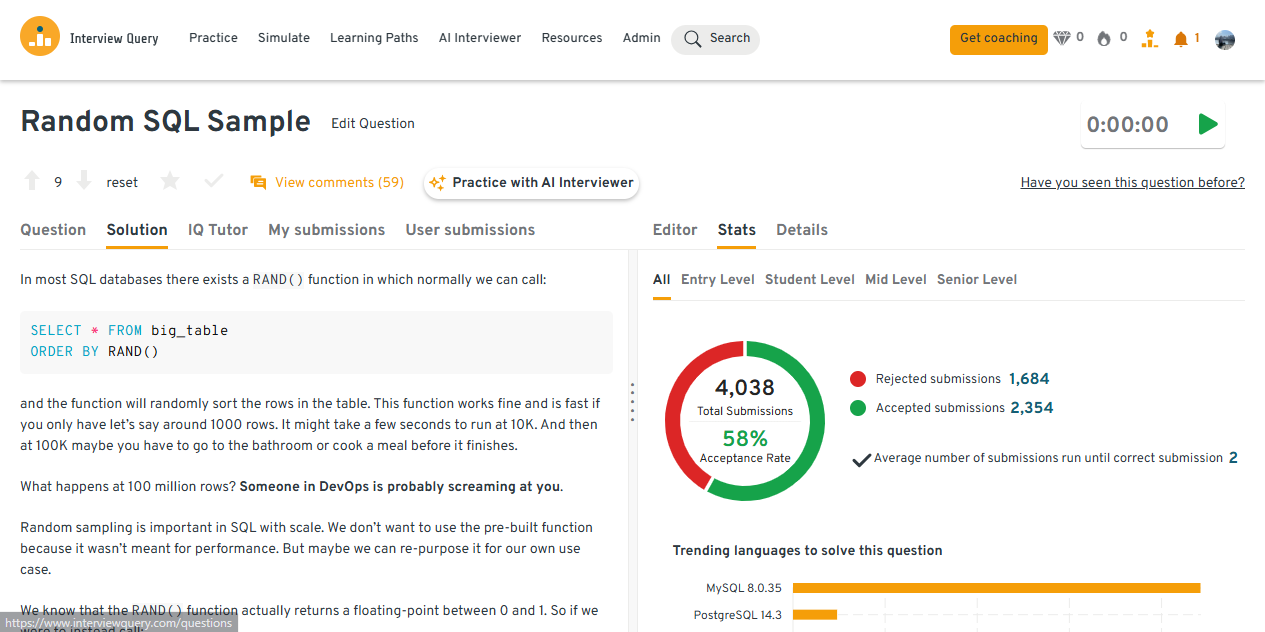

Randomly sample a row from a 100M row table without ordering the entire table.

Avoid full table scans by using probabilistic filters or ID based sampling. The goal is to demonstrate efficiency and scalability, especially when working with extremely large data sets like Atlassian’s product logs.

Tip: Mention performance tradeoffs because interviewers want evidence of practical engineering judgment.
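A minimal sketch of ID based sampling, assuming a hypothetical `events` table with an indexed integer `id`. It picks a random value between the smallest and largest ID and seeks to the first row at or after it, so no sort of the full table is needed. The tradeoff is bias: if IDs have gaps, rows that follow a gap are more likely to be chosen.

```python
import random
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")

# Small stand-in for a very large table: ids 1..1000 with no gaps.
conn.executemany(
    "INSERT INTO events (id, payload) VALUES (?, ?)",
    [(i, f"event-{i}") for i in range(1, 1001)],
)


def sample_row(conn, rng=random):
    """Return one row chosen by seeking from a random id, or None if the table is empty."""
    lo, hi = conn.execute("SELECT MIN(id), MAX(id) FROM events").fetchone()
    if lo is None:
        return None
    target = rng.randint(lo, hi)
    # The primary key index turns this into a seek rather than a full scan.
    return conn.execute(
        "SELECT id, payload FROM events WHERE id >= ? ORDER BY id LIMIT 1",
        (target,),
    ).fetchone()


row = sample_row(conn, random.Random(42))
print(row)
```

Many warehouses also offer a built-in sampling clause; whether one is available depends on the engine, so it is worth asking the interviewer which dialect applies.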

Try this question yourself on the Interview Query dashboard. You can run SQL queries, review real solutions, and see how your results compare with other candidates using AI-driven feedback.

Want more practice with realistic SQL patterns? The SQL Scenario Based Questions set aligns closely with Atlassian’s interview style.

Data architecture and system design interview questions

System design is one of the most important parts of the Atlassian data engineer interview. You will be asked to design schemas, outline end to end pipelines, reason about ingestion and quality checks, and explain architectural tradeoffs for large scale, event driven systems. Interviewers look for clarity, structure, and the ability to lock the data grain early, choose appropriate technologies, and explain how each component contributes to reliability and observability.

How would you create or modify a schema to keep track of customer address changes?

A strong approach is to design a slowly changing dimension style table or a historical table that contains customer ID, address, start date, end date, and an indicator for the current record. If you also need to know who occupies an address next, reference move out and move in events to link each address record to its next tenant. This allows full history tracking while keeping current lookups efficient.

Tip: Call out how you enforce uniqueness and prevent overlapping date ranges for the same customer.
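The history table described above could look like the following sketch, written with `sqlite3`. Table and column names are assumptions. A partial unique index enforces at most one current row per customer, and the helper closes the old row and opens the new one in a single transaction so date ranges do not overlap.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer_addresses (
    customer_id INTEGER NOT NULL,
    address     TEXT    NOT NULL,
    start_date  TEXT    NOT NULL,
    end_date    TEXT,               -- NULL while the row is current
    is_current  INTEGER NOT NULL
);

-- At most one current address per customer.
CREATE UNIQUE INDEX one_current_address
    ON customer_addresses (customer_id)
    WHERE is_current = 1;
""")


def change_address(conn, customer_id, new_address, effective_date):
    """Close the customer's current row, then insert the new current row."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute(
            "UPDATE customer_addresses SET end_date = ?, is_current = 0 "
            "WHERE customer_id = ? AND is_current = 1",
            (effective_date, customer_id),
        )
        conn.execute(
            "INSERT INTO customer_addresses "
            "(customer_id, address, start_date, end_date, is_current) "
            "VALUES (?, ?, ?, NULL, 1)",
            (customer_id, new_address, effective_date),
        )


change_address(conn, 1, "1 Old St", "2023-01-01")
change_address(conn, 1, "2 New Ave", "2024-06-01")

history = conn.execute(
    "SELECT address, start_date, end_date, is_current "
    "FROM customer_addresses WHERE customer_id = 1 ORDER BY start_date"
).fetchall()
print(history)
```

Lookups for the current address filter on `is_current = 1`, while point-in-time questions filter on the start and end dates.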

-

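Start with raw event data in the data lake and create a pre aggregation workflow that computes hourly active users, then derives daily and weekly aggregates from the hourly table. Keep the hourly table at one row per user per hour (or store a mergeable sketch), because distinct user counts are not additive and summing hourly counts would overstate daily and weekly totals. Use an orchestrator to refresh aggregates on a fixed cadence, and include cutoff logic to handle late arriving events. This design balances freshness with performance and is easy to scale.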

Tip: Explain how you validate metric consistency across the three grains to prevent mismatched dashboard numbers.
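The sketch below illustrates the hourly to daily rollup with `sqlite3`, using a hypothetical `raw_events` table. It assumes the hourly table stores one row per user per hour, which lets the daily number be derived with a distinct count instead of a sum.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE raw_events (user_id INTEGER, event_ts TEXT);
INSERT INTO raw_events VALUES
    (1, '2024-01-01 09:10:00'),
    (1, '2024-01-01 09:40:00'),
    (2, '2024-01-01 09:20:00'),
    (1, '2024-01-01 15:00:00'),
    (1, '2024-01-02 10:00:00');

-- Pre-aggregation: one row per (hour, user) so later rollups can still deduplicate users.
CREATE TABLE hourly_active_users AS
SELECT DISTINCT strftime('%Y-%m-%d %H:00:00', event_ts) AS hour_bucket, user_id
FROM raw_events;
""")

hourly = conn.execute(
    "SELECT hour_bucket, COUNT(*) FROM hourly_active_users "
    "GROUP BY hour_bucket ORDER BY hour_bucket"
).fetchall()

daily = conn.execute(
    "SELECT substr(hour_bucket, 1, 10) AS day, COUNT(DISTINCT user_id) "
    "FROM hourly_active_users GROUP BY day ORDER BY day"
).fetchall()

# Summing hourly counts double counts users active in several hours of the same day.
naive_daily_sum = sum(count for bucket, count in hourly if bucket.startswith("2024-01-01"))

print(hourly)
print(daily)
print(naive_daily_sum)
```

Comparing `daily` against `naive_daily_sum` is also a simple consistency check: the distinct daily count should never exceed the sum of its hourly counts.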

How would you design a data mart or warehouse for a new online retailer?

Frame the business process first, such as individual product sales, and choose that as your fact table grain. Add dimensions for customers, products, stores, promotions, and time, then organize everything into a star schema that supports both detailed and aggregated queries. This structure provides clarity, avoids duplication, and keeps performance predictable.

Tip: Mention how you would manage slowly changing dimensions, since retail systems often change product or customer attributes.

How would you design an end to end pipeline to support bicycle rental demand forecasting?

Ingestion begins with daily raw files for station logs, weather feeds, and event schedules. Normalize each source into structured tables, apply quality checks, join them into a single modeling table keyed by station and date, and store the output in a warehouse for downstream predictive models. Include metadata tracking and pipeline monitoring for reproducibility.

Tip: Highlight how you join data sources at a consistent grain to avoid misaligned features in the modeling dataset.

How would you design a database system to support payment APIs for Swipe?

Begin by modeling core entities such as users, payment methods, transactions, and API logs. Add tables for idempotency keys, fraud checks, and status transitions so the system can recover safely from retries or partial failures. Emphasize security, auditability, and the need for strong constraints on transaction states.

Tip: State how you prevent duplicate charges, since payments require strict consistency.
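To make the idempotency key idea concrete, here is a minimal sketch using `sqlite3`. The `charges` table, its columns, and the `create_charge` helper are hypothetical. The unique key on `idempotency_key` means a retried request returns the original charge instead of creating a second one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
CREATE TABLE charges (
    idempotency_key TEXT PRIMARY KEY,
    user_id         INTEGER NOT NULL,
    amount_cents    INTEGER NOT NULL CHECK (amount_cents > 0),
    status          TEXT    NOT NULL
)
""")


def create_charge(conn, idempotency_key, user_id, amount_cents):
    """Insert a charge once per key; a retry with the same key returns the stored row."""
    try:
        with conn:
            conn.execute(
                "INSERT INTO charges (idempotency_key, user_id, amount_cents, status) "
                "VALUES (?, ?, ?, 'pending')",
                (idempotency_key, user_id, amount_cents),
            )
        created = True
    except sqlite3.IntegrityError:
        # The key already exists, so this call is a retry of an earlier request.
        created = False
    row = conn.execute(
        "SELECT idempotency_key, user_id, amount_cents, status "
        "FROM charges WHERE idempotency_key = ?",
        (idempotency_key,),
    ).fetchone()
    return row, created


first_row, first_created = create_charge(conn, "req-123", 7, 2500)
retry_row, retry_created = create_charge(conn, "req-123", 7, 2500)
total_charges = conn.execute("SELECT COUNT(*) FROM charges").fetchone()[0]
print(first_created, retry_created, total_charges)
```

A fuller design would also compare the retry's payload with the stored row and reject a reused key that carries different parameters.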

How would you use MapReduce to process petabytes of log data for behavior insights?

Describe the mapping step that parses raw logs into key value pairs, followed by reduce tasks that aggregate metrics such as counts, sessions, or unique users. Explain how data is partitioned to distribute work efficiently across nodes. This workflow is well suited for very large datasets that exceed the capacity of a single machine.

Tip: Call out how you choose your map keys and reduce groups, since they drive both performance and correctness.
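The map, shuffle, and reduce steps can be illustrated on a single machine with plain Python. This is a toy sketch of the programming model, not a distributed implementation; the log format (timestamp, user ID, action) is an assumption.

```python
from collections import defaultdict

# Hypothetical log format: "<timestamp> <user_id> <action>"
LOG_LINES = [
    "2024-01-01T09:00:00 u1 view",
    "2024-01-01T09:01:00 u2 view",
    "2024-01-01T09:02:00 u1 click",
    "2024-01-01T09:03:00 u1 view",
    "malformed line",
]


def map_line(line):
    """Map step: parse one raw line into zero or more (key, value) pairs."""
    parts = line.split()
    if len(parts) != 3:
        return []  # skip lines that do not match the expected format
    _timestamp, user_id, action = parts
    return [((user_id, action), 1)]


def shuffle(pairs):
    """Shuffle step: group values by key, as the framework would do across nodes."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_counts(key, values):
    """Reduce step: aggregate all values that share a key."""
    return key, sum(values)


mapped = [pair for line in LOG_LINES for pair in map_line(line)]
counts = dict(reduce_counts(key, values) for key, values in shuffle(mapped).items())
print(counts)
```

The choice of `(user_id, action)` as the map key decides both what the reducer can compute and how evenly work spreads; a key dominated by one very active user would create a hot partition.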

What factors would you consider when designing an international e commerce architecture?

Start with clarifying questions about data residency, compliance, time zones, return flows, multi region warehouses, and latency requirements. Then outline an architecture with regional ingestion, a centralized or federated warehouse, and a reporting layer that supports multi grain vendor dashboards. Focus on scalability, fault tolerance, and regional synchronization.

Tip: Acknowledge the role of local regulations because international systems require explicit compliance planning.

How would you design a resumable fact table load so failures can resume without duplication?

Use a checkpointing mechanism that records the last successfully loaded record or batch. On rerun, the pipeline should validate existing data, skip completed segments, and load only the remaining rows using idempotent merge logic. This ensures reliability without introducing partial data.

Tip: Mention how you detect and clean up partial writes, since they are a common failure point.
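A sketch of checkpointing combined with idempotent merge logic, using `sqlite3`. The `fact_sales` and `load_checkpoint` tables, the batch layout, and the simulated crash are all assumptions for illustration. Each batch and its checkpoint update commit in one transaction, so a failure leaves no partial batch behind.

```python
import sqlite3

JOB = "fact_sales"  # hypothetical job name
BATCHES = [
    (0, [(1, 10.0), (2, 20.0)]),
    (1, [(3, 30.0)]),
    (2, [(4, 40.0)]),
]

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE fact_sales (sale_id INTEGER PRIMARY KEY, amount REAL NOT NULL);
CREATE TABLE load_checkpoint (job TEXT PRIMARY KEY, last_batch_id INTEGER NOT NULL);
""")


def run_load(conn, batches, fail_on=None):
    """Load batches after the checkpoint; fail_on simulates a crash inside that batch."""
    row = conn.execute(
        "SELECT last_batch_id FROM load_checkpoint WHERE job = ?", (JOB,)
    ).fetchone()
    last_done = row[0] if row else -1
    for batch_id, rows in batches:
        if batch_id <= last_done:
            continue  # already loaded in an earlier run
        with conn:  # batch rows and checkpoint commit together or not at all
            conn.executemany(
                "INSERT INTO fact_sales (sale_id, amount) VALUES (?, ?) "
                "ON CONFLICT(sale_id) DO UPDATE SET amount = excluded.amount",
                rows,
            )
            if batch_id == fail_on:
                raise RuntimeError("simulated crash mid-batch")
            conn.execute(
                "INSERT INTO load_checkpoint (job, last_batch_id) VALUES (?, ?) "
                "ON CONFLICT(job) DO UPDATE SET last_batch_id = excluded.last_batch_id",
                (JOB, batch_id),
            )


def count_rows(conn):
    return conn.execute("SELECT COUNT(*) FROM fact_sales").fetchone()[0]


try:
    run_load(conn, BATCHES, fail_on=1)
except RuntimeError:
    pass
rows_after_crash = count_rows(conn)

run_load(conn, BATCHES)  # resumes from batch 1
rows_after_resume = count_rows(conn)
print(rows_after_crash, rows_after_resume)
```

The upsert makes each batch safe to replay even if a checkpoint write were lost, which is the property that keeps reruns free of duplicates.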

How would you architect an end to end CSV ingestion pipeline for large engagement datasets?

Support uploads through both UI and API by landing files in object storage and validating schema, data types, and row counts before ingestion. Transform validated files into canonical tables, apply deduplication and quality checks, and load them into a warehouse optimized for reporting. Build monitoring for latency, file status, and ingestion success rates.

Tip: Explain how you ensure exactly once ingestion when multiple users upload overlapping files.
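The validation step might be sketched as below with Python's standard library. The expected columns, the in-memory hash registry, and the `validate_upload` helper are hypothetical. Note the limitation: a content hash only catches byte-identical re-uploads, so files that partially overlap would still need row level deduplication on a business key downstream.

```python
import csv
import hashlib
import io
from datetime import datetime

EXPECTED_COLUMNS = ["user_id", "event_type", "event_ts"]  # hypothetical schema
seen_file_hashes = set()  # stand-in for a durable registry of ingested files


def validate_upload(raw_bytes):
    """Check for duplicate files, validate the header, and type-check each row."""
    digest = hashlib.sha256(raw_bytes).hexdigest()
    if digest in seen_file_hashes:
        return {"status": "duplicate", "rows": [], "bad_lines": []}

    reader = csv.DictReader(io.StringIO(raw_bytes.decode("utf-8")))
    if reader.fieldnames != EXPECTED_COLUMNS:
        return {"status": "rejected_schema", "rows": [], "bad_lines": []}

    rows, bad_lines = [], []
    for line_no, record in enumerate(reader, start=2):  # line 1 is the header
        try:
            rows.append({
                "user_id": int(record["user_id"]),
                "event_type": record["event_type"],
                "event_ts": datetime.fromisoformat(record["event_ts"]),
            })
        except (TypeError, ValueError):
            bad_lines.append(line_no)  # keep the line number for the rejection report

    seen_file_hashes.add(digest)
    return {"status": "accepted", "rows": rows, "bad_lines": bad_lines}


upload = (
    b"user_id,event_type,event_ts\n"
    b"1,page_view,2024-01-01T09:00:00\n"
    b"abc,page_view,2024-01-01T09:05:00\n"
)
first = validate_upload(upload)
second = validate_upload(upload)
wrong_schema = validate_upload(b"id,type\n1,page_view\n")
print(first["status"], first["bad_lines"], second["status"], wrong_schema["status"])
```

Returning the rejected line numbers, rather than failing the whole file, is one design choice; stricter pipelines reject any file that contains a bad row.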

For hands on practice with real world pipeline and architecture prompts, you can explore Interview Query’s challenges, which mirror the complexity of system design rounds.

Behavioral and values based interview questions

Behavioral questions at Atlassian focus on how you collaborate, communicate, recover from failure, and make decisions in ambiguous environments. Data engineers work closely with analysts, product managers, and other engineering teams, so interviewers want to understand how you handle conflict, align on definitions, respond to incidents, and uphold Atlassian’s values. Strong answers combine clear storytelling with measurable outcomes and thoughtful reflection.

What are your strengths and weaknesses?

This question evaluates your self awareness and your ability to reflect on how you operate within a team. Strong answers highlight a strength that directly improves collaboration or system reliability and a weakness that you are actively improving with a concrete strategy.

Sample answer: One of my strengths is building clarity during ambiguous data work by documenting assumptions and aligning stakeholders early. A weakness I continue to work on is spending too much time perfecting pipeline details, so I now share early drafts sooner to speed up feedback loops.

Tip: Always pick a weakness that is real but manageable, and show how you have improved it.

Why do you want to work with us?

Interviewers assess whether you understand Atlassian’s products, values, and data ecosystem. Strong responses connect your engineering interests with Atlassian’s scale, collaboration tools, and commitment to openness.

Sample answer: I want to work at Atlassian because its tools directly influence how global teams collaborate, and I enjoy building data systems that support real user workflows. The company’s emphasis on transparency and its modern data stack align closely with how I like to work.

Tip: Mention at least one product or value that genuinely resonates with you.

Talk about a time you had trouble communicating with a stakeholder and how you resolved it.

Atlassian wants engineers who can collaborate across technical and non technical partners. Choose an example where misunderstanding or misalignment created friction and explain how you clarified expectations, simplified communication, or used documentation to regain alignment.

Sample answer: I once worked with a PM who found my pipeline diagrams confusing, so I created a simpler flowchart with clear ownership steps. This improved alignment immediately and helped us make cleaner prioritization decisions.

Tip: Show empathy by acknowledging the other person’s perspective.

How comfortable are you presenting your insights?

Data engineers often need to explain pipeline changes, data issues, or reliability improvements to cross functional teams. Interviewers look for confidence, clarity, and an ability to simplify technical details for non technical audiences.

Sample answer: I am very comfortable presenting my work and usually start with the problem, the data involved, and the impact of the change. In my past role, I regularly presented pipeline improvements to analytics teams and used simple visuals to keep discussions focused.

Tip: Emphasize how you tailor explanations to different audiences.

Tell me about a time you influenced a technical or product decision using data.

Choose an example where your data analysis or pipeline insights changed a team’s priorities or exposed an unseen risk. Atlassian values engineers who show ownership and frame insights in terms of customer and product impact.

Sample answer: I discovered an unexpected drop in event coverage for a key feature and traced it to schema changes that were not backward compatible. After sharing the findings with the PM and engineering lead, we implemented a migration plan and restored data reliability within two days.

Tip: Make sure the outcome of your story is measurable and clear.

Give an example of a time you worked through ambiguity during a data project.

Atlassian engineers often work with incomplete information or evolving requirements. Pick a scenario where definitions, schemas, or metrics were unclear and show how you created structure through alignment, documentation, or short experiments.

Sample answer: When building a new activation metric, different teams had conflicting definitions. I drafted a simple proposal with examples and edge cases, reviewed it with PMs and analysts, and aligned everyone within a single meeting.

Tip: Show how you created momentum instead of waiting for perfect clarity.

Tell me about a time you handled a major data quality issue.

Data reliability is core to Atlassian’s engineering culture. Interviewers want to see how you diagnose root causes, communicate impact, and put long term safeguards in place.

Sample answer: A pipeline change once caused duplicated rows in our engagement table, which impacted dashboards. I paused downstream jobs, fixed the transformation logic, backfilled clean data, and added validation checks to catch future anomalies.

Tip: Always mention both the short term fix and the long term prevention mechanism.

Describe a time you worked in a distributed or remote team and had to resolve an urgent issue.

Atlassian’s distributed teams rely on clear communication to manage incidents. Pick an example that shows how you coordinated across time zones, delegated tasks, and kept everyone aligned during a high pressure situation.

Sample answer: During a late night pipeline failure, I coordinated with teammates in two regions using Slack updates, assigned responsibilities clearly, and restored the flow within hours. Afterward, I documented the incident and improved our alert thresholds to prevent recurrence.

Tip: Emphasize your communication cadence and transparency during the incident.

If you want more practice with behavioral prompts across engineering roles, you can explore Interview Query’s mock interviews, which include tailored feedback and realistic scenario walkthroughs.

In this video, Jay Feng, co-founder of Interview Query and former data scientist at companies like Nextdoor and Monster, walks through over ten common data engineer interview questions and provides step-by-step explanations for how to approach them. You’ll see real examples of SQL, Python, pipeline, and system design problems, along with strategies for articulating your reasoning clearly. Watch it after reviewing the questions above to reinforce your preparation and gain insight into how experienced interviewers think about strong answers.

How to prepare for an Atlassian Data Engineer interview

Preparing for an Atlassian data engineer interview requires a mix of strong technical fundamentals, clear communication, and a structured approach to solving data problems. The most successful candidates focus on SQL and Python fluency, system design frameworks, data quality instincts, and values aligned storytelling. Below are the most effective strategies to build a complete and targeted prep plan.

Strengthen your SQL and data transformation fundamentals

Atlassian evaluates your ability to write clean, readable queries that solve multi step problems involving event logs, metrics, and quality checks. Practice window functions, joins, date logic, and data cleaning on large realistic datasets.

Tip: Use scenario questions instead of isolated puzzles because they mirror Atlassian’s datasets. Practice here: SQL Scenario Based Interview Questions

Practice coding patterns that support pipeline reliability

Coding exercises often involve parsing data, applying transformations, or reasoning through edge cases. Focus on Python patterns like grouping, dictionary based transformations, error handling, and writing logic that is easy to test and reuse.

Tip: Build the habit of thinking aloud during coding sessions so interviewers can follow your reasoning.

Develop a reusable system design framework

Atlassian expects data engineers to design pipelines that scale with high volume collaboration data. Create a repeatable approach: clarify the use case, lock the data grain, outline components, describe quality checks, plan monitoring, and justify tradeoffs.

Tip: Practice explaining your designs visually or verbally in under four minutes to mimic interview pacing.

Build strong intuition around data quality and observability

Data reliability is a core expectation. Get comfortable discussing deduplication, idempotency, schema evolution, late arriving data, and validation patterns. Be ready to describe how you detect, quantify, and prevent data issues.

Tip: Prepare one concrete example where you discovered or prevented a data quality issue.

Prepare clear STAR stories that connect to Atlassian’s values

Behavioral interviews focus on collaboration, ownership, and thoughtful decision making. Choose examples where you partnered with PMs or analysts, resolved incidents, or led ambiguous work with structure.

Tip: End every STAR story with a measurable or observable outcome. Strengthen your delivery through mock interviews.

Study Atlassian’s products and analytics needs

Understanding workflows in Jira, Confluence, Trello, or Bitbucket helps you reason about events, schemas, and user journeys. This context makes your system design and metric definitions more grounded and relevant.

Tip: Explore how these tools track changes, link entities, and produce event logs so your examples feel realistic.

Simulate timed interview conditions

Practicing under realistic time constraints helps refine pacing and clarity across SQL, coding, and system design. Time boxed sessions train you to communicate while thinking.

Tip: Treat each practice session as a mini loop and track which skills need more repetition. For structured practice: Take Home Test Prep

Get targeted coaching to refine weak areas

A mentor can help you calibrate system design depth, strengthen your behavioral alignment, and tighten your communication. Coaching is especially useful for senior candidates who need to demonstrate architectural maturity.

Tip: Bring one system design you already practiced so your coach can pinpoint exactly where to improve. Explore mentorship: Interview Query Coaching

Atlassian Data Engineer Salary

According to Glassdoor data, Atlassian data engineers in the United States typically earn between $120K and $210K per year in total compensation. Pay varies by experience level, with early career engineers starting around the low to mid $100K range and more experienced engineers reaching total compensation near the $200K mark. The breakdown below summarizes the ranges currently reported on Glassdoor.

| Experience Level | Total Compensation | Base | Stock | Bonus |
|---|---|---|---|---|
| 0–1 years | $155K – $181K | ~$134K – $187K | ~$29K – $53K | ~$11K – $20K |
| 1–3 years | $120K – $156K | ~$134K – $187K | ~$29K – $53K | ~$11K – $20K |
| 10–14 years | $139K – $162K | ~$134K – $187K | ~$29K – $53K | ~$11K – $20K |
| Median (All Levels) | $210K total comp | ~$158K base | ~$38K stock | ~$14K bonus |

RSU vesting schedule

Atlassian equity generally vests over four years at a 25 percent per year cadence. Since stock represents a significant portion of total compensation, earnings increase meaningfully as more RSUs vest in later years.

FAQs

How competitive is the Atlassian Data Engineer interview?

The interview is selective because Atlassian evaluates both technical depth and values alignment. Strong SQL, system design reasoning, and clear communication are all required to meet the hiring bar.

What is the typical interview timeline?

Most candidates complete the process within three to five weeks, depending on loop scheduling and team availability. Feedback is usually shared promptly after each round.

Do data engineers need strong Python skills at Atlassian?

Yes. Python is commonly used for transformations, pipeline logic, and debugging. You do not need to be a software engineer level coder, but you must be comfortable writing clean, reliable data manipulation code.

How important is system design in the interview?

System design is one of the most heavily weighted sections for data engineers. Interviewers want to see whether you can design reliable, scalable pipelines that support Atlassian’s high volume event data.

Will I need to know Atlassian’s products for the interview?

Understanding Jira, Confluence, Trello, or Bitbucket is helpful because many system design and data modeling questions reference product analytics. You do not need to be an expert, but familiarity strengthens your reasoning.

Does Atlassian support remote work for data engineers?

Many Atlassian teams operate with remote first or hybrid flexibility depending on the role and location. Candidates should confirm expectations with their recruiter early in the process.

How much weight do behavioral interviews carry?

Behavioral interviews are very important. Atlassian screens heavily for openness, clear communication, and collaborative decision making, especially since teams work across time zones.

What types of SQL questions should I expect?

Expect scenario based SQL involving multi step joins, window functions, deduplication logic, and aggregation. These questions mirror real datasets from Atlassian’s analytics environment.

Are there take home assignments in the data engineer interview?

Some teams use small take home tasks focused on SQL, data modeling, or pipeline logic. The format varies, but all tasks emphasize clarity and real world reasoning.

Build Your Atlassian Data Engineering Edge Starting Today

Breaking into Atlassian as a data engineer means showing that you can design resilient pipelines, reason clearly about event data, and collaborate with the same openness the company is known for. The right preparation focuses on repeatable frameworks, realistic practice, and sharpening the judgment you bring to data architecture and quality.

Interview Query gives you the structure to prepare at a higher level. Work through real pipeline and warehouse problems in our curated scenario-based SQL questions, pressure test your reasoning through engineer-led mock interviews, and deepen your architectural intuition with comprehensive learning paths. Start your prep with intention and walk into your Atlassian interview ready to demonstrate true engineering impact.