Tech Workers Are Gaming AI Hiring Tools Just to Get Interviews, Research Shows

Today’s Tech Hiring Feels Broken

Many tech workers would agree that the job search now feels like shouting into the void. They send dozens, sometimes hundreds, of applications, only to be met with silence or instant automated rejection.

This points to a broken promise. AI in hiring was supposed to make recruiting faster, fairer, and more objective. Instead, automated tools are fueling frustration for tech candidates and employers alike.

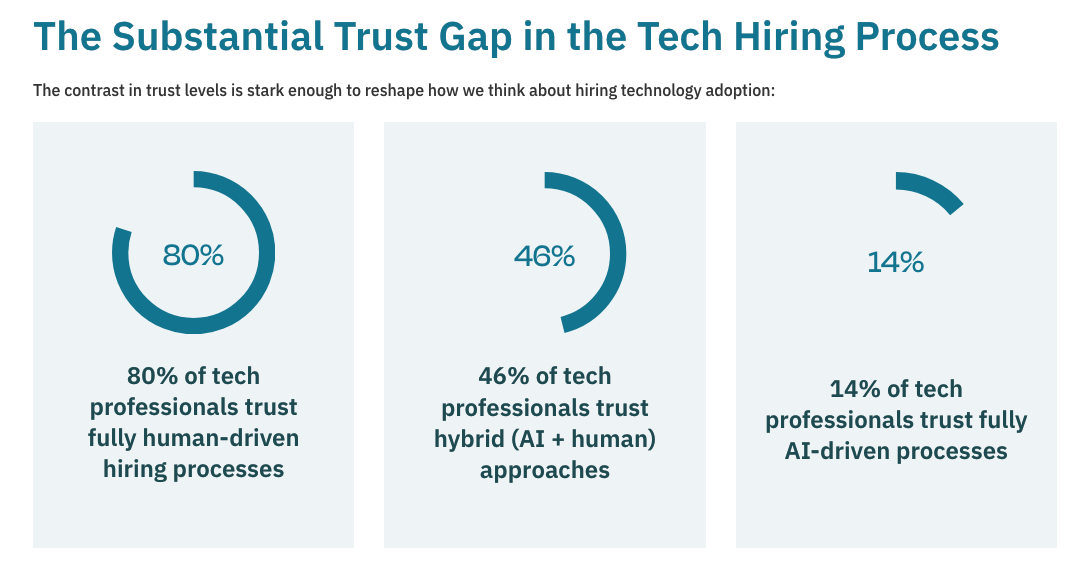

Research from Dice suggests that the problem isn’t just bad luck, but broken trust. The Trust Gap in Tech Hiring 2025 report found that 68% of tech professionals distrust fully AI-driven hiring, and 80% still prefer human-led processes.

As a result, candidates aren’t focusing on showcasing their real skills. They’re learning how to beat the system.

The Tech Hiring Trust Problem

Source: Dice’s The Trust Gap in Tech Hiring 2025 report


The report paints a stark picture of how little confidence tech workers have in AI-based screening. Only 14% say they trust fully AI-driven recruitment processes, because many see automated tools as blunt instruments.

For context, 63% worry that AI favors keywords over real qualifications, which means even qualified candidates can be rejected when they don’t fit the narrow criteria the system applies. Meanwhile, more than half (56%) believe no human ever sees their résumé at all.

To job seekers, that means nuance, context, and hard-earned experience are being filtered out long before a real decision-maker gets involved.

This erosion of trust has consequences. When candidates assume qualified people are being rejected by default, faith in the entire hiring process collapses.

Employers are starting to notice the fallout too. A recent article in the IT publication CIO.com suggests that many companies now worry they’ve overcorrected. They have gained speed and efficiency at the cost of damaged candidate relationships and a shrinking pool of genuinely engaged, qualified applicants.

Tech Workers Respond by Gaming the System

Faced with opaque screening systems, candidates are not just losing trust but also adapting, often in ways that undermine hiring quality. The same report found that 92% of tech professionals believe AI screening tools miss qualified candidates who don’t optimize for keywords.

Many applicants respond by exaggerating their résumés through keyword stuffing (78%), and 65% say they have used AI tools to tailor applications for visibility, writing not for humans but for machines.

In a recent interview with CIO, Dice President Art Farnsworth noted what this costs in authenticity and trust. “When candidates feel they have to exaggerate just to stay competitive, it chips away at authenticity and trust,” he said.

Many candidates don’t even see this behavior as dishonest, but simply as a means of survival. If honesty leads to automatic rejection, optimization feels rational.

Why AI Hiring Feels Like a Headache for Candidates

While tech candidates have adapted to AI-driven hiring, what frustrates them most is the lack of transparency. Beyond rejection itself, fewer and fewer companies explain how screening works, why an applicant was rejected, or whether a human was ever involved.

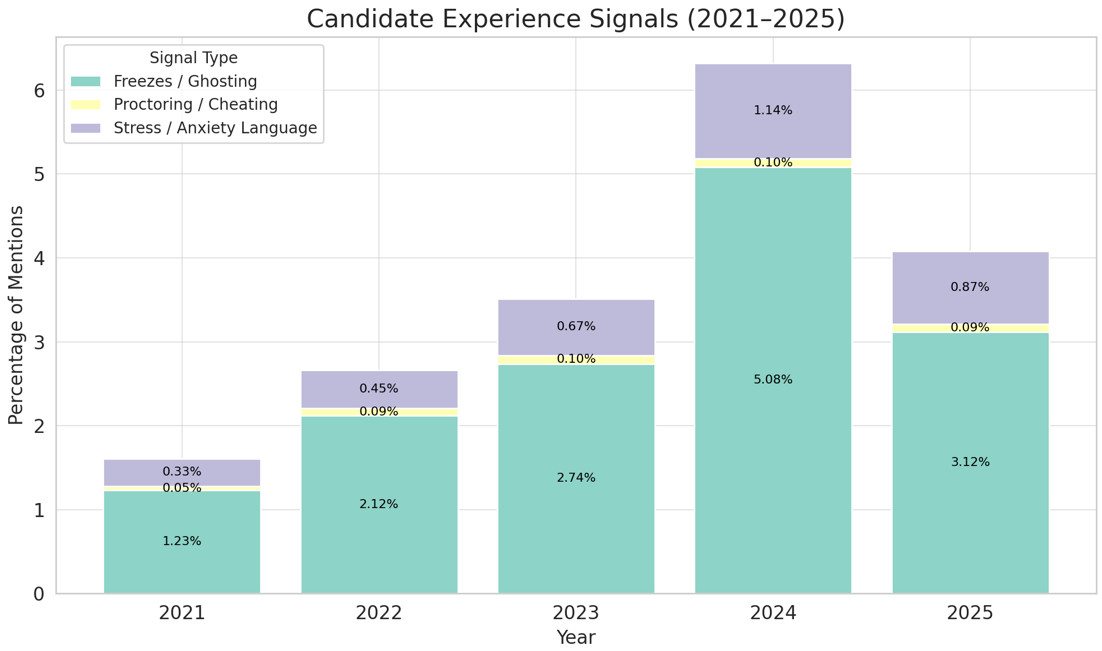

Psychologically, this makes job searching feel less personal and more arbitrary. These dynamics echo trends highlighted in Interview Query’s analysis of AI interview practices. According to its State of Interviewing 2025 report, candidates’ use of AI tools such as remote helpers during interviews eroded recruiter trust. Recruiters, overwhelmed and unsure how to validate candidates, increasingly responded by ghosting applicants instead of clarifying their concerns.

As a result, candidates feel like they’re fighting a system rather than being evaluated by people. Repeated rejection without feedback leads to burnout, self-doubt, and disengagement, eroding trust at exactly the wrong time. Some are opting out before the process even begins: Dice reports that nearly 30% of tech professionals are considering leaving the industry entirely because of these hiring frustrations.

Where Tech Hiring Goes From Here

The takeaway is clear: AI has reshaped tech hiring, but trust hasn’t kept pace. While candidates don’t reject automation outright, they clearly want clarity, human checkpoints, and basic communication.

Until hiring systems balance efficiency with transparency and human judgment, many tech workers will continue gaming the process rather than believing in it.

A system designed to surface the best talent may instead keep selecting the best résumé optimizers. Tech hiring could keep evolving into a contest not of who is the best long-term fit, but of who best understands the algorithm, with adverse consequences for talent management and organizational growth.