AI Hype Is Surging—But New Data Shows the Public Still Isn’t Buying It

The AI Boom vs. Public Skepticism

Despite big companies plowing billions into data centers, specialized chips, and artificial intelligence research, a new survey reveals a startling disconnect. Business leaders and investors are mostly bullish on AI’s future, but the general public remains far more skeptical.

According to the latest report from nonprofit JUST Capital, a whopping 93% of corporate leaders and 80% of investors believe AI will be a net positive for society in the next five years. That enthusiasm far outpaces public sentiment: only 58% of the public are optimistic about AI.

Other findings from the poll reveal a growing trust gap. This article explores that gap and what it means for the future of AI adoption, regulation, and social impact.

Corporations and Investors Are Doubling Down on AI

Corporate leaders’ and investors’ enthusiasm for AI goes beyond optimism. The scale of their investment is enormous.

As of this writing, global capital deployment toward infrastructure, R&D, chips, and data centers is set to reach $1.5 trillion. Growing startups and tech giants alike, including Microsoft, Google (Alphabet), Oracle, Meta, and Nvidia, are betting that AI will boost productivity, spark innovation, and cement their competitive advantage.

In other words, a broad business case drives enthusiasm among executives and investors, from improved efficiency and productivity to better products and a strategic edge. For many of them, AI is no longer just future tech; it is the next wave of growth.

But Public Confidence Is Falling Behind

Public sentiment reveals a stark contrast. Only 47% of ordinary people believe that AI will positively impact worker productivity, and the JUST Capital report also points to several recurring concerns.

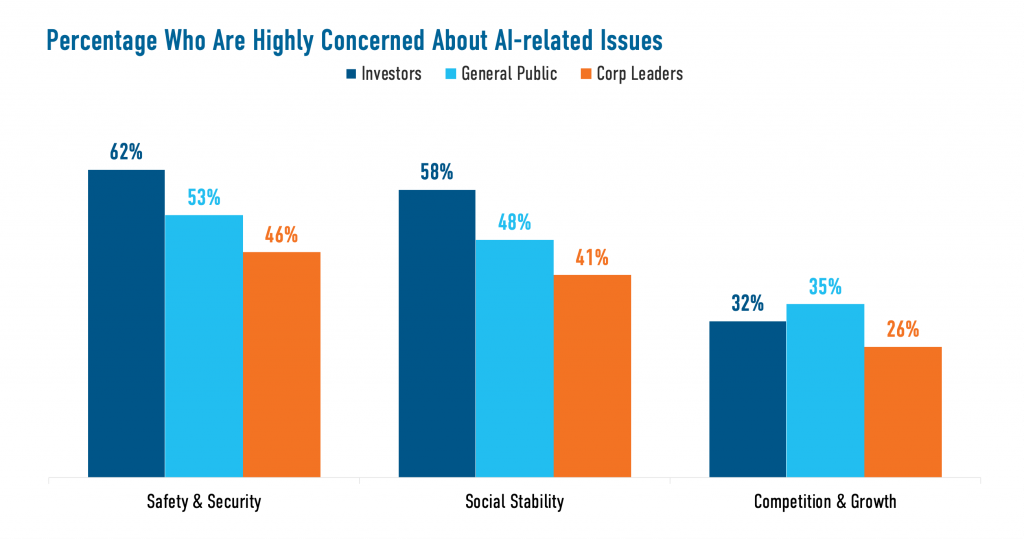

Over half (53%) do not trust AI because of safety and security issues, while 48% are highly concerned about its impact on social stability. Meanwhile, more than 1 in 3 (35%) rate competition and growth as a top concern.

These aren’t just abstract worries. As AI adoption grows amid a global generative-AI boom, some workers are already seeing job losses or instability even as firms hype up AI’s potential.

For instance, a previous job market report by Indeed notes that tech job postings have fallen well below pre-pandemic levels, even though tech roles such as software developers, engineers, and data analysts are critical to the boom.

That helps explain why many people feel uncertain about who will truly benefit from AI, and who might be left behind in the process.

Where the Real Divide Lies

Interestingly, there is some common ground. As the same report shows, public, investor, and corporate respondents broadly agree on the potential of AI and the importance of safety and security.

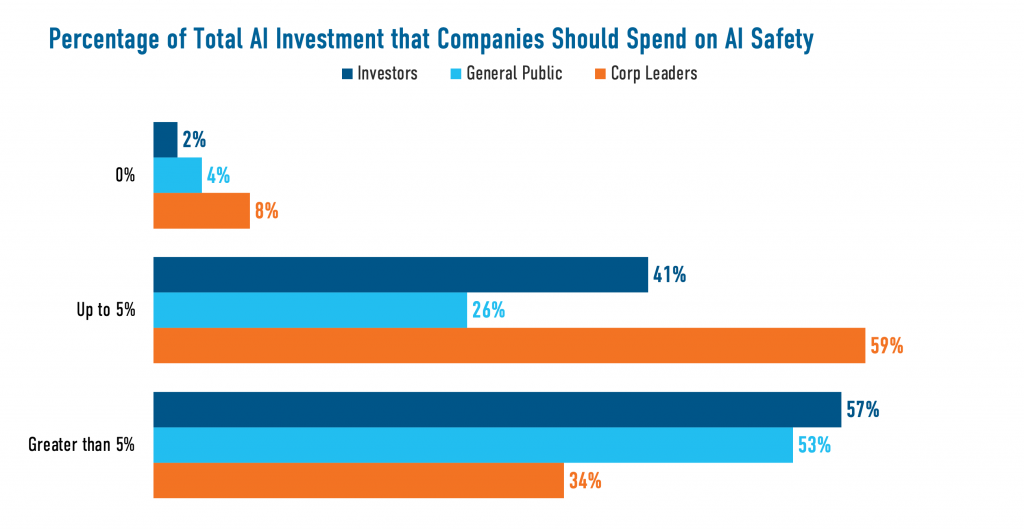

However, the alignment ends there, especially when it comes to how much companies should invest in safeguards and worker support. Most investors and members of the public expect firms to devote more than 5% of their AI budgets to safety; in contrast, many corporate leaders say they plan to commit only 1–5%.

That is not the only point of divergence. When asked how AI-driven profits should be distributed, executives favor shareholders and R&D (28% and 30%, respectively). Worker training receives just 17%, a share that many public respondents view as inadequate.

This choice about how value is distributed matters, as limited investment in retraining and workforce support fuels fears of job displacement. It also deepens distrust in corporate AI hype, reinforcing the perception that AI gains will be shared only among top executives and shareholders.

Trust, Transparency, and Responsibility Are the Real Game Changers

Key insights from the investor, corporate, and public survey reveal that the divide is primarily about values, priorities, and fairness. The public seems less skeptical about AI’s capabilities than about who controls it, who benefits, and how its gains are shared.

JUST Capital’s survey underscores a strong public demand for workforce support, retraining, and responsible governance if AI is to deliver broad societal benefits.

Another major concern is environmental impact. Roughly a third of survey respondents expect increased AI use to worsen environmental pressures. Yet only 17% of business leaders currently factor environmental planning into their AI strategy, implying that this responsibility is not one of their top priorities.

That mismatch amplifies the trust problem. As AI adoption accelerates, whether companies prioritize transparency, fairness, and long-term thinking could determine whether AI becomes a social good or a source of division.

Final Word: Why the Trust Gap Matters

The current boom in AI investment may look unstoppable, driven by corporate confidence and investor money. But hype alone won’t sustain broad adoption.

If corporate stakeholders ignore public concerns by failing to invest enough in safety, worker training, equitable value distribution, and environmental stewardship, the result may be not just distrust, but backlash.

As more sectors adopt AI, the decisions made now will shape not just profits, but social license. The future of AI depends not just on what the technology can do, but on whether people believe it’s being used fairly and responsibly for the benefit of all—not just the select few.