Stripe Data Engineer Interview Guide for 2026

Introduction

Stripe’s infrastructure runs on data. Whether it is powering fraud detection, financial reporting, or real-time observability, the Stripe data engineer plays a pivotal role in how billions of transactions move safely and efficiently. If you are preparing for the Stripe data engineer interview, this guide will help you understand what to expect across technical rounds, system design, and cultural fit so you can prepare confidently.

In this guide, we will help you build both technical readiness and clarity of communication. You will learn what Stripe looks for in candidates, the key types of questions that appear in each round, and how to approach them strategically. From SQL and data modeling to large-scale system design and behavioral alignment, this guide provides you with the structure, examples, and insights needed to feel confident and well-prepared for every stage of the interview.

Role overview & culture

As a Stripe data engineer, you will build and maintain real-time and batch pipelines that support Stripe’s global payment infrastructure. You will work closely with teams in finance, product, and risk to ensure that data systems are reliable, scalable, and analytics-ready. Engineers often work with tools like Snowflake, Airflow, Scala, and Flink to deliver solutions across domains such as revenue recognition, ledger integrity, and experimentation platforms.

Stripe emphasizes bottom-up decision making and values such as “Seek feedback” and “Move with urgency.” Engineers are encouraged to take ownership of their domain while collaborating deeply across functions.

Why this role at Stripe?

Stripe operates at massive scale, processing a very high volume of payments for businesses worldwide, and its data stack reflects that ambition. As a data engineer, you will tackle challenges in observability, lineage, and real-time orchestration while working at the intersection of infrastructure and analytics. The role provides an opportunity to design systems that impact every part of Stripe’s ecosystem, from payments to financial reporting.

Beyond the technical challenge, this position offers clear career growth and strong upward mobility. Data engineers at Stripe are encouraged to develop deep domain expertise and can progress toward senior, staff, or principal engineering levels that influence data strategy across global teams. Many also transition laterally into roles such as data platform engineering, technical leadership, or product infrastructure, gaining exposure to both engineering and business decision-making. Stripe’s strong culture of ownership, mentorship, and learning ensures that engineers not only build scalable systems but also build meaningful long-term careers within the company.

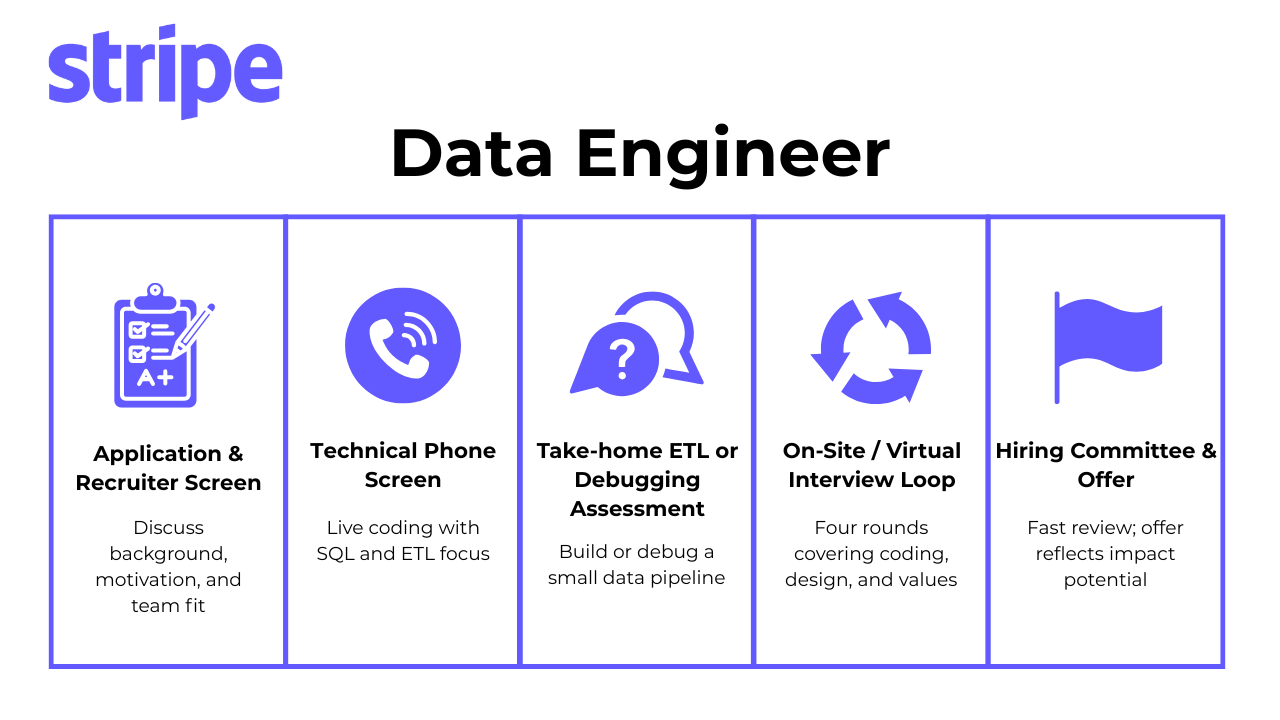

What is the interview process like for a data engineer role at Stripe?

The Stripe data engineer interview process is designed to evaluate your technical depth, problem-solving ability, and alignment with Stripe’s engineering values. Expect a fast-paced but thoughtful sequence of rounds, with detailed feedback and structured decision-making behind the scenes.

Application & recruiter screen

After submitting your application, a recruiter will reach out if your experience aligns with the role. In this stage, expect questions about your background in data engineering, familiarity with Stripe’s mission, and general motivation. Recruiters focus on whether you can communicate clearly, understand the business impact of data systems, and connect your experience to Stripe’s vision of building economic infrastructure for the internet. It is also a chance to clarify which team or domain within Stripe you would be most excited to join.

Tip: Prepare one concise story about a data project that delivered measurable results, such as improving reporting latency or reducing data incidents. Early evidence of ownership and impact helps you stand out from other candidates.

Technical phone screen (45 minutes)

This is a hands-on coding assessment over video call. You will be asked to write clean, efficient logic in Python or Scala and solve one to two problems involving SQL transformations, data structures, or simple ETL logic. Interviewers value code readability, logical structure, and communication while problem-solving more than clever shortcuts.

Tip: Talk through your reasoning as you code. Stripe engineers work in collaborative review settings, and verbalizing your logic shows how you think in real time.

Take-home ETL or debugging assessment

Candidates receive a 48-hour take-home task to either build a small data pipeline or debug a failing one. The prompt may involve cleaning malformed logs, processing nested JSON, or simulating a data pipeline failure. Stripe uses this round to assess your engineering rigor and documentation discipline. Reviewers pay attention to how you structure your code, justify trade-offs, and document assumptions.

For example, you might receive a dataset of payment transaction logs with missing timestamps and duplicate entries, along with a task to build an ETL pipeline that outputs a daily summary of total deposits per merchant. Your submission would need to include clear input validation, duplicate handling, and basic data-quality checks, such as ensuring timestamps are monotonic. Success here comes from treating the task like production work, writing test cases, explaining your design, and including simple logging or monitoring notes that show you understand operational reliability.
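As a rough illustration of the example task above, the sketch below validates input, drops duplicate transaction ids, and sums deposits per merchant per day. The field names (`id`, `merchant_id`, `created_at`, `amount`) and the rule that only positive amounts count as deposits are assumptions made for this example, not part of any real Stripe prompt.

```python
from collections import defaultdict
from datetime import date, datetime
from decimal import Decimal, InvalidOperation

REQUIRED_FIELDS = ("id", "merchant_id", "created_at", "amount")  # assumed schema


def summarize_daily_deposits(records):
    """Return (totals, rejected) for an iterable of raw transaction dicts.

    totals maps (merchant_id, date) -> Decimal sum of positive amounts.
    rejected is a list of (row, reason) pairs kept for a data-quality report.
    """
    totals = defaultdict(Decimal)
    rejected = []
    seen_ids = set()

    for row in records:
        # Input validation: every required field must be present and non-empty.
        if any(row.get(field) in (None, "") for field in REQUIRED_FIELDS):
            rejected.append((row, "missing field"))
            continue
        try:
            day = datetime.fromisoformat(str(row["created_at"])).date()
            amount = Decimal(str(row["amount"]))
        except (ValueError, InvalidOperation):
            rejected.append((row, "unparseable value"))
            continue
        # Duplicate handling: keep the first occurrence of each transaction id.
        if row["id"] in seen_ids:
            rejected.append((row, "duplicate id"))
            continue
        seen_ids.add(row["id"])
        # Only positive amounts count as deposits in this sketch.
        if amount > 0:
            totals[(row["merchant_id"], day)] += amount

    return dict(totals), rejected


sample = [
    {"id": "t1", "merchant_id": "m1", "created_at": "2026-01-01T09:00:00", "amount": "10.50"},
    {"id": "t1", "merchant_id": "m1", "created_at": "2026-01-01T09:00:00", "amount": "10.50"},
    {"id": "t2", "merchant_id": "m1", "created_at": "2026-01-01T17:30:00", "amount": "4.50"},
    {"id": "t3", "merchant_id": "m2", "created_at": None, "amount": "7.00"},
]
totals, rejected = summarize_daily_deposits(sample)
```

Returning the rejected rows with a reason, rather than silently dropping them, gives you material for the documentation and monitoring notes that reviewers look for.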

Tip: Aim for simplicity and clarity. A well-documented solution with sound reasoning will outperform an overly complex one that lacks explanation.

On-site or virtual final loop

The core of the Stripe data engineer interview happens during the final loop, which consists of four technical and behavioral interviews. This stage evaluates not only your technical expertise but also how you collaborate, make trade-offs, and communicate under ambiguity.

Coding round:

You will solve a data-focused problem in a collaborative environment, such as stream deduplication, time-windowed aggregations, or scalable joins. Interviewers are looking for correctness, efficiency, and clear thought processes.

Tip: Think aloud as you optimize. Explaining trade-offs between readability and performance shows maturity in engineering judgment.

Data modeling round:

You may be asked to design schemas for high-integrity systems such as payment ledgers, experimentation logs, or audit trails. The interviewer will probe how you reason about normalization, partitioning, and schema evolution. Candidates who succeed tend to discuss both short-term implementation details and long-term maintainability.

Tip: Walk through how your model would adapt as data volume scales or downstream requirements evolve, which shows strategic thinking.

System design round:

This interview explores end-to-end data architecture. You might be asked to design a real-time aggregation system for payment events or a pipeline that ingests global transaction logs with fault tolerance. Interviewers assess how you approach trade-offs between throughput, latency, and consistency.

Tip: Structure your explanation around inputs, processing, storage, and outputs. This keeps your reasoning systematic and easy to follow.

Values & culture fit interview:

In this round, you’ll discuss past experiences that reflect Stripe’s values, particularly ownership, rigor, and bias for action. Expect scenario-style questions about navigating ambiguity, aligning teams around data quality, or pushing for reliability improvements. Interviewers want to see that you approach disagreements constructively and take initiative even without explicit direction.

Tip: Use the STAR framework (Situation, Task, Action, Result) and end with what you learned or improved upon. Stripe values candidates who show both reflection and growth.

Hiring committee & offer

After your interviews, Stripe compiles written feedback from each interviewer within 24 hours. A hiring committee, including a bar-raiser, reviews your overall performance to determine level and fit. The focus here is on consistency—technical strength, clarity of communication, and alignment with Stripe’s principles.

If you pass the committee review, your recruiter will schedule a call to discuss the offer package. Stripe’s compensation typically includes a competitive base salary, annual bonus, and a significant equity component that vests over four years. For strong performers or senior candidates, refresh grants are also common after the first year.

When negotiating, research compensation bands for your level using public benchmarks like Levels.fyi and Glassdoor, and come prepared with evidence of your impact in past roles. Recruiters appreciate it when candidates articulate value confidently and factually rather than aggressively. If you have multiple offers, it’s acceptable to share your timelines and express genuine interest in Stripe while requesting clarity on total rewards.

Tip: Approach negotiation as a professional discussion, not a confrontation. Emphasize alignment with the role and long-term growth potential, which helps the recruiter advocate for your best package internally.

What questions are asked in a Stripe data engineer interview?

If you are prepping for a Stripe data engineer interview, expect questions that test system robustness, data correctness, and real-world tradeoffs, not just textbook pipelines. The scope spans three key areas: coding, system design, and behavioral questions.

Coding/Technical Questions

The Stripe data engineer role includes writing reliable jobs in production environments. These questions assess how you write code for streaming jobs, batch transformations, and observability mechanisms.

General Tips:

- Prioritize clarity over cleverness; Stripe values maintainable, production-ready logic.

- Narrate your thought process as you go; communication is part of the evaluation.

- Always test edge cases (empty datasets, nulls, or missing timestamps) before finalizing.

Typical tasks include writing an ETL pipeline, cleaning malformed records, or deduplicating real-time events. These questions often appear in both the technical screen and on-site coding rounds.

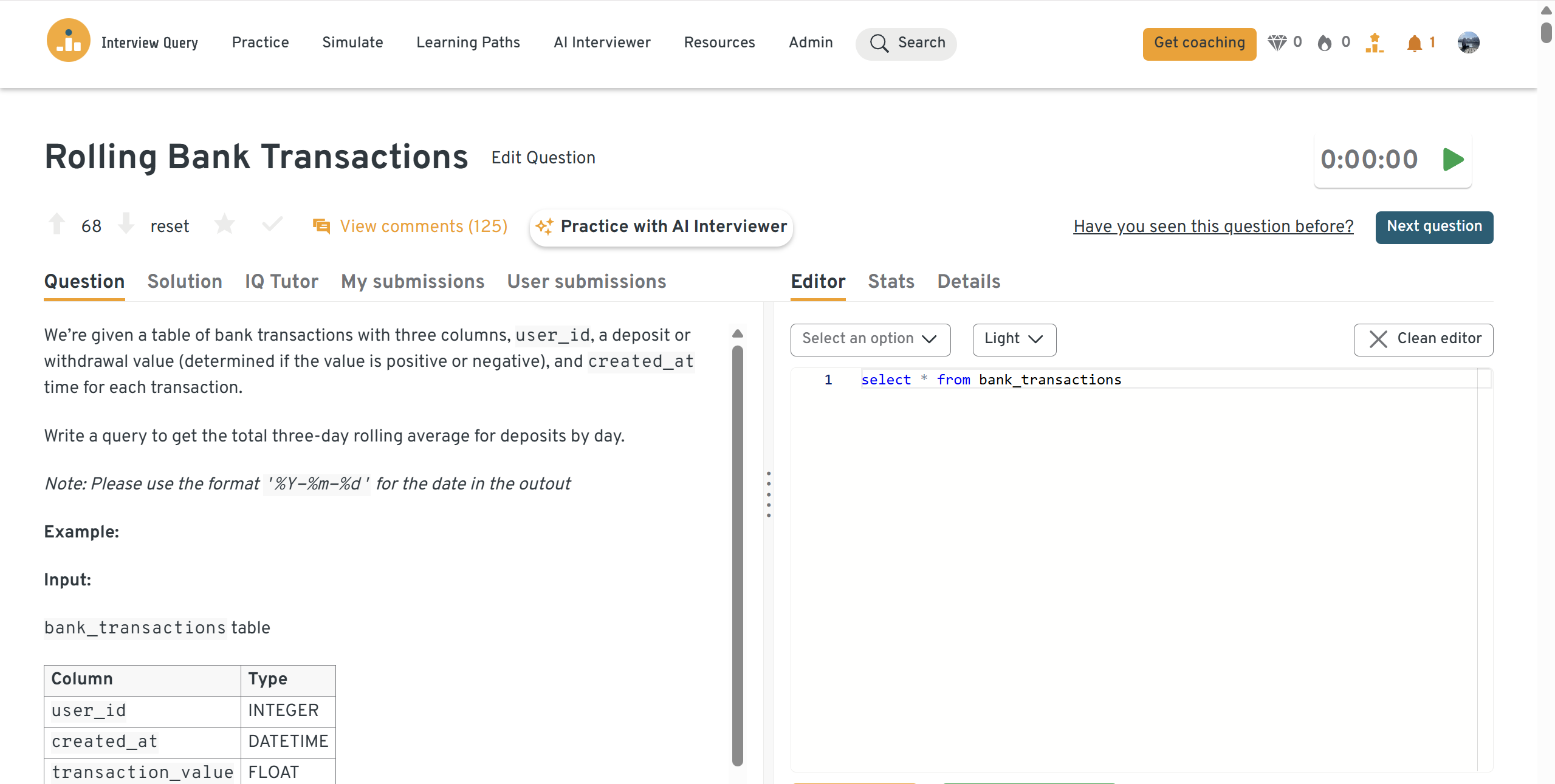

How would you calculate a three-day rolling average of daily deposits?

Stripe’s data engineers are expected to write window-function queries that run quickly yet remain readable. Explain how you’d filter positive `transaction_value` rows, bucket each event into its `%Y-%m-%d` date, aggregate each day’s deposits with `SUM(value)`, and average those daily totals over a three-day moving frame (no partitioning, ordered by date) to produce the rolling average. Mention edge-day handling, index support on `created_at`, and why casting timestamps only once inside a CTE keeps the scan efficient. Wrap up by noting how you’d automate validation with test fixtures in SQL-Lint.
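One possible shape for this query is sketched below, run against an in-memory SQLite database so it is easy to try locally. The `transactions` table layout and the sample rows are assumptions for illustration, and the `ROWS BETWEEN 2 PRECEDING` frame assumes every calendar day has at least one deposit; days with no rows would need a date spine first.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions ("
    "id INTEGER PRIMARY KEY, created_at TEXT, transaction_value REAL)"
)
conn.executemany(
    "INSERT INTO transactions (created_at, transaction_value) VALUES (?, ?)",
    [
        ("2026-01-01 09:00:00", 10.0),
        ("2026-01-01 15:00:00", 20.0),
        ("2026-01-02 10:00:00", 60.0),
        ("2026-01-02 11:00:00", -5.0),  # withdrawal, filtered out below
        ("2026-01-03 12:00:00", 90.0),
        ("2026-01-04 08:00:00", 30.0),
    ],
)

ROLLING_AVG_SQL = """
WITH daily AS (
    -- Cast the timestamp to a date once, and keep only deposits.
    SELECT strftime('%Y-%m-%d', created_at) AS dt,
           SUM(transaction_value)           AS total_deposits
    FROM transactions
    WHERE transaction_value > 0
    GROUP BY dt
)
SELECT dt,
       AVG(total_deposits) OVER (
           ORDER BY dt
           ROWS BETWEEN 2 PRECEDING AND CURRENT ROW
       ) AS rolling_three_day
FROM daily
ORDER BY dt
"""

rolling = conn.execute(ROLLING_AVG_SQL).fetchall()
for dt, avg in rolling:
    print(dt, avg)
```

Note the edge-day behavior: the first two dates average over one and two days respectively, which is worth stating out loud in the interview.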

How do you compute monthly cohort retention for each plan in the first three months after signup?

This problem checks fluency with cohorting logic. Describe generating a `start_month` column with `DATE_TRUNC('month', start_date)`, joining back to the table to track active subscriptions in months 1–3, and using `CASE WHEN end_date IS NULL OR end_date >= month_end THEN 1 END` to flag retention. Emphasize partitioning by `plan_id` and `start_month`, using `COUNT(DISTINCT user_id)` to avoid double-counting, and ordering results for dashboard-ready output.

How would you derive annual-plan retention from a payments fact table?

Explain how you’d group by `customer_id` and invoice year, create a lag to detect consecutive renewals, and compute churn versus retention percentages. Call out Stripe-specific realities such as prorations, refunds, and mid-cycle plan switches that must be filtered to avoid inflating retention.

Write a text-justification function that fits lines into a fixed width.

Although rare, Stripe sometimes includes algorithmic challenges to gauge code clarity. Outline splitting words, greedily packing lines until the next word would overflow, then distributing extra spaces with modular arithmetic. Highlight O(n) time, stable word order, and unit tests that cover single-word lines and trailing-space rules.
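The outline above can be sketched as follows. This is one common formulation, assuming no single word is longer than the target width and that the last line is left-justified; the function names are illustrative.

```python
def justify(words, width):
    """Greedily pack words into lines of exactly `width` characters."""
    lines, current, letters = [], [], 0
    for word in words:
        # len(current) is the minimum number of single spaces needed
        # between the words already on the line plus the new word.
        if current and letters + len(current) + len(word) > width:
            lines.append(_pad_line(current, letters, width))
            current, letters = [], 0
        current.append(word)
        letters += len(word)
    if current:
        # Last line: single spaces between words, padded on the right.
        lines.append(" ".join(current).ljust(width))
    return lines


def _pad_line(line_words, letters, width):
    """Distribute the spare spaces across the gaps, leftmost gaps first."""
    gaps = len(line_words) - 1
    if gaps == 0:
        # Single-word line: nothing to distribute, so pad on the right.
        return line_words[0].ljust(width)
    base, extra = divmod(width - letters, gaps)
    parts = []
    for i, word in enumerate(line_words[:-1]):
        # The first `extra` gaps receive one additional space.
        parts.append(word + " " * (base + (1 if i < extra else 0)))
    parts.append(line_words[-1])
    return "".join(parts)


justified = justify(
    ["This", "is", "an", "example", "of", "text", "justification."], 16
)
```

Each word is visited once while packing and once while padding, which is where the linear-time claim comes from.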

Return the last transaction of each day, sorted chronologically.

The simplest solution uses `ROW_NUMBER() OVER (PARTITION BY DATE(created_at) ORDER BY created_at DESC)` to grab rank = 1 per day. Stress why an index on `(created_at, id)` keeps the sort in memory, and how this pattern feeds nightly reconciliation jobs that ensure Stripe balances back to the penny.

Identify users whose every transaction occurs exactly ten seconds apart.

This anti-fraud query tests event-time reasoning. Show how you’d self-join on consecutive transactions, require `DATEDIFF(second, t1.created_at, t2.created_at) = 10`, aggregate counts, and ensure no gaps. Discuss window comparisons versus correlated sub-queries and how you’d index `(user_id, created_at)` to keep latency predictable.

Count unique calendar days each employee has logged project hours.

The task checks comfort with date normalization and group-bys. Convert timestamps to dates with `::date`, use `COUNT(DISTINCT work_date)` per `employee_id`, and sort ascending. Mention potential partition pruning if the table is date-sharded in BigQuery or Snowflake.

Find the maximum integer in a list, returning `None` when empty.

Even tiny utilities matter in data-pipeline glue code. Point out early-exit on `not nums`, use Python’s built-in `max()` for clarity, and mention typing with `Optional[int]` for robustness inside Stripe’s typed codebase.
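A minimal version of that utility might look like the following; the function name `find_max` is just a placeholder.

```python
from typing import Optional, Sequence


def find_max(nums: Sequence[int]) -> Optional[int]:
    """Return the largest integer in nums, or None when nums is empty."""
    if not nums:
        # Early exit: max() would raise ValueError on an empty sequence.
        return None
    return max(nums)
```

For example, `find_max([3, -1, 7])` returns `7` and `find_max([])` returns `None`.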

System/Data‑Model Design Questions

Design prompts are used to evaluate your architectural thinking. You may be asked to propose data models for financial systems, observability tooling, or experimentation logging. Expect to balance trade-offs across storage format, partitioning, performance, and downstream use.

General Tips:

- Think in layers: ingestion → processing → storage → serving.

- Explain how you’d handle growth (scalability) and change (schema evolution).

- Mention monitoring, data contracts, and cost management. Stripe prizes operational maturity.

Stripe loves candidates who think from first principles and weigh long-term maintainability.

Design an end-to-end ETL pipeline that ingests Stripe payment data into the analytics warehouse.

Stripe expects a layered answer: webhooks or event streams land raw JSON into an S3-backed data lake; AWS Glue or Airflow stage incremental loads into curated Parquet partitions; dbt models create fact and dimension tables in Snowflake; and Looker Explores power near-real-time dashboards. Discuss idempotent upserts keyed on `payment_intent_id`, schema-evolution handling, and GDPR deletion hooks.

Model the backend for an API-first payments platform (Swipe).

Cover tables for `api_keys`, `merchants`, `transactions`, and `webhook_endpoints`, with foreign-key enforcement and rotated-key history. Address rate-limiting metrics, shard strategy on `merchant_id`, encrypted column storage, and audit logging for PCI compliance.

Track historical address changes without losing referential integrity.

Propose a slowly-changing-dimension table (`customer_address_history`) storing `valid_from`/`valid_to`, linked to `customer_id`. Use surrogate `address_id` keys so past orders retain their original geography even after customers move and update their profiles.

Serve hourly, daily, and weekly active-user metrics that refresh every hour.

Lay out a Spark Structured Streaming job that writes incremental HyperLogLog (HLL) sketches per user bucket, materializes them to a Delta table, and populates aggregate tables via dbt incremental models. Mention Z-ordering on `(event_date, event_type)` to speed time-range scans.

Design the core schema for a ride-sharing marketplace.

Detail separate `riders`, `drivers`, `rides`, and `payments` tables, plus geo-tiled indexes for pickup clustering. Explain how soft deletes and status enums allow live-trip tracking, and how you’d partition large `rides` fact tables by event month.

Architect an international e-commerce warehouse with vendor, inventory, and refund flows.

Your answer should span multi-region S3 buckets, CDC-based ingestion from OLTP MySQL into Redshift Spectrum, and dimensional models (`dim_vendor`, `fct_inventory_snapshot`, `fct_return`). Clarify how you’d handle currency conversions and daylight-saving edge cases.

Build a historical-feature store and pipeline for bicycle-rental demand forecasting.

Discuss ingesting station logs via Kafka, enriching with weather API pulls, storing in Iceberg tables, and exposing point-in-time feature materializations for model training in SageMaker. Cover data quality checks and drift alerts on station offline spikes.
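The point-in-time requirement is the part candidates most often hand-wave, so a small sketch can help. The idea is that each training row may only see feature values observed at or before its own timestamp. The data below (temperature readings and rental counts keyed by epoch seconds) is invented for illustration, and a real feature store would do this lookup inside the storage engine rather than in Python.

```python
from bisect import bisect_right


def as_of(history, ts):
    """Return the latest value in history observed at or before ts, else None.

    history is a list of (timestamp, value) pairs sorted by timestamp.
    """
    timestamps = [t for t, _ in history]
    idx = bisect_right(timestamps, ts)
    return history[idx - 1][1] if idx else None


def build_training_rows(labels, history):
    """Attach the point-in-time feature value to each (timestamp, label) pair."""
    return [(ts, as_of(history, ts), label) for ts, label in labels]


# Hypothetical temperature readings for one station, keyed by epoch seconds.
temperature = [(100, 12.0), (200, 15.5), (300, 9.0)]
# Hypothetical rental counts observed at each label timestamp.
rentals = [(50, 2), (150, 4), (200, 6), (320, 7)]

training_rows = build_training_rows(rentals, temperature)
```

The label at timestamp 50 gets no feature value because nothing had been observed yet, which is exactly the leakage guard a point-in-time join is meant to provide.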

Sketch YouTube-style recommendation architecture.

Break the system into candidate-generation (content-based, collaborative-filtering) and ranking layers, feature embeddings store, real-time user-event stream, and a Redis/BigTable online inference cache. Tie it back to Stripe use-cases like Smart-Retries prioritization.

Design a robust CSV-ingestion service for customer uploads.

Outline a presigned-URL upload flow to S3, Lambda-triggered schema inference, streaming parse into a staging table, data-quality rule engine, and Airflow-driven merge into analytics tables. Mention back-pressure controls to protect the analytic cluster.
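To make the streaming-parse and rule-engine steps concrete, here is a small sketch using only the standard library. The required columns and the three rules are hypothetical; in the design above, accepted rows would go to a staging table and rejected rows to an error report for the uploader.

```python
import csv
import io
from decimal import Decimal, InvalidOperation

REQUIRED_COLUMNS = ("customer_id", "amount", "currency")  # hypothetical upload schema


def validate_row(row):
    """Return a list of rule violations for one parsed CSV row."""
    errors = []
    if not (row.get("customer_id") or "").strip():
        errors.append("customer_id is empty")
    try:
        amount = Decimal((row.get("amount") or "").strip())
        if not amount.is_finite() or amount < 0:
            errors.append("amount must be a non-negative number")
    except InvalidOperation:
        errors.append("amount is not numeric")
    currency = (row.get("currency") or "").strip()
    if not (len(currency) == 3 and currency.isalpha() and currency.isupper()):
        errors.append("currency must be a 3-letter uppercase code")
    return errors


def parse_upload(stream):
    """Stream rows from a file-like object into accepted and rejected lists."""
    reader = csv.DictReader(stream)
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        # Fail fast on a bad header instead of rejecting every row.
        raise ValueError(f"missing required columns: {missing}")
    accepted, rejected = [], []
    for row in reader:
        errors = validate_row(row)
        if errors:
            rejected.append((reader.line_num, errors))
        else:
            accepted.append(row)
    return accepted, rejected


upload = io.StringIO(
    "customer_id,amount,currency\n"
    "c1,10.00,USD\n"
    ",5.00,USD\n"
    "c3,abc,usd\n"
)
accepted, rejected = parse_upload(upload)
```

Because `csv.DictReader` reads lazily from the stream, the same function works on a large file object without loading the whole upload into memory, although the two result lists here would need to be replaced by batched writes at real volumes.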

Behavioral/Culture Fit Questions

Stripe wants engineers who demonstrate ownership and understand business priorities. These questions focus on how you’ve handled trade-offs, pushed for best practices, or navigated ambiguous stakeholder requests. Interviewers assess whether you reflect Stripe’s values, especially ownership, rigor, and willingness to dive deep.

Describe a data project you worked on. What hurdles did you face and how did you overcome them?

Stripe wants to hear about real bottlenecks: flaky upstream APIs, skewed partitions, or back-pressure that broke SLAs. Highlight how you diagnosed root causes, aligned cross-team owners, and shipped an incremental fix that unblocked product launches or reduced incident pages.

Sample answer: I led a project to consolidate user billing data from multiple sources into a unified warehouse model. We hit major delays because an upstream API produced duplicate records that broke our ETL jobs. I implemented idempotent inserts, added a data-quality check in Airflow, and worked with the API team to fix pagination logic. Once resolved, our nightly load time dropped by 40% and incident alerts fell to zero for three months.

What are the most effective ways you’ve made data accessible to non-technical stakeholders?

Discuss translating warehouse tables into governed Looker explores, adding data contracts, or embedding lineage diagrams in Confluence. Emphasize how self-serve dashboards shortened decision cycles and let product or finance teams run ad-hoc queries without engineering tickets.

Sample answer: At my previous company, finance teams relied on weekly CSV exports for revenue reports, which slowed decisions. I partnered with analytics to define a certified dataset in Snowflake and built Looker dashboards with plain-language metrics and clear definitions. Within a month, 90% of recurring data requests became self-serve. It showed how good data modeling and documentation can unlock massive efficiency across teams.

How would you answer if your current manager were asked about your strengths and growth areas?

Show self-awareness and a learning mindset. Pair a spike (e.g., “I write clear, test-driven SQL pipelines”) with a genuine weakness you’re improving, such as delegating low-level tasks earlier, and illustrate concrete steps (mentorship, retros) you’ve taken.

Sample answer: My manager would describe me as detail-oriented and dependable—I build pipelines that rarely fail and prioritize data quality. One area I’ve worked to improve is delegating implementation earlier so I can focus on architecture and mentoring. I started documenting pipeline templates and running onboarding walkthroughs so newer engineers can contribute faster. It’s helped me scale my impact while growing as a technical lead.

Tell us about a time you struggled to communicate with stakeholders. How did you resolve it?

Stripe teams are global; misalignment is costly. Share a scenario where finance needed different granularity than engineering, explain the clash, then walk through how you prototyped a reconciliation view, looped in legal for PII guidance, and shipped a unified spec.

Sample answer: I once worked with a regional finance team that needed per-transaction data, while our pipeline only exposed monthly aggregates. Our discussions stalled until I proposed building a prototype reconciliation table with masked PII. This helped both sides visualize trade-offs in latency and compliance. After aligning on a shared schema, we automated daily reconciliations that met compliance standards without adding new dependencies.

Why do you want to work at Stripe?

Tie Stripe’s economic-infrastructure mission to your own experience (e.g., building ledger-quality data systems). Mention specific products, such as Billing and Radar, and how your skill set improves their reliability or unlocks new analytics use-cases.

Sample answer: I’ve always been drawn to systems that combine precision with scale, and Stripe embodies that balance. My background in financial data engineering—especially building reconciliation and fraud analytics pipelines—aligns closely with products like Billing and Radar. I admire Stripe’s focus on documentation and data trust, which matches how I work. This role feels like the right place to build impactful, high-integrity data systems at global scale.

Tip: When preparing for this section, focus on depth and authenticity rather than memorized answers. Use the STAR method (Situation, Task, Action, Result) to structure your stories, but make sure the emphasis is on your reasoning: why you made certain decisions and what you learned afterward. Stripe values engineers who combine technical skill with accountability and clarity of communication, so prioritize examples where you directly improved reliability, sped up a process, or empowered teams to make better data-driven decisions. End each story with reflection: what you learned, what you’d do differently, and how that experience shaped your current approach. This helps demonstrate growth and self-awareness, two qualities Stripe prizes in all engineering roles.

How to Prepare for a Data Engineer Role at Stripe

Cracking the Stripe data engineer interview means more than just solving SQL queries; it is about demonstrating end-to-end system thinking, data integrity, and clear communication under pressure. The steps below map directly to Stripe’s bar.

Drill Streaming Patterns

Stripe’s real-time systems process payment events at very high volume. Practise with tools like Apache Flink or Spark Structured Streaming, focusing on watermarking, windowed aggregations, and handling out-of-order data.

Tip: Be ready to state a concrete strategy for late events and exactly how you would tune watermark lag for a fraud or auth stream.
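If it helps to reason about watermarks without a cluster, the toy model below mimics the core idea in plain Python: the watermark trails the highest event time seen by a fixed lag, windows are finalized once the watermark passes their end, and anything arriving after that is routed to a late-events list. It is a simplified teaching sketch with integer timestamps, not how Flink or Spark implement watermarks internally.

```python
from collections import defaultdict


class TumblingWindowCounter:
    """Counts events per fixed-size window, using a watermark to close windows."""

    def __init__(self, window_size, allowed_lateness):
        self.window_size = window_size
        self.allowed_lateness = allowed_lateness
        self.max_event_time = None
        self.open_windows = defaultdict(int)  # window start -> running count
        self.closed_windows = {}              # window start -> final count
        self.late_events = []                 # arrived after their window closed

    def _watermark(self):
        # Watermark = highest event time seen so far minus the allowed lateness.
        if self.max_event_time is None:
            return float("-inf")
        return self.max_event_time - self.allowed_lateness

    def process(self, event_time):
        start = event_time - event_time % self.window_size
        if start + self.window_size <= self._watermark():
            # The window was already finalized; send the event to a side output.
            self.late_events.append(event_time)
            return
        self.open_windows[start] += 1
        if self.max_event_time is None or event_time > self.max_event_time:
            self.max_event_time = event_time
        # Finalize every open window whose end is at or below the new watermark.
        watermark = self._watermark()
        for window_start in sorted(self.open_windows):
            if window_start + self.window_size <= watermark:
                self.closed_windows[window_start] = self.open_windows.pop(window_start)


counter = TumblingWindowCounter(window_size=10, allowed_lateness=5)
for event_time in [1, 4, 12, 3, 17, 26, 8]:
    counter.process(event_time)
```

In this run the out-of-order event at time 3 is still counted because its window had not closed, while the event at time 8 arrives after the watermark has passed and lands in `late_events`. Raising `allowed_lateness` trades result latency for completeness, which is the tuning decision the tip asks you to articulate.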

Master SQL and Warehouse Optimization

Know how to tune Snowflake, BigQuery, or Redshift queries, including partitioning strategies, clustering columns, and query cost monitoring. Stripe values engineers who balance performance and budget.

Tip: Practise explaining why a specific table should be partitioned by date and clustered by merchant_id, then show the before-and-after runtime.

Practise Design Docs

Expect to walk through a one-pager on a hypothetical pipeline or architecture decision. Practise structuring your docs with clear diagrams, tradeoffs, and success metrics within 30 minutes using a simple SPEED outline.

Tip: End every doc with a rollback plan and an explicit test strategy so reviewers see operational thinking, not just design.

Mock Values Interview

Stripe’s culture prizes intellectual honesty, speed, and ownership. Craft stories that show initiative under uncertainty, how you ship fast without sacrificing accuracy, and how you de-risk changes.

Tip: Use STAR and finish each story with one sentence on what you changed in your process afterward.

Data Quality and Contracts

Payments data must be correct and auditable. Be ready to discuss schema evolution, idempotency, late-arriving events, and data contracts with upstream teams.

Tip: Memorize three ingest checks you always ship: row-count deltas against control totals, uniqueness on primary keys, and null thresholds on required columns.
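The three checks in the tip can be sketched as a single function that returns a list of failures. The parameter names and thresholds are illustrative assumptions; in practice these checks usually live in the orchestration or testing layer rather than in ad-hoc Python.

```python
def run_ingest_checks(rows, *, primary_key, required_columns,
                      control_row_count, max_row_delta=0.0, max_null_rate=0.0):
    """Return human-readable failures; an empty list means the batch passed."""
    failures = []

    # 1. Row-count delta against a control total supplied by the upstream system.
    delta = abs(len(rows) - control_row_count) / max(control_row_count, 1)
    if delta > max_row_delta:
        failures.append(
            f"row count {len(rows)} deviates from control total {control_row_count}"
        )

    # 2. Uniqueness on the primary key.
    keys = [row.get(primary_key) for row in rows]
    duplicates = len(keys) - len(set(keys))
    if duplicates:
        failures.append(f"{duplicates} duplicate value(s) in {primary_key}")

    # 3. Null thresholds on required columns.
    for column in required_columns:
        nulls = sum(1 for row in rows if row.get(column) is None)
        rate = nulls / len(rows) if rows else 0.0
        if rate > max_null_rate:
            failures.append(
                f"{column} null rate {rate:.0%} exceeds {max_null_rate:.0%}"
            )

    return failures


batch = [
    {"id": 1, "merchant_id": "m1", "amount": 500},
    {"id": 2, "merchant_id": None, "amount": 250},
    {"id": 2, "merchant_id": "m2", "amount": 250},
]
failures = run_ingest_checks(
    batch,
    primary_key="id",
    required_columns=["merchant_id", "amount"],
    control_row_count=3,
)
```

Collecting every failure before returning, instead of raising on the first one, makes the resulting alert more useful to whoever is on call.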

Observability and Incident Readiness

Strong answers include monitoring, alerting, lineage, and on-call playbooks. Interviewers look for pragmatic plans to detect and contain bad data quickly.

Tip: Describe the exact alerts you would configure for a new ETL job: SLA miss, spike in duplicate keys, and sudden drop in event volume.

Cost and Performance Management

Warehouses are powerful but not free. Show that you think about query cost, storage layout, and job scheduling to keep spend predictable.

Tip: Be ready to justify when to pre-materialize aggregates vs. compute on read, and how you would measure savings after the change.

FAQs

What Is the Average Salary for a Data Engineer at Stripe?

Stripe compensation is typically composed of base salary, annual bonus, and a significant equity component. While exact figures vary, Stripe data engineer salary bands increase noticeably by level (L3 to L5), with remote and SF-based roles offering slightly different multipliers. Highlight any infra wins or leadership impact during negotiation to improve your offer.

At Stripe, senior data engineers report total compensation (base + bonus + stock) between $306,000 and $459,000 USD, with a median base salary of around $193,000 USD (Glassdoor). For data infrastructure engineers (a closely related role), median total pay is about $253,000 USD (Glassdoor).

How Long Does the Stripe Data Engineer Interview Process Take?

The Stripe data engineer interview process usually spans 3–4 weeks, from recruiter screen to offer. To avoid delays, many candidates batch their interviews into a single week.

Are New Data Engineer Roles Posted on Interview Query?

Yes! Browse live openings and gain access to insider prep content, curated specifically for data engineers applying to Stripe and other top tech firms.

Can I negotiate my Stripe offer?

Yes. Stripe encourages transparent discussions around compensation. Come prepared with salary data from reliable benchmarks like Levels.fyi, clearly communicate your expectations, and highlight your impact from past roles. Recruiters respect data-driven negotiation and are open to adjusting base, equity, or signing bonuses within reason.

What are Stripe’s core engineering values, and how do they relate to data roles?

Stripe’s engineering culture emphasizes rigor, speed, and long-term thinking. For data engineers, this translates into building systems that are not just functional but also auditable, observable, and future-proof. Showing that you prioritize correctness and transparency over quick fixes aligns closely with the company’s values.

What tools and technologies should I review before the interview?

Refresh your knowledge of Python (pandas, typing, exception handling), SQL (window functions, CTEs), Airflow or Prefect for orchestration, and warehouse tools like Snowflake, BigQuery, or Redshift. For design interviews, understand event streaming (Kafka, Kinesis), cloud storage (S3, GCS), and monitoring systems such as Datadog or Prometheus.

How can I stand out during the behavioral interview?

Stripe values clarity, ownership, and reflection. Use structured answers that emphasize impact and learning. Share examples where you solved reliability issues, improved data quality, or streamlined workflows. Demonstrating that you can think critically, document decisions, and collaborate across teams will set you apart from other candidates.

Does Stripe expect data engineers to know machine learning?

Not directly. Stripe’s data engineers collaborate closely with data scientists and ML engineers but are not expected to build models. However, familiarity with ML data pipelines, feature stores, or model monitoring is valuable—especially for teams that support risk, fraud, or revenue forecasting systems.

What skills does Stripe look for in a data engineer?

Stripe looks for engineers who can design reliable, scalable, and well-documented data systems. Strong candidates combine SQL and Python proficiency with experience in orchestration tools like Airflow, streaming frameworks like Flink or Kafka, and modern warehouse technologies such as Snowflake or BigQuery. Communication and system-level reasoning are equally important, as Stripe expects engineers to own projects end to end.

Next Steps in Your Stripe Data Engineer Prep

Succeeding in the Stripe data engineer interview takes more than just solid code—it requires structured thinking, performance-aware design, and cultural alignment. By focusing your prep on streaming patterns, warehouse optimization, and system design fundamentals, you’ll be better equipped for each round.

Want to go further? Try mock interviews or the AI interview simulator to simulate real rounds. Explore our data engineering learning path to master concepts, or get inspired by success stories like Jeffrey Li, who used Interview Query to land his dream role.