Amazon Data Scientist Interview Guide (Process, Questions & Preparation Tips)

Introduction: Why the Amazon Data Scientist Interview Stands Out

Demand for data scientists is projected to grow much faster than average over the next decade, and Amazon’s data science role is evolving to match this need. Rather than focusing on traditional work like routine analytics or dashboards, the role blends innovative research with clear, impactful storytelling to turn metrics into decisions at one of the world’s most data-driven companies.

The Amazon data scientist interview reflects this breadth and complexity. While Google might drill deeper into theoretical ML concepts and Facebook might emphasize system design, Amazon’s interview process mirrors the multi-faceted role itself. Candidates move fluidly between SQL, statistical reasoning, and discussions of business impact in alignment with Amazon’s 16 Leadership Principles.

This guide outlines what to expect, how to prepare, and what distinguishes successful candidates.

What Does a Data Scientist Do at Amazon?

As an Amazon data scientist, you play a critical role at the intersection of data, experimentation, and large-scale business operations. Whether working on Alexa, Prime Video recommendations, or supply chain optimization, data scientists transform raw data into insights and deploy models that directly influence customer experience and operational efficiency.

Core responsibilities include:

- Designing and deploying machine learning models at scale: Developing predictive and optimization models that run on massive datasets across AWS infrastructure.

- Translating business questions into analytical solutions: Partnering with business leaders to identify key problems and frame them into measurable, data-driven objectives.

- Working with large datasets through experimentation and metrics: Conducting A/B tests, designing experiments, and defining success metrics to validate product and feature changes.

- Collaborating cross-functionally: Working with product managers, engineers, and business analysts to align analytical insights with real-world applications.

- Driving automation and operational efficiency: Building tools or dashboards to democratize data access and enable faster decision-making across teams.

Amazon’s scale and diversity of data make the role especially complex and rewarding. Data scientists are expected to be both hands-on builders and strategic thinkers, seamlessly blending experimentation with applied machine learning to deliver measurable business value.

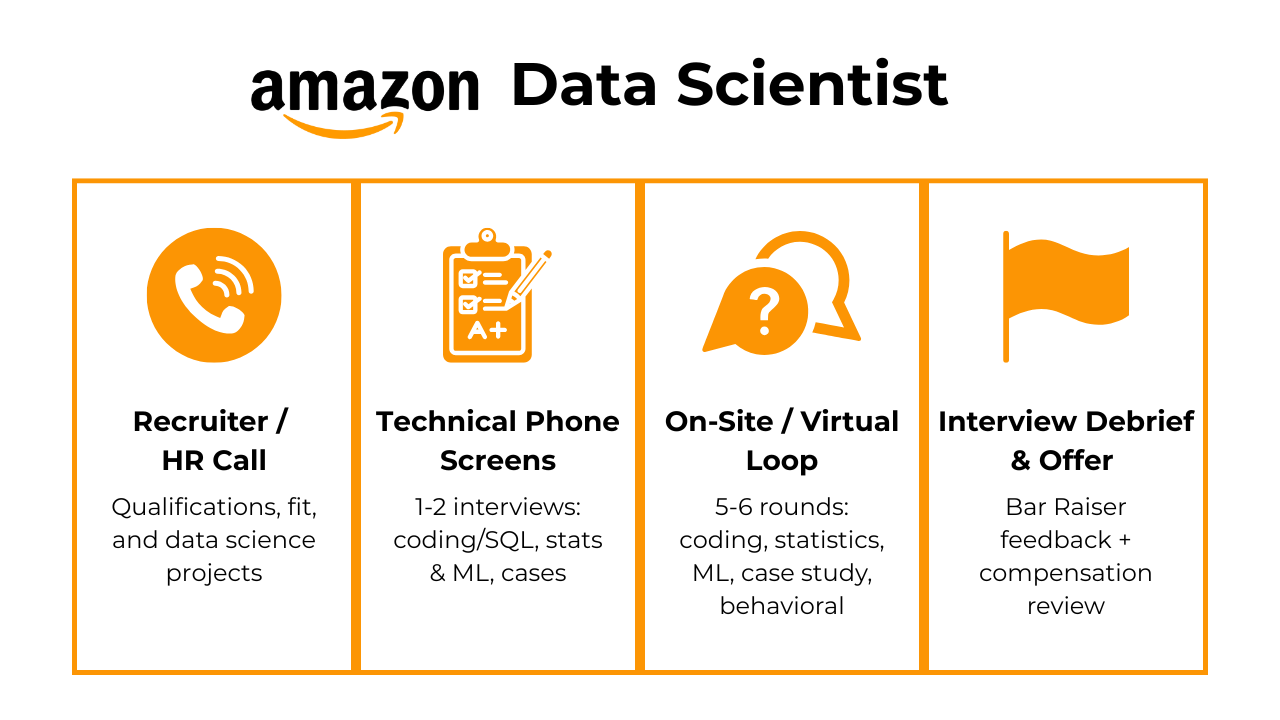

Amazon Data Scientist Interview Process Explained

Understanding the interview structure helps you prepare strategically as a data scientist candidate. Amazon’s process is thorough, designed to evaluate you from multiple angles over several weeks.

Recruiter Screening Call

This initial 30-minute conversation assesses basic qualifications and mutual fit. The recruiter will ask about your background, why you’re interested in Amazon, and walk through your resume. They’ll also explain the role, team, and next steps.

Come prepared with specific examples of data science projects you’ve led, questions about the team’s focus area, and a clear articulation of why Amazon appeals to you (beyond “it’s a big company”). This call also sets salary expectations—if there’s a major mismatch, it’s better to know now.

Tip: Instead of rehearsing a generic pitch, tailor your story around measurable outcomes by using metrics like model accuracy, revenue lift, or customer engagement gains.

Technical Phone Screen(s)

Most candidates face 1-2 technical screens, with each lasting 45-60 minutes. These typically combine:

Coding/SQL: SQL questions test your ability to extract insights from relational databases. Expect multi-table joins, window functions, and aggregations. The problems are practical, not LeetCode-style brainteasers: “Write a query to find the top 10 products by revenue growth over the last quarter.”

Tip: Review real-world problems using sample Amazon datasets and Interview Query’s SQL learning path. This helps build muscle memory for analytical reasoning under time pressure.
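To make the example prompt concrete, here is a minimal sketch run against an in-memory SQLite database from Python. The `orders` table, its columns, the quarter boundaries, and the decision to define growth as percent change over the prior quarter are all illustrative assumptions, not Amazon’s schema or an official answer. A CTE with conditional aggregation puts each product’s two quarterly totals in one row.

```python
import sqlite3

# Hypothetical schema: one row per order line, ISO-8601 date strings.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (product_id TEXT, order_date TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("p1", "2024-05-10", 100.0), ("p1", "2024-08-02", 150.0),
        ("p2", "2024-04-20", 200.0), ("p2", "2024-09-15", 220.0),
        ("p3", "2024-07-04", 500.0),                  # no prior-quarter sales
        ("p4", "2024-06-30", 80.0), ("p4", "2024-07-30", 40.0),
        ("p1", "2024-03-31", 999.0),                  # outside both quarters
    ],
)

TOP_PRODUCTS_BY_GROWTH = """
WITH quarterly AS (
    SELECT product_id,
           SUM(CASE WHEN order_date >= :cur_start THEN revenue ELSE 0 END) AS cur_rev,
           SUM(CASE WHEN order_date <  :cur_start THEN revenue ELSE 0 END) AS prev_rev
    FROM orders
    WHERE order_date >= :prev_start AND order_date < :cur_end
    GROUP BY product_id
)
SELECT product_id,
       ROUND(100.0 * (cur_rev - prev_rev) / prev_rev, 1) AS growth_pct
FROM quarterly
WHERE prev_rev > 0            -- percent growth is undefined without a baseline
ORDER BY growth_pct DESC
LIMIT :top_n
"""

params = {"prev_start": "2024-04-01", "cur_start": "2024-07-01",
          "cur_end": "2024-10-01", "top_n": 10}
top_products = conn.execute(TOP_PRODUCTS_BY_GROWTH, params).fetchall()
print(top_products)
```

In an interview, say out loud how you handle products with no prior-quarter revenue; excluding them, as here, is one defensible choice, and ranking by absolute growth is another.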

Statistics & ML fundamentals: Expect questions like “How would you detect if a coin is biased?” or “Explain how random forests work and when you’d use them versus logistic regression.” They’re testing both theoretical knowledge and practical judgment.

Tip: Practice explaining concepts aloud as if you’re teaching them, rather than focusing only on getting the answer right.
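One standard answer to the coin question is an exact binomial test of the null hypothesis that heads comes up with probability 0.5. The sketch below computes the two-sided p-value from first principles with `math.comb`; the 60-heads-in-100-flips figure is a hypothetical example. In practice, `scipy.stats.binomtest` does the same job.

```python
from math import comb

def coin_bias_p_value(heads: int, flips: int) -> float:
    """Exact two-sided binomial test of H0: P(heads) = 0.5."""
    # Probability of each possible head count under a fair coin.
    pmf = [comb(flips, k) * 0.5 ** flips for k in range(flips + 1)]
    lower_tail = sum(pmf[: heads + 1])  # P(X <= heads)
    upper_tail = sum(pmf[heads:])       # P(X >= heads)
    # Under p = 0.5 the distribution is symmetric, so double the smaller tail.
    return min(1.0, 2 * min(lower_tail, upper_tail))

p_value = coin_bias_p_value(heads=60, flips=100)
print(f"p-value = {p_value:.4f}")
```

With 60 heads in 100 flips the p-value is roughly 0.057, so at the 5% level you would not call the coin biased. That sets up a natural follow-up about how many flips you would need to detect a given bias.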

Case-style problems: You might get: “How would you measure the success of Amazon Prime Video’s recommendation system?” This evaluates structured thinking, metric design, and your ability to handle ambiguity.

Tip: Frame your answers using a repeatable structure (e.g., Objective → Approach → Metrics → Limitations) so interviewers can easily follow your logic.

The technical screen is your first major filter. Write clean, readable code and ask clarifying questions when needed.

On-Site/Virtual Interview Loop

The Amazon data scientist interview stages culminate in the on-site loop, typically 5-6 back-to-back interviews (45-60 minutes each). Each interviewer evaluates different competencies:

Coding Interview: This round involves algorithm and data structure problems similar to software engineering interviews, but calibrated for data scientists. You might implement a solution to parse logs, optimize a function, or solve a moderate-difficulty algorithm problem.

Tip: Focus on writing code that’s readable and modular. Adding short comments or function names that reflect intent can subtly convey clarity and professionalism.

Statistics & Probability Deep Dive: This goes beyond surface-level questions as interviewers want to see rigorous statistical thinking. Be ready to derive concepts from first principles, explain hypothesis testing nuances, work through Bayesian inference problems, or discuss experimental design challenges.

Tip: Try statistics and probability questions on Interview Query to practice walking through your reasoning step-by-step. Interviewers often value a transparent thought process even more than immediate accuracy.
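A typical Bayesian warm-up is a base-rate problem. The sketch below applies Bayes’ rule to a hypothetical fraud model; the 1% fraud rate, 95% detection rate, and 5% false-positive rate are made-up inputs chosen only to show the reasoning.

```python
def posterior_given_flag(prior: float, true_positive_rate: float,
                         false_positive_rate: float) -> float:
    """P(fraud | flagged) via Bayes' rule."""
    # Law of total probability: chance that any given order gets flagged.
    p_flag = true_positive_rate * prior + false_positive_rate * (1 - prior)
    return true_positive_rate * prior / p_flag

posterior = posterior_given_flag(prior=0.01, true_positive_rate=0.95,
                                 false_positive_rate=0.05)
print(f"P(fraud | flagged) = {posterior:.3f}")
```

Only about 16% of flagged orders would actually be fraudulent, because legitimate orders vastly outnumber fraudulent ones. Walking through that intuition matters as much as the final number.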

Machine Learning Interview: You’ll discuss ML algorithms in depth—how they work mathematically, their assumptions, when they fail, how to debug them. Questions might include: “How would you handle class imbalance?” or “Walk me through how gradient boosting works.” Be prepared to whiteboard model architectures and discuss trade-offs.

Tip: Instead of purely theoretical answers, draw from personal project examples by linking concepts to real outcomes.
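One common answer to the class-imbalance question is to reweight the loss so each class contributes equally in aggregate. The sketch below computes inverse-frequency weights with the formula scikit-learn documents for `class_weight="balanced"` (n_samples / (n_classes × class_count)); the 90/10 label split is hypothetical.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

labels = [0] * 90 + [1] * 10
weights = balanced_class_weights(labels)
print(weights)
```

Each minority example now counts nine times as much as a majority example, so both classes carry the same total weight (50 here). A good interview answer pairs this with an evaluation metric that is not dominated by the majority class, such as precision and recall on the minority class.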

Case Study / Product Sense: Given an Amazon-specific business scenario, you’ll need to structure an analytical approach, identify data sources, propose metrics, and discuss potential pitfalls. This tests your ability to think like a business partner, not just a technician.

Tip: Anchor your analysis in Amazon’s customer-first mindset. Show how your proposed metrics connect directly to improving user experience or long-term value.

Behavioral Interviews (Leadership Principles): Amazon dedicates substantial interview time to behavioral questions anchored in their Leadership Principles. You’ll answer questions like “Tell me about a time you had to make a decision with incomplete data” or “Describe a situation where you disagreed with your manager.”

Tip: Use the STAR (Situation, Task, Action, Result) framework, but emphasize the Result to reflect ownership of quantifiable outcomes and learnings.

Bar Raiser Interview: One interviewer is a “Bar Raiser,” referring to a specially trained evaluator from outside your target team whose job is to ensure Amazon doesn’t lower hiring standards. This person has veto power and typically probes harder on both technical depth and leadership principles.

Tip: Treat this as a holistic review. Stay composed, connect your experiences to Amazon’s principles naturally, and avoid sounding scripted or rehearsed.

Interview Debrief & Offer

After the on-site loop, interviewers meet to discuss your performance. They evaluate whether you meet the bar for the level you’re interviewing for (L4, L5, L6, etc.) and whether there’s consensus to move forward. The Bar Raiser’s opinion carries significant weight.

If you pass, you’ll receive an offer that includes base salary, sign-on bonus, and RSUs (Restricted Stock Units). Amazon’s compensation is back-loaded: years 1 and 2 include larger sign-on bonuses to offset minimal stock vesting, with more significant RSU vesting in years 3 and 4.

The entire process typically takes 4-8 weeks from initial screen to offer, though timelines vary. Staying responsive, asking thoughtful questions, and following up appropriately keeps momentum going.

Tip: Once the offer comes, evaluate the total compensation over a 4-year horizon. Consider comparing it against other industry salaries to determine whether it aligns with your long-term career goals.
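To evaluate an offer over a 4-year horizon, it helps to lay out compensation by year. The sketch below assumes the 5% / 15% / 40% / 40% RSU vesting schedule widely reported for Amazon, a flat base salary, and a constant stock price; the offer figures are hypothetical. Check the actual schedule in your offer letter.

```python
# Assumed RSU vesting schedule (widely reported for Amazon); verify against your offer.
VESTING_SCHEDULE = [0.05, 0.15, 0.40, 0.40]

def yearly_compensation(base, rsu_grant_value, sign_on_year1, sign_on_year2):
    """Return total compensation for each of the four years of an offer.

    Assumes base salary stays flat and the stock price does not move.
    """
    sign_on = [sign_on_year1, sign_on_year2, 0, 0]
    return [
        round(base + rsu_grant_value * vest + bonus)
        for vest, bonus in zip(VESTING_SCHEDULE, sign_on)
    ]

# Hypothetical offer: $170K base, $300K RSU grant, $60K + $45K sign-on bonuses.
by_year = yearly_compensation(170_000, 300_000, 60_000, 45_000)
print(by_year, sum(by_year))
```

The sign-on bonuses smooth out years 1 and 2, which is the back-loading described above. Rerunning the numbers with a lower or higher stock price shows how much of the package depends on years 3 and 4.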

Amazon Data Scientist Interview Questions

The Amazon data scientist interview evaluates candidates across multiple dimensions, including technical coding skills, business problem-solving, and behavioral alignment with the company’s Leadership Principles. You can expect a mix of SQL and programming challenges, case studies requiring data-driven decision-making, and behavioral questions designed to assess ownership, customer obsession, and cross-functional collaboration.

Below are key questions and answers to help you understand the structure and focus of each round.

Read more: Top 110 Data Scientist Interview Questions

Technical & Coding Round

This round is designed to measure both your technical proficiency and your ability to apply it to real-world data challenges. SQL questions test joins, window functions, and aggregations, while coding in Python, R, or Java covers data structures, algorithms, and scripting. Interviewers also test your grasp of statistics and probability, such as hypothesis testing. Finally, expect machine learning and modeling questions that cover feature engineering, evaluation metrics, and the trade-offs involved in selecting algorithms.

1. Find the manager with the largest team size using employee and manager data.

The problem checks your ability to work with hierarchical relationships, joins, and group-level aggregations. It’s meant to assess whether you can identify patterns in employee–manager structures by linking data within the same table. You can solve it by performing a self-join on the employee table using the manager_id, grouping by each manager, counting their direct reports, and returning the manager with the highest total.

Tip: Draw a small sample of employee–manager pairs before writing SQL, as it often clarifies the logic for grouping and counting correctly.
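A minimal sketch of the self-join, run against a toy in-memory SQLite table. The `employees` table and its rows are hypothetical; if the interview provides separate employee and manager tables, the same join, group, and count pattern applies across the two tables.

```python
import sqlite3

# Hypothetical single-table schema: each employee row points to its manager's id.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, manager_id INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [(1, "Ana", None), (2, "Ben", 1), (3, "Cho", 1),
     (4, "Dev", 2), (5, "Eli", 1), (6, "Fay", 2)],
)

LARGEST_TEAM = """
SELECT m.name AS manager, COUNT(e.id) AS team_size
FROM employees AS e                          -- e = the direct report
JOIN employees AS m ON e.manager_id = m.id   -- m = that report's manager
GROUP BY m.id, m.name
ORDER BY team_size DESC
LIMIT 1
"""
largest = conn.execute(LARGEST_TEAM).fetchone()
print(largest)
```

`LIMIT 1` returns an arbitrary winner when two managers tie; if ties matter, mention ranking the counts with `RANK()` and returning every manager ranked first.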

2. Generate a monthly report showing total customers, transactions, and order amounts.

Building this report demonstrates your grasp of data aggregation and time-series analysis. The goal is to see how effectively you can summarize trends over specific time periods to produce actionable insights. To complete it, filter transactions for the year 2020, group by month using functions like DATE_TRUNC() or EXTRACT(), and compute metrics such as total customers, transactions, and revenue with COUNT() and SUM().

Tip: Always validate that the date column is in the correct timezone and format before applying time-based grouping to prevent subtle reporting errors.
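The sketch below runs the report in SQLite, which has no `DATE_TRUNC()`, so `strftime('%m', ...)` stands in for month extraction; in PostgreSQL or Redshift you would use `DATE_TRUNC('month', created_at)` or `EXTRACT(MONTH FROM created_at)`. The `transactions` table and its rows are hypothetical. The year filter uses a half-open date range rather than wrapping the column in a function, which keeps the filter index-friendly.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (id INTEGER, user_id INTEGER, created_at TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?, ?)",
    [
        (1, 10, "2020-01-05 10:00:00", 20.0),
        (2, 11, "2020-01-20 12:00:00", 30.0),
        (3, 10, "2020-02-03 09:00:00", 15.5),
        (4, 12, "2019-12-31 23:00:00", 99.0),  # prior year, filtered out
    ],
)

MONTHLY_REPORT = """
SELECT CAST(strftime('%m', created_at) AS INTEGER) AS month,
       COUNT(DISTINCT user_id) AS num_customers,   -- unique customers per month
       COUNT(id)               AS num_orders,
       SUM(amount)             AS order_amt
FROM transactions
WHERE created_at >= '2020-01-01' AND created_at < '2021-01-01'
GROUP BY month
ORDER BY month
"""
report = conn.execute(MONTHLY_REPORT).fetchall()
print(report)
```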

3. Calculate the sample variance of a list of integers rounded to two decimal places.

Computing variance highlights your understanding of basic statistics and your ability to translate mathematical formulas into code. The question checks whether you can perform numerical computation step-by-step without relying on prebuilt functions. To solve it, calculate the mean, determine the squared deviations for each element, divide their sum by (n − 1) to get the sample variance, and round the final value to two decimal places.

Tip: Confirm whether the problem asks for sample or population variance; the only difference is the denominator (n − 1 versus n).
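A plain-Python sketch that follows the steps above without prebuilt variance functions; the input list is a made-up example. The standard library’s `statistics.variance` computes the same sample variance and makes a handy cross-check.

```python
def sample_variance(nums: list[int]) -> float:
    """Sample variance (divides by n - 1), rounded to two decimals."""
    n = len(nums)
    if n < 2:
        raise ValueError("sample variance needs at least two values")
    mean = sum(nums) / n
    # Sum of squared deviations from the mean.
    squared_deviations = sum((x - mean) ** 2 for x in nums)
    return round(squared_deviations / (n - 1), 2)

print(sample_variance([6, 7, 3, 9, 10, 15]))
```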

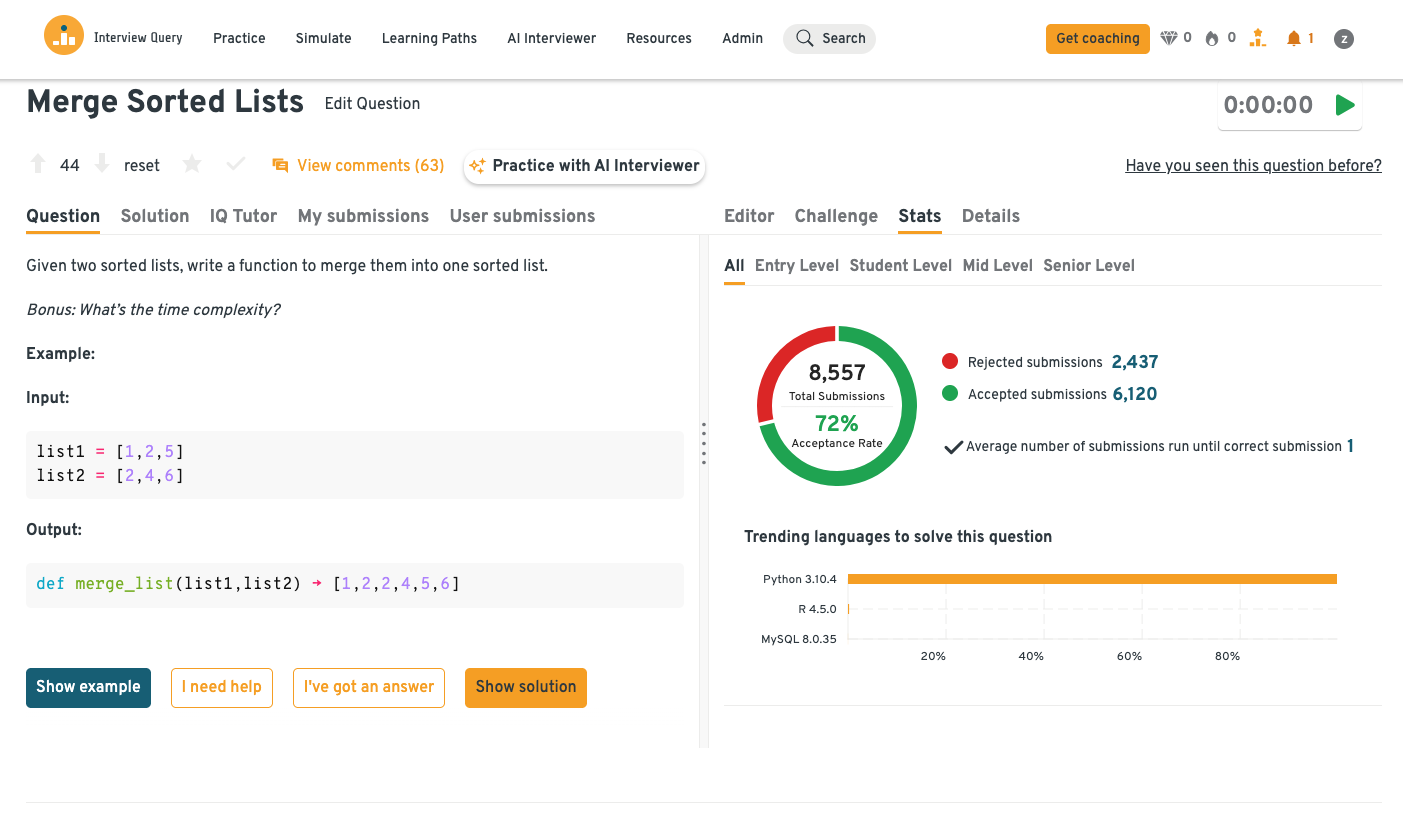

4. Merge two sorted lists into one sorted list and analyze the time complexity.

Merging sorted lists is a fundamental algorithm challenge that reveals how well you understand sorting logic and computational efficiency. The question examines your ability to combine data efficiently without performing a full re-sort. You can approach this with two pointers, each moving through one list, comparing current elements, and appending the smaller one to a new list until both are exhausted.

Tip: Practice merging lists of uneven sizes to get comfortable handling edge cases and confirming your loop conditions handle all elements cleanly.
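A two-pointer sketch in Python. Each loop iteration appends one element and advances one pointer, so merging lists of lengths n and m takes O(n + m) time and O(n + m) space for the output, versus O((n + m) log(n + m)) for concatenating and re-sorting.

```python
def merge_sorted(a: list[int], b: list[int]) -> list[int]:
    """Merge two ascending lists into one ascending list in O(n + m) time."""
    i = j = 0
    merged = []
    # Compare the current head of each list and take the smaller one.
    while i < len(a) and j < len(b):
        if a[i] <= b[j]:          # <= keeps the merge stable for equal values
            merged.append(a[i])
            i += 1
        else:
            merged.append(b[j])
            j += 1
    # At most one list still has elements, and they are already sorted.
    merged.extend(a[i:])
    merged.extend(b[j:])
    return merged

print(merge_sorted([1, 4, 9], [2, 3, 10, 11]))
```

The standard library’s `heapq.merge` implements the same idea lazily, which is worth mentioning once you have written it by hand.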

Head to the Interview Query dashboard to test your solution, explore hints from IQ Tutor, and review community comments to learn how other candidates optimized their approaches.

5. Implement logistic regression from scratch using gradient descent.

Implementing logistic regression manually tests both your mathematical intuition and coding discipline. The question measures how well you understand iterative optimization and how model weights adjust based on gradients. To build the model, initialize weights, apply the sigmoid function for predictions, calculate gradients from prediction errors, and update weights through gradient descent until loss convergence.

Tip: Track the loss value after each iteration to ensure your gradient descent is actually converging and not diverging.
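A minimal pure-Python sketch of batch gradient descent on the mean log-loss, recording the loss each epoch as the tip suggests. The learning rate, epoch count, tolerance, and toy dataset are arbitrary illustrative choices; a real answer would typically vectorize the updates with NumPy.

```python
import math

def sigmoid(z: float) -> float:
    # Branch on the sign of z so math.exp never overflows.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def train_logistic_regression(X, y, lr=0.1, epochs=1000, tol=1e-6):
    """Batch gradient descent on mean log-loss; returns weights, bias, loss history."""
    n, d = len(X), len(X[0])
    weights, bias = [0.0] * d, 0.0
    losses = []
    for _ in range(epochs):
        grad_w, grad_b, loss = [0.0] * d, 0.0, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(w * x for w, x in zip(weights, xi)) + bias)
            error = p - yi                     # gradient of log-loss w.r.t. the logit
            for k in range(d):
                grad_w[k] += error * xi[k]
            grad_b += error
            p = min(max(p, 1e-12), 1 - 1e-12)  # clip to avoid log(0)
            loss -= yi * math.log(p) + (1 - yi) * math.log(1 - p)
        losses.append(loss / n)
        # Step against the average gradient over the batch.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
        # Stop once the loss stops changing meaningfully.
        if len(losses) > 1 and abs(losses[-2] - losses[-1]) < tol:
            break
    return weights, bias, losses

def predict_proba(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

X = [[-2.0], [-1.0], [1.0], [2.0]]
y = [0, 0, 1, 1]
weights, bias, losses = train_logistic_regression(X, y)
print(weights, bias, losses[0], losses[-1])
```

Plotting `losses` is the quickest way to spot a learning rate that is too large: the curve oscillates or climbs instead of decreasing smoothly.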

Case Study & Business-Impact Questions

This section evaluates your ability to apply data science skills to real business problems. You’ll need to make strategic decisions, design experiments, and measure the impact of product or operational changes. Questions focus on understanding trade-offs, driving customer adoption, optimizing metrics, and translating analysis into actionable recommendations.

Decide whether to hire a Customer Success Manager or offer a free trial to drive customer adoption.

Evaluating business trade-offs in customer acquisition and retention is key in this scenario. It matters because hiring decisions versus scalable self-service solutions directly affect cost, conversion, and long-term engagement. A strong approach involves analyzing projected ROI of a Customer Success Manager compared to expected conversion lift from a free trial, segmenting customers, and considering retention effects.

Tip: Consider customer segments that might benefit most from personalized support versus those who convert well with a trial.

Identify and handle duplicate product listings in the Amazon catalog.

Handling data cleaning and entity resolution at scale is central to this challenge, because it improves customer experience, ensures accurate analytics, and maintains catalog quality. A practical method combines string similarity metrics, product attributes, and machine learning models to detect duplicates, followed by merging or flagging records while preserving key data.

Tip: Test your duplicate detection logic on smaller subsets first to refine thresholds before running it across millions of listings.
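A small sketch of the string-similarity piece using the standard library’s `difflib.SequenceMatcher`. The listing fields (`id`, `brand`, `title`), the normalization rules, and the 0.85 threshold are illustrative assumptions. Comparing every pair is O(n²), so at catalog scale you would first group listings by blocking keys and add more attributes and a learned matching model, as described above.

```python
from difflib import SequenceMatcher
from itertools import combinations

def normalize(title: str) -> str:
    """Lowercase, turn commas into spaces, and collapse whitespace."""
    return " ".join(title.lower().replace(",", " ").split())

def find_duplicate_pairs(listings, threshold=0.85):
    """Return (id_a, id_b, score) for same-brand listings with similar titles."""
    pairs = []
    for a, b in combinations(listings, 2):
        # Cheap attribute check first: only compare titles within the same brand.
        if a["brand"].lower() != b["brand"].lower():
            continue
        score = SequenceMatcher(None, normalize(a["title"]), normalize(b["title"])).ratio()
        if score >= threshold:
            pairs.append((a["id"], b["id"], round(score, 3)))
    return pairs

listings = [
    {"id": "A1", "brand": "Acme", "title": "Acme Wireless Mouse, Black"},
    {"id": "A2", "brand": "ACME", "title": "acme wireless mouse  black"},
    {"id": "A3", "brand": "Acme", "title": "Acme Mechanical Keyboard"},
    {"id": "B1", "brand": "Zeta", "title": "Acme Wireless Mouse, Black"},
]
print(find_duplicate_pairs(listings))
```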

Propose methods to improve recall for Amazon product search without changing the search algorithm.

Optimizing recall is critical because missing relevant products can reduce sales and frustrate users. Solutions include query preprocessing, synonym expansion, metadata enrichment, or post-processing re-ranking based on relevance signals. Explaining a structured approach highlights your ability to enhance business outcomes within operational limitations.

Tip: Alongside recall measured on labeled queries, track proxy metrics such as click-through rate and search abandonment to ensure your methods actually benefit users.
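The sketch below illustrates two pieces: synonym expansion as a query-preprocessing step that widens what an unchanged search engine can match, and the recall calculation used to check whether it helped. The synonym map, the generic boolean `AND`/`OR` output syntax, and the product IDs are hypothetical and not tied to any real Amazon search API.

```python
# Hypothetical synonym map; in practice it might be mined from query logs or curated.
SYNONYMS = {
    "sofa": ["couch", "settee"],
    "tv": ["television"],
    "earbuds": ["earphones", "in-ear headphones"],
}

def expand_query(query: str) -> str:
    """Rewrite a query so each term matches itself OR any listed synonym."""
    groups = []
    for term in query.lower().split():
        variants = [term] + SYNONYMS.get(term, [])
        # Quote multi-word synonyms so they are matched as phrases.
        quoted = [f'"{v}"' if " " in v else v for v in variants]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)

def recall(retrieved_ids, relevant_ids) -> float:
    """Share of relevant items that the search actually returned."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(relevant & set(retrieved_ids)) / len(relevant)

print(expand_query("Leather sofa"))
print(recall(["p1", "p2", "p9"], ["p1", "p2", "p3", "p4"]))
```

Expansion trades precision for recall, so in an interview it is worth noting that you would watch for irrelevant matches as well as recall gains.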

Measure the impact of integrating Amazon Prime Music with Alexa devices on subscription growth.

Analyzing the effects of product integrations requires both metric design and causal reasoning skills. This analysis is important because it informs marketing, product strategy, and retention planning. You can define key metrics such as subscriptions, engagement, and churn, compare pre- and post-integration data, and apply causal inference or A/B tests to quantify the effect. Framing the impact clearly demonstrates the ability to connect data analysis with business strategy.

Tip: Segment users by device type or usage behavior to identify which groups respond most strongly to the integration.
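When a randomized test isn’t available, one way to quantify the integration’s effect is difference-in-differences: compare the change among users exposed to the integration (for example, Alexa device owners) with the change among a comparable unexposed group. The sketch below uses tiny hypothetical cohorts; the estimate is only credible if both groups would have followed parallel trends without the integration.

```python
from statistics import mean

def diff_in_diff(outcomes):
    """Difference-in-differences estimate from per-user outcomes.

    `outcomes` maps (group, period) to a list of values, e.g. 1 if the user
    held a Prime Music subscription in that period and 0 otherwise.
    """
    treated_change = mean(outcomes[("treated", "post")]) - mean(outcomes[("treated", "pre")])
    control_change = mean(outcomes[("control", "post")]) - mean(outcomes[("control", "pre")])
    # The control group's change stands in for what would have happened anyway.
    return treated_change - control_change

outcomes = {
    ("treated", "pre"):  [1] * 1 + [0] * 9,   # Alexa owners, 10% subscribed
    ("treated", "post"): [1] * 3 + [0] * 7,   # 30% after the integration
    ("control", "pre"):  [1] * 1 + [0] * 9,   # non-owners, 10%
    ("control", "post"): [1] * 2 + [0] * 8,   # 20% (market-wide growth)
}
print(round(diff_in_diff(outcomes), 3))
```

Here the naive before/after lift for Alexa owners is 20 percentage points, but half of that also appears in the control group, so the estimated integration effect is 10 points.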

Design a pre-launch test for a new Amazon Prime Video show.

Evaluating how to run controlled pre-launch experiments tests your understanding of sampling, experiment design, and metric definition. The goal is to select a representative and unbiased set of 10,000 users that reflect the broader target audience while controlling for viewing behavior, demographics, and engagement patterns. A solid approach involves stratified sampling across relevant cohorts (e.g., genre preferences, watch frequency), tracking KPIs such as view rate, completion rate, and engagement lift, then comparing to a control group to estimate the show’s potential impact before a full rollout.

Tip: When selecting the test group, balance diversity and engagement level to avoid biasing results toward heavy users or specific content preferences.
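The sketch below shows proportional stratified sampling followed by a random test/control split. It is scaled down to 1,000 hypothetical users and a single stratification key (favorite genre); a real design would stratify on several cohorts, such as genre preference and watch frequency, and draw the full 10,000 users. Function and field names are illustrative.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(users, stratum_of, n_total, seed=42):
    """Sample n_total users, allocating to each stratum in proportion to its size.

    Per-stratum counts are rounded, so the total can drift by a user or two
    when the proportions do not divide evenly.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for user in users:
        strata[stratum_of(user)].append(user)
    sample = []
    for members in strata.values():
        k = round(n_total * len(members) / len(users))
        sample.extend(rng.sample(members, min(k, len(members))))
    return sample

def split_test_control(sample, seed=7):
    """Randomly split the sampled users into equal-sized test and control groups."""
    shuffled = sample[:]
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical population: 1,000 users tagged with their favorite genre.
users = ([{"id": i, "genre": "drama"} for i in range(600)]
         + [{"id": i, "genre": "comedy"} for i in range(600, 900)]
         + [{"id": i, "genre": "documentary"} for i in range(900, 1000)])
sample = stratified_sample(users, lambda u: u["genre"], n_total=100)
test_group, control_group = split_test_control(sample)
print(Counter(u["genre"] for u in sample), len(test_group), len(control_group))
```

The split here is random across the whole sample; with small samples you could instead split within each stratum to guarantee both arms have the same genre mix.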

Looking for a more detailed solution? In this video, Interview Query co-founder Jay Feng and senior data scientist Rahul Chaudhary walk through a framework for designing and evaluating pre-launch experiments on streaming platforms like Amazon Prime Video.

Watch it for a step-by-step breakdown of sampling strategies, metric tracking, and interpreting early performance signals.

Behavioral Interview & Amazon Leadership Principles

The behavioral interview evaluates how well you embody Amazon’s Leadership Principles, such as ownership, customer obsession, and bias for action. These questions test your ability to make decisions, communicate effectively, and reflect on your strengths and weaknesses while demonstrating real-world impact. Strong answers combine structured storytelling with measurable outcomes.

Explain why you want to work at Amazon and why you would be a good fit for the company.

Hiring managers want to see alignment between your motivations and Amazon’s mission. Demonstrating understanding of the company’s culture and goals helps you stand out.

Example answer: “I am drawn to Amazon’s culture of innovation and customer obsession. In my previous role, I led projects that improved customer retention using analytics, and I’m eager to bring the same mindset to Amazon to drive scalable impact.”

Tip: Share specific examples from your experience that clearly map to Amazon’s Leadership Principles to make your fit tangible.

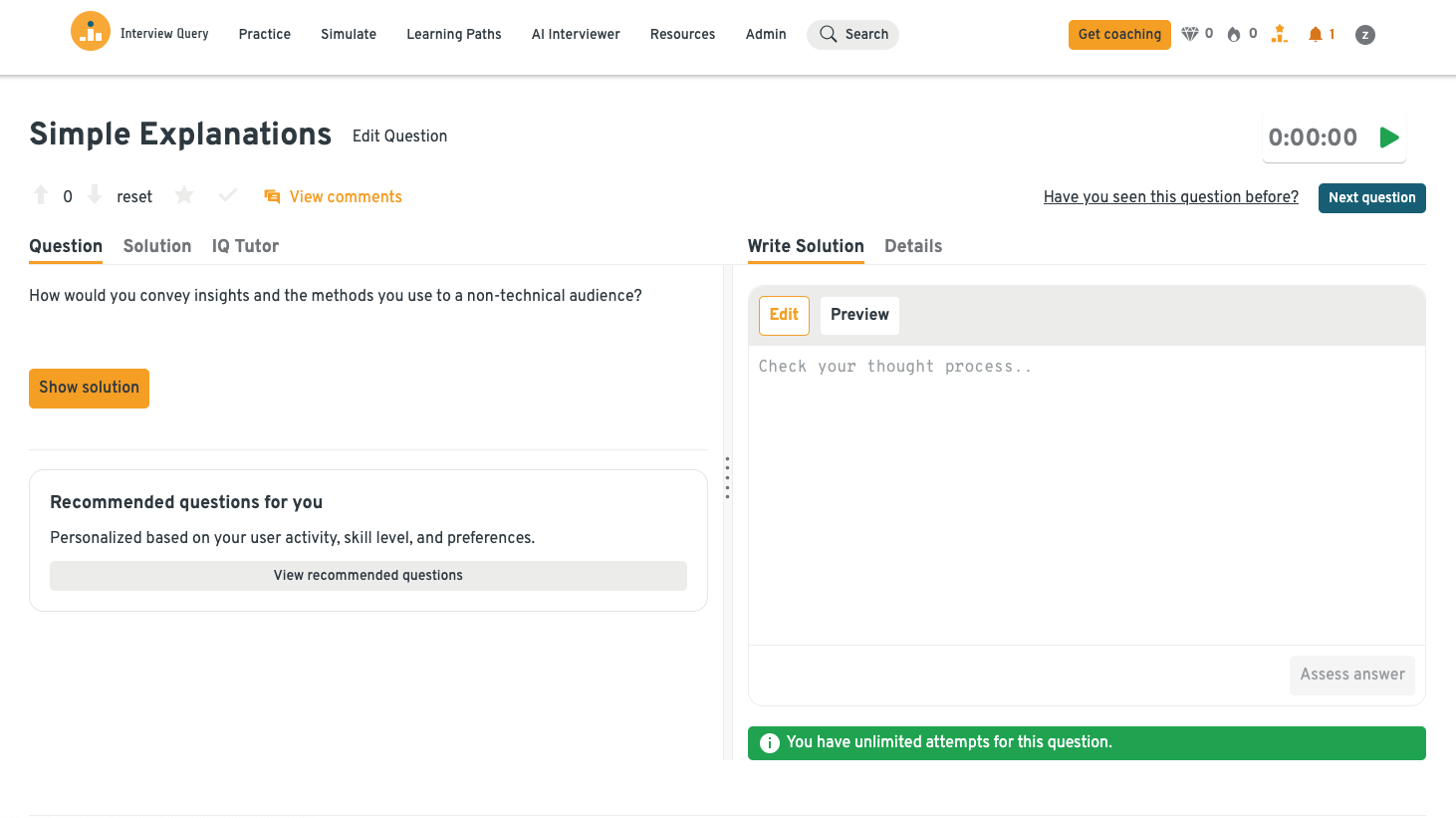

Explain how you communicate complex data insights to a non-technical audience.

Interviewers evaluate your ability to make technical findings understandable and actionable for diverse audiences. It is crucial because Amazon employees often collaborate with teams outside of analytics.

Example answer: “I focus on the main takeaway first and use visual charts to illustrate trends. For instance, when summarizing an A/B test, I highlighted the key effect on customer behavior and suggested actionable next steps, enabling the marketing team to implement changes confidently.”

Tip: Practice distilling complex analyses into one or two key points and use relatable analogies to simplify concepts.

Jump into the Interview Query dashboard to practice crafting concise, high-impact explanations and explore how other candidates approached similar behavioral prompts. You can also drill more behavioral questions across the site and use IQ Tutor for structured guidance.

Discuss your strengths and weaknesses and how they relate to your performance at Amazon.

Amazon values candidates who are reflective, aware of their capabilities, and actively improving themselves. Demonstrating self-awareness shows maturity and potential for growth.

Example answer: “One strength is quickly structuring ambiguous problems into actionable analyses, which helped my team make data-driven decisions efficiently. A weakness I’m addressing is over-involvement in details, so I’ve started using delegation and check-ins to ensure the team works independently while maintaining quality.”

Tip: Frame weaknesses as areas of growth and include concrete actions you’re taking to improve, showing progress and accountability.

Tell me about a time you took ownership of a challenging project at work.

Amazon looks for employees who proactively drive results and take accountability for outcomes. Ownership demonstrates initiative and the ability to influence results.

Example answer: “When recurring reporting errors slowed decision-making, I volunteered to overhaul the data pipeline. By implementing automated checks and redesigning the workflow, errors dropped dramatically and the team saved hours weekly.”

Tip: Highlight the direct impact of your ownership on the team or business and describe steps you took independently.

Describe a situation where you had to make a difficult decision with incomplete data.

Making decisions under uncertainty is a frequent expectation at Amazon, and interviewers want to see your judgment process. Being able to reason through partial information, while balancing risk and impact, is highly valued.

Example answer: “I needed to prioritize features for a product launch without complete usage data. I relied on proxy metrics and consulted stakeholders to make a recommendation, monitored early results closely, and adjusted accordingly, which led to strong adoption post-launch.”

Tip: Focus on explaining your reasoning process, the trade-offs you considered, and how you mitigated risk rather than only the result.

After exploring coding, case study, and behavioral questions on Interview Query, the next step is to focus on effective preparation strategies. The following section provides guidance on study plans, practice exercises, and tips for approaching each type of interview to maximize your performance and confidence.

How to Prepare: Timeline & Strategy

Structured preparation is the key to standing out in the Amazon Data Scientist interview process. The following 8-week plan builds from core technical fundamentals to full interview simulations, incorporating coding, case study, and behavioral practice. Following this roadmap helps ensure you develop both the analytical and business skills Amazon values.

| Focus Area | Key Tasks & Tips |
|---|---|
| Weeks 1-2: Fundamentals Refresh | - SQL: Multi-step queries, window functions, CTEs, complex aggregations; solve 3–4 problems/day. - Python: pandas, NumPy, function writing; 20–30 easy/medium LeetCode problems. - Statistics & Probability: Hypothesis testing, regression assumptions, derivations, MLE. - Machine Learning: Understand mechanics, implement 2–3 algorithms from scratch, explain trade-offs. |
| Weeks 3-4: Technical Application | - Integrated coding: Work with real datasets (Kaggle, AWS) combining logic and efficiency. - SQL escalation: Amazon-style problems with filters, aggregations, and window functions; practice timed solutions. - Case & ML modeling: Apply structured frameworks; practice churn reduction or product prediction cases. - Mock interviews: Simulate pressure, record yourself, focus on clear explanations. |
| Weeks 5-6: Business Context & Metrics | - Study Amazon segments: Prime, AWS, advertising, customer experience. - Metrics frameworks: North Star, input/output, leading/lagging indicators, metric trees. - Case study practice: Use “Working Backwards” framework; pattern-match with Amazon blog & AWS case studies. |
| Weeks 7-8: Behavioral Prep & STAR Stories | - Build STAR story bank (15–20 stories) aligned to Leadership Principles. - Practice delivery aloud, 2–3 min per story; anticipate follow-ups. |
| Ongoing / Full Loop: Mock Interviews & Debrief | - Schedule 4–5 hour full interview simulations. - Debrief after each session: track mistakes, clarify explanations, optimize STAR stories. - Iterate technical, case, and behavioral prep based on insights. |

By following this plan, you’ll systematically build technical fluency, business intuition, and behavioral readiness. Completing mock interviews and refining STAR stories ensures you can confidently demonstrate impact and judgment under pressure. With this foundation in place, the next step is to focus on optimizing your resume & overall profile in alignment with Amazon’s Data Scientist roles.

Resume & LinkedIn Optimization for Amazon Data Scientist Roles

Your resume and LinkedIn profile are your first impression—often the only opportunity to get past the recruiter screen. Optimize both by highlighting measurable impact, technical expertise, cross-functional collaboration, and alignment with Amazon’s culture.

Quantify Everything

Amazon is metrics-driven. Every bullet should demonstrate measurable impact or scope. Here are examples of how to transform weak statements into compelling evidence of impact:

| Weak Statement | Strong Statement |
|---|---|
| Built machine learning models to improve predictions | Developed gradient boosting model that reduced forecast error by 18%, enabling $2.3M reduction in excess inventory costs |
| Analyzed customer data to provide insights | Identified root causes of 23% churn increase through cohort analysis and survival modeling, informing product roadmap that recovered 15% of at-risk customers |
| Improved model performance | Increased recommendation CTR from 2.1% to 3.4% by implementing collaborative filtering with 40M+ user-item interactions, driving $800K incremental revenue quarterly |

Tip: Even if you cannot quantify outcomes, indicate scale: “Analyzed 50M transactions across 12 product categories.”

Highlight Technical Skills

Create a dedicated Technical Skills section near the top of your resume:

- Programming Languages: Python, SQL, R (proficiency levels)

- ML/Stats Tools: scikit-learn, TensorFlow, PyTorch, XGBoost, statsmodels

- Big Data & Cloud: Spark, AWS (SageMaker, Redshift, S3, EMR), Databricks, Airflow

- Databases: PostgreSQL, MySQL, Redshift, DynamoDB

- Visualization: Tableau, Matplotlib, Plotly, QuickSight

- Other: Git, Docker, A/B testing, experiment design

Include AWS experience if relevant, since Amazon uses these tools extensively.

Tip: Arrange skills by relevance to the specific Amazon team or role to increase recruiter visibility.

Showcase Cross-Functional Collaboration

Demonstrate ability to work across teams with examples like:

- Partnered with product managers and engineering teams to design a recommendation system serving 5M daily users, aligning across three teams over six months.

- Collaborated with finance and operations to develop an inventory optimization model, presenting findings to VP-level leadership.

Use action verbs that embody cross-functional stakeholder work: partnered, coordinated, aligned, influenced, presented.

Tip: Highlight how you influenced outcomes without formal authority, showing impact in a matrixed environment.

Align Language With Amazon Culture

Incorporate Leadership Principles subtly:

- Drove end-to-end ownership of churn prediction pipeline from ideation to production deployment (Ownership)

- Reduced model training time from 6 hours to 45 minutes (Invent and Simplify)

- Advocated for model interpretability despite pressure to optimize solely for accuracy, ensuring stakeholder trust (Have Backbone; Disagree and Commit)

- Identified critical data quality issue impacting 30% of predictions, preventing flawed deployment (Dive Deep)

Tip: Weave cultural alignment naturally into your bullet points without overloading them with buzzwords.

When your resume emphasizes quantified impact, technical depth, cross-functional experience, and cultural alignment, you dramatically increase your odds of landing that first recruiter call. Schedule a session with one of Interview Query’s coaches, who can share resume enhancement tips based on their industry expertise and experience with Amazon’s hiring process.

Salary, Levels & Career Growth for Amazon Data Scientists

Understanding Amazon’s compensation structure and career ladder helps you negotiate effectively and plan your career trajectory. Total compensation includes base salary, sign-on bonus, and RSUs.

Average Amazon Data Scientist Salary

Here’s what to expect based on recent data on Levels.fyi:

| Level | Total Comp. | Base Salary | RSUs | Sign-On Bonus |
|---|---|---|---|---|
| Data Scientist I (L4) | ~$180K | $140K | $34K | $13K |
| Data Scientist II (L5) | ~$270K | $170K | $87K | $14K |
| Senior Data Scientist (L6) | ~$430K | $200K | $214K | $16K |
| Principal Data Scientist (L7) | ~$630K | $240K | $352K | $40K (Negotiable) |

Notes & Insights:

- Total compensation varies by team, location, and negotiation.

- High-cost areas and high-demand teams (AWS, Alexa, Advertising) can see packages above these averages, especially at L6 and above.

- According to Business Insider, average total comp for Amazon data scientists is around $230,900.

- Geographic considerations: Base salaries adjust for cost of living, but RSU grants typically don’t. A Seattle-based L5 might earn $170K base while an Austin-based L5 earns $155K, but both might receive similar RSU grants of $87K-$100K.

Tip: When negotiating, focus not only on base salary but also on RSUs and sign-on bonuses, as these can significantly impact total compensation.


Negotiation Leverage

Amazon has room to negotiate, particularly on sign-on bonuses and RSU grants. Base salary is less flexible due to leveling bands, but sign-on bonuses can increase by $20K-$40K with competing offers. RSU grants also have flexibility, especially if you’re a strong candidate or have leverage.

To negotiate effectively:

- Use websites like Levels.fyi, Blind, and Glassdoor to benchmark offers

- Get competing offers from other FAANG/big tech companies

- Articulate your leveling rationale if you think you should come in higher

- Be prepared to walk away; desperation kills negotiation leverage

Tip: Remember that compensation data on these sites is self-reported and can skew toward higher earners, so calibrate expectations accordingly.

Career Progression Path

The typical Amazon data scientist career ladder looks like:

Data Scientist I (L4) → Data Scientist II (L5): This promotion usually takes 2-3 years and requires demonstrating technical competence, delivering end-to-end projects independently, and showing growth in business judgment. You’re expected to move from needing guidance to driving projects autonomously.

Data Scientist II (L5) → Senior Data Scientist (L6): Reaching L6 takes 3-5 years and represents a significant bar raise. You need to demonstrate technical depth, influence beyond your immediate team, and leadership and mentorship skills. Many strong L5s plateau here; L6 and beyond require not just doing excellent work, but shaping how others work.

Senior Data Scientist (L6) → Principal Data Scientist (L7): This is an inflection point in career progression. L7 roles are true technical leadership positions where you’re setting vision, defining standards, and influencing organization-wide decisions. Promotions to L7 are rare and require executive sponsorship. At this level, you choose between the IC (individual contributor) track or pivoting to management.

Alternative Path: People Management: At L5 or L6, you can transition to a Data Science Manager role (L6/L7), leading a team of 4-8 data scientists. Management requires different skills (hiring, performance management, stakeholder communication) and isn’t inherently “better” than IC roles since Amazon values both tracks.

Factors Influencing Progression Speed

Career growth at Amazon is based on impact and influence rather than time. Several factors accelerate or slow progression:

- Scope and complexity: Solving hard, ambiguous problems that matter to the business accelerates promotions. Optimizing existing models is valuable but won’t get you promoted as fast as building new capabilities.

- Visibility: Working on high-priority projects with executive attention creates more promotion opportunities than toiling in obscurity.

- Team and manager: Your manager’s effectiveness in advocating for you during promotion cycles matters enormously. Strong managers mentor you toward promotion; weak ones let you stagnate.

- Economic climate: During belt-tightening, promotions slow across Amazon. During growth phases, promotion bars may relax slightly.

Amazon’s promotion process runs on a twice-yearly review cycle in which managers nominate candidates and make their case to a promotion committee. You need demonstrated performance over multiple review cycles (typically 6-12 months minimum) before nomination.

Top Mistakes to Avoid in the Amazon Data Scientist Interview

Even strong candidates make avoidable mistakes that cost them offers. Here are the most common pitfalls and how to sidestep them.

Treating SQL as an Afterthought

As noted by DataLemur and numerous interview reports, weak SQL performance often eliminates otherwise strong candidates early in the process. Many candidates assume that basic SQL competency, such as writing simple SELECT statements, joins, and GROUP BY queries, is enough.

However, Amazon’s SQL questions test whether you can translate multi-step business logic into efficient queries under time pressure, including multi-table joins with filtering logic and window functions for ranking and running calculations.
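To make this concrete, here is a minimal sketch of a ranking-plus-running-total query, run against an in-memory SQLite database through Python’s built-in sqlite3 module. The daily_sales table and its rows are hypothetical, and SQLite stands in for Redshift/PostgreSQL here; window functions require SQLite 3.25 or later, which most current Python builds include.

```python
import sqlite3

# Hypothetical rows: (order_date, category, revenue).
ROWS = [
    ("2024-01-01", "books", 120.0),
    ("2024-01-02", "books", 80.0),
    ("2024-01-03", "books", 200.0),
    ("2024-01-01", "toys", 50.0),
    ("2024-01-02", "toys", 90.0),
]

QUERY = """
SELECT
    category,
    order_date,
    revenue,
    -- Rank days within each category by revenue (1 = highest).
    RANK() OVER (PARTITION BY category ORDER BY revenue DESC) AS revenue_rank,
    -- Running total of revenue within each category, in date order.
    SUM(revenue) OVER (
        PARTITION BY category
        ORDER BY order_date
        ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW
    ) AS running_revenue
FROM daily_sales
ORDER BY category, order_date;
"""


def run_window_query():
    """Load the hypothetical rows into memory and return the query result."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE daily_sales (order_date TEXT, category TEXT, revenue REAL)")
    conn.executemany("INSERT INTO daily_sales VALUES (?, ?, ?)", ROWS)
    rows = conn.execute(QUERY).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for row in run_window_query():
        print(row)
```

The PARTITION BY clause restarts both the rank and the running total for each category, which is the kind of multi-step logic a single GROUP BY cannot express.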

Tip: Solve 3-4 complex SQL problems daily on Interview Query, writing them in a plain text editor without autocomplete, until you can write each query confidently in under 20 minutes.

Prioritizing Theory Over Business Framing

Answering “How would you improve recommendation quality?” with purely technical approaches like “I’d try a neural collaborative filtering approach with embeddings” without discussing metrics, trade-offs, or business impact signals you’re a model-builder, not a problem-solver. Amazon wants to see that you understand how your technical work translates to customer value and business outcomes.

Tip: Structure every technical answer in this order: (1) clarify the business objective, (2) define success metrics, (3) propose a technical approach, and (4) discuss trade-offs.

Weak or Generic STAR Stories

Vague generalities like “I usually handle conflicts by communicating clearly” lack specificity, measurable outcomes, and a clear connection to your individual contributions. Likewise, saying “Our team built a recommendation system” doesn’t show what you specifically did, and interviewers will probe until they can separate your individual contribution from your team’s.

Tip: Write out 15-20 detailed STAR stories with specific metrics before your interview, then practice delivering them aloud in 2-3 minutes each, using “I” instead of “we” to clarify your role.

Poor Communication of ML Trade-offs

When asked “What model would you use?”, jumping straight to your favorite algorithm without considering alternatives, or failing to acknowledge its limitations, ignores the fact that every approach has weaknesses. Amazon wants to see that you’ve thought through multiple solutions, understand their relative strengths and limitations, and can make principled choices based on business context.

Tip: For every ML question, discuss at least two alternative approaches and explicitly state the trade-offs. For example: “Option A offers interpretability but may sacrifice accuracy, while Option B handles non-linearity better but requires more data.”

FAQs

What coding topics should I prioritize for an Amazon data scientist interview?

Focus on data manipulation with Python (pandas, numpy) and SQL as your top priorities, since these appear in nearly every Amazon data scientist interview. For algorithms, prioritize arrays, hashmaps, string manipulation, and basic recursion at LeetCode Easy/Medium difficulty.
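As an illustration of the hashmap pattern at LeetCode Easy level, here is a sketch of the classic “two sum” problem: find two indices whose values add up to a target. It is a generic practice problem, not a confirmed Amazon question. A single pass with a dictionary of previously seen values replaces the brute-force O(n²) pair scan with an O(n) lookup.

```python
from typing import Dict, List, Optional, Tuple


def two_sum(nums: List[int], target: int) -> Optional[Tuple[int, int]]:
    """Return indices (i, j), i < j, with nums[i] + nums[j] == target, or None."""
    seen: Dict[int, int] = {}  # value -> index where it was first seen
    for j, value in enumerate(nums):
        complement = target - value
        if complement in seen:
            # The complement appeared earlier, so its index is the smaller one.
            return seen[complement], j
        # Keep only the first occurrence so earlier indices win.
        if value not in seen:
            seen[value] = j
    return None


print(two_sum([2, 7, 11, 15], 9))  # → (0, 1)
```

In an interview, stating the time/space trade-off (O(n) time in exchange for O(n) extra memory) is as important as the code itself.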

How many rounds does the Amazon data scientist interview have?

The Amazon data scientist interview process typically includes 1-2 technical phone screens (45-60 minutes each) followed by an on-site or virtual loop with 5-6 interviews (45-60 minutes each). The on-site loop usually consists of: one coding interview, one statistics/probability deep dive, one machine learning interview, one case study/product sense interview, and 1-2 behavioral interviews focused on Leadership Principles.

Does Amazon emphasize machine learning theory or practical implementation more?

Amazon balances both, but leans toward practical implementation and business judgment over pure theory. You need to understand ML algorithms deeply enough to explain how they work, when they fail, and their trade-offs, but interviewers care more about whether you can apply them to solve real business problems. Expect questions like “How would you handle class imbalance in this fraud detection scenario?” rather than “Derive the gradient descent update rule from first principles.”
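One common answer to the class-imbalance question is to reweight the loss so the rare class counts more. The sketch below computes inverse-frequency weights using the same formula behind scikit-learn’s class_weight="balanced" option (n_samples / (n_classes * count of each class)); the 2% fraud rate is a hypothetical example. Resampling and tuning the decision threshold are alternatives worth raising as trade-offs.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Inverse-frequency class weights: n_samples / (n_classes * count_of_class).

    Mirrors the formula behind scikit-learn's class_weight="balanced".
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical fraud labels: 1 = fraud (2%), 0 = legitimate (98%).
labels = [1] * 2 + [0] * 98
weights = balanced_class_weights(labels)
print({cls: round(w, 3) for cls, w in weights.items()})  # → {1: 25.0, 0: 0.51}
```

With these weights, the 2 fraud cases and the 98 legitimate cases each contribute the same total weight (50), so always predicting “legitimate” no longer looks nearly optimal to the loss function.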

What SQL dialect / complexity should I expect?

Amazon primarily uses SQL dialects similar to PostgreSQL or Redshift, so focus on standard ANSI SQL that works across platforms. Expect high complexity: multi-table joins with filtering conditions, common table expressions (CTEs) and window functions for breaking down multi-step logic, date arithmetic for cohort analysis, and aggregations with CASE statements.
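The sketch below combines several of these patterns (a CTE, date arithmetic, and CASE inside an aggregate) into a month-1 retention query by first-order cohort. It runs on SQLite via Python’s sqlite3 module, so the date handling uses SQLite’s strftime; Redshift or PostgreSQL would more typically use DATE_TRUNC or their own date functions. The orders table and its rows are hypothetical.

```python
import sqlite3

# Hypothetical order history: (user_id, order_date as ISO-8601 text).
ORDERS = [
    (1, "2024-01-05"), (1, "2024-02-10"),  # Jan cohort, returns in month 1
    (2, "2024-01-20"),                     # Jan cohort, never returns
    (3, "2024-02-03"), (3, "2024-03-15"),  # Feb cohort, returns in month 1
    (4, "2024-02-25"), (4, "2024-04-01"),  # Feb cohort, returns only in month 2
    (5, "2023-12-30"), (5, "2024-01-02"),  # Dec cohort, crosses a year boundary
]

COHORT_QUERY = """
WITH first_orders AS (
    -- Each user's cohort is defined by their first order date.
    SELECT user_id, MIN(order_date) AS first_date
    FROM orders
    GROUP BY user_id
),
activity AS (
    SELECT
        o.user_id,
        strftime('%Y-%m', f.first_date) AS cohort_month,
        -- Whole-month offset between this order and the user's first order.
        (CAST(strftime('%Y', o.order_date) AS INTEGER)
           - CAST(strftime('%Y', f.first_date) AS INTEGER)) * 12
        + (CAST(strftime('%m', o.order_date) AS INTEGER)
           - CAST(strftime('%m', f.first_date) AS INTEGER)) AS month_offset
    FROM orders AS o
    JOIN first_orders AS f ON o.user_id = f.user_id
)
SELECT
    cohort_month,
    COUNT(DISTINCT user_id) AS cohort_size,
    -- CASE yields NULL for non-matching rows, and COUNT ignores NULLs.
    COUNT(DISTINCT CASE WHEN month_offset = 1 THEN user_id END) AS retained_m1,
    ROUND(1.0 * COUNT(DISTINCT CASE WHEN month_offset = 1 THEN user_id END)
          / COUNT(DISTINCT user_id), 2) AS retention_m1
FROM activity
GROUP BY cohort_month
ORDER BY cohort_month;
"""


def run_cohort_query():
    """Load the hypothetical orders into memory and return cohort retention rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (user_id INTEGER, order_date TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", ORDERS)
    rows = conn.execute(COHORT_QUERY).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for row in run_cohort_query():
        print(row)
```

The December 2023 user shows why the month offset is computed from years and months together: subtracting month numbers alone would treat December to January as -11 rather than 1.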

How important are the Leadership Principles compared to the technical rounds?

Leadership Principles carry as much weight as technical performance. You can fail the interview by acing the technical rounds but bombing the behavioral questions, or vice versa. Amazon evaluates every candidate against its 16 Leadership Principles through dedicated behavioral interviews, and the Bar Raiser specifically assesses cultural fit alongside technical competency.

How can I show business impact in my answers for the data scientist interview?

Always connect technical work to measurable business outcomes using specific numbers. Instead of “I built a recommendation model,” say “I built a collaborative filtering model that increased CTR from 2.1% to 3.4%, driving $800K in incremental quarterly revenue.”
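When you quote a change like the CTR example above, say whether you mean percentage points or relative lift. A quick sketch of the arithmetic, using the example’s 2.1% and 3.4% figures (the helper name lift is hypothetical):

```python
from typing import Tuple


def lift(baseline_pct: float, new_pct: float) -> Tuple[float, float]:
    """Return (absolute change in percentage points, relative change in percent)."""
    if baseline_pct == 0:
        raise ValueError("baseline must be non-zero for a relative lift")
    absolute_pp = new_pct - baseline_pct
    relative_pct = absolute_pp / baseline_pct * 100
    return absolute_pp, relative_pct


abs_pp, rel_pct = lift(2.1, 3.4)
print(f"CTR +{abs_pp:.1f} pp ({rel_pct:.0f}% relative lift)")  # → CTR +1.3 pp (62% relative lift)
```

Both numbers describe the same change, but “+1.3 percentage points” and “+62%” sound very different to a stakeholder, so name the one you mean.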

Structure answers to lead with business context before technical details: clarify the business objective, define success metrics, propose a technical approach, then discuss trade-offs and implementation.

What salary should I expect for a Data Scientist at Amazon?

Amazon data scientist salary varies by level. L4 (entry-level) starts at ~$180K total compensation, while L5 (experienced) ranges from $220K-$270K. L6 (senior) averages ~$430K in total compensation, including base salary, sign-on bonuses, and RSUs. There’s typically room to negotiate sign-on bonuses and RSU grants if you have competing offers.

How do I prepare for the case-study / metrics design part of the interview?

Practice structuring ambiguous business problems by following a consistent framework:

- Ask clarifying questions about business objectives and constraints

- Define success metrics and how you’d measure them

- Identify data sources and potential analyses

- Discuss trade-offs and limitations

- Propose next steps

Study Amazon’s business model across different segments (retail, AWS, Prime, advertising) so you can speak intelligently about relevant metrics like customer lifetime value, conversion rate, or operational efficiency.
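A minimal sketch of two of these metrics, using simplified textbook definitions: conversion rate as orders divided by sessions, and customer lifetime value as average order value * orders per year * gross margin * expected customer lifespan in years, with no discounting. The formulas and all input numbers are illustrative assumptions, not Amazon’s internal definitions; in a case interview, stating such definitions explicitly is part of defining success metrics.

```python
def conversion_rate(orders: int, sessions: int) -> float:
    """Share of sessions that end in an order."""
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    return orders / sessions


def simple_clv(avg_order_value: float, orders_per_year: float,
               gross_margin: float, expected_years: float) -> float:
    """Simplified, undiscounted CLV: AOV * purchase frequency * margin * lifespan."""
    return avg_order_value * orders_per_year * gross_margin * expected_years


# Hypothetical inputs for illustration only.
print(f"Conversion rate: {conversion_rate(1_200, 40_000):.1%}")  # → Conversion rate: 3.0%
print(f"Simple CLV: ${simple_clv(50.0, 6, 0.25, 3):.2f}")       # → Simple CLV: $225.00
```

A natural follow-up in the interview is which of these inputs a proposed change would move, and how you would measure that movement.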

Conclusion & Next Steps

The Amazon data scientist interview is demanding by design, but that’s precisely why landing a role feels so rewarding. After all, you’re entering an organization where data drives decisions and your work directly impacts millions of customers’ experiences with Amazon’s products and services.

Interview Query offers targeted resources for Amazon data scientist prep. Beyond exploring real-world interview questions across topics and rounds, build fluency with Amazon-style SQL practice problems through the 14 Days of SQL study plan. As you practice for the full loop, try Interview Query’s Mock Interviews to simulate real interview pressure with timed practice sessions.

Lastly, learn from Nick Bermingham’s success story and be inspired to land a role at Amazon through strategy, structure, and collaboration.