Amazon Data Engineer Interview: Inside the SQL, ETL, and Architecture Rounds

Introduction

Every time you stream a show on Prime Video, reorder a favorite item, or check delivery updates in real time, you’re interacting with the work of Amazon’s data engineers. Behind every customer experience lies a network of pipelines, tables, and systems that move over 160 petabytes of data in a single day during peak events like Prime Day. The Amazon data engineer interview is designed to find the people who can build, scale, and protect those systems and create the backbone of one of the world’s largest data ecosystems.

This guide walks you through everything you need to prepare for the Amazon data engineer interview. You’ll learn what the role entails, what each interview stage covers, and how to approach real Amazon data engineer interview questions with structure and confidence.

What does an Amazon data engineer do?

A data engineer at Amazon designs and maintains the systems that power every key decision across the business. The role bridges software engineering, analytics, and cloud architecture — transforming raw, unstructured data into reliable infrastructure that drives scale.

- Build and automate ETL pipelines using AWS Glue, EMR, or Spark

- Design efficient schemas and data models for Redshift, DynamoDB, or Aurora

- Manage streaming systems on Kinesis, with S3 as the durable landing layer, for real-time processing

- Implement monitoring, alerting, and testing frameworks for data reliability

- Partner with scientists, analysts, and engineers to deliver high-quality insights

Amazon data engineers are known for their ownership mindset. They build, deploy, and operate their own systems, ensuring performance and reliability even under the pressure of global scale. The work demands both precision and creativity, reflecting a culture built around accountability, curiosity, and continuous improvement.

Why this role at Amazon

Amazon’s reach gives data engineers an opportunity unlike any other — to design systems that influence how the world shops, streams, and interacts online. You’ll work with some of the most advanced data tools and cloud infrastructure on the planet, solving problems that rarely exist anywhere else. The experience develops a unique blend of architectural thinking and hands-on technical skill, all within a company where ownership is real and innovation never stops. For engineers who want to learn fast, build at scale, and see their work impact millions, this is where it happens.

If you’re exploring multiple roles across the company, our full Amazon interview guide breaks down what different teams test for and how Amazon evaluates candidates across functions.

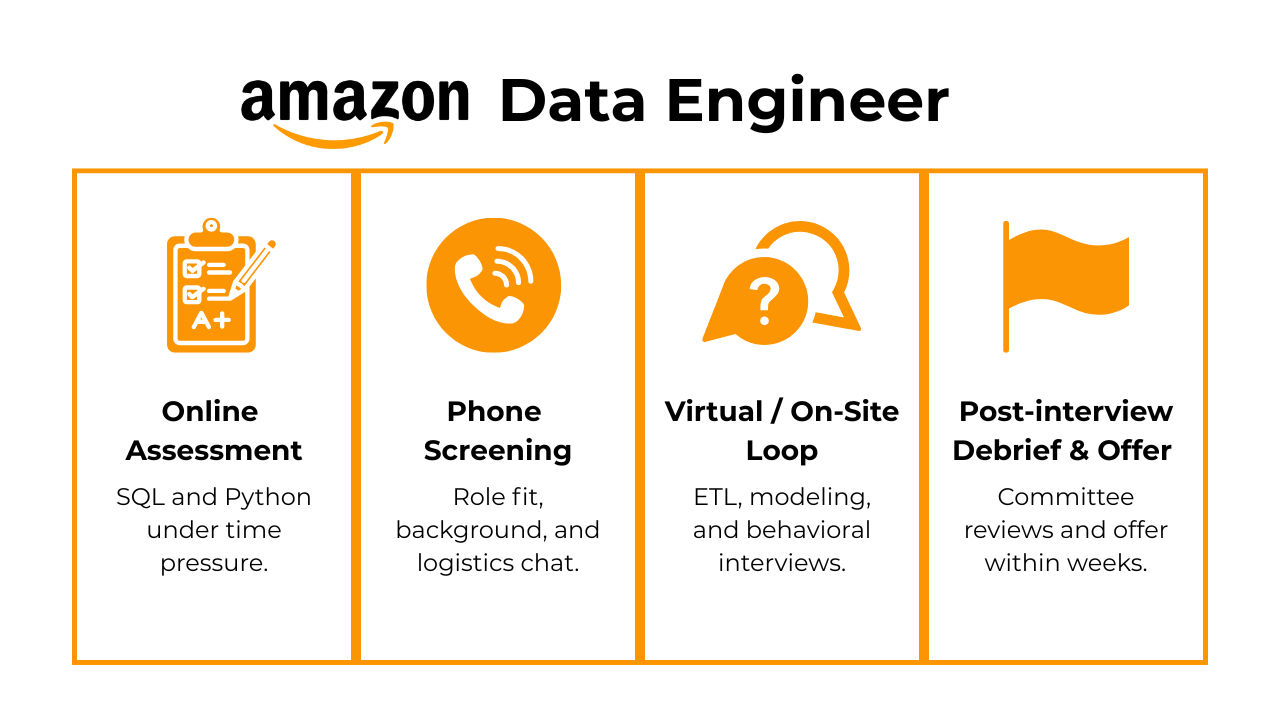

Amazon Data Engineer Interview Process

The Amazon data engineer interview process is built to evaluate how you think, communicate, and solve problems at scale. It is not just about writing SQL or knowing your ETL tools. It is about demonstrating how you approach ambiguity, make trade-offs, and uphold Amazon’s Leadership Principles while building systems that serve millions of customers. The full process usually includes four main stages, each designed to test a different aspect of your technical and behavioral skill set.

Online assessment

The online assessment is the first step for most applicants. It includes two to four timed questions covering SQL, data transformations, and light Python scripting. Tasks simulate real workflows such as filtering customer data, computing aggregates, or parsing semi-structured JSON. Some versions include logic puzzles that test pattern recognition.

What Amazon looks for

| Skill Area | Evaluation Focus |
|---|---|
| SQL accuracy | Correct joins, clean logic, consistent output |
| Data intuition | Handling messy or incomplete data |
| Python basics | Small scripts for manipulation or validation |
| Reasoning | Clear structure under time constraints |

Tip: Practice working under time pressure and double-check your results before submission. Amazon values correctness, readability, and logical consistency. Use test cases to confirm your assumptions instead of guessing, and make sure to structure your queries clearly with comments and indentation.

Technical phone screen

Candidates who pass the OA move to a 45–60 minute technical interview over Amazon Chime. You will share your screen and solve SQL or data modeling tasks live. Expect questions on joins, aggregations, window functions, indexing, and partitioning. Some interviewers also ask how you would design or debug a small data model.

This stage tests

- Ability to write correct and efficient SQL

- Comfort interpreting intermediate outputs

- Understanding of optimization strategies

- Clear communication and structured reasoning

Tip: Think aloud while solving. Amazon interviewers pay close attention to your reasoning, not just the final code. Explain your approach, clarify assumptions early, and refine your solution iteratively. When optimizing a query, describe how indexes, partitions, or caching could improve performance.

On-site or virtual interview loop

The on-site or virtual interview loop includes four back-to-back interviews that collectively test your technical capability, data intuition, and alignment with Amazon’s Leadership Principles. Each round has a distinct focus.

SQL and data modeling round: You will write SQL queries, analyze query performance, and design schemas. Interviewers often frame these around realistic business problems like order management or user engagement tracking. They want to see if you can create models that are efficient and scalable.

Tip: Always explain why you are making specific design decisions. For instance, if you choose a star schema over a snowflake model, justify it based on simplicity, query performance, or maintenance cost.

ETL design or coding round: In this round, you will design or outline an ETL pipeline that processes data from ingestion to storage. You might be asked to handle deduplication, schema evolution, or late-arriving data. Amazon wants to see if you can design systems that are modular, recoverable, and cost-efficient.

Tip: Explain how you would schedule jobs, handle errors, and validate data quality. Mention how you would use AWS tools like Glue, Lambda, or Step Functions to orchestrate your workflows efficiently.

System architecture round: This round tests how you think at scale. You may be asked to design a streaming analytics system, an event-driven data lake, or a metrics pipeline for Prime Video. You will need to reason about partitioning strategies, data consistency, and cost management in the AWS ecosystem.

Tip: Break your design into clear layers such as ingestion, processing, storage, and consumption. Show how you would ensure reliability with replication, fault tolerance, and monitoring. Use concrete examples when discussing scalability or latency trade-offs.

Behavioral or leadership round: This is where Amazon evaluates how you embody its Leadership Principles in your work. You will discuss real experiences where you took ownership, solved ambiguous problems, or improved an existing process. The STAR method (Situation, Task, Action, Result) is key here.

Tip: Choose stories with measurable outcomes, like reducing pipeline runtime or improving data accuracy. Focus on what you learned and how your actions aligned with Amazon’s culture of accountability and impact.

Bar raiser interview

The final stage is the bar raiser, led by a neutral, highly trained interviewer responsible for maintaining Amazon’s hiring standard. They explore your long-term potential, decision-making, and cultural alignment.

You can expect:

- Probing questions about past decisions

- Hypothetical scenarios involving scale, outages, or trade-offs

- Follow-ups that test consistency and technical judgment

What bar raisers emphasize

| Area | What They Assess |
|---|---|
| Judgment | How you balance correctness, cost, and speed |
| Ownership | Whether you step up during failures or ambiguity |
| Technical rigor | Depth of design decisions and reasoning |
| Cultural fit | Alignment with Leadership Principles |

Tip: Stay calm and structured in your answers. Bar raisers are less interested in perfect answers and more focused on your thought process, clarity, and judgment. Be transparent about trade-offs you have made in the past and how you have balanced technical precision with business value.

The Amazon data engineer interview process rewards candidates who are systematic, data-driven, and comfortable navigating complexity. If you can explain your decisions clearly, anticipate scale challenges, and demonstrate accountability throughout, you will stand out as a strong candidate ready to thrive in Amazon’s fast-paced, data-intensive environment.


Amazon Data Engineer Interview Questions

Amazon data engineer interview questions are designed to test how you think about data end-to-end. They measure not only your ability to write optimized SQL and build scalable ETL pipelines but also your approach to design, communication, and decision-making under ambiguity. The questions fall into three main categories: SQL and data modeling, ETL design and coding, and behavioral and leadership. Each category mirrors the challenges Amazon data engineers face daily when building systems that handle petabytes of information.

Before you dive into the examples below, browse the full set of Amazon data engineer interview questions on Interview Query to see the exact patterns Amazon asks across SQL, ETL, and system design.

SQL and data modeling interview questions

SQL and data modeling are at the core of the Amazon data engineer interview. These questions measure how you approach data analysis and schema design at scale — whether you can translate business logic into optimized queries and structure data in a way that supports speed, integrity, and long-term flexibility. You are not only expected to know SQL syntax but to explain why certain joins, indexes, or schema types make sense given the scenario. Amazon looks for engineers who can think critically about data quality, maintainability, and scalability across billions of records.

-

To solve this, perform a LEFT JOIN between the `neighborhoods` and `users` tables on `neighborhood_id`, then filter where `users.neighborhood_id` is `NULL`. This returns all neighborhoods with no corresponding users. The goal is to test your understanding of join logic, null handling, and referential integrity. Amazon interviewers often add follow-up questions about indexing and query execution, so explain how you’d optimize joins for large tables.

Tip: Highlight that LEFT JOINs ensure completeness, while filtering on NULL identifies non-matching records, a pattern that appears frequently in real-world data validation tasks at Amazon.
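The anti-join pattern described here can be sketched with Python's built-in `sqlite3` module. The table layouts and sample rows are assumptions made for illustration; only the table names and the `neighborhood_id` join key come from the text.

```python
import sqlite3

# In-memory database with hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE neighborhoods (neighborhood_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE users (user_id INTEGER PRIMARY KEY, name TEXT, neighborhood_id INTEGER);
    INSERT INTO neighborhoods VALUES (1, 'Ballard'), (2, 'Fremont'), (3, 'Capitol Hill');
    INSERT INTO users VALUES (10, 'Ana', 1), (11, 'Ben', 1), (12, 'Cho', 3);
""")

# LEFT JOIN keeps every neighborhood; rows without a matching user
# carry NULLs in the users columns, which the WHERE clause isolates.
EMPTY_NEIGHBORHOODS_SQL = """
    SELECT n.name
    FROM neighborhoods AS n
    LEFT JOIN users AS u ON u.neighborhood_id = n.neighborhood_id
    WHERE u.neighborhood_id IS NULL
    ORDER BY n.name
"""
empty_neighborhoods = [row[0] for row in conn.execute(EMPTY_NEIGHBORHOODS_SQL)]
print(empty_neighborhoods)
```

On large tables the same result is often written with `NOT EXISTS`; which form the planner handles better depends on the engine, so it is worth checking the execution plan rather than assuming.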

-

Join the `customers` and `shipments` tables on `customer_id`, then check if each `ship_date` falls between `membership_start_date` and `membership_end_date`. This type of query evaluates your ability to filter temporal data correctly while accounting for missing or partial records. You may also be asked to handle edge cases such as overlapping memberships or null date values.

Tip: Explain how you would validate date ranges and enforce data consistency with constraints or derived columns. Amazon prioritizes reliability and expects engineers to think about exception handling before deployment.
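A minimal sketch of the date-range check, again using `sqlite3`. The sample rows, the `shipment_id` column, the ISO-8601 text dates, and the choice to treat a NULL `membership_end_date` as an open-ended membership are all assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        membership_start_date TEXT,
        membership_end_date TEXT      -- NULL is assumed to mean "still active"
    );
    CREATE TABLE shipments (
        shipment_id INTEGER PRIMARY KEY,
        customer_id INTEGER,
        ship_date TEXT
    );
    INSERT INTO customers VALUES (1, '2024-01-01', '2024-06-30'), (2, '2024-03-01', NULL);
    INSERT INTO shipments VALUES
        (100, 1, '2024-02-15'),
        (101, 1, '2024-07-04'),
        (102, 2, '2024-09-01');
""")

# ISO-8601 date strings compare correctly as text, so BETWEEN works here.
# COALESCE turns an open-ended membership into a far-future end date.
MEMBERSHIP_SQL = """
    SELECT s.shipment_id,
           CASE
               WHEN s.ship_date BETWEEN c.membership_start_date
                                    AND COALESCE(c.membership_end_date, '9999-12-31')
               THEN 'Y' ELSE 'N'
           END AS is_member
    FROM shipments AS s
    JOIN customers AS c ON c.customer_id = s.customer_id
    ORDER BY s.shipment_id
"""
membership_flags = conn.execute(MEMBERSHIP_SQL).fetchall()
print(membership_flags)
```

Stating the NULL-handling rule out loud before writing the query is usually more valuable in an interview than the query itself.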

-

Join `accounts` and `downloads` on `account_id`, group by `download_date` and `paying_customer`, and calculate averages using `AVG()` or `SUM()/COUNT()`. This problem measures your fluency in using aggregation functions and your ability to think about performance trade-offs. For Amazon-scale data, a naive aggregation can easily exceed memory limits, so partitioning and pre-aggregation strategies are key discussion points.

Tip: Go beyond writing the query. Discuss how you’d use Redshift’s distribution keys or partition pruning to reduce I/O overhead for faster daily rollups.
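The grouped average can be sketched as follows with `sqlite3`. The `downloads` count column, the `'yes'`/`'no'` encoding of `paying_customer`, and the sample rows are assumptions; the table names and grouping columns follow the text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE accounts (account_id INTEGER PRIMARY KEY, paying_customer TEXT);
    CREATE TABLE downloads (account_id INTEGER, download_date TEXT, downloads INTEGER);
    INSERT INTO accounts VALUES (1, 'yes'), (2, 'no'), (3, 'no');
    INSERT INTO downloads VALUES
        (1, '2024-05-01', 10),
        (2, '2024-05-01', 4),
        (3, '2024-05-01', 6),
        (1, '2024-05-02', 8);
""")

# One output row per (date, paying/non-paying) combination.
AVG_DOWNLOADS_SQL = """
    SELECT d.download_date,
           a.paying_customer,
           ROUND(AVG(d.downloads), 2) AS avg_downloads
    FROM accounts AS a
    JOIN downloads AS d ON d.account_id = a.account_id
    GROUP BY d.download_date, a.paying_customer
    ORDER BY d.download_date, a.paying_customer
"""
daily_averages = conn.execute(AVG_DOWNLOADS_SQL).fetchall()
print(daily_averages)
```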

Implement a Type 2 Slowly Changing Dimension for customer profiles that preserves historical changes in attributes like address and Prime status.

Create a dimension table with `effective_start`, `effective_end`, and a `current_flag` to track record validity over time. New changes insert a new record, while the previous one is marked inactive by updating the end date and flag. This question tests your understanding of historical data versioning, a core concept in Amazon’s analytical environments.

Tip: Describe how you’d orchestrate the ETL job using AWS Glue or EMR to detect and process changes efficiently. Include how you’d handle late-arriving updates and ensure downstream systems always reference the correct version of a record.
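The close-and-insert logic of a Type 2 dimension can be sketched in plain Python. The `CustomerVersion` class and `apply_change` function are hypothetical names for illustration, and the sketch assumes changes arrive in date order, so it does not cover late-arriving updates.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class CustomerVersion:
    customer_id: int
    address: str
    is_prime: bool
    effective_start: date
    effective_end: Optional[date]  # None means the version is still open
    current_flag: bool


def apply_change(dim: List[CustomerVersion], customer_id: int, address: str,
                 is_prime: bool, change_date: date) -> None:
    """Apply one incoming change to the dimension in place (SCD Type 2)."""
    current = next(
        (row for row in dim if row.customer_id == customer_id and row.current_flag),
        None,
    )
    if current is not None:
        if (current.address, current.is_prime) == (address, is_prime):
            return  # nothing changed, so no new version is needed
        # Close out the old version instead of overwriting it.
        current.effective_end = change_date
        current.current_flag = False
    dim.append(CustomerVersion(customer_id, address, is_prime, change_date, None, True))


dim: List[CustomerVersion] = []
apply_change(dim, 1, "12 Pine St", False, date(2024, 1, 1))
apply_change(dim, 1, "12 Pine St", True, date(2024, 3, 15))   # Prime status changes
apply_change(dim, 1, "12 Pine St", True, date(2024, 4, 1))    # duplicate, ignored
```

After these calls the dimension holds two versions for customer 1: a closed non-Prime row ending on 2024-03-15 and an open Prime row starting that day.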

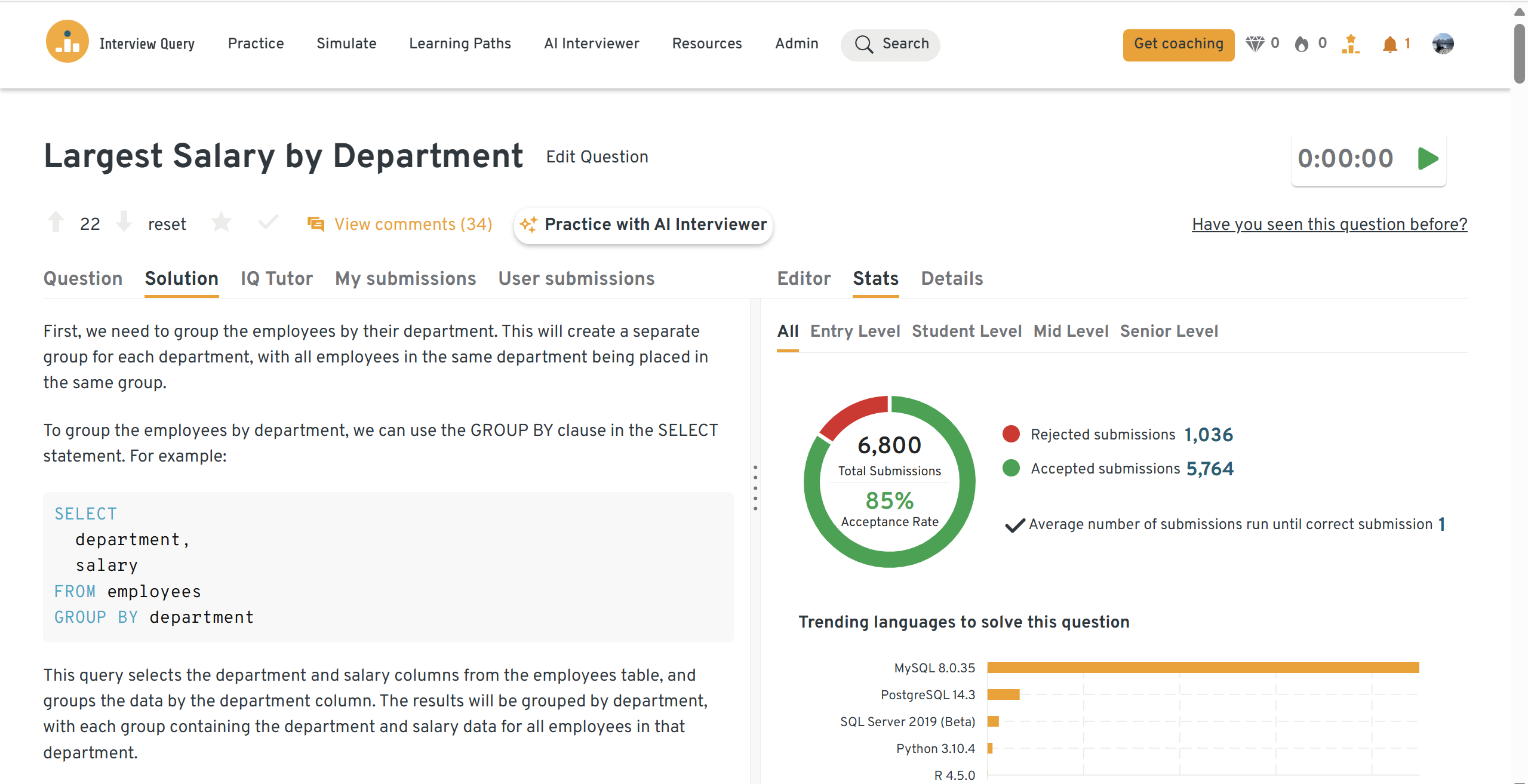

Given an employees table, write a query to retrieve the highest salary within each department.

Use a window function such as `ROW_NUMBER()` or `DENSE_RANK()` over a partition by department to isolate the top salary in each group. This question evaluates your comfort with analytical SQL and your ability to write queries that scale efficiently on distributed data warehouses like Redshift. It also tests whether you can distinguish between methods like `MAX()` aggregation versus ranking for flexibility.

Tip: Emphasize why window functions are preferred in production systems: they simplify logic, improve maintainability, and often outperform nested subqueries when optimized correctly.
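A short sketch of the ranking approach, run through `sqlite3` (window functions need SQLite 3.25 or newer, which recent Python builds bundle). The column names and sample rows are assumptions. `DENSE_RANK()` is used so that tied top earners are all returned; `ROW_NUMBER()` would keep only one of them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY, name TEXT, department TEXT, salary INTEGER
    );
    INSERT INTO employees VALUES
        (1, 'Ana', 'Data', 150),
        (2, 'Ben', 'Data', 170),
        (3, 'Cho', 'Ops', 120),
        (4, 'Dev', 'Ops', 120);
""")

# Rank salaries within each department, then keep rank 1.
TOP_SALARY_SQL = """
    WITH ranked AS (
        SELECT name, department, salary,
               DENSE_RANK() OVER (
                   PARTITION BY department ORDER BY salary DESC
               ) AS salary_rank
        FROM employees
    )
    SELECT department, name, salary
    FROM ranked
    WHERE salary_rank = 1
    ORDER BY department, name
"""
top_earners = conn.execute(TOP_SALARY_SQL).fetchall()
print(top_earners)
```

If only the salary figure is needed and not the employee, `SELECT department, MAX(salary) ... GROUP BY department` is the simpler answer; the ranking form pays off when the interviewer extends the question to the top N per group.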

Head to the Interview Query dashboard to practice this question hands-on. With built-in SQL testing, performance analytics, and AI-guided tips, it’s one of the best ways to sharpen your skills for Amazon’s data interviews.

ETL design and coding interview questions

ETL (Extract, Transform, Load) and coding questions test how well you can build, maintain, and optimize data pipelines at scale. Amazon’s data engineers are expected to write clean, modular code that can handle high-velocity and high-volume datasets with minimal downtime. These questions focus on how you think about data movement, error handling, and the trade-offs between scalability, cost, and latency. You will often be asked to reason through the design of a pipeline before diving into implementation details.

For more practice, see our curated list of data engineer SQL questions that mirror the complexity and scale of Amazon’s technical rounds.

-

You can solve this using a hash map to store the difference between the target and each element as you iterate through the array. When a match is found, return both indices. The time complexity is O(n) and space complexity is also O(n). This question tests your ability to think algorithmically and reason about efficiency, which is crucial when designing pipelines that must process data in real time.

Tip: Explain how hash-based lookups reduce time complexity, and draw parallels to how this approach applies to deduplication or lookup stages in ETL pipelines.
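The hash-map approach described above might look like the sketch below. The function name `two_sum` and the choice to return `None` when no pair exists are assumptions, since the original question text is not shown.

```python
from typing import List, Optional


def two_sum(nums: List[int], target: int) -> Optional[List[int]]:
    """Return indices of two elements that add up to target, or None."""
    # Maps "value still needed to reach target" -> index of the element needing it.
    needed = {}
    for i, value in enumerate(nums):
        if value in needed:
            return [needed[value], i]
        needed[target - value] = i
    return None


print(two_sum([2, 7, 11, 15], 9))
```

Each element is visited once and each dictionary lookup is constant time on average, which is where the O(n) time and O(n) space figures come from.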

Given two sorted lists, write a function to merge them into one sorted list.

Use two pointers to iterate through both lists, appending the smaller element each time until one list is exhausted, then append the remaining elements. The goal is to verify that you understand basic algorithmic operations used in sorting and merging datasets. Amazon often uses this problem to assess your ability to reason through data ordering in distributed systems such as MapReduce.

Tip: Relate your solution to how Amazon engineers merge partitioned files during ETL jobs. Show you understand how sorting affects performance when combining large datasets.
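A minimal two-pointer sketch of the merge, with `merge_sorted` as an illustrative function name:

```python
from typing import List


def merge_sorted(left: List[int], right: List[int]) -> List[int]:
    """Merge two already-sorted lists into one sorted list in O(n + m)."""
    merged = []
    i = j = 0
    # Advance whichever pointer currently sees the smaller element.
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # At most one of these slices is non-empty.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sorted([1, 4, 9], [2, 3, 10, 11]))
```

Using `<=` rather than `<` keeps equal elements from the left list first, which makes the merge stable.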

You are given a stream of product logs containing timestamps and categories. Design a function to aggregate the top three most purchased categories every hour.

This problem evaluates your ability to handle streaming data aggregation. You can solve it by grouping events into one-hour windows, maintaining counts for each category, and retrieving the top three within each window. Implementation can involve tools like AWS Kinesis or Apache Spark Structured Streaming.

Tip: Explain how you would handle out-of-order events and manage window states efficiently. Amazon looks for candidates who understand both functional correctness and operational challenges in real-time systems.
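The windowing logic can be sketched in plain Python before mapping it onto Kinesis or Spark. This is a batch approximation, not a streaming implementation: the function name, the `(timestamp, category)` event shape, and the alphabetical tie-break are assumptions. Because events are bucketed by their own timestamp, out-of-order arrivals still land in the right hour, but there is no watermark to decide when a window is final.

```python
from collections import Counter, defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, Tuple


def top_categories_per_hour(
    events: Iterable[Tuple[datetime, str]], k: int = 3
) -> Dict[datetime, List[str]]:
    """Group events into one-hour tumbling windows and return the top-k categories."""
    counts: Dict[datetime, Counter] = defaultdict(Counter)
    for timestamp, category in events:
        # Truncate the event time to the start of its hour.
        window_start = timestamp.replace(minute=0, second=0, microsecond=0)
        counts[window_start][category] += 1
    return {
        window: [
            category
            for category, _ in sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))[:k]
        ]
        for window, counter in sorted(counts.items())
    }


events = [
    (datetime(2024, 5, 1, 10, 5), "books"),
    (datetime(2024, 5, 1, 11, 1), "toys"),    # arrives before older events
    (datetime(2024, 5, 1, 10, 10), "toys"),
    (datetime(2024, 5, 1, 10, 20), "books"),
    (datetime(2024, 5, 1, 10, 30), "games"),
    (datetime(2024, 5, 1, 10, 40), "garden"),
    (datetime(2024, 5, 1, 10, 50), "toys"),
    (datetime(2024, 5, 1, 10, 55), "books"),
]
hourly_top = top_categories_per_hour(events)
```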

Design a workflow that processes customer reviews daily and updates an analytics dashboard.

Ingest the raw data from S3, transform it with AWS Glue or Spark to standardize formats, then load it into Redshift for reporting. Set up a Lambda function to trigger the job automatically based on time or file arrival. This question tests whether you can think in terms of orchestration, dependency management, and error recovery.

Tip: Mention how you would monitor pipeline health using CloudWatch, retry failed tasks, and ensure idempotent loads to prevent data duplication. Amazon values candidates who prioritize data reliability and observability.
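Idempotent loading is easiest to show with a keyed write. The sketch below uses `sqlite3` as a stand-in for the warehouse, with a hypothetical `reviews` table and `load_reviews` helper. It relies on an enforced primary key, which Redshift does not provide, so on Redshift the equivalent is typically a staging table followed by a merge or delete-and-insert.

```python
import sqlite3
from typing import Iterable, Tuple


def load_reviews(conn: sqlite3.Connection, batch: Iterable[Tuple[str, str, int]]) -> None:
    """Load a batch keyed on review_id; rerunning the same batch changes nothing."""
    with conn:  # one transaction per batch: commit on success, roll back on error
        conn.executemany(
            "INSERT OR REPLACE INTO reviews (review_id, product_id, rating) "
            "VALUES (?, ?, ?)",
            batch,
        )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE reviews (review_id TEXT PRIMARY KEY, product_id TEXT, rating INTEGER)"
)

batch = [("r1", "p1", 5), ("r2", "p1", 3)]
load_reviews(conn, batch)
load_reviews(conn, batch)  # simulated retry of the same daily batch

row_count = conn.execute("SELECT COUNT(*) FROM reviews").fetchone()[0]
print(row_count)
```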

Find the top five paired products frequently purchased together by the same user.

You can solve this with a self-join on the transactions table by `user_id`, grouping by product pairs and counting frequency. This requires an understanding of co-occurrence analysis and efficient aggregation. Amazon expects you to think about memory constraints and scaling techniques when working on datasets with billions of records.

Tip: Discuss how you’d optimize this with Spark’s `groupByKey` and `reduceByKey` transformations or by pre-aggregating at the user level before pairing. Mentioning data skew mitigation and partitioning strategies will help you stand out.
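The pre-aggregate-then-pair idea can be sketched in plain Python. The function name, the `(user_id, product_id)` input shape, and the alphabetical tie-break are assumptions for illustration; at scale the same two steps would map onto a grouped aggregation followed by a pair count.

```python
from collections import Counter, defaultdict
from itertools import combinations
from typing import Iterable, List, Tuple


def top_product_pairs(
    transactions: Iterable[Tuple[int, str]], k: int = 5
) -> List[Tuple[Tuple[str, str], int]]:
    """Count how many users bought each product pair and return the top k."""
    # Step 1: collapse to one distinct product set per user (pre-aggregation).
    products_by_user = defaultdict(set)
    for user_id, product_id in transactions:
        products_by_user[user_id].add(product_id)

    # Step 2: emit each unordered pair once per user and count occurrences.
    pair_counts: Counter = Counter()
    for products in products_by_user.values():
        pair_counts.update(combinations(sorted(products), 2))

    return sorted(pair_counts.items(), key=lambda kv: (-kv[1], kv[0]))[:k]


transactions = [
    (1, "A"), (1, "B"), (1, "C"),
    (2, "A"), (2, "B"),
    (3, "B"), (3, "C"), (3, "B"),  # repeat purchase counts once per user
]
top_pairs = top_product_pairs(transactions)
print(top_pairs)
```

Sorting each user's products before pairing guarantees that `("A", "B")` and `("B", "A")` are counted as the same pair. A user with thousands of distinct products produces a quadratic number of pairs, which is the skew problem the tip refers to.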

Behavioral and leadership principle interview questions

Amazon’s behavioral interviews are as important as the technical ones. Every question connects to one or more of the company’s 16 Leadership Principles, such as Ownership, Dive Deep, and Deliver Results. Data engineers are expected not only to build scalable systems but also to take accountability for their reliability, collaborate across teams, and use data to drive business outcomes. In these rounds, interviewers look for self-awareness, clarity, and measurable impact. Use the STAR method (Situation, Task, Action, Result) and end each story with what you learned.

-

Focus on strengths that demonstrate both technical and interpersonal maturity, such as simplifying complex systems, automating manual workflows, or mentoring teammates. For weaknesses, mention a real area for improvement and how you’ve addressed it over time through learning or feedback.

Tip: Balance confidence with reflection. Amazon values those who learn fast, adapt, and show curiosity in solving problems.

Sample answer: My biggest strength is simplifying complexity. In one project, I refactored a 5-step ETL process into a single Glue workflow that reduced runtime by 40%. I received feedback that I used to over-focus on system efficiency at the expense of documentation. Since then, I’ve built templates and written design notes for every project to improve team onboarding and maintainability.

How do you prioritize multiple deadlines and ensure data integrity across projects?

This question tests ownership and judgment under competing demands. Explain how you assess urgency, communicate trade-offs, and protect data quality when timelines tighten.

Tip: Use specific examples that demonstrate “Bias for Action” and “Deliver Results.” Show how you balance speed with accuracy through planning and collaboration.

Sample answer: When supporting both marketing analytics and logistics dashboards, I created a priority matrix to rank tasks by business impact and data risk. I focused first on pipelines affecting real-time sales metrics, then communicated expected delays for lower-priority requests. This ensured continuity for critical systems while maintaining full transparency with stakeholders.

Tell me about a time you resolved a data incident that impacted downstream analytics.

This question measures how you handle ownership, communication, and incident recovery. Amazon wants to see how you identify the root cause, prevent recurrence, and maintain composure during high-pressure moments.

Tip: Emphasize root-cause analysis and post-incident learning. Show that you view every outage as an opportunity for improvement.

Sample answer: A malformed CSV file once caused duplicate entries in a customer revenue table that powered executive dashboards. I paused downstream jobs, fixed the schema mapping, and restored clean data from backup. Afterward, I implemented automated validation scripts that checked file consistency before ingestion. This reduced similar incidents by 90% over the next quarter.

Describe a time you improved the scalability or cost efficiency of a data pipeline.

Amazon’s data engineers are expected to optimize constantly. Interviewers look for your ability to measure, test, and communicate performance improvements.

Tip: Quantify your results and describe trade-offs between performance, reliability, and cost.

Sample answer: I noticed that a Spark job used for daily session aggregation was running with unnecessary shuffle operations, driving up costs. I rewrote the transformation using partition keys and broadcast joins, cutting processing time by 60% and lowering EMR costs by 25%. I documented the optimization process and shared it with my team to replicate similar savings across other pipelines.

Describe a data project you worked on. What were some of the challenges you faced?

This question gauges your ability to overcome ambiguity and technical challenges through structured problem-solving. Focus on complexity, teamwork, and lessons learned.

Tip: Choose a story that highlights “Dive Deep” and “Earn Trust.” Show how you validated assumptions and communicated results clearly.

Sample answer: During a migration from on-prem Hadoop to AWS, data consistency checks between S3 and Redshift revealed unexpected null values. I used Athena to trace data lineage and found that our legacy jobs were skipping empty JSON fields. I fixed the parsing logic and built a cross-system validation dashboard. This prevented future data drift and improved team confidence in the new platform.


Before wrapping up, check out this insightful video featuring Fikayo, guided by Ravi, as they tackle one of the most common data engineer interview questions asked by companies like Amazon and Booking.com. The problem? Identifying and removing duplicate product names in massive e-commerce databases, a real-world challenge that tests your ability to think critically about data quality and system design.

In this tutorial, you’ll learn how to handle tricky cases like differentiating between “iPhone X” and “Apple iPhone 10,” while sharpening the SQL and data-handling concepts crucial for Amazon-style interviews. Whether you’re preparing for your first data engineer role or your next big leap, this walkthrough offers the kind of structured problem-solving approach that top employers look for. Watch below to level up your prep.

How to Prepare for an Amazon Data Engineer Role

Preparing for the Amazon Data Engineer interview requires mastering both scale-aware technical skills and the behavioral mindset Amazon values. You’ll need to demonstrate fluency in SQL, ETL design, and data modeling while showing clear alignment with Amazon’s Leadership Principles. Below are specific, high-impact preparation areas tailored for this role.

For a structured roadmap, explore Interview Query’s learning paths, which organize SQL, ETL, and system design prep into step-by-step modules built for data engineering roles.

Master Redshift, DynamoDB, and AWS-native data architecture

Understand how to model data efficiently within Amazon’s tech ecosystem. Review how Redshift handles distribution and sort keys, and when to use DynamoDB for high-throughput, low-latency operations. Know trade-offs between schema flexibility, query speed, and cost efficiency.

Tip: Practice optimizing queries on sample datasets using Redshift syntax, and explain your partitioning strategy during mock interviews. Amazon values engineers who know why they choose one service over another.

Understand data lake architecture on S3 and Glue orchestration

Many Amazon teams rely on lakehouse setups with S3, Glue, and Athena. Be prepared to explain how you’d structure and partition a data lake to support analytical and real-time workloads.

Tip: Build a mini data lake project using open datasets, then walk through ingestion, schema evolution, and querying. Amazon interviewers appreciate candidates who can translate theory into AWS practice.

Prepare for ETL optimization and monitoring scenarios

You’ll be asked how to ensure reliability, scalability, and fault tolerance in ETL jobs. Review Glue job retries, CloudWatch monitoring, and how to handle schema drift.

Tip: Practice explaining how you’d prevent failures in daily jobs, manage alerting thresholds, and ensure idempotency in pipeline reruns. Tie your examples to ownership and operational excellence.
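When talking through retries, it can help to have a concrete shape in mind. The wrapper below is a generic sketch with exponential backoff, not Glue's built-in retry mechanism; `run_with_retries`, `flaky_job`, and the default values are hypothetical. Retrying is only safe when the wrapped job is idempotent.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run task, retrying on any exception with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to alerting
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")


attempts = []


def flaky_job() -> str:
    """Hypothetical job that fails twice before succeeding."""
    attempts.append(1)
    if len(attempts) < 3:
        raise RuntimeError("transient failure")
    return "loaded"


result = run_with_retries(flaky_job, max_attempts=3, base_delay=0.0)
print(result, len(attempts))
```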

Know how to design streaming architectures

Amazon’s large-scale systems often depend on Kinesis or Kafka-based streaming pipelines. You should understand concepts like event time, windowing, partition keys, and handling late data.

Tip: Sketch out a high-level design for processing order events or sensor data in real time. Explain where you’d use Lambda, Firehose, or S3 buffers to balance latency and cost.

Develop strong data modeling instincts

Expect to be challenged on schema design for analytics and operations. Know when to use star vs. snowflake models, how to design SCD tables, and how to normalize or denormalize data in Redshift.

Tip: Rehearse explaining trade-offs between query performance and flexibility. Amazon interviewers look for precision in how you reason about scaling and long-term maintenance.

Rehearse your Leadership Principles stories

Every interviewer will test how you embody principles like “Dive Deep” and “Deliver Results.” Be ready with examples where you took initiative, made data-driven decisions, or fixed an operational issue independently.

Tip: Prepare 6–8 STAR-format stories tied to measurable outcomes. Practice concise storytelling—Amazon prefers clear, structured communication that links technical work to business impact.

Strengthen your fundamentals in SQL and Python

SQL remains the backbone of every round. Be comfortable with CTEs, window functions, and debugging long queries. Python proficiency helps when writing scripts for data cleaning or orchestration. If you’re still building confidence with SQL, this guide on how long it takes to learn SQL breaks down realistic timelines and the fastest paths to becoming interview-ready.

Tip: Use online platforms to practice timed SQL challenges and simulate Amazon-style problems. Aim for correctness, clarity, and performance in every solution.

Simulate the full interview loop under time pressure

Mock interviews help you practice transitions between technical and behavioral rounds. Simulate explaining your reasoning out loud and managing your time efficiently. You can also rehearse live scenarios with the AI interview simulator, which generates Amazon-style follow-up questions and evaluates your structure and clarity.

Tip: Record yourself during a 45-minute session covering SQL, data modeling, and behavioral questions. Review how you structure answers, then refine your pacing and clarity.

Average Amazon Data Engineer Salary

Amazon Data Engineers in the United States earn compensation packages that reflect both their technical expertise and the scale of their work. According to Levels.fyi, total annual pay ranges from approximately $144K per year for L4 Data Engineers to $264K per year for L6 Senior Data Engineers, with a median total compensation of around $216K annually. These packages include a mix of base salary, stock grants, and annual bonuses.

| Level | Role | Total (Annual) | Base (Annual) | Stock (Annual) | Bonus (Annual) |
|---|---|---|---|---|---|
| L4 | Data Engineer I | $144K | $108K | $21K | $13K |
| L5 | Data Engineer II | $216K | $144K | $70K | $0 |
| L6 | Senior Data Engineer | $264K | $144K | $108K | $0 |

Regional salary comparisons

Compensation varies by region, reflecting differences in cost of living and team specialization.

- Greater Seattle Area: Median total compensation is $216K per year, ranging from $144K for L4 to $252K for L6. Many of Amazon’s AWS and retail data infrastructure teams are based here. (Levels.fyi)

Amazon’s compensation model prioritizes long-term ownership through restricted stock units (RSUs) that vest over four years on a 5%, 15%, 40%, 40% schedule. This structure rewards engineers who stay and contribute to Amazon’s growth over time.
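To see what the back-loaded schedule means in practice, the arithmetic can be worked through for a hypothetical grant. The $100,000 figure is an assumption chosen for round numbers, and the sketch ignores stock price movement.

```python
from typing import List

# Percent of the grant vesting in years 1 through 4, as stated above.
VESTING_PERCENTAGES = (5, 15, 40, 40)


def vesting_by_year(grant_value: int) -> List[int]:
    """Split a grant's value across the four vesting years."""
    return [grant_value * pct // 100 for pct in VESTING_PERCENTAGES]


yearly_vest = vesting_by_year(100_000)  # hypothetical grant size
print(yearly_vest)
```

Only 20% of the grant vests in the first two years, with the remaining 80% arriving in years three and four.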

FAQs

What is the interview process like for Amazon Data Engineers?

The process typically includes four main stages: an online assessment, a technical phone screen, an on-site or virtual interview loop, and a bar raiser interview. Each stage is designed to assess different aspects of your skill set—from SQL fluency and ETL design to leadership and decision-making. For intern roles, the process may be condensed into two stages but will still evaluate technical and behavioral readiness.

How should I prepare for Amazon’s behavioral interview?

Focus on Amazon’s 16 Leadership Principles, which are woven into every round of the interview. Prepare 6–8 STAR-format stories that show how you demonstrated ownership, problem-solving, and customer obsession. The best answers are concise, data-driven, and highlight measurable impact, such as cost savings or performance improvements.

Do I need to be an expert in AWS for the interview?

You don’t need certification-level mastery, but you should be familiar with how core services work—especially Redshift, S3, Glue, and Kinesis. Amazon looks for candidates who can reason through trade-offs in cost, scalability, and fault tolerance. Knowing when and why to use specific AWS tools can make your responses stand out.

What kind of SQL questions does Amazon ask?

Expect intermediate-to-advanced SQL problems involving joins, aggregations, and window functions. Some questions also explore schema design or optimization strategies for large datasets. Amazon tests not only your ability to write correct queries but also your efficiency, clarity, and reasoning for performance improvements.

How important is Python or Java in the Amazon data engineer interview?

Python or Java proficiency is often required for ETL and pipeline design questions. You should know how to write clean, modular code for data transformation, error handling, and logging. While deep algorithmic knowledge isn’t necessary, demonstrating well-structured and production-ready code is a major advantage.

What does the bar raiser interview focus on?

The bar raiser evaluates your long-term fit and consistency with Amazon’s hiring standards. Instead of testing your syntax or coding speed, this round examines your problem-solving structure, technical judgment, and cultural alignment. Expect hypothetical or scenario-based questions that explore how you handle ambiguity, scale, and trade-offs.

Are there differences between L4, L5, and L6 data engineer interviews?

Yes. L4 interviews emphasize SQL, scripting, and data modeling basics, while L5 and L6 roles include advanced design discussions and system-level trade-offs. Senior candidates must show strong architectural thinking, leadership in cross-functional projects, and the ability to mentor others.

How can I practice for the Amazon Data Engineer assessment?

Use online platforms like Interview Query or HackerRank to simulate the time pressure and question format. Prioritize SQL joins, CTEs, and data transformation challenges. The assessment also includes logic-based or short Python tasks, so practice problem-solving in structured, efficient steps.

Does Amazon ask system design questions for data engineers?

Yes, especially for mid- to senior-level positions. You’ll be asked to design scalable data systems such as data lakes, streaming architectures, or ETL pipelines. Interviewers expect clear diagrams, explanation of trade-offs, and attention to latency, reliability, and cost optimization.

Is machine learning experience required for data engineer roles?

No, but understanding how data pipelines support model training and feature generation is beneficial. You’ll collaborate with data scientists, so being able to prepare and validate training datasets or automate data quality checks will strengthen your profile.

Build the Data Systems That Power the World’s Biggest Marketplace

Becoming a data engineer at Amazon means more than landing a high-paying role—it’s joining the engine behind one of the most data-driven companies in history. Every optimized query, pipeline, and schema you design can directly influence how millions shop, stream, or interact with AWS every day. The interview process rewards those who combine sharp technical thinking with real ownership, clarity, and an obsession for scalable solutions.

If you’re ready to take that step, start your prep with the data engineering learning path to master SQL and architecture challenges modeled after Amazon’s interviews. Then, practice under real conditions through a mock interview or review curated Amazon interview questions to fine-tune your approach. With focused preparation and the right mindset, you can turn your Amazon interview into a launchpad for a career building systems that power the world.