GPT-5.2 Claims to Beat Professionals on 70% of Tasks — What It Means for Tech Jobs

GPT-5.2’s Bold Claims About Human-Level Work

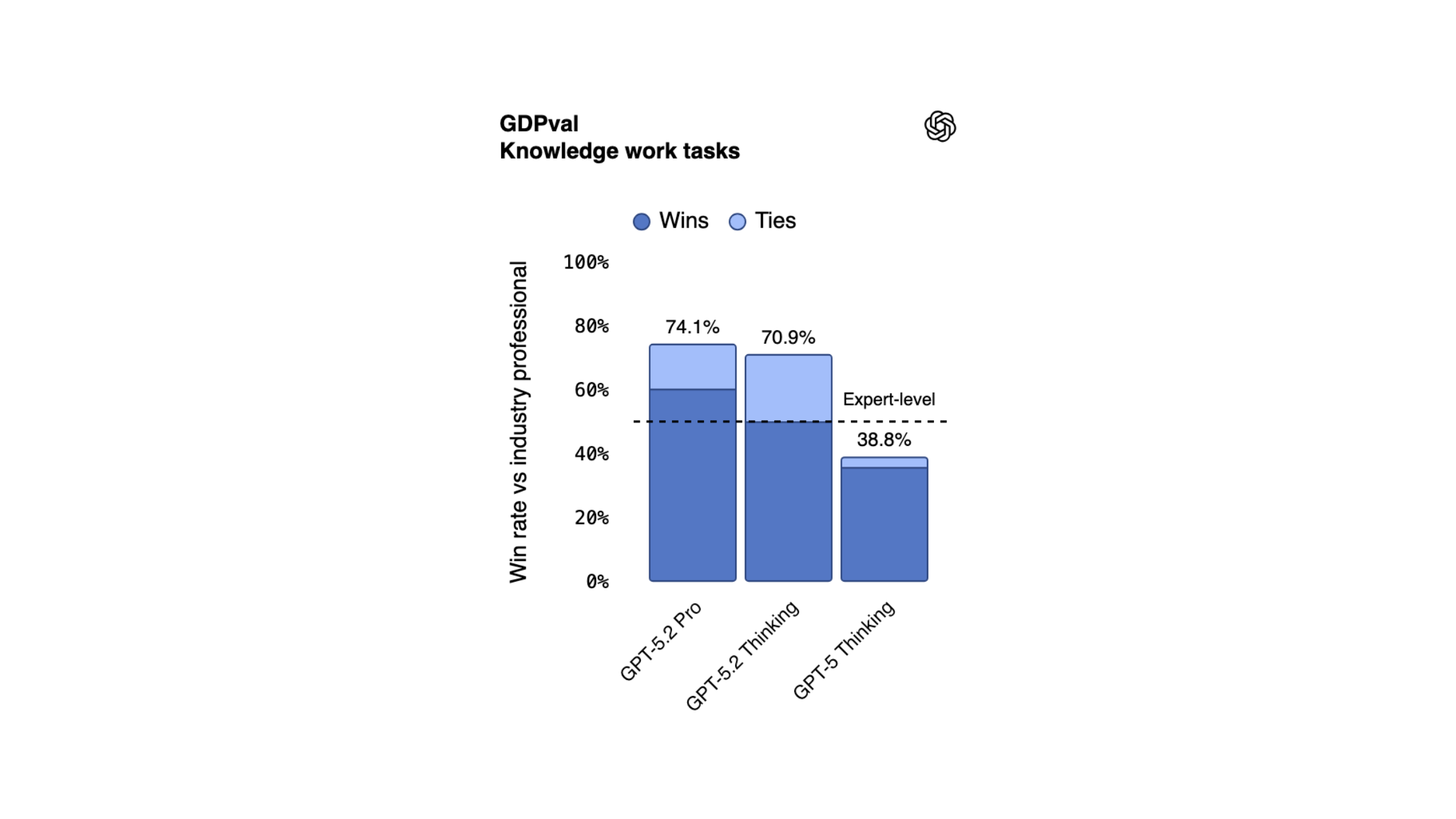

OpenAI’s release of GPT-5.2 marks its most knowledge-focused update yet, promising major jumps in reasoning accuracy, code reliability, and workflow automation. Among its boldest claims: the updated model outperforms human professionals on around 70% of benchmarked tasks and shows “parity or better” across 44 occupations.

Those occupations are selected using OpenAI’s GDPval methodology, a framework designed to select tasks by economic value, not just difficulty. In other words, the model isn’t just beating generic benchmarks; it is being tested on jobs that contribute meaningfully to GDP output.

But the bigger question for workers is whether these capabilities represent a path to upskilling, displacement, or a shift into an entirely new category of work, especially in tech-oriented roles. GPT-5.2 is being framed as a “work accelerator,” yet that framing carries an unmistakable tension: acceleration for what, and for whom? This article breaks down what GPT-5.2 can actually do, what is hype, and how it may reshape tech work going forward.

What GPT-5.2 Actually Improved

Developer tests and OpenAI’s own documentation point to clear, measurable improvements: faster and more consistent reasoning, better code generation, stronger debugging accuracy, and, most importantly, improved performance on multi-step tasks. GPT-5.2 also integrates natively with agent-style workflows such as AutoDev, with better tool invocation and planning capabilities.

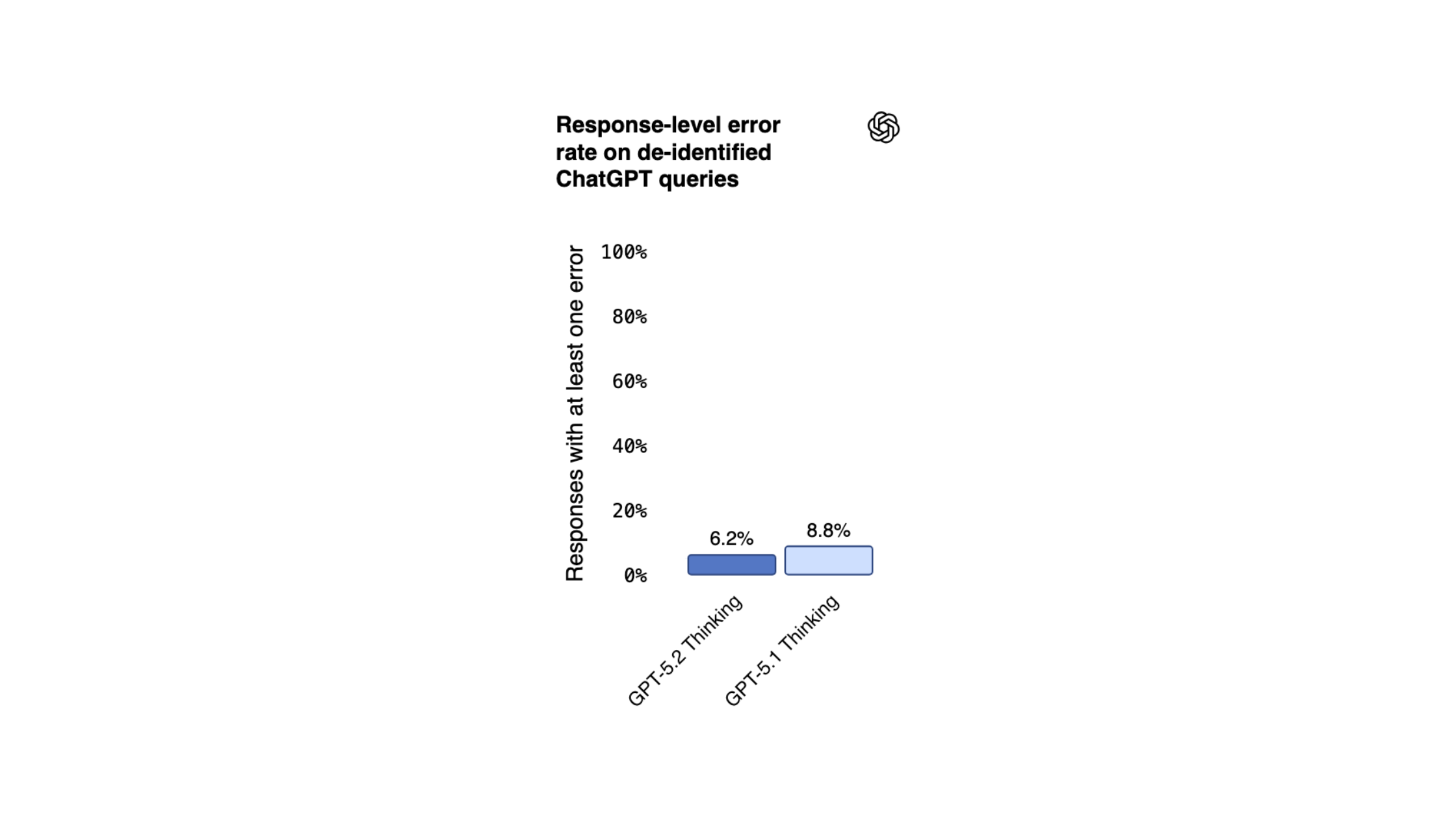

The most meaningful leap appears in hallucination reduction. OpenAI reports a 15–25% drop in “critical hallucinations” across coding and data tasks, and some early testers suggest even larger reductions when the model is paired with tool execution. This matters especially for tech roles: our earlier analysis of Google’s push for “vibe coding” identified unreliable outputs as one of the key concerns holding back AI-assisted engineering adoption.

In hands-on demos, GPT-5.2 reliably performs tasks that typically require junior or mid-level engineering effort, such as writing test suites, generating boilerplate microservices, drafting SQL pipelines, creating formatted analytics reports, and even constructing internal dashboards.

Companies already piloting GPT-5.2, including Jasper, Notion, and several unnamed enterprise partners on OpenAI’s Enterprise tier, report meaningfully higher throughput per engineer, with some citing internal productivity gains of roughly 40–60%.

But higher throughput also raises the question of what happens to team size and junior roles when each engineer, working with the latest model, can potentially deliver more than one engineer’s worth of work.
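As a rough illustration of the team-size question, assume (a simplification, not something the pilots report) that the cited productivity gain g applies uniformly to every engineer and that the team’s total output stays fixed. The headcount N′ needed to match the output of an original team of N engineers is then:

$$
N' = \frac{N}{1 + g}, \qquad g \in [0.40,\ 0.60]
\quad\Rightarrow\quad
N = 10:\ \ N' = \frac{10}{1.6} = 6.25 \ \text{ to } \ \frac{10}{1.4} \approx 7.14
$$

Under these assumptions, a ten-person team could deliver the same output with roughly six or seven engineers. In practice, companies may instead keep headcount and ship more, which is why the outcome depends on how each organization chooses to use the extra capacity.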

What the 70% Claim Measures

The widely shared “70% of tasks” figure needs context. These tests evaluate structured tasks with measurable outputs, e.g., LeetCode-style coding problems, classification challenges, refactoring prompts, or reasoning tests with clear right/wrong answers. Many fall into the category of verifiable, low-context work such as unit tests, code rewrites, or SQL transformations.

This is impressive, but it’s worth stressing that this isn’t the same as outperforming a professional in their full job. Real engineering work involves ambiguity, tradeoff decisions, communication, ownership, and long-term system thinking, none of which are captured in these benchmarks.

The more realistic takeaway is that GPT-5.2 will reshape what humans spend time on, offloading execution while humans move toward oversight, architecture, judgment, and integration.

Will GPT-5.2 Reshape Tech Jobs?

For software engineers, data scientists, and IT workers, the implications feel increasingly concrete. Routine tasks like bug fixes, script generation, documentation, and pipeline setup can now be handled quickly by AI.

Entry-level roles, however, face particular pressure. Multiple studies, including SignalFire’s analysis of LinkedIn data on companies and employees, already show that early-career technical workers are losing access to the “apprenticeship layer” of grunt work that has traditionally built skills.

Companies such as Anthropic, Google DeepMind, and startups building agentic workflows are already reorganizing teams around AI copilots, with smaller squads producing more output. With GPT-5.2, this team compression may accelerate: subject-matter experts can now generate code, documentation, analytics, and internal tools without dedicated specialists.

Still, counterforces remain strong. Companies need engineers who understand systems deeply, especially as the volume of AI-generated code grows rapidly and expands the attack surface.

Oversight, debugging, architecture, and review become more important, not less. Anthropic’s internal study, for example, found engineers reporting rising anxiety about skill erosion and emphasizing the importance of reviewing AI-generated code for quality and long-term maintainability.

What Tech Workers Should Watch Next

As GPT-5.2 rolls out, workers should keep an eye on several trends:

- Whether companies formally restructure around AI-first workflows

- Whether junior hiring slows in favor of senior-heavy, AI-leveraged teams

- The rise of multi-disciplinary “AI operators” who mix prompting, QA, domain knowledge, and system verification

- New roles such as AI integration engineer, AI workflow reviewer, or agent supervisor, with distinct skill expectations

GPT-5.2 is not a sudden job-replacement engine, but it is a strong signal that the nature of technical work is shifting faster than the traditional pathways into it. Whether this shift empowers workers or destabilizes the job ladder will depend on how companies implement these tools, and on how prepared workers are to adapt to evolving demands.