The People Building AI Still Prefer to Do Things Manually — Here’s Why

The AI Builders Are Pulling Back

Roles like AI engineer, model evaluator, and data annotator have surged in demand over the past two years as companies scramble to integrate AI into everything from enterprise software to user-facing apps. For context, Interview Query's breakdown of why AI engineering jobs are exploding reports that the business impact of these roles has pushed salaries above $300K and driven more than 4,000 job openings per month.

But here's the twist: even as these workers sit at the center of the AI wave, many of them don't use AI for the kinds of everyday tasks you'd expect. According to recent reporting, these insiders often avoid letting bots write emails, summarize documents, or manage simple workflows, even though those tools are readily available and, in some cases, are tools they helped build.

While it’s easy to assume that their reluctance is rooted in fear of losing relevance, the reason is much more practical.

AI workers have seen the limits of these systems up close. They've debugged the outputs, audited the failures, and watched how easily mistakes slip into the final product. For any tech worker who has ever felt that a new "productivity tool" added more friction than it removed, this insider hesitation will sound familiar.

When Automation Creates More Work, Not Less

The Wall Street Journal’s coverage reveals that AI annotators, raters, and engineers frequently redo the very tasks automation was supposed to streamline. Sometimes, automated labels ignore obvious context. Other times, a bot’s summary misses crucial nuance. In other words, the tool does something that looks correct on the surface but requires enough double-checking that doing it manually would have been faster.

This is the "messy middle" of AI that many outside the industry don't see. Models aren't fully autonomous, but they aren't fully reliable either. Small errors compound. Output quality varies. And human oversight becomes a permanent layer: neither optional nor a temporary safety net, but a requirement built into daily workflows.

For many tech workers, this feels uncannily familiar. In software engineering, IT, and support roles, automation that promises efficiency often creates new tasks, edge cases, and debugging cycles.

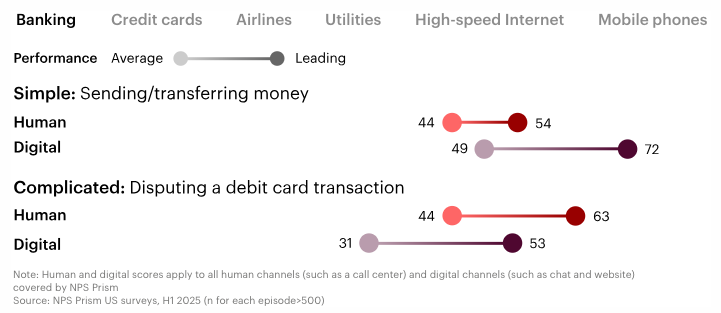

Other sectors feel this too. A Bain & Company study revealed that customer service representatives consistently report that AI chatbots struggle with complex tasks, leading to more escalations instead of fewer.

This points to a broader problem: automation often fails quietly, in ways that only humans can catch, and therefore must.

The AI Workforce Setting Personal Boundaries with AI

Meanwhile, The Guardian’s reporting on AI workers adds another dimension.

The same tech researchers and engineers behind AI are advising family members and friends to use AI sparingly. Not because they think AI is dangerous, but because they’re acutely aware of how often it hallucinates, misleads, or mishandles private data.

After all, they've seen it all, from models confidently fabricating facts to personal inputs quietly becoming training data. They understand how much information can be inferred, or in some cases leaked, from a single query.

This boundary-setting resonates with tech workers who already juggle tool overload, rapid-release AI features, and pressure to adopt new products as soon as they launch.

It also highlights why frameworks for responsible and ethical AI use, centered on data privacy, transparency, and oversight, are becoming essential skills for engineers, PMs, analysts, and anyone working in AI-adjacent fields. Knowing when not to rely on a model is now just as important as knowing how to prompt one.

What Insider Skepticism Reveals About the Future of Work

The caution AI builders show toward the very tools they've built is more than a personal preference. It is a signal about where the job market is headed.

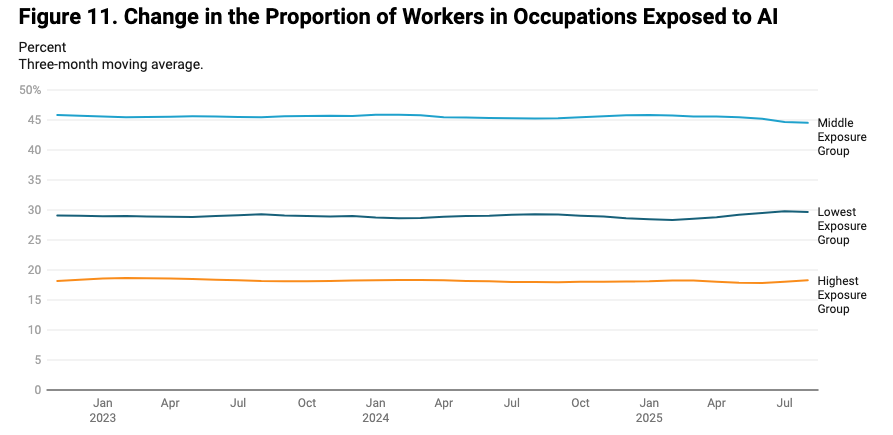

For instance, researchers at Yale’s Budget Lab found that AI isn’t poised to replace broad swaths of workers yet, largely because the technology still struggles with reliability, generalization, and error correction. Instead, AI is reshaping jobs toward tasks involving oversight, verification, and domain judgment.

Put simply, the human element isn't disappearing; workers are increasingly becoming editors, critics, and supervisors of automated systems. And in high-stakes fields like healthcare, finance, infrastructure, and engineering, the people who understand both the tools and their limitations are becoming more valuable, not less.

Conclusion: Human Judgment Still Matters

Across reports, a clear pattern emerges: the people closest to AI trust humans more than automation for meaningful or error-sensitive work. For tech workers and the broader workforce, this is less a warning and more a guide.

Use AI where it helps. Avoid it where mistakes are costly. And don't feel pressured to adopt every new tool just because it exists. If the people building these systems are drawing boundaries, the rest of us would do well to be just as discerning.